D Historical Perspective

This appendix provides historical background for assessing the current state of the art in unmanned ground vehicle (UGV) technologies. The focus is on specific perception research that has directly contributed to autonomous mobility. It is representative, not exhaustive.1 It traces developments in machine perception relevant to a mobile robot operating outdoors, and it identifies the major challenges in machine perception and disadvantages of different approaches to these challenges.

THE EARLY DAYS 1959–1981

The first mobile robot to use perception, called “Shakey,” was developed at the Stanford Research Institute (Nilsson, 1984) beginning in 1969 and continuing until 1980. Shakey operated in a “blocks world”: an indoor environment containing isolated prismatic objects. Its sensors were a video camera and a laser range finder, and it used the MIT blocks-world image-understanding algorithms (Roberts, 1965) adapted for use with video images.

Each forward move of the robot was a few meters and took about 1 hour, mostly for vision processing. Shakey emerged during the first wave of artificial intelligence that emphasized logic and problem solving. For example, Shakey could be commanded to push a block that rested on top of a larger block. The robot’s planner, a theorem prover, would construct a plan that included finding a wedge that could serve as a ramp, pushing it against the larger block, rolling up the ramp, and then pushing the smaller block off. Perception was considered of secondary importance and was left to research assistants. It would become very clear later that what a robot can do is driven primarily by what it can see, not what it can plan.

In 1971, Rod Schmidt of Stanford University published the first Ph.D. thesis on vision and control for a robot operating outdoors. The Stanford Cart used a single black and white camera with a 1-Hz frame rate. It could follow an unbroken white line on a road for about 15 meters before breaking track. Unlike Shakey, the Cart moved continuously at “a slow walking pace” (Moravec, 1999). The Cart’s vision algorithm, like Shakey’s, first reduced images to a set of edges using operations similarly derived from the blocks-world research. While adequate for Shakey operating indoors, these proved inappropriate for outdoor scenes containing few straight edges and many complicated shapes and color patterns. The Cart was successful only if the line was unbroken, did not curve abruptly, and was clean and uniformly lit. The Cart’s vision system2 used only about 10 percent of the image and relied on frame-to-frame predictions of the likely position of the line to simplify image processing.

Over the period from 1973 to 1980, Hans Moravec of Stanford University (Moravec, 1983) developed the first stereo3 vision system for a mobile robot. Using a modified Cart, he obtained stereo images by moving a black and white video camera side to side to create a stereo baseline. Like Shakey, Moravec’s Cart operated mostly indoors in a room with simple polygonal objects, painted in contrasting black and white, uniformly lit, with two or three objects spaced over 20 meters.

The robot had a planner for path planning and obstacle avoidance. The perception system used an interest algorithm (Moravec, 1977) that selected features that were highly distinctive from their surroundings. It could track and match about 30 image features, creating a sparse range map for navigation. The robot required about 10 minutes for each 1-meter move, or about 5 hours to go 30 meters, using a 1-MIP processor. Outdoors, using the same algorithms, the Cart could only go about 15 meters before becoming confused, most frequently defeated by shadows that created false edges to track. Moravec’s work demonstrated the utility of a three-dimensional representation of a robot’s environment and further highlighted the difficulties in perception for outdoor mobility.

By the end of 1981, the limited ventures into the real world had highlighted the difficulties posed by complex shapes, curved surfaces, clutter, uneven illumination, and shadows. With some understanding of the issues, research had begun to develop along several lines and laid the groundwork for the next generation of perception systems. This work included extensive research on image segmentation4 and classification with particular emphasis on the use of color (Laws, 1982; Ohlander et al., 1978; Ohta, 1985), improved algorithms for edge detection5 (Canny, 1983), and the use of models for road following (Dickmanns and Zapp, 1986). At this time there was less work on the use of three-dimensional data. While Shakey (range finder) and Moravec (stereo) had demonstrated the value of three-dimensional data to path planning (denser range data), stereo remained computationally prohibitive, and the range finders of the time, which required much less computation, were too slow.6

THE ALV ERA 1984–1991

In 1983, DARPA started an ambitious computer research program, the Strategic Computing program. The program featured several integrating applications to focus the research projects and demonstrate their value to DARPA’s military customers. One of these applications was the Autonomous Land Vehicle (ALV),7 and it would achieve the next major advance in outdoor autonomous mobility.

The ALV had two qualitative objectives: (1) It had to operate exclusively outdoors, both on-road and off-road, across a spectrum of conditions: improved and unimproved roads, variable illumination and shadows, obstacles both on- and off-road, and terrain with varying roughness and vegetative background. (2) It had to be entirely self-contained, with all computing done onboard. Quantitatively, the Strategic Computing Plan (DARPA, 1983) called for the vehicle to operate on-road beginning in 1985 at speeds up to 10 km/h, increasing to 80 km/h by 1990, and off-road beginning in 1987 at speeds up to 5 km/h, increasing to 10 km/h by 1989. On-road obstacle avoidance was to begin in 1986 with fixed polyhedral objects spaced about 100 meters apart.

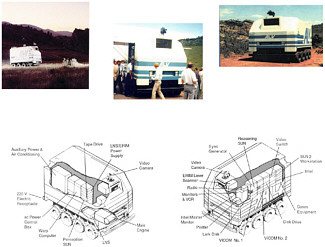

The ALV was an eight-wheeled all-terrain vehicle with an 18-inch ground clearance and good off-road mobility (Figure D-1). A fiberglass shelter sufficient to enclose generators, computers, and other equipment was mounted on the chassis. The navigation system included an inertial land navigation system that provided heading and distance traveled, ultrasonic sensors to provide information about the pose of the vehicle with respect to the ground for correcting the scene model geometry, and a Doppler radar to provide ground speed from both sides of the ALV to correct for wheel slippage errors in odometry.

The ALV had two sensors for perception: a color video camera (512 (h) × 485 (v), 8 bits of red, green, blue data) and a two-mirror custom laser scanner that had a 30°-vertical by 80°-horizontal field of view, produced a 256 (h) × 64 (v) range image with about 3-inch resolution, and had a 20-meter range, a 2-Hz frame rate,8 and an instantaneous field of view of 5 to 8.5 mrad. The scanner also yielded a 256 × 64 reflectance image. The laser scanner used optics and signal processing to produce a real-time depth map suitable for obstacle avoidance that was impractical to obtain from stereo by calculation. The ALV was the first mobile robot to use both these sensors.

FIGURE D-1 Autonomous land vehicle (ALV). Courtesy of John Spofford, SAIC.

The first algorithm used for road following (Turk et al., 1988) projected a red, green, blue (RGB) color space into two dimensions and used a linear discriminant function to differentiate road from nonroad in the image. This was a fast algorithm, but it had to be tuned by hand for different roads and different conditions. Using this algorithm, the ALV made a 1-km traverse in 1985 at an average speed of 3 km/h, a 10³ gain over the prior state of the art. This increased to 10 km/h over a 4-km traverse in 1986. In 1987, the ALV reached a top speed of 20 km/h with an improved color segmentation algorithm using a Bayes classifier (Olin et al., 1987) and used the laser scanner to avoid obstacles placed on the road. The obstacle detection algorithm (Dunlay and Morgenthaler, 1986) made assumptions about the position of the ground plane and concluded that those range points that formed clusters above some threshold height over the assumed ground plane represented obstacles. This worked well for relatively flat roads but was inadequate in open terrain, where assumptions about the location of the ground plane were more likely to be in error. Work on road following continued, experimenting with algorithms primarily from Carnegie Mellon University (CMU) (e.g., Hebert, 1990) and the University of Maryland (e.g., Davis et al., 1987; Sharma and Davis, 1986), but the emphasis in the program shifted in 1987 to cross-country navigation.
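The ground-plane test described above can be illustrated with a minimal Python sketch. It is not the Dunlay and Morgenthaler implementation: the flat plane at a fixed height, the grid-cell clustering, and every parameter value (`min_height`, `cell`, `min_points`) are assumptions chosen only for illustration.

```python
from collections import deque

def detect_obstacles(points, ground_z=0.0, min_height=0.6, cell=0.5, min_points=3):
    """Return clusters of (x, y, z) range points lying above an assumed flat ground plane.

    All thresholds are illustrative assumptions, not values from the ALV program.
    """
    # Keep only points higher than the threshold above the assumed plane z = ground_z.
    high = [p for p in points if p[2] - ground_z > min_height]

    # Bin the surviving points into square grid cells in the x-y plane.
    cells = {}
    for x, y, z in high:
        key = (int(x // cell), int(y // cell))
        cells.setdefault(key, []).append((x, y, z))

    # Group 8-connected occupied cells into clusters with a breadth-first search.
    clusters, seen = [], set()
    for start in cells:
        if start in seen:
            continue
        seen.add(start)
        queue, members = deque([start]), []
        while queue:
            cx, cy = queue.popleft()
            members.extend(cells[(cx, cy)])
            for dx in (-1, 0, 1):
                for dy in (-1, 0, 1):
                    neighbor = (cx + dx, cy + dy)
                    if neighbor in cells and neighbor not in seen:
                        seen.add(neighbor)
                        queue.append(neighbor)
        # Small clusters are treated as noise and discarded.
        if len(members) >= min_points:
            clusters.append(members)
    return clusters
```

The sketch also reproduces the weakness noted in the text: on a steady upslope, ordinary terrain points rise above `min_height` relative to the assumed plane and are reported as an obstacle.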

In doing cross-country navigation, the ALV became the first robotic vehicle to be intentionally driven off-road, and the principal goal for perception became obstacle detection. The ALV relied primarily on the laser range scanner to identify obstacles. Much of the early work on obstacle detection used geometric criteria and mostly considered isolated, simple prismatic objects.

However, using such geometric criteria in cross-country terrain may cause traversable objects, such as dense bushes that can return a cloud of range points, to be classified as obstacles even though the vehicle can push through them. This signaled the need to introduce such additional criteria as material type to better classify the object. As the ALV began to operate cross-country, it also became clear that the concept of an obstacle should be generalized to explicitly include any terrain feature that leads to unsafe vehicle operation (e.g., a steep slope), taking into account vehicle dynamics and kinematics. This expanded definition acknowledged the dependence of object or feature classification on vehicle speed, trajectory, and other variables, extending the concept of an obstacle to the more inclusive notion of traversability.

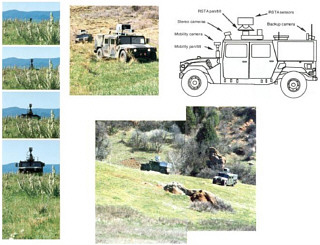

FIGURE D-2 ALV and Demo II operating areas. Courtesy of Benny Gothard, SAIC.

In August 1987, the ALV performed the first autonomous cross-country traverse based on sensor data (Olin and Tseng, 1991). During this and subsequent trials extending for about a year, the ALV navigated around various kinds of isolated positive obstacles over traverses of several kilometers. The terrain had steep slopes (some over 15 degrees), ravines and gullies, large scrub oaks, brush, and rock outcrops (see Figure D-2). Some manmade obstacles were emplaced for experiments. The smallest obstacles that could be reliably detected were on the order of 2 feet to 3 feet in height. On occasion, the vehicle would approach and detect team members and maneuver to avoid them. The vehicle reached speeds of 3.5 to 5 km/h9 and completed about 75 percent of the traverses.10 These speeds were enabled by the Hughes architecture, which tightly coupled the path planner11 to perception and allowed the vehicles to be more reactive in avoiding nontraversable terrain.

This architecture was the first for a vehicle operating off-road that was influenced by the reactive paradigm of Arkin (1987), Brooks (1986), and Payton (1986). The reactive paradigm was a response to the difficulties in machine vision and in planning that caused robots to operate slowly. Brooks reasoned that insects and some small animals did very well without much sensor data processing or planning. He was able to create some small robots in which sensor outputs directly controlled simple behaviors;12 there was no planning and the robot maintained no external representation of its environment. More complex behaviors arose as a consequence of the robot’s interaction with its environment. While these reactive approaches produced rapid response, because they eliminated both planning and most sensor processing, they did not easily scale to more complex goals. A third paradigm then arose that borrowed from both the deliberative and the reactive paradigms. Called the hybrid deliberative/reactive paradigm, it retained planning but used the simple reflexive behaviors as plan primitives. The planner scheduled sequences of these to achieve higher-level goals. Sensor data was provided directly to these behaviors as in the reactive architecture but was also used to construct a global representation for the planner to use in reasoning about higher-level goals.

In a hybrid architecture, the highest level of planning may operate on a cycle time of minutes while the lowest-level sensor-behavior loops may cycle at several hertz. These lower-level loops are likely hard-wired to ensure high-speed operation. At the lowest level, multiple behaviors will be active simultaneously, for example, road-edge following and obstacle avoidance. An arbitration mechanism is used to control execution precedence when behaviors conflict.
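As a rough illustration of arbitration between simultaneously active behaviors, the Python sketch below uses fixed-priority selection. This is only one possible scheme and is not claimed to be the mechanism used on the ALV; the behavior names, sensor keys, gains, and the 5-meter safety range are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Behavior:
    name: str
    priority: int  # larger value wins; the ordering itself is an assumption
    propose: Callable[[dict], Optional[float]]  # steering command, or None if inactive

def arbitrate(behaviors, sensors):
    """Return (name, command) from the highest-priority behavior that proposes one."""
    for behavior in sorted(behaviors, key=lambda b: b.priority, reverse=True):
        command = behavior.propose(sensors)
        if command is not None:
            return behavior.name, command
    return "idle", 0.0

def follow_road_edge(sensors):
    # Steer proportionally to the lateral offset from the road edge (illustrative gain).
    return -0.5 * sensors["edge_offset_m"]

def avoid_obstacle(sensors):
    # Proposes a command only when an obstacle is inside the hypothetical safety range.
    if sensors["obstacle_range_m"] < 5.0:
        return 1.0 if sensors["obstacle_bearing_rad"] <= 0 else -1.0
    return None

BEHAVIORS = [
    Behavior("road_edge", priority=1, propose=follow_road_edge),
    Behavior("avoid_obstacle", priority=2, propose=avoid_obstacle),
]
```

With no obstacle nearby, `arbitrate(BEHAVIORS, ...)` returns the road-edge command; when an obstacle enters the safety range, the avoidance behavior takes precedence. Other schemes, such as blending or voting over candidate commands, would fit the same interface.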

The ALV program demonstrated that architectures do matter, that they are a key to performance, and that they affect how perception is done. Without the hybrid deliberative/reactive architecture, UGVs would not perform at today’s level. There is still room for improvement within the paradigm.

In the ALV program, the cross-country routes were carefully selected to exclude obstacles smaller than those that could be detected. There were no surprises that could endanger the vehicle or operators. Demo II, to be described subsequently, and Demo III (see Appendix C) were also scenario driven, with obstacles selected to be detectable. The emphasis in these programs was on tuning performance to meet the demonstration objectives of scenarios that describe plausible military missions (see, for example, Spofford et al., 1997). Hence, the cross-country speeds reported must be viewed as performance obtained in those specific scenarios under controlled conditions, not as indicative of performance that might be obtained in unknown terrain. For the most part, such experiments remain to be done.

Several other organizations were operating robotic vehicles outdoors during roughly the same period as the ALV (i.e., mid-1980s to about 1990). These included CMU, the Jet Propulsion Laboratory (JPL), and the Bundeswehr University in Munich. Much of the CMU work, by design, was incorporated into the ALV’s software. There was, however, one significant advance that was not incorporated into the ALV because of timing. It was to have a most singular impact on perception, initially for on-road navigation and later for off-road operation. This was the reappearance, after nearly a 20-year absence from mainstream perception research, of the artificial neural network: not the two-layer Perceptron, for the reasons detailed by Minsky and Papert (1969), but a more capable three-layer system.13 The reason for the renaissance was the invention of a practical training procedure for these multilayer networks. This opened the way for a surge of applications; one of them was ALVINN (autonomous land vehicle in a neural network), and it would increase on-road speeds from 20 km/h in 1988 to over 80 km/h in 1991.14 ALVINN (Pomerleau, 1992) was motivated by the desire to reduce the hand tuning of perception algorithms and create a system that could robustly handle a wide variety of situations. It was a perception system that learned to control a vehicle by watching a person drive, and it learned for itself what features were important in different driving situations.

The two other organizations also made noticeable advances: JPL developed the first system to drive autonomously across outdoor terrain using a range map derived from stereo rather than a laser scanner (Wilcox et al., 1987). The system required about 2.5 hours of computation for every 6 meters of travel. The Bundeswehr University operated at the other extreme, achieving speeds of 100 km/h using simple detectors for road edges and other features and taking advantage of the constraints of the autobahn to simplify the perception problem (Dickmanns and Zapp, 1987). It was highly tuned and could be confused by road imperfections, shadows, and puddles or dirt that obscured the road edge or road markings. It did not do obstacle detection. When the program ended in 1989, the system was using 12 special purpose computers (10 MIPS each), each tracking a single image patch.15

By 1990, JPL had developed near real-time stereo algorithms (Matthies, 1992) producing range data from 64 × 60 pixel images at 0.5-Hz frame rate. This vision system was demonstrated as part of the Army Demo I program16 in 1992 showing automatic obstacle detection and safe stopping (not avoidance) at speeds up to 10 mph. This stereo system used area correlation and produced range data at every pixel in the image, analogous to a LADAR. It was therefore appropriate for use in generic off-road terrain. This was a new paradigm for stereo vision algorithms. Up to that time the standard wisdom in the computer vision community was that area-based algorithms were too slow and too unreliable for real-time operation; most work up to that point had used edge detection, which gave relatively sparse depth maps not well suited to off-road navigation. This development spurred the use of stereo vision at other institutions and in the Demo II program, which followed shortly thereafter.

By the early 1990s, the state-of-the-art perception systems used primarily a single color video camera and a laser scanner. Computing power had risen from about the 1/2 MIPS of the Stanford Cart to about 100 MIPS at the end of the ALV program in 1989. Algorithms had increased in number and complexity. The ALV used about 1,000,000 executable source lines of code; about 500,000 were allocated to mobility. Road-following speeds increased by about a factor of 10⁴ from 1980 to 1989 (meters per hour to tens of kilometers per hour); this correlated with the increase in computing power. Road following was restricted to daylight, dry conditions, no abrupt changes in curvature, and good edge definition. ALVINN showed how to obtain the more general capability that had been lacking, and the Bundeswehr’s work hinted at further gains that might be made through distributed processing.

Cross-country mobility was mostly limited to traverses of relatively flat, open terrain with large, isolated obstacles and in daylight, dry conditions. Feature detection was primarily limited to detecting isolated obstacles on the order of 2 to 3 feet high or larger. There was a limited capability to classify terrain (e.g., into traversable or intraversable), but performance was strongly situation dependent and required considerable hand tuning. The detection of negative obstacles (e.g., ditches or ravines) was shown to be a major problem, and no work had been done on identifying features of tactical significance (e.g., tree lines). The image understanding community was vigorously working on approaches to obtain depth maps from stereo imagery (e.g., Barnard, 1989; Horaud and Skordas, 1989; Lim, 1987; Medioni and Nevatia, 1985; Ohta and Kanade, 1985). However, the computational requirements of most of these approaches placed severe limits on the speed of vehicles using stereo for cross-country navigation. The simpler JPL area-correlation algorithm provided a breakthrough and would enable the use of stereo vision in subsequent UGV programs. Robust performance was emerging as an important goal as vehicles began to operate under more demanding conditions; a limit was being reached on how much hand tuning could be done to cope with each change in the environment (e.g., changes in visibility or vegetation background).

THE DEMO II ERA 1992–1998

FIGURE D-3 The Demo II vehicle and environment. Courtesy of John Spofford, SAIC.

The next major advance in the state of the art was driven by the DARPA/OSD-sponsored UGV/Demo II program17 that began in 1992. The emphasis in the Demo II program was on cross-country A-to-B traverses that might be typical of those required by a military scout mission, including some road following on unimproved roads. The program integrated research from a number of organizations. For a detailed description of the program and the technical contributions of the participants see Firschein and Strat (1997). The Demo II vehicles (see Figure D-3) were HMMWVs equipped with stereo black-and-white video cameras on a fixed mount for obstacle avoidance,18 and a single color camera on a pan-and-tilt mount for road following. Experiments were conducted with stereo FLIR (3–5 µm) to demonstrate the feasibility of nighttime obstacle detection, but the results were not used to control the vehicle. Some target detection and classification experiments were conducted with LADAR, but it was not used to control the vehicle. The program manager gave first priority to improving the state of the art of vehicle navigation using passive sensors.

The ALVINN road-following system was shown to be robust in Demo II. Neural networks trained on paved roads worked well on dirt roads. Speeds up to 15 mph were achieved on dirt roads. ALVINN was also extended to detect road intersections (Jochem and Pomerleau, 1997) but encountered difficulties at speeds much greater than 5 mph. The researchers concluded that active vision was likely required. The value of active vision was shown in a subsequent experiment (Panacea) by Sukthankar et al. (1993). In this experiment, ALVINN’s output was used to pan the camera to keep the road in view and maintain important features within the field of view. The planner could also provide an input. Active vision allowed the CMU Navlab to negotiate a sharp fork it could not otherwise negotiate with a fixed camera.

Significant road curvature and vertical changes were problems, particularly with a fixed camera. There was a problem with a network trained on a road with tall grass on either side when the grass was cut and had turned brown. Low contrast was also a problem (e.g., a brown dirt road against brown grass). A solution for low-contrast situations is to use other features such as texture.

A radial basis function neural network road follower (ROBIN) was developed by Martin Marietta (Rosenblum, 2000). It was trained using exemplar or template road images. Unlike ALVINN, the input to the neural network was not a real-time road image but a measure of how close the actual images were to each of the templates. It could drive on a diverse set of roads and reached speeds of up to 10 mph on ill-defined dirt roads with extreme geometries.
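The idea of feeding a network with template similarities rather than raw pixels can be sketched in a few lines of Python. This is a generic radial basis function construction, not ROBIN's actual design: the Gaussian kernel on Euclidean distance, the width `sigma`, the normalized weighted output, and the flattened-list image format are all assumptions.

```python
import math

def rbf_features(image, templates, sigma=10.0):
    """Return one similarity score in (0, 1] per template for a flattened image."""
    features = []
    for template in templates:
        # Squared Euclidean distance between the image and this template.
        d2 = sum((a - b) ** 2 for a, b in zip(image, template))
        # Gaussian kernel: 1.0 for an exact match, approaching 0 for distant images.
        features.append(math.exp(-d2 / (2.0 * sigma ** 2)))
    return features

def steering(image, templates, weights, sigma=10.0):
    """Blend per-template steering weights by normalized similarity."""
    features = rbf_features(image, templates, sigma)
    total = sum(features)
    if total == 0.0:
        return 0.0  # image resembles no template (all kernels underflowed)
    return sum(w * f for w, f in zip(weights, features)) / total
```

In this form, training reduces to choosing the templates and the per-template weights, which is one reason template-based inputs can be attractive when labeled driving data is limited.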

Three obstacle detection and avoidance systems were evaluated: GANESHA, an earlier project (Langer and Thorpe, 1997), Ranger (Kelly and Stentz, 1997), and SMARTY (Stentz and Hebert, 1997). Each used somewhat different criteria for obstacle classification and each used the resultant obstacle map in different ways to generate steering

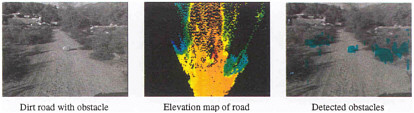

FIGURE D-4 Stereo obstacle detection results. Courtesy of Larry Matthies, Jet Propulsion Laboratory.

commands. SMARTY, with a simpler classification scheme, was selected.

SMARTY was a point-based system for rapidly processing range data (either from a laser scanner or stereo). It updated obstacle maps, on-the-fly, as soon as data became available, rather than waiting until an entire frame or image was acquired and then batch processing all the data at once. In tests with the ERIM scanner it reduced obstacle detection time from about 700 msec to about 100 msec (Hebert, 1997).

The real significance of SMARTY was the introduction of a new paradigm for data processing: time stamp each data point as it is generated and use vehicle state data (e.g., x, y, z, roll, pitch and yaw)19 to locate that point in space. This provides a natural way to fuse data from alternative sensors or from a single sensor over time.
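The time-stamp-and-locate idea can be sketched as follows. This is not SMARTY's code: the pose log format, the linear interpolation between pose samples, and the yaw-pitch-roll (Z-Y-X) rotation convention are assumptions made for the example, and the sensor is assumed to sit at the vehicle origin.

```python
import math
from bisect import bisect_left

def pose_at(t, log):
    """Interpolate a pose (x, y, z, roll, pitch, yaw) from a time-sorted list of (time, pose)."""
    times = [entry[0] for entry in log]
    i = bisect_left(times, t)
    if i == 0:
        return log[0][1]
    if i == len(log):
        return log[-1][1]
    (t0, p0), (t1, p1) = log[i - 1], log[i]
    a = (t - t0) / (t1 - t0)
    # Naive linear interpolation; assumes angles do not wrap between samples.
    return tuple(u + a * (v - u) for u, v in zip(p0, p1))

def to_world(point, pose):
    """Rotate a vehicle-frame point by Rz(yaw) * Ry(pitch) * Rx(roll), then translate."""
    px, py, pz = point
    x, y, z, roll, pitch, yaw = pose
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    wx = x + cy * cp * px + (cy * sp * sr - sy * cr) * py + (cy * sp * cr + sy * sr) * pz
    wy = y + sy * cp * px + (sy * sp * sr + cy * cr) * py + (sy * sp * cr - cy * sr) * pz
    wz = z - sp * px + cp * sr * py + cp * cr * pz
    return wx, wy, wz

def locate(stamped_point, log):
    """Place one time-stamped range point in the world frame as soon as it arrives."""
    t, point = stamped_point
    return to_world(point, pose_at(t, log))
```

Because every point carries its own time stamp, points from different sensors, or from the same sensor at different times, end up in one common frame without waiting for a complete image.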

Perhaps the greatest change in the perception system for Demo II was the use of stereo images to provide range data. Stereo vision was used in Demo I for obstacle detection at up to 10 mph. Demo II added obstacle avoidance, added off-board demonstrations of night stereovision with 3–5 µm FLIR, and increased the speed and resolution of the system to 256 × 80 pixel range images at a 1.4-Hz frame rate. This was accomplished through refinement of the algorithms and advances in processor speed.

The stereo algorithms are described in Matthies et al. (1996). They worked particularly on ways to reduce false positives or false matches (i.e., the appearance of false obstacles), which had limited the usefulness of stereo obstacle detection. A typical result from Matthies et al. (1996) is shown in Figure D-4. Note that the obstacle detection algorithm used only geometric criteria; hence, bushes on the left and right were also classified as obstacles. They reported speeds of 5 km/h to 10 km/h over relatively flat terrain with scattered positive obstacles 0.5 meters or higher embedded in 12-inch grass cover. During Demo II(B) the stereo system was integrated with the SMARTY obstacle avoidance system and reached speeds up to 10.8 km/h on grassy terrain with 1-meter or greater positive obstacles.

Obstacle detection performance for Demo II is described in a paper by Spofford and Gothard (1994). Their analysis assumes 128 × 120 stereo depth information from the JPL system used in SMARTY. The system could detect an isolated, positive 8-inch obstacle at about 6 meters and a 16-inch obstacle at about 10 meters. These distances correspond to about 3 mph and 8 mph respectively for the HMMWV. Obstacles less than 8 inches could not be reliably detected. In some experiments 1.5-meter-high fake rocks constructed of foam were used. These could be detected at about 40 meters and allowed for a speed of about 20 mph.20
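One way to read the distance and speed pairs above is as a time budget: how long the vehicle has, at the quoted speed, before it reaches the point where the obstacle was first detected. The source does not state the model that Spofford and Gothard used to relate distance to speed, so the short Python calculation below is only arithmetic on the quoted numbers, assuming constant speed.

```python
MPH_TO_MPS = 0.44704  # meters per second in one mile per hour

def time_to_reach(distance_m, speed_mph):
    """Seconds until a vehicle at constant speed covers the detection distance."""
    return distance_m / (speed_mph * MPH_TO_MPS)

# (detection distance in meters, quoted speed in mph) taken from the text above.
CASES = {
    "8-inch obstacle": (6.0, 3.0),
    "16-inch obstacle": (10.0, 8.0),
    "1.5-meter foam rock": (40.0, 20.0),
}

for label, (distance_m, speed_mph) in CASES.items():
    print(f"{label}: {time_to_reach(distance_m, speed_mph):.1f} s")
```

Under this reading the budget is roughly 3 to 4.5 seconds in each case, within which perception latency, planning, and braking or steering would all have to complete.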

Demo II was remarkable in its demonstration of stereovision as a practical means for obstacle detection, but it came at a cost. The perception system required higher resolution to detect smaller obstacles: the same number of vertical pixels (5-10) must be placed on a smaller surface. The field of view was therefore limited21 and there was a real possibility that the vehicle might end up in cul-de-sacs; the vehicle lacked perspective. It avoided local obstacles in a way that might have been globally sub-optimal. There are two solutions: Use multiple cameras with different fields of view or use active vision where orientation is controlled to scan over a wider area.
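The trade-off between obstacle size and field of view follows from simple geometry, sketched below in Python under a small-angle approximation. The 40-degree vertical field of view is a hypothetical value chosen for illustration; the source gives the image size but not the camera optics.

```python
import math

def pixels_on_target(height_m, range_m, vfov_deg, rows):
    """Approximate number of image rows spanned by an obstacle of the given height."""
    # Angle subtended by one pixel row (small-angle approximation).
    ifov_rad = math.radians(vfov_deg) / rows
    # Angle subtended by the obstacle, divided by the per-row angle.
    return height_m / (range_m * ifov_rad)

# Hypothetical example: 120 image rows spread over a 40-degree vertical field of view.
print(round(pixels_on_target(0.2, 6.0, 40.0, 120), 1))  # 0.2-meter obstacle at 6 meters
print(round(pixels_on_target(0.1, 6.0, 40.0, 120), 1))  # half the height, half the rows
print(round(pixels_on_target(0.1, 6.0, 20.0, 120), 1))  # narrower view restores the count
```

Halving the obstacle height halves the rows on target, and with a fixed number of image rows the count can only be recovered by narrowing the field of view, which is the limitation the paragraph describes.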

Negative obstacles were successfully detected with algorithms that looked for large discontinuities in range. Any solution was still limited by viewing geometry. No capability was demonstrated in Demo II for detecting thin obstacles such as fences, or for use in other situations where geometry-based methods fail.
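A range-discontinuity test of the kind described can be sketched for a single image column. This is an illustrative simplification, not the Demo II algorithm: the fixed jump threshold, the bottom-to-top ordering of samples, and the use of `None` for missing range values are assumptions.

```python
def find_range_gaps(ranges, min_jump=1.5):
    """Return indices i where range increases by more than min_jump between samples i and i+1.

    `ranges` holds one image column ordered from the bottom row (nearest ground)
    upward, in meters; None marks pixels with no valid range.
    """
    gaps = []
    for i in range(len(ranges) - 1):
        near, far = ranges[i], ranges[i + 1]
        if near is None or far is None:
            continue  # cannot compare across missing data
        if far - near > min_jump:
            gaps.append(i)
    return gaps
```

For example, `find_range_gaps([4.0, 4.4, 4.9, 5.5, 8.2, 8.9])` flags index 3, where the line of sight skips from the near lip of a ditch to its far side. A fixed threshold is crude because the spacing between successive ground samples grows with distance even on flat terrain, which is one aspect of the viewing-geometry limit noted above.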

There was no on-vehicle demonstration of terrain classification as part of the Demo II program. However, some research on doing terrain classification in real time was done by Matthies et al. (1996). Prior work had been successful in doing classification but it took on the order of tens of minutes per scene. Matthies et al. looked for observable properties that could be used with very simple and fast algorithms. They used ratios of spectral bands in the red, green, and near infrared (0.8 µm) provided by a color camera with a rotating filter wheel that was not sufficiently robust for on-vehicle use. At that time, no camera was available that could get the required bands simultaneously. This has since changed. With this approach, they were able to label regions as vegetation or nonvegetation at 10 frames per second. Near infrared and RGB together performed better than RGB alone. They did not extend the classification further (e.g., for vegetation, was it grass, trees, or bushes; for nonvegetation, was it soil, rocks, or roads?). Similar work was reported by Davis et al. (1995). They used six spectral bands in the red to the near infrared and obtained classification rates of 10 fields per second. Their conclusions were the same as those of Matthies et al., namely, that using near infrared and RGB gave better results than RGB alone.
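A band-ratio classifier of this general kind is simple enough to sketch in Python. The code is not the Matthies et al. algorithm: it uses only the near-infrared and red bands, and the threshold of 1.5 is a hypothetical value. It relies on the well-known tendency of green vegetation to reflect strongly in the near infrared while absorbing red light.

```python
def label_vegetation(red, nir, ratio_threshold=1.5):
    """Return a grid of booleans: True where NIR / red exceeds the threshold.

    `red` and `nir` are equally sized lists of rows of non-negative intensities.
    """
    labels = []
    for red_row, nir_row in zip(red, nir):
        # Compare n > threshold * r rather than n / r > threshold to avoid dividing by zero.
        labels.append([n > ratio_threshold * r for r, n in zip(red_row, nir_row)])
    return labels
```

A per-pixel comparison like this needs no neighborhood or texture analysis, which suggests why such tests could run at frame rate on the processors of the period, and also why they stop at a vegetation or nonvegetation label.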

By the end of the Demo II era, road following had been demonstrated on a wide variety of roads in daylight and in good weather. The potential to use FLIR for road following at night had been demonstrated. There were still issues with complex intersections and abrupt changes in curvature but approaches to both had been demonstrated. Obstacle avoidance was not solved, but performance had improved on isolated, positive obstacles. Smaller objects could be detected faster and at greater distance. Requirements were better understood as were the strengths and weaknesses of different sensors and algorithms. The detection of negative obstacles had improved but was still a problem. The use of simple geometric criteria to classify objects as obstacles had been fully exploited. It resulted in many false positives as such traversable features as clumps of grass were classified as obstacles. Additional criteria such as material type would have to be used to improve performance.

The goal of classifying terrain on the move into traversable and intraversable regions considering vehicle geometry and kinematics was still in the future although much fundamental work had been accomplished. There was modest progress on real-time classification of terrain into categories such as vegetated or not but it fell short of what was needed both to support obstacle avoidance and tactical behaviors. Progress was made on characterizing surface roughness based on inertial measurement data. To obtain a predictive capability would require texture analysis of imaging sensor data. This was difficult to do in real time. There was no progress on identifying tactically significant features, such as tree lines that fell beyond the nominal 50-meter to 100-meter perception horizon. There was, however, explicit acknowledgment that extending the perception horizon was an important problem. Most of the work continued to be under daylight, good weather conditions with no significant experiments at night or with obscurants. No research was focused on problems unique to operations in urban terrain, arguably the most complicated environment. Leader-follower operation was demonstrated in Demo II, but it was based on GPS, not perception.

TABLE D-1 Performance Trends for ALV and Demo II

Perception continued to rely primarily on video cameras. Some experiments were done with FLIR. LADAR continued in use elsewhere but was not used to enable autonomous mobility in Demo II. Stereo was shown to be a practical way to detect obstacles. There were situations, such as maneuvering along tree lines or in dense obstacle fields, where LADAR was preferred. There were experiments using simple color ratios for terrain classification but there was no work to more fully exploit spectral differences for material classification (e.g., multiband cameras). There was no work in sensor data fusion to improve performance or robustness. Major gains in robustness in road following were achieved with machine learning, but it was not used more generally.

Table D-1 summarizes the state of the art at the end of this period of research and contrasts it with the end of the ALV program.

REFERENCES

Arkin, R.C. 1987. Motor schema-based navigation for a mobile robot: An approach to programming by behavior. Pp. 264–271 in Proceedings of the 1987 IEEE International Conference on Robotics and Automation. New York, N.Y.: Institute of Electrical and Electronics Engineers, Inc.

Ballard, D., and C. Brown. 1982. Computer Vision. Englewood Cliffs, N.J.: Prentice Hall, Inc.

Barnard, S.T. 1989. Stochastic stereo matching over scale. International Journal of Computer Vision 3(1): 17–32.

Brooks, R.A. 1986. A robust layered control system for a mobile robot. IEEE Journal of Robotics and Automation 2(1): 14–23.

Canny, J.F. 1983. Finding Edges and Lines in Images, TR-720. Cambridge, Mass.: Massachusetts Institute of Technology Artificial Intelligence Laboratory.

DARPA (Defense Advanced Research Projects Agency). 1983. Strategic Computing—New Generation Technology: A Strategic Plan for Its Development and Application to Critical Problems in Defense, October 28. Arlington, Va.: Defense Advanced Research Projects Agency.

Davis, I.L., A. Kelly, A. Stentz, and L. Matthies. 1995. Terrain typing for real robots. Pp. 400–405 in Proceedings of the Intelligent Vehicles ’95 Symposium. Piscataway, N.J.: Institute of Electrical and Electronics Engineers, Inc.

Davis, L.S., D. Dementhon, R. Gajulapalli, T.R. Kushner, J. LeMoigne, and P. Veatch. 1987. Vision-based navigation: A status report. Pp. 153–169 in Proceedings of the Image Understanding Workshop. Los Altos, Calif.: Morgan Kaufmann Publishers.

Dickmanns, E.D., and A. Zapp. 1986. A curvature-based scheme for improving road vehicle guidance by computer vision. Pp. 161–168 in Mobile Robots, Proceedings of SPIE Volume 727. W.J. Wolfe and N. Marquina, eds. Bellingham, Wash.: The International Society for Optical Engineering.

Dickmanns, E.D., and A. Zapp. 1987. Autonomous high speed road vehicle guidance by computer vision. Pp. 221–226 in Automatic Control—World Congress, 1987: Selected Papers from the 10th Triennial World Congress of the International Federation of Automatic Control. Munich, Germany: Pergamon.

Dunlay, R.T., and D.G. Morgenthaler. 1986. Obstacle avoidance on roadways using range data. Pp. 110–116 in Mobile Robots, Proceedings of SPIE Volume 727. W.J. Wolfe and N. Marquina, eds. Bellingham, Wash.: The International Society for Optical Engineering.

Firschein, O., and T.M. Strat. 1997. Reconnaissance, Surveillance, and Target Acquisition for the Unmanned Ground Vehicle: Providing Surveillance “Eyes” for an Autonomous Vehicle. San Francisco, Calif.: Morgan Kaufmann Publishers.

Fischler, M., and O. Firschein. 1987. Readings in Computer Vision. San Francisco, Calif.: Morgan Kaufmann Publishers.

Hebert, M. 1990. Building and navigating maps of road scenes using active range and reflectance data. Pp. 117–129 in Vision and Navigation: The Carnegie Mellon Navlab. C.E. Thorpe, ed. Boston, Mass.: Kluwer Academic Publishers.

Hebert, M.H. 1997. SMARTY: Point-based range processing for autonomous driving. Pp. 87–104 in Intelligent Unmanned Ground Vehicles. M.H. Hebert, C. Thorpe, and A. Stentz, eds. New York, N.Y.: Kluwer Academic Publishers.

Horaud, R., and T. Skordas. 1989. Stereo correspondence through feature grouping and maximal cliques. IEEE Transactions on Pattern Analysis and Machine Intelligence 11(11): 1168–1180.

Jochem, T., and D. Pomerleau. 1997. Vision-based neural network road and intersection detection. Pp. 73–86 in Intelligent Unmanned Ground Vehicles. M.H. Hebert, C. Thorpe, and A. Stentz, eds. Boston, Mass.: Kluwer Academic Publishers.

Kelly, A., and A. Stentz. 1997. Minimum throughput adaptive perception for high-speed mobility. Pp. 215–223 in IROS ’97, Proceedings of the 1997 IEEE/RSJ International Conference on Intelligent Robots and Systems: Innovative Robotics for Real-World Applications. New York, N.Y.: Institute of Electrical and Electronics Engineers, Inc.

Langer, D., and C.E. Thorpe. 1997. Sonar-based outdoor vehicle navigation. Pp. 159–186 in Intelligent Unmanned Ground Vehicles: Autonomous Navigation Research at Carnegie Mellon. M. Hebert, C.E. Thorpe, and A. Stentz, eds. Boston, Mass.: Kluwer Academic Publishers.

Laws, K.I. 1982. The Phoenix Image Segmentation System: Description and Evaluation, Technical Report 289, December. Menlo Park, Calif.: SRI International.

Lim, H.S. 1987. Stereo Vision: Structural Correspondence and Curved Surface Reconstruction, Ph.D. Dissertation. Stanford, Calif.: Stanford University.

Matthies, L. 1992. Stereo vision for planetary rovers: Stochastic modeling to near real-time implementation. International Journal of Computer Vision 8(1).

Matthies, L., A. Kelly, T. Litwin, and G. Tharp. 1996. Obstacle detection for unmanned ground vehicles: A progress report. Pp. 475–486 in Robotics Research: Proceedings of the 7th International Symposium. G. Giralt and G. Hirzinger, eds. Heidelberg, Germany: Springer-Verlag.

Medioni, G., and R. Nevatia. 1985. Segment based stereo matching. Computer Vision, Graphics, and Image Processing 31(1): 2–18.

Minsky, M.L., and S. Papert. 1969. Perceptrons: An Introduction to Computational Geometry. Cambridge, Mass.: The MIT Press.

Moravec, H.P. 1977. Towards Automatic Visual Obstacle Avoidance. Proceedings of the 5th International Joint Conference on Artificial Intelligence.

Moravec, H.P. 1983. The Stanford CART and the CMU Rover. Proceedings of the IEEE 71(7): 872–884.

Moravec, H.P. 1999. Robot: Mere Machine to Transcendent Mind. New York, N.Y.: Oxford University Press.

Nalwa, V. 1993. A Guided Tour of Computer Vision. Chicago, Ill.: R.R. Donnelley & Sons Company.

Nilsson, N.J. 1984. Shakey the Robot, Technical Report 323, April. Menlo Park, Calif.: SRI International.

Ohlander, R., K. Price, and D.R. Reddy. 1978. Picture segmentation using a recursive region splitting method. Computer Graphics and Image Processing 8(3): 313–333.

Ohta, Y. 1985. Knowledge-Based Interpretation of Outdoor Color Scenes. Boston, Mass.: Pitman.

Ohta, Y., and T. Kanade. 1985. Stereo by intra-scanline and inter-scanline search using dynamic programming. IEEE Transactions on Pattern Analysis and Machine Intelligence 7(2): 139–154.

Olin, K.E., F.M. Vilnrotter, M.J. Daily, and K. Reiser. 1987. Developments in knowledge-based vision for obstacle detection and avoidance. Pp. 78–86 in Proceedings of the Image Understanding Workshop. Los Altos, Calif.: Morgan Kaufmann Publishers.

Olin, K.E., and D.Y. Tseng. 1991. Autonomous cross-country navigation—an integrated perception and planning system. IEEE Expert—Intelligent Systems and Their Applications 6(4): 16–32.

Payton, D.W. 1986. An architecture for reflexive autonomous vehicle control. Pp. 1838–1845 in Proceedings of the IEEE Robotics and Automation Conference. New York, N.Y.: Institute of Electrical and Electronics Engineers, Inc.

Pomerleau, D.A. 1992. Neural Network Perception for Mobile Robot Guidance, Ph.D. thesis. Pittsburgh, Pa.: Carnegie Mellon University.

Roberts, L. 1965. Machine perception of three-dimensional solids. Pp. 159–197 in Optical and Electro-optical Information Processing. J. Tippett and coworkers, eds. Cambridge, Mass.: MIT Press.

Rosenblum, M. 2000. Neurons That Know How To Drive. Available online at <http://www.cis.saic.com/projects/mars/pubs/crivs081.pdf> [August 6, 2002].

Rosenfeld, A. 1984. Image analysis: Problems, progress, and prospects. Pattern Recognition 17(1): 3–12.

Sharma, U.K., and L.S. Davis. 1986. Road following by an autonomous vehicle using range data. Pp. 169–179 in Mobile Robots, Proceedings of SPIE Volume 727. W.J. Wolfe and N. Marquina, eds. Bellingham, Wash.: The International Society for Optical Engineering.

Spofford, J.R., and B.M. Gothard. 1994. Stopping distance analysis of ladar and stereo for unmanned ground vehicles. Pp. 215–227 in Mobile Robots IX, Proceedings of SPIE Volume 2352. W.J. Wolfe and W.H. Chun, eds. Bellingham, Wash.: The International Society for Optical Engineering.

Spofford, J.R., S.H. Munkeby, and R.D. Rimey. 1997. The annual demonstrations of the UGV/Demo II program. Pp. 51–91 in Reconnaissance, Surveillance, and Target Acquisition for the Unmanned Ground Vehicle: Providing Surveillance “Eyes” for an Autonomous Vehicle. O. Firschein and T.M. Strat, eds. San Francisco, Calif.: Morgan Kaufmann Publishers.

Stentz, A., and M. Hebert. 1997. A navigation system for goal acquisition in unknown environments. Pp. 227–306 in Intelligent Unmanned Ground Vehicles: Autonomous Navigation Research at Carnegie Mellon. M. Hebert, C.E. Thorpe, and A. Stentz, eds. Boston, Mass.: Kluwer Academic Publishers.

Sukthankar, R., D. Pomerleau, and C. Thorpe. 1993. Panacea: An active controller for the ALVINN autonomous driving system, CMU-RI-TR-93-09. Pittsburgh, Pa.: The Robotics Institute, Carnegie Mellon University.

Turk, M.A., D.G. Morgenthaler, K.D. Gremban, and M. Marra. 1988. VITS—a vision system for autonomous land vehicle navigation. IEEE Transactions on Pattern Analysis and Machine Intelligence 10(3): 342–361.

Wilcox, B.H., D.B. Gennery, A.H. Mishkin, B.K. Cooper, T.B. Lawton, N.K. Lay, and S.P. Katzmann. 1987. A vision system for a Mars rover. Pp. 172–179 in Mobile Robots II, Proceedings of SPIE Volume 852. W.H. Chun and W.J. Wolfe, eds. Bellingham, Wash.: The International Society for Optical Engineering.