3

Framework for Evaluating Curricular Effectiveness

In this chapter, we present a framework for use in evaluating mathematics curricula. By articulating a framework based on what an effective evaluation could encompass, we provide a means of reviewing the quality of evaluations and identifying their strengths and weaknesses. The framework design was shaped by the testimony of participants in the two workshops held by the committee, and by a first reading of numerous examples of studies.

The framework’s purpose is also to provide various readers with a consistent and standard frame of reference for defining what is meant by a scientifically valid evaluation study for reviewing mathematics curriculum effectiveness. In addition to providing readers with a means to critically examine the evaluations of curricular materials, the framework should prove useful in guiding the design of future evaluations.

The framework is designed to be comprehensive enough to apply to evaluations from kindergarten through 12th grade and flexible enough to apply to the different types of curricula included in this review.

With the framework, we established the following description of and definition for curricular effectiveness that is used in the remainder of this report:

Curricular effectiveness is defined as the extent to which a curricular program and its implementation produce positive and curricularly valid outcomes for students, in relation to multiple measures of students’ mathematical proficiency, disaggregated by content strands and disaggregated by effects on subpopulations of students, and the extent to which these effects can be convincingly or causally attributed to the curricular intervention through evaluation studies using well-conceived research designs. Describing curricular effectiveness involves the identification and description of a curriculum and its programmatic theory and stated objectives; its relationship to local, state, or national standards; subsequent scrutiny of its program contents for comprehensiveness, accuracy and depth, balance, engagement, and timeliness and support for diversity; and an examination of the quality, fidelity, and character of its implementation components.

Effectiveness can be defined in relation to the selected level of aggregation. A single study can examine whether a curricular program is effective (at some level and in some context), using the standards of “scientifically established as effective” outlined in this report. Such a study would be termed “a scientifically valid study.” Meeting these standards ensures the quality of the study, but a single, well-done study is not sufficient to certify the quality of a program. Conducting a set of studies using the multiple methodologies described in this report would be necessary to determine if a program can be called “scientifically established as effective.” Finally, across a set of curricula, one can also discern a similarity of approach, such as a “college preparation approach,” “a modeling and applications approach,” or a “skills-based, practice-oriented approach,” and it is conceivable that one could ask whether an approach is effective and, if so, identify an approach that is “scientifically established as effective.” The methodological differences among these levels of aggregation are critical to consider, and we address the potential impact of these distinctions in our conclusions.

Efficacy is viewed as considering issues of cost, timeliness, and resource availability relative to the measure of effectiveness. Our charge was limited to an examination of effectiveness; thus, we did not consider efficacy in any detail in this report.

Our framework merged approaches from method-oriented evaluation (Cook and Campbell, 1979; Boruch, 1997) that focus on issues of internal and external validity, attribution of effects, and generalizability, with approaches from theory-driven evaluations that focus on how these approaches interact with practices (Chen, 1990; Weiss, 1997; Rossi et al., 1999). This permitted us to consider the content issues of particular concern to mathematicians and mathematics educators, the implementation challenges requiring significant changes in practice associated with reform curricula, the role of professional development and teaching capacity, and the need for rigorous and precise measurement and research design.

We chose a framework that requires evaluations to meet the high standards of scientific research and to be fully dedicated to serving the information needs of program decision makers (Campbell, 1969; Cronbach, 1982; Rossi et al., 1999). In drawing conclusions on the quality of the corpus of evaluations, we demanded a high level of scientific “validity” and “credibility” because of the importance of this report to national considerations of policy. We further acknowledge other purposes for evaluation, including program improvement, accountability, cost-effectiveness, and public relations, but do not address these purposes within our defined scope of work. Furthermore, we recognize that at the local level, decisions are often made by weighing the “best available evidence” and considering the likelihood of producing positive outcomes in the particulars of context, time pressures, economic feasibility, and resources. For such purposes, some of the reported studies may be of sufficient applicability. Later in this section, we discuss these issues of utility and feasibility further and suggest ways to maintain adequate scientific quality while addressing them.

Before discussing the framework, we define the terms used in the study. There is ambiguity in the use of the term “curriculum” in the field (National Research Council [NRC], 1999a). In many school systems, “curriculum” is used to refer to a set of state or district standards that broadly outline expectations for the mathematical content topics to be covered at each grade level. In contrast, at the classroom level, teachers may select curricular programs and materials from a variety of sources that address these topics and call the result the curriculum. When a publisher or a government organization supports the development of a set of materials, they often use the term “curriculum” to refer to the physical set of materials developed across grade levels. Finally, the mathematics education community often finds it useful to distinguish among the intended curriculum, the enacted curriculum, and the achieved curriculum (McKnight et al., 1987). Furthermore, in the curriculum evaluation literature, some authors take the curriculum to be the physical materials and others take it to be the physical materials together with the professional development needed to teach the materials in the manner in which the author intended. Thus “curriculum” is used in multiple ways by different audiences.

We use the term “curriculum” or “curricular materials” in this report as follows:

A curriculum consists of a set of materials for use at each grade level, a set of teacher guides, and accompanying classroom assessments. It may include a listing of prescribed or preferred classroom manipulatives or technologies, materials for parents, homework booklets, and so forth. The curricula reviewed in this report are written by a single author or set of authors and published by a single publisher. They usually include a listing or mapping of the curricular objectives addressed in the materials in relation to national, state, or local standards or curricular frameworks.

We also considered the meaning of an evaluation of a curriculum for the purposes of this study. To be considered an evaluation, a curriculum evaluation study had to:

- Focus primarily on one of the curriculum programs or compare two or more curriculum programs under review;

- Use a methodology recognized by the fields of mathematics education, mathematics, or evaluation; and

- Study a major portion of the curriculum program under investigation.

A “major portion” was defined as at least one grade-level set of materials for studies of intended curricular programs and a significant piece (more than one unit) of curricular materials and a significant time duration of use (at least a semester) for studies of enacted curricular programs. Evaluation studies were also identified and distinguished from research studies by requiring evaluation studies to include statements about the effectiveness of the curriculum or suggestions for revisions and improvements. Further criteria for inclusion or exclusion were developed for each of the four classes of evaluation studies identified: content analyses, comparative analyses, case studies, and synthesis studies. These are described in detail in Chapters 4 through 6. Many formative, as opposed to summative, assessments were not included.

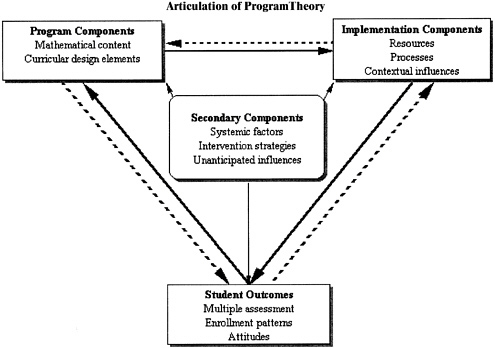

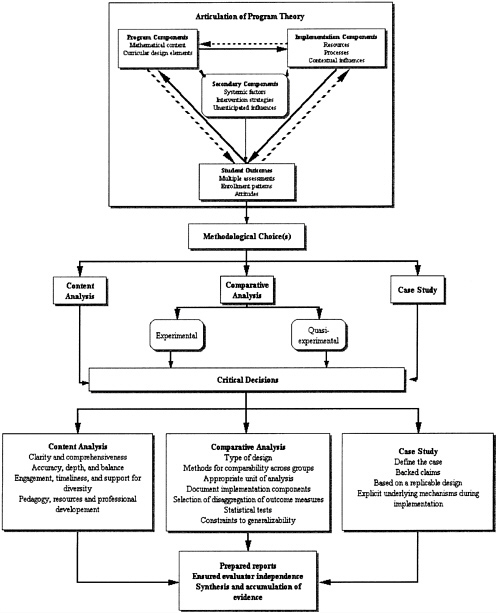

The framework we proposed consists of two parts: (1) the components of curricular evaluation (Figure 3-1), and (2) evaluation design, measurement, and evidence (Figure 3-2). The first part guides an evaluator in specifying the program under investigation, while the second part articulates the methodological design and measurement issues required to ensure adequate quality of evidence. Each of these two parts is described in more detail in this chapter.

The first part of the framework consists of primary and secondary components. The primary components are presented in Figure 3-1: program components, implementation components, and student outcomes. Secondary components of the framework include systemic factors, intervention strategies, and unanticipated influences.

The second part of the framework, evaluation design, measurement, and evidence, is divided into articulation of program theory, selection of research design and methodology, and other considerations.

FIGURE 3-1 Primary and secondary components of mathematics curriculum evaluation.

PRIMARY COMPONENTS

For each of the three major components (program, implementation, and student outcomes), we articulated a set of subtopics likely to need consideration.

Program Components

Examining the evaluation studies for their treatments of design elements was a way to consider explicitly the importance, quality, and sequencing of the mathematics content. Our first consideration was the major theoretical premises that differentiate among curricula. Variations among the evaluated curricula include the emphasis on context and modeling activities; the importance of data; the type and extent of explanations given; the role of technology; the importance of multiple representations and problem solving; the use and emphasis on deductive reasoning, inductive reasoning, conjecture, refutation, and proof; the relationships among the mathematical subfields such as algebra, geometry, and probability; and the focus on calculation, symbolic manipulations, and conceptual development. Views of learning and teaching, the role of practice, and the directness of instruction also vary among programs. It is important for evaluators to determine these differences and to design evaluations to assess the advantages and disadvantages of these decisions in relation to student learning.

FIGURE 3-2 Framework for evaluating curricular effectiveness.

At the heart of evaluating the quality of mathematics curriculum materials is the analysis of the mathematical content that makes up these materials. We call this “content analysis” (Box 3-1). A critical area of debate in conducting a content analysis is how to assess the trade-offs among the various choices. Curricular programs must be carried out within the constraints of academic calendars and school resources, so decisions on priorities in curricular designs have real implications for what is subsequently taught in classrooms. An analysis of content should be clear and specific as to what trade-offs are made in curricular designs.

A second source of controversy arises from a debate over the value of conducting content analysis in isolation from practice. Some claim that until one sees a topic taught, it is not really possible to specify what is learned (as argued by William McCallum, University of Arizona, and Richard Lehrer, Vanderbilt University, when they testified to the committee on September 18, 2002). In this sense, a content analysis would need to include an assessment of what a set of curricular tasks makes possible in a classroom as a result of the activity undertaken, and would depend heavily on the ability of the teacher to make effective use of these opportunities and to work flexibly with the curricular choices. This kind of content analysis is often a part of pilot testing or design experiments. Others prefer an approach to content analysis that is independent of pedagogy to ensure comprehensiveness, completeness, and accuracy of topic and to consider if the sequencing forms a coherent, logical, and age-appropriate progression. Both options provide valuable and useful information in the analysis of curricular effectiveness but demand very different methodologies.

BOX 3-1

- Listing of topics
- Sequence of topics
- Clarity, accuracy, and appropriateness of topic presentation
- Frequency, duration, pace, depth, and emphasis of topics
- Grade level of introduction
- Overall structure: integrated, interdisciplinary, or sequential
- Types of tasks and activities, purposes, and level of engagement
- Use of prior knowledge, attention to (mis)conceptions, and student strategies
- Reading level
- Focus on conceptual ideas and algorithmic fluency
- Emphasis on analytic/symbolic, visual, or numeric approaches
- Types and levels of reasoning, communication, and reflection
- Type and use of explanation
- Form of practice
- Approach to formalization
- Use of contextual problems and/or elements of quantitative literacy
- Use of technology or manipulatives
- Ways to respond to individual differences and grouping practices
- Formats of materials
- Types of assessment and relation to classroom practice

Another consideration might be the qualifications of the authors and their experience with school and collegiate mathematics. The final design element concerns the primary audience for curricular dissemination. One publisher indicated its staff would often make decisions on curricular design based on the expressed needs or preferences of state adoption boards, groups of teachers, or, in the case of home schooling, parents. Alternatively, a curriculum might be designed to appeal to a particular subgroup, such as gifted and talented students, or focus on preparation for different subsequent courses, such as physics or chemistry.

Implementation Components

Curricular programs are enacted in a variety of school settings. Curriculum designers consider these settings to various degrees and in various ways. For example, implementation depends heavily on the capacity of a school system to support and sustain the curriculum being adopted. This implies that a curricular program’s effectiveness depends in part on whether it is implemented adequately and how it fits within the grade-level band for which it is designed, as well as whether it fits with the educational contexts that precede or follow it.

Implementation studies have provided highly convincing evidence that implementation is complicated and difficult because curricula are enacted within varying social contexts. Factors such as participation in decision making, incentives such as funding or salaries, time availability for professional development, staff turnover or student attendance, interorganizational arrangements, and political processes can easily hinder or enhance implementation (Chen, 1990).

In evaluation studies, these issues are also referred to as process evaluation or program or performance monitoring. Implementation includes examining the congruity between the instruction to students and the goals of the program, whether the implemented curriculum is reaching all students, how well the system is organized and managed to deliver the curricular program, and the adequacy of the resources and support. Process evaluation and program and performance monitoring are elements of program evaluation that can provide essential data in judging the effectiveness of the program and in providing essential feedback to practitioners on program improvement (Rossi et al., 1999).

In the use of curricula in practice, many variations enter the process. We organized the factors in the implementation component into three categories: resource variables, process variables, and community/cultural influences. Examples of each are listed in Table 3-1.

Resource variables refer to the resources made available to assist in implementation. Process variables refer to the ways and means in which implementation activities are carried out, decisions are made, and information is analyzed on the practice and outcomes of teaching mathematics. Community and cultural factors refer to the social conditions, beliefs, and expectations held both implicitly and explicitly by participants at the site of adoption concerning learning, teaching, and assessing student work and opportunities.

We also identified a set of mediating factors that would be most likely to influence directly the quality and type of implementation.

Appropriate Assignment of Students

Decisions concerning student placement in courses often have strong implications for the success of implementation efforts and the distribution of effects across various student groups. Evaluations must carefully document and monitor the range of student preparation levels that teachers must serve, the advice and guidance provided to students and parents as to what curricular choices are offered, and the levels of attrition or growth of student learning experienced over a curricular evaluation study period by students or student subpopulations.

Ensuring Adequate Professional Capacity

This was viewed as so critical to the success of implementation efforts that some argued that its significance exceeds that of curriculum in determining students’ outcomes (as stated by Roger Howe, Yale University, in testimony to the committee at the September 2002 workshop). Professional capacity has a number of dimensions. First, it includes the number and qualifications of the actual teachers who will instruct students. Many new curricula rely on teachers’ knowledge of topics that were not part of their own education. Such topics could include probability and statistics, the use of new technologies, taking a function-based approach to algebra, using dynamic software in teaching geometry, contextual problems, and group methods. In addition, school districts are facing increasing shortages of mathematics teachers, so teachers frequently are uncertified or lack a major in mathematics or a mathematics-related field (National Center for Education Statistics [NCES], 2003). At the elementary level, many teachers are assigned to teach all subjects, and among those, many are required to teach mathematics with only minimal training and have a lack of confidence or affection for the discipline (Ma, 1999; Stigler and Hiebert, 1999). Finally, especially in urban and rural schools, there is a high rate of teacher turnover (Ingersoll, 2003; National Commission on Teaching and America’s Future, 2003), so demanding curricula may not be taught as intended or with consistency over the full duration of treatment.

TABLE 3-1 Categories and Examples of Implementation Component Variables

| Resource Variables | Process Variables | Community/Cultural Influences |
| --- | --- | --- |
| Teacher supply, qualifications, and rate of turnover | Teacher organization and professional community | Teacher beliefs concerning learning, teaching, and assessment |
| Professional development and teacher knowledge | Curricular decision making | Expectations of schooling and future educational and career aspirations |
| Length of class | Course requirements | Homework time |
| Class size and number of hours of preparation per teacher | Course placements, guidance, and scheduling | Stability, language proficiency, and mobility of student populations |
| Cost and access to materials, manipulatives, and technology | Administrative or governance of school decision making | Combinations of ethnic, racial, or socioeconomic status among students, teachers, and community |
| Frequency and type of formative and summative assessment practices | Forms and frequency of assessment and use of data | Community interest and responses to publicly announced results on school performance |
| Extent and type of student needs and support services | | Student beliefs and expectations |
| Parental involvement | | Parental beliefs and expectations |

Also in this category are questions of adequate planning time for implementing and assessing new curricula and adequate support structures for trying new approaches, including assistance, reasonable class sizes, and number of preparations. Furthermore, if teachers are not accorded the professional opportunities to participate in decision making on curricular choices, resistance from them, reverting to the use of supplemental materials with which teachers are more comfortable, or lack of effort can hamper treatment consistency, duration, and quality. In contrast, in some cases, reports were made of teacher-initiated and -dominated curricular reform efforts where the selection, adaptation, and use of the materials was highly orchestrated and professionally evaluated by practitioners, and their use of the materials typically was reported as far more successful and sustainable (as stated by Terri Dahl, Charles M. Russell High School, MT, and Timothy Wierenga, Naperville Community Unit School District #203, IL, in testimony to the committee on September 18, 2002).

Opportunities for professional development also vary. Documenting the duration, timing, and type of professional development needed and implemented is essential in the process of examining the effectiveness of curricular programs. Because many of the programs require that teachers develop new understandings, there is a need for adequate amounts of professional development prior to implementation, continued support during implementation, and reflective time both during and after implementation. Because many of these programs affect students from multiple grade levels, there is also the issue of staging, to permit students to enter the program and remain in it and to allow teachers at higher grade levels to know that students have the necessary prerequisites for their courses.

Finally, there are different types of professional development with varying amounts of attention to content, pedagogy, and assessment (Loucks-Horsley et al., 1998). These involve different amounts of content review and use of activities. In some, the teachers are shown the materials and work through a sample lesson, concentrating on management and pedagogy. In others, teachers work through all units and the focus is on their learning of the content. In a few, the teachers are taught the immediate content and provided coursework to ensure they have learned more of the content than is directly located in the materials (NCES, 1996). If limited time and resources devoted to professional development make the deeper treatments of content infrequent, then this can limit a teacher’s capacity to use new materials.

Opportunity to Learn

Not all curricular materials are implemented in the same way. In some schools, the full curricula are used, while in others units may be skipped or time limitations at the end of the year may necessitate abandoning the last units in the sequence. Even within units, topics can be dropped at teachers’ discretion. Thus, it is important for evaluations of curricula to document what teachers teach (Porter, 1995). Opportunity to learn is a particularly important component of implementation because it is critically involved in examining differential student performance on particular outcomes. It can be evaluated directly using classroom observation or indirectly through teacher and student logs, or by surveying teachers and students on which items on an end-of-year test were covered.
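As one illustration of the survey-based approach, the following minimal sketch computes, for each class, the share of end-of-year test items that the teacher reports having covered. The function name `otl_coverage`, the item labels, and the class data are hypothetical and are not drawn from any study reviewed here.

```python
def otl_coverage(items_covered, test_items):
    """Share of test items that a teacher reports having taught."""
    test_items = set(test_items)
    if not test_items:
        raise ValueError("the test must contain at least one item")
    # Only items that actually appear on the test count toward coverage.
    return len(test_items & set(items_covered)) / len(test_items)


# Hypothetical end-of-year test items and teacher survey responses.
test_items = ["q1", "q2", "q3", "q4", "q5"]
teacher_reports = {
    "class_1": ["q1", "q2", "q3", "q4", "q5"],  # full curriculum taught
    "class_2": ["q1", "q2", "q4"],              # last units skipped
}

coverage = {
    name: otl_coverage(items, test_items)
    for name, items in teacher_reports.items()
}
```

A coverage index of this kind could then be reported alongside outcome data so that differences in scores are not attributed to the curriculum when they may reflect content that was never taught.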

Instructional Quality and Type

It is also necessary to examine how well curricula are taught. As noted by Stigler and Hiebert (1999, pp. 10-11), “What we can see clearly is that American mathematics teaching is extremely limited, focused for the most part on a very narrow band of procedural skills. Whether students are in rows working individually or sitting in groups, whether they have access to the latest technology or are working only with paper and pencil, they spend most of their time acquiring isolated skills through repeated practice.”

Using different curricula may contribute to the opportunity to teach differently, but it is unlikely to be sufficient to change teaching by the mere presence of innovative materials. Typically, teachers must learn to change their practices to fit the curricular demands. In addition to materials, they need professional development, school-based opportunities to plan and reflect on their practices, and participation in professional societies as sources of additional information and support. There is considerable variation in teaching practices, and while one teacher may shift his/her practice radically in response to the implementation of a new curriculum, many will change only modestly, and a substantial number will not alter their instructional practices at all. Evaluation studies that consider this variable typically include classroom observation.

Assessment

Formative or embedded assessment refers to the system of classroom assessment that occurs during the course of normal classroom teaching and is designed in part to inform subsequent instruction. Often assessments are included in instructional materials, and attention must be paid to how these are used and what evidence they provide on curricular effectiveness. It is particularly helpful when teachers use multiple forms of assessment, so that one can gauge student progress across the year on tests, quizzes, and projects, including conceptual development, procedural fluency, and applications. It is also important that such testing provides evidence on the variation among students in learning and creates a longitudinal progression on individual student learning. More and more, schools and school districts are working to coordinate formative assessment with high-stakes testing programs, and when this is done, one can gain an important window on the relationship between curricula and one standardized form of student outcomes.

Because it is in schools with large numbers of students performing below expected achievement levels that the high-stakes testing and accountability models exert the most pressure, it is incumbent upon curriculum evaluators to pay special attention to these settings. It has been widely documented that in urban and rural schools with high levels of poverty, students are likely to be given inordinate amounts of test preparation, and are subject to pull-out programs and extra instruction, which can detract from the time devoted to regular curricular activities (McNeil and Valenzuela, 2001). This is especially true for schools that have been identified as low performing and in which improving scores on tests is essential to ensure that teachers and administrators do not lose their jobs.

Parental Influence and Special Interest Groups

Parents and other members of the community influence practices in ways that can significantly and regularly affect curriculum implementation. The influence exerted by parents and special interest groups differs from systemic factors in that they are closely affiliated with the local school, and can exert pressure on both students and school practitioners.

Parents are influential and need to be considered in multiple ways. Parents provide guidance to students in course selection, they convey differing levels of expectation of performance, and they provide many of the supplemental materials, resources (e.g., computer access at home), and opportunities for additional education (informal or nonschool enrollments). In some cases, they directly provide home schooling or purchase schooling (distance education, Scholastic Aptitude Test [SAT] prep courses) for their children. It is also important to recognize that there are significant cultural differences among parents in the degree to which they will accept or challenge curricular decisions based on their own views of schooling, authority, and sense of welcome within the school or at public meetings (Fine, 1993). To ensure that parental satisfaction and concerns are adequately and fairly considered, evaluators must provide representative sources of evidence.

Measures of Student Outcomes

In examining the effectiveness of curricula, student outcome measures are of critical importance. One must keep in mind that the outcomes of curricular use should be the documentation of the growth of mathematical thinking and knowledge over time. We sought to identify the primary factors that would influence the perceived effectiveness of curricula based on those measures.

Most curriculum evaluators use tests as the primary tool for measuring curricular effectiveness. Commonly used tests are large-scale assessments from state accountability systems; national standardized tests such as the SAT, Iowa Test of Basic Skills (ITBS), or AP exams or the National Assessment of Educational Progress (NAEP); or international tests such as items from the Third International Mathematics and Science Study (TIMSS). Among these tests, there are different choices in terms of selecting norm-referenced measures that produce national percentile rankings or criterion-referenced measures that are also subject to scaling influences. At a more specific level, assessments are used that measure particular cognitive skills such as problem solving or computational fluency or are designed to elicit likely errors or misconceptions. At other times, evaluators report outcomes using the tests and assessments included with the curriculum’s materials, or develop their own tests to measure program components.

For evaluation studies, there can be significant problems associated with the selection and use of outcome measures. We use the term “curricular validity of measures” to refer to the use of outcome measures sensitive to the curriculum’s stated goals and objectives. We believe that effectiveness must be judged relative to curricular validity of measures as a standard of scientific rigor.1 In contrast, local decision makers may wish to gauge how well a curricular program supports positive outcomes on measures that facilitate student progress in the system, such as state tests, college entrance exams, and future course taking. We refer to this as “curricular alignment with systemic factors.”2

One should not conflate curricular validity of measures with curricular alignment with systemic factors in evaluation studies. Additionally, whereas the use of measures demonstrating curricular validity is obligatory to determine effectiveness, curricular alignment with systemic factors may also be advised.

BOX 3-2

- Curricular topics
- Question format: open ended, structured response, multiple choice
- Strands of mathematical proficiency*
- Cognitive level
- Cognitive development over time
- Reliance on prior knowledge or reading proficiency
- Length and timing of testing
- Familiarity of task
- Opportunity to learn

An evaluator also needs to consider the possibility of including multiple measures; collecting measures of prior knowledge from school, district, and state databases; and identifying methods of reporting the data. To address this variety of measures, we produced a list of dimensions of assessments that can be considered in selecting student outcome measures (Box 3-2). In analyzing evaluations of curricular effectiveness, we were particularly interested in measures that could produce disaggregation of results at the level of common content strands because this is the most likely means of providing specific information on student success on certain curricular objectives. We also scrutinized evaluations of curricula for the construct validity, reliability, and fairness of the measures they employed. For measures of open-ended tasks, we looked for measures of interrater reliability and the development of a clear and precise rubric for analysis. Also important to gauge effectiveness would be longitudinal measures, a limited resource in most studies.
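To make the notion of interrater reliability concrete, the sketch below computes Cohen's kappa, one common agreement statistic for two raters scoring the same open-ended responses against a rubric. The report does not prescribe a particular statistic; kappa is used here only as an example, and the function name and rubric scores are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters who scored the same responses."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must score the same non-empty set")
    n = len(rater_a)
    # Observed agreement: share of responses given identical rubric scores.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement, from each rater's marginal distribution of scores.
    counts_a = Counter(rater_a)
    counts_b = Counter(rater_b)
    expected = sum(counts_a[s] * counts_b[s] for s in counts_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters gave one identical score to every response
    return (observed - expected) / (1 - expected)


# Hypothetical rubric scores (0 to 2) from two raters on eight responses.
scores_a = [2, 1, 0, 2, 1, 1, 0, 2]
scores_b = [2, 1, 0, 1, 1, 1, 0, 2]
kappa = cohens_kappa(scores_a, scores_b)
```

Kappa corrects raw percentage agreement for the agreement expected by chance, which matters when a rubric has only a few score levels.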

Another critical issue is how to present results. Results may be presented as mean scores with standard deviations, percentage of students who pass at various proficiency or performance levels, or gain scores. We also considered in our analysis of curriculum evaluations whether the results presented in those studies were accompanied by clear specification of the purpose or purposes of a test and how the test results were used in grading or accountability systems. Finally, we considered whether evaluations included indications of attrition through the course of the study and explanations of how losses might influence the data reports.

After considering the selection of indicators of student learning, we explored the question of how those indicators present that information across diverse populations of students. Disaggregation of the data by subgroups—including gender, race, ethnicity, economic indicators, academic performance level, English-language learners, and students with special needs—can ensure that measures of effectiveness include considerations of equity and fairness. In considering issues of equity, we examined whether evaluations included comparisons between groups—by subgroup on gain scores—to determine the distribution of effects. We also determined whether evaluations reported on comparisons in gains or losses among the subpopulations of any particular treatment, to provide evidence on the magnitude of the achievement gap among student groups. Accordingly, we asked whether evaluations examined distributions of scores rather than simply attending to the percentage passing or “mean performance.” Did they consider the performance of students at all levels of achievement?
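The kind of disaggregation described above can be sketched as follows. This is a minimal illustration, assuming each student record carries a subgroup label and pre- and post-test scores; the field names (`subgroup`, `pre`, `post`) and the data are hypothetical. It reports the spread of gain scores alongside the mean, so that the distribution of effects stays visible.

```python
from collections import defaultdict
from statistics import mean, median


def gains_by_subgroup(records):
    """Group gain scores (post minus pre) by subgroup label."""
    groups = defaultdict(list)
    for record in records:
        groups[record["subgroup"]].append(record["post"] - record["pre"])
    return dict(groups)


def summarize(gains):
    """Describe a list of gain scores by more than its average."""
    return {
        "n": len(gains),
        "mean": mean(gains),
        "median": median(gains),
        "min": min(gains),
        "max": max(gains),
    }


# Hypothetical student records, for illustration only.
records = [
    {"subgroup": "ELL", "pre": 40, "post": 52},
    {"subgroup": "ELL", "pre": 55, "post": 60},
    {"subgroup": "non-ELL", "pre": 60, "post": 75},
    {"subgroup": "non-ELL", "pre": 70, "post": 78},
    {"subgroup": "non-ELL", "pre": 50, "post": 49},
]
summary = {name: summarize(g) for name, g in gains_by_subgroup(records).items()}
```

Comparing the per-subgroup summaries, rather than a single pooled mean, is what allows an evaluator to see whether a gap between groups narrowed or widened under a given treatment.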

Conducting evaluations of curricula in schools or districts with high levels of student mobility presents another challenge. We explored whether a pre- or postevaluation design could be used to ensure actual measurement of student achievement in this kind of environment. If longitudinal studies were conducted, were the original treatment populations maintained over time, a particularly important concern in schools where there is high mobility, choice, or dropout problems?

Finally, in drawing conclusions about effectiveness, we considered whether the evaluations of curricula used multiple types of measures of student performance other than test scores (e.g., grades, course-taking patterns, attitudes and perceptions, performance in subsequent courses and at postsecondary institutions, and involvement in extracurricular activities relevant to mathematics). An effective curriculum should make it feasible and attractive to pursue future study in a field and to recover from prior failure or enter or advance into new opportunities.

We also recognized the potential for “corruptibility of indicators” (Rossi et al., 1999). This refers to the tendency for those whose performance is monitored to improve the indicator in any way possible. When some student outcome measures are placed in an accountability system, especially one where the students’ retention or denial of a diploma is at stake, the pressure to teach directly to what is likely to be assessed on the tests is high. If teachers and administrators are subject to loss of employment, the pressures increase even more (NRC, 1999b; Orfield and Kornhaber, 2001).

SECONDARY COMPONENTS

Systemic Factors

Systemic factors refer to influences on curricular programs that lie outside the intended and enacted curricula and are not readily amenable to change by the curriculum designers. Examples include the standards and the accountability system in place during the implementation period. Standards are designed to provide a governance framework of curricular objectives in which any particular program is embedded. Various sets of standards (e.g., those written by the National Council of Teachers of Mathematics [NCTM], those established by states or districts) represent judgments about what is important. These can differ substantially both in emphasis and in specificity.

Accountability systems use the combination of standards and, typically, high-stakes tests to promote conformity in offerings across a governance structure. As mentioned previously, curriculum programs may vary in relation to their alignment to standards and accountability systems.

Other policies such as certification and recertification processes or means of obtaining alternative certification for teachers can have a major impact on a curricular change, but these are typically not directly a part of the curriculum design and implementation processes. State requirements for course taking, college entrance requirements, college credits granted for Advanced Placement (AP) courses, ways of affecting grade point averages (e.g., an additional point toward the Grade Point Average [GPA] for taking an AP course), availability of SAT preparation courses, and opportunities for supplementary study outside of school are also examples of systemic factors that lie outside the scope of the specific program, but exert significant influences on it. Textbook adoption processes and cycles also would be considered systemic factors. As reported to the committee by publishers, the timing and requirements of these adoption processes, especially in large states such as Texas, California, and Florida, exert significant pressure on the scope, sequence, timing, and orientation of the curriculum development process and may include restrictions on field testing or dates of publication (as reported by Frank Wang, Saxon Publishers Inc., in testimony to the committee at the September 2002 workshop).

Intervention Strategies

Intervention strategies refer to the theory of action that lies behind the enactment of a curricular innovation. We found it necessary to consider attention given by evaluation studies to the intervention strategies behind many of the programs reviewed. Examining an intervention strategy requires one to consider the intent, the strategy, and the purpose and extent of impact intended by the funders or investors in a curricular program.

A program of curricular development supported by a governmental agency like the National Science Foundation (NSF) may have various goals, which can have a significant impact on the conclusions about their effectiveness when undertaking a meta-analysis of different curricula. A curricular development program could be initiated with the goal to improve instruction in mathematics across the board, and thus demand that the developers be able to demonstrate a positive impact on most students. Such an approach views the intent of the curricular initiative as comprehensive school improvement, and one would therefore examine the extent of use of the programs and the overall performance of students subjected to the curriculum over the time period of the intervention.

Alternatively, one could conceive of a program’s goal as a catalytic demonstration project, or proof of concept, to establish a small but novel variation to typical practice. In this case, evaluators might not seek broad systemic impact, but concentrate on data supplied by the adopters only, and perhaps only those adopters who embraced the fullest use of the program across a variety of school settings. Catalytic programs might concentrate on documenting a curriculum’s potential and then use dissemination strategies to create further interest in the approach.3

A third strategy often considered in commercially generated curriculum materials might be to gain market share. The viability of a publishing company’s participation in the textbook market depends on the success it has in gaining and retaining sufficient market share. In this case, effectiveness on student performance may be viewed as instrumental to obtaining the desired outcome, but other factors also may be influential, such as persuading decision makers to include the materials in the state-approved list, cost-effectiveness, and timing. Judgments on intervention strategies are likely to have a significant influence on the design and conduct of an evaluation.

Unanticipated Influences

Another component of the framework is referred to as unanticipated influences. During the course of implementation of a curriculum, which may occur over a number of years, factors may emerge that exert significant forces on implementation. For example, if calculators suddenly are permitted during test taking, this change may exert a sudden and unanticipated influence on the outcome measures of curricular effectiveness. Equally, if an innovation such as block scheduling is introduced, certain kinds of laboratory-based activities may become increasingly feasible to implement. A third example is the use of the Internet to provide parents and school board members with information and positions on the use of particular materials, an approach that would not have been possible a decade ago.

In Figure 3-2, an arrow links student outcomes to other components to indicate the importance of feedback, interactions, and iterations in the process of curricular implementation. Time elements are important in the conduct of an evaluation in a variety of ways. First of all, curricular effects accrue over significant time periods, not just months, but across academic years. In addition, the development of materials undergoes a variety of stages, from draft form to pilot form to multiple versions over a period of years. Also, developers use various means of obtaining user feedback to make corrections and to revise and improve materials.

EVALUATION DESIGN, MEASUREMENT, AND EVIDENCE

After delineating the primary and secondary components of the curriculum evaluation framework, we focused on decisions concerning evaluation and evidence gathering. We identified three elements of that process: articulation of program theory, selection of research design and methodology, and other considerations, which included independence of evaluators, time elements, and the accumulation of knowledge and meta-analysis.

Articulation of Program Theory

An evaluator must specify and clearly articulate the evaluation questions and elaborate precisely what elements of the primary and secondary components will be considered directly in the evaluation. These elaborations can be referred to as specifying “the program theory” for the evaluation. As stated by Rossi et al. (1999, p. 102):

Depiction of the program’s impact theory has considerable power as a framework for analyzing a program and generating significant evaluation questions. First the process of making that theory explicit brings a sharp focus to the nature, range, and sequence of program outcomes that are reasonable to expect and may be appropriate for the evaluator to investigate.

According to Weiss (1997, p. 46), “programmatic theory … deals with mechanisms that intervene between the delivery of the program service and the occurrence of outcomes of interest.” Thus, program theory specifies the evaluator’s view of the causal links and covariants among the program components. In terms of our framework, program theory requires the precise specification of relationships among the primary components (program components, implementation components, and student outcomes) and the secondary components (systemic factors, intervention strategies, and unanticipated influences).

BOX 3-3

- New mathematics topics
- Links between mathematics and other disciplines
- Increased access for underserved students and elimination of tracking
- Use of student-centered pedagogies
- Increased uses of technologies
- Application of research on student learning
- Use of open-ended assessments

SOURCE: NSF (1989).

For example, within the NSF-supported curricula, there were a number of innovative elements of program theory specified by the Request for Proposals (Box 3-3). In particular, the call for proposals for the middle grades curricula specified that prospective developers consider curriculum structure, teaching methods, support for teachers, methods and materials for assessment, and experiences in implementing new materials (NSF, 1989).

In contrast, according to Frank Wang of Saxon Publishing (personal communication, September 11, 2003), their curriculum development and production efforts follow a very different path. The Saxon product development model is to find something that is already “working” (meaning curriculum use increases test scores) and refine it and package it for wider distribution. They see this as creating a direct product of the classroom experience rather than designing a program that meets a prespecified set of requirements. Also, they prefer to select programs written by single authors rather than by a team of authors, although team authorship is now more prevalent among the big publishers.

The Saxon pedagogical approach includes the following:

- Focus on the mastery of basic concepts and skills
- Incremental development of concepts4
- Continual practice and review5
- Frequent, cumulative testing6

The Saxon approach, according to Wang, is a relatively rigid one of daily lesson, daily homework, and weekly cumulative tests. At the primary grades, the lesson is in the form of a scripted lesson that a teacher reads; Saxon believes the disciplined structure of its programs is the source of their success.

In devising an evaluation of each of these disparate programs, an evaluator would need to concentrate on different design principles and thus presumably would have a different view of how to articulate the program’s theory, that is, why it works. In the first case, particular attention might be paid to the contributions of students in class and to their methods and strategies of working with embedded assessments; in the second case, more attention would be paid to the varied paces of student progress and to the way in which the student demonstrated mastery of both previous and current topics. The program theory would be not simply a delineation of the philosophy and approach of the curriculum developer, but the way in which the measurement and design approach carefully considered those design elements.

Chen (1990) argues that by including careful development of program theory, one can increase the trustworthiness and generalizability of the evaluation study. Trustworthiness, or the view that the results will provide convincing evidence to stakeholders, is increased because with explicit monitoring of program components and interrelationships, evaluators can examine whether outcomes are sensitive to changes in interventions and process variables with greater certainty. Generalizability, or application of results to future pertinent circumstances, is increased because evaluators can determine the extent to which a new situation approximates the one in which the prior result was obtained. Only by clearly articulating program theory and then testing competing hypotheses can evaluators disentangle these complex issues and help decision makers select curricula on the basis of informed judgment.

Selection of Research Design and Methodology

As indicated in Scientific Research in Education (NRC, 2002), scientific evaluation research on curricular effectiveness can be conducted using a variety of methodologies. We focused on three primary types of evaluation design: content analyses, comparative studies, and case studies. (A fourth type, synthesis studies, is discussed under “Accumulation of Knowledge and the Meta-Analysis,” later in this chapter.) Typically, content analyses concentrate on program components while case studies tend to elaborate on issues connected to implementation. Comparative studies involve all three major components (program, implementation, and student outcomes) and tend to link them to compare their effects. After each type is described, a final section on synthesis studies, modeling, and meta-analysis completes the framework. Our decision to focus on these three methodologies should not be understood to imply the rejection of other possibilities for investigating effectiveness; rather, it reflects the most common forms submitted for review. Also, we should note that some evaluations incorporate multiple methodologies, often designing a comparative study of a limited number of variables and supplementing it with the kinds of detailed information found in the case studies.

Content Analyses

Evaluations that focus almost exclusively on examining the content of the materials were labeled content analyses. Many of these evaluations were of the type known as connoisseurial assessments because they relied nearly exclusively on the expertise of the reviewer and often lacked an articulation of a general method for conducting the analysis (Eisner, 2001). Generally, evaluators in these studies reviewed a specific curriculum for accuracy and for logical sequencing of topics relative to the expert knowledge. Some evaluators explicitly contrasted the curriculum being analyzed to international curricula in countries in which students showed high performance on international tests. In our discussions of content analysis in Chapter 4, we specify a number of key dimensions, while acknowledging that as connoisseurial assessments, they involve judgment and values and hence depend on one’s assessment of the qualifications and reputation of the reviewer. By linking these to careful examination of empirical studies of the classroom, one can test some of these assumptions directly.

Comparative Studies

A second approach to evaluation of curricula has been for researchers to select pertinent variables that permit a comparative study of two or more curricula and their effects over significant time periods. In this case, investigators typically have selected a relatively small number of salient variables from the framework for their specific program theory and have designed or identified tools to measure these variables. The selection of variables was often critical in determining if a comparative study was able to provide explanatory information to accompany its conjectures about causal inference. Many of the subsequent sections of this chapter apply directly to comparative studies, but can also inform the selection, conduct, and review of case studies or content analyses.

Our discussion of comparative studies focuses on seven critical decisions faced by evaluators in the conduct of comparative studies: (1) select the study type: quasi-experimental or experimental, (2) establish comparability across groups, (3) select a comparative unit of analysis, (4) measure and document implementation fidelity, (5) conduct an impact assessment and choose outcome measures, (6) select and conduct statistical tests, and (7) determine limitations to generalizability in relation to sample selection. After identifying the type of study, the next five decisions relate to issues of internal validity, while the last one focuses on external validity. After introducing an array of comparative designs, each of these is discussed in relation to our evaluation framework.

Comparative Designs

In comparative studies, multiple research designs can be utilized, including:

- Randomized field trials. In this approach, students or other units of analysis (e.g., classrooms, teachers, schools) are randomly assigned to an experimental group, to which the intervention is administered, and a control group, from which the intervention is withheld.
- Matched comparison groups. In this approach, students who have been enrolled in a curricular program are matched on selected characteristics with individuals who do not receive the intervention to construct an “equivalent” group that serves as a control.
- Statistically equated control. Participants and nonparticipants, not randomly assigned, are compared, with the difference between them on selected characteristics adjusted by statistical means.
- Longitudinal studies. Participants who receive the interventions are compared before and after the intervention and possibly at regular intervals during the treatment.
- Generic controls. Intervention effects are compared with established norms about typical changes in the target populations using indicators that are widely available.

The first type of study is referred to as “randomized experiments” and the other four are viewed as “quasi-experiments” (Boruch, 1997). The experimental approach assumes that all extraneous confounding variables will be equalized by the process of random assignment. As recognized by Cook (in press), randomized field trials will only produce interpretable results if one has a clear and precise description of the program, and one can ensure the fidelity of the treatment for the duration of the experiment. Developing practical methods that can ensure these conditions and thus make use of the power of this approach could yield more definitive causal results, especially if linked to complementary methodologies aiding in explanatory power.
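The random assignment at the heart of a randomized field trial can be sketched in a few lines. This is an illustration under simple assumptions: the units are classrooms, the split is an even one, and the function name `randomly_assign`, the unit labels, and the seed are all hypothetical. Real trials often add blocking or stratification, which this sketch omits.

```python
import random


def randomly_assign(units, seed=None):
    """Shuffle the units and split them into treatment and control groups."""
    rng = random.Random(seed)  # a fixed seed makes the assignment auditable
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"treatment": shuffled[:half], "control": shuffled[half:]}


# Ten hypothetical classrooms serving as the units of analysis.
classrooms = [f"classroom_{i}" for i in range(1, 11)]
groups = randomly_assign(classrooms, seed=2004)
```

Because chance alone decides which group a classroom joins, no characteristic of the classroom, measured or unmeasured, can systematically influence its assignment. Recording the seed lets others reproduce and verify the allocation.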

A threat to validity in quasi-experimental approaches is that the variables selected for comparison may omit differences that systematically affect the outcomes (Agodini and Dynarski, 2001). For example, in many matched control experiments for evaluating the effectiveness of mathematics curricula, reading level might not be considered to be a relevant variable. However, in the case of the reform curricula that require a large amount of reading and a great deal of writing in stating the questions and producing results, differences in reading level may contribute significantly to the variance observed (Sconiers et al., 2002).

The goal of a comparative research design in establishing the effectiveness of a particular curriculum is to describe the net effect of a curricular program by estimating the gross outcome for an intervention group and subtracting the outcome for the comparable control group, while considering the design effects (contributed by the research methods) and stochastic effects (measurement fluctuations attributable to chance). To attribute cause and effect to curricular programs, one seeks a reasonable measure of the “counterfactual results,” which are the outcomes that would have been obtained if the subjects had not participated in the intervention. Quasi-experimental methods seek a way to estimate this that involves probabilities and research design considerations. An evaluator also must work tenaciously to eliminate other likely factors that might have occurred simultaneously from outside uncontrolled sources.
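The arithmetic of a net-effect estimate can be made concrete with a small sketch. It assumes the simplest case: the control group's mean stands in for the counterfactual, and a standard error of the difference gives a rough sense of the stochastic component. The function name `net_effect` and the scores are hypothetical, and the sketch does not address design effects, which depend on how the study was conducted.

```python
from math import sqrt
from statistics import mean, variance


def net_effect(treatment_scores, control_scores):
    """Return the difference in group means and its standard error."""
    # Gross outcome for the intervention group minus the control group outcome.
    difference = mean(treatment_scores) - mean(control_scores)
    # Standard error of the difference, treating the two groups as independent samples.
    standard_error = sqrt(
        variance(treatment_scores) / len(treatment_scores)
        + variance(control_scores) / len(control_scores)
    )
    return difference, standard_error


# Hypothetical post-test scores, for illustration only.
treatment = [72, 80, 68, 76]
control = [70, 66, 74, 62]
difference, standard_error = net_effect(treatment, control)
```

A difference that is small relative to its standard error is hard to distinguish from chance fluctuation. The estimate is only as credible as the claim that the control group is truly comparable.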

We identified seven critical elements of comparative studies. These were: (1) design selection: experimental versus quasi-experimental, (2) methods of establishing comparability across groups, (3) selection of comparative units of analysis, (4) measures of implementation fidelity, (5) choices and treatment of outcomes, (6) selection of statistical tests, and (7) limits or constraints to generalizability. These design decisions are discussed in more detail, in relation to the actual studies, in Chapter 5, where the comparative studies that were analyzed for this report are reviewed.

Case Studies

Other evaluations focused on documenting how program theories and components played out in a particular case or set of cases. These studies, labeled case studies, often articulated in detail the complex configuration of factors that influence curricular implementation at the classroom or school level. These studies relied on the collection of artifacts at the relevant sites, interviews with participants, and classroom observations. Their goals included articulating the underlying mechanisms by which curricular materials work more or less effectively and identifying variables that may be overlooked by studies of less intensity (NRC, 2002). Factors that are typically investigated using such methods include how faculties work together on decision making and implementation, how attendance patterns affect instruction, and how teachers modify pedagogical techniques to fit their context, their preferences, or student needs.

The case study method (Yin, 1994, 1997) uses triangulation of evidence from multiple sources, including direct observations, interviews, documents, archival files, and actual artifacts. It aims to include the richness of the context; hence its proponents claim, “A major technical concomitant is that case studies will always have more variables of interest than data points, effectively disarming most traditional statistical methods, which demand the reverse situation” (Yin and Bickman, 2000). This approach also clearly delineates its expectations for design, site selection, data collection, data analysis, and reporting. It stresses that a slow, and sometimes agonizing, process of analyzing cases provides the detailed structure of argument often necessary to understand and evaluate complex phenomena. In addition to documenting implementation, this methodology can also include pre- and post-outcome measures and the use of logic models, which, like program theory, produce an explicit statement of the presumed causal sequence of events in the cause and effect of the intervention. Because of the use of smaller numbers of cases, evaluators often can negotiate more flexible uses of open-ended multiple tests or select systematic variants of implementation variables.

The ethnographic evaluation shares other features of the case study, namely depth of placement in context and use of rich data sources. Such studies may be helpful in documenting cases where a strong clash in values permeates an organization or project or where a cultural group may experience differential effects because their needs or talents are atypical (Lincoln and Guba, 1986).

Other Considerations

Evaluator Independence

The relationship of an evaluator to a curriculum’s program designers and implementers needs to be close enough to understand their goals and challenges, but sufficiently independent to ensure fairness and objectivity. During stages of formative assessment, close ties can facilitate rapid adjustments and modifications to the materials. However, as one reaches the stage of summative evaluation, there are clear concerns about bias when an evaluator is too closely affiliated with the design team.

Time Elements

The conduct of summative evaluations for examining curricular effectiveness must take into account the timeline for development, pilot testing, field testing, and subsequent implementation. Summative evaluation should be conducted only after materials are fully developed and provided to sites in at least field test versions. For curricula that are quite discontinuous with traditional practice, particular care must be taken to ensure that adequate commitment and capacity exist for successful implementation and change. It can easily take up to three years for a dramatic curricular change to be reliably implemented in schools.

Accumulation of Knowledge and the Meta-Analysis

For the purposes of this review of the evaluations of the effectiveness of specific mathematics curriculum materials, it is important to comment on studies that emphasize the accumulation of knowledge and meta-analysis. Lipsey (1997) persuasively argued that the accumulation of a knowledge base from evaluations is often overlooked. He noted that evaluations are often funded by particular groups to provide feedback on their individual programs, so the accumulation of information from program evaluation is left to others and often neglected. Lipsey (p. 8) argued that the accumulation of program theories across evaluations can produce a “broader intervention theory that characterizes … and synthesizes information gleaned from numerous evaluation studies.” This report itself constitutes an effort to synthesize the information gleaned from a number of evaluation studies in order to strengthen the design of subsequent work. A further discussion of synthesis studies can be found in Chapter 6.

Meta-analysis produces a description of the average magnitude of effect sizes across different treatment variations and different samples. Based on the incomplete nature of the database available for this study, we decided that a full meta-analysis of program effects was not feasible. In addition, the extent of the variation in the types and quality of the outcome measures used in these studies of evaluating curricula makes effect sizes a poor method of comparison across studies. Nonetheless, by more informally considering effect size, statistical significance and the distribution of results across content strands, and the effects on various subgroups, one can identify consistent trends, evaluate the quality of the methodologies, and point to irregularities and targets for closer scrutiny through future research or evaluation studies.
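For readers unfamiliar with the quantity involved, the sketch below shows one common way an effect size is computed (a standardized mean difference using a pooled standard deviation) and how several such values might be averaged. It is illustrative only: the function names and numbers are hypothetical, and weighting by sample size is a simplification of the inverse-variance weighting that formal meta-analyses typically use.

```python
from math import sqrt
from statistics import mean, variance


def standardized_mean_difference(treatment, control):
    """Difference in means expressed in pooled standard deviation units."""
    n_t, n_c = len(treatment), len(control)
    pooled_variance = (
        (n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)
    ) / (n_t + n_c - 2)
    return (mean(treatment) - mean(control)) / sqrt(pooled_variance)


def weighted_average_effect(effects_and_sizes):
    """Average effect sizes across studies, weighting each by its sample size."""
    total_n = sum(n for _, n in effects_and_sizes)
    return sum(effect * n for effect, n in effects_and_sizes) / total_n


# Hypothetical scores from a single study.
effect = standardized_mean_difference([72, 80, 68, 76], [70, 66, 74, 62])

# Hypothetical (effect size, sample size) pairs from two studies.
average = weighted_average_effect([(0.2, 100), (0.5, 300)])
```

Such an average is meaningful only when the underlying outcome measures are comparable across studies, which is the difficulty noted above.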

These results also suggest significant implications for policy makers. Cordray and Fischer (1994, p. 1174) have referred to the domain of program effects as a “policy space” where one considers the variables that can be manipulated through program design and implementation. In Chapter 5, we discuss our findings in relation to such a policy space and presume to provide advice to policy makers on the territory of curricular design, implementation, and evaluation.

Our approach to the evaluation of the effectiveness of mathematics curricula seeks to recognize the complexity of the process of curricular design and implementation. In doing so, we see a need for multiple methodologies that can inform the process by the accumulation and synthesis of perspectives. As a whole, we do not prioritize any particular method, although individual members expressed preferences. One strength of the committee was its interdisciplinary composition, and likewise, we see the determination of effectiveness as demanding negotiation and debate among qualified experts.

For some members of the committee, an experimental study was preferred because the theoretical basis for randomized or “controlled” experiments as developed by R. A. Fisher is a large segment of the foundation by which the scientific community establishes causal inference. Fisher invented the tool so that the result from a single experiment could be tested against the null hypothesis of chance differences. Rejecting the hypothesis of chance differences is probabilistically based and therefore runs the risk of committing a Type I error. Although a single, well-designed experiment is valuable, replicated results are important to sustain a causal inference, and many replications of the same experiment make the argument stronger. One must keep in mind that causal inference decisions are always probabilistically based.
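Testing a result against the null hypothesis of chance differences can be illustrated with a randomization (permutation) test, an approach associated with Fisher's work. The sketch is a Monte Carlo approximation under assumed conditions: the function name `randomization_test`, the scores, and the number of reshufflings are hypothetical choices, not a procedure taken from the studies reviewed.

```python
import random
from statistics import mean


def randomization_test(treatment, control, n_permutations=2000, seed=0):
    """Estimate how often chance relabeling yields a difference as large as the observed one."""
    rng = random.Random(seed)
    observed = mean(treatment) - mean(control)
    pooled = list(treatment) + list(control)
    n_t = len(treatment)
    at_least_as_extreme = 0
    for _ in range(n_permutations):
        # Reassign group labels at random, as if the treatment made no difference.
        rng.shuffle(pooled)
        difference = mean(pooled[:n_t]) - mean(pooled[n_t:])
        if abs(difference) >= abs(observed):
            at_least_as_extreme += 1
    return observed, at_least_as_extreme / n_permutations


# Hypothetical post-test scores, for illustration only.
observed, p_value = randomization_test([72, 80, 68, 76], [70, 66, 74, 62])
```

The returned proportion approximates a two-sided p-value. Rejecting the null hypothesis whenever this value falls below a chosen threshold means accepting that, when the null is true, a Type I error will occur at roughly that rate.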

Furthermore, it is important to remember that randomization is only a necessary, but not a sufficient, condition for causal attribution. Other required conditions include the “controlled” aspect of the experiment, meaning that during the course of the experiment, there are no differences other than the treatment involved. The latter is difficult to ensure in natural school settings. But that does not negate the need for randomization because no matter how many theory-based variables can be controlled, one cannot refute the argument that other important variables may have been excluded.

The power of experimental approaches lies in the randomization of assignment to experimental and control conditions to avoid unintended but systematic bias in the groups. Proponents of this approach have demonstrated the dangers of a quasi-experimental approach in studies such as a recent one by Agodini and Dynarski (2001), which found that a matching method known as propensity score matching, used in quasi-experimental designs, produced different results than an experimental study.

Others (Campbell and Stanley, 1966, pp. 2-3) developed these quasi-experimental approaches, noting that many past experimentalists became disillusioned because “claims made for the rate and degree of progress which would result from experiment were grandiosely overoptimistic and were accompanied by an unjustified depreciation of nonexperimental wisdom.” Furthermore, Cook (in press) acknowledged the difficulties associated with experimental methods as he wrote, “Interpreting [RCTs] results depends on many other things, an unbiased assignment process, adequate statistical power, a consent process that does not distort the populations to which results can be generalized, and the absence of treatment-correlated attrition, resentful demoralization, treatment seepage and other unintended products of comparing treatments. Dealing with these matters requires observation, analysis, and argumentation.” Cook and Campbell and Stanley argue for the potential of quasi-experimental and other designs to add to the knowledge base.

Although experimentalists argue that experimentalism leads to unbiased results, their argument rests on idealized conditions of experimentation. As a result, they cannot actually estimate how far their results depart from such conditions. In a way, they thus leave the issues of external validity outside their methodological umbrella and largely up to the reader. They then tend to categorize other approaches in terms of how well they approximate this idealized stance. In contrast, quasi-experimentalists and advocates of other methods (case study, ethnography, modeling approaches) admit questions of external validity into their designs and rely on the persuasiveness of the relations among theory, method, claims, and results to warrant their conclusions. They forgo the ideal for the possible and lose a measure of internal validity in the process, preferring a porous relationship.

A critical component of this debate also has to do with the nature of cause and effect in social systems, especially those in which feedback is a critical factor. Learning environments are inevitably saturated with sources of feedback, from the child’s response to a question to the pressures of high-stakes tests on curricular implementation. One can say with equal persuasion that use of a particular set of curricular materials caused the assignment of a student’s score, which caused the student to learn the material in a curriculum. Cause and effect is best used to describe events in a temporal sequence where results can be proximally tied to causes based on the elimination of other sources of effect.

It is worth pointing out that the issues debated by members of this committee are not new, but have a long history in complex fields where the limitations of the scientific method have been recognized for a long time. Ecology, immunology, epidemiology, and neurobiology provide plenty of examples where the use of alternative approaches, including dynamical systems, game theory, large-scale simulations, and agent-based models, has proved to be essential, even in the design of experiments. We do not live on a fixed landscape and, consequently, any intervention or perturbation of a system (e.g., the implementation of new curricula) can alter the landscape. The fact that researchers select a priori specific levels of aggregation (often dictated by convenience) and fail to test whether their results hold under other such choices is not only common but also severely limits the validity of their findings.

In addition, we live in a world where knowledge generated at one level (often not the desired level) must be used to inform decisions at a higher level. How one uses scientifically collected knowledge at one level to understand the dynamics at higher levels is still a key methodological and philosophical issue in many scientific fields of inquiry. Genomics, a highly visible field at the moment, offers many lessons. The identification of key genes (and even the mapping of the human genome) is not enough to predict their expression (e.g., cancers) or to give us enough knowledge to regulate them (e.g., cures to disease). “Nontraditional methods” are needed to make this fundamental jump. The evaluation of curricular success is no less complex an enterprise, and no single approach holds the key.

The committee does not need to solve these essential intellectual debates in order to fulfill its charge; rather, it chose to put forward a framework that could support an array of methods and forms of inference and evidence.