6

Internet Navigation: Emergence and Evolution

As the previous chapters show, the Domain Name System has been a foundation for the rapid development of the Internet. Domain names appear on the signposts designating origins and destinations linked by the Internet and in the addresses used by the principal applications traversing the Internet—e-mail and the World Wide Web. And they have been useful for navigating across the Internet: given a domain name, many Web browsers will automatically expand it into the Uniform Resource Locator (URL) of a Web site; from a domain name, many e-mail users can guess the e-mail address of an addressee. For these reasons, memorable domain names may often acquire high value. Their registrants believe that searchers can more readily find and navigate to their offerings.

However, as the Internet developed in size, scope, and complexity, the Domain Name System (DNS) was unable to satisfy many Internet users’ needs for navigational assistance. How, for example, can a single Web page be found from among billions when only its subject and not the domain name in its URL is known? To meet such needs, a number of new types of aids and services for Internet navigation were developed.1 While, in the end, these generally rely on the Domain Name System to find specific Internet Protocol (IP) addresses, they greatly expand the range of ways in which searchers can identify the Internet location of the resource they seek.

These navigational aids and services have, in return, relieved some of the pressure on the Domain Name System to serve as a de facto directory of the Internet and have somewhat reduced the importance of the registration of memorable domain names. Because of these tight linkages between the DNS and Internet navigation, this chapter and the next two address, at a high level, the development of the major types of Internet navigational aids and services. This chapter is concerned with their past development. The next chapter deals with their current state. And the final chapter on Internet navigation, Chapter 8, considers the technological prospects and the institutional issues facing them.

After describing the distinctive nature of Internet navigation, this chapter traces the evolution of a variety of aids and services for Internet navigation. While its primary focus is on navigating the World Wide Web, it does not cover techniques for navigation within Web sites, which is the subject of specialized attention by Web site designers, operators, and researchers.2

6.1 THE NATURE OF INTERNET NAVIGATION

Navigation across the Internet is sometimes compared to the well-studied problem of readers navigating through collections of printed material and other physical artifacts in search of specific documents or specific artifacts. (See the Addendum to this chapter: “Searching the Web Versus Searching Libraries.”) That comparison illustrates the differences in the technical and institutional contexts for Internet navigation. Internet navigation for some purposes is similar to searches in library environments and relies on the same tools, whereas navigation for other purposes may be performed quite differently via the Internet. The multiple purposes and diverse characteristics listed below combine to make navigating to a resource across the Internet a much more varied and complex activity than those previously encountered. The library examples provide a point of reference and a point of departure for discussion in subsequent chapters.

6.1.1 Vast and Varied Resources for Multiple Purposes

First, the Internet connects its users to a vast collection of heterogeneous resources that are used for many purposes, including the dissemination of information; the marketing of products and services; communication with others; and the delivery of art, entertainment, and a wide range of commercial and public services. The kinds of resources connected to the Internet include:

- Documents that differ in language (human and programming), vocabulary (including words, product numbers, zip codes, latitudes and longitudes, links, symbols, and images), formats (such as the Hypertext Markup Language (HTML), Portable Document Format (PDF), or Joint Photographic Experts Group (JPEG) format), character sets, and source (human or machine generated).

- Non-textual information, such as audio and video files, and interactive games. The volume of online content (in terms of the number of bytes) in image, sound, and video formats is much greater than that of most library collections and is expanding rapidly.

- Transaction services, such as sales of products or services, auctions, tax return preparation, matchmaking, and travel reservations.

- Dynamic information, such as weather forecasts, stock market information, and news, which can be constantly changing to incorporate the latest developments.

- Scientific data generated by instruments such as sensor networks and satellites are contributing to a “data deluge.”3 Many of these data are stored in repositories on the Internet and are available for research and educational purposes.

- Custom information constructed from data in a database (such as product descriptions and pricing) in response to a specific query (e.g., price comparisons of a product listed for sale on multiple Web sites).

Consequently, aids or services that support Internet navigation face the daunting problem of finding and assigning descriptive terms to each of these types of resource so that it can be reliably located. Searchers face the complementary problem of selecting the aids or services that will best enable them to locate the information, entertainment, communication link, or service that they are seeking.

6.1.2 Two-sided Process

Second, Internet navigation is two-sided: it must serve the needs both of the searchers who want to reach resources and of the providers that want their resources to be found by potential users.

From the searcher’s perspective, navigating the Internet resembles to some extent the use of the information retrieval systems that were developed over the last several decades within the library and information science4 and computer science communities.5 However, library-oriented retrieval systems, reflecting the well-developed norms of librarians, were designed to describe and organize information so that users could readily find exactly what they were looking for. In many cases, the same people and organizations were responsible both for the design of the retrieval systems and for the processes of indexing, abstracting, and cataloging the information to be retrieved. In this information services world, the provider’s goal was to make description and search as neutral as possible, so that every document relevant to a topic would have an equal chance of being retrieved.6 While this goal of retrieval neutrality has carried over to some Internet navigation services and resource providers, it is by no means universal. Indeed, from the perspective of many resource providers, particularly commercial providers, attracting users via the Internet requires the application to Internet navigation of non-neutral marketing approaches deriving from advertising and public relations as developed for newspapers, magazines, radio, television, and yellow-pages directories.7 Research on neutral, community-based technology for describing Internet resources is an active area in information and computer science and is a key element of the Semantic Web (see Box 7.1).8

4. For an overview, see Elaine Svenonius, The Intellectual Foundation of Information Organization, MIT Press, Cambridge, Mass., 2000; and Christine L. Borgman, From Gutenberg to the Global Information Infrastructure: Access to Information in the Networked World, MIT Press, Cambridge, Mass., 2000.

5. For an overview, see Ricardo Baeza-Yates and Berthier Ribeiro-Neto, Modern Information Retrieval, Addison-Wesley, Boston, 1999; and Karen Sparck Jones and Peter Willett, editors, Readings in Information Retrieval, Morgan Kaufmann, San Francisco, 1997. For typical examples of early work on information retrieval systems, see, for example, George Schecter, editor, Information Retrieval—A Critical View, Thompson Book Company, Washington, D.C., 1967. For work on retrieval from large databases, see the proceedings of the annual Text REtrieval Conference (TREC), currently sponsored by the National Institute of Standards and Technology and the Advanced Research and Development Activity, available at <http://trec.nist.gov>.

6. See Svenonius, The Intellectual Foundation of Information Organization, 2000.

7. See, for example, John Caples and Fred E. Hahn, Tested Advertising Methods, 5th edition, Prentice-Hall, New York, 1998.

8. E. Bradley, N. Collins, and W.P. Kegelmeyer, “Feature Characterization in Scientific Datasets,” pp. 1-12 in Proceedings of the 4th International Conference on Advances in Intelligent Data Analysis (Lecture Notes in Computer Science, Vol. 2189), Springer-Verlag, 2001; V. Brilhante, “Using Formal Metadata Descriptions for Automated Ecological Modeling,” pp. 90-95 in Environmental Decision Support Systems and Artificial Intelligence, AAAI Press, Menlo Park, Calif., 1999; E. Hovy, “Using an Ontology to Simplify Data Access,” Communications of the ACM 46(1):47-49, 2003; OWL Web Ontology Language Guide, “W3C Recommendation (10 February 2004),” November 24, 2004, available at <http://www.w3.org/TR/owl-guide>; and P. Wariyapola, S.L. Abrams, A.R. Robinson, K. Streitlien, N.M. Patrikalakis, P. Elisseeff, and H. Schmidt, “Ontology and Metadata Creation for the Poseidon Distributed Coastal Zone Management System,” Proceedings of the IEEE Forum on Research and Technology Advances in Digital Libraries, IEEE Computer Society, Los Alamitos, Calif., 1999, pp. 180-189.

For commercial providers, therefore, the challenge is how to identify and reach—in the complex, diverse, and global audience accessible via the Internet—potential users who are likely to be interested (or can be made interested) in the provider’s materials. That is done in traditional marketing and public relations through the identification of media and places (television or radio programs, magazines, newspapers in specific locations) that an audience with the desired common characteristics (for example, 18- to 24-year-old males) frequents. Similar approaches can be applied on the Internet (see Section 7.2.2), but unlike the traditional media, the Internet also offers providers the distinctive and extremely valuable opportunity to capture their specific audience during the navigation process itself, just when they are searching for what the provider offers—for example, by paying to be listed or featured in a navigation service’s response to specific words or phrases. (See “Monetized Search” in Section 7.1.7.) Marketers have found ways to use the specific characteristics of the Internet,9 just as they have developed methods appropriate for each new medium.10 This has led, for example, to the establishment of companies that are devoted to finding ways to manipulate Internet navigation services to increase the ranking of a client’s Web site and, in response, to the development of countermeasures by the services. (See “Search Engine Marketing and Optimization” in Section 7.1.7.)

For non-commercial resources, the situation is somewhat different, since the providers generally have fewer resources and may have less incentive to actively seek users, at least to the extent of paying for Web advertising or search engine marketing. At the same time, the existence of a specific non-commercial resource may be well known to the community of its potential users. For example, the members of a scholarly community or a non-profit organization are likely to be aware of the Internet resources relevant to their concerns. Those new to a community or outside it are dependent on Internet navigation tools to locate these resources.

Internet navigation is a complex interplay of the interests of both the searchers for and the providers of resources. On the Internet, the librarian’s ideal of neutral information retrieval often confronts the reality of self-interested marketing.

6.1.3 Complexity and Diversity of Uses, Users, and Providers

Third, the complexity and diversity of uses of resources on the Internet, of their users, and of their providers significantly complicate Internet navigation. It becomes a multidimensional activity that incorporates behaviors ranging from random browsing to highly organized searching and from discovering a new resource to accessing a previously located resource.11

Studies in information science show that navigation in an information system is simplest and most effective when the content is homogeneous, the purposes of searching are consistent and clearly defined, and the searchers have common purposes and similar levels of skills.12 Yet, Internet resources per se often represent the opposite case in all of these respects. Their content is often highly heterogeneous; their diverse users’ purposes are often greatly varied; the resources the users are seeking are often poorly described; and the users often have widely varying degrees of skills and knowledge. Thus, as the resources accessible via the Internet expand in quantity and diversity of content, number and diversity of users, and variety of applications, the challenges facing Internet navigation become even more complex.

Indeed, prior to the use of the Internet as a means to access information, many collections of information resources, whether in a library or an online information system, were accessed by a more homogeneous collection of users. It was generally known when compiling the collection whether the content should be organized for specialists or lay people, and whether skill in the use of the resource could be assumed. Thus, navigation aids, such as indexes or catalogs, were readily optimized for their specific content and for the goals of the people searching them. Health information in a database intended for searching by physicians could be indexed or cataloged using specific and highly detailed terminology that assumed expert knowledge. Similarly, databases of case law and statute law assumed a significant amount of knowledge of the law. In fields such as medicine and law, learning the navigation tools and the vocabularies of the field is an essential part of professional education. Many such databases are now accessible by specialists via the Internet and continue to assume a skillful and knowledgeable set of users, even though in some cases they are also accessible by the general public. With the growth in Internet use, however, many more non-specialist users have ready access to the Web and are using it to seek medical, legal, or other specialized information. The user community for such resources is no longer well defined. Few assumptions of purpose, skill level, or prior knowledge can be made about the users of a Web information resource. Consequently, it is general-purpose navigation aids and services and less specialized (and possibly lower-quality) information resources that must serve their needs.

6.1.4 Lack of Human Intermediaries

Fourth, the human intermediaries who traditionally linked searchers with specific bodies of knowledge or services—such as librarians, travel agents, and real estate agents—are often not available to users as they seek information on the Internet. Instead, users generally navigate to the places they seek and assess what they find on their own, relying on the aid of digital intermediaries—the Internet’s general navigation aids and services, as well as the specialized sites for shopping, travel, job hunting, and so on. Human intermediaries’ insights and assistance are generally absent during the navigation process.

Human search intermediaries help by selecting, collecting, organizing, conserving, and prioritizing information resources so that they are available for access.13 They combine their knowledge of a subject area and of information-seeking behavior with their skills in searching databases to assist people in articulating their needs. For example, travelers have relied on travel agents to find them the best prices, best routes, and best hotels, and to provide services such as negotiating with hotels, airlines, and tour companies when things go wrong. Intermediaries often ask their clients about the purposes for which they want information (e.g., what kind of trip the seekers desire and how they expect to spend their time; what they value in a home or neighborhood; or what research questions their term paper is trying to address), and elicit additional details concerning the problem. These intermediaries also may help in evaluating content retrieved from databases and other sources by offering counsel on what to trust, what is current, and what is important to consider in the content retrieved.

With the growth of the Internet and the World Wide Web, a profound change in the nature of professional control over information is taking place. Travel agents and real estate agents previously maintained tight control over access to fares and schedules and to listings of homes for sale. Until recently, many of these resources were considered proprietary, especially in travel and real estate, and consumers were denied direct access to their content. Information seekers had little choice but to delegate their searches to an expert: a medical professional, librarian, paralegal, records analyst, travel agent, real estate agent, and so on. Today, travel reservation and real estate information services are posting their information on the Internet and actively seeking users. Specialized travel sites, such as Expedia.com and travelocity.com, help the user to search through and evaluate travel options. Similar sites serve the real estate market. Travel agents that remain in business must get their revenue from other value-added services, such as planning customized itineraries and tours and negotiating with brokers. Although house hunters now can do most of their shopping online, in most jurisdictions they still need real estate agents with access to house keys to show them properties and to guide them in executing the legal transactions. Libraries have responded to the Web by providing “virtual reference services” in addition to traditional on-site reference services.14 Other entities, including the U.S. Department of Education, have supported the creation of non-library-based reference services that use the Internet to connect users with “people who can answer questions and support the development of skills” without going through a library intermediary.15

6.1.5 Democratization of Information Access and Provision

Fifth, the Internet has hugely democratized and extended both the offering of and access to information and services. Barriers to entry, whether cost or credentials, have been substantially reduced. Anyone with modest skill and not much money can provide almost anything online, and anyone can access it from almost anywhere. Rarely are credentials required for gaining access to content or services that are connected to the public Internet, although paid or free registration may be necessary to gain access to some potentially relevant material. Not only is commercial and technical information openly accessible, but the full range of political speech, artistic expression, and personal opinion is also readily available on the public Internet, even though efforts are continually being made to impose restrictions on access to some materials by various populations in a number of countries.16

14. See, for example, the more than 600 references about such services in Bernie Sloan, “Digital Reference Services Bibliography,” Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign, November 18, 2003, available at <http://www.lis.uiuc.edu/~b-sloan/digiref.html>.

15. For example, the Virtual Reference Desk is “a project dedicated to the advancement of digital reference.” See <http://www.vrd.org/about.shtml>. This service is sponsored by the U.S. Department of Education.

In a great many countries, anyone can set up a Web site—and many people do. The freedom of the press does not belong just to those who own one; now nearly anyone can have the opportunity to publish via a virtual press—the World Wide Web.17 Whether or not what they publish will be read is another matter. That depends on whether they will be found, and, once found, whether they can provide content worthy of perusal—at least by someone.

For the most part, in many places, provision of or access to content or services is uncensored and uncontrolled. On the positive side, the Internet enables access to a global information resource of unprecedented scope and reach. Its potential impact on all aspects of human activity is profound.18 But the institutions that select, edit, and endorse traditionally published information have no role in determining much of what is published via the Internet. The large majority of material reachable via the Internet has never gone through the customary editing or selection processes of professional journals or of newspapers, magazines, and books.19 So in this respect as well, there has been significant disintermediation, leaving Internet users with relatively few solid reference points as they navigate through a vast collection of information of varying accuracy and quality. In response to this widely acknowledged problem, some groups have offered evaluations of materials on the World Wide Web.20 One example is the Librarians’ Index to the Internet,21 whose motto is “Information You Can Trust.”

16. These range from the efforts of parents to prevent their children from accessing age-inappropriate sites to those made by governments to prevent their citizens from accessing politically sensitive sites. The current regulations placed on libraries in the United States to filter content are available at <http://hraunfoss.fcc.gov/edocs_public/attachmatch/FCC03-188A1.pdf>. See also information on the increasing sophistication of filtering efforts in China in David Lee, “When the Net Goes Dark and Silent,” South China Morning Post, October 2, 2002, available at <http://cyber.law.harvard.edu/people/edelman/pubs/scmp100102-2.pdf>. For additional information, see Jonathan Zittrain and Benjamin Edelman, Empirical Analysis of Internet Filtering in China, Berkman Center for Internet & Society, Harvard University, 2002, available at <http://cyber.law.harvard.edu/filtering/china/>.

17. The rapid growth in the number of blogs (short for Web logs) illustrates this. According to “How Much Information?,” there were 2.9 million active Web logs in 2003. See Peter Lyman and Hal R. Varian, “How Much Information?,” 2003, retrieved from <http://www.sims.berkeley.edu/research/projects/how-much-info-2003> on April 27, 2005.

18. See Borgman, From Gutenberg to the Global Information Infrastructure, 2000; and Thomas Friedman, “Is Google God?”, New York Times, June 29, 2003.

19. However, it must be acknowledged that many “traditional” dissemination outlets (e.g., well-known media companies) operate Web sites that provide material with editorial review.

20. See, for example, The Information Quality WWW Virtual Library at <http://www.ciolek.com/WWWVL-InfoQuality.html> and Evaluating Web Sites: Criteria and Tools at <http://www.library.cornell.edu/okuref/research/webeval.html>.

6.1.6 Lack of Context or Lack of Skill

Sixth, most general-purpose Internet navigation services can assume nothing about the context of a search beyond what is in the query itself. The circumstances of the person searching the Internet—who could be anyone, searching for almost any purpose, from almost any place—are generally not known or, if known, not used.22 While an unskilled user planning a European trip who types “Paris” into a navigation service may expect to receive back only information on the city of Paris, France, or if studying the Iliad may expect to find out about the Greek hero, the navigation service has no knowledge of that context. If it relies on the text alone, it may return information about both, as well as information about sources for plaster of paris, the small town in Texas, movies set in Paris, and people with the first or family name of Paris.

While any general-purpose navigation service would be unable to ascertain which of those specific answers was desired, the person initiating an Internet request always knows its context, and if experienced or trained, should be able to incorporate some of that information in the query. An experienced searcher could incorporate context by expanding the query to “Paris France,” “Paris and Iliad,” “Paris Texas,” or “plaster of paris.” So the lack of context in many navigation requests is closely related to the user’s level of training or experience in the use of navigation services.23
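The effect of adding context terms to a query can be illustrated with a small sketch. It assumes a toy four-document collection and a simple rule that a document matches only if it contains every query term; the document identifiers, the texts, and the `search` function are all invented for illustration and do not describe how any actual navigation service works.

```python
# Toy collection: identifiers and texts are invented for illustration only.
DOCUMENTS = {
    "doc1": "Paris France travel guide hotels museums",
    "doc2": "Paris hero of the Iliad and the Trojan War",
    "doc3": "Paris Texas town visitor information",
    "doc4": "plaster of paris suppliers and craft materials",
}


def search(query: str) -> list[str]:
    """Return ids of documents that contain every query term (case-insensitive)."""
    terms = query.lower().split()
    hits = []
    for doc_id, text in DOCUMENTS.items():
        words = set(text.lower().split())
        if all(term in words for term in terms):
            hits.append(doc_id)
    return hits


# The bare query carries no context, so every sense of "Paris" is returned.
print(search("Paris"))             # ['doc1', 'doc2', 'doc3', 'doc4']
# Each added term supplies context and narrows the result.
print(search("Paris France"))      # ['doc1']
print(search("Paris Iliad"))       # ['doc2']
print(search("plaster of paris"))  # ['doc4']
```

In this sketch the service itself never learns the searcher's intent; the narrowing comes entirely from the extra words the searcher chooses to supply.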

21. “Librarians’ Index to the Internet (LII) is a searchable, annotated subject directory of more than 12,000 Internet resources selected and evaluated by librarians for their usefulness to users of public libraries. LII is used by both librarians and the general public as a reliable and efficient guide to Internet resources.” Quoted from <http://lii.org/search/file/about>, accessed on May 2, 2004.

22. An online advertising company, DoubleClick, launched a service in 2000 to track people’s Internet usage in order to serve ads based on personal taste. After considerable controversy, especially from federal regulators and privacy advocates, and an inability to develop an adequate market, the profiling service was terminated at the end of 2001. See Stefanie Olsen, “DoubleClick Turns Away from Ad Profiles,” c/net news.com, January 8, 2002, available at <http://news.com.com/2100-1023-803593.html>. However, Yahoo! indicates in its privacy policy that it does use information provided by those who register at its site “to customize the advertising and content you see, fulfill your requests for products and services….”

23. See, for example, Steven Johnson, “Digging for Googleholes,” Slate.com, July 16, 2003, available at <http://slate.msn.com/id/2085668/>; Donald O. Case, Looking for Information: A Survey of Research on Information Seeking, Needs, and Behavior, Academic Press, San Diego, Calif., 2002; and Paul Solomon, “Discovering Information in Context,” Annual Review of Information Science and Technology, Blaise Cronin, editor, Information Today, Inc., Medford, N.J., 2002, pp. 229-264.

The incorporation of context is also related to the degree to which the navigation service is itself specialized. When people searched in traditional information sources, the selection of the source usually carried information about the context for a search (e.g., seeking a telephone number in the White Pages for Manhattan in particular, or looking for a home in the multiple listing service for Boston specifically). Furthermore, there were often intermediaries who could obtain contextual information from the information seeker and use that to choose the right source and refine the information request. Although the former approach is also available on the Internet through Internet white pages and Internet real estate search sites, the latter is just developing through the virtual reference services mentioned earlier.

General-purpose navigation services are exploring a variety of mechanisms for incorporating context into search. For example, both Yahoo! and Google now allow local searches, specified by adding a location to the search terms. The site-flavored Google Search customizes searches originating at a Web site to return search results based on a profile of the site’s content. Other recent search engines such as Vivisimo (www.vivisimo.com) return search results in clusters that correspond to different contexts—for example, a search on the keyword “network” returns one cluster of results describing cable networks, another on network games, and so on.24
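Returning results in clusters that correspond to different contexts can be sketched in a few lines. The text does not describe how Vivisimo or any other service actually forms its clusters, so the following is only a naive illustration under stated assumptions: each result is a short snippet, and a snippet is assigned to the cluster labeled by the most widely shared non-query word it contains. The function name `cluster_results`, the stopword list, and the sample snippets are all hypothetical.

```python
from collections import Counter, defaultdict

# Small illustrative stopword list (an assumption, not a standard list).
STOPWORDS = {"the", "a", "of", "and", "for", "to", "in", "on"}


def cluster_results(query: str, snippets: list[str]) -> dict[str, list[str]]:
    """Group snippets under the most widely shared non-query word they contain."""
    query_terms = set(query.lower().split())

    def content_words(snippet: str) -> list[str]:
        return [
            w for w in snippet.lower().split()
            if w not in STOPWORDS and w not in query_terms
        ]

    # Count in how many snippets each candidate label word appears.
    doc_freq = Counter()
    for snippet in snippets:
        doc_freq.update(set(content_words(snippet)))

    clusters = defaultdict(list)
    for snippet in snippets:
        words = content_words(snippet)
        if not words:
            clusters["other"].append(snippet)
            continue
        # Ties are broken alphabetically so the result is deterministic.
        label = max(words, key=lambda w: (doc_freq[w], w))
        clusters[label].append(snippet)
    return dict(clusters)


results = [
    "cable network schedule",
    "cable network news",
    "network games online",
    "multiplayer network games",
]
for label, members in cluster_results("network", results).items():
    print(label, members)
```

With these sample snippets, a search on "network" yields one cluster labeled "cable" and another labeled "games", which mirrors the kind of grouping described above while making no claim about production clustering methods.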

Still, issues of context complicate Internet navigation because the most widely used navigation services are general purpose—searching the vast array of objects on the Internet with equal attention—and because many users are not experienced or trained and have no access to intermediaries to assist them.

6.1.7 Lack of Persistence

Seventh, there is no guarantee of persistence for material at a particular location on the Internet. While there is no reason to believe that everything made accessible through the Internet should persist indefinitely, there are a great many materials whose value is such that many users would want to access them at the same Internet address at indefinite times in the future. For example, throughout this report there are two kinds of references: those to printed materials (books, journals, newspapers) and those to digital resources that are located via Web pages. There is a very high probability that whenever this report is read, even years after its publication, every one of the referenced printed materials will be accessible in unchanged form through (though not necessarily in) any good library (at least in the United States). There is an equal certainty that whenever this report is read, even in the year of its publication, some of the referenced Web pages either will no longer be accessible or will no longer contain the precise material that was referenced. The proportion of missing or changed references will increase over time. To the extent that it would be valuable to locate the referenced Web material, there is a problem of persistence (see Box 6.1) of resources on the Internet.25

24. See Chris Gaither, “Google Offers Sites Its Services,” Los Angeles Times, June 19, 2004, p. C2. Also see <http://www.google.com/services/siteflavored.html>, accessed on June 18, 2004.

The problem of persistence arises because material connected to the Internet is not generally governed by the conventions, standards, and practices of preservation that evolved with regard to printed material. The material may change in many ways and for many reasons. It may remain the same, but the location may change because of a change of computers, or a redesign of a Web site, or a reorganization of files to save space, or a change in Internet service providers (ISPs).26 Or it may be replaced at the same location by an improved version or by something entirely different but with the same title. It may disappear completely because the provider stops operating or decides not to provide it any more or it may be replaced by an adequate substitute, but at a different location. Thus, navigation to an item is often a one-time event. Incorporating a reference or a link to that item in another document is no guarantee that a future seeker will find the same item at the same location. Nor is there any guarantee that something once navigated to will be there when the recorded path is followed once more or that it will be the same as it was when first found.

The problem is exacerbated for material on the World Wide Web by its structure—a hyperlinked web of pages. Even if a specific Web page persists indefinitely, it is unlikely that the many pages to which it links will also persist for the same period of time, and so on along the path of linked pages. So to the extent that the persistent page relies on those to which it links, its persistence is tenuous.

25. One example of the lack of persistence of important information is U.S. Government documents published only on the Web. The U.S. Government Printing Office has begun an effort to find and archive such electronic documents. See Florence Olsen, “A Crisis for Web Preservation,” Federal Computer Week, June 21, 2004, available at <http://www.fcw.com/fcw/articles/2004/0621/pol-crisis-06-21-04.asp>.

26. Thomas Phelps and Robert Wilensky of the University of California at Berkeley have suggested a way to overcome the difficulties caused by shifting locations. They add a small number of words carefully selected from the page to its URL. If the URL is no longer valid, the words can be used in a search engine to find the page’s new location. See Thomas Phelps and Robert Wilensky, “Robust Hyperlinks: Cheap, Everywhere, Now,” Proceedings of the 8th International Conference on Digital Documents and Electronic Publishing (Lecture Notes in Computer Science, Vol. 2023), Springer-Verlag, 2004.

BOX 6.1 Navigation is a two-step process: discovery and then retrieval. The discovery process involves identifying the location of the desired material on a Web site. The retrieval process involves obtaining the located material at the identified URL. (See Box 6.2.) Discovery may find dynamically generated pages for which only a transient URL exists, such as driving directions between two locations or an online order, or a constant URL that has ever changing information, such as a weather service. It may not be possible or even feasible (last year’s weather forecast) to retrieve the same pages again. Thus, not even a hyperlink to that material can be embedded in other pages, nor can the page be bookmarked for future reference. Even if the resource is itself static, such as the text of a research report, it may be moved, invalidating the URL. More problematically, it may be necessary to decide whether what is retrieved a second time (even if it is in the same place) is the same as what was retrieved previously. Unless the original object and the (possibly) new one are identical bit-by-bit, the question of whether the two are “the same,” or identical, raises long-standing questions that are typically very subjective and/or contextual: Is a translation into another language the same as the original? If a document is reformatted, but all of the words are the same, is it identical to the original? If a document is compressed, or transposed into a different character code, or adapted to a different version of a viewer program, is it still the same document? Is a second edition an acceptable match to a request for the first edition? And so on. For each of these questions, the correct answer is probably “sometimes,” but “sometimes” is rarely a satisfactory answer, especially if it is to be evaluated by a computer system. 
If things are abstracted further so that, instead of discovering a URL directly, the user utilizes an updatable bookmark that incorporates a reference to the discovery processes, the meaning of persistence becomes even more ambiguous. Such a bookmark, intended to reference a list of restaurants in a particular city, might upon being used not only discover a newer version of the same list, but also find a newer, more comprehensive list from a different source and at an entirely different location on the network. So what is persistent in this case is not the answer, but the question. These are arguably not new conundrums, since analogies occur with editions of books and bibliographies. But the opportunities on the Internet for faster turnover and for changes of finer granularity, as well as the ability to update search indexes at very high frequency, make the meaning and achievement of Internet persistence more complex, more ambiguous, and harder for the casual user to understand.

6.1.8 Scale

Eighth, the Internet—in particular, the World Wide Web—vastly exceeds even the largest traditional libraries in the amount of material that it makes accessible.27 In 2003, the World Wide Web was estimated to contain 170 terabytes of content on its surface sites, that is, those that are public and readily accessible. This compares with an estimated 10 terabytes of printed documents in the Library of Congress (which also contains many terabytes of material in other media). In addition, the “dark” Web is estimated to contain 400 to 450 times as much content in its databases and in other forms inaccessible to search engines,28 a ratio that may be increasing. (See the discussion titled “The Deep, Dark, and Invisible Web” in Section 7.1.7.)

6.1.9 The Sum of the Differences

For all the reasons described above, navigation on the Internet is different from navigating through traditional collections of documents; finding information or services through the use of knowledgeable intermediaries; locating the television audience for a commercial or political message; or reaching the readers for an essay. Navigating the Internet is even more than the sum of all those differences over a much larger extent and variety of resources and a much greater number and diversity of users and providers.

Conclusion: Finding and accessing a desired resource via the Internet poses challenges that are substantially different from the challenges faced in navigating to resources in non-digital, non-networked environments because of differences in content, purpose, description, user community, institutional framework, context, skill, persistence, and scale.

Fortunately, the Internet has also served as the infrastructure for the development of new means for responding to these challenges. As the following section and the next chapter show, a wide range of navigation aids and services now permit large segments of the Internet to be traversed rapidly and efficiently in ways previously unimaginable, providing ready access to a vast world of human knowledge and experience to users across the globe and opening an international audience to purveyors of content and services no matter where they may be located.

27 Because the Web contains a large amount of “format overhead” and non-reliable information, this comparison was disputed for the Web of 2000 (25-50 terabytes estimated) by researchers from OCLC. See Edward T. O’Neill et al., “Trends in the Evolution of the Public Web 1998-2002,” D-Lib Magazine 9(4), 2003, at <http://www.dlib.org/dlib/april03/lavoie/04lavoie.html>.

28 These data are taken from Lyman and Varian, “How Much Information?,” 2003.

6.2 INTERNET NAVIGATION AIDS AND SERVICES—HISTORY

Aids and services to assist Internet navigation have had to evolve steadily to keep pace with the growth in the scale, scope, and complexity of material on the Internet.29

In the first years of the Internet,30 the 1970s and 1980s, information was accessed through a two-step process: first, the host on which a desired resource resided was found,31 and then the desired file was found.32 A good example of this host-oriented navigation is the File Transfer Protocol (FTP). Retrieving a file using FTP required that the user (using FTP client software) already know the name of the host (which would be using FTP server software) on which the file was stored,33 have a login name and password for that host (unless anonymous login was permitted), know a file name, and sometimes have other information such as an account name for computer time billing.34 The File Transfer Protocol made it possible to retrieve data from any FTP server to which one was connected by a network. File names and server locations could be obtained in various ways—from friends, from newsgroups and mailing lists, from files that identified particular resources, or from a few archive servers that contained very large collections of files with locally created indexing. However, such ad hoc and labor-intensive processes do not scale well. More automated processes were needed as the number of Internet users and topics of interest increased substantially.
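The host-oriented, two-step character of FTP retrieval can be illustrated with a short sketch. It uses Python's standard `ftplib` module as a modern stand-in for the client software of the period; the function name `fetch_file`, the host `ftp.example.org`, the file path, and the injectable `ftp_class` parameter are all illustrative assumptions rather than anything drawn from the historical record.

```python
from ftplib import FTP
from io import BytesIO


def fetch_file(host, path, user="anonymous", password="guest@example.org",
               account="", ftp_class=FTP):
    """Retrieve one file, given everything the user had to know in advance.

    ftp_class is a hypothetical hook so the sketch can be exercised
    without a network connection; by default it is ftplib.FTP.
    """
    # Step 1: the host name must already be known; FTP offers no way to find it.
    ftp = ftp_class(host)
    try:
        # Login name, password, and (on some systems) a billing account.
        ftp.login(user, password, account)
        # Step 2: the file name must also be known before it can be retrieved.
        buffer = BytesIO()
        ftp.retrbinary(f"RETR {path}", buffer.write)
        return buffer.getvalue()
    finally:
        ftp.close()


if __name__ == "__main__":
    # Hypothetical host and path; this call needs a reachable FTP server.
    data = fetch_file("ftp.example.org", "pub/readme.txt")
    print(len(data), "bytes retrieved")
```

Every argument to the function corresponds to something the user had to learn from outside the protocol, which is the scaling problem that the later navigation aids addressed.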

29 The early period of rapid development of Internet navigation aids was not well documented, nor are there many good references. Many of the critical developments are included in this brief history, but it is not intended to be comprehensive.

30 In this section, the “Internet” also includes the ARPANET. See Chapter 2.

31 While a few protocols did not explicitly name a host, in most cases that meant that the host name was implicit in the service being requested (e.g., running a program that queried a central database that resides only on the Network Information Center (NIC) computer implies the host name of interest).

32 At a technical level, this still occurs, but is less visible to the user. For example, a URL contains a domain (host) name, and the client (browser in the case of the Web) uses some protocol (usually the Hypertext Transfer Protocol (HTTP) in the case of the Web) to open a connection to a specified host. The rest of the URL is then transmitted to that host, which returns data or takes other action.

33 Once the desired FTP server was located, FTP eventually included capabilities to travel down directory hierarchies to find the desired files. (At the time FTP was designed, the protocol did not contain any provision for dealing with hierarchical files—that was added somewhat later.) User names and the account command were, on many systems, the primary mechanism for providing context for the file names. That is, “user” and “acct” were navigational commands as much as they were authentication and authorization commands.

34 Frequently, some documentation about the content of directories was provided in files with the file name of “readme.txt” or some variation thereof, such as AAREAD.ME, to take advantage of alphabetic sorting when the directory was retrieved.

6.2.1 Aiding Navigation via the Internet

The first response to the need for automated processes was Archie,35 a selective index of those files thought to be “interesting” by those who submitted them to the index and of the FTP servers on which they were located. When Archie was first released in 1989, many people were skeptical of the idea of having an index of such large size on one computer, but Archie was a success. It consisted of three components: the data gatherer, the index merger, and the query protocol. The gathering of data was not done by brute force. The company that took over operation of the Archie service (Bunyip Information Systems) relied on licensed Archie server operators all over the world (who knew which FTP archives were reliable) to run a data gatherer that indexed local files by searching each local site.36 Each local gatherer passed the partial index upstream, and eventually the partial indexes were merged into a full index having fairly simple functionality, primarily limited to single-level alphabetic sorting. This process of collaborative gathering saved time and minimized the bandwidth required. A special protocol allowed clients to query the full index, which was replicated on servers around the world, to identify the FTP server that contained the requested file.
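The three components described above (data gatherer, index merger, and query protocol) can be sketched in a few lines. This is only a schematic illustration of collaborative gathering and merging, not a reconstruction of Archie's actual software or data formats; the function names and the host names under `example` domains are hypothetical.

```python
def gather_site(server, filenames):
    """Data gatherer: build a partial index for one FTP server's listing."""
    return {name: {server} for name in filenames}


def merge_partials(partials):
    """Index merger: combine partial indexes into one alphabetically sorted index."""
    full = {}
    for partial in partials:
        for name, servers in partial.items():
            full.setdefault(name, set()).update(servers)
    # Single-level alphabetic sorting by file name.
    return dict(sorted(full.items()))


def query(index, filename):
    """Query step: return the servers known to hold the requested file."""
    return sorted(index.get(filename, ()))


if __name__ == "__main__":
    # Hypothetical listings passed upstream by two local gatherers.
    partials = [
        gather_site("ftp.a.example", ["rfc822.txt", "readme.txt"]),
        gather_site("ftp.b.example", ["readme.txt"]),
    ]
    index = merge_partials(partials)
    print(query(index, "readme.txt"))
```

Because each gatherer works only on nearby sites and passes a compact partial index upstream, no single machine has to crawl every archive, which is the bandwidth saving the text describes.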

Archie still required users to employ FTP to download the files of interest for viewing on their local computers. The next aid, Gopher, was developed as a protocol for viewing remote files, which were in a specified format, on a user’s local computer. It became the first navigation aid on the Internet that was easily accessible by non-computer-science specialists.37 The Gopher “browser” provided users with the capability to look at the content of a file on the computer that stored it, and if the file included a reference to another file, to “click” directly on the link to see the contents of the second file. Gopher included some metadata38 so that, for example, when a user clicked on a link, the user’s client software would automatically check the metadata, choose the desired document format, and start the appropriate program to deal with the data format.

35 “Archie” is not an acronym but is a shortening of the word “archive” to satisfy software constraints. Peter Deutsch, Alan Emtage, Bill Heelan, and Mike Parker at McGill University in Montréal created Archie.

36 The burden this imposed on the FTP servers led the operators of many of them to create an Archie-usable listing of the server in its root directory and to keep that listing up to date, obviating the need for Archie to search the server.

37 Gopher, created in 1991 by Mark McCahill and his technical team at the University of Minnesota, is not an acronym but is named after the mascot at the University of Minnesota. See Chris Sherman, “SearchDay,” Number 198, February 6, 2002, available at <http://searchenginewatch.com/searchday/02/sd0206-gopher.html>.

38 Metadata are data that describe the characteristics or organization of other data. See Murtha Baca, Introduction to Metadata: Pathways to Digital Information, Getty Information Institute, Los Angeles, 1998.

To overcome Gopher’s limitation to files on a single computer, a central search aid for Gopher files—Veronica—was created in 1992.39 It did for Gopher what Archie did for FTP. Veronica was the first service that used brute-force software robots (software that automatically searched the Internet) to collect and index information and, therefore, could be seen as the forerunner of Web search engines. In 1993, Jughead added keywords and Boolean search capabilities to Veronica.40

The next step in the evolution of Internet navigation aids was the wide area information server (WAIS) that enabled search using word indices of specified files available on the Internet.41 The WAIS search engine received a query, sought documents relevant to the question in its database by searching the word indices, and returned a list of documents ordered by estimated relevance to the user. Each document was scored from 1 to 1000 based on criteria such as its match to the user’s question as determined by how many of the query words it contained and their importance in the document. WAIS did not index the entire Internet, but rather only specific files and servers on it. At its peak, WAIS linked up to 600 databases, worldwide.42 It was used, for example, by Dow Jones to create a fully indexed online file of its publications. WAIS was an important step beyond FTP, Gopher, and Archie (and friends) because it built on known information-retrieval methods43 and standards, including the Z39.50 “search and retrieve” standard as its key data representation model.44
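The kind of relevance ranking described above can be illustrated with a toy scorer. The sketch below is an assumption-laden simplification and not the actual WAIS algorithm: it combines the fraction of query words a document contains with the share of the document those words occupy, then rescales so that the best match receives 1000. The function names and the sample documents are hypothetical.

```python
from collections import Counter


def raw_score(query, text):
    """Combine query-word coverage with the words' weight in the document."""
    words = text.lower().split()
    query_words = set(query.lower().split())
    if not words or not query_words:
        return 0.0
    counts = Counter(words)
    matched = [w for w in query_words if counts[w] > 0]
    coverage = len(matched) / len(query_words)             # how many query words appear
    weight = sum(counts[w] for w in matched) / len(words)  # their share of the document
    return coverage * weight


def rank(query, documents):
    """Return (name, score) pairs, scaled to 1..1000, best match first."""
    raw = {name: raw_score(query, text) for name, text in documents.items()}
    best = max(raw.values(), default=0.0)
    if best == 0:
        return []
    scored = [(name, max(1, round(1000 * s / best)))
              for name, s in raw.items() if s > 0]
    return sorted(scored, key=lambda pair: (-pair[1], pair[0]))


if __name__ == "__main__":
    docs = {
        "a": "domain name system domain",
        "b": "the name of the rose",
        "c": "gardening tips",
    }
    print(rank("domain name", docs))
```

Documents that match no query word are omitted from the result, mirroring the idea that only relevant documents are returned to the user.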

39 Veronica (Very Easy, Rodent-Oriented, Net-Wide Index to Computerized Archives) was created in November 1992 by Fred Barrie and Stephen Foster of the University of Nevada System Computing Services group.

40 Jughead (Jonzy’s Universal Gopher Hierarchy Excavation And Display) was created by Rhett “Jonzy” Jones at the University of Utah computer center. The names of these two protocols were derived from characters in Archie comics. See <http://www.archiecomics.com/>.

41 WAIS was developed between 1989 and 1992 at Thinking Machines Corporation under the leadership of Brewster Kahle. Unlike Archie (and friends), Gopher, and the Web, WAIS was firmly rooted in information sciences approaches and technology.

42 Richard T. Griffiths, “Chapter Four: Search Engines,” History of the Internet, Leiden University, The Netherlands, 2002, available at <http://web.let.leidenuniv.nl/history/ivh/chap4.htm>.

43 Many of the navigation aids and services described in this history drew inspiration and methodology from the long line of research in information science, as well as computer science. It has not been possible to give full credit to those antecedents in this brief history.

44 For information about the Z39.50 protocol, see National Information Standards Organization, Z39.50 resource page, available at <http://www.niso.org/z39.50/z3950.html#other>; and Mark Needleman, “Z39.50–A Review, Analysis and Some Thoughts on the Future,” Library HiTech 18(2):158-65, 2000, available at <http://www.biblio-tech.com/html/z39.50.html>, accessed on June 23, 2004.

FTP with Archie, Gopher with Veronica and Jughead, and WAIS demonstrated three ways to index and find information on the Internet.45 They laid the foundation for the subsequent development of aids for navigating the World Wide Web.

6.2.2 Aiding Navigation Through the World Wide Web

While those early navigation aids were being developed, a new way of structuring information on the Internet46—the World Wide Web—was under development at the European Organization for Nuclear Research (CERN) in Geneva.47 Many of the concepts underlying the Web existed in Gopher, but the Web incorporated more advanced interface features, was designed explicitly for linking information across sites, and employed a common language, called Hypertext Markup Language (HTML), to describe Web content. With the deployment of the Mosaic browser48 and its widely adopted commercial successors—Netscape’s Navigator and Microsoft’s Internet Explorer—it became easy to view HTML formatted documents and images located on the Web, making it much more appealing to most users than Gopher’s text-oriented system.

Indeed, the use of browsers on the Web eventually replaced the use of WAIS, FTP/Archie and Gopher/Veronica. At the same time, the Web unintentionally made domain names valuable as identifiers, thereby raising their profile and importance. While FTP and Gopher sites used domain names, these names attracted little attention per se. However, the development of the Web and its meteoric growth linked domain names and the Web very closely through their prominent inclusion in browser addresses as a key part of Uniform Resource Locators (URLs). (See Box 6.2.) As noted in Chapter 2, it was commonplace to navigate across the World Wide Web just by typing domain names into the address bar, since browsers automatically expanded them into URLs. Often, at that time, the domain names were simply guessed on the assumption that trying a brand name followed by .com had a high probability of success.

45 See Michael F. Schwartz, Alan Emtage, Brewster Kahle, and B. Clifford Neuman, “A Comparison of Internet Discovery Approaches,” Computing Systems 5(4):461-493, 1992.

46 The Web’s structure is hypertext, which was anticipated in the form of “associative indexing” by Vannevar Bush in a famous article, “As We May Think,” published in the Atlantic Monthly in July 1945. The term “hypertext” was coined by Theodor (Ted) Nelson in the early 1960s. Nelson spent many years publicizing the concept and attempting early implementations. Douglas C. Engelbart’s NLS/Augment project at the Stanford Research Institute (which in 1977 became known as SRI International) first demonstrated a hypertext system in 1968. Apple Computer introduced HyperCard, a commercial implementation of hypertext for the Macintosh, in 1987. The practical implementation of a hypertext data structure on the Internet at a time when computer capacity and network speed were finally sufficient to make it practical was CERN’s contribution.

47 Tim Berners-Lee and his team developed the Web in 1990. It was first released to the physics community in 1991 and spread quickly to universities and research organizations thereafter. See <http://public.web.cern.ch/public/about/achievements/www/history/history.html>.

48 Mosaic, which was released in 1993 by the National Center for Supercomputing Applications (NCSA) at the University of Illinois, Urbana-Champaign, was the first Web browser with a graphical user interface for the PC and Apple environments. It had an immediate effect on the widespread adoption of the Web by non-research users. Marc Andreessen, one of the developers of Mosaic, became a co-founder of Netscape. See <http://www.ncsa.uiuc.edu/Divisions/Communications/MosaicHistory/>.

The Web, whose underlying structure is hypertext—resources connected by links—also introduced a new and convenient means of navigation: “clicking” on hyperlinks on a page displayed in a browser. Hyperlinks generally have text, called anchor text, which many browsers display by underlining and color change. When the user moves the pointer to the anchor text of a hyperlink and clicks the mouse button, the browser finds the associated URL in the code for that page and accesses the corresponding page.49 Thus, navigation across the Web from an initial site can consist of nothing more than a series of clicks on the anchor text of hyperlinks.
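The separation between what a hyperlink displays and where it leads can be made concrete with a small sketch that extracts both from a page's HTML. It relies on Python's standard `html.parser` module; the class name `LinkCollector`, the helper `extract_links`, and the sample page with its `example` addresses are hypothetical.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect (anchor text, URL) pairs from the hyperlinks on a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None   # URL of the link currently being read, if any
        self._text = []     # pieces of anchor text seen so far

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append(("".join(self._text).strip(), self._href))
            self._href = None


def extract_links(html):
    collector = LinkCollector()
    collector.feed(html)
    collector.close()
    return collector.links


if __name__ == "__main__":
    page = ('<p>Visit <a href="http://www.example.org/reports/">the report archive</a> '
            'or <a href="http://other.example.net/">www.example.org</a>.</p>')
    for text, url in extract_links(page):
        print(f"{text!r} -> {url}")
```

In the second link of the sample page the anchor text names one site while the URL points to another. Nothing in the page's code requires the two to agree, so a reader who trusts the visible text alone can be misdirected.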

The World Wide Web has experienced rapid and continual growth50 since the introduction of browsers. In 1993, when the National Center for Supercomputing Applications’ (NCSA) Mosaic browser was made publicly available, there were just 200 sites on the Web. The growth rate of Web traffic in 1993 in transmitted bytes was 340,000 percent, as compared with 997 percent for Gopher traffic.51 By March 1994, Web traffic exceeded Gopher traffic in absolute terms.52 Based on one estimate of the number of Internet hosts,53 the exponential growth of Web sites continued from

|

49 |

Since there is no required association between the hyperlink and its anchor text, there is an opportunity for malicious or criminal misdirection to deliberately bogus sites, which has been exploited recently as a vehicle for identity theft and is referred to as “phishing” or “brand spoofing.” |

|

50 |

There is difficulty in measuring the rate of growth of and the volume of information on the Web because of the lack of consistent and comprehensive data sources. The lack of consistent measurements over time is also attributable to the very factors that continue to enable the Web’s growth—as the technology and architecture supporting the Web have evolved, so have the methods of characterizing its growth. |

|

51 |

See Robert H. Zakon, “Hobbes’ Internet Timeline,” RFC 2235, November 1997, available at <http://www.rfc-editor.org>. |

|

52 |

See Susan Calcari, “A Snapshot of the Internet,” Internet World 5(1, September):54-58, 1994. Also, Merit Network, Inc., Internet statistics, 1995, available at <ftp://nic.merit.edu/nsfnet/statistics>. Graphics and tables of NSFNET backbone statistics are available at <http://www.cc.gatech.edu/gvu/stats/NSF/merit.html>. |

|

53 |

Internet Systems Consortium provides host data, which are available at <http://www.isc.org/>. |

|

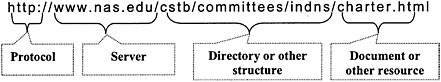

BOX 6.2 The Uniform Resource Identifier (URI)1 is a general name for resource identifiers on the Internet. Several distinct types of URIs have been defined: Uniform Resource Locators (URLs), Uniform Resource Names (URNs), and Uniform Resource Characteristics (URCs), each of which identifies a resource differently. URLs are, by far, the most commonly used of the three. URLs are URIs that identify resources by their location. Although URLs can be used to locate many different types of resources on the Internet, the most common URLs are those used to identify locations on the World Wide Web.2 Web URLs, such as <http://www.nas.edu>, include “http” to designate the Hypertext Transfer Protocol (HTTP) and “www.nas.edu” to designate the Web location of interest. The example below shows the URL for the committee’s charter document in HTML format located in the directory3 /cstb/committees/indns, located on the www.nas.edu HTTP server.

A URL cannot separate the location from the name of a resource. Therefore, a URL is outdated when a resource is moved to a new location, yet it remains unchanged even when the resource at that location is changed. The URN type of URI was defined to meet the need for a more persistent identifier. A URN identifies a resource by assigning it a permanent and globally unique name drawn from a specified name space that is not tied to a location.4 However, there needs to be a continually updated table linking each URN to the current location of the resource it names. A third class of URI, the URC, was proposed to incorporate metadata about resources and their corresponding URNs. URCs have not achieved widespread acceptance or use. |

1993 to 1998, with the number of hosts approximately doubling each year. After 1998, the rate of growth in hosts slowed to a doubling every 2 years. Web server data54 provides the number of active Web sites located via the DNS as a measure of the growth in the volume of information on the Web. Although data are discontinuous, from June 1993 to December 1995, the number of Web sites doubled every 3 months; from December 1997 to December 2000, the number doubled approximately every 10 months.

|

54 |

Matthew Gray of the Massachusetts Institute of Technology compiled data on the growth of Web sites from June 1993 to June 1995, which is available at <http://www.mit.edu/people/mkgray/net/web-growth-summary.html>; the Netcraft Web Server Survey began in August 1995 and is available at <http://www.netcraft.com/Survey/Reports/>. |

|

An approach different from URIs for naming resources on the Internet motivates the Handle System.5 A handle consists of two parts separated by a forward slash. The first part is a naming authority—a unique, arbitrary number assigned within the Handle System, which identifies the administrative unit that is the creator of the handle, but not necessarily the continuing administrator of the associated handles. The part after the slash is a local name that identifies the specific object. It must be unique to the given naming authority. The Handle System allows a handle to map to more than one version or attribute of a resource and resolve more than one piece of data. The multiple resolution capabilities of handles support extended services such as reverse lookup, multi-versioning, and digital rights management.

|
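The anatomy of a Web URL and of a handle, as described in Box 6.2, can be shown with a brief sketch based on Python's standard `urllib.parse` module. The host and directory come from the box; the file name `charter.html`, the sample handle, and the function names are assumptions made for illustration only.

```python
import posixpath
from urllib.parse import urlsplit


def describe_url(url):
    """Split a Web URL into protocol, host, directory, and file name."""
    parts = urlsplit(url)
    return {
        "protocol": parts.scheme,
        "host": parts.netloc,
        "directory": posixpath.dirname(parts.path),
        "file": posixpath.basename(parts.path),
    }


def split_handle(handle):
    """Split a handle into (naming authority, local name) at the first slash."""
    authority, separator, local_name = handle.partition("/")
    if not separator or not authority or not local_name:
        raise ValueError(f"not a two-part handle: {handle!r}")
    return authority, local_name


if __name__ == "__main__":
    # The file name "charter.html" is hypothetical; the box gives only host and directory.
    print(describe_url("http://www.nas.edu/cstb/committees/indns/charter.html"))
    # A made-up handle: naming authority "12345", local name "report-2005/v2".
    print(split_handle("12345/report-2005/v2"))
```

The URL's parts all describe where the resource sits, which is why moving the resource invalidates the URL. The handle, by contrast, carries only a naming authority and a local name, leaving the current location to be resolved separately.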

As the volume of material on the Web grew exponentially, simple guessing and hypertext linking no longer served to find the sites users wanted. Nor was “NCSA What’s New,”55 the almost daily un-indexed list of new sites that NCSA published from June 1993 to June 1996, sufficient. New methods for navigating to Web resources were badly needed and the need unleashed a flurry of innovation, much of it based in universities and often the product of graduate students and faculty, who recognized the opportunity and had the freedom to pursue it. The new navigation services took two primary forms: directories and search engines. Directories organized Web resources by popular categories. Search engines indexed Web resources by the words found within them.

55 An archive of those listings is available at <http://archive.ncsa.uiuc.edu/SDG/Software/Mosaic/Docs/whats-new.html>.

The sequence of key developments from 1993 through 2004 in both forms of Web navigation system is shown in detail in Box 6.3. A broad overview of the development follows.

Web navigation service development began in 1993, the year the Mosaic browser became available, first to universities and research laboratories, and then more generally on the Internet. The first directory and the first search engines were created in that year. But it was in 1994 that the first of the widely used directories—Yahoo!—and the first full-text search engine—WebCrawler—were launched. Over the next few years, technological innovation occurred at a rapid pace, with search engines adding new features and increasing their speed of operation and their coverage of the Web as computing and communication technology and system design advanced. Lycos, launched in 1994, was the first Web search engine to achieve widespread adoption as it indexed millions of Web pages. It was followed in 1995 by Excite and AltaVista. AltaVista in particular offered speed improvements and innovative search features. With the launch of Google in beta in 1998 and as a full commercial offering in 1999, the general nature of the technology of search engines appeared to reach a plateau, although there is continual innovation in search algorithms and approaches to facilitate ease of use. The commercial evolution of search services continued rapidly, both through additional entries into the market and through an increasingly rapid consolidation of existing entrants. The evolution of directory technology has been less visible and probably less rapid. The focus of that evolution appears, rather, to have been on the means of creating and maintaining directories and on the addition of offerings, including search engines, to the basic directory structure.

By the first years of this century, the two worlds of search engines and directories had merged, at least commercially. In 2004, Google offered Google Directory (supplied by Open Directory) and Yahoo! offered Yahoo! Search (provided by its acquisition—Inktomi) with paid ads (provided by its acquisition—Overture). By 2004 most commercial navigation services offered advertisements associated with specific responses. These paid ads are the principal source of funding and profit for commercial navigation services. (Commercial Internet navigation is discussed in further detail in Section 7.2.) Associated with this latter development has been the rapid rise of the business of search engine marketing, which helps commercial Web sites to decide in which search engines and directories they should pay for ads; and search engine optimization, which helps to design Web sites so search engines will easily find and index them. (Organizations that provide these services generally also provide assistance with bidding strategies and assistance in advertising design.) Finally, by 2004, many search services had established themselves as portals—sites whose front pages offer access to search; news, weather, stock prices, entertainment listings, and other information; and links to travel, job search, and other services.

The development of Internet navigation aids and services, especially those focused primarily on the Web, stands in interesting contrast to the development of the Domain Name System as described in Chapter 2.

Conclusion: A wide range of reasonably effective, usable, and readily available Internet navigation aids and services have been developed and have evolved rapidly in the years since the World Wide Web came into widespread use in 1993.

Large investments in research and development are currently being made in commercial search and directory services. Still, many of the unexpected innovations in Internet navigation occurred in academic institutions. These are places with strong traditions of information sharing and open inquiry. Research and education are “problem-rich” arenas in which students and faculty nurture innovation.

Conclusion: Computer science and information science graduate students and faculty played a prominent role in the initial development of a great many innovative Internet navigation aids and services, most of which are now run as commercial enterprises. Two of those services have become the industry leaders and have achieved great commercial success—Yahoo! and Google.

Conclusion: Because of the vast scale, broad scope, and ready accessibility of resources on the Internet, the development of navigation aids and services opens access to a much wider array of resources than has heretofore been available to non-specialist searchers. At the same time, the development of successful Internet navigation aids and services opens access to a much broader potential audience than has heretofore been available to most resource providers.

One cannot know if the past is prelude, but it is clear that the number and the variety of resources available on the Internet continue to grow, that uses for it continue to evolve, and that many challenges of Internet navigation remain. Some of the likely directions of technological development are described in Section 8.1.

BOX 6.3

1989

Work started on the InQuery engine at the University of Massachusetts that eventually led to the Infoseek engine.

1993

First Web robot or spider.1 The World Wide Web Wanderer was created by MIT student Matthew Gray. It was used to count Web servers and create a database, Wandex, of their URLs.

Six Stanford University undergraduates started work on the Architext search engine, which used statistical clustering and was the basis for the Excite search engine launched in 1995.

First directory. WWW Virtual Library was created by Tim Berners-Lee.

ALIWEB, an Archie-like index of the Web based on automatic gathering of information provided by webmasters, was created by Martijn Koster at Nexor Co., United Kingdom.

First robot-based search engines launched. The World Wide Web Worm, JumpStation, and Repository-Based Software Engineering (RBSE) were launched. None indexed the full text of Web pages.

1994

The World Wide Web Worm indexed 110,000 Web pages and Web-accessible documents; it received an average of 1500 queries a day (in March and April).

First searchable directory of the Web. Galaxy, created at the MCC Research Consortium, provided a directory service to support electronic commerce.

First widely used Web directory. Yahoo! was created by two Stanford graduate students, David Filo and Jerry Yang, as a directory of their favorite Web sites. Usage expanded with the growth in entries and addition of categories. Yahoo! became a public company in 1995.

First robot-based search engine to index full text of Web pages. WebCrawler was created by Brian Pinkerton, a student at the University of Washington.

Lycos was created by Michael Mauldin, a research scientist at Carnegie Mellon University. It quickly became the largest robot-based search engine. By January 1995 it had indexed 1.5 million documents and by November 1996, over 60 million, more than any other search engine at the time.

Harvest was created by the Internet Research Task Force Research Group on Resource Discovery at the University of Colorado. It featured a scalable, highly customizable architecture and tools for gathering, indexing, caching, replicating, and accessing Internet information.

Infoseek Guide, launched by Infoseek Corporation as a Web directory, was initially fee based, and then free.

The OpenText 4 search engine was launched by Open Text Corporation based on work on full-text indexing and string search for the Oxford English Dictionary. (In 1996 it launched “Preferred Listings,” enabling sites to pay for listing in top-10 search results. Resultant controversy may have hastened its demise in 1997.)

1995

The Infoseek search engine was launched in February and in December became Netscape’s default search service, displacing Yahoo!

First metasearch service. SavvySearch, created by Daniel Dreilinger, a graduate student at Colorado State University, queried multiple search engines and combined their results.

First commercial metasearch service. MetaCrawler, developed by graduate student Erik Selberg and faculty member Oren Etzioni at the University of Washington, was licensed to go2net.

The Excite commercial search engine, based on the Stanford Architext engine, was launched.

The Magellan directory was launched by the McKinley Group. It was complemented by a book, The McKinley Internet Yellow Pages, that categorized, indexed, and described 15,000 Internet resources and that accepted advertising.

Search engine achieved record speed: 3 million pages indexed per day. AltaVista, launched by Digital Equipment Corporation, combined computing power with innovative search features (including Boolean operators, newsgroup search, and users’ addition and removal of their own URLs) to become the most popular search engine.

1996

First search engine to employ parallel computing for indexing: 10 million pages indexed per day. Inktomi Corporation launched the HotBot search engine based on work of faculty member Eric Brewer and graduate student Paul Gauthier of the University of California, Berkeley. HotBot used clusters of inexpensive workstations to achieve supercomputer speeds. It adopted the OEM search model (providing search services through others) and was licensed to Wired magazine’s Web site, HotWired.

First paid listings in an online directory. LookSmart was launched as a directory of Web site listings. Containing both paid commercial listings and non-commercial listings submitted by volunteer editors, it also adopted the OEM model.

First Internet archive. Archive.org was launched by Brewster Kahle as an Internet repository with the goal of archiving snapshots of the Web’s content on a regular basis.

Consolidation begins: Excite acquired WebCrawler and Magellan.

1997

First search engine to incorporate automatic classification and creation of taxonomies of responses and to use multidimensional relevance ranking. The Northern Light search engine also indexed proprietary document collections.

AOL launched AOL NetFind, its own branded version of Excite.

The Mining Company directory service, started by Scott Kurnit and others, used a network of “guides” to prepare directory articles.

First “question-answer” style search engine. Ask Jeeves was launched. The company was founded in 1996 by a software engineer, David Warthen, and a venture capitalist, Garrett Gruener. The service emphasized ease of use, relevance, precision, and ability to learn.

Alexa.com was launched by Brewster Kahle. It assisted search users by providing additional information (site ownership, related links, and a link to Encyclopedia Britannica) and also provided a copy of all indexed pages to Archive.org.

AltaVista, the largest search engine, indexed 100 million pages total and received 20 million queries per day.

Open Text Corporation ceased operation.

1998

First search engine with paid placement (“pay-per-click”) in responses. Idealab! launched the GoTo search engine. Web sites were listed in an order determined by what they paid to be included in responses to a query term.

First open source Web directory. Open Directory Project was launched (initially with the name GNUhoo and then NewHoo) with the goal of becoming the Web’s most comprehensive directory through the use of the open source model: contributions by thousands of volunteer editors.

First search engine to use “page rank,” based on number of links to a page, in prioritizing results of Web keyword searches. Stanford graduate students Larry Page and Sergey Brin announced Google, which was designed to support research on Web search technology. Google became available as a “beta version” on the Web.

Microsoft launched MSN Search using the Inktomi search engine.

The Direct Hit search engine was introduced. It ranked responses by the popularity of sites among previous searchers using similar keywords.

Yahoo! Web search was powered by Inktomi.

Consolidation heats up: GoTo acquired WWW Worm; Lycos acquired Wired/HotBot; Netscape acquired the Open Directory; Disney acquired a large stake in Infoseek.

1999

Google, Inc. (formed in 1998) opened a fully operational search service. AOL/Netscape adopted it for search on its portal sites.

The Norwegian company Fast Search & Transfer (FAST) launched the AllTheWeb search engine.

The Mining Company was renamed About.com.

Northern Light became the first engine to index 200 million pages.

The FindWhat pay-for-placement search engine was launched to provide paid listings to other search engines.

Consolidation continued: CMGI acquired AltaVista; At Home acquired Excite.

2000

Yahoo! adopted Google as the default search results provider on its portal site.

Google launched an advertising program to complement its search services, added Netscape Open Directory to augment its search results, and began 10 non-English-language search services.

Consolidation continued: Ask Jeeves acquired Direct Hit; Terra Networks S.A. acquired Lycos.

By year’s end, Google had become the largest search engine on the Web with an index of over 1.3 billion pages, answering 60 million searches per day.

2001

Google acquired the Deja.com Usenet archive dating back to 1995.

Overture, the new name for GoTo, became the leading pay-for-placement search engine.

The Teoma search engine, launched in April, was bought by Ask Jeeves in September.

The Wisenut search engine was launched.

Magellan ceased operation.

By the end of the year, Google had indexed over 3 billion Web documents (including a Usenet archive dating back to 1981).

2002

Consolidation continued: LookSmart acquired Wisenut.

The Gigablast search engine was launched.

2003

Consolidation heated up: Yahoo! acquired Inktomi and Overture, which had acquired AltaVista and AllTheWeb.

FindWhat acquired Espotting.

Google acquired Applied Semantics and Sprinks.

Google indexed over 3 billion Web documents and answered over 200 million searches daily.

2004

Competition became more intense: Yahoo! switched its search from Google to its own Inktomi and Overture services.

Amazon.com entered the market with A9.com, which added Search Inside the Book™ and other user features to the results of a Google search.

Google included (in February) 6 billion items: 4.28 billion Web pages, 880 million images, 845 million Usenet messages, and a test collection of book-related information pages.

Google went public in an initial public offering in August.

Ask Jeeves acquired Interactive Search Holdings, Inc., which owned Excite and iWon.

In November, Google reported that its index included over 8 billion Web pages.2

In December, Google, four university libraries, and the New York Public Library announced an agreement to scan books from the library collections and make them available for online search.3

SOURCES: Search Engine Optimization Consultants, “History of Search Engines and Directories,” June 2003, available at <http://www.seoconsultants.com/search-engines/history.asp>; iProspect, “A Brief History of Search Engine Marketing and Search Engines,” 2003, available at <http://www.iprospect.com/search_engine_placement/seo_history.htm>; Danny Sullivan, “Search Engine Timeline,” SearchEngineWatch.com, available at <http://www.searchenginewatch.com/subscribers/factfiles/article.php/2152951>; and Wes Sonnenreich, “A History of Search Engines,” Wiley.com, available at <http://www.wiley.com/legacy/compbooks/sonnenreich/history.html>.

6.3 ADDENDUM: SEARCHING THE WEB VERSUS SEARCHING LIBRARIES

Searching on the public Web has no direct analog with searching libraries, which is both an advantage and a disadvantage. Library models provide a familiar and useful comparison for explaining the options and difficulties of categorizing Web resources.

First, locating an item by its URL has no direct equivalent in library models. The URL usually combines the name of a resource with its precise machine location. The URL approach assumes a unique resource at a unique location. Library models assume that documents exist in multiple locations, and separate the name of the document (its bibliographic description, usually a catalog record or index entry) from its physical location within a given library. Classification systems (e.g., Dewey decimal or Library of Congress) are used for shelf location only in open-stack libraries, which are prevalent in the United States. Even then, further coding is needed to achieve unique numbering within individual libraries.56 In closed-stack libraries where users cannot browse the shelves, such as the Library of Congress, books usually are stored by size and date of acquisition. The uniqueness of name and location of resources that is assumed in a URL leads to multiple problems of description and persistence, as explained in this chapter.

Second, resources on the Web may be located by terms in their pages because search engines attempt to index documents in all the sites they select for indexing, regardless of type of content (e.g., text, images, sound; personal, popular, scholarly, technical), type of hosting organization (e.g., commercial, personal, community, academic, political), country, or language. By comparison, no single index of the contents of the world’s libraries exists. Describing such a vast array of content in a consistent manner is an impossible task, and libraries do not attempt to do so. The resource that comes closest to being a common index is WorldCat,57 which “is a worldwide union catalog created and maintained collectively by more than 9,000 member institutions” of the Online Computer Library Center (OCLC) and in 2004 contained about 54 million items.58 The contents of WorldCat consist of bibliographic descriptions (cataloging records) of books, journals, movies, and other types of documents; the full content of these documents is not indexed. Despite the scope of this database, it represents only a fraction of the world’s libraries (albeit most of the largest and most prestigious ones), and only a fraction of the collections within these libraries (individual articles in journals are not indexed, nor are most maps, archival materials, and other types of documents). The total number of documents in WorldCat (54 million) is small compared with those indexed by Google, Infoseek, AltaVista, or other Internet search engines.

Rather than creating a common index to all the world’s libraries, libraries achieve consistent and effective access to documents by dividing them into manageable collections according to their subject content or audience. Library catalogs generally represent the collections of only one library, or at most a group of libraries participating in a consortium (e.g., the campuses of the University of California59). These catalogs describe books, journals (but not individual journal articles), and other types of documents. Many materials are described only as collections. For example, just one catalog record describes all the maps of Los Angeles made by the U.S. Geological Survey from 1924 to 1963. It has been estimated that individual records in the library catalog represent only about 2 percent of the separate items in a typical academic library collection.60 Thus, library catalogs are far less comprehensive than most library users realize. However, online library catalogs are moving away from the narrower model of card catalogs. Many online catalogs are merging their catalog files with records from journal article databases. Mixing resources from different sources creates a more comprehensive database but introduces the Web searching problem of inconsistent description.

57

58 Accessed May 7, 2004.

59 See <http://melvyl.cdlib.org/>.

60 See David A. Tyckoson, “The 98% Solution: The Failure of the Catalog and the Role of Electronic Databases,” Technicalities 9(2):8-12, 1989.