2

Lessons Learned from Developing Metrics

Industry, academia, and federal agencies all have experience in measuring and monitoring research performance. This chapter describes lessons learned from these sectors as well as insight from retrospective analysis of the stratospheric ozone program of the 1970s and 1980s that might be useful to the Climate Change Science Program (CCSP).

INDUSTRY RESEARCH

Use of Metrics in Manufacturing

For more than 200 years,1 industry has employed metrics to monitor budget, safety, health, environmental impacts, material, energy, and product quality.2 A study group of 13 companies has been meeting since 1998 to identify metrics that could be useful tools for industry.3 The group found that the development of useful metrics in the manufacturing sector begins with careful formulation of the objectives for creating them. Important questions to be considered include the following:

- What is the purpose of the measurement effort?
- What are the “issues” to be measured?
- How are goals set for each issue?
- How is performance measured for that issue?
- How should the metric be compared to a performance standard?
- How will the metric be communicated to the intended audience?

1 DuPont, E.I., 1811, Workers’ rules, Accession 146, Hagley Museum, Manuscripts and Archives Division, Wilmington, Del.; Hounshell, D.A., and J.K. Smith, 1988, Science and Corporate Strategy, DuPont, p. 2; Kinnane, A., 2002, DuPont: From the Banks of the Brandywine to Miracles of Science, E.I. DuPont, Wilmington, Del., 268 pp.

2 Examples of financial metrics can be found in the annual report of almost any major chemical company. Quality management metrics appear in the International Organization for Standardization’s ISO 9000 (<http://www.iso.ch/iso/en/iso9000-14000/index.html>). Examples of safety, health, environmental, material consumption, and energy consumption metrics are given in National Academy of Engineering (NAE) and National Research Council, 1999, Industrial Environmental Performance Metrics: Challenges and Opportunities, National Academy Press, Washington, D.C., 252 pp.

Metrics that have proven useful in the manufacturing sector tend to have the following attributes:

- few in number, to avoid confusing the audience with excessive data;
- simple and thus easily understood by a broad audience;
- sufficiently accurate to be credible;
- based on an agreed-upon definition;
- relatively easy to develop, preferably using existing data;
- robust and thus requiring minimal exceptions and footnotes; and
- sufficiently durable to remain relatively constant over the years.

Metrics used in manufacturing tend to focus on input, output, or process (see definitions in Box 1.3), and they are commonly normalized to enable comparisons. In general, output metrics (e.g., pounds of product per pound of raw material purchased) have been the most successful because they are highly specific, relatively unambiguous, and directly related to a specific end point. Over time, and frequently after adjustment based on learning, the use of metrics in the manufacturing sector has been so effective as to give rise to the maxim “what gets measured, gets managed.”
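To illustrate why normalization makes comparison possible, the following Python sketch computes the output metric mentioned above (pounds of product per pound of raw material purchased) for two plants of very different size and checks each against a performance standard. The plant names, quantities, and the 0.80 standard are hypothetical and are not drawn from any company's data.

```python
from dataclasses import dataclass


@dataclass
class PlantPeriod:
    """One reporting period for one plant (hypothetical data)."""
    name: str
    product_lb: float        # pounds of salable product made
    raw_material_lb: float   # pounds of raw material purchased


def yield_ratio(p: PlantPeriod) -> float:
    """Output metric normalized to input: lb of product per lb of raw material."""
    if p.raw_material_lb <= 0:
        raise ValueError("raw material purchased must be positive")
    return p.product_lb / p.raw_material_lb


def compare_to_standard(plants: list[PlantPeriod], standard: float) -> dict[str, bool]:
    """Report whether each plant meets a (hypothetical) performance standard."""
    return {p.name: yield_ratio(p) >= standard for p in plants}


plants = [
    PlantPeriod("Plant A", product_lb=8_500_000, raw_material_lb=10_000_000),
    PlantPeriod("Plant B", product_lb=900_000, raw_material_lb=1_200_000),
]
for p in plants:
    print(f"{p.name}: {yield_ratio(p):.2f} lb product per lb raw material")
print(compare_to_standard(plants, standard=0.80))
```

Although Plant A makes roughly nine times as much product as Plant B, the normalized ratios (0.85 versus 0.75) can be compared directly and against the same standard.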

Extension to Research and Development

Success in the manufacturing sector encouraged efforts to develop quantifiable metrics for research and development (R&D) beginning in the late 1970s.4 However, problems immediately arose. The most successful manufacturing metrics measured a discrete item of output that could be produced in a short amount of time. These conditions are difficult to achieve in R&D. Research outputs are far less easily defined and quantified than manufacturing outputs, and the proof that a particular metric measured something useful, such as a profitable product or an efficient process, might take years.

Early metrics proposed for R&D included the following:

- Input metrics: total expenses or other resources employed, expenses or resources consumed per principal investigator (PI), and PI activities such as number of technical meetings attended.
- Output metrics: number of compounds or materials made or screened, and number of publications or patents per PI.
- Outcome metrics: PI professional recognition earned.

The list of possible metrics was long and failures were common. For example, “number of compounds made” could lead to an emphasis on “easy” chemistry instead of groundbreaking effort in a difficult, but potentially fruitful, area. Moreover, absent any professional judgment on the relevance and quality of items such as “technical meetings,” measurement of these items merely consumed time and money that might have been better spent elsewhere.

Based on these early lessons, a small number of process and output metrics emerged that proved useful to some businesses. These included

- elapsed time to produce and register a quality product, from discovery to commercialization; and
- creation of an idealized vision for processing operations such as no downtime, no in-process delays, or zero emissions. Although such goals, stated as process metrics, might not be reachable, they serve to drive research in a desirable direction.

Ultimately, most R&D metrics fell from favor because of the long period between measurement and analysis of the result of R&D and the need for expert judgment in evaluating the quality of the item being measured. They are being widely replaced by a “stage-gate” approach for managing R&D. In the stage-gate approach, the R&D process is divided into three or more stages, ranging from discovery through commercialization (see Table 2.1). The number of stages is usually specific to the business, with the number increasing as the complexity and length of the R&D process increase. For example, there will be more stages in the R&D process of a drug company than in that of a company developing polymers. Groups of metrics are identified within each stage, and a satisfactory response to the metrics must be achieved before the project is allowed to proceed to the next stage. Advancement to successive stages can easily be tracked and converted to process metrics reflecting the status or progress of a program (e.g., a yes or no answer to whether the program has been completed). The main difficulty with the stage-gate approach is in choosing the metric themes for each stage and assessing the quality of the results, both of which require professional judgment.

TABLE 2.1 Example of the Stage-Gate Steps and Metrics for R&D in a Traditional Advanced Materials Chemical Industry

| Metric Theme | Stage 1: Feasibility | Stage 2: Confirmation | Stage 3: Commercialization |
|---|---|---|---|
| Sustainable product | • Customer needs have been analyzed • Improved properties, identified through analysis of customer needs, such as increased strength and corrosion or stain resistance, have been demonstrated | • New discovery has led to an established patent position • Manufacturing or marketing strengths of the new discovery have been analyzed | • Customer alternatives to the use of the new product have been analyzed |
| Economics | • Product or process concept has been proven, even though economic practicality has not been established | • Materials cost, process yield, catalyst life, capital intensity, and competitors have been analyzed | • Sustained pilot operation has been achieved • Impurities and recycle streams have been analyzed |
| Customer acceptance | • Target customers have been identified | • Plan exists for partnerships and for access to market • Customer reaction to prototype has been satisfactory | • Partnerships and access to market have been established |
| Safety and environment | • Alternative materials have been considered • Radical processing concept has been considered • Safety in use has been analyzed | • Inherently safe and “green” concepts have been demonstrated • Toxicology tests have been completed | • Design exists for “fail-safe” operation and “zero” emissions |

3 The group meets under the sponsorship of the American Institute of Chemical Engineers’ (AIChE) Center for Waste Reduction Technology. See reports on AIChE collaborative projects, focus area on sustainable development at <http://www.aiche.org/cwrt/pdf/BaselineMetrics.pdf>.

4 Blaustein, M.A., 2003, Managing a breakthrough research portfolio for commercial success, Presentation to the American Chemical Society, March 25, 2003; Miller, J., and J. Hillenbrand, 2000, Meaningful R&D output metrics: An unmet need of technology and business leadership, Presentation at the Corporate Technology Council, E.I. DuPont, June 20, 2000.

A stage-gate process such as that illustrated in Table 2.1 is generally initiated by the scientists in the organization, following an R&D discovery or a promising analysis.5 Generally, after a year of effort by a single principal investigator, the program either transitions to a managed stage-gate R&D program or is terminated due to apparent infeasibility or poor fit with the business intentions of the company. Whether or not an R&D project advances to Stage 1 depends on demonstration of the following:

- technical feasibility,
- scientific uniqueness,
- availability of skills within the organization required to bring the research to fruition,
- ability to identify a market within the growth areas promoted by the company,
- ability to define realistic goals and objectives and to establish a clear focus and targets, and
- ability to attract sponsorship by the business unit of the organization.
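The gating logic described above can be sketched in Python. In this sketch each gate is a set of criteria that reviewers must judge satisfactory before a project may advance, and the yes/no record of which gates have been cleared serves as the process metric mentioned earlier. The criterion names are hypothetical shorthand for the entry conditions just listed and for a few cells of Table 2.1. Whether a criterion is "satisfied" is deliberately left as an input, because, as the text notes, that assessment requires professional judgment.

```python
from dataclasses import dataclass, field

# Hypothetical shorthand for the entry conditions listed above.
ENTRY_CRITERIA = {
    "technical feasibility",
    "scientific uniqueness",
    "skills available",
    "market in growth area",
    "realistic goals and targets",
    "business-unit sponsorship",
}

# Hypothetical gate criteria, loosely paraphrasing a few cells of Table 2.1.
STAGES = [
    ("Stage 1 Feasibility",
     {"customer needs analyzed", "concept proven", "target customers identified"}),
    ("Stage 2 Confirmation",
     {"patent position established", "costs and competitors analyzed",
      "toxicology tests completed"}),
    ("Stage 3 Commercialization",
     {"sustained pilot operation", "market access established",
      "fail-safe design exists"}),
]


@dataclass
class Project:
    name: str
    # Criteria that reviewers have judged satisfactory; the judgment itself
    # is a human (peer review) decision and is not modeled here.
    satisfied: set[str] = field(default_factory=set)


def gate_report(project: Project) -> dict[str, bool]:
    """Yes/no process metric: has the project cleared each gate?

    A gate counts as cleared only if all of its criteria are satisfied
    and every earlier gate has also been cleared.
    """
    cleared = ENTRY_CRITERIA <= project.satisfied
    report = {"Entry to Stage 1": cleared}
    for stage_name, criteria in STAGES:
        cleared = cleared and criteria <= project.satisfied
        report[stage_name] = cleared
    return report


def current_stage(project: Project) -> str:
    """Name of the stage the project is working in (or that it has finished all)."""
    report = gate_report(project)
    if not report["Entry to Stage 1"]:
        return "Discovery (not yet admitted to Stage 1)"
    for stage_name, _ in STAGES:
        if not report[stage_name]:
            return stage_name
    return "All gates cleared"


demo = Project("new polymer", ENTRY_CRITERIA | STAGES[0][1])
print(gate_report(demo))
print(current_stage(demo))  # Stage 1 gate cleared, so work is now in Stage 2
```

Requiring every earlier gate to be cleared mirrors the rule that a project may not proceed until it has responded satisfactorily to the metrics of its current stage.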

Tools for Strategic Analysis

Industry commonly uses metrics to guide strategic planning. Lessons learned from this experience include the following:

- Metrics can be applied to most ongoing operations. The greatest value of metrics will be achieved by selecting a few key issues and monitoring them over a long time.
- Data for measuring research progress are generally of poor quality initially. Standardization of data collection, quality assessment, and verification are necessary to produce broadly credible results.
- Most successful R&D programs measure progress against a clearly constructed business plan that includes a statement of the task, goals and milestones, budget, internal or external peer review plan, and communication plan.

Applicability to the CCSP

The industrial experience with metrics has much to offer the CCSP. For example, the attributes of manufacturing metrics (e.g., few metrics, easily understood) and the importance of expert judgment in assessing the relevance and quality of process and output metrics are likely to be widely applicable. In addition, a number of industry approaches (e.g., analysis of program resource distribution, use of R&D process metrics and peer review rankings, graphical summaries) could be used to guide strategic planning and improve R&D quality and progress. Finally, a stage-gate process might be used to help CCSP agencies plan how to move a program emphasis from the discovery phase that precedes Stage 1 (feasibility of using basic research results to improve decision making), to Stage 2 (developing and testing decision-making tools), to Stage 3 (decision making and communicating program results).

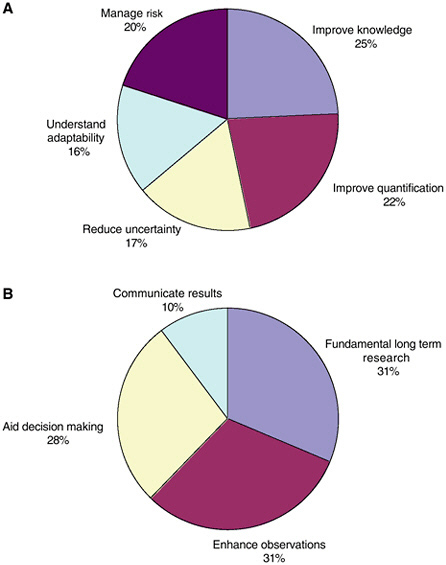

Following is a hypothetical example related to the CCSP. Suppose that R&D funding is $900 million and that it is divided among the CCSP goals and approaches according to Table 2.2 (shown graphically in Figure 2.1).

TABLE 2.2 Hypothetical Distribution of Funding Applied to CCSP Overarching Goals and Core Approaches

Funding (millions of dollars)

| Approaches ↓ / CCSP Goals → | Improve Knowledge | Improve Quantification | Reduce Uncertainty | Understand Adaptability | Manage Risk | Percentage |
|---|---|---|---|---|---|---|
| Fundamental research | 100 | 85 | 20 | 40 | 37 | 31 |
| Enhance observations | 72 | 63 | 73 | 24 | 45 | 31 |
| Aid decision making | 19 | 37 | 48 | 65 | 80 | 28 |
| Communicate results | 26 | 17 | 16 | 14 | 19 | 10 |
| Percentage | 25 | 22 | 17 | 16 | 20 | |

FIGURE 2.1 Distribution of effort (A) in the five CCSP overarching goals and (B) in the four CCSP core approaches, based on hypothetical data in Table 2.2.

From these data, program managers would decide if the distribution of effort is appropriate or if adjustments are needed. They may decide, for example, that too little of the effort is focused on communicating results.
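The shares that program managers would examine are simply row and column totals of Table 2.2 divided by the overall budget. The Python sketch below recomputes them from the hypothetical funding figures and flags any core approach whose share falls below a floor. The 15 percent floor is an arbitrary illustrative assumption, not a CCSP standard.

```python
# Hypothetical funding from Table 2.2, in millions of dollars.
GOALS = ["Improve knowledge", "Improve quantification", "Reduce uncertainty",
         "Understand adaptability", "Manage risk"]
FUNDING = {
    "Fundamental research": [100, 85, 20, 40, 37],
    "Enhance observations": [72, 63, 73, 24, 45],
    "Aid decision making":  [19, 37, 48, 65, 80],
    "Communicate results":  [26, 17, 16, 14, 19],
}
MIN_SHARE = 15.0  # hypothetical floor (percent) a program manager might set


def shares(funding):
    """Return the total plus percentage shares by approach (rows) and by goal (columns)."""
    total = sum(sum(row) for row in funding.values())
    by_approach = {a: 100 * sum(row) / total for a, row in funding.items()}
    by_goal = {g: 100 * sum(row[i] for row in funding.values()) / total
               for i, g in enumerate(GOALS)}
    return total, by_approach, by_goal


total, by_approach, by_goal = shares(FUNDING)
print(f"Total: ${total} million")
for name, pct in {**by_approach, **by_goal}.items():
    print(f"{name:24s} {pct:5.1f}%")
print("Below floor:", [a for a, pct in by_approach.items() if pct < MIN_SHARE])
```

With these figures, "Communicate results" (about 10 percent) is the only approach below the illustrative floor. The computed goal shares round to the percentages in Table 2.2 except for Improve Knowledge, which works out to about 24.1 percent of the $900 million rather than 25.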

Once program managers are satisfied, the process of evaluating the quality of research activities can begin. Again for the hypothetical example above, assume that the $63 million allocated to enhancing observations for the goal of improving quantification (Table 2.2) is divided among six research projects. Assume also that 11 R&D measures of the management and leadership process have been developed and scored by peer review as shown in Table 2.3. The peer review panel might evaluate all six projects and rate the quality of project management on, for example, a scale of 1 to 5. An illustration of that scoring system follows:

R&D Metric: Quality of the Internal or External Review Process for This Task

- 1 = Poor: no review plan in place; no reviews, even ad hoc
- 2 = Fair: no review plan in place; infrequent, ad hoc reviews; unreliable follow-up
- 3 = Average: review plan exists; irregularly followed; unreliable follow-up
- 4 = Good: plan exists; regularly followed; spotty follow-up
- 5 = Excellent: plan exists; regularly followed; excellent follow-up

TABLE 2.3 Hypothetical Example of 11 R&D Process Metrics Applied to Six Research Projects

| Metric | Project 1 | Project 2 | Project 3 | Project 4 | Project 5 | Project 6 | Average |
|---|---|---|---|---|---|---|---|
| Quality of the internal or external peer review process for this task | 4 | 5 | 3 | 2 | 2 | 4 | 3.3 |
| Statement of the task is sufficiently focused and specific to be evaluated by the peer review process | 4 | 5 | 3 | 3 | 3 | 3 | 3.5 |
| Quality of the selection and definition of long-term goals | 4 | 5 | 2 | 1 | 3 | 2 | 2.8 |
| Quality of the selection and definition of milestones | 3 | 3 | 2 | 1 | 1 | 2 | 2.0 |

From the information in Table 2.3, program managers could begin asking critical questions about the quality of the R&D effort, for example:

- Is research project 3 so weak based on the average score that it should be discontinued or at least supervised more closely?
- Why are the scores for progress in achieving milestones (fifth measure) uniformly low, and what can be done?
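The row and column averages behind these questions can be computed directly. The Python sketch below reproduces the Average column of Table 2.3 and adds per-project averages plus a hypothetical cutoff of 2.5 for flagging projects that might warrant closer supervision. Only four of the 11 metrics appear in the table as reproduced here (the fifth measure, for instance, is not shown), so the per-project figures are partial and need not match the full 11-metric picture the questions refer to. The abbreviated metric names and the cutoff are illustrative assumptions.

```python
# Scores from the four metrics reproduced in Table 2.3
# (1 = Poor ... 5 = Excellent), one list entry per project.
SCORES = {
    "Peer review process": [4, 5, 3, 2, 2, 4],
    "Task statement focused and reviewable": [4, 5, 3, 3, 3, 3],
    "Long-term goals": [4, 5, 2, 1, 3, 2],
    "Milestones": [3, 3, 2, 1, 1, 2],
}
N_PROJECTS = 6
WEAK_THRESHOLD = 2.5  # hypothetical cutoff for closer supervision


def average(values):
    values = list(values)
    return sum(values) / len(values)


# Row averages: how the six projects score on each metric.
metric_avg = {metric: average(s) for metric, s in SCORES.items()}
# Column averages: how each project scores across the metrics shown.
project_avg = {p + 1: average(s[p] for s in SCORES.values())
               for p in range(N_PROJECTS)}

weakest_metric = min(metric_avg, key=metric_avg.get)
weak = [p for p, avg in project_avg.items() if avg < WEAK_THRESHOLD]

for metric, avg in metric_avg.items():
    print(f"{metric:40s} {avg:.1f}")
print("Lowest-scoring metric:", weakest_metric)
print("Projects below threshold:", weak)
```

Averages are a starting point for questions, not a verdict; the peer review panel's judgment is still needed to decide what a low score means for a given project.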

UNIVERSITY RESEARCH

Metrics in academia are used to assess the performance of faculty, departments, and the university itself, as well as to manage resources. Metrics to evaluate the success of a university generally focus on outcomes and impacts, such as fraction of degrees completed, student satisfaction, success of the graduates, and national reputation.6

Faculty appointment and promotion systems are designed to evaluate a number of activities, including research, teaching, and service. Teaching and service metrics generally focus on outputs (e.g., number of undergraduates taught, courses developed, or committees served on), although judgment is required to assess the quality of teaching and to weigh the prestige of teaching awards and committee memberships. Peer review is the foundation of research assessment (Box 2.1), and it usually takes the form of internal committees that both review the person’s work and take account of outside letters of evaluation from experts in fields relevant to the particular candidate. These evaluations require a good deal of personal judgment—about qualities of mind, the influence of particular ideas or writings, and the person’s promise for future contributions—but usually these subjective judgments are bolstered by metrics of research performance. Examples of research metrics include the following:

- number of articles or books that have been accepted in the published literature;
- the subset of articles that have appeared in the “top” journals in a field (i.e., those viewed as having the toughest review);
- number of other publications (including book chapters, conference proceedings, and research reports) that may not have been subjected to peer review;
- number of citations;
- number of honors and awards; and
- amount of extramural funding.7

6 A number of reports rank universities by reputational measures such as the quality of research programs (e.g., National Research Council, 1995, Research-Doctorate Programs in the United States: Continuity and Change, National Academy Press, Washington, D.C., 768 pp.) or other characteristics, such as selection, retention, and graduation of students; faculty resources; and alumni giving (e.g., U.S. News and World Report, 2005, Best Colleges Index, <http://www.usnews.com/usnews/edu/college/rankings/rankindex_brief.php>). A major criticism of such national rankings is that they distract universities from trying to improve scholarship. See National Research Council, 2003, Assessing Research-Doctorate Programs: A Methodology Study, National Academies Press, Washington, D.C., 164 pp.

Box 2.1 Peer review is generally defined as a critical evaluation by independent experts of “the technical merit of research proposals, projects, and programs.”a A mainstay of the scientific process, peer review provides an “in-depth critique of assumptions, calculations, extrapolations, alternate interpretations, methodology and acceptance of criteria employed and conclusions drawn in the original work.”b While the focus on scientific expertise is paramount, commentators also note that the “peer review process is invariably judgmental and thus inevitably involves interplay between expert and personal judgments.”c Definitions of peer review generally focus on the independence and the appropriate expertise of the peer reviewer. An adequate peer review satisfies three criteria: (1) it includes multiple assessments, (2) it is conducted by scientists who have expertise in the research in question, and (3) the scientists conducting the review have no direct connection to the research or its sponsors.c The second criterion can be difficult to fulfill in evaluations of interdisciplinary work. Even if a peer review group with all of the relevant disciplines is assembled, its members may have difficulty seeing beyond the boundaries of their own disciplines to properly evaluate the integrated product. Ideally, each member of the evaluation group would invest significant time developing at least a basic understanding of the other relevant fields. However, this is a luxury that peer review committees rarely, if ever, have. The ideal of unconflicted peer review (criterion 3) is also usually not achieved, simply because there is a limited pool of experts and those most knowledgeable are also likely to be connected to the research and its sponsors. In such cases the objective becomes one of minimizing conflict of interest and bias.

By normalizing these metrics to the number of faculty members, it is possible to measure departmental performance and productivity. These metrics are used to make resource decisions, such as how much space to allocate to different departments, and to compare programs at different institutions.
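The normalization described above is simple arithmetic. The Python sketch below divides each departmental total by faculty headcount so that departments of different size can be compared on the same footing. The department names and all figures are invented for illustration; such ratios measure volume per person and say nothing by themselves about the quality or eventual impact of the work.

```python
# Hypothetical departmental totals for one year (invented for illustration).
departments = {
    "Atmospheric Science": {"faculty": 20, "articles": 110,
                            "citations": 2400, "funding_usd": 9_000_000},
    "Geography": {"faculty": 8, "articles": 36,
                  "citations": 500, "funding_usd": 1_600_000},
}


def per_faculty(totals: dict) -> dict:
    """Divide every count by faculty headcount so departments of different size compare."""
    n = totals["faculty"]
    if n <= 0:
        raise ValueError("faculty count must be positive")
    return {k: v / n for k, v in totals.items() if k != "faculty"}


for name, totals in departments.items():
    r = per_faculty(totals)
    print(f"{name}: {r['articles']:.1f} articles, {r['citations']:.0f} citations, "
          f"${r['funding_usd']:,.0f} extramural funding per faculty member")
```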

Such metrics are useful but have significant shortcomings. For example, judgments about the quality and impact of publications are difficult because of the long lags in the publication process and even longer lags in scientific appreciation of the work. Moreover, results are influenced by the membership of the peer evaluation group and the process by which members are selected. This is especially true of assessments of interdisciplinary work (see Box 2.1). Nevertheless, peer review committees, supported by output and impact metrics, have proven to be the most successful means of evaluating research progress in most academic disciplines.8

Applicability to the CCSP

Peer review, supplemented by output and impact metrics, has generally been successful in evaluating academic research progress. Its most effective use is in assessing research plans, the potential for proposed research to succeed, and the quality of research results. Expert opinion is also essential for assessing long-term research outcomes or impact or for determining when changes in research direction are required. This approach should apply equally well to CCSP basic science programs. Many, perhaps most, individual studies will require only the customary peer review of each discipline. However, expert groups with both the right expertise and sufficient time to learn about other relevant fields will be required to make informed judgments about cross-cutting problems such as climate change. Assessments of the human and environmental consequences of climate change, for example, will require experts in risk assessment, as well as in geophysics, biology, chemistry, socioeconomics, and statistics.

FEDERAL AGENCY RESEARCH

Measuring Government Performance

Some federal agencies have been collecting data to characterize and evaluate their scientific activities for decades.9 However, it was only with the advent of the Government Performance and Results Act (GPRA) of 1993 and related Office of Management and Budget (OMB) policies that a concerted effort was made to measure progress throughout the federal government (Appendix A).

Federal agencies evaluate research programs to improve management and demonstrate to bureaucratic superiors and Congress that their programs have produced benefits that justify their cost. The preferred form of evaluation is peer review (Boxes 2.1 and 2.2), and OMB has stipulated that peer review of research and development programs be of high quality, of sufficient scope, unbiased and independent, and conducted on a regular basis.10 Performance measures include both qualitative (e.g., productivity, research quality, relevance of research to the agency’s mission, leadership)11 and quantitative measures.12 However, quantitative measures have proven to be difficult to apply on an annual basis for the following reasons:

- The discovery and innovation process is complex and often involves many factors that are not related to research (e.g., marketing, intellectual property rights).
- It may take many years for a research project to achieve results.
- Outcomes are not always directly traceable to specific inputs or may result from a combination of inputs. As a result, research results are not usually predictable.
- Negative findings or research results that contribute to objectives in other parts of the government are not valued as outcomes in the GPRA sense.
- Information needed for an assessment may be unobtainable, inconsistent, or ambiguous.13

ment of Defense since the 1960s and later by the National Science Foundation and the National Institutes of Health. See Cetron, M.J., J. Martino, and L. Roepcke, 1967, The selection of R&D program content: Survey of quantitative methods, IEEE Transactions on Engineering Management, EM-14, 4–13; Office of Technology Assessment, 1986, Research Funding as an Investment: Can We Measure the Returns? OTA-TMSET, U.S. Congress, Washington, D.C., 72 pp.; Kostoff, R., 1993, Evaluating federal R&D in the United States, in Evaluating R&D Impacts: Methods and Practice, B. Bozeman and J. Melkers, eds., Kluwer Academic Publishers, Boston, Mass., pp. 163–178; National Science Foundation, 2004, Science and Engineering Indicators 2004, NSB 04-07, <http://www.nsf.gov/sbe/srs/seind04/start.htm>.

10 Office of Management and Budget, 2005, Guidance for Completing the Program Assessment Rating Tool (PART), pp. 28–30, <http://www.whitehouse.gov/omb/part/fy2005/2005_guidance.doc>.

11 Army Research Laboratory, 1996, Applying the Principles of the Government Performance and Results Act to the Research and Development Function: A Case Study Submitted to the Office of Management and Budget, 27 pp., <http://govinfo.library.unt.edu/npr/library/studies/casearla.pdf>; National Research Council, 1999, Evaluating Federal Research Programs: Research and the Government Performance and Results Act, National Academy Press, Washington, D.C., 80 pp.; Memorandum on FY 2004 interagency research and development priorities, from John H. Marburger III, director of the Office of Science and Technology Policy, and Mitchell Daniels, director of the Office of Management and Budget, on May 30, 2002, <http://www.ostp.gov/html/ombguidmemo.pdf>.

12 Roessner, D., 1993, Use of quantitative methods to support research decisions in business and government, in Evaluating R&D Impacts: Methods and Practice, B. Bozeman and J. Melkers, eds., Kluwer Academic Publishers, Boston, Mass., pp. 179–205.

Box 2.2 Federal agencies employ several kinds of review. In addition to peer review,a agencies use the following methods to gain information and feedback:

The kind(s) of review used by an agency depends on circumstances. For example, defense agencies (and industry) rely heavily on internal reviews because of the highly specialized and/or confidential nature of the research. Regulatory agencies use notice-and-comment and stakeholder processes because public input from a wide variety of sources is desirable and/or mandated by law. In some cases, the type of review chosen depends on timing or funding constraints. Tight deadlines (court-ordered, administrative, political) can deter peer review planning and shorten or abort scheduled peer reviews. Also, insufficient funding may tempt agencies to substitute less costly review processes for peer review.

Federal agencies are responsible for developing performance measures both for their annual performance plans (a requirement of GPRA) and for OMB’s Program Assessment Rating Tool (PART) (see Appendix A). GPRA performance measures are relatively numerous and broad in scope (e.g., they may include process, output, and outcome measures). Approaches to GPRA measures vary, but most federal agencies are using expert review to judge progress in research and education, and are developing quantitative measures to evaluate management and process (e.g., increase award size or duration).14 An example of different approaches to GPRA performance measures used by the National Science Foundation (NSF) and the National Oceanic and Atmospheric Administration (NOAA) is given in Table 2.4.

The PART measures provide OMB with a uniform approach to assessing and rating programs across the federal government. They are consistent with GPRA measures, but are few in number, reflect program priorities, and focus on outcomes.15 If outcome measures cannot be devised, OMB allows research and development agencies to substitute output or process measures (e.g., Questions 2.3, 3.1, and 3.2 in Box A.2, Appendix A). The fiscal year (FY) 2005 PART measures associated with the climate change programs of participating agencies are given in Table 2.5.

The use of performance measures in the government has had mixed success. A 2004 General Accounting Office report found that R&D man-

13 Army Research Laboratory, 1996, Applying the Principles of the Government Performance and Results Act to the Research and Development Function: A Case Study Submitted to the Office of Management and Budget, 27 pp., <http://govinfo.library.unt.edu/npr/library/studies/casearla.pdf>; National Science and Technology Council, 1996, Assessing Fundamental Science, <http://www.nsf.gov/sbe/srs/ostp/assess/start.htm>; General Accounting Office, 1997, Measuring Performance: Strengths and Limitations of Research Indicators, GAO/RCED-97-91, Washington, D.C., 34 pp.; National Research Council, 1999, Evaluating Federal Research Programs: Research and the Government Performance and Results Act, National Academy Press, Washington, D.C., 80 pp.; National Research Council, 2001, Implementing the Government Performance and Results Act for Research: A Status Report, National Academy Press, Washington, D.C., 190 pp.

14 Presentations to the committee by S. Cozzens, Georgia Institute of Technology, C. Robinson, National Science Foundation, and C. Oros, U.S. Department of Agriculture’s Cooperative State Research, Education, and Extension Service, on March 4, 2004.

15 See <http://www.whitehouse.gov/omb/part/fy2005/2005_guidance.doc>.

TABLE 2.4 Contrasting Types of Government Performance and Results Act Measures at NSF and NOAA

| Strategic Goal | Annual Performance Goal | Performance Measure |
|---|---|---|
| National Science Foundationa | | |
| People: A diverse, competitive, and globally engaged U.S. work force of scientists, engineers, technologists, and well-prepared citizens | NSF will demonstrate significant achievement for the majority of the following performance indicators related to the people outcome goal | • Promote greater diversity in the science and engineering (S&E) work force through increased participation of underrepresented groups in NSF activities • Support programs that attract and prepare U.S. students to be highly qualified members of the global S&E work force, including providing opportunities for international study, collaborations, and partnerships • Promote public understanding and appreciation of science, technology, engineering, and mathematics and build bridges between formal and informal science education • Support innovative research on learning, teaching, and education that provides a scientific basis for improving science, technology, engineering, and mathematics education at all levels • Develop the nation’s capability to provide K-12 and higher-education faculty with opportunities for continuous learning and career development in science, technology, engineering, and mathematics |
| Ideas: Discovery across the frontier of science and engineering, connected to learning, innovation, and service to society | NSF will demonstrate significant achievement for the majority of the following performance indicators related to the ideas outcome goal | • Enable people who work at the forefront of discovery to make important and significant contributions to science and engineering knowledge • Encourage collaborative research and education efforts across organizations, disciplines, sectors, and international boundaries • Foster connections between discoveries and their use in the service of society • Increase opportunities for individuals from underrepresented groups and institutions to conduct high-quality, competitive research and education activities • Provide leadership in identifying and developing new research and education opportunities within and across science and engineering fields • Accelerate progress in selected science and engineering areas of high priority by creating new integrative and cross-disciplinary knowledge and tools and by providing people with new skills and perspectives |
| Tools: Broadly accessible, state-of-the-art S&E facilities, tools, and other infrastructure that enable discovery, learning, and innovation | NSF will demonstrate significant achievement for the majority of the following performance indicators related to the tools outcome goal | • Expand opportunities for U.S. researchers, educators, and students at all levels to access state-of-the-art S&E facilities, tools, databases, and other infrastructure • Provide leadership in the development, construction, and operation of major next-generation facilities and other large research and education platforms • Develop and deploy an advanced cyberinfrastructure to enable all fields of science and engineering to fully utilize state-of-the-art computation • Provide for the collection and analysis of the scientific and technical resources of the United States and other nations to inform policy formulation and resource allocation • Support research that advances instrument technology and leads to the development of next-generation research and education tools |
| National Oceanic and Atmospheric Administrationb | | |
| Protect, restore, and manage the use of coastal and ocean resources through ecosystem-based management | Improve protection, restoration, and management of coastal and ocean resources through ecosystem-based management | • Number of overfished major stocks of fish reduced to 42 • Number of major stocks with an “unknown” stock status reduced to 77 • Percentage of plans to rebuild overfished major stocks to sustainable levels increased to 98% • Number of threatened species with lowered risk of extinction increased to 6 • Number of commercial fisheries that have insignificant marine mammal mortality maintained at 8 • Number of endangered species with lowered risk of extinction increased to 7 • Number of habitat acres restored (annual/cumulative) increased to 4,500/19,280 |
| Understand climate variability and change to enhance society’s ability to plan and respond | Increase understanding of climate variability and change | • U.S. temperature forecasts (cumulative skill score computed over the regions where predictions are made) improved to 22 • New climate observations introduced increased to 355 • Reduce uncertainty of atmospheric estimates of U.S. carbon source/sink to ±0.5 gigatons carbon per year • Improve measurements of North Atlantic and North Pacific Ocean basin CO2 fluxes to within ±0.1 petagram carbon per year • Capture more than 90% of true contiguous U.S. temperature trend and more than 70% of true contiguous U.S. precipitation |
| Serve society’s needs for weather and water information | Improve accuracy and timeliness of weather and water information | • Lead time (increased to 13 minutes), accuracy (increased to 73%), and false alarm rate (decreased to 69%) for severe weather warnings for tornadoes • Increased lead time (53 minutes) and accuracy (89%) for severe weather warnings for flash floods • Hurricane forecast track error (48 hour) reduced to 128 • Accuracy (threat score) of day 1 precipitation forecasts increased to 27% • Increased lead time (15 hours) and accuracy (90%) for winter storm warnings • Cumulative percentage of U.S. shoreline and inland areas that have improved ability to reduce coastal hazard impacts increased to 28% |

|

aNational Science Foundation FY 2005 Budget Request to Congress, <http://www.nsf.gov/bfa/bud/fy2005/pdf/fy2005.pdf>. bNational Oceanic and Atmospheric Administration FY 2005 Annual Performance Plan, <http://www.osec.doc.gov/bmi/budget/05APP/NOAA05APP.pdf>. |

||

TABLE 2.5 Climate Science-Related Performance Measures in OMB’s FY 2005 PART

Agency | Performance Measureᵃ

DOE
• Progress in delivering improved climate data and models for policy makers to determine safe levels of greenhouse gases and, by 2013, toward substantially reducing differences between observed temperature and model simulations at subcontinental scales using several decades of recent data. An independent expert panel will conduct a review and rate progress (excellent, adequate, poor) on a triennial basis

EPA
• Million metric tons of carbon equivalent of greenhouse gas emissions reduced in the building (or industry or transportation) sector
• Tons of greenhouse gas emissions prevented per societal dollar in the building (or industry or transportation) sector
• Elimination of U.S. consumption of Class II ozone-depleting substances, measured in tons per year of ozone-depleting potential
• Reductions in melanoma and nonmelanoma skin cancers, measured by millions of skin cancer cases avoided
• Percentage reduction in equivalent effective stratospheric chlorine loading rates, measured as percent change in parts per trillion of chlorine per year
• Cost (industry and EPA) per ozone depletion-potential-ton phase-out targets

NASA
• As validated by external review, and quantitatively where appropriate, demonstrate the ability of NASA developed data sets, technologies, and models to enhance understanding of the Earth system, leading to improved predictive capability in each of the six science focus area roadmaps
• Continue to develop and deploy advanced observing capabilities and acquire new observations to help resolve key [Earth system] science questions; progress and prioritization validated periodically by external review
• Progress in understanding solar variability’s impact on space climate or global change in Earth’s atmosphere
• Progress in developing the capability to predict solar activity and the evolution of solar disturbances as they propagate in the heliosphere and affect the Earth

NOAA
• U.S. temperature forecast skill
• Determine actual long-term changes in temperature (or precipitation) throughout the contiguous United States
• Reduce error in global measurement of sea surface temperature
• Assess and model carbon sources and sinks globally
• Reduce uncertainty in magnitude of North American carbon uptake
• Reduce uncertainty in model simulations of the influence of aerosols on climate
• New climate observations introduced
• Improve society’s ability to plan and respond to climate variability and change using NOAA climate products and information (number of peer-reviewed risk and impact assessments or evaluations published and communicated to decision makers)

USGS
• Percentage of nation with land-cover data to meet land-use planning and monitoring requirements (2001 nat’l data set—66 mapping units across the country)
• Percentage of nation with ecoregion assessments to meet land-use planning and monitoring requirements (number of completed ecoregion assessments divided by 84 ecoregions)
• Percentage of the nation’s 65 principal aquifers with monitoring wells that are used to measure responses of water levels to drought and climatic variations

NOTE: DOE = Department of Energy; EPA = Environmental Protection Agency; NASA = National Aeronautics and Space Administration; USGS = U.S. Geological Survey.
a All are long-term measures (several years or more in the future) published with the FY 2006 budget, see <http://www.whitehouse.gov/omb/budget/fy2006/part.html>.

agers in particular continue to have difficulty establishing meaningful outcome measures, collecting timely and useful performance information, and distinguishing between results produced by the government and results caused by external factors or players such as grant recipients.16 The report also found that issues within the purview of many agencies (e.g., the environment) are not being addressed in the GPRA context. Agency strategic plans generally contain few details on how agencies are cooperating to address common challenges and achieve common objectives. An OMB presentation to the committee acknowledged the difficulty of taking a cross-cutting view of programs such as the CCSP and identified areas in which performance measures would be especially useful.17 These include reducing uncertainty and improving predictability; assessing trade-offs between different program elements, such as making new measurements and analyzing existing data; and demonstrating that decision support tools are helping decision makers make better choices. These issues are discussed in the following chapters.

Applicability to the CCSP

It is difficult to extrapolate performance measures from a focused agency program to the CCSP. Some agency goals overlap with CCSP goals (e.g., NOAA and CCSP climate variability goals), but an agency’s performance measures emphasize its mission and priorities. Moreover, annual GPRA measures are not always suitable for the long time frame required for climate change research. The PART measures allow a long-term focus, but they concentrate on limited parts of the program. The climate change PART measures (Table 2.5), for example, miss a number of CCSP priority areas (e.g., global water cycle, ecosystem function, human contributions and responses, decision support) and other important aspects of the program (e.g., strategic planning, resource allocation). Finally, agency performance measures are not designed to take account of contributions from other agencies. As a result, the aggregate of agency measures does not address the full scope of the CCSP.18

Nevertheless, approaches that agencies have taken to develop performance measures may be useful to the CCSP. Performance measures developed for climate change programs in the agencies provide a starting point for developing CCSP-wide metrics, and OMB guidelines and the Washington Research Evaluation Network (WREN)19 provide tips and examples for developing metrics that are relevant to the program, promote program quality, and evaluate performance effectively (see Box A.1, Appendix A). Finally, all federal agencies with science programs rely on peer and/or internal review to evaluate research performance. Such evaluation will be especially challenging for the CCSP because of (1) a limited pool of fully qualified reviewers for multidisciplinary issues; (2) conflicts of interest, especially for experts funded by participating agencies; and (3) the high cost of conducting peer review in an era of shrinking federal budgets.

EVALUATING THE OUTCOME OF RESEARCH

Research outcomes and impacts can often be assessed only decades after the research is completed. A number of studies have attempted to trace research to outcomes, including the development of weapons systems and technological innovations, and the advancement of medicine.20 More recently, retrospective review has become an important tool for determining whether research investments were well directed, efficient, and productive (i.e., through the R&D investment criteria; see Appendix A), thus instilling confidence in future investments. Below is a review of the stratospheric ozone program of the 1970s and 1980s, which offers an opportunity to determine what factors made this multiagency program successful.

Lessons Learned from Stratospheric Ozone Depletion Research

The existence of ozone at high altitude and its role in absorbing incoming ultraviolet (UV) light and heating the stratosphere were deduced in the late nineteenth century. By the early 1930s, the oxygen-based chemistry of ozone production and destruction had been described.21 However, the amount of ozone measured by instruments carried on high-altitude rockets in the 1960s and 1970s was less than expected from reactions involving oxygen chemistry alone. Consequently, the search began for other reactive species, including free radicals, that could reduce predicted concentrations of stratospheric ozone. Among the candidate radicals considered were the NOx group (nitric oxide and nitrogen dioxide), which is produced by stratospheric decomposition of nitrous oxide,22 and the ClOx group (chlorine atoms and ClO), which has natural sources in volcanoes and ocean phytoplankton.23 Radicals of both groups are also produced in rocket and aircraft exhaust, which led to public concern over the possibility that space shuttle or supersonic aircraft flights in the stratosphere could deplete stratospheric ozone.
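The oxygen-only chemistry mentioned above is usually summarized as the four reactions below, commonly attributed to Sydney Chapman (1930). This summary is added here for orientation and is not drawn from the report's own sources. M denotes any third molecule (typically N2 or O2) that carries away excess energy, and hν denotes an absorbed UV photon. Because measured ozone fell short of what this scheme alone predicted, some additional loss process had to be operating.

```latex
\begin{aligned}
\mathrm{O_2} + h\nu &\rightarrow \mathrm{O} + \mathrm{O} && \text{(production of atomic oxygen)}\\
\mathrm{O} + \mathrm{O_2} + \mathrm{M} &\rightarrow \mathrm{O_3} + \mathrm{M} && \text{(ozone formation)}\\
\mathrm{O_3} + h\nu &\rightarrow \mathrm{O_2} + \mathrm{O} && \text{(UV absorption; heats the stratosphere)}\\
\mathrm{O} + \mathrm{O_3} &\rightarrow 2\,\mathrm{O_2} && \text{(ozone destruction)}
\end{aligned}
```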

Independently, F. Sherwood Rowland and Mario Molina were investigating the fate of chlorofluorocarbon (CFC) compounds released to the atmosphere. The very unreactivity of CFCs that made them ideal refrigerants and solvents ensured that they would persist and accumulate in the atmosphere.24 Research showed that destruction of CFCs ultimately takes place only after they are transported to high altitudes in the stratosphere, where high-energy ultraviolet photons dissociate CFC molecules and produce free chlorine radicals. On becoming aware of other work showing that chlorine atoms can catalyze the conversion of stratospheric ozone to O2,25 Rowland and Molina concluded that an increase in the chlorine content of the stratosphere would reduce the amount of stratospheric ozone, which in turn would increase the penetration of UV radiation to the Earth’s surface.26
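The catalytic action of chlorine described above is conventionally written as the cycle below. It is a standard textbook summary added for illustration, not a reproduction of Rowland and Molina's own equations. CFCl3 (CFC-11) is used here as a representative CFC. The chlorine atom consumed in the second step is regenerated in the third, so it does not appear in the net reaction. This is why a small amount of chlorine can remove a much larger amount of ozone. The sketch omits reservoir species, such as chlorine nitrate, that temporarily tie up chlorine and that later models had to include.

```latex
\begin{aligned}
\mathrm{CFCl_3} + h\nu &\rightarrow \mathrm{CFCl_2} + \mathrm{Cl} && \text{(UV photolysis of a CFC releases Cl)}\\
\mathrm{Cl} + \mathrm{O_3} &\rightarrow \mathrm{ClO} + \mathrm{O_2} && \text{(Cl consumed)}\\
\mathrm{ClO} + \mathrm{O} &\rightarrow \mathrm{Cl} + \mathrm{O_2} && \text{(Cl regenerated)}\\
\text{Net: } \mathrm{O_3} + \mathrm{O} &\rightarrow 2\,\mathrm{O_2}
\end{aligned}
```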

Coincident with the publication of their conclusions in Nature on June 28, 1974,27 the two scientists held a press conference, although widespread press attention occurred only when they presented their results at an American Chemical Society meeting later that year. Public interest in the problem, including calls to ban the use of CFCs as propellants in aerosol spray cans, followed. The U.S. government’s initial response was to create the interagency Federal Task Force on Inadvertent Modification of the Stratosphere and to commission a National Research Council (NRC) study of the problem. Reports of these groups supported the overall scientific conclusions.28 The decision to ban CFCs in spray cans in the United States was announced in 1976 and took effect in 1978.

Subsequent scientific investigation improved understanding of the chemistry of chlorine in the stratosphere, including the formation of reservoirs such as chlorine nitrate that were not considered in earlier calculations.29 Models used to predict future changes in stratospheric ozone, which included the HOx, NOx, and ClOx chemistries, began to include more complex descriptions of the circulation of air in the stratosphere, interactions with a greater number of molecular species, and improved values (including temperature dependence) of rate constants. As a result, the magnitude of the overall effects of CFCs on stratospheric ozone predicted by the models changed. In fact, in an NRC report issued in 1984, just prior to the discovery of the Antarctic ozone hole, even the sign of ozone change was in doubt.30

The Antarctic ozone hole, discovered serendipitously during routine monitoring of ozone levels by the British Antarctic Survey,31 was not predicted by any model. However, work on stratospheric chemistry during the preceding decade enabled rapid deployment of tools and instruments for elucidating the cause of rapid springtime Antarctic ozone loss. Within two years the causes of ozone depletion in the Antarctic polar vortex and the impact of similar chemistry in the northern high latitudes had been determined.32 International regulation, including the Vienna Convention (1985), the Montreal Protocol (1987), and subsequent amendments (London 1990, Copenhagen 1992, Montreal 1997, and Beijing 1999), accompanied these discoveries. Today, government regulation of ozone-depleting substances is viewed as a policy success, and concentrations of CFCs have leveled off or begun to decline, although it will be many decades before the Antarctic ozone hole is expected to disappear.33

Applicability to the CCSP

A number of lessons can be drawn from the ozone example above:

1. The unpredictable nature of science. Since World War II, the U.S. government has supported a wide range of science activities because it is not possible to predict what research will turn out to be important.34 Rowland and Molina’s inquiry into the fate of a man-made chlorofluoromethane was outside the scientific mainstream, but led to a key breakthrough in the emerging field of stratospheric chemistry. (No one would have thought that the use of underarm deodorant in spray cans could influence anything at a global scale, and it is doubtful a research proposal stating so would have been funded at the time.) The Antarctic ozone hole was unpredictable in the early 1980s because the appropriate two-dimensional models with stratospheric chemistry parameters were not yet developed and key reactions (even key compounds) were not yet known. The application of these models and research had to await the independent observation of the ozone hole.

31 Farman, J.C., B.G. Gardiner, and J.D. Shanklin, 1985, Large losses of total ozone in Antarctica reveal seasonal ClOx/NOx interaction, Nature, 315, 207–210.

32 World Meteorological Organization, 1988, Report of the International Ozone Trends Panel: 1988, World Meteorological Organization, Report 18, Geneva, 2 vols.

33 World Meteorological Organization, 2002, Scientific Assessment of Ozone Depletion: 2002, WMO Report 47, Geneva, 498 pp.

34 Bush, V., 1945, Science, the Endless Frontier: A Report to the President, U.S. Government Printing Office, <http://www.nsf.gov/od/lpa/nsf50/vbush1945.htm>. The report led to the creation of the National Science Foundation to support research in medicine, physical and natural science, and military matters.

2. The role of serendipity. A dramatic loss of ozone in the lower Antarctic stratosphere was first noticed by a research group from the British Antarctic Survey that was monitoring the atmosphere using a ground-based network of instruments.35 The same decline was famously missed by satellite observations at first because “anomalously low” values for total column ozone were flagged as potentially unreliable, and the satellite team’s foremost concern at the time was its ability to accurately measure column ozone with the instrument. Subsequent reanalysis of the satellite data corroborated the existence of the Antarctic ozone hole.

3. The role of leadership. Aside from the initial press release by Rowland and Molina in 1974, Rowland’s efforts to publicize the implications of their results were assisted by actions initiated by others, for example, the publicity department of the American Chemical Society and the politicians who called for further investigation. The resulting series of newspaper articles and interviews helped speed political outcomes, including the regulated reduction of CFCs. The rapidity of scientific progress on the causes of the Antarctic ozone hole is attributed by many involved to the leadership provided by Robert Watson, a National Aeronautics and Space Administration program manager who had both a thorough knowledge of the research he was supporting and the political awareness to release results at the most effective times.

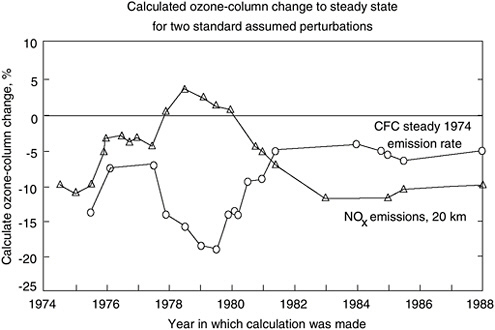

4. “Reduction in uncertainty.” This would have been a poor metric for evaluating scientific progress in the early stages of ozone research. Between 1975 and 1984, improved understanding and modeling of how mixtures of gases behave in the stratosphere actually increased uncertainty about the magnitude and even the sign of predicted trends in stratospheric ozone (see Figure 2.2).

5. The role of assessments. “State of the science” assessments can summarize complex problems in a form that policy makers can use.36 However, their value in guiding future research is less clear. For example, despite the recommendations of committees convened in the 1970s and 1980s, scientific progress was not coordinated. Instead, progress was made by scientists from different fields working on the problem independently and (importantly) communicating their results broadly.

FIGURE 2.2 Predictions of ozone column depletions from the same assumed chlorine and nitrogen scenarios as a function of the year for which the model was current. New discoveries in chemistry and the incorporation of better values for rate constants led to substantial fluctuations in the predictions in the late 1970s and early 1980s. SOURCE: Donald Wuebbles, University of Illinois; used with permission.

6. Parallels with the problem of climate change are limited. The ozone problem, although complex, involves transport and reactions in the atmosphere of a suite of compounds resulting largely from human activities. Climate change, in contrast, involves a number of atmospheric trace gases, aerosols, and clouds, each of which has important cycles that are independent of human activity. Understanding the ozone hole required advances in understanding the physics of atmospheric circulation and heterogeneous chemical processes, the development of methods to measure and monitor chemical species in the stratosphere, and the modeling of feedback mechanisms. Similar progress in understanding basic physical and chemical properties of the Earth system is required before credible climate change predictions can be made. However, the scope of needed advances is vast because most greenhouse gases have important sources and sinks in the biosphere and hydrosphere, and the controls on these fluxes feed back to atmospheric composition and climate.

Finally, the Montreal Protocol and subsequent policies involve a relatively small suite of compounds. In contrast, responses to climate change could involve regulating substances important to every sector of the economy. Reductions in one greenhouse gas may be offset by increases in others. For example, increased storage of carbon in fertilized agricultural fields may be offset by increased release of nitrous oxide.

CONCLUSIONS

Although industry, academia, and federal agencies have not had to develop metrics for programs as complex as global change, their experience can provide useful guidance to the CCSP. For example, the academic experience illustrates the importance of expert judgment and peer review, which are also applicable to basic research in industry and government. The government experience (including the ozone example) shows the importance of leadership and the pitfalls of relying on a single metric such as reduction in uncertainty. Finally, the attributes of useful metrics and a methodology for creating them can be gleaned from the industry experience.

However, the CCSP differs from industry in two important ways that are relevant to the creation of metrics. First, in industry a manager or small management team identifies the metrics. In contrast, the CCSP program office will have to arbitrate among 13 independent agencies to choose the few important measures for guiding the program. Each of these agencies might stress a part of the program that best fulfills its mission, but would also be responsible for implementing CCSP metrics.

Second, industry operates within a framework of defined income and expenses and specific products. An increase or decrease in profits provides both a motivation to develop effective metrics and an independent check on their success. Government agencies, on the other hand, are funded by taxpayers, and frequently the “profit” is new knowledge or an innovation that is difficult to measure. Moreover, there are no simple independent checks on whether government performance measures are succeeding. A commitment by the CCSP’s senior leadership to achieve and maintain outstanding performance, an open process for developing metrics, and input and feedback from outside experts and advisory groups will be required to overcome these problems.

These and other lessons are useful to guide thinking on how and why metrics should be developed and applied. Principles that can be derived from these lessons are discussed in Chapter 3.