3

Narrowing the Research-Practice Divide—Systems Considerations

OVERVIEW

Bridging the inference gap, as described in this chapter, is the daily leap physicians must make to fit existing evidence to individual patients in the clinical setting. Capturing and using data generated in the course of care offers the opportunity to bring research and practice into closer alignment and to propagate a cycle of learning that can enhance both the rigor and the relevance of evidence. Papers in this chapter illustrate the process and analytic changes needed to narrow the research-practice divide and to allow healthcare delivery to play a more fundamental role in generating evidence on clinical effectiveness.

In this chapter, Brent James outlines the system-wide reorientation that occurred at Intermountain Healthcare as it implemented a system to manage care at the care delivery level. Performance and patient care improved under a system designed to collect data that track inputs and outcomes and to feed performance results back to clinicians. Those same elements created a useful research tool, one that has produced incremental quality improvements as well as discoveries and major advances in care at the practice level. The Intermountain experience identifies some of the organizational and cultural changes needed, but a key factor was the use of electronic health records (EHRs) and supporting systems. Walter F. Stewart expands on the immense potential of the EHR as a tool to narrow the inference gap: the gap between what is known at the point of care and what evidence is needed to make a clinical decision. His paper focuses on the potential for EHRs to increase real-time access to knowledge and to facilitate the creation of evidence that is more directly relevant to everyday clinical decisions. Stewart views the EHR as a transforming technology and suggests several ways in which appropriate design and use of this tool and its surrounding support systems can allow researchers to tap into and learn from the heterogeneity of patients, treatment effects, and the clinical environment, accelerating the generation and application of evidence in a learning healthcare system.

Perhaps one of the most substantial considerations will be how these faster, practice-based opportunities to generate evidence might affect evidentiary standards. Steven Pearson’s paper outlines why the current process of assessing bodies of evidence to inform coverage decisions might not meet future needs, and points to the potential utility of a way to weigh factors such as clinical circumstance in that process. He discusses possible unintended consequences of approaches such as Coverage with Evidence Development (CED) and identifies concepts and processes in coverage decision making that need further development and better definition. Finally, Robert Galvin discusses the employer’s dilemma: how to get true innovations in healthcare technology to the populations that would benefit as quickly as possible while guarding against the harms that could arise from inadequate evaluation. He suggests that a “cycle of unaccountability” has hampered efforts to balance fostering innovation against controlling costs. He also discusses some of the issues facing technology developers in the current system, as well as a recent initiative by General Electric (GE), UnitedHealthcare, and InSightec to apply the CED approach to a promising treatment for uterine fibroids. Although this initiative has the potential to substantially expand the capacity for evidence generation while accelerating access and innovation, the challenges to overcome include methodology, making the case to employers to participate, and confronting the culture of distrust between payers and innovators.

FEEDBACK LOOPS TO EXPEDITE STUDY TIMELINESS AND RELEVANCE

Brent James, M.D., M.Stat.

Intermountain Healthcare

Quality improvement was introduced to health care in the late 1980s. Intermountain Healthcare, one of the first groups to attempt clinical improvement using these new tools, had several early successes (Classen et al. 1992; James 1989 [2005, republished as a “classics” article]). While those experiences showed that Deming’s process management methods could work within healthcare delivery, they highlighted a major challenge: the results did not, on their own, spread. Success in one location did not lead to widespread adoption, even among Intermountain’s own facilities.

Three core elements have been identified for a comprehensive quality-based strategy (Juran 1989): (1) Quality control provides core data flow and management infrastructure, allowing ongoing process management. It creates a context for (2) quality improvement—the ability to systematically identify then improve prioritized targets. (3) Quality design encompasses a set of structured tools to identify, then iteratively create new processes and products.

Since the quality movement’s inception, most care delivery organizations have focused exclusively on improvement. None have built a comprehensive quality control framework. Quality control provides the organizational infrastructure necessary to rapidly deploy new research findings across care delivery locations. The same infrastructure makes it possible to generate reliable new clinical knowledge from care delivery experience. In 1996, Intermountain undertook to build clinical quality control across its 22 hospitals, 100-plus outpatient clinics, employed and affiliated physician groups (1,250 core physicians, among more than 3,000 total associated physicians), and a health insurance plan (which funds about 25 percent of Intermountain’s total care delivery). Intermountain’s quality control plan contained 4 major elements: (1) key process analysis; (2) an outcomes tracking system that measured and reported accurate, timely medical, cost, and patient satisfaction results; (3) an organizational structure to use outcomes data to hold practitioners accountable and to enable measured progress on shared clinical goals; and (4) aligned incentives, to harvest some portion of resulting cost savings back to the care delivery organization (while in many instances better quality can demonstrably reduce care delivery costs, current payment mechanisms direct most such savings to health payers).

The Intermountain strategy depended heavily upon a new “shared baselines” approach to care delivery that evolved during early quality improvement projects as a mechanism to functionally implement evidence-based medicine (James 2002). All health professionals associated with a particular clinical work process (physicians, nurses, pharmacists, therapists, technicians, administrators, etc.) come together on a team. They build an evidence-based best practice guideline, fully understanding that it will not perfectly fit any patient in a real care delivery setting. They blend the guideline into clinical workflow, using standing order sets, clinical worksheets, and other tools. Upon implementation, health professionals adapt their shared common approach to the needs of each individual patient. Across more than 30 implemented clinical shared baselines, Intermountain’s physicians and nurses typically (95 percent confidence interval) modify about 5 to 15 percent of the shared baseline to meet the specific needs of a particular patient. That makes it “easy to do it right” (James 2001), while facilitating the role of clinical expertise. It also is much more efficient. Expert clinicians can focus on a subset of critical issues because the remainder of the care process is reliable. The organization can staff, train, supply, and organize physical space around a single defined process. Shared baselines also provide a structure for electronic data systems, greatly enhancing the effectiveness of automated clinical information. Arguably, shared baselines are the key to successful implementation of electronic medical record systems.
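The “5 to 15 percent” figure implies a way of measuring how much a clinician changed the shared baseline for one patient. The sketch below shows one plausible metric under stated assumptions: orders are represented as sets of item names, and the `modification_rate` and `mean_modification_rate` functions are hypothetical, not a description of Intermountain’s systems.

```python
def modification_rate(baseline: set[str], ordered: set[str]) -> float:
    """Share of a shared-baseline order set that was changed for one patient.

    Hypothetical metric: baseline items dropped plus items added beyond the
    baseline, divided by the size of the baseline.
    """
    if not baseline:
        raise ValueError("baseline order set must not be empty")
    dropped = baseline - ordered   # baseline items the clinician removed
    added = ordered - baseline     # items ordered that the baseline lacks
    return (len(dropped) + len(added)) / len(baseline)


def mean_modification_rate(baseline: set[str], patients: list[set[str]]) -> float:
    """Average modification rate across a panel of patients."""
    rates = [modification_rate(baseline, ordered) for ordered in patients]
    return sum(rates) / len(rates)


if __name__ == "__main__":
    baseline = {f"order_{i}" for i in range(20)}          # hypothetical 20-item order set
    patient_a = (baseline - {"order_3"}) | {"extra_lab"}  # one item dropped, one added
    patient_b = set(baseline)                             # baseline used unchanged
    print(modification_rate(baseline, patient_a))
    print(mean_modification_rate(baseline, [patient_a, patient_b]))
```

Counting additions as well as omissions reflects the idea that clinicians adapt the baseline in both directions; a real implementation might weight changes by clinical importance.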

Key Process Analysis

The Institute of Medicine’s prescription for reform of U.S. health care noted that an effective system should be organized around its most common elements (IOM 2001). Each year for 4 years, Intermountain attempted to identify high-priority clinical conditions for coordinated action through expert consensus among senior clinical and administrative leaders, using formal nominal group technique. In practice, consensus methods never overcame advocacy. Despite a superficially successful consensus process, administrative and clinical leaders still focused primarily on their own departmental or personal priorities. We therefore moved from expert consensus to objective measurement. The first step was to identify front-line work processes. This complex task was aided by conceptually subdividing Intermountain’s operations into 4 large classes: (1) work processes centered around clinical conditions; (2) clinical work processes that are not condition-specific (clinical support services, e.g., processes located within pharmacy, pathology, anesthesiology/procedure rooms, nursing units, intensive care units, patient safety); (3) processes associated with patient satisfaction; and (4) administrative support processes. Within each category, we attempted to identify all major work processes that produced value-added results.

These work processes were then prioritized. To illustrate, within clinical conditions we first measured the number of patients affected. Second, we estimated clinical risk to the patient, using intensity of care as a surrogate and assessing intensity of care by measuring true cost per case. This produced results that had high face validity with clinicians, while also working well with administrative leadership. Third, we measured base-state variability within each clinical work process by calculating the coefficient of variation of intensity of care (cost per case). Fourth, using Batalden and Nelson’s concept of clinical microsystems, we identified specialty groups that routinely worked together on the basis of shared patients, along with the clinical processes through which they managed those patients (Batalden and Splaine 2002; Nelson et al. 2002). This was a key element for organizational structure. Finally, we applied two important criteria for which we could not find metrics: we used expert judgment to identify underserved subpopulations and to balance our roll-out across all elements of the Intermountain care delivery system.
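The three measurable criteria (patient count, cost per case, and the coefficient of variation of cost per case) can be computed directly from case-level cost data. This is a minimal sketch under stated assumptions: the input format is hypothetical, and because the text does not say how Intermountain combined the criteria, ordering by total cost (patients × mean cost per case) with variability as a tie-breaker is purely illustrative. The microsystem and expert-judgment criteria are not modeled.

```python
from statistics import mean, stdev


def prioritize(cases: dict[str, list[float]]) -> list[dict]:
    """Rank clinical work processes using case-level true cost data.

    `cases` maps a process name to the cost of each case (hypothetical input);
    each process needs at least two cases for the sample standard deviation.
    """
    rows = []
    for process, costs in cases.items():
        avg = mean(costs)
        rows.append({
            "process": process,
            "patients": len(costs),     # criterion 1: number of patients affected
            "mean_cost": avg,           # criterion 2: intensity of care, a surrogate for clinical risk
            "cv": stdev(costs) / avg,   # criterion 3: base-state variability (coefficient of variation)
        })
    # Illustrative ordering only: total cost first, then variability.
    rows.sort(key=lambda r: (r["patients"] * r["mean_cost"], r["cv"]), reverse=True)
    return rows


if __name__ == "__main__":
    demo = {
        "process_a": [100.0, 100.0, 100.0, 100.0],
        "process_b": [50.0, 150.0],
    }
    for row in prioritize(demo):
        print(row["process"], row["patients"], round(row["cv"], 3))
```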

Among more than 1,000 inpatient and outpatient condition-based clinical work processes, 104 accounted for almost 95 percent of all of Intermountain’s clinical care delivery. Rather than the traditional 80/20 rule (the Pareto principle), we saw a 90/10 rule: clinical care concentrated massively on a relative handful of high-priority clinical processes (the IOM’s Quality Chasm report got it right!). Those processes were addressed in priority order, to achieve the most good for the most patients, while freeing resources to enable traditional, one-by-one care delivery plans for uncommon clinical conditions.
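The concentration finding can be reproduced for any set of volume counts by sorting processes from largest to smallest and taking the shortest prefix that reaches a target share of total volume. A minimal sketch, assuming a hypothetical mapping of process names to case volumes:

```python
def smallest_covering_set(volumes: dict[str, float], share: float = 0.95) -> list[str]:
    """Fewest processes (largest first) whose combined volume reaches `share` of the total."""
    if not 0 < share <= 1:
        raise ValueError("share must be in (0, 1]")
    total = sum(volumes.values())
    chosen: list[str] = []
    covered = 0.0
    # Taking the largest processes first minimizes how many are needed.
    for process, volume in sorted(volumes.items(), key=lambda kv: kv[1], reverse=True):
        if covered >= share * total:
            break
        chosen.append(process)
        covered += volume
    return chosen


if __name__ == "__main__":
    demo = {"a": 50, "b": 30, "c": 15, "d": 5}   # hypothetical volumes
    top = smallest_covering_set(demo, 0.9)
    print(top, len(top) / len(demo))
```

The ratio `len(chosen) / len(volumes)` is the “10” in a 90/10 rule; with the figures reported above, about 104 of more than 1,000 processes (roughly 10 percent) covered almost 95 percent of care.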

Outcomes Tracking

Prior to 1996, Intermountain had tried to start clinical management twice. The effort failed each time. Each failure lost $5 million to $10 million in sunk costs, and cashiered a senior vice president for medical affairs. When asked to make a third attempt, we first performed a careful autopsy on the first two attempts. Each time Intermountain had found clinicians willing to step up and lead. Then, each time, Intermountain’s planners uncritically assumed that the new clinical leaders could use the same administrative, cost-based data to manage clinical processes, as had traditionally been used to manage hospital departments and generate insurance claims. On careful examination, the administrative data contained gaping holes relative to clinical care delivery. They were organized for facilities management, not patient management.

One of the National Quality Forum’s (NQF) first activities, upon its creation, was to call together a group of experts (its Strategic Framework Board—SFB) to produce a formal, evidence-based method to identify valid measurement sets for clinical care (James 2003). The SFB found that outcomes tracking systems work best when designed around and integrated into front-line care delivery. Berwick et al. noted that such integrated data systems can “roll up” into accountability reports for practice groups, clinics, hospitals, regions, care delivery systems, states, and the nation. The opposite is not true. Data systems designed top down for national reporting usually cannot generate the information flow necessary for front-line process management and improvement (Berwick et al. 2003). Such top-down systems often compete for limited front-line resources, damaging care delivery at the patient interface (Lawrence and Mickalide 1987).
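The asymmetry described by Berwick et al. can be seen in a small example: patient-level records captured at the front line aggregate cleanly into clinic, region, and system rates, but a system-level rate cannot be decomposed back into the list of patients a clinic needs to act on. The record fields and the `rate_by` helper below are hypothetical.

```python
from collections import defaultdict
from typing import Callable, Hashable


def rate_by(records: list[dict], key: Callable[[dict], Hashable]) -> dict:
    """Share of patients at goal, grouped at any level of the organization."""
    counts = defaultdict(lambda: [0, 0])  # group -> [patients at goal, patients]
    for rec in records:
        group = counts[key(rec)]
        group[0] += int(rec["at_goal"])
        group[1] += 1
    return {k: at_goal / n for k, (at_goal, n) in counts.items()}


# Hypothetical front-line records: one row per patient.
records = [
    {"region": "north", "clinic": "A", "patient": "p1", "at_goal": True},
    {"region": "north", "clinic": "A", "patient": "p2", "at_goal": False},
    {"region": "south", "clinic": "B", "patient": "p3", "at_goal": True},
    {"region": "south", "clinic": "B", "patient": "p4", "at_goal": True},
]

# The same patient-level data "roll up" to every reporting level...
by_clinic = rate_by(records, lambda r: (r["region"], r["clinic"]))
by_region = rate_by(records, lambda r: r["region"])
system = rate_by(records, lambda r: "system")
# ...but only patient-level data can answer the front-line question "whom do we call?"
needs_action = [r["patient"] for r in records if not r["at_goal"]]
```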

Intermountain adopted the NQF’s data system design method. It starts with an evidence-based best practice guideline laid out for care delivery: a shared baseline. It uses that template to identify and then test a comprehensive set of medical, cost, and satisfaction outcomes reports, optimized for clinical process management and improvement. The report set leads to a list of data elements and coding manuals, which generate data marts within an electronic data warehouse (patient registries) and a decision support structure for use within electronic medical record systems.
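Because the method runs from guideline to reports to data elements, the data element list can in principle be derived mechanically from the report specifications, and a registry can be checked against them. The sketch below uses hypothetical report definitions and helper names; it is not NQF’s or Intermountain’s actual tooling.

```python
# Hypothetical outcomes report specifications derived from a shared baseline.
REPORTS = {
    "hba1c_control": {"dimension": "medical", "elements": {"patient_id", "hba1c_value", "hba1c_date"}},
    "cost_per_case": {"dimension": "cost", "elements": {"patient_id", "encounter_id", "true_cost"}},
    "visit_satisfaction": {"dimension": "satisfaction", "elements": {"encounter_id", "survey_score"}},
}


def data_element_list(reports: dict) -> list[str]:
    """Union of every data element any report needs, sorted for a coding manual."""
    return sorted(set().union(*(spec["elements"] for spec in reports.values())))


def is_balanced(reports: dict) -> bool:
    """A balanced report set covers medical, cost, and satisfaction outcomes."""
    return {spec["dimension"] for spec in reports.values()} >= {"medical", "cost", "satisfaction"}


def missing_elements(reports: dict, mart_columns: set[str]) -> dict[str, set[str]]:
    """For each report, the data elements the data mart does not yet supply."""
    gaps = {name: spec["elements"] - mart_columns for name, spec in reports.items()}
    return {name: gap for name, gap in gaps.items() if gap}
```

Working in this direction keeps the registry no larger than the reports require, which is the point of designing data systems around front-line process management.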

The production of new clinical outcomes tracking data represented a significant investment for Intermountain. Clinical work processes were attacked in priority order, as determined by key process analysis. Initial progress was very fast. For example, in 1997 outcomes tracking systems were completed for the two biggest clinical processes within the Intermountain system. Pregnancy, labor, and delivery represents 11 percent of Intermountain’s total clinical volume. Ischemic heart disease adds another 10 percent. At the end of the year, Intermountain had a detailed clinical dashboard in place for 21 percent of Intermountain’s total care delivery. Those data were designed for front-line process management, then rolled up into region- and system-level accountability reports. Today, outcomes data cover almost 80 percent of Intermountain’s inpatient and outpatient clinical care. They are immediately available through internal websites, with data lag times under one month in all cases, and a few days in most cases.

Organizational Structure

About two-thirds of Intermountain’s core physician associates are community-based, independent practitioners. That required an organizational structure that heavily emphasized shared professional values, backed up by aligned financial incentives (in fact, early successes relied on shared professional values alone; financial incentives came quite late in the process and were always modest in size). The microsystems (Batalden and Splaine 2002) subpart of the key process analysis provided the core organizational structure. Families of related processes, called Clinical Programs, identified care teams that routinely worked together, even though they often spanned traditional subspecialty boundaries. Intermountain hired part-time physician leaders (1/4 full-time equivalent) for each Clinical Program in each of its 3 major regions (networks of outpatient practices and small community hospitals, organized around large tertiary hospital centers). Physician leaders are required to be in active practice within their Clinical Program; to have the respect of their professional peers; and to complete formal training in clinical quality improvement methods through Intermountain’s internal clinical QI training program (the Advanced Training Program in Clinical Practice Improvement). Recognizing that the bulk of process management effort relies upon clinical staff, Intermountain also hired full-time “clinical operations administrators,” most of whom are experienced nurse administrators. Each resulting leadership dyad (a physician leader paired with a nursing/support staff leader) meets each month with each of the local clinical teams that work within its Clinical Program. The dyad presents and reviews patient outcomes results for each team, compared to their peers and national benchmarks. They particularly focus on clinical improvement goals, to track progress, identify barriers, and discuss possible solutions. Within each region, all of the Clinical Program dyads meet monthly with their administrative counterparts (regional hospital administration, finance, information technology, insurance partners, nursing, and quality management). They review current clinical results, track progress on goals, and assign resources to overcome implementation barriers at a local level.

In addition to their regional activities, all leaders within a particular Clinical Program from across the entire Intermountain system meet together monthly as a central Guidance Council. One of the 3 regional physician leaders is funded for an additional part-time role (1/4 time) to oversee and coordinate the system-wide effort. Each system-level Clinical Program also has a separate, full-time clinical operations administrator. Finally, each Guidance Council is assigned at least one full-time statistician, and at least one full-time data manager, to help coordinate clinical outcomes data flow, produce outcomes tracking reports, and to perform special analyses. Intermountain coordinates a large part of existing staff support functions, such as medical informatics (electronic medical records), electronic data warehouse, finance, and purchasing, to support the clinical management effort.

By definition, each Guidance Council oversees a set of condition-based clinical work processes, as identified and prioritized during the key process analysis step. Each key clinical process is managed by a Development Team, which reports to the Guidance Council. Development Teams meet each month. Most Development Team members are drawn from front-line physicians and clinical staff, geographically balanced across the Intermountain system, who have immediate hands-on experience with the clinical care under discussion (technically, “fundamental knowledge”). Development Team members carry the team’s activities (analysis and management system results) back to their front-line colleagues to seek their input and help with implementation and operations. Each Development Team also has a designated physician leader and Knowledge Experts drawn from each region. Knowledge Experts are usually specialists associated with the Team’s particular care process. For example, the Primary Care Clinical Program includes a Diabetes Mellitus Development Team (among others). Most team members are front-line primary care physicians and nurses who see diabetes patients in their practices every day. The Knowledge Experts are diabetologists, drawn from each region.

A new Development Team begins its work by generating a Care Process Model (CPM) for their assigned key clinical process. Intermountain’s central Clinical Program staff provides a great deal of coordinated support for this effort. A Care Process Model contains 5 sequential elements:

- The Knowledge Experts generate an evidence-based best practice guideline for the condition under study, with appropriate links to the published literature. They share their work with the body of the Development Team, who in turn share it with their front-line colleagues, asking “What would you change?” As the “shared baseline” practice guideline stabilizes over time,

- The full Development Team converts the practice guideline into clinical workflow documents suitable for use in direct patient care. This step is often the most difficult of the CPM development process. Good clinical flow can enhance clinical productivity rather than adding burden to front-line practitioners. The aim is to make evidence-based best care the lowest-energy default option, with data collection integrated into clinical workflow.

  The core of most chronic disease CPMs is a treatment cascade. Treatment cascades start with disease detection and diagnosis. The first (and most important) “treatment” is intensive patient education, to make the patient the primary disease manager. The cascade then steps sequentially through increasing levels of treatment. A front-line clinical team moves down the cascade until it achieves adequate control of the patient’s condition, while modifying the cascade’s “shared baseline” based upon individual patient needs. The last step in most cascades is referral to a specialist.

- The team next applies the NQF SFB outcomes tracking system development tools to produce a balanced dashboard of medical, cost, and satisfaction outcomes. This effort involves the electronic data warehouse team, to design clinical registries that bring together complementary data flows with appropriate pre-processing.

- The Development Team works with Intermountain’s medical informatics groups to blend clinical workflow tools and data system needs into automated patient care data systems.

- Central support staff help the Development Team build web-based educational materials for both care delivery professionals and the patients they serve.
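The treatment cascade described in the second element is essentially a loop: apply the next step, check whether the condition is controlled, and stop as soon as it is. The minimal sketch below starts after diagnosis and assumes hypothetical step names; the text specifies only the first treatment (intensive patient education) and the last (specialist referral), so the intermediate levels are placeholders. The `skip` parameter is our stand-in for a team adapting the shared baseline to one patient.

```python
from typing import Callable

# Hypothetical cascade; intermediate steps are placeholders, not clinical guidance.
CASCADE = [
    "intensive patient education",
    "treatment level 1",
    "treatment level 2",
    "referral to specialist",
]


def walk_cascade(
    cascade: list[str],
    controlled_after: Callable[[str], bool],
    skip: frozenset[str] = frozenset(),
) -> list[str]:
    """Step down the cascade until the condition is adequately controlled.

    `skip` omits steps the clinical team judges inappropriate for this patient;
    `controlled_after` reports whether control was reached after a given step
    (in practice, a follow-up measurement). Returns the steps actually applied.
    """
    applied = []
    for step in cascade:
        if step in skip:
            continue
        applied.append(step)
        if controlled_after(step):
            break
    return applied


if __name__ == "__main__":
    print(walk_cascade(CASCADE, lambda step: step == "treatment level 1"))
```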

A finished CPM is formally deployed into clinical practice by the governing Guidance Council, through its regional physician/nurse leader dyads. At that point, the Development Team’s role changes. The Team continues to meet monthly to review and update the CPM. The Team’s Knowledge Experts have funded time to track new research developments. The Team also reviews care variations as clinicians adapt the shared baseline. It closely follows major clinical outcomes, and receives and clears improvement ideas that arise among Intermountain’s front-line practitioners and leadership.

Drawing on this structure, Intermountain’s CPMs tend to change quite frequently. Knowledge Experts have an additional responsibility of sharing new findings and changes with their front-line colleagues. They conduct regular continuing education sessions, targeted both at practicing physicians and their staffs, for their assigned CPM. Education sessions cover the full spectrum of the coordinated CPM: They review current best practice (the core evidence-based guideline); relate it to clinical workflow; show delivery teams how to track patient results through the outcomes data system; tie the CPM to decision support tools built into the electronic medical record; and link it to a full set of educational materials, for patients and for care delivery professionals.

Chronic disease Knowledge Experts also run the specialty clinics that support front-line care delivery teams. Continuing education sessions usually coordinate the logistics of that support. The Knowledge Experts also coordinate specialty-based nurse care managers and patient trainers.

An Illustrative CPM in Action: Diabetes Mellitus

Through its health plan and outpatient clinics, Intermountain supports almost 20,000 patients diagnosed with diabetes mellitus. Among about 800 primary care physicians who manage diabetics, approximately one-third are employed within the Intermountain Medical Group, while the remainder are community-based independent physicians. All physicians and their care delivery teams—regardless of employment status—interact regularly with the Primary Care Clinical Program medical directors and clinical operations administrators. They have access to regular diabetes continuing education sessions. Three endocrinologists (one in each region) act as Knowledge Experts on the Diabetes Development Team. In addition to conducting diabetes training, the Knowledge Experts coordinate specialty nursing care management (diabetic educators), and supply most specialty services.

Each quarter, Intermountain sends a packet of reports to every clinical team managing diabetic patients. The reports are generated from the Diabetes Data Mart (a patient registry) within Intermountain’s electronic data warehouse. The packet includes, first, a Diabetes Action List. It summarizes every diabetic patient in the team’s practice, listing testing rates and level controls (standard NCQA HEDIS measures: HbA1c, LDL, blood pressure, urinary protein, dilated retinal exams, pedal sensory exams; Intermountain was an NCQA Applied Research Center that helped generate the HEDIS diabetes measures, using the front-line focused NQF outcomes tracking design techniques outlined above). The report flags any care defect, as reflected either in test frequency or level controls. Front-line teams review lists, then either schedule flagged patients for office visits, or assign them to general care management nurses located within the local clinic. While Intermountain pushes Diabetes Action Lists out every quarter, front-line teams can generate them on demand. Most teams do so every month.
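The Action List logic (flag a care defect when a test is overdue or a level is out of control) can be sketched for two of the six measures. The level goals come from the national guidelines quoted with Figures 3-1 and 3-2 (HbA1c < 9 percent, LDL < 100 mg/dL); the maximum test ages, the `Result` record, and the function names are hypothetical, since the text does not give the testing intervals Intermountain used.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class Result:
    value: float
    taken: date


# Level goals quoted with Figures 3-1 and 3-2: values must be below these.
LEVEL_GOALS = {"hba1c": 9.0, "ldl": 100.0}
# Hypothetical maximum test ages in days; the text does not state the intervals.
MAX_TEST_AGE_DAYS = {"hba1c": 180, "ldl": 365}


def care_defects(latest: dict[str, Optional[Result]], today: date) -> list[str]:
    """Flag overdue tests and out-of-control levels for one patient."""
    defects = []
    for measure, goal in LEVEL_GOALS.items():
        result = latest.get(measure)
        if result is None or (today - result.taken).days > MAX_TEST_AGE_DAYS[measure]:
            defects.append(f"{measure}: test overdue")
        elif result.value >= goal:
            defects.append(f"{measure}: above goal")
    return defects


def action_list(panel: dict[str, dict[str, Optional[Result]]], today: date) -> dict[str, list[str]]:
    """Patients in a team's panel with at least one care defect."""
    flagged = {}
    for patient_id, latest in panel.items():
        defects = care_defects(latest, today)
        if defects:
            flagged[patient_id] = defects
    return flagged
```

Running the same function on demand against the registry, rather than only at quarter end, mirrors how most teams regenerate their lists monthly.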

In addition to Action Lists, front-line teams can access patient-specific Patient Worksheets through Intermountain’s web-based Results Review system. Most practices integrate Worksheets into their workflow during chart preparation. The Worksheet contains patient demographics, a list of all active medications, and a review of pertinent history and laboratory results focused on chronic conditions. For diabetic patients, it includes test dates and values for the last seven HbA1c tests, LDLs, blood pressures, urinary proteins, dilated retinal examinations, and pedal sensory examinations. A final section of the Worksheet applies all pertinent treatment cascades, listing recommendations for currently due immunizations, disease screening, and appropriate testing. It flags out-of-control levels, with next-step treatment recommendations (technically, this section of the Worksheet is a passive form of computerized physician order entry).
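One Worksheet section can be sketched as “keep the most recent seven results, newest first, and flag the measure if the latest value is out of control.” The function name, the `(date, value)` tuple format, and the flag text are hypothetical illustrations.

```python
from datetime import date


def worksheet_section(
    results: list[tuple[date, float]], goal: float, keep: int = 7
) -> tuple[list[tuple[date, float]], str]:
    """Most recent `keep` results (newest first) plus a flag if the latest is out of control."""
    recent = sorted(results, key=lambda r: r[0], reverse=True)[:keep]
    if recent and recent[0][1] >= goal:
        return recent, "out of control: review next treatment cascade step"
    return recent, ""


if __name__ == "__main__":
    history = [(date(2005, m, 1), 8.0) for m in range(1, 10)]
    history.append((date(2005, 10, 1), 9.2))
    rows, flag = worksheet_section(history, goal=9.0)   # HbA1c goal from Figure 3-1
    print(len(rows), rows[0], flag)
```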

The standard quarterly report packet also contains sections comparing each clinical team’s performance to that of its risk-adjusted peers. A third report tracks progress on quality improvement goals and links them to financial incentives. Finally, a separate summary report goes to the team’s Clinical Program medical director. In meetings with the front-line teams, the Clinical Program leadership dyad often shares methods used by other practices to improve patient outcomes, with specific practice flow recommendations.
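The text does not say how Intermountain risk-adjusts peer comparisons. One common technique, indirect standardization, is sketched here as an assumption: a risk model fitted across all teams gives each patient a predicted probability of being at goal, and a team’s observed count is divided by the sum of those predictions. The function name and inputs are hypothetical.

```python
def observed_to_expected(at_goal: list[bool], predicted: list[float]) -> float:
    """Indirectly standardized observed/expected ratio for one team.

    `predicted` holds each patient's probability of being at goal from a
    risk model fitted across all teams (hypothetical). A ratio above 1 means
    more patients are at goal than the team's case mix predicts.
    """
    if len(at_goal) != len(predicted) or not predicted:
        raise ValueError("need one prediction per patient")
    expected = sum(predicted)
    if expected == 0:
        raise ValueError("expected count must be positive")
    return sum(at_goal) / expected


if __name__ == "__main__":
    # Three of four patients at goal where the model expected two.
    print(observed_to_expected([True, False, True, True], [0.5, 0.5, 0.5, 0.5]))
```

Comparing ratios rather than raw rates keeps a team with sicker patients from looking worse simply because of its case mix.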

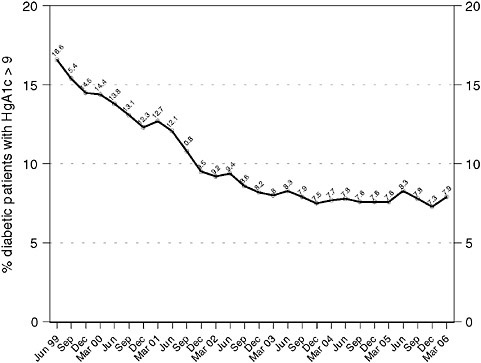

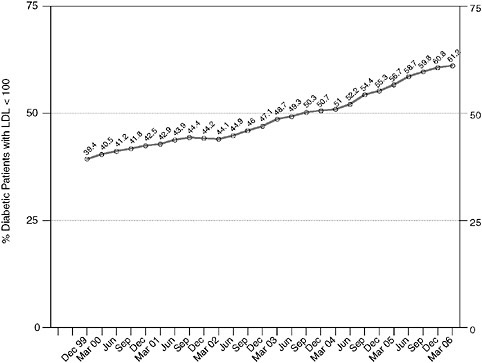

By March 2006, Intermountain managed more than 20,000 diabetic patients. Figures 3-1 and 3-2 show system-level performance on representative diabetes outcomes measures, as pulled in real time from the Intermountain outcomes tracking system. Primary care physicians supply almost 90 percent of all diabetes care in the system.

As the last step on a treatment cascade, Intermountain’s Diabetes Knowledge Experts tend to concentrate the most difficult patients in their specialty practices. As a result, they typically have worse outcomes than their primary care colleagues.

Using Routine Care Delivery to Generate Reliable Clinical Knowledge

Evidence-based best practice faces a massive evidence gap. The healing professions currently have reliable evidence (Level I, II, or III: randomized trials, robust observational designs, or expert consensus opinion using formal methods [Lawrence and Mickalide 1987]) to identify best, patient-specific practice for less than 20 percent of care delivery choices (Ferguson 1991; Lappe et al. 2004; Williamson 1979). Bridging that gap will strain the capacity of any conceivable research system.

FIGURE 3-1 Blood sugar control with Clinical Program management over time, for all diabetic patients managed within the entire Intermountain system. National guidelines recommend that all diabetic patients be managed to hemoglobin A1c levels < 9 percent; ideally, patients should be managed to levels < 7 percent.

Intermountain designed its Clinical Programs to optimize care delivery performance. The resulting organizational and information structures make it possible to generate robust data regarding treatment effects as a by-product of demonstrated best care. CPMs embed data systems that directly link outcome results to care delivery decisions. They deploy organized care delivery processes. Intermountain’s Clinical Programs might be thought of as effectiveness research built system-wide into front-line care delivery. At a minimum, CPMs routinely generate Level II-3 information (robust, prospective observational time series) for all key clinical care delivery processes. In such a setting, all care changes get tested. For example, any new treatment recently reported in the published medical literature, any new drug, a new organizational structure for an ICU, or a new nurse staffing policy implemented within a hospital can generate robust information to assess its effectiveness in a real care delivery setting.
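A prospective time series lets a team ask whether a care change shifted outcomes beyond the pre-existing trend. One simple approach, chosen here for illustration and not stated in the text, fits a straight line to the pre-change periods, projects it forward as a counterfactual, and averages the gap between observed and projected values after the change. This is a basic form of interrupted time-series analysis; it ignores autocorrelation and seasonality, which a real evaluation would need to address. Function names are hypothetical.

```python
from statistics import mean


def linear_fit(xs: list[float], ys: list[float]) -> tuple[float, float]:
    """Ordinary least-squares intercept and slope for a single predictor."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    return my - slope * mx, slope


def change_effect(series: list[float], change_at: int) -> float:
    """Mean post-change gap between observed values and the projected pre-change trend.

    `series` holds one value per period (e.g., monthly percent at goal);
    `change_at` is the index of the first post-change period. At least two
    pre-change periods are required to fit the trend.
    """
    if change_at < 2 or change_at >= len(series):
        raise ValueError("need at least two pre-change and one post-change period")
    intercept, slope = linear_fit(list(range(change_at)), series[:change_at])
    post = [(t, y) for t, y in enumerate(series) if t >= change_at]
    return mean(y - (intercept + slope * t) for t, y in post)


if __name__ == "__main__":
    # Hypothetical monthly rates: steady rise of 1 per month, then a jump after month 4.
    print(change_effect([10, 11, 12, 13, 20, 21, 22], change_at=4))
```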

FIGURE 3-2 Lipid (low density lipoprotein/LDL) control with Clinical Program management over time, for all diabetic patients managed within the entire Intermountain system. National guidelines recommend that all diabetic patients should be managed to LDL levels < 100 mg/dL.

At need, Development Teams move up the evidence chain as part of routine care delivery operations. For example, the Intermountain Cardiovascular Guidance Council developed robust observational evidence regarding discharge medications for patients hospitalized with ischemic heart disease or atrial fibrillation (Level II-2 evidence) (Lappe et al. 2004). The Mental Health Integration Development Team used the Intermountain outcomes tracking system to conduct a prospective non-randomized controlled trial (Level II-1 evidence) to assess best practice for the detection and management of depression in primary care clinics (Reiss-Brennan 2006; Reiss-Brennan et al. 2006a; Reiss-Brennan et al. 2006b). The Lower Respiratory Infection Development Team ran a quasi-experiment that used existing prospective data flows to assess the roll-out of its community-acquired pneumonia (CAP) CPM (Level II-1 evidence) (Dean et al. 2006a; Dean et al. 2001). That led to a randomized controlled trial to identify the best antibiotic choices for outpatient management of CAP (Level I evidence) (Dean et al. 2006b). With embedded data systems and an existing “shared baseline” care protocol that spanned the Intermountain system, it took less than 3 months to complete the trial. The largest associated expenses were IRB oversight and data analysis, costs that Intermountain underwrote based on a clear need to quickly generate and then apply appropriate evidence to real patient care.
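The evidence levels cited in this section form an ordered scale, from Level I (randomized trials) down to Level III (formal expert consensus). A small lookup makes that ordering explicit and tags the Intermountain examples above with the designs and levels the text reports. The dictionary and function names are hypothetical, and the sub-level labels follow this section’s usage rather than any single external standard.

```python
# Evidence levels, strongest first, as the terms are used in this section.
LEVEL_ORDER = ["I", "II-1", "II-2", "II-3", "III"]

DESIGN_TO_LEVEL = {
    "randomized controlled trial": "I",
    "non-randomized controlled trial": "II-1",
    "observational analytic study": "II-2",
    "prospective time series": "II-3",
    "formal expert consensus": "III",
}

# The examples from the text, tagged with the designs it reports.
INTERMOUNTAIN_EXAMPLES = {
    "CAP outpatient antibiotic choice": "randomized controlled trial",
    "Depression in primary care (Mental Health Integration)": "non-randomized controlled trial",
    "CAP CPM roll-out (quasi-experiment)": "non-randomized controlled trial",
    "Cardiovascular discharge medications": "observational analytic study",
    "Routine CPM outcomes tracking": "prospective time series",
}


def evidence_level(design: str) -> str:
    """Look up the evidence level for a study design label."""
    return DESIGN_TO_LEVEL[design]


def strongest_first(studies: dict[str, str]) -> list[tuple[str, str]]:
    """Sort studies (name -> design) from the strongest to the weakest evidence level."""
    tagged = [(name, evidence_level(design)) for name, design in studies.items()]
    return sorted(tagged, key=lambda item: LEVEL_ORDER.index(item[1]))


if __name__ == "__main__":
    for name, level in strongest_first(INTERMOUNTAIN_EXAMPLES):
        print(f"Level {level}: {name}")
```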

While Intermountain demonstrates the concept of embedded effectiveness research, many other integrated care delivery networks are rapidly deploying similar methods. Embedded effectiveness research is an engine that can make evidence-based care delivery the norm, rather than the exception. It holds massive potential to deliver “best care” to patients, while generating evidence to find the next step in “best care.”

ELECTRONIC HEALTH RECORDS AND EVIDENCE-BASED PRACTICE

Walter F. Stewart, Ph.D., M.P.H., and Nirav R. Shah, M.D., M.P.H.

Geisinger Health System

Every day, clinicians engage in a decision process on behalf of patients that requires a leap beyond available evidence. This process, which we characterize as “bridging the inferential gap” (Stewart et al. 2007 [in press]), uses varying degrees of judgment, either because available evidence is not used or because the right evidence does not exist. This gap has widened as the population has aged, with the rapid growth in treatment needs and options, and as the creation of knowledge continues to accelerate. Increasing use of the EHR in practice will help to narrow this gap by substantially increasing real-time access to knowledge (Haynes 1998) and by facilitating creation of evidence that is more directly relevant to everyday clinical decisions (Haynes et al. 1995).

The Twentieth-Century Revolution in Creation of Evidence

In the early twentieth century, medical knowledge creation emerged as a new enterprise with the propagation of an integrated model of medical education, clinical care, and “bench to bedside” research (Flexner 2002; Vandenbroucke 1987). In the United States, this model evolved with the rapid growth in foundation and federal funding, and later with substantial growth in industry funding (Charlton and Andras 2005; Graham and Diamond 2004). Today, this enterprise has exceeded expectations in the breadth and depth of evidence created, but has fallen short in the relevance of that evidence to everyday clinical care needs.

Over the last 50 years, rigorous scientific methods have developed as part of this enterprise in response to challenges in the interpretation of evidence. While observational and experimental methods developed in parallel, the randomized controlled clinical trial (RCT) became the dominant method for creating clinical evidence, emerging as the “gold standard” in response to demand for a rigorous and reliable approach to answering questions (Meldrum 2000). The application of the RCT evolved naturally in response to regulatory demands for greater accuracy in interpreting evidence of treatment benefit and for minimizing the risk of harm to volunteers. As a consequence, RCTs have tended toward very focused interventions in highly select populations (Black 1996). Often, the questions answered do not fully address the more specific questions that arise in clinical practice. Moreover, restricting enrollment to the healthiest subgroup of patients (i.e., those with minimal comorbidities) limits the generalizability of the evidence derived. Patients seen in clinical practice tend to be considerably more diverse and more clinically complex than patients enrolled in clinical trials. This gap between existing evidence and the needs of clinical practice does not mean that we should change how RCTs are done. It is not sensible to use the RCT as a routine method to answer practice-specific questions: the resources required to answer a meaningful number of such questions would be too great, and the time required would often be too long for the answers to be relevant.

Accepting that the RCT should continue to be used primarily as a testing and regulatory tool does not resolve the need for an effective means of creating more relevant evidence. We currently lack the means to create evidence that is rigorous and timely and that answers the myriad questions common to everyday practice. As a bridging strategy, we have resorted to consensus decisions among clinical experts, replacing evidence with guidance (Greenblatt 1980). Systematic observational studies in general population- or practice-based samples are also often cited as a means to address the generalizability limits of evidence from RCTs (Horwitz et al. 1990). Confounding by indication is an important concern in interpreting evidence from such studies, but it is not the most significant challenge (Salas et al. 1999). The same issues of resource limitations and timely access to evidence apply here: most practice-based questions of clinical interest cannot be answered by funding systematic prospective observational studies.

Knowledge Access

While the methods to generate knowledge over the past century have advanced at a remarkable pace and knowledge creation itself is accelerating, the methods to access such knowledge for clinical care have not evolved. Direct education of clinicians continues to be the dominant model for bringing knowledge to practice. This is largely because there are no practical alternatives. While the modes by which knowledge is conveyed (e.g., CDs, Internet) have diversified, all such modes still rely on individual education, a method that was useful when knowledge was relatively limited. Today, however, individual continuing medical education (CME) can be characterized as a hit-or-miss approach to gaining knowledge. There is so little time, yet so much to know. There are also numerous other factors essential to bringing knowledge to practice that individual education does not address. These other factors, downstream from gaining knowledge, probably contribute to variation in quality of care. Specifically, clinicians vary substantially, not just in what they have learned, but in the accuracy of what is retained, in real-time retrieval of what is retained when needed, in the details of what they know or review about the patient during an encounter, in the interpretation of patient data given the retained knowledge that is retrieved during the encounter, and in how this whole process translates into a care decision. Even if incentives (e.g., pay for performance) were logically designed to motivate the best clinical behavior, the options for reducing variation in practice are limited given the numerous other factors that influence the ultimate clinical decision. In the paper-based world of most clinical practices, clinicians will always be constrained by a “pony-express” model of bringing knowledge to practice. That is, with very limited time, clinicians must choose a few things to learn from time to time and hope that they use what they learn effectively when needed.

Just as consensus decision making is used to bridge the knowledge gap, efforts to codify knowledge over the past decade are an important step toward increasing access to knowledge. However, this is only one of the many steps noted above that are relevant to translating knowledge into practice-based decision making. The EHR opens opportunities for a paradigm shift in how knowledge is used in practice-based clinical decisions.

The EHR and New Directions

There are several areas in which the EHR represents the potential for a fundamental shift in research emphasis—in particular, the linkage of research to development, the most common paradigm for research worldwide; the opening of opportunities to explore new models for creating evidence; and the evolving role of decision support in bringing knowledge directly to practice.

Research and Development In the private for-profit sector, the dominant motivation for research is to create value through its intimate link to the development of better products and services. “Better” generally means that more value is attained because the product or service cost is reduced (e.g., research and development [R&D] that results in a lower cost of production), the product is made more capable at the same or a lower cost, or quality is improved (e.g., more durable, fewer errors) at the same or a lower cost. This R&D paradigm, core to competition among market rivals in creating ongoing value for customers, does exist in certain healthcare sectors (e.g., pharmaceutical and device manufacturers) but, for the most part, does not exist in the U.S. healthcare system, where, notably, value-based competition also does not exist (Porter and Teisberg 2006).

The dominant research model in academic institutions is different. Research funding is largely motivated by the mission to create knowledge, recognizing the inherent social value of such an enterprise.1 The policies and practices in this sector are largely created by academic officials and faculty in collaboration with government or foundation officials. This is in essence a closed system that seeks to fulfill its mission of creating knowledge. It is not a system primarily designed to influence health care or to bring value to it, as defined above. Academic research institutions are not representative of where most health care is provided in the United States: private practice physicians, group practices, community hospitals, and health systems deliver the vast majority of care. Even if there were a desire to shift some funding toward an R&D focus, the link between academic institutions and the dominant systems of care in the United States may not be strong enough for research to be directed in a manner that creates value. In contrast, new healthcare strategies and models of care are increasingly tested by the Centers for Medicare and Medicaid Services (CMS), which funds large-scale demonstration projects to identify new ways of creating value in health care. However, these macro-level initiatives are designed primarily to influence policy rather than to create new models of care and, as such, lack what other federally funded research offers: insight into how outcomes are actually obtained.

Even though the pursuit of knowledge is the dominant motivation for research in the academic model, discoveries sometimes result in the creation of new services and products. However, the development process is motivated by a diversity of factors (e.g., investigators’ desire to translate their research into concrete end points, unexpected market opportunities that coincide with the timing of research discoveries) that are not causally or specifically directed to development and the need to create value. This process is qualitatively different from what occurs in R&D, where, as we have noted, the intention of research is to create value.

Despite these shortcomings of the academic model, adopting an R&D model for healthcare delivery is not practical given the constraints of a paper-based world. Moreover, there are numerous structural problems in the U.S. healthcare system (e.g., lack of alignment between the interests of payers and providers, paying for care versus paying for performance and outcomes) that make it very difficult to create value in the traditional sense (Porter and Teisberg 2006), even if R&D evolved to resemble its form in other markets. R&D is, however, sensible in a digital healthcare world and is one of several factors required to achieve a broader goal: providing high-quality care at a lower cost.

Why is the EHR important in this context? Paper-based healthcare settings are fundamentally constrained not only in what is possible, but also in what one imagines is possible. The situation is somewhat analogous to imagining what could be done with the horse and buggy: making it go faster, exploring new dimensions of transportation (e.g., travel as a form of entertainment, integrated with media such as radio, CDs, and video), using decision support (e.g., a Global Positioning System [GPS] to provide directions or find restaurants), and so forth. These possibilities were not worth considering until a new technology emerged. The paper-based world is inherently constrained in the same way: it is hardly worth considering the expansive development and adoption of clinical data standards, expansive use of human-independent interactions (e.g., algorithmic ordering of routine preventive health interventions), real-time monitoring of data, detailed feedback on patient management performance, sophisticated means of clinical decision support, and timely creation of evidence, to mention a few. Moreover, a paper-based world is truly limited in its ability to take a solution tested in one clinical setting and export and scale it to many other settings.

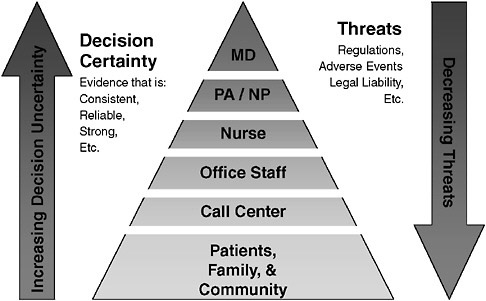

The EHR is a transforming technology. It is unlikely that the EHR in itself will create substantial value in health care. Rather, we believe it will create unique opportunities to seamlessly link research to development, to ensure that the problem of exporting and scaling solutions is solved as part of the research endeavor, and to intentionally create value in health care. Bringing knowledge to practice will be an important part of this enterprise. Figure 3-3 presents a familiar characterization of how EHR-based R&D can influence health care. Efficiency and quality of care can be improved through the integration of new workflows and sophisticated decision support that shift more and more tasks to patients and to nonphysician and nonclinical staff. Clinician management should increasingly be reserved for situations where the decisions to be made are less certain and where threats (e.g., adverse events) are possible. The notion behind such a model is to continuously explore new ways of adding structure to the care delivery process so that those with less formal training, including patients, can assume more responsibility for care.

FIGURE 3-3 Who should be responsible for what?

EHR-based practices also offer the promise of aligning interests around the business value of data and information. As sophisticated models of care (e.g., integration of workflow, evaluation of data, decision support) evolve, the value of accurate and complete data will increase. This is especially likely to be true if market-sensible expectations (e.g., pay for performance or outcomes) emerge as a dominant force in payment. In this context, data standards and data quality represent business assets to practicing clinicians (i.e., they facilitate the ability to deliver higher-quality care at a lower cost), opening the most important path to alignment. Moreover, those who pay for care will view data as an essential ingredient in providing high-quality and efficient care.

Discovering What Works in Reality and Beyond

Creating medical evidence is a costly and time-consuming enterprise, with the result that it is not possible to conduct studies to address most questions relevant to health care, and the time required to generate the evidence limits its relevance. Consider, however, three related stages of research relevant to developing new models for creation of evidence: (1) development of new methods designed to extract valid evidence from analysis of retrospective longitudinal patient data; (2) translation of these methods into protocols that can rapidly evaluate patient data in real time as a component of sophisticated clinical decision support; and (3) rapid protocols designed to conduct clinical trials as a routine part of care delivery in the face of multiple treatment options for which there is inadequate evidence to decide what is most sensible (i.e., clinical equipoise).

Valid methods are needed to extract evidence from retrospective analysis of EHR data. For example, one such method may include research on how longitudinal EHR data can be used to replicate the results of prior randomized clinical trials. Such an evaluation might include imposing the same patient inclusion and exclusion criteria, duration of “observation,” and other design features used in pivotal trials. Other techniques (e.g., propensity scores, interaction terms with the treatment used) may be needed to adjust for underlying differences between clinic patients and trial participants and for confounding by indication and other factors (Seeger et al. 2005). This represents one scenario for how methods may be developed and validated as a first step toward extracting evidence from EHR data. Establishing such a foundation opens opportunities to explore other questions relevant to the generalizability gap between RCT evidence and questions that arise in practice. What would have happened in the trial if, for example, patients who were sicker or older, or who had more diverse comorbidities, had been enrolled? What are the profiles of patients who might benefit most (or least) from the treatment? What are the treatment effects on outcomes not measured in the original trial? Such “virtual” trials could extend the evidence from efficacy studies to questions more relevant to clinical practice.
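To make the propensity-score idea concrete, the following is a minimal Python sketch, not the authors' method. It assumes a small hypothetical cohort in which sicker patients are treated more often and recover less often, the pattern that produces confounding by indication. The single binary covariate (`sick`), the cell counts, and all function names are invented for illustration, and the propensity score is estimated simply as the treated fraction within each stratum; a real analysis would fit a model (e.g., logistic regression) over many covariates. Inverse probability weighting then weights each patient by the inverse of the estimated probability of the treatment actually received.

```python
from collections import defaultdict


def build_cohort():
    """Hypothetical cohort of (sick, treated, recovered) tuples.

    Within each stratum, treatment raises recovery by 10 percentage points,
    but sicker patients are treated far more often (confounding by indication).
    """
    cells = [
        # (sick, treated, n_recovered, n_not_recovered)
        (0, 1, 18, 2),    # healthier, treated: 90% recover
        (0, 0, 64, 16),   # healthier, untreated: 80% recover
        (1, 1, 40, 40),   # sicker, treated: 50% recover
        (1, 0, 8, 12),    # sicker, untreated: 40% recover
    ]
    cohort = []
    for sick, treated, n_yes, n_no in cells:
        cohort += [(sick, treated, 1)] * n_yes
        cohort += [(sick, treated, 0)] * n_no
    return cohort


def naive_difference(cohort):
    """Crude difference in recovery rates, ignoring the covariate."""
    treated = [y for _, t, y in cohort if t == 1]
    untreated = [y for _, t, y in cohort if t == 0]
    return sum(treated) / len(treated) - sum(untreated) / len(untreated)


def propensity_scores(cohort):
    """Estimate P(treated | sick) as the treated fraction in each stratum."""
    counts = defaultdict(lambda: [0, 0])  # sick -> [n_treated, n_total]
    for sick, t, _ in cohort:
        counts[sick][0] += t
        counts[sick][1] += 1
    return {sick: n_t / n for sick, (n_t, n) in counts.items()}


def ipw_effect(cohort):
    """Inverse-probability-weighted estimate of the average treatment effect.

    Assumes every stratum contains both treated and untreated patients
    (0 < propensity < 1); otherwise the weights are undefined.
    """
    e = propensity_scores(cohort)
    n = len(cohort)
    treated_mean = sum(t * y / e[s] for s, t, y in cohort) / n
    untreated_mean = sum((1 - t) * y / (1 - e[s]) for s, t, y in cohort) / n
    return treated_mean - untreated_mean


if __name__ == "__main__":
    cohort = build_cohort()
    print(round(naive_difference(cohort), 2))  # -0.14: treatment looks harmful
    print(round(ipw_effect(cohort), 2))        # 0.1: the built-in benefit
```

Because the naive comparison lumps sicker, mostly treated patients together with healthier, mostly untreated ones, it makes the treatment look harmful; weighting by the inverse propensity rebalances the groups and recovers the 10-point benefit built into each stratum of this constructed example.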

Ultimately, such methods can serve two purposes: to create evidence more relevant to practice and to translate the methods into protocols that rapidly evaluate data to provide real-time decision support. Consider the needs of a patient with comorbid atrial fibrillation, diabetes, and hypertension. If each disease were considered in isolation, a thiazide might be prescribed for hypertension, an angiotensin receptor blocker for diabetes, and a beta-blocker for atrial fibrillation. Yet in considering the whole patient, clinicians necessarily make nuanced decisions (i.e., weighing the relative benefits of taking numerous medications against simplifying the treatment regimen, reducing cost to the patient, minimizing drug-drug interactions and adverse events, etc.) that are unlikely ever to be based on expert guidelines. A logical extension of the methodological work described above is to apply such methods to the rapid processing of longitudinal, population-based EHR data to facilitate clinical decision making given a patient’s profile and preferences.
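One very simple way to picture processing population-based EHR data for a given patient profile is a lookup that summarizes outcomes by treatment regimen among past patients with the same set of conditions. The Python sketch below is illustrative only and rests on stated assumptions: the record format (`conditions`, `regimen`, `good_outcome`), the function name, and the `min_n` sparsity threshold are all hypothetical, and matching is exact on the condition set. It ignores confounding entirely, so in practice it would need to be combined with adjustment methods such as those discussed above.

```python
from collections import defaultdict


def outcomes_for_similar_patients(records, profile, min_n=2):
    """Return {regimen: share of good outcomes} among past patients whose
    condition set exactly matches `profile`.

    Assumed (hypothetical) record format: dicts with keys
    'conditions' (frozenset of str), 'regimen' (str), 'good_outcome' (bool).
    Regimens seen in fewer than `min_n` matching patients are omitted as too sparse.
    """
    tally = defaultdict(lambda: [0, 0])  # regimen -> [good outcomes, matching patients]
    for record in records:
        if record["conditions"] == profile:
            counts = tally[record["regimen"]]
            counts[0] += int(record["good_outcome"])
            counts[1] += 1
    return {regimen: good / n for regimen, (good, n) in tally.items() if n >= min_n}


if __name__ == "__main__":
    profile = frozenset({"atrial_fibrillation", "diabetes", "hypertension"})
    records = [  # hypothetical records for illustration only
        {"conditions": profile, "regimen": "regimen_A", "good_outcome": True},
        {"conditions": profile, "regimen": "regimen_A", "good_outcome": False},
        {"conditions": profile, "regimen": "regimen_B", "good_outcome": True},
        {"conditions": profile, "regimen": "regimen_B", "good_outcome": True},
        {"conditions": profile, "regimen": "regimen_C", "good_outcome": True},
        {"conditions": frozenset({"hypertension"}), "regimen": "regimen_A", "good_outcome": True},
    ]
    print(outcomes_for_similar_patients(records, profile))
```

Here `regimen_C` is dropped because only one matching patient received it, and the hypertension-only record is ignored because its condition set differs from the profile.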

The above methods could be valuable both in providing decision support and in identifying when a decision is completely uncertain. Decision uncertainty has been and will continue to be ever-present. There is no absolute means of closing the loop so that every decision is made with certainty. New questions will continue to arise at an ever faster pace, and these questions will always change and evolve. Meaningful advances will come from making questions explicit sooner and from providing answers close to when the questions arise. An everyday solution will be required to meet the perpetual growth in demand for new knowledge in medicine (Stewart et al. 2007 [in press]). One such solution may be to use the power of the EHR and the new decision methods described above to identify conditions in which clinical equipoise between two or more options exists. Embedding protocols that randomize the decision under this condition provides a means to conduct RCTs that fundamentally fill the inferential gap.
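The idea of randomizing only when clinical equipoise is detected can be sketched as a small decision rule. This Python example is an illustration under loud assumptions, not a protocol from the source: the option labels, the benefit estimates, the function name, and the fixed `margin` that defines equipoise are all hypothetical, and a real embedded trial would also involve ethics (IRB) oversight and a prespecified analysis plan. In this sketch, any option whose estimated benefit falls within `margin` of the best estimate is treated as equivalent; if more than one qualifies, the choice is randomized and flagged so it can later be analyzed as trial data.

```python
import random


def decide_or_randomize(estimates, margin, rng):
    """Recommend one option, or randomize among options in equipoise.

    estimates: hypothetical mapping option -> estimated benefit for this patient
    margin: hypothetical threshold; any option whose estimate lies within
            `margin` of the best estimate is treated as being in equipoise
    rng: a random.Random instance, so assignments are reproducible and auditable
    Returns (chosen_option, was_randomized).
    """
    best = max(estimates.values())
    candidates = sorted(opt for opt, benefit in estimates.items() if best - benefit <= margin)
    if len(candidates) == 1:
        return candidates[0], False       # a single clearly preferred option
    return rng.choice(candidates), True   # equipoise: randomize and flag for analysis


if __name__ == "__main__":
    rng = random.Random(2024)
    trial_log = []  # hypothetical record of randomized assignments
    estimates = {"option_A": 0.62, "option_B": 0.60, "option_C": 0.45}
    for patient_id in range(5):
        choice, randomized = decide_or_randomize(estimates, margin=0.05, rng=rng)
        if randomized:
            trial_log.append((patient_id, choice))
    print(trial_log)
```

Using a seeded `random.Random` instance rather than the module-level functions keeps assignments reproducible, which matters if the randomized decisions are later analyzed as a trial; sorting the candidates makes the result independent of dictionary order.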

It is especially important to underscore that to be useful, functional, exportable, and scalable, solutions need to be developed that serve one critical purpose: to create value. Without this ultimate intention, physicians will be reluctant to adopt new methods. Offering improvements in quality as an incentive to adopt new ways of practicing medicine is simply not enough to change behavior. Sophisticated clinical decision support (CDS) will embody many such solutions, as long as these solutions also solve the workflow challenges such processes raise. A new term may be needed to avoid confusion and to distinguish the evolution of CDS from its rudimentary roots and from what exists today (e.g., poorly timed binary alerts, drug interaction checking, simple default orders derived from practice guidelines, access to electronic textbooks and literature). The future of CDS lies in developing real-time processes that directly influence clinical care decisions at exactly the right time (i.e., sensing when it is appropriate to present decision options). EHR platforms alone are unlikely to be capable of managing such processes; they are designed for a different purpose. Rather, these processes are likely to involve sophisticated “machines” that are external to the EHR but interact with it. “Sophisticated” from today’s vantage point means reducing variation in the numerous steps we have previously described that influence a clinical decision, evaluating patient data (i.e., clinical data and preferences) in relation to codified evidence-based rules, evaluating data on other patients in this context, presenting concrete support (i.e., not advice but an actionable decision that can be modified), and ensuring that the whole process increases the overall productivity and efficiency of care.

We are at a seminal point in the history of medical care, as important as the changes that took place at the turn of the last century. Those changes produced a century of success, and that success has created today’s demand for new solutions to the problems it has generated. We believe that new opportunities are emerging that will change how evidence is created, accessed, and ultimately used in the care of patients. By leveraging the EHR, providers and others who care for patients will be able to move beyond dependence on “old media” forms of knowledge creation to practice real-time, patient-centered care driven by the creation of value. Such a system will not only close the gap in practice between what we know works and what we do, but also allow new means of data creation in situations of clinical equipoise.

STANDARDS OF EVIDENCE

Steven Pearson, M.D.

America’s Health Insurance Plans

It is easy to forget that not long ago, evidence-based medicine often meant that if there was no good evidence of harm, the physician was allowed to proceed with the treatment. Now, however, it is clear that we are in a new era in which evidence-based medicine is being used to address an increasing number of clinical questions, and answering these questions requires that some standard of evidence exist that is “beyond a reasonable doubt.” Evidence can be applied to make different types of decisions regarding the use of new and existing technologies in health care, including decisions about appropriate study designs and safeguards for new treatments, clinical decisions about the treatment of individual patients, and population-level decisions about insurance coverage. Coverage decisions are particularly sensitive to a narrowing of the research-practice divide, and this discussion focuses on how evidence standards currently operate in coverage decisions for both diagnostic and therapeutic technologies. The quicker, practice-based research opportunities inherent in a learning healthcare system may affect evidence standards in unanticipated ways.

In general, following approval, technologies are assessed on how they measure up against various criteria or evidentiary hurdles. Possible hurdles for new technologies include efficacy, effectiveness versus placebo, comparative effectiveness, and perhaps even cost-effectiveness. For example, the Technology Evaluation Center (TEC), established by the Blue Cross Blue Shield Association (BCBSA), evaluates the scientific evidence for technologies to determine, among other things, whether a technology provides substantial benefits to important health outcomes or whether a new technology is safer or more beneficial than existing technologies. To answer these questions, technology assessment organizations gather, examine, and synthesize bodies of evidence to determine the strength of evidence.

Strength of evidence, as a concept, is very complicated and not an easy construct to communicate to the population at large. The general concept is that individual studies are assessed within the context of a standing hierarchy of evidence; issues that often get less visibility include the trade-offs between benefit and risk. However, in considering the strength of evidence, we usually talk about the net health benefit for a patient, and this often depends on the magnitude and certainty of what we know about both the risks and the benefits. The U.S. Preventive Services Task Force (USPSTF) was uniquely visible in advancing the idea of considering not just the quality of individual studies but how the strength of evidence can be characterized for an entire body of evidence, whether in terms of consistency across different studies or the completeness of the conceptual chain. These concepts have been very important in advancing how groups use evidence in making decisions.

This idea of the strength of evidence, however, is still different from a standard of evidence. For a standard of evidence, we must be able to give the evidence to a decision-making body that can then act on it. As an example of how difficult this can be, TEC has five criteria, and careful examination of them indicates that much is left unresolved that might have important implications for coverage decisions. What satisfies TEC criterion number three, “the technology must improve the net health outcome,” might vary depending on the nature of the intervention or the circumstances of its application. Moreover, TEC evaluates only select technologies, and its technology assessments only inform decision-making bodies. When an evaluation goes to a decision-making group charged with deciding whether something is medically necessary, it is often difficult to know when the evidence will be sufficient to make a decision. In part this is because it is difficult to set a uniform standard of evidence. Specifying, for instance, a requirement such as three RCTs with some very specific outcomes clearly would not allow the flexibility needed to deal with the variety of issues and types of data that confront such decision-making bodies.

Although we have made much progress in advancing our thinking about how to understand the strength of a body of evidence, advancing our understanding of how to make a decision based on the strength of evidence is another issue. There are many factors that could modulate a coverage decision, including existence of alternate treatments, severity of disease, and cost. How we might account for these factors and what formal structure might be needed to integrate such “contextual considerations” are concepts that are just now beginning to evolve.

Part of the problem with evidence-based coverage decisions is that evidence itself is lacking. Many of the people who sit on these decision-making bodies will agree that, most of the time, the evidence is simply not adequate for the decisions they need to make. Part of the difficulty is the lack of appropriate studies, but for procedures and devices the lack of evidence is also related to the fluid nature of technology development and of our evidence base. For example, devices are often rapidly upgraded and improved, and procedures and practitioner competency with those procedures evolve over time. Evidence developed shortly after a product is developed is thus a snapshot in time, and it is difficult to know what such evidence means for the effectiveness of the product over the next several years. Likewise, traditional evidence hierarchies are framed around therapeutics and fit diagnostics poorly. Many new diagnostics are tests of higher accuracy or additions to an existing diagnostic process. In the latter case, evidence will need to address not just for whom these tests are effective but when in the workup they should be used. Other emerging technologies, such as genetic tests for disease counseling, pharmacogenomic assays, and prognostic studies, pose new challenges to our existing evidentiary approach. Collectively, these technologies raise several questions for our current approach to standards of evidence. As our understanding of heterogeneity of treatment effects expands, how might new modes of diagnosis change therapeutic decision making? As we move to a system that will increasingly use interventions that evolve rapidly, how do we establish standards of evidence that are meaningful for current clinical practice? As we begin to use data generated at the point of care to develop needed evidence, how might the standards of evidence for their evaluation change?

On top of all these uncertainties and challenges, our current approach to evidentiary assessment and technology appraisal is not well defined beyond taking systematized analyses of existing evidence, giving them to a group, and asking it to decide. Within the field, there are many discussions about heterogeneity, meta-analyses, propensity scores, and so forth, but very little is known about how this information is or should be used by these decision-making bodies and what the process should be.

Consider an alternative approach to standards of evidence and decision making as we move toward a learning healthcare system: think of a dial that can move along a spectrum of evidence, including evidence that is persuasive, promising, or preliminary. Additional circumstances can also influence decision making, such as the severity of disease and whether the intervention might be considered a patient’s last chance. In that case, does it mean that we need only preliminary evidence of net health benefit to go forward with a coverage decision? What if there are many treatment alternatives? Should the bar for evidence rise? These are the types of issues we need to consider. Decision makers in private health plans wrestle with this problem constantly while trying to establish a consistent and transparent approach to evidence.

So what happens to the evidence bar when we move toward a learning healthcare system? Coverage with evidence development (CED) was discussed in an earlier chapter as a move toward what might be envisioned as a learning healthcare system. In the case of CED, consider what might happen to the evidence bar in addressing the many important questions it raises. If we are trying to foster promising innovation, will we drop the evidence bar for coverage? What do we mean by “promising”? Do we mean that a technology is safe and effective within certain populations, but we are now considering an expansion of its use? Is it “promising” because we know it is safe but are not so sure that it is effective? Or is it effective, but we don’t know whether the effectiveness is durable over the long term? Do these questions all reflect the same vision of “promising” evidence?

Is CED a new hurdle? Does it lower the one behind it? Does it introduce the opportunity to bring in new hurdles, such as comparative effectiveness and cost-effectiveness, that we have not had before? Ultimately, CED may be used to support studies whose results will enhance the strength of evidence to meet existing standards, which is certainly part of the vision of CED. But might it also lead to a shift to a lower initial standard of evidence for coverage decisions? As we know, when CED policy became known to industry, many groups approached CMS with evidence that was not “promising” but perhaps even “poor,” asking for coverage in return for the establishment of a registry from which we would all “learn.” Resolving these issues is an active area of policy discussion, with individuals at CMS and elsewhere still very early on the learning curve, and it is vital to improving approaches as we develop and advance our vision of a learning healthcare system.

IMPLICATIONS FOR ACCELERATING INNOVATION

Robert Galvin, M.D.

General Electric

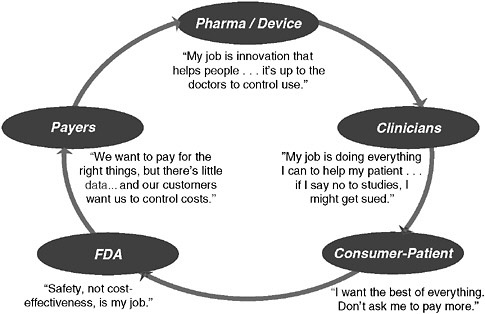

Technological innovations have substantially improved our nation’s health, but they also account for the largest percentage of the cost increases that continue to strain the U.S. healthcare system (Newhouse 1993). The process of deciding whether to approve and then provide insurance coverage for these innovations has represented a “push-pull” between healthcare managers (representing healthcare insurers and public payers trying to control cost increases) and manufacturers, including pharmaceutical companies, biotech startups, and others looking for a return on their investment and a predictable way to allocate new research resources. Employers, providers, and consumers also figure into the process, and the sum of all these stakeholders and their self-interests has, unfortunately, led to a cycle of “unaccountability” and a system that everyone agrees doesn’t work well (Figure 3-4). Over the past several years, a single-minded concentration on the rising costs of health care has gradually been evolving into a focus on the “value” of care delivered. In the context of assessing new technologies, evaluation has begun to shift to determining what outcomes are produced by the additional expense of a new innovation. A notable example of this approach is Cutler’s (Cutler et al. 2006) examination of cardiac innovations. In weighing technology costs and outcomes, he concluded that several years of additional life were the payback for the additional expense of these new interventions, at a cost that has been considered acceptable in our health system.

FIGURE 3-4 The cycle of unaccountability.

However, for employers and other payers, who look at value a little differently (i.e., what is the best quality achievable at the most controlled cost?), the situation is complex. While applauding innovations that add value, such as those examined by Cutler, they remain acutely aware of innovations that either didn’t add much incremental value or offered some improvement for specific circumstances but ended up increasing costs at an unacceptable rate due to overuse. A good example of the latter is the case of COX-2 inhibitors for joint inflammation. This modification of nonsteroidal anti-inflammatory drugs (NSAIDs) represented a significant advance for the 3-5 percent of the population who have serious gastric side effects from first-generation NSAIDs; however, within two years of their release, more than 50 percent of GE’s population on NSAIDs were using COX-2 inhibitors, an overuse of technology that has cost GE tens of millions of dollars in unnecessary spending. The employers’ dilemma is how to get breakthrough innovations to populations as fast as possible, used by just those who will truly benefit, without overpaying for innovations whose costs exceed their benefits.

How Coverage Decisions Work Today

Although much recent attention has focused on Food and Drug Administration (FDA) approval, employers are affected most directly by decisions about coverage and reimbursement. Approval and coverage are linked, but it is often not appreciated that they are distinctly different processes: FDA approval does not necessarily translate into insurance coverage. Coverage decisions are made by payers, most often the Centers for Medicare and Medicaid Services (CMS) and health insurance companies. CMS makes national coverage decisions in a minority of cases and otherwise delegates decision making to its regional carriers, largely Blue Cross insurance plans. Final coverage decisions vary among these Blue Cross plans and other commercial health insurers, but in general they follow a common process.

The method developed by the Technology Evaluation Center (TEC), which is sponsored by the Blue Cross Blue Shield Association and relies on a committee of industry experts, is typical. The TEC decides which new services to review, gathers all available literature, and evaluates the evidence against five criteria (BlueCross BlueShield Association 2007). There is a clear bias toward large, randomized, controlled trials. If the evidence is deemed insufficient to meet the TEC criteria, a new product is designated “experimental.” This has significant implications for technology developers, because payers, whose policy is to reimburse only for “medically necessary” services, uniformly decline to pay for anything considered experimental.

This process has many positives, particularly its insistence on double blinding and randomization, which minimizes “false positives” (i.e., interventions that appear to work but turn out not to). Certain innovations carry significant potential morbidity and/or very high cost (e.g., some pharmaceuticals, or autologous bone marrow transplants for breast cancer), and a high bar for coverage protects both patients and payers. However, the process also has several negatives. It is slow in a fast-moving world of innovation, and new services that greatly help patients can remain unavailable for years after the FDA has approved them. Large randomized controlled trials (RCTs) are often unavailable or infeasible, and they take a significant amount of time to complete. Also, RCTs are performed almost exclusively in academic medical centers, and their results frequently cannot be extrapolated to community-based medical care, where the majority of patients receive their care. Overall, the process is better at withholding payment for unproven innovations than it is at providing access to, and encouraging, promising new breakthroughs.

The coverage history of digital mammography illustrates these trade-offs. Digitizing images of the breast leads to improvements in sensitivity and specificity in the diagnosis of breast cancer, which most radiologists and oncologists believe translates into improved treatment of the disease. Although the FDA approved this innovation in 2000, a 2002 TEC report deemed it “experimental” because of insufficient evidence. Four years elapsed before a subsequent TEC review recommended coverage, and very soon afterward all payers reimbursed the studies. In an interesting twist, CMS approved reimbursement in 2001, but at the same rate as film-based mammography, a position that engendered controversy among radiologists and manufacturers. While the goal of not paying for an unproven service was met, the four years between the two TEC reviews did not bring any improvement in this “promising” technology; they simply marked the time needed to develop and execute additional clinical studies. The current process therefore falls short on one part of the employer dilemma: speeding the access of their populations to valuable new innovations.

What is particularly interesting in the context of today’s discussion is that the process just described has been the only accepted one. Recognizing that technology assessment and coverage determination themselves needed innovation, CMS developed a new process called Coverage with Evidence Development, or CED (Tunis and Pearson 2006). Rather than labeling promising technologies that have not accumulated sufficient patient experience “experimental” and leaving it to the manufacturer to gather more evidence, CED combines payment for the service in a selected population with evidence development. Evidence can be developed through submission of data to a registry or through practical clinical trials, and the end point is a definitive coverage decision. This novel approach addresses three issues simultaneously: by covering the service, it gives the patients most in need access; by developing information on a large population, it allows future coverage to be tailored to just those subpopulations who truly benefit, which can mitigate overuse; and by paying for the service, it lets the manufacturer collect revenue immediately and get a more definitive answer on coverage sooner, a potential mechanism for accelerating innovation.

To date, CMS has applied CED to several interventions, and its guidelines were recently updated with more detailed specifications on the process and on the selection of technologies for CED. Given the pace of innovation, however, it is not reasonable to expect CMS to apply this approach in sufficient volume to meet current needs. Because one-half of healthcare expenditures come from the private sector in the form of employer-based health benefits, it makes sense for employers to play a role in finding a way out of this cycle of unaccountability. On this basis, GE, in its role as a purchaser, has launched a pilot project to apply CED in the private sector.

Private Sector CED

General Electric is working with UnitedHealthcare, a health insurer, and InSightec, an Israel-based manufacturer of healthcare equipment, to apply the CED approach to a new, promising treatment for uterine fibroids. The treatment in question is magnetic resonance-guided focused ultrasound (MRgFUS), in which ultrasound beams directed by magnetic resonance imaging are focused on the fibroids and destroy them (Fennessy and Tempany 2005). The condition is currently treated either by surgery (hysterectomy or myomectomy) or by uterine artery embolization. The promise of the new treatment is that it is completely noninvasive and greatly reduces the time away from work that accompanies surgery.

The intervention has received FDA pre-market approval on the basis of treatment in approximately 500 women (FDA 2004), but both CMS and TEC deemed the studies too small to warrant coverage, and the service has been labeled “experimental” (TEC Assessment Program October 2005). As a result, no major insurer currently pays for the treatment. Both CMS and TEC advised InSightec to expand its studies to include more subjects and to measure whether fibroids recurred. InSightec is a small company that has had trouble organizing further research, both because of the expense and because gynecologists, the physicians who generally treat fibroids, have been uninterested in referring patients to radiologists for treatment. The company predicts that it will likely be three to five years before TEC performs another review.

All stakeholders involved in this project are interested in finding a noninvasive treatment for uterine fibroids. Women would certainly benefit from a treatment with less morbidity and a shorter recovery time. There are also economic benefits for the three principals in the CED project: GE hopes to pay less for treatment and have employees out of work for a shorter time; UnitedHealthcare would also pay less for treatment, and it would have the opportunity to design a study that could help target future coverage to the subpopulations where the benefit is greatest; and InSightec would have the opportunity to develop important evidence about treatment effectiveness while receiving a return on its initial investment in the product.

The parties agreed to move forward and patterned their project on the Medicare CED model, with clearly identified roles. General Electric is the project sponsor and facilitator, with responsibility for agenda setting, meeting planning, and driving toward issue resolution. As a self-insured purchaser, GE will pay for the procedure for its own employees. UnitedHealthcare has several tasks: (1) market the treatment option to its members; (2) establish codes and payment rates and contract with providers performing the service; (3) extend coverage to its insured members and its own employees in addition to its self-funded members; and (4) co-develop the research protocol with InSightec, including data collection protocols and parameters around study end points and future coverage decisions. Finally, as the manufacturer, InSightec is co-developing the research protocol, paying for data collection and analysis (including patient surveys), and soliciting providers to participate in the project.

Progress to Date and Major Challenges

The initiative has progressed more slowly than originally planned, but data collection is set to begin before the end of 2006. The number and intensity of the challenges have exceeded the principals’ expectations, and addressing them has frankly required more time and resources than anyone predicted. However, the three companies recognize the importance of creating alternatives to the current model of coverage determination, and their commitment to a positive outcome is, if anything, stronger than it was at the outset of the project. Three challenges stand out.

Study Design and Decision End Points

From a technical perspective, this area has presented some very tough challenges. There is little information or experience about how to use data collected from nonrandomized studies in coverage decisions. The RCT has so dominated decision making in the public and private sectors that little is known about the risks or benefits of relying on case-control studies or registry data. What level of certainty is required to approve coverage? If a treatment is covered and turns out to be less beneficial than thought, should this be viewed as a faulty coverage process that wasted money or as a “reasonable investment” that didn’t pay off? Who is the “customer” in the coverage determination process: the payers, the innovators, or the patients? If it is the patients, how should their voice be integrated into the process?

Another set of issues has to do with fitting the coverage decision approach to the new technology in question. It is likely that some innovations should be subject to the current TEC-like approach while others would benefit from a CED-type model. On what criteria should this decision be made and who should be the decision maker?

Engaging Employers

Private sector expenditures, whether through fully insured or self-funded lines of business, ultimately derive from employers (and their workers). Although employers have talked about value rather than cost containment over the past five years, it remains to be seen how many of them will be willing to participate in CED. Employers have traditionally watched “detailing” by pharmaceutical sales representatives and direct-to-consumer advertising lead to costly overuse, and they may be reluctant to pay even more for technologies that would otherwise not be covered. The project is just reaching the stage at which employers are being approached to participate, so it is too early to tell how they will react. Their participation may depend in part on how CED is framed. If the benefit to employees is clearly described and there is a business case to offer employers (e.g., that controlled accumulation of evidence could better tailor and limit future use of the innovation), then uptake may be satisfactory. Either way, employers’ willingness to participate is critical to the CED approach.

Culture of Distrust

The third, and most surprising, challenge is addressing the degree of distrust between payers and innovators. Numerous difficult issues arise in developing a CED program (e.g., pricing, study end points, binding or nonbinding coverage decisions), and as in any negotiation, interpersonal relationships can be major factors in finding a compromise. Partly from simply not knowing each other and partly from suspicion about each other’s motives, the parties’ lack of trust has slowed the project. Manufacturers believe that payers want to delay coverage to enhance insurance margins, and payers believe that manufacturers want to speed coverage to improve their own profit statements. Both sides have evidence to support their views, but each is far more committed to patient welfare than the other realizes. If CED or other innovations in coverage determination are going to expand, partnership and trust are key. The system would benefit from more opportunities for these stakeholders to meet and develop positive personal and institutional relationships.