4

Model Evaluation

INTRODUCTION

How does one judge whether a model or a set of models and their results are adequate for supporting regulatory decision making? The essence of the problem is whether the behavior of a model matches the behavior of the (real) system sufficiently for the regulatory context. This issue has long been a matter of great interest, marked by many papers over the past several decades, but especially and distinctively by Caswell (1976) who observed that models are objects designed to fulfill clearly expressed tasks, just as hammers, screwdrivers, and other tools have been designed to serve identified or stated purposes. Although “model validation” became a common term for judging model performance, it has been argued persuasively (e.g., Oreskes et al. 1994) that complex computational models can never be truly validated, only “invalidated.” The contemporary phrase for what one seeks to achieve in resolving model performance with observation is “evaluation” (Oreskes 1998). Although it might seem strange for such a label to be important, earlier terms used for describing the process of judging model performance have provoked rather vigorous debate, during which the word “validation” was first to be replaced by “history matching” (Konikow and Bredehoeft 1992) and later by the term “quality assurance” (Beck et al. 1997; Beck and Chen 2000). Some of these terms imply, innately or by their de facto use,

a one-time approval step. Evaluation emerged from this debate as the most appropriate descriptor and is characteristic of a life-cycle process.

Two decades ago, model “validation” (as it was referred to then) was defined as the assessment of a model’s predictive performance against a second set of (independent) field data given model parameter (coefficient) values identified or calibrated from a first set of data. In this restricted sense, “validation” is still a part of the common vocabulary of model builders.

The difficulty in finding a label for the process of judging whether a model is adequate and reliable for its task is described as follows. The terms “validation” and “assurance” prejudice expectations of the outcome of the procedure toward only the positive—the model is valid or its quality is assured—whereas evaluation is neutral in what might be expected of the outcome. Because awareness of environmental regulatory models has become so widespread in a more scientifically aware audience of stakeholders and the public, words used within the scientific enterprise can have meanings that are misleading in contexts outside the confines of the laboratory world. The public knows well that supposedly authoritative scientists can have diametrically opposed views on the benefits of proposed measures to protect the environment.

When there is great uncertainty surrounding the science base of an issue, groups of stakeholders within society can take this issue as a license to assert utter confidence in their respective versions of the science, each of which contradicts those of the other groups. Great uncertainty can lead paradoxically to a situation of “contradictory certainties” (Thompson et al. 1986), or at least to a plurality of legitimate perspectives on the given issue, with each such perspective buttressed by a model proclaimed to be valid. Those developing models have found this situation disquieting (Bredehoeft and Konikow 1993) because, even though science thrives on the competition of ideas, when two different models yield clearly contradictory results, as a matter of logic, they cannot both be true. It matters greatly how science and society communicate with each other (Nowotny et al. 2001); hence, in part, scientists shunned the word “validation” in judging model performance.

Today, evaluation comprises more than merely a test of whether history has been matched. Evaluation should not be something of an afterthought but, indeed, a process encompassing the entire life cycle of the task. Furthermore, for models used in environmental regulatory activities, the model builder is not the only archetypal interested party holding a stake in the process but is also one among several key players,

including the model user, the decision maker or regulator, the regulated parties, and the affected members of the general public or the representative of the nongovernmental organization. Evaluation, in short, is an altogether much broader, more comprehensive affair than validation and encompasses more elements than simply the matching of observations to results.

This is not merely a question of form, however. In this chapter, where the committee describes the process of model evaluation, it adopts the perspective, discussed in Chapter 1 of this report, that a model is a “tool” designed to fulfill a task—providing scientific and technical support in the regulatory decision-making process—not a “truth-generating machine” (Janssen and Rotmans 1995; Beck et al. 1997). Furthermore, in sympathy with the Zeitgeist of contemporary environmental policy making, where the style of decision making has moved from that of a command-and-control technocracy to something of a more participatory, more open democracy (Darier et al. 1999), we must address the changing perception of what it takes to trust a model. This not only involves the elements of model evaluation but also who will have a legitimate right to say whether they can trust the model and the decisions emanating from its application. Achieving trust in the model among those stakeholders in the regulatory process is an objective to be pursued throughout the life of a model, from concept to application.

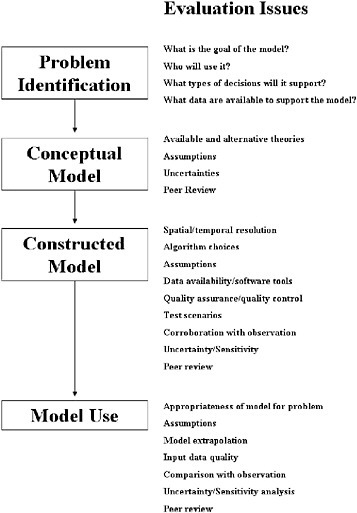

The committee’s goal in this chapter is to articulate the process of model evaluation used to inform regulation and policy making. We cover three key issues: the essential objectives for model evaluation; the elements of model evaluation, and the management and documentation of the evaluation process. To discuss the elements of model evaluation in more detail, we characterize the life stages of a model and the application of the elements of model evaluation at these different stages. We organized the discussion around four stages in the life cycle of a regulatory model—problem identification, conceptual model development, model construction, and model application (see Figure 4-1). The life-cycle concept broadens the view of what modeling entails and may strengthen the confidence that users have in models. Although this perspective is somewhat novel, the committee observed some existing and informative examples in which model evaluations effectively tracked the life cycle of a model. These examples are discussed later in this chapter. We recognize that reducing a model’s life cycle to four stages is a simplified view, especially for models with long lives that go through

FIGURE 4-1 Stages of a model’s life cycle.

important changes from version to version. The MOBILE model for estimating atmospheric vehicle emissions, the UAM (urban airshed model) air quality model, and the QUAL2 water quality models are examples of models that have had multiple versions and major scientific modifications and extensions in over two decades of their existence (Scheffe and Morris 1993; Barnwell et al. 2004; EPA 1999c). The perspective of a four-stage life cycle is also simplified from the stages of model development discussed in Chapter 3. However, simplifying a model’s life cycle makes discussion of model evaluation more tractable.

Historically, the management of model quality has been inconsistent, due in part to the failure to recognize the impact of errors and omissions in the early stages of the life cycle of the model. At EPA (and other organizations), the model evaluation process traditionally has only begun at the model construction and model application stages. Yet formulating the wrong model questions or even confronting the right questions with the wrong conceptual model will result in serious quality problems in the use of a model. Limited empirical evidence in the groundwater modeling field suggests that 20-30% of model analyses confront new data that render the prevailing conceptual model invalid (Bredehoeft 2005). Such quality issues are difficult to discover and even more difficult to resolve (if discovered) when model evaluation applies only at the late stages of the model life cycle.

ESSENTIAL OBJECTIVES FOR MODEL EVALUATION

Fundamental Questions To Be Addressed

In the transformation from simple “validation” to the more extensive process of model evaluation, it is important to identify the questions that are confronted in model evaluation. When viewing model evaluation as an ongoing process, several key questions emerge. Beck (2002b) suggests the following formulation:

-

Is the model based on generally accepted science and computational methods?

-

Does it work, that is, does it fulfill its designated task or serve its intended purpose?

-

Does its behavior approximate that observed in the system being modeled?

Responses to such questions will emerge and develop at various stages of model development and application, from the task description through the construction of the conceptual and computational models and eventually to the applications. The committee believes that answering these questions requires careful assessment of information obtained at each stage of a model’s life cycle.

Striving for Parsimony and Transparency

In the development and use of models, parsimony refers to the preference for the least complicated explanation for an observation. Transparency refers to the need for stakeholders and members of the public to comprehend the essential workings of the model and its outputs. Parsimony derives from Occam’s (or Ockham’s) razor attributed to the 14th century logician William of Occam, stating that “entities should not be multiplied unnecessarily.” Parsimony does not justify simplicity for its own sake. It instead demands that a model capture all essential processes for the system under consideration—but no more. It requires that models meet the difficult goal of being accurate representations of the system of interest while being reproducible, transparent, and useful for the regulatory decision at hand.

The need to move beyond simple validation exercises to a more extensive model evaluation leads to the need for EPA to explicitly assess the trade-offs that affect parsimony, transparency, and other considerations in the process of developing and applying models. These trade-offs are important to modelers, regulators, and stakeholders. The committee has identified three fundamental goals to be considered in making trade-offs, which are further discussed in Box 4-1:

-

The need to get the correct answer – This goal refers to the need to make a model capable of generating accurate as well as consistent and reproducible projections of future behavior or consistent assessments of current relationships.

-

The need to get the correct answer for the correct reason – This goal refers to the reproduction of the spatial and temporal detail of what scientists consider to be the essence of the system’s workings. Simple process and empirical models can be “trained” to mimic a system of interest for an initial set of observations, but if the model fails to capture all the important system processes, the model could fail to behave correctly for an observation outside the limited range of “training” observations. Such failure tends to drive models to be more detailed.

-

Transparency – This goal refers to the comprehension of the essential workings of the model by peer reviewers as well as informed but scientifically lay stakeholders and members of the public. This need drives models to be less detailed. Transparency can also been enhanced

|

BOX 4-1 Attributes That Foster Accuracy, Precision, Parsimony, and Transparency in Models Gets the Correct Result

Gets the Correct Result for the Right Reason

Transparency

|

|

-

by ensuring that reviewers, stakeholders and the public comprehend the processes followed in developing, evaluating, and applying a model, even if they do not fully understand the basic science behind the models.

These three goals can result in competing objectives in model development and application. For example, if the primary task was to use a model as a repository of knowledge, its design might place priority on getting sufficient detail to ensure that the result is correct for the correct reasons. On the other hand, to meet the task of the model as a communication device, the optimal model would minimize detail to ensure transparency. It is also of interest to consider when a regulatory task would be best served by having a model err on the side of getting accurate results but not including sufficient detail to match scientific understanding. For example, when an exposure model can accurately define the relationship between a chemical release to surface water based on a detailed mass balance, should the regulator consider an empirical model that has the same level of accuracy? Here, parsimony might give preference to the simpler empirical model, whereas transparency is best served by the mass-balance model that allows the model user to see how the release is transformed into a concentration. Moreover, in the regulatory context, the more-detailed model addresses the need to reveal to decision makers and stakeholders how different environmental processes can affect the link from emissions to concentration. Nevertheless, if the simpler empirical model provides both accurate and consistent results, it should have a

role in the decision process even if that role is to provide complementary support and evaluation for the more-detailed model.

The committee finds that modelers may often err on the side of making models more detailed than necessary. The reasons for the increasing complexity are varied, but one regulatory modeler mentioned that it is not only modelers that strive to building a more complex model but also stakeholders who wish to ensure that their issue or concerns are represented in the model, even if addressing such concerns does not have an impact on model results (A. Gilliland, Model Evaluation and Applications Branch, Office of Research and Development, EPA, personal commun., May 19, 2006). Increasing the refinement of models introduces increasing model parameters with uncertain values while decreasing the model transparency to users and reviewers. Here, the problem is a model that accrues significant uncertainties when it contains more parameters than can be calibrated with observations available to the model evaluation process. In spite of the drive to make their models more detailed, modelers often prefer to omit capabilities that do not substantially improve model performance—that is, its precision and accuracy for addressing a specific regulatory question.

ELEMENTS OF MODEL EVALUATION

The evidence used to judge the adequacy of a model for decision-making purposes comes from a variety of sources. They include studies that compare model results with known test cases or observations, comments from the peer review process, and the list of a model’s major assumptions. Box 4-2 lists those and other elements of model evaluation. Many of the elements might be repeated, eliminated, or added to the evaluation as a model’s life cycle moves from problem identification to model application stages. For example, peer review at the model development stage might focus on the translation of theory into mathematical algorithms and numerical solutions, whereas peer review at the model application stage might focus on the adequacy of the input parameters, model execution, and stakeholder involvement. Recognizing that model evaluation may occur separately during the early stages of a model’s life, as well as again during subsequent applications, helps to address issues that might arise when a model is applied by different groups and for different conditions than those for which the model was developed. The committee notes that, whereas the elements of model evaluation and the

questions to be answered throughout the evaluation process may be generic in nature, what comprises a high-quality evaluation of a model will be both task- and case-specific. As described in Chapter 2, the use of models in environmental regulatory activities varies widely both in the effort and the consequences of the regulatory efforts it supports. Thus, the model evaluation process and the resources devoted to it must be tailored to its specific context. Depending on the setting, model evaluation will not necessarily address all the elements listed in Box 4-2. In its guidance document on the use of models at the agency, EPA (2003d) recognized that a model evaluation should adopt a graded approach to model evaluation, reflecting the need for it to be adequate and appropriate for the decision at hand. The EPA Science Advisory Board (SAB) in its review of EPA’s guidance document on the use of models recommended that the graded concept be expanded to include model development and application (EPA 2006d). The committee here recognizes that model evaluation must be tailored to the complexity and impacts at hand as well as the life stage of the model and the model’s evaluation history.

MODEL EVALUATION AT THE PROBLEM IDENTIFICATION STAGE

There are many reasons why regulatory activities can be supported by environmental modeling. At the problem identification stage, decision makers together with model developers and other analysts must consider the regulatory decision at hand, the type of input the decision needs, and whether and how modeling can contribute to the decision-making process. For example, if a regulatory problem involves the assessment of the health risk of a chemical, considerations may include whether to focus narrowly on cancer risk or to include a broader spectrum of health risks. Another consideration might be whether the regulatory problem focuses on occupational exposures, acute exposures, chronic exposures, or exposures that occur to a susceptible subpopulation. The final consideration is whether a model might aid in the regulatory activity.

If there is sufficient need for computational modeling, there are three questions that must be addressed at the problem identification stage: (1) What types of decisions will the model support? (2) Who will use it? and (3) What data are available to support development, application, and evaluation of a model? Addressing these questions is important

|

BOX 4-2 Individual Elements of Model Evaluation Scientific basis – The scientific theories that form the basis for models. Computational infrastructure – The mathematical algorithms and approaches used in the execution of the model computations. Assumptions and limitations – The detailing of important assumptions used in the development or application of a computational model as well as the resulting limitations in the model that will affect the model’s applicability. Peer review – The documented critical review of a model or its application conducted by qualified individuals who are independent of those who performed the work, but who are collectively at least equivalent in technical expertise (i.e., peers) to those who performed the original work. Peer review attempts to ensure that the model is technically adequate, competently performed, properly documented, and satisfies established quality requirements through the review of assumptions, calculations, extrapolations, alternate interpretations, methodology, acceptance criteria, and/or conclusions pertaining from a model or its application (modified from EPA 2006a). Quality assurance and quality control (QA/QC) – A system of management activities involving planning, implementation, documentation, assessment reporting, and improvement to ensure that a model and its component parts are of the type needed and expected for its task and that they meet all required performance standards. Data availability and quality – The availability and quality of monitoring and laboratory data that can be used for both developing model input parameters and assessing model results. Test cases – Basic model runs where an analytical solution is available or an empirical solution is known with a high degree of confidence to ensure that algorithms and computational processes are implemented correctly. Corroboration of model results with observations – Comparison of model results with data collected in the field or laboratory to assess the accuracy and improve the performance of the model. Benchmarking against other models – Comparison of model results with other similar models. Sensitivity and uncertainty analysis – Investigation of what parameters or processes are driving model results as well as the effects of lack of knowledge and other potential sources of error in the model. Model resolution capabilities – The level of disaggregation of processes and results in the model compared to the resolution needs from the problem statement or model application. The resolution includes the level of spatial, temporal, demographic or other types of disaggregation. Transparency – The need for individuals and groups outside modeling activities to comprehend either the processes followed in evaluation or the essential workings of the model and its outputs. |

both for setting the direction of the model and for setting goals for the quality and quantity of information needed to construct and apply the model.

At this stage, data considerations should be a secondary issue, though not one to completely ignore. Problem identification must not be anchored solely to the available data to avoid the situation where data dictate the problem identification of the form, “We have these data available, so we can answer this question….” However, there would have to be confidence that quantitative analysis could inform the problem and that some data would be available.

The problem identification stage answers the question of whether modeling might help to inform the particular issue at hand and sets the direction for development of conceptual and computation models. Although the committee is not endorsing a complex model evaluation at the nascent stage of problem identification, it is clear that setting off to develop or apply a model that will not address the problem at hand or that will take too long to provide answers can have serious impacts on the effectiveness of modeling. The key goal of the problem identification phase is to identify the regulatory task at hand and assess the role that modeling could play. At this stage, the description of the regulatory task and the way modeling might address this regulatory task should be open to comment and criticism. Thus, when formal model evaluation is performed in later stages of a model’s life cycle, it must take into account the problem identification and how it influenced the nature of the model.

EVALUATION AT THE CONCEPTUAL MODEL STAGE

Some of the most important model choices are made at the conceptual stage, yet most model evaluation activities tend to avoid a critical evaluation at this stage. Often a peer review panel will begin its efforts with the implicit acceptance of all the key assumptions made to establish the conceptual model and then devote all of its attention to the model building and model application stages. Alternatively, a late-stage peer review of a nearly complete model may find the underlying conceptual model to be flawed. Finally, data must be assessed at this point to ensure the availability of data for model development, input parameters, and evaluation. The result of this process is the selection of a computational modeling approach that addresses problem identification, data availability, and transparency requirements.

Evaluating the Conceptual Model

Quality of the Basic Science

It is important to evaluate the fundamental science that forms the basis of the conceptual model. One approach is to consider the idea of a pedigree of a domain of science, a word expressing something about the history—and the quality of the history—of the concepts and theories behind the model and, possibly more appropriately, each of its constituent parts (Funtowicz and Ravetz 1990). Over the years, the fundamental scientific understanding and other understandings that are used in constructing models have been consolidated and refined to produce a mature product with a pedigree. For example, a task, such as modeling of lake eutrophication, started as an embryonic field of study, passed through the adolescence of competing schools of thought (Vollenweider 1968) to the gathering of consensus around a single scientific outlook (disputed only by the sub-discipline’s “rebels”), and finally to the adulthood of the fully consolidated outlook, contested, if at all, only by those considered “cranks” by the overwhelming majority—a history partially recounted in Schertzer and Lam (2002). The status of a model’s pedigree typically changes over time, with the strong implication of ever-improving quality. Although some models may cease to improve over time, it is more common that they continue to be refined over time, especially for long-lived regulatory models. The concept of a pedigree can be applied to the model as a whole, to one of its major subblocks (such as atmospheric chemistry or human toxicology), or to each constituent model parameter.

Quality of Available Data

For environmental models, one of the issues often ignored at the conceptual stage is the availability of data. It is one of the major issues in the use of environmental models, and it has multiple aspects:

-

Data used as inputs to the model, including data used to develop the model.

-

Data used to estimate values of model parameters (internal variables).

-

Data used for model evaluation.

There is some overlap between the first and second types of data, depending on the model application, but in general these data needs can be viewed as separate. One major problem is that collecting new data at this early stage is rarely considered. Model development and evaluation and data collection should be iterative and proceed together, but in practice, these activities at agencies such as EPA often are done by separate groups that may not meet each other until late in the process. The critical issue is that, at this stage in a model’s life cycle, there should be a requirement for an assessment of the data needs and a corresponding data collection plan. Modelers should be building on-going collaborations with experimentalists and those responsible for collection of additional data to determine how such new data can guide model development and how the resulting models can guide the collection of additional data.

EVALUATION AT THE COMPUTATIONAL MODEL STAGE

In moving from the identification of the problem, the assessment of required resolving power and data needs, and the decision concerning the basic qualitative modeling approach to a constructed computational model, a number of practical considerations arise. As we observe in Chapter 3, these considerations include (1) choices of specific mathematical expressions to represent the interactions among the model’s state variables; (2) evaluation of a host of algorithmic and software issues relating to numerical solution of the model’s equations; (3) the assembly of data to develop inputs, to test, and to compare with model results; and (4) the ability of the model to arrange the resulting numerical outputs for comprehension by all the stakeholders concerned. A prime motivation at this stage of evaluation is, does the behavior of the model approximate well what is observed? For modelers, nothing is more convincing and reassuring than seeing the curve of the model’s simulated responses passing through the dots of observed past behavior. However, as discussed in Chapter 1, natural systems are never closed and model results are never unique. Thus, any match between observations and model results might be due to processes not represented in the model canceling each other out. In addition, simply reproducing results that match observations for a single scenario or several scenarios does not mean the model can represent the full statistical characteristics of observations.

The evaluation needs fundamentally to address the questions laid out at the beginning of this chapter: the degree to which the model is

based on generally accepted science and computational methods, whether the model fulfills its designed task, and how well its behavior approximates that observed in the system being modeled. A majority of model evaluation activities traditionally occur at the stages in which the computational model is developed and applied. These are the stages when quality assurance and quality control (QA/QC) efforts are documented, testing and analysis reports generated, model documentation produced, and peer review panels commissioned. However, these formal model evaluation activities must be cognizant of and built on earlier evaluation activities during the problem identification and model conceptualization stages.

Scientific Basis, Computational Infrastructure, and Assumptions

The scientific basis, the computational infrastructure, and the major assumptions used within a computational model are some of the first elements typically addressed during model evaluation. The initial evaluation of the scientific theories, possible computational approaches, and inherent assumptions should occur during the development of the conceptual model. Model builders must reassess these issues during the construction of a computational model by obtaining a wider array of peer reviewers’ and others’ comments. Indeed, these issues are typically the first elements assessed by outside evaluators when EPA models go before review panels, such as the SAB, or the public.

Code Verification of Numerical Solutions and Other Quality Assurance Procedures

Verification of model code and assurance that the numerical algorithms are operating correctly are the essence of QA/QC procedures. These activities evaluate to what extent the executable code and other numerical software in the constructed model generate reliable and consistent solutions to the mathematical equations of the model. The document prepared for a recent evaluation by SAB of the very-high-order 3MRA modeling system (the multimedia model described in Babendreier and Castleton [2005]) defines code verification as follows (EPA 2003e):

Verification refers to activities that are designed to confirm that the mathematical framework embodied in the module is correct and that the computer code for a module is operating according to its intended design so that the results obtained using the code compare favorably with those obtained using known analytical solutions or numerical solutions from simulators based on similar or identical mathematical frameworks.

Verification activities include taking steps to ensure that code is properly maintained, modified to correct errors, and tested across all aspects of the module’s functionality. Table 4-1 lists some of the software checks listed by EPA to ensure that model computations proceed as anticipated. Other QA/QC activities include (1) the use of the model in different operating systems with different compilers to make sure that the results remain the same and (2) testing under simplified scenarios (for example, with zero emissions, zero boundary conditions, and zero initial conditions) where an analytical solution is available or an empirical solution is known with a high degree of confidence.

Like so many things, concluding—provisionally—that the constructed model is working with a reliable code comes down to the outcomes of the most rudimentary tests, such as those “comparing module results with those generated independently from hand calculations or spreadsheet models” (EPA 2003e). These tests are the equivalent of the tests made time and again to ensure a sensor or instrument is working properly. They are tests that are maximally robust against ambiguous outcomes. As such, they only ensure against gross deficiencies but cannot confirm that a model is sufficiently sound for regulatory use. Constant vigilance is required. “Even legacy codes that had more than a decade of wide use experienced environmental conditions that caused unstable numerical solutions” (EPA 2003e).

Where models are linked, as in linking emissions models to fate and transport models as discussed in Chapter 2, additional checks and audits are required to ensure the streams of data passing back and forth have strictly identical meanings and units in the partnered codes engaging in these electronic transactions. Further, such linked models do not lend themselves to be compared with simple test cases that have known solutions. This makes QA/QC activities related to linked models much more difficult.

TABLE 4-1 QA/QC Checks for Model Code

|

Software code development inspections: Software requirements, software design, or code are examined by an independent person or groups other than the author(s) to detect faults, programming errors, violations of development standards, or other problems. All errors found are recorded at the time of inspection, with later verification that all errors found have been successfully corrected. |

|

Software code performance testing: Software used to compute model predictions is tested to assess its performance relative to specific response times, computer processing usage, run time, convergence to solutions, stability of the solution algorithms, the absence of terminal failures, and other quantitative aspects of computer operation. |

|

Tests for individual model module: Checks ensure that the computer code for each module is computing module outputs accurately and within any specific time constraints. (Modules are different segments or portions of the model linked together to obtain the final model prediction.) |

|

Model framework testing: The full model framework is tested as the ultimate level of integration testing to verify that all project-specific requirements have been implemented as intended. |

|

Integration tests: The computational and transfer interfaces between modules need to allow an accurate transfer of information from one module to the next, and ensure that uncertainties in one module are not lost or changed when that information is transferred to the next module. These tests detect unanticipated interactions between modules and track down cause(s) of those interactions. (Integration tests should be designed and applied in a hierarchical way by increasing, as testing proceeds, the number of modules tested and the subsystem complexity.) |

|

Regression tests: All testing performed on the original version of the module or linked modules is repeated to detect new “bugs” introduced by changes made in the code to correct a model. |

|

Stress testing (of complex models): Stress testing ensures that the maximum load (for example, real time data acquisition and control systems) does not exceed limits. The stress test should attempt to simulate the maximum input, output, and computational |

|

load expected during peak usage. The load can be defined quantitatively using criteria such as the frequency of inputs and outputs or the number of computations or disk accesses per unit of time. |

|

Acceptance testing: Certain contractually required testing may be needed before the new model or model application is accepted by the client. Specific procedures and the criteria for passing the acceptance test are listed before the testing is conducted. A stress test and a thorough evaluation of the user interface is a recommended part of the acceptance test. |

|

Beta testing of the pre-release hardware/software: Persons outside the project group use the software as they would in normal operation and record any anomalies encountered or answer questions provided in a testing protocol by the regulatory program. The users report these observations to the regulatory program or specified developers, who address the problems before release of the final version. |

|

Reasonableness checks: These checks involve items like order-of-magnitude, unit, and other checks to ensure that the numbers are in the range of what is expected. |

|

Source: EPA 2002e. |

Comparing Model Output to Data

Comparing model results with observations is a central component of any effort to evaluate models. However, such comparisons must be made in light of the model’s purpose—a tool for assessment or prediction in support of making a decision or formulating policy. The inherent problems of providing an adequate set of observations and making credible comparisons give rise to some important issues.

The Role of Statistics

Because (near) perfect agreement between model output and observations cannot be expected, statistical concepts and methods play an inevitable and essential role in model evaluation. Indeed, it is tempting to use formal statistical hypothesis testing as an evaluation tool, perhaps in part because such terms as “accepting” and “rejecting” hypotheses sound as though they might provide a way to validate models in the now-discredited meaning of the term. However, the committee has concerns that testing (for example, that the mean of the observations equals the mean of the model output) will fail to provide much insight into the appropriateness of using an environmental model in a specific application. As discussed in Box 1-1 in Chapter 1, the evaluation of the ozone models in the 1980s and early 1990s showed that estimates of ozone concentrations from air quality models were good when compared with observations for any choice of statistical methods, but only because the errors in the models tended to cancel out. Statistics has value for conceptualizing, visualizing, and quantifying variation and dependence rather than for serving as a source of “rigorous” or “objective” standards for model evaluation. The committee cautions, however, that standard, elementary statistical methods will often be inappropriate in environmental applications, for which problems of spatial and temporal dependence are frequently a critical issue.

Although epidemiologists and air quality modelers use statistical tests to compare models with data, it is difficult to find broad-based examples in regulatory models in which formal hypothesis testing (e.g., testing that the means of two distributions are equal based on the p-value of some test statistic) has played a substantial role in any model evaluation. What is needed is statistically-sophisticated analysts that can do non-standard statistical analyses appropriate for the individual circum-

stances. For example, air quality modelers commonly present a variety of model performance statistics along with graphic comparisons of model results with observations; these are sometimes compared with acceptability criteria set by EPA for various applications.

Comparing Models with Data—Model Calibration

Model calibration is the process of changing values of model input parameters within a physically defensible range in an attempt to match the model to field observations within some acceptable criteria. Models often have a number of parameters whose values cannot be established in the model development stage but must be “calibrated” during initial model testing. This need requires observations for conditions that must broadly characterize the conditions for which the model will be used. Lack of characterization of the conditions can result in a model that is calibrated to a set of conditions that are not representative of the range of conditions for which the model is intended. The calibration step can be linked with a “validation” step where a portion of the observations are used to calibrate the model, and then the calibrated model is run and results compared with the other portion of data to “validate” the model. The typical criteria used for judging the quality of agreement is mean square error, or the average squared difference between observed values and the values predicted by the model.

The issue of model calibration can be contentious. The calibration tradition is ingrained in the water resources field by groundwater, stream-flow, and water-quality modelers, whereas the practice is shunned by air-quality modelers. This practice is not merely a disagreement about terminology, but a more fundamental difference of opinion about the relationship of models and measurement data, which is explored in Box 4-3. However, it is clear that both fields, and modelers in general, accept a fundamental role for measurement data to improve modeling. In this unifying view, model calibration is not just a matter of fiddling about trying to find suitable best values of the coefficients (parameters) in the model. Instead, calibration has to do with evaluating and quantifying the posterior uncertainty attached to the model as a function of the measured data, prior model uncertainty, and the uncertainty in the measured data against which it has been reconciled (calibrated). This view is clearly Bayesian in spirit, using data and prior knowledge to arrive at updated posterior expectations about a phenomenon, if not strictly so in number-crunching,

computational terms. It is the recognition of the fundamental codependence of models and data from measurements that is common among all models.

|

BOX 4-3 To Calibrate or Not To Calibrate In an ideal world, calibration of models would not be necessary, at least not if we view calibration merely as the search for values of a model’s parameters that provide the best match of the model’s behavior with that observed of the real system. It would not be necessary because the model would contain only parameters that are known to a high degree of accuracy. To be more pragmatic, but nonetheless somewhat philosophical, there is a debate about whether to calibrate a model or not in the real world of environmental modeling. That debate centers around two features: (1) the principle of engaging models with field data in a learning context during the development of the model; and (2) the principle of using calibration for quantifying the levels of uncertainty attached to the model’s parameters, with a view to accounting for how that uncertainty propagates forwards with predictions. The former lies within the conventional understanding and interpretation of what constitutes model calibration. The latter requires a broader, but less familiar, interpretation of calibration. Taken together, calibration can be seen to be something more than a “fiddler’s paradise,” in which the analyst seeks merely to fit the data, no matter how absurd the resulting parameter estimates; and no matter the obvious risk of subsequently making confident—but probably highly erroneous—predictions of future behavior, especially under conditions different from those reflected in the data used for model calibration (Beck 1987). The nub of the debate turns on the extent to which the analyst trusts the prior knowledge about the individual components of the model, to which the parameters are attached, yet discounts the power of the calibration data set—reflecting the collective effects of all the model’s parameters on observed behavior of the prototype, as a whole—to overturn these presumptions. The debate also turns on the extent to which individual parameters can be “measured” independently in the field or laboratory under tightly controlled conditions. The more this is feasible, the less the need to calibrate the behavior of the model (as a whole). In this argument, however, it must be remembered that many parameters remain quantities that appear in presumed relationships, that is, mathematical relationships or models between the observed quantities, so that the problem of calibrating the model as a whole is transferred to calibrating the relationship between the observables to which each individual parameter is bound. This may seem less of a problem when needing to substitute a value for soil porosity into a hydrological model. But it is surely a problem when the need is to find a value for a maximal specific growth-rate constant for a bacterial population, which is certainly not a quantity that can itself be directly measured. Experience of model calibration and the stances taken on it differ from one discipline to another. In hydrology and water quality modeling it is unsurprising how the wider interpretation and greater use of calibration have become established practice. In spite of the relatively large volumes of hydrological field data customarily available, experience over several decades has shown that hydro |

|

logical and water quality models inevitably suffer from a lack of identifiability in that many combinations of parameter values will enable the model to match the data reasonably well (Jakeman and Hornberger 1993; Beven 1996). Trying to find a best set of parameter values for the model, even a best structure for the model, have come to be accepted as barely achievable goals at best. In a pragmatic, decision-support context, what matters—given uncertain models, uncertain data, and therefore uncertain model forecasts—is whether any particular course of action (among the various options) manages to stand out above the fog of uncertainty as clearly the preferred option. Under this view, the posterior parametric uncertainties reflect the signature, or fingerprint, of all the distortions and uncertainties remaining in the model as a result of reconciling it with the field data. In a more theoretical context, interpretation of the patterns of such distortions and uncertainties can serve the purpose of learning from having engaged the model systematically with the field data. In other disciplines, such as modeling of air quality, calibration is viewed as a practice that should be avoided at all costs. Inputs to these models include pollutant emissions (spatially, temporally, and chemically resolved), three-dimensional meteorological fields (such as wind speed and direction, temperature, relative humidity, sunlight intensity, clouds and rain, also temporally resolved). Air quality models also rely on a wide range of parameters used in the description of processes simulated by the models (such as turbulent dispersion coefficients for atmospheric mixing, parameters for the dry and wet removal of pollutants, kinetic coefficients for gas and aqueous-phase chemistry, mass transfer rate constants, and thermodynamic data for the partitioning of pollutants among the different phases present in the atmosphere). The need for the determination of all of these input values and parameters has resulted in a huge investment in scientific research funded by EPA, state air pollution authorities (especially California), National Science Foundation (NSF), and others to understand the corresponding processes and to develop model application-independent approaches to estimate them. Further, complex regional meteorological models (such as MM5 and RAMS), which are used for other applications, are used to simulate the meteorology of the atmosphere and provide the corresponding input fields to the air quality models. Meteorological models themselves take advantage of the available measurements of wind speed, temperature, relative humidity, etc. in the domain that they simulate, to improve their predictions. In a technique called data assimilation the available measurements are used to “nudge” the meteorological model predictions closer to the available measurements by adding forcing terms (proportional to the difference between the model predictions and the observations) to the corresponding differential equations solved by the model. This semi-empirical form of correction can maintain the meteorological model results close to reality and improve the quality of the input provided to the air quality model. This form of calibration is involved only in the preparation of the input to the air quality model and is independent of the air quality model, its prediction, and the available air quality modeling. The emission fields are prepared by corresponding emission models that incorporate the available information about the activity levels (for example, traffic, fuel consumption by industries, population density, etc.) and emission factors |

|

(emissions per unit of activity) for each source. Some of the best applications of air quality models have been accompanied by field measurements of emissions during the model application period (for example, transportation emissions in tunnels in the area, characterization of major local sources, even use of airplanes to characterize the plumes of major point sources, etc.). Boundary conditions are measured usually by ground monitoring stations or airplanes in selected points close to the model boundary (for example, in San Nicolas Island off the shore of Southern California). Laboratory (for example in smog chambers simulating the atmosphere) and field experiments have been used to understand the corresponding processes and to provide the necessary parameters. One could argue that the historical lack of reliance on model calibration for the air quality area has resulted in significant research to understand better the most important processes and in the development of approaches to provide the necessary input. This has required a huge investment by US funding sources (the State of California, EPA, NSF, etc.) but has also resulted in probably the most comprehensive modeling tools available for environmental regulation. One could also argue that the atmosphere is a much easier medium to model (after all air is the same everywhere) compared to soil, water, ecosystems, or the human body. However, the success of the “let’s try to avoid calibration” philosophy may be a good example in the long term for other environmental modeling areas. In sum, there is nothing wrong with the healthy debate over calibration. Either way—whether calibration is accepted practice or shunned—all agree that fitting a model to past data is not an end in itself, but a means: to the end of learning something significant about the behavior of the real system; and to the end of faithfully reflecting the ineluctable uncertainty in a model. |

One effect of the rejection of model calibration for regional air quality models is the idea that model results are more appropriate for relative comparisons than for absolute estimates. EPA guidance for the use of models for the attainment of ambient air quality standards (the attainment demonstration) for 8-hour ozone and the fine-particle particulate matter (PM) begins with the notion that model estimates will not predict perfectly the observed air quality at any given location at the present time and in the future (EPA 2005d). Thus, models for demonstrating whether emissions reduction strategies will result in attainment demonstrations are recommended for use in a relative sense in concert with observed air quality data. Such use essentially involves taking the ratio of future to present predicted air quality from the models to develop a ratio and then multiplying it by an “ambient” design value. The effect will be to anchor future concentrations to “real” ambient values. If air quality models were calibrated to observations, as is done with water quality models, there would be less need to use the model in a relative sense.

EPA also uses the concept that air quality models are imperfect predictors to argue for a weight-of-evidence approach to attainment demonstrations. Under a weight-of-evidence approach, the results of the air quality models are no longer the sole determining factor but rather one input that may include trends in ambient air quality and emissions observations and other information (EPA 2005d).

Comparing Models with Data—Data Quality

Not all data are of equal quality. In addition to the usual issues of systematic and random measurement errors, there is the issue that some “data” are the result of processing sensor information through instrumentation algorithms that are really models in their own right. Examples include the post-processing of raw information that is obtained from remote-sensing instruments (e.g., Henderson and Lewis 1998) or from techniques used to separate total carbon in an airborne PM sample into inorganic and organic carbon components (e.g., Chow et al. 2001). Thus, if the data and model output disagree, the extent of disagreement that is due to the model used to convert raw measurements into the quantity of interest must be considered. An additional and related difficulty with many data sets is that the standard assumption of statistically independent measurement errors can be untrue, including for remotely sensed data, greatly complicating model and measurement data comparisons.

Comparing Models with Data—Temporal and Spatial Issues

Even with data of impeccable quality, there are still many problems in comparing them with model output. One problem is that data and model output are generally averages over different temporal and spatial scales. For example, air pollution monitors produce an observation at a point, whereas output from regional-scaled air quality models discussed earlier in the report produces at best averages over the grid cells used in the numerical solution of the governing partial differential equations. However, if for no other reason than that the meteorological inputs into air pollution models will inevitably have errors at small spatial scales, there is no expectation that the models would reproduce actual average pollution levels over the grid cells, even if such an average could be

observed. The models may do somewhat better at reproducing averages over larger regions of space or over longer intervals of time than the nominal observation frequency, and a model that does well with such averages could reasonably be judged as functioning well. Similar problems underlie many health assessments, such as when pharmacokinetic models for one exposure scenario are compared with measurements from a different exposure scenario or when data from laboratory rats exposed for 90 days are used to estimate human risks from a continuous lifetime exposure. Even so, these dilemmas are the reason models are needed—it is impossible to measure all events of interest.

There are two potential approaches that can address some of these spatial and temporal problems. The collection of two or more measurements inside the same computational cell provides information on the spatial variability of the pollutant of interest within a grid cell. However, monitoring is not always available to obtain multiple samples within the same grid cell. For the temporal issue, the collection of high temporal resolution measurements, including continuous measurements, can allow the comparison to be performed at several different time-intervals. In this manner, a model could be “stressed” to produce, for example, diurnal profiles of the pollutant. Again, however, the availability of monitoring data is a limiting factor.

Comparing Models with Data—Simulating Events Versus Long-Term Averages

An important issue is whether models are expected to reproduce observations on an event-by-event basis. If the model is used for short-term assessment or forecasting, then such a capability would be necessary. For example, when assessing whether an urban storm-water control system would be overwhelmed, resulting in the discharge of combined storm-water sewage into receiving waters, only a single-event rainfall-runoff model might be required to treat each potential storm event individually. However, when the goal is to predict how the environment will change over the long term in response to an EPA policy, such a capability is neither necessary nor sufficient. General circulation models used for assessing climatic change may be an extreme example of models that cannot reproduce event-by-event observations but are able to reproduce many of the statistical characteristics of climate over long-tern scales.

Comparing Models with Data—Simulating Novel Conditions

The comparison of model and measured data under existing conditions, no matter how extensive, provides only indirect evidence of how well a model will do at predicting what will happen under novel or post-regulatory conditions. Yet, this comparison is a fundamental element of model evaluation and its relevancy is perhaps the biggest challenge EPA faces in assessing the usefulness of its models. When model results are to be extrapolated outside of conditions for which they have been evaluated, it is important that they have the strongest possible theoretical basis, explicitly representing the processes that will most affect outcomes in the new conditions to be modeled, and embodying the best possible parameter estimates. For some models, such as for air dispersion models, it may be possible to compare output with data in a wide enough variety of circumstances to gain confidence that they will work well in new settings. Satisfying all of these conditions, however, is not always possible, as the case of competing cancer potency dose-response models makes clear. Absent a solid understanding of underlying mechanisms, the best model for doing such an extrapolation is a matter of debate.

There is the potential to test some types of models in cases where the system behaves differently, such as when there is a significant change in pollutant loads. Air pollution studies have indicated that air quality models can be stressed by simulating special periods, such as the Christmas holidays, with its low traffic emissions and high wood burning; days with major power disruptions (for example, the blackouts in the Northeast); or days when most people go on vacation (as in Europe). Pope (1989) provides an example of the possible insights from developing a model under such novel conditions. This study used epidemiological modeling to look at the reduction in hospital admissions for pneumonia, bronchitis, and asthma that occurred in the Utah Valley when a major source of pollution, the local steel mill, was closed for 13 months. The observation of a statistically significant reduction in hospital visits correlated to reductions in ambient PM concentrations helped to initiate a reassessment of ambient air quality standards for this pollutant.

Comparing Models with Data—A Bayesian Approach

For models that are used frequently, a Bayesian approach might be considered to quantitatively support model evaluation (Pascual 2004;

Reckhow 2005). For example, prior uses of the model could provide comparison of pre-implementation predictions of the success of an environmental management strategy with post-implementation observations. Using Bayesian analysis, this “prior” could be combined with a prediction-observation comparison for the site and topic of interest to evaluate the model as well as improve the strategy.

Uncertainty Analysis

Formal uncertainty analysis provides model developers, decision makers, and others with an assessment of the degree of confidence associated with model results as well as the aspects of the model having the largest impacts on its results. As such, uncertainty analysis and related sensitivity analysis is a critical aspect of model evaluation during model development and model application stages. The use of formal qualitative and quantitative uncertainty analysis in environmental regulatory modeling is growing in response to improvements in methods and computational abilities. It also is increasing due to advice from other National Research Council reports (e.g., NRC 2000, 2002), mandates from the Office of Management and Budget (OMB 2003), and internal EPA guidance (e.g., EPA 1997b). As shown in Box 4-4, there are a number of policy-related questions that can be informed through formal uncertainty analysis.

However, a formal uncertainty analysis, in particular a formal quantitative uncertainty analysis, is difficult to carry out for a variety of reasons. As noted by Mogan and Henrion (1990), “The variety of types and sources of uncertainty, along with the lack of agreed terminology, can generate considerable confusion.” In the recent report Not a Sure Thing: Making Regulatory Choices Under Uncertainty, Krupnick et al. (2006) noted the lack of a universal typology or taxonomy of uncertainty, making any discussion of the topic of uncertainty analysis for regulatory models difficult. There is also a concern that uncertainty analysis can be difficult to incorporate into policy settings. Krupnick et al. (2006) concluded that one unintended impact of an increased emphasis on uncertainty analysis may be a decrease in decision makers’ confidence in the overall analysis. The SAB Regulatory Environmental Modeling Guidance Review Panel (EPA 2006d) elaborates on the concern about using uncertainty analysis in the policy process. Although the panel noted that evaluation of model uncertainty is important in both understanding a sys-

tem and in presenting results to decision makers, it raised the concern that the use of increasingly complex quantitative uncertainty assessment techniques without an equally sophisticated framework for decision making and communication may only increase management challenges. Further, it is very difficult to perform quantitative uncertainty analyses of complex models, such as regional air quality models (N. Possiel, EPA Office of Air Quality Planning and Standards, personal commun., May 19, 2006). As these complex models are linked to other models, such as those in the state implementation planning process discussed in Chapter 2, the difficulties in performing quantitative uncertainty analysis greatly increases.

Defining Sources of Uncertainty

Although a single uniformly accepted method of categorizing uncertainties does not exist, several general categorizations are clearly defined. As noted by Krupnick et al (2006), the literature distinguishes variability from lack of knowledge and uncertainties in parameters from model uncertainties. Variability represents the inherent heterogeneity that cannot be reduced through additional information, whereas other aspects of parameter uncertainties might be reduced through more monitoring, observations, or additional experiments. The distinction of model uncertainties from parameter uncertainties is also critical. Model uncertainties represent situations in which it is unclear what all the relevant variables are or what the functional relationships among them are. As noted by Morgan (2004), model uncertainty is much more difficult to address than parameter uncertainty. Although identifying and accounting for the consequences of model structural error and uncertainty has only recently become the subject of more sustained and systematic research (Beck 1987, 2005; Beven 2005; Refsgaard et al. 2006), most analyses that have considered the issue report that model uncertainty might have a much larger impact than uncertainties associated with individual model parameters (Linkov and Burmistrov 2003; Koop and Tole 2004; Bredehoeft 2005). Such structural errors amount to conceptual errors in the model, so that if identified at this stage of evaluating the constructed model, assessment should be cast back to reevaluation of the conceptual model.

Krupnick et al. (2006) also identified two other sources of uncertainty important for regulatory modeling: decision uncertainty and linguistic uncertainty. As first observed by Finkel (1990), there are uncer-

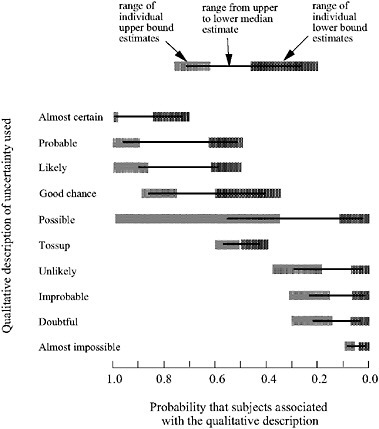

tainties that arise whenever there is ambiguity or controversy about how to apply models or model parameters to address questions that arise from social objectives that are not easy to quantify. Issues that fall into this category are the choice of discount rate and parameters that represent decisions about risk tolerance and distributional effects. Uncertainties associated with language, although implicitly qualitative, are important to consider due to the need to ultimately communicate results of a computational model to decision makers, stakeholders, and the interested public. As applied to computational models, sensitivity analysis is typically thought of as the quantification of changes in model results as a result of changes in individual model parameters. It is critical for determining what parameters or processes have the greatest impacts on model results. Figure 4-2 displays the differing interpretations associated with various descriptors that might be used to describe results from models.

Sensitivity and Uncertainty Analysis

Sensitivity and uncertainty analyses are procedures that are frequently carried out during development and application of models. As applied to computational models, sensitivity analysis is typically thought of as the quantification of changes in model results as a result of changes in individual model parameters. The concept of sensitivity analysis has value in the model development phase to establish model goals and examine the advantages and limitations of alternative algorithms. For example, the definition of sensitivity analysis developed by EPA’s Council on Regulatory Environmental Models (CREM) includes consideration of model formulation (EPA 2003d). The goal of a sensitivity analysis is to judge input parameters, model algorithms, or model assumptions in terms of their effects on model output. Sensitivity analyses can be local or global. A local sensitivity analysis is used to examine the effects of small changes in parameter values at some defined point in the range of these values. A global sensitivity analysis quantifies the effects of variation in parameters over their entire space of these values. When addressing global sensitivity, the effect of varying more than one parameter on the response must be considered. A common approach for assessing sensitivity and uncertainty is to run the model multiple times while slightly changing the inputs.

FIGURE 4-2 Range of probabilities that people assign to different words absent any specific context. Source: Adapted from Wallsten et al. 1986. Reprinted with permission; copyright 1986, Journal of Experimental Psychology: General.

Quantitative uncertainty analysis is the determination of the variation or imprecision in the output function based on the collective variation of the model inputs using a variety of methods, including Monte Carlo analysis (EPA 1997b). In a broader perspective, uncertainty analysis examines a wide range of quantitative and qualitative factors that might cause a model’s output values to vary. All models have inherent capabilities and limitations. The limitations arise because models are simplifications of the real system that they describe, and all assessments using the models are based on imperfect knowledge of input parameters. Confronting the uncertainties in the constructed model requires a model performance evaluation that (1) estimates the degree of uncertainty in the assessment based on the limitations of the model and its inputs, and (2) illustrates the relative value of increasing model complexity, of providing

a more explicit representation of uncertainties, or of assembling more data through field studies and experimental analysis.

Model Uncertainty Versus Parameter Uncertainty

Although a distinction between model uncertainty and parameter uncertainty is typically made, there is an argument over whether there is indeed any fundamental distinction. In the sense that both kinds of uncertainty can be handled through probabilistic or scenario analyses, the committee agrees, but notes that this applies only to the uncertainty about the output of models. For assessing uncertainty in model outputs, uncertainty about which model to use can be converted to uncertainty about a parameter value by constructing a new model that is a weighted average of the competing models (e.g., Hammitt 1990). But the issue of selecting a set of models that captures the full space of outcomes and the choice of weighting factors is problematic. Therefore, the committee considers that there is a worthwhile practical distinction between model and parameter uncertainty, if for no other reason than to emphasize that model uncertainty might dwarf parameter uncertainty but can easily be overlooked. This is particularly important in situations where models with alternative conceptual frameworks to the standard model are too expensive to run or do not even exist.

EVALUATION AT THE MODEL APPLICATION STAGE

A new set of practical considerations apply in moving from the development of a computational model to the application of the model to a regulatory problem, including the need for specifying boundary and initial conditions, developing input data for the specific setting, and generally getting the model running correctly. These issues do not detract from the fundamental questions and trade-offs involved in model evaluation. The evaluation will need to consider the degree to which the model is based on generally accepted science and computational methods; whether the model fulfills its designed task; and how well its behavior approximates that observed in the system being modeled. For models that are applied to a specific setting for which the model was developed, these questions should have been addressed at the model development stage, particularly if the developers are the same group applying the model.

However, frequently models are applied by users who are not the developers or even in the same institution as the developers. In many cases, model users might have a choice in the model to use and in alternative modeling approaches. In these cases, model evaluation must address the same fundamental considerations about the appropriateness of the model for the application and explicitly address the trade-offs between the need for the model to get the right answer for the right reason and the need for the modeling process to be transparent to stakeholders and the interested public. The discussion here focuses on the evaluation of model applications using uncertainty analysis. Later in this chapter, we discuss other elements of model evaluation relevant to this stage, including peer review and documentation of the model history. Chapter 5 discusses issues related to model selection.

Uncertainty Analysis at the Model Application Stage

At the model application stage, an uncertainty analysis examines a wide range of quantitative and qualitative factors that might cause a model’s output values to vary. Effective strategies for representing and communicating uncertainties are important at this stage. For many regulatory models, credibility is enhanced by acknowledging and characterizing important sources of uncertainty. For many, it is possible to quantify the effects of variability and uncertainty in input parameters on model predictions by using error propagation methods discussed below. They should not be confused with or used in place of a more comprehensive evaluation of uncertainties, including the consideration of model uncertainties and how decision makers might be informed by uncertainty analysis and use the results.

The Role of Probability in Communicating Uncertainty

Realistic assessment of uncertainty in model outputs is central to the proper use of models in decision making. Probability provides a useful framework for summarizing uncertainties and should be used as a matter of course to quantify the uncertainty in model outputs used to support regulatory decisions. A probabilistic uncertainty analysis may entail the basic task of propagating uncertainties in inputs to uncertainties in outputs (which would commonly, although perhaps ambiguously, be

called a Monte Carlo analysis). Bayesian analysis, in which one or more sources of information are explicitly used to update prior uncertainties through the use of Bayes’ theorem, is another approach for uncertainty analysis and is better, in principle, because it attempts to make use of all available information in a coherent fashion when computing the uncertainties of any model output. However, the committee considers the use of probability to quantify all uncertainties to be problematic. The committee disagrees with the notion that might be inferred from such statements as Gayer and Hahn’s (2005): “We think policy-makers should design regulations for controlling mercury emissions so that expected benefits exceed expected costs if that statement is interpreted to mean that large-scale analyses of complex environmental and human health effects should be reduced not only to a single probability distribution but also to a single number, the mean of the distribution.” Although it is hard to argue with the principle that regulations should do more good than harm, there are substantial problems in reducing the results of a large-scale study with many sources of uncertainty to a single number or even a single probability distribution. We contend that such an approach draws the line between the role of analysts and the role of policy makers in decision making at the wrong place. In particular, it may not be appropriate for analysts to attach probability distributions to critical quantities that are highly uncertain, especially if the uncertainty is itself difficult to assess. Further, the notion that reducing the results of a large-scale modeling analysis to a single number or distribution is at odds with one of the main themes that began this chapter, that models are tools for helping make decisions and are not meant as vehicles for producing decisions. In sounding a cautionary note about the difficulties of both carrying out and communicating the results of probabilistic uncertainty analyses, we are trying to avoid the outcome of having models (and a probabilistic uncertainty analysis is the output of a model) make decisions.

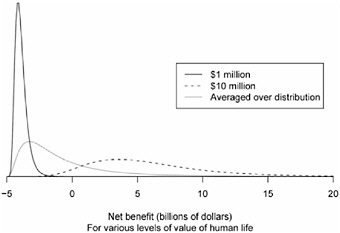

To see the difficulties that can result from this purely probabilistic approach to uncertainty analysis, consider the following EPA study that, in response to an OMB requirement, treated uncertainties probabilistically. In a study on emissions from nonroad diesel engines, one of the key parameters affecting the monetary value of possible regulations was the value assigned to a human life (EPA 2004b). A probability distribution for this parameter was obtained using the following approach. The 5th percentile of the value of a human life was set at $1 million, based on a study that had used this value as the 25th percentile. The 95th percen-

tile was set at $10 million, based on another study that had used this value as the 75th percentile. Then, using “best professional judgment” (see Table 9B-1 in EPA 2004b), a normal distribution was fit using the 5th and 95th percentile points, resulting in the mean value of a human life being $5.5 million. The numbers $1 and $10 million are rough approximations at least in part due to the decimal number system. Nevertheless, despite the arbitrary choice of highly rounded figures for the 5th and 95th percentiles, there is nothing preposterous about $5.5 million as an estimate of the value of a human life (although there is something disconcerting about the fact that this distribution assigns a probability of 0.0083 to the value of a human life being negative). However, the real problem here is not in the details of how this distribution was obtained, but that it was done with the goal of providing policy makers with a single distribution for the net benefit of a new regulation. Though the committee does not imply that such analysis arbitrarily assigns values, monetizing such things as a human life or visibility in the Grand Canyon clearly requires assessing what value some relevant population assigns to them. Thus, it is important to draw the distinction between uncertainties in such valuations and, say, uncertainty in how much lowering NOx emissions from automobiles will affect ozone levels at some location.

Another approach to uncertainty assessment is to calculate outcomes under a fixed number of plausible scenarios. If nothing in each scenario is treated as uncertain, then the outcomes will be fixed numbers. For example, one might consider scenarios with such names as highly optimistic, optimistic, neutral, pessimistic, or highly pessimistic. This approach makes no formal use of probability theory and can be simpler to present to stakeholders who are not fully versed in probability theory and practice. One advantage of the scenario approach is that many of those involved in modeling activities, including members of stakeholder groups and the public, may attach their own risk preference (such as risk seeking, risk adverse, or risk neutral) to such scenario descriptions. However, even using multiple scenarios ranging from highly optimistic to highly pessimistic will not necessarily ensure that such scenarios will bracket the true value.

In thinking about the use of probability in uncertainty analysis, it is not necessary or even desirable to consider only the extremes of representing all uncertainties by using probability or by not using probability at all. The assessment can have a hybrid approach using conditional distributions in which a small number of key parameters having large,

poorly characterized uncertainty are fixed at various plausible levels and then probabilities are used to describe all other sources of uncertainty.

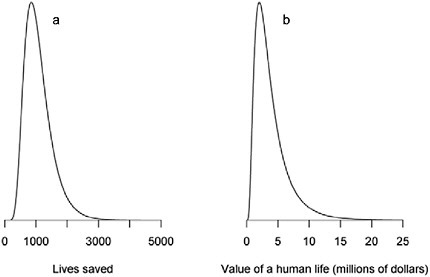

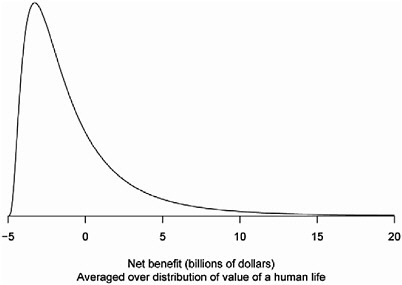

To illustrate how conditional probability distributions can be used to describe the uncertainty in a cost-benefit analysis, consider the following highly idealized problem. Suppose the economic costs of a new regulation are known to be $5 billion with very little uncertainty. Furthermore, suppose that nearly all of the benefit of the regulation will be through lives saved. Thus, to assess the monetized benefits of the regulation, we need to know how many lives will be saved and what value to assign to each life. Suppose that, based on a thorough analysis of the available evidence, the uncertainty about the number of lives saved by the regulation has a median of 1,000 and follows the distribution shown in Figure 4-3a. Furthermore, as in EPA (2004b), assume that the value of a human life follows a distribution with $1 million as its 5th percentile and $10 million as its 95th percentile, but unlike the EPA study, we assume that this distribution follows what is known as a lognormal distribution (rather than a normal distribution), which has the merit of assigning no probability to a human life having a negative value.