2

The Current NASA Applied Sciences Program

The strategic objective of the National Aeronautics and Space Administration’s (NASA’s) Applied Sciences Program (ASP) is to expand and accelerate the realization of economic and societal benefits from Earth science, information, and technology (NASA, 2004). This chapter describes and assesses strengths and weaknesses of ASP’s current approach to achieving this objective. This assessment provides the foundation upon which later chapters examine relationships between ASP and users of NASA data and research.

This chapter has two parts. First, it presents details of ASP’s overall approach, including its areas of focus, and the way the program is administered and assessed. The second section examines the strengths and weaknesses of this approach.

DETAILS OF THE CURRENT PROGRAM

The ASP is a component of NASA’s Earth Science Division, one of five divisions of the Science Mission Directorate (Figure 2.1). In 2001, ASP was instructed by NASA management to refocus applications toward the federal agencies and away from local, state, academic, and commercial applied remote sensing research (see Chapter 1 for a history of applications at NASA). The intent of the new focus was to support defined public goods in federal agencies that were already structured and mandated to provide these goods and services. This new approach welcomed academia and the commercial, state, and local sectors as participants in discussions for project development, but not as NASA’s direct partners. With a few exceptions (for example, http://halvas.spacescience.org/broker/bess/archive/040404.htm and http://aiwg.gsfc.nasa.gov/esappdocs/crosscut/DEVELOPProjPlan.doc), nonfederal partners were to be reached indirectly, through the federal agency partnering with NASA. Such an approach was supported by NRC (2002a, p. 5): “the process of interacting with other federal agencies to reach a broad group of users is a viable and appropriate avenue to pursue.”

FIGURE 2.1 The Applied Sciences Program in the current NASA organization structure. In 2001 the Earth Science Enterprise and ASP together with it were transferred into the Sun-Earth System Division. This division was subsequently moved into the current Science Mission Directorate.

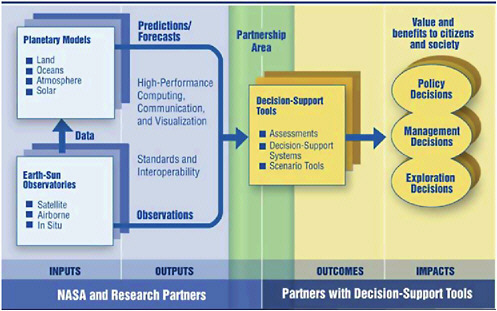

With this new model NASA sought to clarify the practical benefits of NASA Earth science, reduce perceived and real duplication of effort with other federal agencies, and focus its applications activities in a structured manner. NASA, as a research and development agency, was to extend the observations, model predictions, and computational techniques from its Earth science research to support its (primarily federal) partners. Partner agencies, in turn, would continue to develop and operate decision-support systems (DSS) to analyze scenarios, identify alternatives, and assess risks (Figure 2.2). The DSS are information-processing tools that allow authorities to make informed decisions. The tools are interactive computer systems that provide methods to retrieve information, analyze alternatives, and evaluate scenarios to gain insight into critical factors, sensitivities, and possible consequences of potential decisions. Although providing data for DSS is definitely not the only use for remote sensing data, NASA’s philosophy is that NASA-derived remote sensing data and information best fulfill their potential when incorporated into DSS. The Integrated System Solutions Architecture (ISSA) employed by ASP to partner with these agencies (Figure 2.2) embodies a linear transfer of data and research from NASA to its partners with the decision-support tools. In this representation, the ISSA constitutes an “open loop” system without any prescribed feedback mechanism from the partners to NASA for improving earth science models, earth observations, and/or decision support tools.

A representative example of a DSS is the Famine Early Warning System (FEWS) operated by the U.S. Agency for International Development (under contract to Chemonics International) in cooperation with African, south Asian, and Latin American countries. The U.S. Geological Survey provides technical support to FEWS. FEWS uses NASA-derived data each year to predict famine in the Sahel region of Africa. Under ASP’s current approach NASA does not conduct research to develop the DSS; developing the DSS is the responsibility of the user. Instead, NASA focuses on ensuring that DSS incorporate remote sensing products to best effect.

FIGURE 2.2 The NASA Applied Sciences Program approach based on the use of partner agency decision-support systems. SOURCE: NASA, 2004.

With this focus on applications for decision support, ASP’s director, in 2002, promoted a systems engineering process around which the program would be organized. The systems engineering process was used to define an approach to extend NASA data and products into DSS operated by other agencies. Systems engineering is a systematic, formulaic approach with which NASA has significant expertise. This approach was viewed as a way to decrease the tendency for NASA technology to be “pushed” out to the community and instead to allow the community to “pull” the technology toward it with a bridging function and funding through ASP. As stated by NASA (2004), “The purposes of this rigorous approach are to identify and resolve data exchange problems, build partners’ confidence and reduce risk in adopting Earth science products, and strengthen partners’ abilities to use the data and predictions in their decision-support tools.” The next section describes the systems engineering process and subsequent sections present other contextual information on ASP’s approach.

Systems Engineering Process

The systems engineering process has three phases: evaluation, verification and validation, and benchmarking (NASA, 2004). To initiate the process NASA develops partnerships with organizations that it believes can use NASA satellite data. During this evaluation phase NASA signs a memorandum of agreement with partner agencies and identifies DSS that could benefit from NASA products. While providing data for DSS is not the only use for remote sensing data, NASA and ASP have identified DSS as a key focus for input of NASA data and research. ASP and the partner agency then determine baseline data requirements, assess the potential value and technical feasibility of assimilating NASA data into the DSS, and decide whether to pursue further collaboration on the project. During the verification and validation phase ASP and the partner agency measure performance against requirements and determine if the DSS can achieve the intended outcome. In the benchmarking phase (Box 2.1) the value of incorporating data or models generated by NASA-sponsored Earth science programs into the decision making of other federal agencies is tested and documented.

BOX 2.1 Benchmarking

Benchmarking is one of the primary objectives of ASP’s systems engineering process. ASP indicates that “benchmarking refers to the task of measuring the performance of a product or service according to specified standards and reference points in order to compare performance, document value, and identify areas for improvements.” Part of NASA’s definition of a benchmark is “how the Decision Support System that assimilated NASA measurements compared in its operation, function, and performance to the earlier version.” NASA considers benchmarks an important measure of program quality and claims that “the benchmarking process (1) lowers perceived risk to adopting other Earth science data and technology, (2) provides metrics to report to agency sponsors and inform other users, (3) enables cooperation and builds trust between the agencies, and (4) develops success stories leading to more efforts between the agencies.” One part of the benchmarking phase is to measure and quantify the impact of NASA input to DSS output. According to NASA, the measurements can include the time to produce results; the accuracy, quality, and reproducibility of DSS results; and the enhanced ability of a DSS to fill a previously unmet need.

SOURCE: http://science.hq.nasa.gov/earth-sun/applications/benchmark2.html

Applications of National Priority

The ASP applies the systems engineering process in 12 Applications of National Priority: Agricultural Efficiency, Air Quality, Aviation, Carbon Management, Coastal Management, Disaster Management, Ecological Forecasting, Energy Management, Homeland Security, Invasive Species, Public Health, and Water Management. These 12 areas, expanded from seven at the inception of the program in 2002, had their origins in suggestions made by state and local participants in community meetings in the late 1990s (see also Chapter 1). NASA managers stated that in developing the current set of 12 application areas they considered four criteria: socioeconomic return, application feasibility, appropriateness of the area for NASA, and partnership opportunities. The evolution from seven to 12 application areas was influenced by program personnel identifying gaps in the list, by the identification of new potential decision-support tools or opportunities in other agencies, by interest expressed in the program by managers in partnering agencies, and by key national interests. With respect to national interests, areas identified in the strategic plans of other agencies (e.g., NOAA) and in national and international programs (the U.S. Climate Change Science Program, U.S. Climate Change Technology Program, Global Earth Observation initiative, Geospatial One Stop, or World Summit on Sustainable Development) helped guide the definition and scope of the application areas (Birk, 2006). The relationship between the 12 ASP application areas and NASA’s six Earth Science Focus areas is shown in Table 1.2 of http://science.hq.nasa.gov/strategy/AppPlan.pdf. The committee supports ASP’s ongoing evaluation of the 12 application areas to determine whether they require modification in number or scope.

The 12 Applications of National Priority are administered by seven program managers who serve as points of contact (POCs) for each application area. These POCs are responsible for identifying appropriate data for their application area(s) and developing portfolios for partner agencies. POCs allocate ASP resources through open solicitations that undergo peer review (Box 2.2). These solicitations often request user proposals that target specific satellite remote sensing systems.

In addition to open solicitations, ASP funds rapid prototyping capacity projects that can forgo peer review. ASP managers identify research that is approaching readiness, or is ready, for application and provide funding to accelerate the application process. Funding for rapid prototyping is intended to complement existing support and to solve problems that lead to modification or transfer of a product or model rapidly to an operational entity. NASA has, for example, funded rapid prototyping work in coral reef research with the intent of complementing NOAA research and monitoring of coral reef bleaching. Likewise, NASA has funded modification of the Moderate Resolution Imaging Spectroradiometer (MODIS) direct broadcast satellite data products to augment weather forecast operations.

A third portion of ASP’s budget goes to funded and unfunded earmarks that may or may not undergo peer review. In both fiscal years (FY) 2005 and 2006 ASP’s budget allocation from Congress was approximately $50 million (Birk, 2006). Out of this total approximately $15 million was directed to congressionally earmarked projects. Of the 147 projects supported by ASP during FY 2002 to FY 2006, approximately half were the result of research solicitations. The other projects were either internally directed (circa 37 percent) or directed by Congress (circa 19 percent). Furthermore, eight of the solicited projects were funded as a result of a congressionally directed FY 2005 earmark to Stennis Space Center for a special solicitation through the Mississippi Research Consortium. Fewer than half of all ASP-supported projects from FY 2002 to FY 2006 resulted from an open solicitation.
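The project shares quoted above can be turned into rough counts to see why fewer than half of the projects came from an open solicitation. The following is a minimal sketch, assuming the rounded percentages in the text are applied directly to the 147 projects; the function name and the resulting counts are illustrative estimates, not figures reported by ASP.

```python
# Approximate figures quoted in the text for FY 2002 to FY 2006.
TOTAL_PROJECTS = 147
INTERNAL_SHARE = 0.37        # "circa 37 percent" internally directed
CONGRESS_SHARE = 0.19        # "circa 19 percent" directed by Congress
EARMARKED_SOLICITED = 8      # solicited projects funded via the FY 2005 earmark


def project_breakdown(total, internal_share, congress_share, earmarked_solicited):
    """Estimate project counts by funding route (hypothetical helper)."""
    internal = round(total * internal_share)
    congress = round(total * congress_share)
    # Whatever remains is attributed to research solicitations.
    solicited = total - internal - congress
    # Solicited projects that trace back to an earmark are not "open".
    open_solicited = solicited - earmarked_solicited
    return {
        "internal": internal,
        "congress": congress,
        "solicited": solicited,
        "open_solicited": open_solicited,
        "open_share": open_solicited / total,
    }


breakdown = project_breakdown(
    TOTAL_PROJECTS, INTERNAL_SHARE, CONGRESS_SHARE, EARMARKED_SOLICITED
)
for key, value in breakdown.items():
    print(key, value)
```

Because the inputs are rounded, the estimated solicited share comes out somewhat below one half, and removing the eight earmark-funded solicited projects lowers the open-solicitation share further.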

BOX 2.2 Types of ASP Open Solicitations

ASP open solicitations have included those for the Research, Education and Applications Solution Network (REASoN) and Research Opportunities in Space and Earth Sciences (ROSES). The REASoN solicitations, managed solely by ASP, were first funded in 2002 and concluded in 2005. The FY 2005 REASoN solicitation on “decision support from Earth science results” attracted more than 250 responses. The NASA ROSES solicitation is not limited to ASP. In 2005 it attracted more than 100 responses. Researchers who apply for grants must develop a client in a federal agency other than NASA. The proposal must demonstrate how the client will use NASA products to improve its decision making. This forms the basis of benchmarking (Box 2.1). A new ROSES 2007 solicitation announcement was issued as this report was being finalized, and ASP has one element in that solicitation (Decision Support Through Earth Science Research Results); proposals will be reviewed during the summer of 2007. Each solicitation is managed with the NASA Solicitation and Proposal Integrated Review and Evaluation System (NSPIRES). Proposals are submitted electronically to NSPIRES and subsequently ranked by managers based on input from peer review. For additional information, see http://science.hq.nasa.gov/earth-sun/applications/sol_current.html and http://nspires.nasaprs.com/external/index.do/.

Metrics

In the context of the current Administration, one clear emphasis of ASP’s approach is for clients to demonstrate measurable outputs. The importance of project documentation is underscored and benchmarking, as described previously, is a part of this documentation. Another part is the formal metrics data collection system called the Metrics Planning and Reporting System (MPAR). This system is used by all scientists who receive ASP funding and is administered by the University of Maryland Global Land Cover Facility (see http://glfc.umiacs.umd.edu/index.shtml). For example, recipients of NASA REASoN applied science funding are required to record their performance metrics monthly.

In developing this metrics system an MPAR working group was charged with reviewing and recommending program-level performance metrics and collection tools to measure how well each activity supports NASA Earth Science Enterprise science, application [italics added] and education programs (Rampriyan, 2006). This working group provides ongoing MPAR review, evaluation, recommendations, and metrics development. In addition, it recommends additions, deletions, or modifications to standardized sets of metrics.

The MPAR working group’s initial set of core (baseline) program-level metrics consisted of 10 items (Table 2.1, left-hand column). These metrics fall into three groups: a measure of the activity’s user community (metrics 1 and 2), a measure of the activity’s production and distribution of products and services (metrics 3, 4, 5, 6, and 7), and a measure of the activity’s support for ESE science, applications, and/or educational objectives (metrics 8, 9, and 10). The metrics provided by a research activity are assessed in the context of the project’s mission statement or program role. For example, a science activity might have no role in applications or education, and metrics 9 and 10 would not apply to it, or an activity might have a role in applications and education, and metric 8 would not apply to it. Similarly, the measure of an activity’s production and distribution is evaluated in the light of its role and the resources it uses. The magnitudes of the metrics that would indicate success vary widely across the set of activities, and not all of these measures are applicable to every activity.
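To make the user-community and delivery metrics concrete, the following minimal sketch shows how metrics 1, 2, and 7 of Table 2.1 might be computed from a request log. It is not the MPAR implementation: the `Request` record, the `summarize` function, the sample addresses, and the rule that any unlisted top-level domain counts as a foreign country are all assumptions made for illustration.

```python
from collections import Counter
from dataclasses import dataclass
from statistics import mean, stdev

# First-tier domains named in metric 2; anything else is treated here as a
# foreign-country domain (an assumption of this sketch).
FIRST_TIER = {"com", "edu", "gov", "net", "mil", "org"}


@dataclass(frozen=True)
class Request:
    """One filled user request (hypothetical record layout)."""
    ip: str                 # requesting internet protocol address
    host: str               # resolved host name, "" if unresolved
    delivery_hours: float   # time taken to fill the request


def first_tier_domain(host):
    """Classify a host name by its first-tier (top-level) domain."""
    if not host:
        return "unresolved"
    tld = host.rsplit(".", 1)[-1].lower()
    return tld if tld in FIRST_TIER else "foreign"


def summarize(requests):
    """Compute sketch versions of metrics 1, 2, and 7 for one reporting period."""
    # Metric 1: distinct users, counted as nonduplicated IP addresses.
    host_by_ip = {}
    for r in requests:
        host_by_ip.setdefault(r.ip, r.host)
    # Metric 2: distinct users grouped by first-tier domain.
    by_domain = Counter(first_tier_domain(h) for h in host_by_ip.values())
    # Metric 7: average and (sample) standard deviation of delivery time.
    times = [r.delivery_hours for r in requests]
    return {
        "distinct_users": len(host_by_ip),
        "users_by_domain": dict(by_domain),
        "mean_delivery_hours": mean(times) if times else None,
        "stdev_delivery_hours": stdev(times) if len(times) > 1 else None,
    }


# Illustrative log using documentation-reserved IP addresses.
log = [
    Request("192.0.2.1", "data.nasa.gov", 2.0),
    Request("192.0.2.1", "data.nasa.gov", 4.0),
    Request("198.51.100.7", "geo.unm.edu", 6.0),
    Request("203.0.113.9", "", 8.0),
]
summary = summarize(log)
print(summary)
```

Counting by IP address, as the metric definition specifies, treats two people behind one address as a single user and one person at two addresses as two users, which is one reason the text stresses assessing these metrics in the context of each project’s role.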

STRENGTHS AND WEAKNESSES OF ASP’s APPROACH

Building on the descriptions of key facets of ASP’s approach in the previous section, this section presents the strengths and weaknesses of ASP’s approach. In particular, this section conveys findings on the benchmarking process, the 12 Applications of National Priority, solicitations, metrics, and budget. Detailed discussion of the merits and drawbacks of ASP’s federal focus is postponed to later chapters (particularly Chapter 4). One outcome of ASP’s federal focus is that the work of ASP is insufficiently informed by the needs of the many users of its products and is not connected strongly enough to the final applications of NASA’s data and research. This lack of information from users is also carried to communication of user needs in mission planning. The Decadal Study (NRC, 2007a) emphasized user-defined requirements that maximize societal benefits; thus, this lack of direct connection and communication between ASP and many of its users or potential users is of major concern. One notable effort by ASP to generate a communication template with potential partners consists of a single catalog (http://www.asd.ssc.nasa.gov/m2m/coin_chart.aspx; see also Appendix E), or “coin chart” of the enormous variety of NASA products. This chart is an attempt to consolidate all the Earth Observation sources, physical parameters measured, models and analysis systems, model outputs/predictions, and decision support tools in NASA’s portfolio for access by the community interested in using NASA data and research for DSS. The chart is underused and indicates continued need for a more inclusive and holistic ASP role within NASA and with the stakeholder community. The perspectives presented in the remainder of this chapter are considered in the context of this broader ASP role.

Benchmarking

ASP’s benchmarked products have resulted in important benefits and lessons learned. Although the products themselves had their genesis in other NASA programs, and the “success” of the products is not solely due to ASP’s involvement in the products’ extension to partners’ DSS, the benefits of these products include (1) improved recovery support from national disasters such as hurricanes and floods; (2) more timely detection of tropical storms resulting in much improved evacuation decisions; and (3) improvements in El Niño forecasting for the planning and protection of crops. Among the lessons learned are: (1) interaction and timely feedback from the customer is essential for project success; (2) success sometimes requires use of multiple sources of data and sensors; (3) advanced planning and agreement between NASA and partner agencies on required metrics is important; (4) community-accepted metrics are the most meaningful; and (5) real-time information is highly desired by users.

TABLE 2.1 Program-level Metrics and Purpose Statements Associated with the Metrics Planning and Reporting System (MPAR).

| No. | Metric | Definition and Implementation | Purpose Statement |
|---|---|---|---|
| 1 | Number of Distinct Users | Number of distinct individual users (based on nonduplicated internet protocol addresses) who request and/or receive products, services, and/or other information during the reporting period. | To measure the size of the project’s user community assessed in the context of its ESE role. |
| 2 | Characterization of Distinct Users Requesting Products and Information (by Internet domain) | Classes of users who obtain products and services from the project. The metric reveals the relative proportion of users accessing data and services from (1) first-tier domains: .com, .edu, .gov, .net, .mil, .org, foreign countries, and unresolved, and (2) second-tier domains, such as “nasa.gov” and “unm.edu.” | To measure the types of users served by the activity assessed in the context of its ESE role. |
| 3 | Number of Products Delivered to Users | Number of separately cataloged and ordered data or information products delivered to users during the reporting period (by project-defined product identification). A product may consist of a number of items or files that make up a single item in a product catalog or inventory. | To measure the data and information produced and distributed by the activity assessed in the context of its ESE role. The count of products delivered is a useful measure given the user-oriented definition of a product that is independent of how the product is constituted or how large it is. |
| 4 | Number of Distinct Product Types Produced and Maintained by Project | A product type is a collection of products of the same type such as sea surface temperature products. The project may add many or few product types through time but these should be tracked independent of the number of products delivered. | The count of product types produced is a useful measure because of the effort by the activity required to develop and support each of its product types. |
| 5 | Volume of Data Distributed | The volume of data and/or data products distributed to users during the reporting period (in gigabytes [GB] or terabytes [TB]). | Volume distributed is a useful measure but one that depends heavily on the particular types of data an activity produces and distributes and must be assessed in the context of the activity’s ESE role and data it works with. |
| 6 | Total Volume of Data Available for Research and Other Uses | Total cumulative volume of data and products held by the project and available to researchers and other users (GB or TB). This number can include data that is not online but is available through other means. | The cumulative volume available for users provides a measure of the total resource for users that the activity creates. |
| 7 | Delivery Time of Products to Users | Response time for filling user requests during the reporting period. Averaged and standard deviation summary times are collected for electronic and hard media transfers. | The delivery time for electronic or media transfers to users is a measure of the effectiveness of the activity’s service. |
| 8 | Support for the ESE Science Focus Areas (when applicable) | REASoN projects include a quantitative summary of data products supporting one or more of NASA’s science focus areas, and report any changes at the next monthly metrics submission. The focus areas are weather, climate change and variability, atmospheric composition, water and energy cycle, Earth surface and interior, and carbon cycle and ecosystems. | To enable the ESE program office to determine which ESE science goals are supported by the activity, and to assess how the data products provided by the activity relate to that support. |
| 9 | Support for the ESE Applications of National Importance (when applicable) | REASoN projects include a quantitative summary of the data products supporting one or more of NASA’s Applications of National Importance and report any changes at the next monthly metrics submission. | To enable the ESE program office to determine which ESE applications goals are supported by the activity, and to assess how the data products provided by the activity relate to that support. |
| 10 | Support for ESE Education Initiatives (when applicable) | In partnership with the study manager the REASoN projects submit data pertaining to the adoption and use of educational products by audience categories. These groups can include higher education, K-12, museums, and informal education. | To enable the ESE program office to assess support provided by the activity to ESE education initiatives, by indicating use by education user groups of the activity’s products and services. |

SOURCE: Rampriyan, 2006

Weaknesses of the benchmarking process are:

- inconsistency in the benchmarking process across applications;
- unclear demonstration that an ASP product improves the decision-making capability of a partner agency;
- lack of a standard reference point for benchmarking;
- inconsistency in the application of metrics; and
- stretching or broadening the meaning of benchmarking.

These weaknesses are related to the way ASP conducts its activities and communicates with partners, and to the nature of its organizational position within NASA.

- Inconsistency in the benchmarking process. NASA references two documents as guides for benchmarking and preparation of benchmark reports: Benchmarking Process Guide and a Proposed Guideline for Benchmarking Report Content. The guideline identifies six benchmarking activities:
  - planning and design;
  - description of methods and metrics used;
  - preparatory activities;
  - data collection/user feedback;
  - analysis of findings; and
  - lessons learned.

  A note to the guideline indicates that “quantitative assessments should be emphasized in the benchmarking phase to the greatest extent practicable” (NASA, 2007). A review of several ASP benchmark reports indicated considerable diversity in the approaches. The program element and project applications teams made up of NASA and partner agency personnel determined the form and structure of the benchmarking process and the content of the benchmark report. It is apparent that the benchmarking is driven more by the characteristics of specific applications and the tools and procedures of the partner agencies than by any standard format.

- Unclear demonstration of impact. Major parts of the benchmarking are the comparisons made by the applications teams of (1) the decision-support tools that use the Earth science observations and models; and (2) the partners’ existing tools and procedures. A desired outcome of ASP is for the partner agencies to adopt the use of NASA Earth science products. Thus, a second major activity of the benchmarking is that the applications teams develop proper documentation of procedures and guidelines to describe the steps to access and assimilate the Earth science observations and products. An issue of concern is how to demonstrate in a balanced manner that the ASP product improves the decision-making capability of the partner agency and results in benefits to society.

- Lack of standardization. While the intent of benchmarking is, among other things, to permit comparison of different products in terms of benefits, there are many cases where a standard reference point does not exist and the benchmarking team has to create its own standard. In addition, cases exist where the benchmarking simply takes the form of enhancements of a previous DSS.

- Inconsistent application of metrics. The metrics ASP uses for measuring the effectiveness of its products in improving user decision-making capability and assessing the ultimate benefits received are not always suited to the products’ goals or purposes. A major challenge in benchmarking is identifying metrics that have the necessary status to be recognizable standards or figures of merit for making the desired comparisons. For ASP these metrics are often only developed following the completion of a project and the ensuing effort to carry out the benchmarking. The application of metrics lacks consistency in terms of recognizable standards or reference points. The general absence of a more prescriptive and quantitative process for measuring product success compromises in-depth comparisons of product benefits. In the private sector the metrics for benchmarking products are usually clear. They include the quantification of such performance indicators as product quality, ease of application, ability to meet customer schedules, time from product development to application, customer response, and the ability to meet goals or product targets.

- Stretching or broadening the meaning of benchmarking. The meaning of benchmarking is stretched where suitable standards cannot be developed, for example when an application-specific measure is substituted for a comparative standard, or when improvements to a DSS are developed without any comparison being made.

The weaknesses of the benchmarking process and the lessons learned point to opportunities for improving this process. These include:

- Identification of principles applicable to all benchmarking efforts so that consistency and integration can be achieved. A desired outcome would be a concept that facilitates comparisons and the assessment of the relative value of products and services.

- Greater involvement of third-party users (the broader community). The ASP has the opportunity to greatly enhance the benefits of NASA applied science products and services to society. While the links between NASA and partner agencies are somewhat clear, the links between the partner agencies and the broader community and between ASP and the broader community are insufficiently informed. The result is a major barrier in assessing the real and long-term benefits to society.

- Limiting benchmarking to meaningful standards and metrics. Some of the benchmarks have to be stretched to meet the intent of a national or international benchmark. Benchmarking products for DSS ought to be based on distinguishable reference points or standards.

- A comprehensive review of benchmarks and benchmark reports to develop an improved set of metrics. Little evidence exists of attempting to refine the benchmarking process across all program elements. The current state of benchmarking is very much an ad hoc process. The database that now exists in the form of many benchmark reports appears to be adequate for developing a systematic basis for streamlining the benchmarking process and developing a set of metrics more suitable to quantification.

ASP benchmarking fails to take account of the end use. It is assumed that ASP intends its benchmarking to account for this. An alternative is that ASP intends to leave that aspect to the agency partner and merely wishes to ensure that ASP is doing its part in the partnership. This may be a subtle distinction, but it reflects the difference between criticizing ASP for not fulfilling its mission and then criticizing its mission for being too constrained by the boundaries placed around it by others. An understanding of partner requirements is needed in order to allow benchmarking to function effectively and take into account the “end uses” of NASA products. Some changes to the documentation and reporting requirements of the partners and the greater use of external review groups would aid in making the needs of end users more transparent to ASP. These issues are addressed in more detail in Chapter 4.

Applications of National Priority

Agency personnel and others expressed interest and concern about the 12 Applications of National Priority. Their concerns were grouped as follows: (1) whether ASP is the appropriate NASA lead in certain application areas; (2) the extent to which connections to user agencies have developed for key national applications; and (3) the mismatch between the number of applications and ASP staffing levels.

-

ASP as appropriate NASA lead. In some applications areas other agencies are providing significantly more funding than is ASP. For example, ASP is not the only agency engaged in carbon management; ASP spending in the Carbon Management application is less than that of the Department of Energy’s Terrestrial Carbon Program and the National Science Foundation’s special carbon cycle solicitations. The Department of Energy also has interests in energy management. Careful evaluation of ASP’s involvement in various applications where other agencies have strong and well-funded programs may assist ASP in setting its priorities in balance with its resources and in being most effective in partnerships with federal agencies and the broader community. The idea is not to infer an exclusive correlation between level of funding and benefits achieved, but rather to determine where ASP can be most effective in taking leading roles and establishing partnerships.

- Extent of connections developed to partner agencies for key applications. The Homeland Security, Disaster Management, Energy Management, and Carbon Management application areas each have natural partnering agencies with whom to establish dialogue and projects. For example, the Department of Homeland Security (DHS) recognizes that remote sensing products are critical to homeland security, yet little interaction with NASA regarding applied research has been initiated (Barnard, 2006). In addition, the Federal Emergency Management Agency (FEMA), the DHS component responsible for national emergency preparedness and response, has had little to do with ASP’s Disaster Management application area. The Minerals Management Service, a potential partner with Energy Management, noted that improved remote sensing products are needed immediately to support management of oil, gas, sand, gravel, and other extractive activities in the Gulf of Mexico, but no discussions have been opened with ASP (Lugo-Fernandez, 2006). The weaknesses in some of these potential partnerships are not solely a function of ASP’s actions or organization but result from organizational, managerial, and resource issues at partnering agencies as well. Some of these relationships are discussed in more detail in Chapter 3.

- Staffing levels. Concern was expressed that the 12 application areas cannot be appropriately administered by the small ASP staff. It is difficult for the seven managers to be knowledgeable about the information content in all 12 application areas, in which the managers are required both to establish relationships with new partners and to maintain existing relationships with established partners.

Given ASP’s ongoing examination of its application areas, an opportunity exists for adaptation based on the committee’s findings and other feedback. Dialogue with partner agencies in NASA and with the broader user community will undoubtedly be central to informing ASP’s decisions. With respect to the staffing issue in particular, one possible approach could incorporate open solicitations and peer review of some application areas to be led and conducted by scientists at a NASA center (e.g., Ames, Marshall, Goddard, Stennis) while still being coordinated by an ASP program manager. There is considerable expertise at the NASA centers, and each has special areas of applications expertise.

Solicitations

Solicitation issues fall into two categories: the focus of some solicitations and the way solicitations are managed.

- Solicitation focus. The aim of focused solicitations targeting remote-sensing systems is to stimulate demand for products that require data from those systems. Thus, NASA could build a system for which there is little demand, and ASP could then be directed to initiate a solicitation to generate interest in the data stream to support the sensor’s existence. An example of such an open solicitation is the one that encouraged those submitting proposals to incorporate little-used Gravity Recovery and Climate Experiment (GRACE) data instead of MODIS data. Approximately 80 percent of the proposals ignored this emphasis and focused instead on the familiar MODIS data (Birk, 2006). Uncertainty about data continuity is likely a significant factor in discouraging users from proposing to work with data from some sensors. For example, NASA’s highest spatial resolution remote-sensing system, the Landsat-7 Enhanced Thematic Mapper Plus (L7/ETM+), which has significantly exceeded its predicted lifetime, has no approved follow-on programs that will provide data continuity. Users are understandably reluctant to propose applications based on these data streams.

- Solicitation management. The largest drawbacks evident to the committee in ASP’s management of the solicitation process were complications created by regular, significant, and sometimes hard-to-find changes in the program documentation, which have made the proposal process difficult or discouraging for potential partners. In addition, the expectation that independent (nonfederal) applicants establish federal partnerships in advance of some proposal submittal deadlines created additional, unrealistic tasks for the applicants and potentially discouraged viable and interesting proposals from being submitted to the applicant pool. A constructive step in the direction of better management of the solicitation process was implemented in the recent solicitation announcement for ROSES 2007 (see also Box 2.2). For this solicitation NASA offered a conference call to explain the ROSES 2007 announcement to all interested parties, and provided Internet presentations on the process for those who wanted further information.

These challenges point to opportunities for improving ASP’s approach to solicitations. Improvements in the solicitation process could encourage submission of more high-quality and innovative project applications, thereby improving the opportunity for greater success in fulfilling the application of NASA data to a variety of projects with societal benefits. ASP could streamline the solicitation process somewhat, for example, by facilitating communications between independent (nonfederal) applicants and federal partners. Other improvements to the focus of some of the solicitations require NASA, outside of ASP, to be active in engaging ASP early in the mission development process. Similarly, NASA could rely on ASP to extract information and communicate with the user community to obtain their input as to user needs for data and research. ASP could then be in a position to generate stronger proposal solicitation programs that represented data streams that are both available and desirable.

Metrics Planning and Reporting System

NASA’s Metrics Planning and Reporting System (MPAR) would be a good tool for establishing a system of accountability and measurement of project progress and success. However, MPAR appears to collect metrics as if most of the users were NASA Distributed Active Archive Centers (DAACs) rather than NASA-sponsored application projects. DAAC metrics include information on the number of hits on a website and the volume of data distributed; these types of metrics are not entirely suitable to gauge the progress of projects coordinated by agencies, institutes, or groups that do not serve an archiving function. Other valuable applied remote sensing metrics include publication of results in refereed journals, master’s and doctoral dissertations completed as a result of the applied research, presentation of applied results at meetings of learned societies or in public forums, and documentation of the use of NASA-derived data or models in the user’s DSS. Fortunately, recent improvements in MPAR allow the incorporation of impact metrics. The Decadal Study (NRC, 2007a, p. 146) also commented on this topic:

Systems of program review and evaluation will need to be revamped to make our vision of concurrently delivering societal benefits and scientific discovery come into being. Numbers of published papers, or scientific citation indices, or even professional acclamation from scientific peers will not be enough… The degree to which human welfare has been improved, the enhancement of public understanding of and appreciation for human interaction with and impacts on Earth processes, and the effectiveness of protecting property and saving lives will additionally become important criteria for a successful Earth science and observations program.

The private sector (see also Chapter 4) has generated successful commercial applications employing NASA data and research. A mechanism to engage the private sector and include appropriate metrics would give ASP a new and potentially beneficial way to perform its bridging role. Chapter 5 builds on this discussion of metrics and proposes solutions.

Budgeting and Accounting

The committee commented on two aspects of ASP’s budgeting and accounting: (1) the negative impact of earmarks on ASP’s ability to manage its portfolio, and (2) challenges created by research grants having to cover costs of obtaining commercial imagery. The topics of budgeting and accounting differ from the others in this section because they are externally driven. Furthermore, the committee was not tasked to make recommendations on such matters. Nonetheless, these topics provide insights into some of the constraints under which ASP managers operate and both NASA and Congress might consider their impact on the program.

- Earmarks. In addition to raising issues of lack of peer review and occasionally being unfunded, earmarks present a management challenge to ASP staff. Earmarks often emerge late in the fiscal year, with the requirement that staff allocate part of their budget to the earmarked projects.

- Commercial imagery. Covering commercial imagery costs in research grants is currently a required part of research proposals. This procedure has resulted in elevated project costs that decrease the practicality of conducting, for example, any type of research on changes in the natural environment. This type of research often incorporates frequent snapshots over extended periods of time over large geographic areas and the costs of such coverage using commercial imagery may be prohibitive.

Developing partnerships among the government, private industry, and the academic community to facilitate feedback between research and operational processes of the nation will require investment of ASP resources. Because ASP does not completely control its own budget, the program may experience difficulties in enacting strong strategic changes to its operations, and in supporting its generally rigorous open competition and peer review.

SUMMARY

- Since 2001 ASP’s approach to reaching users has been primarily through other federal agencies. Despite practical advantages, this approach has restricted ASP’s relationship with end users, resulting in less inclusion of user needs in mission planning.

- The program is organized into 12 Applications of National Priority. The committee heard opposing arguments in support of either more or fewer application areas. The need for greater depth in key partnerships was also emphasized. ASP’s openness toward adapting this applications list and the nature of its partnerships in some areas is well timed.

- The program is underpinned by a systems engineering process that provides a framework to transition NASA products into decision-support systems operated by other agencies. Benchmarking is a central activity in this process, but it has yet to arrive at a level of consistency and clarity to serve the needs of the program.

- ASP supports research through three mechanisms: open solicitations, which are peer reviewed; rapid prototyping activities, which are not peer reviewed but allow greater responsiveness to new opportunities; and earmarks, which may or may not be peer reviewed and add to the challenges of developing a focused program. From FY 2005 to FY 2006, funded earmarks accounted for roughly one-third of the total ASP budget. Fewer than half of all ASP-supported projects from FY 2002 to FY 2006 resulted from an open solicitation. Despite ASP’s attempt to steer proposals to certain data streams, submissions tend to gravitate to familiar sensors. Uncertainty over the continuity of remote sensing data beyond the NASA research and development phase is a perennial, unsolved challenge faced by ASP. The absence of effective user feedback in the ISSA