Summary

INTRODUCTION AND OVERVIEW

Clinical effectiveness research (CER) serves as the bridge between the development of innovative treatments and therapies and their productive application to improve human health. Building on efficacy and safety determinations necessary for regulatory approval, the results of these investigations guide the delivery of appropriate care to individual patients. As the complexity, number, and diversity of treatment options grow, the provision of clinical effectiveness information is increasingly essential for a safe and efficient healthcare system. Currently, the rapid expansion in scientific knowledge is inefficiently translated from scientific lab to clinical practice (Balas and Boren, 2000; McGlynn, 2003). Limited resources play a part in this problem. Of our nation’s more than $2 trillion investment in health care, an estimated less than 0.1 percent is devoted to evaluating the relative effectiveness of the various diagnostics, procedures, devices, pharmaceuticals, and other interventions in clinical practice (AcademyHealth, 2005; Moses et al., 2005).

The problem is not merely a question of resources but also of the way they are used. With the information and practice demands at hand, and new tools in the works, a more practical and reliable clinical effectiveness research paradigm is needed. Information relevant to guiding decision making in clinical practice requires the assessment of a broad range of research questions (e.g., how, when, for whom, and in what settings are treatments best used?), yet the current research paradigm, based on a hierarchical arrangement of study designs, assigns greater weight or strength to evidence produced from methods higher in the hierarchy, without necessarily considering the appropriateness of the design for the particular question under investigation. For example, the randomized controlled trial (RCT) has long been considered the gold standard in clinical research, but the advantages of its strong internal validity are often muted by constraints in time, cost, and limited external validity or applicability of results. And, although the scientific value of well-designed clinical trials has been demonstrated, for certain research questions this approach is not feasible, ethical, or practical and may not yield the answer needed. Similarly, issues of bias and confounding inherent to observational, simulation, and quasi-experimental approaches may limit their use and enhancement, even for situations and circumstances requiring a greater emphasis on external validity.

Especially given the growing capacity of information technology to capture, store, and use vastly larger amounts of clinically rich data and the importance of improved understanding of an intervention’s effect in real-world practice, the advantages of identifying and advancing methods and strategies that draw research closer to practice become even clearer.

Against the backdrop of the growing scope and scale of evidence needs, limits of current approaches, and potential of emerging data resources, the Institute of Medicine (IOM) Roundtable on Evidence-Based Medicine, now the Roundtable on Value & Science-Driven Health Care, convened the workshop Redesigning the Clinical Effectiveness Research Paradigm: Innovation and Practice-Based Approaches. The issues motivating the meeting’s discussions are noted in Box S-1, the first of which is the need for a deeper and broader evidence base for improved clinical decision making. Also important is the need to improve the efficiency and applicability of the process. Underscoring the timeliness of the discussion is recognition of the challenges presented by the expense, time, and limited generalizability of current approaches, as well as of the opportunities presented by innovative research approaches and broader use of electronic health records that make clinical data more accessible. The overall goal of the meeting was to explore these issues, identify potential approaches, and discuss possible strategies for their engagement.

Participants examined ways to expedite the development of clinical effectiveness information, highlighting the opportunities presented by innovative study designs and new methods of analysis and modeling; the size and expansion of potentially interoperable administrative and clinical datasets; and emerging research networks and data resources. The presentations and discussion emphasized approaches to research and learning that had the potential to supplement, complement, or supersede RCT findings and suggested opportunities to engage these tools and methods as a new generation of studies that better address current challenges in clinical effectiveness research. Consideration also was given to the policies and infrastructure needed to take greater advantage of existing research capacity.

BOX S-1 Issues Motivating the Discussion

Current Research Context

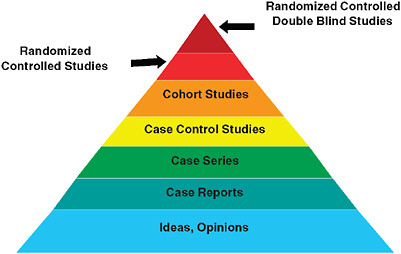

Starting points for the workshop’s discussion reside in the presentation of what has come to be viewed as the traditional clinical research model, depicted as a pyramid in Figure S-1. In this model, the strongest level of evidence is displayed at the peak of the pyramid: the randomized controlled double blind study. This is often referred to as the “gold standard” of clinical research, and is followed, in a descending sequence of strength or quality, by randomized controlled studies, cohort studies, case control studies, case series, and case reports. The base of the pyramid, the weakest evidence, is reserved for undocumented experience, ideas, and opinions. A brief overview of the range of clinical effectiveness research methods is presented in Table S-1. Approaches are categorized into two groups: experimental and nonexperimental. Experimental studies are those in which the choice and assignment of the intervention is under control of the investigator; the results of a test intervention are compared to the results of an alternative approach by actively monitoring the respective experiences of either individuals or groups receiving or not receiving the intervention. Nonexperimental studies are those in which manipulation or randomiza-

FIGURE S-1 The classic evidence hierarchy.

SOURCE: DeVoto, E., and B. S. Kramer. 2005. Evidence-Based Approach to Oncology. In Oncology an Evidence-Based Approach. Edited by A. Chang. New York: Springer. Modified and reprinted with permission of Springer SBM.

tion is generally absent, the choice of an intervention is made in the course of clinical care, and existing data collected in the course of the care process are used to draw conclusions about the relative impact of different circumstances or interventions that vary between and among identified groups, or to construct mathematical models that seek to predict the likelihood of events in the future based on variables identified in previous studies.

Noted at the workshop was the fact that, as currently practiced, the randomized controlled and blinded trial is not the gold standard for every circumstance. While not an exhaustive catalog of methods, Table S-1 provides a sense of the range of clinical research approaches that can be used to improve understanding of clinical effectiveness. Each method has the potential to advance understanding of the various aspects of the spectrum of questions that emerge throughout a product’s or intervention’s lifecycle in clinical practice. The issue is therefore not whether internal or external validity should be the overarching priority for research, but rather which approach is most appropriate to the particular need. In each case, careful attention to the design and execution of studies is vital.

Recent methods development, along with the identification of problems in generalizing research results to broader populations than those enrolled in tightly controlled trials, as well as the impressive advances in the potential availability of data through expanded use of electronic health records, have all prompted re-consideration of research strategies and opportunities (Kravitz, 2004; Liang, 2005; Rush, 2008; Schneeweiss, 2004; Agency for Healthcare Research and Quality [AHRQ] CER methods and registry issues).

TABLE S-1 Selected Examples of Clinical Research Study Designs for Clinical Effectiveness Research

| Approach | Description | Data types | Randomization |
| --- | --- | --- | --- |
| Randomized Controlled Trial (RCT) | Experimental design in which patients are randomly allocated to intervention groups (randomized) and analysis estimates the size of difference in predefined outcomes, under ideal treatment conditions, between intervention groups. RCTs are characterized by a focus on efficacy, internal validity, maximal compliance with the assigned regimen, and, typically, complete follow-up. When feasible and appropriate, trials are “double blind” (i.e., patients and trialists are unaware of treatment assignment throughout the study). | Primary, may include secondary | Required |
| Pragmatic Clinical Trial (PCT) | Experimental design that is a subset of RCTs because certain criteria are relaxed with the goal of improving the applicability of results for clinical or coverage decision making by accounting for broader patient populations or conditions of real-world clinical practice. For example, PCTs often have fewer patient inclusion/exclusion criteria, and longer term, patient-centered outcome measures. | Primary, may include secondary | Required |
| Delayed (or Single-Crossover) Design Trial | Experimental design in which a subset of study participants is randomized to receive the intervention at the start of the study and the remaining participants are randomized to receive the intervention after a pre-specified amount of time. By the conclusion of the trial, all participants receive the intervention. This design can be applied to conventional RCTs, cluster randomized, and pragmatic designs. | Primary, may include secondary | Required |
| Adaptive Design | Experimental design in which the treatment allocation ratio of an RCT is altered based on collected data. Bayesian or Frequentist analyses are based on the accumulated treatment responses of prior participants and used to inform adaptive designs by assessing the probability or frequency, respectively, with which an event of interest occurs (e.g., positive response to a particular treatment). | Primary, some secondary | Required |
| Cluster Randomized Controlled Trial | Experimental design in which groups (e.g., individuals or patients from entire clinics, schools, or communities), instead of individuals, are randomized to a particular treatment or study arm. This design is useful for a wide array of effectiveness topics but may be required in situations in which individual randomization is not feasible. | Often secondary | Required |
| N of 1 trial | Experimental design in which an individual is repeatedly switched between two regimens. The sequence of treatment periods is typically determined randomly and there is formal assessment of treatment response. These are often done under double blind conditions and are used to determine if a particular regimen is superior for that individual. N of 1 trials of different individuals can be combined to estimate broader effectiveness of the intervention. | Primary | Required |
| Interrupted Time Series | Study design used to determine how a specific event affects outcomes of interest in a study population. This design can be experimental or nonexperimental depending on whether the event was planned or not. Outcomes occurring during multiple periods before the event are compared to those occurring during multiple periods following the event. | Primary or secondary | Approach dependent |
| Cohort Registry Study | Non-experimental approach in which data are prospectively collected on individuals and analyzed to identify trends within a population of interest. This approach is useful when randomization is infeasible, for example, if the disease is rare, or when researchers would like to observe the natural history of a disease or real-world practice patterns. | Primary | No |
| Ecological Study | Non-experimental design in which the unit of observation is the population or community and that looks for associations between disease occurrence and exposure to known or suspected causes. Disease rates and exposures are measured in each of a series of populations and their relation is examined. | Primary or secondary | No |
| Natural Experiment | Non-experimental design that examines a naturally occurring difference between two or more populations of interest (i.e., instances in which the research design does not affect how patients are treated). Analyses may be retrospective (retrospective data analysis) or conducted on prospectively collected data. This approach is useful when RCTs are infeasible because of ethical concerns or costs, or when the length of a trial would lead to results that are not informative. | Primary or secondary | No |
| Simulation and Modeling | Non-experimental approach that uses existing data to predict the likelihood of outcome events in a specific group of individuals or over a longer time horizon than was observed in prior studies. | Secondary | No |
| Meta Analysis | The combination of data collected in multiple, independent research studies (that meet certain criteria) to determine the overall intervention effect. Meta analyses are useful to provide a quantitative estimate of overall effect size, and to assess the consistency of effect across the separate studies. Because this method relies on previous research, it is only useful if a broad set of studies is available. | Secondary | No |

SOURCE: Adapted, with the assistance of Danielle Whicher of the Center for Medical Technology Policy and Richard Platt from Harvard Pilgrim Healthcare, from a white paper developed by Tunis, S. R., Strategies to Improve Comparative Effectiveness Research Methods and Data Infrastructure, for June 2009 Brookings workshop, Implementing Comparative Effectiveness Research: Priorities, Methods, and Impact.

This emerging understanding about limitations in the current approach, with respect to both current and future needs and opportunities, sets the stage for the workshop’s discussions.
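The meta-analysis entry in Table S-1 describes pooling results from independent studies to estimate an overall effect and to assess consistency across studies. A minimal sketch of one common way to do this, fixed-effect inverse-variance pooling with Cochran's Q as the consistency statistic, is shown below. The source does not specify a pooling method, so this choice is an assumption; the function name `fixed_effect_meta_analysis` and the input values are hypothetical and are not drawn from any study discussed at the workshop.

```python
import math


def fixed_effect_meta_analysis(effects, std_errors):
    """Pool per-study effect estimates using inverse-variance weights.

    effects:    per-study effect estimates (e.g., log odds ratios)
    std_errors: the standard error of each estimate
    Returns (pooled_effect, pooled_standard_error, cochran_q).
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need one standard error per effect estimate")

    # More precise studies (smaller standard error) receive more weight.
    weights = [1.0 / se ** 2 for se in std_errors]
    total_weight = sum(weights)

    pooled = sum(w * e for w, e in zip(weights, effects)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)

    # Cochran's Q: weighted squared deviations of each study from the
    # pooled estimate; larger values indicate less consistent studies.
    q = sum(w * (e - pooled) ** 2 for w, e in zip(weights, effects))
    return pooled, pooled_se, q


if __name__ == "__main__":
    # Hypothetical log odds ratios and standard errors for three studies.
    effects = [-0.30, -0.10, -0.25]
    std_errors = [0.10, 0.20, 0.15]
    pooled, pooled_se, q = fixed_effect_meta_analysis(effects, std_errors)
    low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
    print(f"pooled effect: {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
    print(f"Cochran's Q: {q:.3f} on {len(effects) - 1} degrees of freedom")
```

A Q value that is large relative to its degrees of freedom (the number of studies minus one) suggests that the studies disagree more than chance alone would explain; in that situation a random-effects model may be more appropriate than the fixed-effect pooling sketched here.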

Clinical Effectiveness Research and the IOM Roundtable

Formed in 2006 as the Roundtable on Evidence-Based Medicine, the Roundtable on Value & Science-Driven Health Care brings together key stakeholders from multiple sectors (patients, health providers, payers, employers, health product developers, policy makers, and researchers) for cooperative consideration of the ways that evidence can be better developed and applied to drive improvements in the effectiveness and efficiency of U.S. medical care. Roundtable participants have set the goal that “by the year 2020, 90 percent of clinical decisions will be supported by accurate, timely, and up-to-date clinical information, and will reflect the best available evidence.” To achieve this goal, Roundtable members and their colleagues identify issues and priorities for cooperative stakeholder engagements. Central to these efforts is the Learning Healthcare System series of workshops and publications. The series collectively characterizes the key elements of a healthcare system that is designed to generate and apply the best evidence for healthcare choices of patients and providers. A related purpose of these meetings is the identification and engagement of barriers to the development of the learning healthcare system and the key opportunities for progress. Each meeting is summarized in a publication available through The National Academies Press. Workshops in this series include

- The Learning Healthcare System (July 20–21, 2006)
- Judging the Evidence: Standards for Determining Clinical Effectiveness (February 5, 2007)
- Leadership Commitments to Improve Value in Healthcare: Toward Common Ground (July 23–24, 2007)
- Redesigning the Clinical Effectiveness Research Paradigm: Innovation and Practice-Based Approaches (December 12–13, 2007)
- Clinical Data as the Basic Staple of Health Learning: Creating and Protecting a Public Good (February 28–29, 2008)
- Engineering a Learning Healthcare System: A Look to the Future (April 28–29, 2008)
- Learning What Works: Infrastructure Required for Learning Which Care Is Best (July 30–31, 2008)
- Value in Health Care: Accounting for Cost, Quality, Safety, Outcomes and Innovation (November 17–18, 2008)

This publication summarizes the proceedings of the fourth workshop in the Learning Healthcare System series, focused on improving approaches to clinical effectiveness research.

The Roundtable’s work is predicated on the principle that “to the greatest extent possible, the decisions that shape the health and health care of Americans—by patients, providers, payers, and policy makers alike—will be grounded on a reliable evidence base, will account appropriately for individual variation in patient needs, and will support the generation of new insights on clinical effectiveness.” Well-conducted clinical trials have and will continue to contribute to this evidence base. However, the need for research insights is pressing, and as data are increasingly captured at the point of care and larger stores of data are made available for research, exploration is urgently needed on how to best use these data to ensure care is tailored to circumstance and individual variation.

The workshop’s intent was to provide an overview of some of the most promising innovations and approaches to clinical effectiveness research. Opportunities to streamline clinical trials, improve their practical application, and reduce costs were reviewed; however, particular emphasis was placed on reviewing methods that improve our capacity to draw upon data collected at the point of care. Rather than providing a comprehensive review of methods, the discussion in the chapters that follow uses examples to highlight emerging opportunities for improving our capacity to determine what works best for whom.

A synopsis of key points from each session is included in this chapter; more detailed information on session presentations and discussions can be found in the chapters that follow. Day one of the workshop identified key lessons learned from experience (Chapter 2) and important opportunities presented by new tools and techniques (Chapter 3) and emerging data resources (Chapter 4). Discussion and presentations during day two focused on strategies to better plan, develop, and sequence the studies needed (Chapter 5) and concluded with presentations on opportunities to better align policy with research opportunities and a panel discussion on organizing the research community for change (Chapter 6). Keynote presentations provided overviews of the evolution and opportunities for clinical effectiveness research and provided important context for workshop discussions. These presentations and a synopsis of the workshop discussion are included in Chapter 1. The workshop agenda, biographical sketches of the speakers, and a list of workshop participants can be found in Appendixes A, B, and C, respectively.

COMMON THEMES

The Redesigning the Clinical Effectiveness Research Paradigm workshop featured speakers from a wide range of perspectives and sectors in health care. Although many points of view were represented, certain themes emerged from the 2 days of discussion, as summarized below and in Box S-2:

- Address current limitations in applicability of research results. Because clinical conditions and their interventions have complex and varying circumstances, there are different implications for the evidence needed, study designs, and the ways lessons are applied: the internal and external validity challenge. In particular, given our aging population, people often have multiple conditions (co-morbidities), yet study designs generally focus on people with just one condition, limiting their applicability. In addition, although our assessment of candidate interventions is primarily through premarket studies, the opportunity for discovery extends throughout the lifecycle of an intervention: development, approval, coverage, and the full period of implementation.

- Counter inefficiencies in timeliness, costs, and volume. Much of current clinical effectiveness research has inherent limits and inefficiencies related to time, cost, and volume. Small studies may have insufficient reliability or follow-up. Large experimental studies may be expensive and lengthy but have limited applicability to practice circumstances. Studies sponsored by product manufacturers have to overcome perceived conflicts and may not be fully used. Each incremental unit of research time and money may bring greater confidence but also carries greater opportunity costs. There is a strong need for more systematic approaches to better defining how, when, for whom, and in what setting an intervention is best used.

- Define a more strategic use of the clinical experimental model. Just as there are limits and challenges to observational data, there are limits to the use of experimental data. Challenges related to the scope of possible inferences, to discrepancies in the ability to detect near-term versus long-term events, to the timeliness of our insights and our ability to keep pace with changes in technology and procedures, all must be managed. Part of the strategy challenge is choosing the right tool at the right time. For the future of clinical effectiveness research, the important issues relate not to whether randomized experimental studies are better than observational studies, or vice versa, but to what’s right for the circumstances (clinical and economic) and how the capacity can be systematically improved.

- Provide stimulus to new research designs, tools, and analytics. An exciting part of the advancement process has been the development of new tools and resources that may quicken the pace of our learning and add real value by helping to better target, tailor, and refine approaches. Use of innovative research designs, statistical techniques, probability, and other models may accelerate the timeliness and level of research insights. Some interesting approaches using modeling for virtual intervention studies may hold prospects for revolutionary change in certain clinical outcomes research.

- Encourage innovation in clinical effectiveness research conduct. The kinds of “safe harbor” opportunities that exist in various fields for developing and testing innovative methodologies for addressing complex problems are rarely found in clinical research. Initiative is needed for the research community to challenge and assess its approaches (a sort of meta-experimental strategy), including those related to analyzing large datasets, in order to learn about the purposes best served by different approaches. Innovation is also needed to counter the inefficiencies related to the volume of studies conducted. How might existing research be more systematically summarized or different research methods be organized, phased, or coordinated to add incremental value to existing evidence?

- Promote the notion of effectiveness research as a routine part of practice. Taking full advantage of each clinical experience is the theoretical goal of a learning healthcare system. But for the theory to move closer to the practice, tools and incentives are needed for caregiver engagement. A starting point is with the anchoring of the focus of clinical effectiveness research planning and priority setting on the point of service (the patient–provider interface) as the source of attention, guidance, and involvement on the key questions to engage. The work with patient registries by many specialty groups is an indication of the promise in this respect, but additional emphasis is necessary in anticipation of the access and use of the technology that opens new possibilities.

- Improve access and use of clinical data as a knowledge resource. With the development of bigger and more numerous clinical data sets, the potential exists for larger scale data mining for new insights on the effectiveness of interventions. Taking advantage of the prospects will require improvements in data sharing arrangements and platform compatibilities, addressing issues related to real and perceived barriers from interpretation of privacy and patient protection rules, enhanced access for secondary analysis to federally sponsored clinical data (e.g., Medicare Part D, pharmaceutical, clinical trials), the necessary expertise, and stronger capacity to use clinical data for postmarket surveillance.

- Foster the transformational research potential of information technology. Broad application and linkage of electronic health records hold the potential to foster movement toward real-time clinical effectiveness research that can generate vastly enhanced insights into the performance of interventions, caregivers, institutions, and systems, and how they vary by patient needs and circumstances. Capturing that potential requires working to better understand and foster the progress possible, through full application of electronic health records, developing and applying standards that facilitate interoperability, agreeing on and adhering to research data collection standards by researchers, developing new search strategies for data mining, and investing patients and caregivers as key supporters in learning.

- Engage patients as full partners in the learning culture. With the impact of the information age growing daily, access to up-to-date information by both caregiver and patient changes the state of play in several ways. The patient sometimes has greater time and motivation to access relevant information than the caregiver, and a sharing partnership is to the advantage of both. Taking full advantage of clinical records, even with blinded information, requires a strong level of understanding and support for the work and its importance to improving the quality of health care. This support may be the most important element in the development of the learning enterprise. In addition, the more patients understand and communicate with their caregivers about the evolving nature of evidence, the less disruptive will be the frequency and amplitude of public response to research results that find themselves prematurely, or without appropriate interpretative guidance, in the headlines and the short-term consciousness of Americans.

- Build toward continuous learning in all aspects of care. This foundational principle of a learning healthcare system will depend on system and culture change in each element of the care process with the potential to promote interest, activity, and involvement in the knowledge and evidence development process, from health professions education to care delivery and payment.

BOX S-2 Redesigning the Clinical Effectiveness Research Paradigm

INCREASING KNOWLEDGE FROM PRACTICE-BASED RESEARCH

Of particular prominence throughout the workshop discussion was the notion of closing the gap between research and practice. Participants emphasized the challenges of ensuring that research is structured to provide information relevant to real-world decisions faced by patients, providers, and policy makers; ensuring the rigor of the research design and execution; and monitoring the safety and effectiveness of new products, with more attention to point-of-care data.

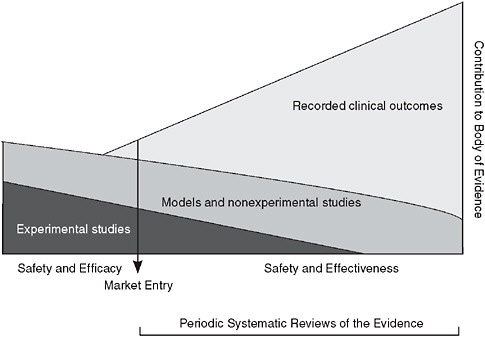

The multifaceted, practice-oriented approach to clinical effectiveness research discussed at the workshop complements and blends with traditional trial-oriented clinical research and may be represented as a continuum in which evidence is continuously produced by a blend of experimental studies with patient assignment (clinical trials); modeling, statistical, and observational studies without patient assignment; and monitored clinical experience (Figure S-2). The ratio of the different approaches varies with the nature of the intervention, as does the weight given to available studies. This enhanced flexibility and range of research resources is facilitated by the development of innovative design and analytic tools, and by the growing potential of electronic health records to allow much broader and structured access to the results of the clinical experience. The ability to draw on real-time clinical insights will naturally improve over time.

FIGURE S-2 Evidence development in the learning healthcare system.

PRESENTATION AND DISCUSSION SUMMARIES

The workshop presentations and discussions by experts from many areas of health care detailed the current state of clinical effectiveness research, provided examples of promising approaches, and proposed some key challenges and opportunities for improvement. Keynote addresses opened the 2 days of the workshop, previewing and underscoring the conceptual background, issues, and themes. IOM President Harvey V. Fineberg reviewed the evolution of clinical effectiveness research, and Carolyn M. Clancy offered meeting participants a vision for research that is better matched to evidence needs. Workshop discussions and presentations are briefly summarized; expanded discussions are included in the chapters that follow.

Clinical Effectiveness Research: Past, Present, and Future

In his keynote presentation, Fineberg briefly traced the evolution of clinical effectiveness research. From early efforts such as James Lind’s evaluation of treatments for scurvy, to 20th-century developments in statistics that strengthened scientific studies, to the establishment in the early 1970s of RCTs as the standard used by the Food and Drug Administration (FDA) in making judgments about efficacy, clinical effectiveness research has developed rapidly and has helped to transform medical care. However, Fineberg suggested that the resulting research paradigm, with randomized controlled double blind trials at the pinnacle, has often left important evidence needs unmet when combined with the costs, complexity, and lack of generalizability of RCTs. These gaps in evidence, he noted, prompt a reevaluation of the current application and require movement toward a strategy that takes better advantage of the range of methodologies to develop evidence that meets the particular need.

Important to the redesign of the research paradigm is the consideration of a set of prior questions that could better shape research design and conduct. For example, understanding the purpose of and vantage point from which these clinical questions are being asked helps to put into perspective the roles and contributions of the study designs that might be employed. Thinking critically about what is being evaluated and mapping what is appropriate, effective, and efficient for the various types of questions is an ongoing and important challenge in clinical effectiveness research. These efforts will also combat the central paradox in health care today: Despite the overwhelming abundance of information available, there is an acute shortage of information that is relevant, timely, appropriate, and useful to clinical decision making.

The central question for clinical effectiveness research is what works best in clinical care for the individual patient at the time care is needed. Answering this type of question will require the transformation of current approaches to a system that combines point-of-care focus with an electronic health record (EHR) data system coupled to systems for assembling evidence in a variety of ways. Such a system would combine several components: evaluation of learning what works and what does not work in a patient, weighing benefits and costs; decision support for the patient that would compensate for the overwhelming amount of evidence and make relevant determinations from the information available while increasing the pool of potentially useful information; meaningful continuing education for health professionals (real time, in time, practical, and applied) that moves beyond the traditional lecture to learning in place, in real time, in the course of clinical care; and quality improvement systems that synthesize information from the three components above: evaluation, decision support, and meaningful continuing education.

Fineberg proposed a meta-experimental strategy, in which researchers not only focus on how well a certain method evaluates a particular kind of problem, in a specific class of patient, from a particular point of view, but also on determining the array of experimental methods that collectively perform in a manner that enables us to make better decisions for the individual and for society. With this approach, future learning opportunities can be structured to provide insights on what works for a particular kind of patient as well as how that strategy of evaluation can be employed to achieve a health system driven by evidence and based on value.

The Path to Research That Meets Evidence Needs

In the second day’s keynote address, Carolyn M. Clancy, director of the Agency for Healthcare Research and Quality (AHRQ), shared perspectives in two broad areas: emerging methods that might be applied to meet current challenges in clinical effectiveness research and approaches to turning evidence into action. Stressing the importance of not producing better evidence for its own sake, Clancy challenged researchers to focus on the goal of achieving an information-rich, patient-focused system. Building toward a healthcare system in which actionable information is made available to clinicians and patients and in which evidence is continually refined as a byproduct of healthcare delivery will require a broadening of the investigative approaches and methodologies that constitute the research arsenal.

Clancy noted that the traditional evidence hierarchy is being increasingly challenged, in part because it is inadequate to meet the current decision-making needs in health care, prompting calls for a rigorous reassessment of the appropriate roles for randomized and nonrandomized evidence. Recognizing that an intervention can work is a necessary, but not sufficient, requirement for making a treatment decision for an individual patient or for promoting it for a broad population. Even the most rigorously designed randomized trials have limitations, and research methods are needed to explore critical questions related to important trade-offs between risks and benefits of treatments for individual patients.

Although some circumstances may always require randomized trials, nonrandomized studies can complement and extend insights from RCTs in various ways, for example, by tracking longer term outcomes, monitoring replicability in broader populations and community settings, and expanding information on potential benefits and harms of a given intervention. From a practical perspective, these approaches can help evidence to match the pace of rapid change and innovation found, for example, in surgical procedures and medical device development. Promising advances include (1) practical clinical trials, in which trial design is based on information needed to make a clinical decision and conduct is embedded into healthcare delivery systems; and (2) the use of cohort study registries to explore heterogeneity of treatment effects due to setting, practitioner, and patient variation, and consequently to turn evidence into action.

Observational studies offer an alternative when trials are impractical or infeasible and also help to accelerate translation of evidence into practice and aid risk management and minimization efforts. The promotion of more transparent, consistent approaches to the assessment of evidence and increased emphasis on the quality of study design and conduct over the type of method used are trends toward research that fits evidence needs, as is the focus on new and improved research methods. Finally, Clancy emphasized that to ensure research impacts practice, attention also is needed to improve approaches to turning evidence into action, and recent efforts by AHRQ and others underscore the research community’s commitment to the creation of a system focused on the patient and improving health outcomes.

Cases in Point: Learning from Experience

The second chapter summarizes workshop discussions of case examples of high-profile issues (some linked to application of effective treatments or to premature adoption of unwarranted treatments) from which important lessons might be drawn about the design and interpretation of clinical effectiveness studies. The experiences recounted show that, from randomized trials to observational studies, each investigative approach has limitations. These limitations argue against relying on any single approach and suggest that the research community needs more experience with the array of methodologies used to generate insights into clinical effectiveness, as well as structured decision rules to guide the study design choice for particular research circumstances. Improvements can be made across the process, including careful consideration of the methods most appropriate to the question being asked; careful development and conduct of trials or studies to ensure they reflect the “state of the art”; clear communication of results; and exploration of new approaches, such as using a hybrid mix of research approaches.

Hormone Replacement Therapy

The first case was presented by JoAnn E. Manson from Harvard Medical School on the impact of hormone replacement therapy (HRT) on health. Both observational studies and clinical trials have contributed critically important information to elucidate the health effects of HRT with estrogen and progestin and to inform decision making, and they constitute a model suggesting that research findings should be considered in the context of the totality of available evidence and that studies should be designed to complement and extend existing data. Manson noted that observational studies and randomized clinical trials of menopausal HRT and coronary heart disease (CHD) have produced widely divergent results. Observational studies had suggested a 40–50 percent reduction in the risk of CHD among women taking HRT, whereas randomized trials suggested a neutral or even elevated risk of coronary events. Well-recognized limitations of observational studies, including the potential for confounding by lifestyle practices, socioeconomic status, education, and access to medical care, as well as selection factors related to “indications for use,” can explain only some of the discrepancies. Other methodologic factors that may help to explain the differences include the limitations of observational studies in assessing short-term or acute risks, which led to incomplete capture of early clinical events after therapy began, and the predominance of follow-up time among compliant long-term users of HRT. In contrast to the greater weighting of long-term use in observational studies, clinical trial results tend to reflect shorter term use. Given that CHD risks related to HRT are highest soon after initiation of therapy, these differences may contribute substantially to the discrepancies observed.

Methodologic differences between observational studies and clinical trials, however, may not fully elucidate the basis for the discrepancies observed. The findings of observational studies and clinical trials are remarkably concordant for other health outcomes, including stroke, venous thromboembolism (blood clot), breast cancer, colorectal cancer, and fracture—outcomes that also should be affected by confounding and selection biases. Indeed, an emerging body of research suggests that the age of menopause or time since menopause critically influences the relationship between HRT and CHD outcomes.

Importantly, observational studies should be designed to capture both short- and long-term risks and should have frequent updating of exposure variables of interest (electronic health and pharmacy records may be useful). Clinical trials must be powered adequately to assess clinically relevant subgroups and to address the possibility of a modulating effect of key clinical variables. Consideration of absolute risks in research presentation and interpretation is critically important. Finally, it may be helpful to incorporate intermediate and surrogate markers into study designs, although such markers can never fully replace clinical event ascertainment.

Drug-Eluting Coronary Stents

Research to date strongly suggests that further understanding of and solutions to the safety issues concerning drug-eluting stents (DES) will likely come from a mix of randomized trials and observational registries conducted in both the premarket and postmarket arenas and a collaborative effort among regulators, industry, and academia. As Ashley B. Boam from the FDA recounted, coronary drug-eluting stents have dramatically changed interventional cardiology practice since their introduction in the United States in 2003. These products—a combination of a metal stent and an antiproliferative drug—have significantly reduced the need for reintervention compared to the previous standard of care, bare metal stents. This substantial improvement has led to widespread adoption of these products and use in patients outside those enrolled in the initial pivotal clinical studies. The desire to bring additional DES technology to the market quickly drove research into the identification of potential surrogate markers for effectiveness. While at least two measures obtained from angiography (i.e., late loss and percentage diameter stenosis of vessel) have been identified as biomarkers with a strong correlation to clinical effectiveness—specifically, the need for a reintervention—no such marker has been identified as a possible surrogate for safety outcomes, such as death or myocardial infarction.

In the last half of 2006 and through 2007, however, the emergence of stent thrombosis (the occurrence of a clot within the stent that often leads to myocardial infarction or death) shifted the focus of DES research significantly from effectiveness to safety. Recent meta-analyses and research from centers in Europe and the United States have indicated that late stent thrombosis may be an ongoing risk to DES patients. Low event rates, less than 1 percent, and late-term occurrence (beyond 1 year postimplantation) have complicated efforts to understand the true incidence and etiology of this noteworthy complication. Confounding the picture is the lack of appropriate studies to optimize prescription of mandatory adjunctive dual antiplatelet therapy, early interruption of which is one known risk factor for stent thrombosis. The issue is challenging in part because what is under consideration is a low-frequency event with a late-term appearance, which generally mandates very large and long studies. Randomization, long-term follow-up, concurrent device iterations and new platforms, on-label versus broad clinical use considerations, and the role of resistance to acetylsalicylic acid and/or clopidogrel are among the other challenges.
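The link between low event rates and very large studies can be made concrete with a standard sample-size approximation for comparing two proportions. The sketch below is illustrative only: the function name and the event rates are hypothetical values chosen for the example, not figures from the workshop, and a real stent study would also need to account for length of follow-up, dropout, and interim analyses.

```python
import math
from statistics import NormalDist


def patients_per_arm(rate_a, rate_b, alpha=0.05, power=0.80):
    """Approximate patients needed per arm to distinguish rate_a from rate_b.

    Uses the usual normal approximation for a two-sided test comparing
    two independent proportions with equal allocation to each arm.
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = rate_a * (1 - rate_a) + rate_b * (1 - rate_b)
    return math.ceil((z_alpha + z_power) ** 2 * variance / (rate_a - rate_b) ** 2)


if __name__ == "__main__":
    # Hypothetical event rates: the same relative difference (a halving),
    # first for a common event and then for a rare one.
    for rate_a, rate_b in [(0.10, 0.05), (0.01, 0.005)]:
        print(rate_a, rate_b, patients_per_arm(rate_a, rate_b))
```

Under these assumptions, lowering both event rates tenfold while keeping the same relative difference raises the required enrollment by roughly an order of magnitude, which is why rare, late-appearing events push studies toward large registries and long follow-up.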

Bariatric Surgery

Population-based registries—appropriately funded and constructed with clinician engagement—offer a compromise of strengths and limitations and may be the most effective tool for evaluating emerging healthcare technology, argued David R. Flum of the University of Washington. The dichotomy between “effectiveness” and “efficacy” is particularly relevant in evaluating surgical interventions where characteristics of the surgeon (experience, training, and specialty), variations in technical performance, patient selection, practice environments, and publication bias all influence the understanding of healthcare interventions. Case series, often authored by experts in the field, dominate the surgical literature. Despite their limitations, they are strong influences on clinical and policy decisions.

Bariatric interventions include a group of operations that have become increasingly popular with the advent of less-invasive surgical approaches and epidemic obesity. Understanding the safety and efficacy of bariatric interventions has come almost exclusively through single-center case series. A research group based at the University of Washington has worked to expand knowledge in this field through the use of retrospective population-level cohorts using administrative data, clinical registries, and longitudinal prospective cohorts that work to assess effectiveness. These safety data have been helpful in coverage decisions by payers, in assessing quality improvement opportunities, and in providing more realistic assessments of these interventions. Inherent limitations in effectiveness research include tradeoffs between the number of patients and the level of detail on each patient, the granularity and accuracy of the data, and limits to the types of outcomes that can be evaluated (i.e., no quality-of-life or functional data).

Antipsychotic Therapeutics

As shown in recent research to ensure that antipsychotic medication use is clinically effective, advances in study designs, databases, and analytic methods provide a toolbox of complementary techniques from which researchers can draw, suggested Phillip S. Wang of the National Institute of Mental Health (NIMH). Antipsychotic medications are now widely used by patients and account for a large proportion of pharmaceutical spending, particularly in public healthcare programs. However, there is a paucity of evidence to help guide clinical, purchasing, and policy decisions regarding antipsychotic medications. The recently completed, NIMH-sponsored comparative effectiveness trials of antipsychotic medications in patients
with schizophrenia (the CATIE trial), for example, blend features of efficacy studies and large, simple trials to create a pragmatic trial that provides extensive information about antipsychotic drug effectiveness over a course of treatment of at least 18 months. Recent advances in databases, designs, and methods can also be brought to bear to improve antipsychotic effectiveness research. New study populations and databases have been developed, including practice-based networks that look at psychiatric care. Large administrative datasets are also available, such as Medicaid and health maintenance organization (HMO) databases, which are useful for studying primary care, the setting where most mental health care is actually received.

Other approaches can be employed when trial data are not available. Researchers can use clinical epidemiologic data and methods, which are often a useful addition to the literature. When trials, quasi-experimental studies, and even epidemiologic studies are not possible, researchers can use simulation methods. In addition, researchers have developed new means to deal with threats to validity, both threats to external validity, as in the development of effectiveness research, and threats to internal validity. These include new analytic methods such as propensity score adjustments and instrumental variable techniques.

Cancer Screening

Developing comparative effectiveness information about screening tests is a complex undertaking, as demonstrated by Peter B. Bach of Memorial Sloan-Kettering Cancer Center. The general rationale for screening in the context of clinical medicine or typical practice is that clinical disease usually “presents”—patients arrive with symptoms or signs that define a population that can be screened. Such circumstances actually make a strong argument for looking for preclinical conditions. That is what screening is intended to do—essentially scan an unaffected population to look for people who are at risk for developing some condition. The underlying principle of such an investigation is that theoretically we can decrease morbidity and mortality and other negative outcomes by looking for patients with preclinical conditions. Screening is widely encouraged, and one could argue that it is the dominant activity in much of primary care. Most medical journals, for example, regularly publish tables of screening evaluations, which are lists of questions that physicians should ask their patients to determine their risk for given diseases.

How these screenings affect a patient’s health needs to be understood better. A recent study found that screening for lung cancer with low-dose computed tomography may increase the rate of lung cancer diagnosis and treatment, but may not meaningfully reduce the risk of advanced lung cancer or death. Until more conclusive data are available, asymptomatic
individuals should not be screened outside of clinical research studies that have a reasonable likelihood of further clarifying the potential benefits and risks. There are similar examples in prostate cancer, in breast cancer with mammography, in renal cell cancer, and in melanoma. A paradoxical reality of the surrogate endpoints often used to evaluate effectiveness in these cases is that they are readily available, but can be misleading. Simply stated, screening can often pick up pseudodisease: conditions that are not significant yet trigger interventions, or conditions that cannot be cured but are too often quickly characterized as “early” or “curable.” Refuting the principle of “catch it early” is difficult, but there are many approaches to this goal, each with its own advantages and disadvantages.

Taking Advantage of New Tools and Techniques

Clinical effectiveness research can be improved and expedited through better use of existing methods and attention to emerging tools and techniques that enhance study design, conduct, and analysis. Chapter 3 presents discussion of some key opportunities for advancement in effectiveness research, including improved efficiency and result applicability of trials and studies; innovative statistical approaches to analyses of large databases; capture and use of the wealth of data generated in genomic research; and the promise of simulation and predictive modeling.

As each paper notes, the benefits of these tools and techniques have yet to be fully realized. To enhance clinical effectiveness research, attention is needed in part to developing a shared understanding of these various approaches and clarity on the insights each offers the research enterprise, both alone and in synergy with other approaches. Essential to this discussion will be careful consideration of circumstances and questions for which a particular approach is best suited.

Innovative Approaches to Trials

Clinical trials play an important role in assessing the effects of medical interventions, in particular where observational studies are often inadequate, such as the detection of modest treatment effects or when the risk of an invalid answer is substantial. Robert Califf from Duke University emphasizes the importance of focusing discussion about medical evidence on a serious examination of ways to improve the operational methods of both approaches and of building human systems that take advantage of the power of modern informatics to improve both RCTs and observational studies. In particular, the design and conduct of RCTs need to evolve to take further advantage of modern informatics and to provide a more flexible and practical tool for clinical effectiveness research. Improvements in the structure, strategy, conduct, analysis, and reporting of RCTs can help to address their perceived limitations related to cost, timeliness, and reduced generalizability.

Innovative approaches discussed include the conduct of trials within “constant registries” and targeting the standard set of rules for the conduct of trials to make them more adaptable and customized to meet research needs. A relevant model is the clinical practice database found at The Society of Thoracic Surgeons. This database has been used for quality reporting and increasingly to evaluate operative issues and technique. An extension of this model would be to develop constant disease registries capable of drawing on multiple EHR systems. The conduct of RCTs within the database would allow researchers to revolutionize the timeframe and costs of clinical trials. Trials also could take better advantage of “natural units of care” with cluster randomization, or provide information more relevant to practice by focusing on research questions based on gaps in clinical practice guidelines or by being conducted in real-world practice (e.g., pragmatic clinical trials). Research networks offer the opportunity to enable the needed sharing of protocols, data structures, and other information.

A promising initiative for improvements in the quality and efficiency of clinical trials is the FDA Critical Path public–private partnership: Clinical Trials Transformation Initiative (CTTI). A collaboration of the FDA, industry, academia, patient advocates, and nonacademic clinical researchers, CTTI is designed to enhance regulations that improve the quality of clinical trials, eliminate guidances and practices that increase costs but provide no value, and conduct empirical studies of the value of guidances and practices. Primary barriers to innovation include the lack of appropriately structured financial incentives and the caution toward change that comes with a highly regulated market. To contend with this substantial barrier to ensuring that innovative approaches are implemented in practice, the research community should adopt a model from business of establishing “envelopes of creativity,” or environments in which researchers could innovate with a certain creative freedom, and where they would have appropriate financial incentives.

Califf concludes that smarter trials that provide timely information on outcomes that matter most to patients and clinicians at an acceptable cost will become an integral part of practice in learning health systems as trials increasingly become embedded into the information systems that form the basis for clinical practice. These systems also will provide the foundation for integrating modern genomics and molecular medicine into the framework of care.

Innovative Analytic Tools for Large Clinical and Administrative Databases

Because healthcare databases record drug use and some health outcomes for increasingly large populations, they will be a useful data resource for timely, comparative analyses that reflect routine care. Confounding is one of the biggest issues facing effectiveness research in the analyses of these large claims databases. While recognizing that instrumental variable analyses have the drawback of relying on assumptions that cannot be fully tested, Sebastian Schneeweiss from Harvard Medical School proposes that their use can lead to substantial research improvements, particularly in situations with strong confounding and where it is likely that important confounders remain unmeasured in a data source. Several developments may bring the field closer to acceptable validity, including approaches that exploit the concept of proxy variables using high-dimensional propensity scores and approaches that exploit provider variation in prescribing preference using instrumental variable analysis.

Epidemiologists have a number of techniques that can control for confounding by measured factors, but instrumental variables are a promising approach to address unmeasured confounders because they are an unconfounded substitute for the actual treatment. In this approach, instead of modeling treatment and outcome, researchers model the instrument—which is unconfounded on the outcome—and then correct the estimate for the correlation between the instrumental variable and the actual treatment. In this respect valid results require the identification of a quasi-random treatment assignment in the real world, such as interruption in medical practice. Recent work has also demonstrated the potential of provider treatment preference as a random component in the treatment choice process, providing an additional instrument worth consideration for comparative drug effectiveness studies.
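The two-step logic in this paragraph (model the instrument, then correct for its association with the actual treatment) corresponds to what is commonly called the Wald estimator. The simulation below is a minimal sketch under stated assumptions, not the method used in the work summarized here: the data are synthetic, the binary instrument stands in for a hypothetical provider preference, the coefficients are arbitrary, and the treatment effect is assumed to be the same for every patient.

```python
import random


def simulate_patients(n, true_effect, seed=0):
    """Return (instrument, treatment, outcome) tuples from a synthetic cohort."""
    rng = random.Random(seed)
    rows = []
    for _ in range(n):
        z = 1 if rng.random() < 0.5 else 0   # instrument: hypothetical provider preference
        u = rng.gauss(0.0, 1.0)              # unmeasured confounder (e.g., frailty)
        # Treatment depends on the instrument AND on the unmeasured confounder.
        t = 1 if (1.0 * z + u + rng.gauss(0.0, 1.0)) > 0.5 else 0
        # Outcome depends on treatment and the confounder, but not directly on z.
        y = true_effect * t + 2.0 * u + rng.gauss(0.0, 1.0)
        rows.append((z, t, y))
    return rows


def mean(values):
    return sum(values) / len(values)


def naive_estimate(rows):
    """Treated-minus-untreated difference in mean outcome (confounded)."""
    return (mean([y for _, t, y in rows if t == 1])
            - mean([y for _, t, y in rows if t == 0]))


def wald_iv_estimate(rows):
    """Effect of the instrument on outcome, rescaled by its effect on treatment."""
    outcome_diff = (mean([y for z, _, y in rows if z == 1])
                    - mean([y for z, _, y in rows if z == 0]))
    treatment_diff = (mean([t for z, t, _ in rows if z == 1])
                      - mean([t for z, t, _ in rows if z == 0]))
    return outcome_diff / treatment_diff


if __name__ == "__main__":
    cohort = simulate_patients(200_000, true_effect=1.0)
    print("naive:", round(naive_estimate(cohort), 2))
    print("instrumental variable:", round(wald_iv_estimate(cohort), 2))
```

In this synthetic setting the naive comparison is pulled far from the true effect by the unmeasured confounder, while the instrument-based estimate stays close to it. That result depends entirely on the simulated instrument being unrelated to the confounder and affecting the outcome only through treatment, which are the assumptions that cannot be fully verified in real data.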

Instrumental variable analysis is an underused but very promising approach to comparative effectiveness research using nonrandomized data, and researchers should routinely explore whether an instrumental variable analysis is possible in a particular setting. Additional work is underway to develop better methods to assess its validity and to develop systematic screens for instrument candidates.

Adaptive and Bayesian Approaches to Study Design

Adaptive and, particularly, Bayesian approaches to study design offer opportunities to improve on randomization and to facilitate new ways of learning in health care. Donald A. Berry from the University of Texas M.D. Anderson Cancer Center contends that these approaches can be used to make RCTs more flexible by using data developed during a study to
guide its conduct and to incorporate different sources of information to strengthen findings related to comparative effectiveness. The historical, frequentist approach, in which a study must be completely designed in advance and must be complete before inferences can be made, impedes the ability to continually assess and alter a course of study based on accrued learning. In contrast, the Bayesian approach’s ability to calculate probabilities of future observations based on previous observations enables an “online learning” ideal for developing adaptive study designs. Prospective building of adaptive study designs is critical and, except in the simplest situations, requires simulation.
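As a concrete illustration of calculating probabilities of future observations from previous ones, the sketch below uses the beta-binomial model for a yes/no patient response. It is a textbook example rather than anything presented at the workshop: the function names, the uniform Beta(1, 1) prior, and the counts in the demonstration are all assumptions made for illustration.

```python
import math


def log_beta(a, b):
    """Logarithm of the beta function B(a, b)."""
    return math.lgamma(a) + math.lgamma(b) - math.lgamma(a + b)


def predictive_pmf(k, m, a_post, b_post):
    """P(k responders among m future patients | posterior Beta(a_post, b_post))."""
    return math.comb(m, k) * math.exp(
        log_beta(a_post + k, b_post + m - k) - log_beta(a_post, b_post)
    )


def predictive_prob_at_least(min_future, m, responders, n, prior_a=1.0, prior_b=1.0):
    """P(at least min_future responders among the next m patients),
    given `responders` out of `n` patients observed so far."""
    a_post = prior_a + responders
    b_post = prior_b + (n - responders)
    return sum(predictive_pmf(k, m, a_post, b_post)
               for k in range(max(min_future, 0), m + 1))


if __name__ == "__main__":
    # Hypothetical interim data: 7 responders among the first 10 patients.
    # How likely are at least 12 responders among the next 20?
    print(predictive_prob_at_least(12, 20, responders=7, n=10))
```

A predictive probability of this kind could be recomputed after every patient or block of patients, which is the sense in which the calculation supports learning while a study is still running.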

Adaptive designs increase the flexibility of RCTs by enabling modifications—based on interim or other study results—including stopping the study early, changing eligibility criteria, expanding or extending accrual, dropping or adding study arms or doses, switching between clinical phases, or shifting focus to subsets of patient populations. These adaptations not only enable rapid learning about relative therapeutic benefits but also improve the overall efficiency of research. Flexibility with respect to patient accrual, for example, may enable a needed increase in study sample size, potentially minimizing the need for additional follow-on studies.
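Because the behavior of an adaptive design is usually assessed by simulation before the study starts, a small example may help. The sketch below simulates only one of the adaptations listed above, stopping early, for a two-arm trial with a yes/no outcome. Every design parameter in it (looks after each block of 50 patients per arm, a maximum of 200 per arm, a stopping threshold of 0.99, uniform priors, and the response rates in the demonstration) is a hypothetical choice for illustration, not a recommendation.

```python
import random


def prob_treatment_better(s_t, n_t, s_c, n_c, rng, draws=400):
    """Monte Carlo estimate of P(treatment rate > control rate | data),
    using independent uniform Beta(1, 1) priors on each response rate."""
    wins = 0
    for _ in range(draws):
        p_t = rng.betavariate(1 + s_t, 1 + n_t - s_t)
        p_c = rng.betavariate(1 + s_c, 1 + n_c - s_c)
        wins += int(p_t > p_c)
    return wins / draws


def run_adaptive_trial(p_treatment, p_control, rng,
                       block=50, max_per_arm=200, stop_threshold=0.99):
    """Enroll in blocks; stop as soon as the posterior probability that
    treatment is better reaches stop_threshold.

    Returns (declared_superior, patients_enrolled_per_arm).
    """
    s_t = s_c = n = 0
    while n < max_per_arm:
        for _ in range(block):
            s_t += int(rng.random() < p_treatment)
            s_c += int(rng.random() < p_control)
        n += block
        if prob_treatment_better(s_t, n, s_c, n, rng) >= stop_threshold:
            return True, n
    return False, n


def operating_characteristics(p_treatment, p_control, n_trials=100, seed=1):
    """Simulate many trials; return (share declared superior, mean size per arm)."""
    rng = random.Random(seed)
    results = [run_adaptive_trial(p_treatment, p_control, rng)
               for _ in range(n_trials)]
    share_superior = sum(1 for superior, _ in results if superior) / n_trials
    mean_size = sum(size for _, size in results) / n_trials
    return share_superior, mean_size


if __name__ == "__main__":
    print("large true benefit:", operating_characteristics(0.7, 0.3))
    print("no true benefit:   ", operating_characteristics(0.5, 0.5))
```

Comparing the two scenarios shows the trade-off a designer would examine: how often the rule stops early when there is a real benefit, how often it declares superiority when there is none, and how many patients are enrolled on average in each case.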

Inherently a synthetic approach, Bayesian analysis can also enhance our capacity to appropriately aggregate information from multifarious sources. For instance, in addition to use in meta-analyses, this approach has been used recently to help answer complex questions, such as the proportional attribution of a drop in breast cancer mortality in the United States to mammographic screening and to adjuvant treatment with tamoxifen and chemotherapy. The results of the Bayesian models used to explore this question were consistent with non-Bayesian models as well as those derived in clinical trials.

In conclusion, Berry notes that although the rigor and inflexibility of the current research paradigm have been important to establishing medicine as a science, new approaches such as Bayesian thinking and methodologies can help to move the field even further by making research more nimble and applicable to patient care, while maintaining scientific rigor.

Simulation and Predictive Modeling

Certain research questions or evidence gaps will be difficult or impractical to answer using clinical trial methods. Although physiology-based or mechanistic models have been used only recently in medicine, as noted by Mark S. Roberts from the University of Pittsburgh and representing Archimedes, Inc., physiology-based models, such as the Archimedes model, have the potential to address these gaps by extending results beyond the narrow spectrum of disease or practice found in most trials. These models can also reduce the cost and time required to complete RCTs.

Physiology-based models aim to replicate disease processes at a biological level, from individual variables to system-level, whole-organ relationships. The behavior of these elements and their effect on health outcomes are modeled using equations derived from and calibrated with data from empirical sources. When properly constructed and independently validated, these models not only can serve as useful tools to identify, set priorities in, or facilitate the design of new trials, but also can be engaged to conduct virtual comparative effectiveness trials. When time, cost, or other factors make doing a trial impossible, an independently validated, physiology-based model provides a useful alternative.

Emerging Genetic Information

At the forefront of discovery research, genomewide association studies permit examination of inherited genetic variability at an unprecedented level of resolution. As described by Teri Manolio of the National Human Genome Research Institute, given 500,000 or even a million SNPs (single-nucleotide polymorphisms, or single-base differences among individuals within a species) scattered across the genome, researchers can capture as many as 95 percent of variations in the population. This capacity enables “agnostic” genomewide evaluations, whereby a researcher does not need to preformulate hypotheses or to limit examination to specific candidate genes, but rather can scan the entire genome. Following the availability of high-density genotyping platforms, the pace of genomic discovery has accelerated dramatically for an increasingly broad array of traits and diseases. However, genomewide association studies do have drawbacks. Given the large number of comparisons per study, there is an unprecedented potential for false-positive results. Validation of findings through replication of results generally requires expanding studies from a small initial set of individuals to as many as 50,000 participants.
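The scale of the false-positive problem follows from simple arithmetic. If each of 500,000 SNPs were tested at the conventional 0.05 significance level and none were truly associated with the trait, about 25,000 would be expected to appear significant by chance. The sketch below makes that calculation explicit and shows the Bonferroni correction as one common, conservative way to set a per-test threshold. The source does not say which correction any particular study used, and the function names are illustrative.

```python
def expected_false_positives(num_tests, alpha):
    """Expected count of chance 'hits' if no tested SNP has a true association."""
    return num_tests * alpha


def bonferroni_threshold(num_tests, family_alpha=0.05):
    """Per-test p-value cutoff that holds the chance of any false positive
    across all tests at or below family_alpha."""
    return family_alpha / num_tests


if __name__ == "__main__":
    for num_snps in (500_000, 1_000_000):
        print(num_snps,
              expected_false_positives(num_snps, 0.05),
              bonferroni_threshold(num_snps))
```

Thresholds this strict are one reason replication in much larger samples is generally needed: a true but modest association will rarely clear such a cutoff in a small initial study.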

Two prototypes for applying genomic information from genomewide association studies to clinical effectiveness research are genetic variants related to two traits: Type 2 diabetes risk and warfarin dosing. Though both have sufficient scientific foundations and clinical availability, they remain many steps away from clinical application. Gaining more from these types of insights from genomic research will require additional epidemiologic and genomic information and evidence of impact on outcomes of importance. This evidence can be derived by linking genotypic data to phenotypic characteristics in clinical or research databases, an approach being explored by a number of biorepositories. The National Human Genome Research Institute’s eMERGE network is applying genotyping to subsets of participants in a number of biorepositories with electronic health records. If the phenotypic measures derived from the EHRs are standardized to increase reliability, these types of linked databases hold significant promise for clinical effectiveness research.

Capacity for research, including the testing and interpretation of results, will require significant laboratory infrastructure, including a valid, readily available, FDA-certified, affordable test, conducted under the auspices of a CLIA (Clinical Laboratory Improvement Amendments)-certified laboratory. Robust electronic health records will also be critical to receive data and provide real-time performance feedback so that patients who receive abnormal results can be given suggestions for how to process that information and proceed from that point. In addition, tools for identifying emerging genomic information with potential clinical applications are needed. In this respect, a useful model is the database of Genotype and Phenotype (dbGaP), an accessible but secure large-scale database that receives, archives, and distributes results of studies of genotype–phenotype associations.

Also of vital importance is infrastructure related to policy and educational needs. For example, there is a pressing need to ensure confidentiality and privacy protection, specifically because discrimination by employers and insurers might occur if they have access to genomic information. To that end, the recently approved Genetic Information Nondiscrimination Act will be helpful. The research community also needs consensus on what should be reported to patients when abnormalities appear (what to tell them, when to tell them, and how to tell them) and adequate consent policies and procedures as well as consistent Institutional Review Board approaches. A flexible approach to genetic counseling is also needed, including the ability for patients to obtain adequate counseling from someone other than a certified genetic counselor. Also needed is a better educational infrastructure to ensure that these issues are discussed both by physicians and by patients, even during the course of ongoing genomic research; this would include better reporting guidelines for both patients and physicians. Education is needed in medical schools and nursing schools, at professional conferences, and in ongoing professional development and training to ensure that caregivers are “genomically literate.” At the same time, we have a responsibility to also educate the general population, so that patients can develop a deeper understanding of genomics. By learning how genomics affects their lives and their health care, patients will know what questions to ask.

Organizing and Improving Data Utility

Vastly larger, electronically accessible health records and administrative and clinical databases currently under development offer previously unimagined resources for researchers and have significant and yet untapped potential to inform clinical effectiveness research. Mining data to expand the base of relevant knowledge and to determine what works for individual patients will use some of the techniques identified in Chapter 3 but will also require tools for organizing and improving these data collections. Chapter 4 provides an overview of the potential for data sources to improve effectiveness research, and identifies opportunities to better define rules of engagement and ensure these emerging data sources are appropriately harnessed. EHR and point-of-care data, enhanced administrative datasets, clinical registries, and distributed data networks are discussed. Collectively these papers illustrate how these approaches can be applied to improve the efficiency and quality of clinical practice; provide meaningful complementary data to existing research findings; accelerate the capture and dissemination of learnings from innovation in practice; and offer a means to process complex information, derived from multiple sources and formats, and develop information that supports clinical practice and informs the research enterprise.

The Electronic Health Record and Care Reengineering: Performance Improvement Redefined

Ronald A. Paulus reported on Geisinger’s use of EHRs to transform care delivery, in support of his contention that the nexus of point-of-care systems and research holds more potential than is currently exploited. When systematically captured, the data produced by these systems can be used to inform and improve clinical practice. Demonstrating the potential of EHRs for impact beyond practice standardization and decision support mechanisms, Geisinger has used these resources in the production of “delivery-based evidence.” EHRs capture data directly relevant to real-world practice and can therefore provide extensive, longitudinal data. When coupled with an integrated data warehouse, they create a unique data resource that can be mined for both clinical and economic insights. These data can also facilitate observational studies that address issues of clinical relevance to complement and fill gaps in RCT data. In developing such models, more thought needs to be given to aggregating, transforming, and normalizing data in order to conduct productive analysis that will bridge the knowledge creation gap.

To illustrate the power inherent in linking data to clinical care, Paulus reviewed Geisinger’s work to enhance performance improvement (PI) initiatives. EHRs and associated data aggregation and analysis to complement PI initiatives are increasingly being adopted in the healthcare setting. At Geisinger, PI has evolved into a continuous process involving data generation, performance measurement, and analysis to transform clinical practice.

Underlying this transformation is an EHR platform fully used across the system. Geisinger’s integrated database, including EHR, financial, operational, claims, and patient satisfaction data, serves as the foundation of a Clinical Decision Intelligence System (CDIS) and is used to inform and document the results of PI efforts. PI Architecture draws upon CDIS and other inputs (e.g., evidence-based guidelines, third-party benchmarks) and leverages this information via decision support applications to help the organization answer important questions that could not be addressed before.

Key goals supported by PI Architecture include (1) assessment of PI initiatives’ returns on investment (ROIs); (2) simultaneous focus on quality and efficiency; (3) development and refinement of reusable components and modules to support future PI efforts; and (4) elimination of any unnecessary steps in care, automation of processes when safe and effective to do so, delegation of care to the least-cost competent caregiver, and activation of the patient as a participant in her own self-care. The PI Architecture enhances and refines the traditional Plan-Do-Study-Act (PDSA) cycle and yields advantages of reduced cycle time, increased relevance, increased sustainability, increased focus on ROI, and enhanced research capabilities. Key features include (1) use of local data to document the current state of practice and to direct focus on areas of greatest potential improvement; (2) use of electronic record review and simulation to confirm hypotheses and to project the benefits of varying avenues and degrees of change; (3) testing on a small scale, using an iterative approach that builds toward a strategy of rapid escalation; and (4) leveraging of reusable parts from past initiatives to build core infrastructure and accelerate future work.

Administrative Databases in Clinical Effectiveness Research

As described by Alexander M. Walker of Worldwide Health Information Science Consultants and the Harvard School of Public Health, data from health insurance claims form the backbone of many health analytic programs. Although administrative databases are being used more effectively for research, their development and especially their application for generating insights into clinical effectiveness require careful consideration and attention to potential methodologic pitfalls and hazards. Nonetheless, there is extraordinary promise in these resources.

Insurance claims data, derived from government payers or independent health insurers, are comprehensive, population based, and well structured for longitudinal analysis. All services and therapeutics for which there is a payment enter the data system, with easy linkage across providers. Because the populations covered are regional or employment based, the data capture medicine as actually provided, not just the care that reflects best practice. The need for multiple providers to interact with multiple insurers or with a government mandate has led to highly standardized data structures supported by regular audit. Finally, these data are available for large numbers of individuals; the largest database with complete information is estimated to include data on 20 million patients.

Although claims data are excellent resources for answering many questions in health services research, they are not always sufficient for clinical research. For example, although labs ordered are recorded, the outcomes or results are not. To address this limitation, research groups have begun to augment their core files—adding laboratory and consumer data, creating the infrastructure for medical record review, implementing methods for automated and quasi-automated examination of masses of data, developing “rapid-cycle” analyses to circumvent the delays of claims processing and adjudication, and opening initiatives to collaborations that respect patients’ and institutions’ legitimate needs for privacy and confidentiality. These enhanced databases provide the information that allows researchers to trace back to specific patients or providers for additional information.

Enhanced claims databases that have been used to support surveillance programs along with automated and quasi-automated database review provide potential decision support tools for clinical safety and efficacy. Basic issues of confounding remain, however, and much attention is needed on these emerging tools to capture the full potential of these databases.

Clinical Effectiveness Research: The Promise of Registries

The dynamic and highly innovative character of healthcare technologies has been important to improvements in health; however, because intervention capacities often evolve due to iterative improvements or expanded use in practice, assessing their effectiveness presents a substantial challenge to researchers and policy makers. Because clinical registries capture information important to understanding the use of diagnostic and therapeutic interventions throughout their lifecycle, they are particularly valuable resources for assessing real-world health and economic outcomes. Alan J. Moskowitz and Annetine Gelijns from Columbia University suggest that in addition to providing information important to guiding decision making for patient care and setting policy, registries are valuable for assessing the performance of physicians and institutions, such as through the use of risk-adjusted volume–outcome relationship studies, and for increasing the efficiency of RCTs.

Several examples of findings derived from registry data on left ventricular assist devices (LVADs) were presented by Moskowitz to illustrate the potential of registries to improve effectiveness research. He pointed out that in contrast to efficacy trials or administrative databases, registries are able to keep pace with the dynamic process of medical innovation. The premarket setting has limits on what can be learned because of the nature of efficacy trials, which are usually short and conducted in narrow populations and under ideal conditions. Once interventions are introduced into general practice, they are used in broader patient populations and under different practice circumstances. For example, only 4 percent of patients treated with coronary artery bypass grafts in practice meet the eligibility criteria of the initial trials (the elderly, females, and those with co-morbidities were excluded). Expanded use of interventions in clinical practice creates a locus for learning and innovation, with the frequent discovery of new and unexpected indications for use, as well as the accrual of knowledge on the appropriate integration of technology into the care of particular patients. Characterized as an “organized system using observational study methods to collect uniform data to evaluate specified outcomes for a population defined by a particular disease, condition, or exposure, and that serves a predetermined scientific, clinical, or policy purpose,” clinical registries collect data important to learning and innovation. Examples include long-term outcomes and rare adverse events and outcomes achieved when technology is used by a broadened set of providers or patients. Clinical registries also provide comparative effectiveness information.

Registries offer a powerful means to capture innovation and downstream learning that take place in practice and to develop information complementary and supplementary to that produced by randomized trials. Enhancing the value of registries for clinical research requires improving the quality of data obtained, while decreasing costs and other barriers to data access. Special attention needs to be paid to the definition and standardization of target populations and outcomes (e.g., adverse events); efforts to address bias; measures to ensure representative capture of the population; and sound analytical approaches. Incorporating data collected in the usual course of patient care may help to reduce the burden and cost of registries.

Opportunities to address the traditional weaknesses of registries are presented by advances in informatics, analytical techniques, and new models of financing. The potential of registries to improve the efficiency of randomized trials also must be addressed. The development of investment incentives for stakeholders is important to improving the viability of clinical registries. Although registries have been created by public, not-for-profit, or private organizations, public–private partnerships offer a new model for registry support.

Distributed Data Networks

The variety of information created by healthcare delivery has the potential to provide insights to improve care and support clinical research. Increasingly, these data are held by many organizations in different forms. Although the aggregation of disparate databases into a super dataset may seem desirable for the improved study power and strength of findings provided by larger numbers, such efforts also face significant challenges due to privacy concerns and the proprietary nature of some data. Richard Platt, from Harvard University, illustrated how distributed research models circumvent these issues and minimize security risks by allowing the data repositories of multiple parties to remain separately owned and controlled. These models also provide an interface to these stores of highly useful data that allows them to function as a large combined dataset. This approach also takes advantage of local expertise needed to interpret content.