5

Moving to the Next Generation of Studies

INTRODUCTION

Scientific information today is expanding much faster than our ability to effectively translate and process knowledge in ways that improve patient care. To expedite the development of information, and to address both existing gaps in the evidence base and newly emerging research challenges, innovation is needed in how we use existing research tools, strategies, and study design methodologies to produce reliable knowledge. Furthermore, new approaches are needed, with special attention to using new tools, techniques, and data resources. Workshop participants discuss the potential of a next generation of studies that complement and possibly supplant those already employed in clinical effectiveness research. In that regard, decisive efforts are needed to support the development of new approaches and to nurture their inclusion in research. Papers included in this chapter examine opportunities to take better advantage of emerging resources to plan, develop, and sequence studies that are more timely, relevant, efficient, and generalizable. Also considered are approaches that better account for lifecycle variation of the conditions and interventions in play. Current opportunities and needed advancements also are discussed.

A variety of innovations are presented as important components of a redesigned research paradigm as well as immediate opportunities to build toward a next generation of studies. These innovations include new approaches to observational and hybrid studies; tools for collecting and using information captured at the point of care, including those relevant to genetic variation; cooperative research networks; and possible incentives.

Presenting a vision for new inferential and statistical tools, Sharon-Lise T. Normand from Harvard Medical School discusses opportunities to increase the efficiency with which information is produced through improved use of large data streams from a variety of sources, including clinical registries, billing databases, electronic health records, preclinical research, and trials. New methods are needed to develop and implement data-pooling algorithms and inferential tools. In addition, study designs not used to their full potential, including hybrid designs, preference-based designs, and quasi-experimental designs, are well suited to exploit features of the new information sources.

Findings of observational studies are intrinsically more prone to uncertainty than those from randomized trials. However, Wayne A. Ray from Vanderbilt University contends that this methodology has great value because it can address the dilemma posed by the logistical difficulties and slow pace of randomized controlled trials (RCTs). Perhaps more importantly, observational studies also enable research on many important clinical questions that RCTs are not appropriate to answer. To exploit the wealth of data becoming available, researchers will need to become more familiar with, and adhere to, the fundamental clinical and epidemiological principles that define state-of-the-art use of observational data.

Giving clinicians information on how, for whom, and in what settings specific treatments are best used is essential to improving clinical care. John Rush from the University of Texas Southwestern Medical Center proposes that researchers widen the range of study designs they employ. Rush illustrates how certain clinically important questions can be addressed in three ways: with observational data obtained when systematic practices are employed; with new study designs (e.g., hybrid studies and equipoise stratified randomized designs); or with post hoc analyses. Additional challenges will be to identify key questions and to develop the infrastructure needed to conduct the studies.

Echoing Rush’s call for a reengineered practice system to better facilitate research, Isaac Kohane from Harvard Medical School discusses opportunities to instrument the health delivery system for research. While speaking specifically to the potential of high-throughput genotyping, phenotyping, and sample acquisition to accelerate genomic research, Kohane emphasizes the additional benefit to quality and performance improvement efforts. Needed for progress are increased investments in information technology (IT), increased transparency in regulation and patient autonomy, continued development of an informatics-savvy healthcare research workforce, and creation of a safe harbor for methodological experimentation.

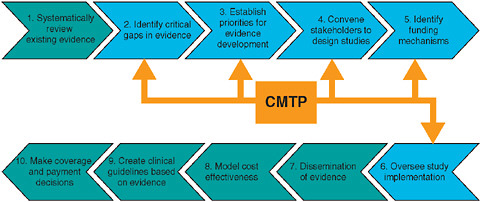

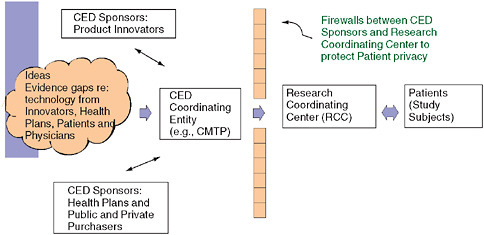

Citing the experience of the Center for Medical Technology Policy (CMTP) in attempting to facilitate private-sector coverage with evidence development, the CMTP’s Wade M. Aubry argues that “coverage with evidence development” should complement, not compete with, traditional research enterprises. Aubry proposes that, in order to draw from and expand on the experience of existing models, researchers must formalize ground rules for workgroups. They must also separate the steps of evidence gap identification, prioritization, and selection for study design and funding. He discusses coverage with evidence development and outlines concepts for phased introduction and payment for interventions under protocol.

Eric B. Larson from the Group Health Cooperative concludes the chapter by suggesting that emerging research networks offer opportunities to contribute to a learning healthcare system in ways that produce relevant, generalizable results. Examples include those being developed through programs funded by the National Institutes of Health (NIH) under the Clinical and Translational Science Awards.

LARGE DATA STREAMS AND THE POWER OF NUMBERS

Sharon-Lise T. Normand, Ph.D.

Harvard Medical School & Harvard School of Public Health

Abstract

This paper describes the rationale for integrating information from multiple and diverse data sources in order to efficiently produce information. Key statistical challenges involved in integrating and interpreting information are described. The fundamental issue underpinning the use of large data streams is the poolability of the data sources. New statistical tools are required to integrate the multiple and diverse data streams in order to produce valid scientific findings.

Introduction and Background

We are witnessing rapid growth in the quantity, the type, and the quality of health data that are collected. These data derive from many different information sources:

- preclinical data obtained from the bench;
- clinical trial data;
- registries maintained by professional societies such as the American College of Cardiology;
- electronic health record data;
- administrative billing data, such as those maintained by the Centers for Medicare & Medicaid Services;
- hospital discharge billing data maintained by state departments of public health;
- population-based survey data, such as the Medical Expenditure Panel Survey maintained by the Agency for Healthcare Research and Quality (AHRQ).

We also are collecting more information than ever before about outcomes in both the clinical trial and observational settings. This increasingly frequent strategy has been adopted for several reasons: a single outcome may not adequately characterize a complex disease; there may be a lack of consensus on the most important outcome; or there may be a desire to demonstrate clinical effectiveness on multiple outcomes. The proliferation of these databases has created an unprecedented demand to combine and use diverse data streams.

What circumstances have led to the proliferation of databases? First, technology and innovation are evolving rapidly, producing a plethora of new medical devices, biologics, drugs, and combination products. Scientists have made medical devices smaller, smarter, and more convenient for patients. For example:

- miniaturization techniques have allowed pacemakers to weigh less than one ounce and to be the size of a quarter;
- biological medical devices, such as microarray-based diagnostic tests that detect genetic variation in order to select medications and their doses, are promoting personalized medicine;
- combination products, such as antimicrobial catheters and drug-eluting stents, have changed the way diseases are diagnosed and treated.

Moreover, in the fast-paced device environment, technologies quickly become outdated as designs are rapidly improved. Consequently, by the time a device reaches the market, next-generation devices are already under development and under study.

Second, information technology has revolutionized medicine. The design, development, and implementation of computer-based information systems have permitted major advances in our understanding of the consequences of medical treatments through access to large data streams. Similarly, excitement in bioinformatics over the discovery of new biological insights has led to the development of tools to access, use, and manage these computer-based information systems. New initiatives have been established in the fields of proteomics, genomics, and glycomics to develop technologies and resources that improve the handling of larger and more diverse datasets and assist their interpretation.

Third, rising healthcare costs have prompted stakeholders to assess the value of health care through measurement. Using administrative billing data, early research funded by the AHRQ documented substantial variations in the use of medical therapies. These variations appeared across geographic units such as states, as well as across patient subgroups defined by race/ethnicity and sex. Because patient outcomes did not show corresponding geographic variation, researchers began enhancing administrative data with clinical data to assess the quality of medical care. The number and type of quality measures reported on healthcare providers, such as hospitals, nursing homes, physicians, and health plans, have grown substantially over the past decade (Byar, 1980). A second, related line of research prompted by rising healthcare costs concerns the comparative effectiveness of therapeutic options. Information from comparative randomized trials, systematic reviews of randomized trials, decision analyses, or large registries is used to assess quantitatively the effectiveness of competing technologies.

The availability of many large and diverse data sources presents an opportunity and a challenge to the scientific community. Under the current paradigm of assessing evidence, we continue to waste information by adhering to historical analytical and inferential procedures. Data sources relating to the same topic are treated as silos of information rather than as well-integrated information when assessing new technologies; information contained in multiple outcomes and multiple patient subgroups is ignored; and treatment heterogeneity in randomized trials is overlooked. The scientific community is not producing information efficiently. New tools, beyond those that expedite the mechanics of searching and accessing information, are required.

Using Diverse Data Streams

A fundamental problem of using diverse data sources is that of poolability. Combining data from multiple data sources is not new. At a practical level, for example, zip code-level sociodemographic information from census data is often merged with patient-level information in administrative claims data to supplement covariate information. Estimates of treatment effects from diverse studies are commonly combined in meta-analyses in order to learn about adverse events. The next generation of studies needs to combine data sources for other reasons, however:

- to enhance results when the data source on which the information is based differs from the population of interest;
- to bridge results when transitioning from one definition to another (e.g., changing from single to multiple race and ethnicity reporting);
- to enhance small area estimation (see Schenker and Raghunathan, 2007, for a summary of methods for combining survey data).

Meta-analysis methods have also recently emerged for assessing the relative effectiveness of two treatments that have not been directly compared in randomized trials but have each been compared to other treatments (Lumley, 2002).
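The simplest form of such an indirect comparison is the adjusted indirect comparison often attributed to Bucher and colleagues. The network meta-analysis methods cited above generalize this idea. Below is a minimal Python sketch, assuming each set of trials reports a log odds ratio and standard error against a common comparator C. The function name and all numbers are hypothetical illustrations, not results from any study.

```python
import math

def indirect_comparison(log_or_ac, se_ac, log_or_bc, se_bc):
    """Adjusted indirect comparison of A vs B through a common comparator C.

    Inputs are log odds ratios (and standard errors) from independent trials
    of A vs C and of B vs C. Returns the log OR for A vs B and its SE.
    """
    log_or_ab = log_or_ac - log_or_bc
    # Variances add because the two sets of trials are assumed independent.
    se_ab = math.sqrt(se_ac ** 2 + se_bc ** 2)
    return log_or_ab, se_ab

# Hypothetical inputs on the log-odds-ratio scale.
est, se = indirect_comparison(math.log(0.70), 0.10, math.log(0.85), 0.12)
lower, upper = est - 1.96 * se, est + 1.96 * se
print(f"OR(A vs B) = {math.exp(est):.2f} "
      f"(95% CI {math.exp(lower):.2f} to {math.exp(upper):.2f})")
```

Note that the indirect estimate is less precise than either input, because the two variances add. The comparison is only valid if the A-vs-C and B-vs-C trials enrolled sufficiently similar populations, which is the poolability question again.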

When is it sensible to combine data sources? This is not a new statistical problem, but it arises increasingly often and in increasingly complex forms. A familiar example is meta-analysis, in which the data sources are estimates obtained from multiple studies. In a typical meta-analysis, researchers consider whether the study populations are adequately similar, whether the treatments are defined similarly, and whether the clinical outcomes are similar. These decisions are subjective.

Once the decision is made to combine data, how should the information be pooled? Even if the patient-level data from each study were available, it would not be sensible to treat the observations from each patient across all of the studies as completely exchangeable. Exchangeability implies that we have no systematic reason to differentiate between the outcomes of patients participating in different studies. Numerous other methodological issues must be considered, but they are beyond the scope of this paper. These include:

- whether data are missing, and the reason for missingness;
- the quality of the data;
- the completeness of follow-up;
- the type of measurement error.

Looking forward, however, pooling more data sources appropriately should yield more information.
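At the study level, there is an alternative to assuming that every study estimates one common effect (complete pooling). A random-effects model lets the true effect vary between studies and estimates that variation from the data. Below is a minimal sketch of the DerSimonian-Laird moment estimator, one standard version of this idea, which the author does not specifically propose. The estimates and standard errors are hypothetical.

```python
import math

def dersimonian_laird(estimates, std_errors):
    """Random-effects pooling with the DerSimonian-Laird moment estimator.

    Returns (pooled estimate, its standard error, tau^2), where tau^2 is the
    estimated between-study variance of the true effects.
    """
    k = len(estimates)
    w = [1.0 / se ** 2 for se in std_errors]              # fixed-effect weights
    fixed = sum(wi * yi for wi, yi in zip(w, estimates)) / sum(w)
    # Cochran's Q: how much the studies disagree beyond sampling error.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, estimates))
    c = sum(w) - sum(wi ** 2 for wi in w) / sum(w)
    tau2 = max(0.0, (q - (k - 1)) / c)                     # truncated at zero
    # Between-study variance is added to each study's own variance.
    w_star = [1.0 / (se ** 2 + tau2) for se in std_errors]
    pooled = sum(wi * yi for wi, yi in zip(w_star, estimates)) / sum(w_star)
    return pooled, math.sqrt(1.0 / sum(w_star)), tau2

# Hypothetical log relative risks and standard errors from four studies.
log_rr = [-0.30, -0.10, -0.45, 0.05]
ses = [0.12, 0.15, 0.20, 0.10]
pooled, pooled_se, tau2 = dersimonian_laird(log_rr, ses)
print(f"pooled log RR = {pooled:.3f} (SE {pooled_se:.3f}), tau^2 = {tau2:.4f}")
```

When the studies disagree more than chance allows, tau² is positive and the pooled standard error widens. The data then show how much borrowing across studies is justified.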

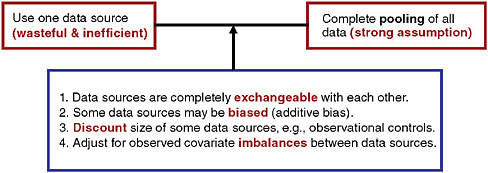

Using Observational Data to Enhance Clinical Trial Data

The use of observational data to supplement a randomized trial is not a new idea, and a large literature describes its advantages and disadvantages. There has been much discussion, for example, of the use of historical controls in clinical trials (Byar, 1980). Figure 5-1 presents the data sources as a continuum:

- At one extreme, we could ignore concurrent observational data, but that would clearly be wasteful and inefficient. When collecting data from participants in a clinical trial, obtaining parallel information from non-trial participants at study sites will enhance inferences.
- At the other extreme, we could use all available data and treat information from the observational subjects on an equal footing (that is, as exchangeable) with information from the clinical trial participants. This strategy involves a heroic assumption that will typically be unmet in practice.

Between these extremes, many options are available but rarely used. Neaton and colleagues summarize strategies for pooling information in the context of designs for circulatory system devices (Neaton et al., 2007).

The Mass COMM trial1 is a randomized trial comparing percutaneous coronary intervention (PCI) between Massachusetts hospitals with cardiac surgery-on-site (SOS) and community hospitals without cardiac surgery-on-site. The primary objective of the trial is to compare the acute safety and long-term outcomes between sites with and without cardiac SOS for patients with ischemic heart disease treated by elective PCI. The trial involves a 3:1 (sites without SOS: sites with SOS) randomization scheme that permits community hospitals to keep their volume given the substantial infrastructure investment they have made and the knowledge that volume is important. The recruitment strategy for the randomized study involves only patients presenting to community hospitals2 (it would be very difficult to randomize patients arriving at tertiary hospitals to community hospitals).

1 A randomized trial to compare percutaneous coronary intervention between Massachusetts hospitals with cardiac surgery-on-site and community hospitals without cardiac surgery-on-site (see http://www.mass.gov/Eeohhs2/docs/dph/quality/hcq_circular_letters/hospital_mdph_protocol.pdf).

2 Massachusetts law permits elective angioplasty only at hospitals with cardiac surgery-on-site.

FIGURE 5-1 Options for pooling data in the context of a randomized trial.

SOURCE: Spiegelhalter, D. J., K. R. Abrams, J. P. Myles. 2004. Bayesian approaches to clinical trials and health-care evaluation. West Sussex, England: John Wiley & Sons, Ltd. Reproduced with permission of John Wiley & Sons, Ltd.

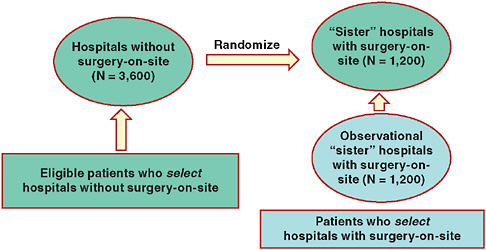

To bolster inferences and increase efficiency, the Mass COMM investigators adopted a hybrid design that borrows information from patients presenting at tertiary hospitals (concurrent observational controls). Figure 5-2 diagrams the hybrid design of this study, a randomized controlled trial using observational data.

How will the data sources (the randomized subjects and the observational subjects) be pooled? From a practical standpoint, it is not sensible to assume complete exchangeability between two groups: the observational patients arriving at tertiary hospitals, and the patients randomized from community to tertiary hospitals. One strategy is to assume that the outcomes of the observational controls differ by some amount (“additive bias”) from those of the patients randomized to the tertiary hospitals. The Mass COMM investigators assumed that the observational controls either over- or under-estimate the trial end-point by a factor of two. This decision was made before any patients were enrolled.
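One common way to formalize an additive-bias assumption, in the spirit of the Bayesian approaches cited for Figure 5-1, uses a normal approximation. The observational estimate is treated as the true effect plus a bias term with mean zero and a chosen standard deviation. That bias variance is added to the observational variance before inverse-variance pooling.

The sketch below is illustrative only. The parameter `bias_sd` is a hypothetical modeling choice, and the code does not reproduce how the Mass COMM investigators encoded their "factor of two" assumption. All estimates are made up.

```python
import math

def pool_with_bias(trial_est, trial_se, obs_est, obs_se, bias_sd):
    """Pool a randomized estimate with an observational one, allowing for bias.

    Assumed model (normal approximation):
        obs_est ~ N(theta + delta, obs_se^2),  delta ~ N(0, bias_sd^2).
    bias_sd = 0 treats the sources as exchangeable; a very large bias_sd
    effectively ignores the observational data (the two ends of Figure 5-1).
    """
    w_trial = 1.0 / trial_se ** 2
    w_obs = 1.0 / (obs_se ** 2 + bias_sd ** 2)   # bias inflates obs variance
    est = (w_trial * trial_est + w_obs * obs_est) / (w_trial + w_obs)
    return est, math.sqrt(1.0 / (w_trial + w_obs))

# Hypothetical log-odds-ratio estimates: a small trial and a large registry.
for bias_sd in (0.0, 0.2, 1e6):
    est, se = pool_with_bias(0.10, 0.25, 0.30, 0.08, bias_sd)
    print(f"bias_sd={bias_sd:g}: pooled={est:.3f}, se={se:.3f}")
```

Sweeping `bias_sd` traces the continuum between the two extremes. The choice of this value should be made before the data are seen, as the Mass COMM investigators did with their bias assumption.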

Using Multiple Data Sources to Enhance Inference

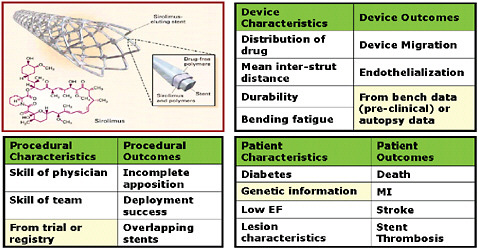

Drug-eluting stents (DES) are combination products that have largely prevented the problem of restenosis. The critical path for approval of DES, like that of all first-in-class therapies, included several phases, each of which involved a pass-or-fail score: basic research, prototype design, preclinical development including bench and animal testing, clinical development, and Food and Drug Administration (FDA) filing. Sharing knowledge from each of these domains, rather than only a pass-or-fail grade, should enhance the estimates of effectiveness and safety. As displayed in Figure 5-3, the types of data streams for DES include device, procedure, and patient characteristics and outcomes. It seems sensible to assume that the device characteristics would affect the device, procedural, and patient outcomes, that the procedural characteristics would affect the procedural and patient outcomes, and so on. By linking together all of these data streams through pooling, we will make more efficient use of information.

FIGURE 5-2 Schematic of Mass COMM Trial: One-way randomization with observational arm.

FIGURE 5-3 Integrating information: New ontologies (variations to consider in designing processes that link data in the case of drug-eluting stents).

SOURCE: Image appears courtesy of the Food and Drug Administration.

How should we pool these data sources? It is clear that there should be some probability model that links together the various silos of information. Statistical models for networks of information like that for DES exist but their practical applications have been limited.
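One way to make "a probability model that links together the silos" concrete is a directed graph, as in a Bayesian network. Each outcome stream is modeled conditionally on the characteristic streams assumed to influence it.

The sketch below encodes only the dependencies stated in the preceding discussion of Figure 5-3. The entry for patient characteristics is an added assumption, and the stream names are hypothetical labels. It also assumes, for simplicity, that outcomes are conditionally independent given their parents. A realistic model would likely let procedural outcomes feed patient outcomes as well.

```python
# Hypothetical dependency structure for the DES data streams (Figure 5-3).
# Keys are characteristic streams; values are the outcome streams each is
# assumed to influence. The "patient characteristics" entry is an assumption.
INFLUENCES = {
    "device characteristics": ["device outcomes", "procedural outcomes",
                               "patient outcomes"],
    "procedural characteristics": ["procedural outcomes", "patient outcomes"],
    "patient characteristics": ["patient outcomes"],
}

def parents_of(influences):
    """Invert the influence map: for each outcome, list the streams feeding it."""
    parents = {}
    for source, targets in influences.items():
        for target in targets:
            parents.setdefault(target, []).append(source)
    return parents

# Under the simplifying assumption above, the joint model for the outcomes
# factorizes into one conditional model per outcome stream.
for outcome, sources in parents_of(INFLUENCES).items():
    print(f"p({outcome} | {', '.join(sources)})")
```

Each printed conditional marks a place where data from different silos must be linked at the record level, or at least on shared covariates, before they can be pooled.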

Concluding Remarks

A key issue in the next generation of studies involves the development and implementation of pooling algorithms. The appropriateness of any pooling algorithm depends on three things: the structure of the data; the data collection tools; and the completeness, maintenance, and documentation of data elements. The next step is to expand our experience with pooling different data sources, and new study designs are needed that exploit features of diverse information sources.

There is some experience in pooling observational data with clinical trial data. Designs suited to this purpose, such as hybrid designs, preference-based designs, and quasi-experimental designs, are available but have not been exploited to their full potential. Little experience exists in pooling data beyond the historical or concurrent observational control setting, yet diverse data streams, such as those illustrated by the DES problem, are increasingly common. More work is needed on inferential tools that combine data appropriately and assess the relationships among the streams in large databases.

With the increasing number of registries, approaches for building the infrastructure to enable data sharing must be developed. Very little attention and money have been allocated to sufficient data documentation and quality control. An additional consideration is how best to validate findings. What is the correct strategy for combining preclinical, clinical, and bench data? How do we minimize false discovery rates and determine which hypotheses are true and which are false?
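One widely used, though by no means the only, tool for the false discovery question is the Benjamini-Hochberg step-up procedure. It controls the expected proportion of false rejections among all rejections at a chosen level q, assuming the tests are independent or positively dependent.

Below is a minimal sketch with hypothetical p-values. This is a standard technique offered for illustration, not a method proposed in the paper.

```python
def benjamini_hochberg(p_values, q=0.05):
    """Return the (sorted) indices of hypotheses rejected by the
    Benjamini-Hochberg step-up procedure at false discovery rate q."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    # Step-up rule: find the largest rank k with p_(k) <= k * q / m,
    # then reject the k smallest p-values.
    k_max = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank * q / m:
            k_max = rank
    return sorted(order[:k_max])

# Hypothetical p-values from ten hypotheses tested on a pooled database.
pvals = [0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216]
print(benjamini_hochberg(pvals, q=0.05))  # → [0, 1]
```

Three of these p-values fall below 0.05 individually, but only two survive FDR control. This illustrates how many candidate findings from large data streams should be treated as hypotheses for validation rather than as results.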

Finally, we need to educate researchers, regulators, and policy makers in the interpretation of results from more diverse study designs, and the assumptions made and limitations with these designs. The availability of large data streams does not guarantee valid results—thoughtful use of data sources and innovative analytical strategies will help produce valid information.

OBSERVATIONAL STUDIES

Wayne A. Ray, M.D., M.P.H.

Vanderbilt University

Observational studies of therapeutic interventions are critical for protecting the public health. However, high-profile, misleading observational studies, such as those of hormone replacement therapy (HRT), have materially undermined confidence in this methodology. While findings of observational studies are intrinsically more prone to uncertainty than those from randomized trials, at present many of these investigations have suboptimal methodology, which can be corrected. Common problems include elementary design errors; failure to identify a clinically meaningful t0, or start of follow-up; exposure and disease misclassification; use of overly broad end-points for safety studies; confounding by the healthy drug user effect; and marginal sample size. If observational studies are to play their needed role in clinical effectiveness studies, better training of epidemiologists to recognize and address these key issues is essential.

New technologies and expanding innovations in therapeutic interventions have led to an urgent need for more safety and efficacy studies. The logistical difficulties and slow pace of randomized controlled trials limit their use in many cases, and the RCT is also not appropriate for all research questions. Observational studies can help address this dilemma and enable research on many important clinical questions, as a number of past safety and efficacy findings from observational designs illustrate. Prominent examples include the high risk of endometrial cancer associated with unopposed estrogen therapy and the mortality benefit of colonoscopy in colorectal cancer.

However, observational studies have been criticized as inadequate for this purpose, having yielded several controversial and misleading findings, such as HRT and vitamin E associated with cardiovascular disease and dementia protection, findings later shown to be inaccurate by randomized controlled trials. The HRT findings led to millions more women using these therapies without the expected benefits. The same pitfalls are present in efficacy and safety studies based on observational data, as illustrated by findings that demonstrated a protective effect of non-steroidal anti-inflammatory drugs (NSAIDs) on dementia.3 The outcome of these well-publicized inaccurate findings is to lead researchers to discount the value of observational studies without exploring the source or analyzing the meth-

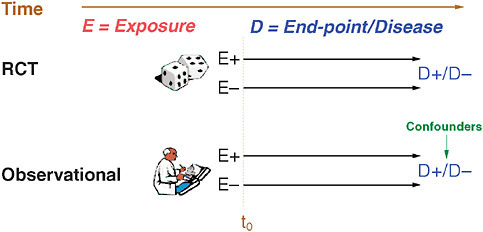

FIGURE 5-4 Notation used for observational studies in this paper.

odology. A closer look reveals that these errors are really the predictable result of ignoring some basic pharmaco-epidemiologic principles.

Figure 5-4 lays out the notation used throughout this paper. Consider a medication under study. Exposure (E) to the medication is either present (E+) or absent (E–) for various patients. In a clinical trial, individuals are randomized and then, starting at t0, followed forward in time, and the end-points of the disease under study are recorded for both the E+ and E– groups. Observational studies also have E+ and E– groups, begin follow-up at a certain t0, and follow individuals forward in time to determine end-points. However, there are some important differences. First, exposure (E) is determined not by randomization but by measurement. Second, choices by providers and patients in an observational study lead to self-selection differences, some of which may confound real associations.

Other problems frequently surface in pharmacoepidemiology studies:

- suboptimal t0;
- immortal person-time with respect to follow-up;
- misclassification of exposure, both at baseline and over time;
- misclassification of disease end-points, including overly broad or narrow designations.

Potential confounders include the healthy user effect and variables that are time dependent, unavailable, or misclassified. Finally, the study may be inadequately powered, particularly when end-points are infrequent or exposure is chronic.

The issue of suboptimal t0, or beginning of follow-up, is best illustrated by first considering evaluation of a surgical intervention such as coronary artery bypass graft (CABG). An evaluation that started following patients 90 days after surgery—perhaps to wait for patients to stabilize

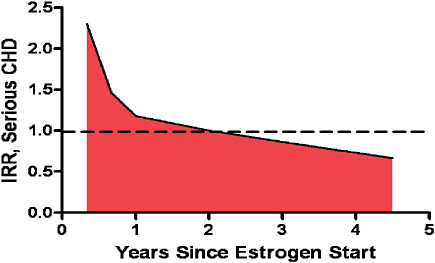

FIGURE 5-5 Risk of developing serious CHD in women using HRT therapy.

SOURCE: Derived from Hulley, S., D. Grady, T. Bush, et al. 1998. Randomized trial of estrogen plus progestin for secondary prevention of coronary heart disease in postmenopausal women. Heart and Estrogen Replacement Study (HERS) Research Group. Journal of the American Medical Association 280:605-613; Ray, W. A. 2003. Evaluating medication effects outside of clinical trials: new-user designs. American Journal of Epidemiology 158(9):915-920.

post-op—would conveniently exclude perioperative mortality. With these data excluded, CABG would appear much better than actual results. Although this type of t0 is an obvious error for surgical interventions, studies of medication often make this error with disastrous results. For example consider a woman who starts HRT. Studies suggest that as shown in Figure 5-5, there is an initial period of high risk for occurrence of coronary heart disease (CHD) and that this period of high risk abates with time (Ray, 2003). However, most of the epidemiologic studies of HRT began follow-up after this initial period, leading to a distortion of these studies’ results. Simply ensuring that follow-up initiates immediately following the start of therapy would greatly improve confidence in study findings.
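Simple arithmetic shows how the choice of t0 can reverse the apparent direction of an effect. The sketch below assumes equal person-time in every year of follow-up and a constant baseline event rate in nonusers. Under those assumptions, the overall rate ratio is just the average of the yearly rate ratios in the follow-up window. The yearly rate ratios are hypothetical and are not taken from HERS or Figure 5-5.

```python
def average_rate_ratio(yearly_rr, start_year):
    """Rate ratio (users vs nonusers) when follow-up begins at `start_year`,
    where year 0 is therapy initiation.

    Simplifying assumptions: equal person-time in each year and the same
    nonuser event rate every year, so the overall rate ratio equals the
    mean of the yearly rate ratios within the follow-up window.
    """
    window = yearly_rr[start_year:]
    return sum(window) / len(window)

# Hypothetical: risk doubles in the first year after initiation,
# then is modestly lower than in nonusers.
yearly_rr = [2.0, 0.9, 0.9, 0.9, 0.9]
print(f"t0 at initiation:    RR = {average_rate_ratio(yearly_rr, 0):.2f}")
print(f"t0 after first year: RR = {average_rate_ratio(yearly_rr, 1):.2f}")
```

With t0 at initiation, the drug shows net harm over 5 years. Starting follow-up a year later makes it look protective. A new-user design, which starts follow-up at initiation, avoids this.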

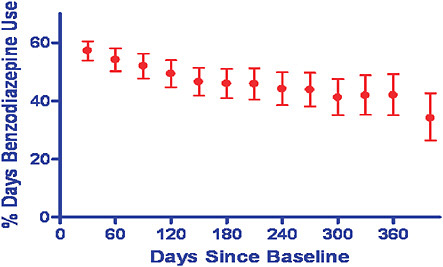

Another common problem is the failure to consider drug exposure that changes over time. This commonly will lead to underestimation of drug risk. For example, attrition and dosing changes can obscure true effects. For example in an examination of benzodiazepine use, in just 1 month, fewer than 60 percent of patients on the drug at baseline were still using it and by 1 year this was less than 40 percent. Figure 5-6 illustrates the point that if this single-point-in-time measurement of drug exposure is used to determine relative risk for falls, no effect is observed (1.02); whereas, if we take

FIGURE 5-6 Relative risk of benzodiazinepine determined through a single-point-in-time measurement of drug exposure.

SOURCE: Ray, W. A., P. B. Thapa, and P. Gideon. 2002. Misclassification of current benzodiazepine exposure by use of a single baseline measurement and its effects upon studies of injuries. Pharmacoepidemiology and Drug Safety 11(8):663-669. Reproduced with permission of John Wiley & Sons, Ltd.

into account time-dependent changes, a 44 percent increased risk of falls is observed (Ray et al., 2002). Although some advocate the use of intention to treat as in the conduct of clinical trials, in observational studies, there is not necessarily an intention to treat, maintain treatment, or promote adherence as in an RCT, so adherence rates may be low and discontinuation rates may be very high.
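Why does a single baseline measurement bias the estimate toward no effect? Each baseline group's event rate is a person-time-weighted mix of on-drug and off-drug time. The sketch below assumes the drug multiplies the event rate only while it is actually taken. The function name and all numbers are hypothetical and do not reproduce the benzodiazepine study.

```python
def baseline_exposure_rr(true_rr, frac_on_if_baseline_user,
                         frac_on_if_baseline_nonuser):
    """Rate ratio observed when exposure is fixed at a single baseline
    measurement although actual use changes during follow-up.

    Assumes the drug multiplies the event rate by `true_rr` only during
    time actually on the drug, with the same baseline rate otherwise.
    """
    def group_rate(frac_on):
        # Mix of on-drug and off-drug rates (baseline rate scaled to 1).
        return frac_on * true_rr + (1.0 - frac_on)
    return (group_rate(frac_on_if_baseline_user)
            / group_rate(frac_on_if_baseline_nonuser))

# Hypothetical: current use raises the rate by 50%, but baseline users are
# on the drug for only 40% of follow-up, and 5% of baseline nonusers'
# follow-up is spent on the drug.
print(f"{baseline_exposure_rr(1.5, 0.40, 0.05):.2f}")  # diluted toward 1
```

The true 50 percent increase appears as roughly 17 percent. With more attrition or more crossover, the estimate moves closer still to 1.0. This is why time-dependent exposure definitions matter for safety studies.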

A third common issue is the use of overly broad end-points. The choice of end-points should differ between the two designs. RCTs often use broad end-points appropriately to assess safety and efficacy, but such end-points may be less useful in larger observational studies. In safety analyses, a broad approach can obscure serious events by grouping them under less serious categories, such as classifying torsades de pointes as an “arrhythmia.” In addition, all-cause mortality is an important indicator in the closely defined, homogeneous populations of RCTs. It is much more difficult to assess in the more heterogeneous and less controlled setting of observational studies. There, therapies whose use is related to patients' general functional health or risk of death can appear to have mortality benefits when in fact none exist. For example, NSAID use has been shown statistically to confer a mortality benefit in observational studies, a benefit that cannot be reproduced in randomized trials.
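Simple arithmetic shows how a broad end-point dilutes a specific safety signal. The sketch below assumes the drug affects only one component of a composite end-point and leaves the others unchanged. The composite's rate ratio is then a share-weighted mix of that component's rate ratio and 1. The function name, share, and rate ratio are hypothetical.

```python
def broad_endpoint_rr(specific_rr, specific_share):
    """Rate ratio for a composite end-point when the drug affects only one
    component.

    `specific_share` is that component's share of composite events among
    nonusers; all other components are assumed to be unaffected by the drug.
    """
    return specific_share * specific_rr + (1.0 - specific_share)

# Hypothetical: a tripling of torsades de pointes, which makes up 2% of all
# coded "arrhythmia" events, shows up as only a 4% increase in arrhythmia.
print(f"{broad_endpoint_rr(3.0, 0.02):.2f}")
```

A 4 percent increase would need a very large sample to detect. The same signal is obvious when torsades de pointes is analyzed as its own end-point.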

A final but important source of bias in observational studies is the healthy drug user effect. People who seek preventive interventions and take medications regularly differ from those who do not. Because those who consistently take medication tend to be healthier, this effect biases results in favor of medications. For example, a study of angiotensin receptor blockers (ARBs) in heart failure demonstrated a 30–50 percent reduction in cardiovascular mortality among “good compliers,” even among those taking placebo. These data showed that mortality was better predicted by adherence than by therapy.
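The placebo finding can arise from confounding alone. The sketch below assumes two hypothetical patient strata with different baseline mortality and different probabilities of adhering, and a placebo that has no effect. Within each stratum, adherence is unrelated to outcome. Yet adherers, who come disproportionately from the healthier stratum, appear to have lower mortality. All numbers are made up.

```python
def adherer_mortality_ratio(strata):
    """Mortality ratio, adherers vs nonadherers, in a placebo arm where the
    placebo has no effect.

    Each stratum is (share of patients, probability of adhering, mortality
    risk). Within a stratum, adherence is independent of the outcome, so
    any difference between adherers and nonadherers is pure confounding.
    """
    deaths_adh = n_adh = deaths_non = n_non = 0.0
    for share, p_adhere, risk in strata:
        n_adh += share * p_adhere
        deaths_adh += share * p_adhere * risk
        n_non += share * (1 - p_adhere)
        deaths_non += share * (1 - p_adhere) * risk
    return (deaths_adh / n_adh) / (deaths_non / n_non)

# Hypothetical strata: health-conscious patients (adhere often, low risk)
# and other patients (adhere less often, higher risk).
strata = [(0.5, 0.9, 0.05), (0.5, 0.5, 0.12)]
print(f"{adherer_mortality_ratio(strata):.2f}")
```

Here placebo adherers have roughly 30 percent lower mortality than nonadherers, which is within the range reported for the ARB study. The difference comes entirely from who chooses to adhere.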

Given these potential problems, a “false-negative safety study” could be designed with the following features:

- a marginally adequate sample size;
- an exposure definition that is not time dependent or that includes substantial nonuser person-time;
- broad end-points, perhaps detected from unvalidated computerized data.

Similarly, one can create a “false-positive efficacy study”:

- First, focus on an exposure that people seek out, whether it is used for prevention, sought out by informed consumers, or requires patient reporting of symptoms.
- Second, make the cohort a large group of prevalent users who survived the period of prior drug therapy, and compare this cohort to a group of nonusers.
- Finally, use an end-point, such as cardiovascular disease or mortality, that is strongly influenced by behavior.

The design of observational studies is a complex subject, but the previous discussion has outlined some starting points for the way forward.

- First, observational analyses of safety should be separated from those of efficacy. For safety, the limitations that lead to false results are fairly easy to identify and counteract. A more difficult challenge is the need for infrastructure changes to reduce conflicts of interest among those who conduct safety studies.
- Second, for efficacy, RCTs should generally be a required first step to ensure that the expected benefits of therapy exist for the population as a whole, if not for the individual.
- Third, it is necessary to challenge the assumption that study design and analysis will be fast or easy because observational data often already exist in a database. There is an enormous amount of work involved in thinking through the particular question at hand, how various biases might apply, and how study design might effectively avoid these pitfalls.
- Finally, it is time to train a generation of epidemiologists to be more familiar with the clinical and pharmacological principles that affect the use of observational data. This expertise will allow researchers to better exploit the wealth of available observational data and will lead to improved study designs. These efforts also will improve the review of grants and manuscripts, two additional forces critically important to improving the quality of studies of healthcare interventions.

ENHANCING THE EVIDENCE TO IMPROVE PRACTICE: EXPERIMENTAL AND HYBRID STUDIES

A. John Rush, M.D.

Departments of Clinical Sciences and Psychiatry

The University of Texas Southwestern Medical Center at Dallas

Abstract

Efficacy studies establish treatments as safe, effective, and tolerable. Clinicians, however, need to know how, for whom, when (in the course of illness or in the course of multiple treatment steps), and in what settings specific treatments are best used. Variations in treatment tactics (e.g., dose, duration) are often required for patients of different ages or with co-morbid conditions. Treatments are also sometimes combined to enhance outcomes, but for which patients is a particular combination better? At what treatment step(s) is/are particular treatment(s) best? When should a treatment be switched if patients are not responding? Is there a preferred sequence of treatments for specific patient groups?

This report illustrates how some of these clinically important questions can be addressed with observational data obtained when systematic practices are employed, or with new study designs (e.g., hybrid studies, equipoise stratified randomized designs) or post hoc (e.g., moderator) analyses. Suggestions for advancing this type of T2 translational research are provided.

Introduction

In the pursuit of new treatments, basic science focuses on elaborating our understanding of how the human organism works—often relying on nonhuman experiments to elucidate biological processes and functions. As this understanding grows, one attempts to determine what diseases might be better understood with this basic knowledge. For example, new “drugable” targets may be identified. Then, new molecules are developed and tested preclinically to define their effects on the targets, their effects in animal models of disease, and their safety.

Once these hurdles are passed, these potential treatments are tested in man. If successful, one has established efficacy and safety of the new drug in one or another condition. FDA approval ensues, and the new treatment is announced.

The primary outcome of this process—sometimes called T1 translational research or “bench to bedside” research—is the development of a new treatment (Woolf, 2008). This process entails the “effective translation of the new knowledge, mechanisms, and techniques generated by advances in basic science research into new approaches for prevention, diagnosis, and treatment of disease” (Fontanarosa and DeAngelis, 2002).

Alternatively, an established treatment for one disease may be found in clinical practice (or by additional basic laboratory testing) to be of potential utility in another condition (e.g., the use of selected antiepileptic medications in the treatment of bipolar disorder; Emrich, 1990).

Once a new treatment is defined as safe enough and effective, many issues remain. Specifically, how to apply the treatment in practice—sometimes called T2 translational research (Sung et al., 2003)—must be addressed. T2 translational research has several components: (1) at the patient/clinician level, how, when, for whom, and in what settings or contexts the new treatment should be provided; (2) how the new treatment can be implemented widely (disseminated); and (3) if it is widely implemented, what the cost, cost efficiency, and cost consequences of properly using the treatment are.

I suggest that T1 translational research be called Translational Research and that T2 research be renamed Applications Research and divided into Clinical Implementation, Dissemination, and Systems/Policy research to further specify these different research enterprises, as implied by Woolf (2008).

This paper focuses on Clinical Implementation Research at the clinician/patient level. The following discussion attempts to identify the knowledge gaps that exist when a new treatment becomes available (i.e., it has established efficacy, safety, and regulatory approval). Major depressive disorder (MDD) (American Psychiatric Association, 2000) is used to illustrate the principles discussed and the issues that need to be addressed in this type of research.

Depression as a Case Example

Clinical depression is prevalent, typically chronic or recurring, disabling, and amenable to treatment with a wide range of interventions (American Psychiatric Association, 2000, Practice Guideline for the Treatment of Patients with Major Depressive Disorder [Revision]; U.S. Department of Health and Human Services et al., 1993). Similar to other medical syndromes, it is heterogeneous in terms of pathobiology, course of illness, genetic loading, and response to various treatments. It typically requires longer-term, not simply brief, acute management. These properties are common to other major medical disorders (e.g., congestive heart failure, cancer, hypertension, migraine headaches, epilepsy). Therefore, the following discussion uses depression as an example to illustrate the principles proposed.

Conceptualizing Clinical Applications Research

When a new antidepressant is released, it is known to be (1) more effective than placebo; (2) as effective as other available medications; and (3) safe and well enough tolerated to be a sensible option. Sometimes, (4) its longer-term efficacy has also been established in randomized, placebo-controlled discontinuation trials.

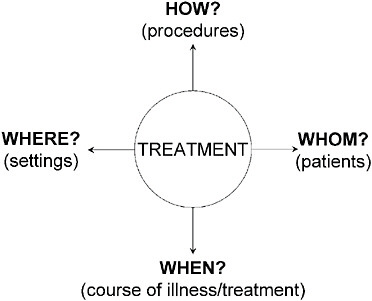

What is unknown (Figure 5-7) are the answers to a plethora of clinically relevant questions beyond how well the treatment works overall in practice. Specifically, how, when, for whom, and in what settings is the new treatment best used? Historically, answers to these questions have been relegated to the “art of medicine,” meaning that they have never been answered empirically. These evidence deficits in turn lead to high variance in how treatments are used and in the outcomes obtained.

Why are these questions unanswered? Perhaps it is assumed that clinicians will learn on their own how best to dispense the treatment. Alternatively, this type of research may be viewed as too simple or of little public health significance to merit funding. Or perhaps systems of care will decide these issues based on bottom line, short-term costs. In fact, without answers to these questions, far from optimal outcomes are likely with the treatment, and the cost efficiency is reduced.

FIGURE 5-7 T2 Translational research.

Figure 5-7 suggests a conceptual map of the key factors that affect outcomes of any treatment. The treatments are sometimes called treatment strategies. The remaining factors (how, whom, when, where) inform the treatment tactics (Crismon et al., 1999; Rush and Kupfer, 1995).

Treatment guidelines often specify which strategies are reasonable options at various steps in treatment (e.g., which medications are best used in the first, second, or subsequent steps). Guidelines also may recommend tactics for delivering the treatment (Rush, 2005; Rush and Prien, 1995). These recommendations more often than not rest largely or entirely on clinical consensus rather than on definitive evidence.

The “How” Factors. How treatment is delivered clearly affects the outcome. If the dose is too low, efficacy is low. If it is too high, either efficacy is again reduced and/or side effects ensue such that poor outcomes result. Other “how” factors include visit frequency, rate of dose escalation, and the diligence with which the dose and duration are managed such that an optimal chance of benefit can be achieved. These “how” factors, as with the other factors, affect outcomes and patient retention or attrition.

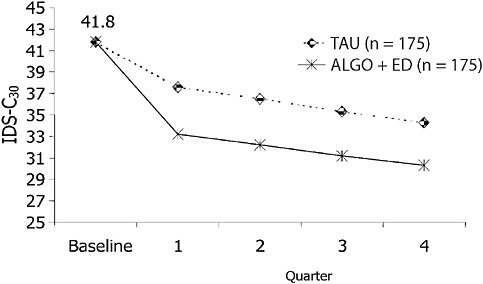

To illustrate the importance of how a treatment is delivered, consider Figure 5-8. Greater depressive symptom reduction than achieved by treatment as usual was obtained with a treatment algorithm (which provided both strategic and tactical recommendations and included the routine measurement of symptoms and tolerability to inform dose adjustments) (Trivedi et al., 2004a), despite the availability of the same antidepressant medications for both the algorithm and treatment as usual groups. Thus, a systematic approach that enhanced the quality of care resulted in better outcomes than more widely varying routine practice.

As further evidence of the importance of how a treatment is delivered, consider recent results from the National Institute of Mental Health (NIMH) multisite Sequenced Treatment Alternatives to Relieve Depression (STAR*D) trial (Fava et al., 2003; Rush et al., 2004). Typical practice entails a 2- to 4-week trial of an antidepressant, after which the treatment is switched if little effect is seen. The STAR*D trial revealed that one-third of those who ultimately responded within up to 14 weeks of treatment did so only after 6 weeks of medication (Trivedi et al., 2006a). These new data argue for longer treatment trials, which are likely to improve response rates.

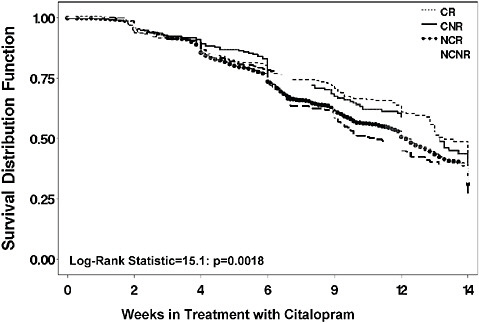

The “When” Factors. When to use a new treatment is also unclear. “When” refers to either when in the course of an illness (e.g., earlier or later) or when in the course of multiple treatment steps, or when in the context of treatment that has produced only a partial response. To illustrate the importance of these “when” factors, Figure 5-9 shows that remission is least likely and slowest in depressed patients with a recurrent course and

FIGURE 5-8 Adjusted mean depressive symptom scores on the IDS-C30. NOTE: IDS-C30 = 30-item Inventory of Depressive Symptomatology–Clinician-rated. SOURCE: Trivedi, M. H., A. J. Rush, M. L. Crismon, T. M. Kashner, M. G. Toprac, T. J. Carmody, T. Key, M. M. Biggs, K. Shores-Wilson, B. Witte, T. Suppes, A. L. Miller, K. Z. Altshuler, and S. P. Shon. 2004 (July). Clinical results for patients with major depressive disorder in the Texas Medication Algorithm Project. Archives of General Psychiatry 61(7):669-680. Copyright © 2004 American Medical Association. All rights reserved.

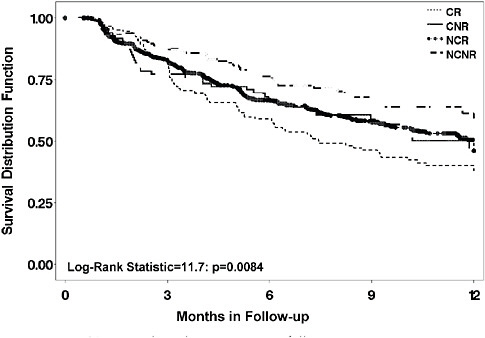

a chronic (≥2 year) index episode, while it is most rapid and effective in nonchronic, nonrecurrent patients (Rush et al., 2008). This previously unavailable information tells clinicians that a longer treatment trial is especially needed for more chronic and recurrent depressive illnesses (e.g., 9–12 weeks). Furthermore, relapse is most likely for those with chronic and recurrent depressions (Figure 5-10).

In addition, the point at which a treatment is used in a sequence of treatment steps can affect outcomes. Often new treatments are used only after several prior standard treatments. Is this preferred? In STAR*D, remission rates were lower when a treatment was used later in the sequence of steps: 33 percent remitted after the first treatment, 30 percent after the second, and 14 percent after each of the third and fourth treatment steps (Rush et al., 2006).
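The stepwise remission rates can be combined into a cumulative figure for a cohort that moves through all four steps. This is a minimal sketch. It assumes that every non-remitter enters the next step with no dropout, so the result is a theoretical figure; any attrition between steps would lower the observed share.

```python
def cumulative_remission(step_rates):
    """Share of the starting cohort that remits at some step, assuming every
    non-remitter proceeds to the next step (no dropout)."""
    still_ill = 1.0
    for rate in step_rates:
        still_ill *= 1.0 - rate  # non-remitters carried into the next step
    return 1.0 - still_ill


steps = [0.33, 0.30, 0.14, 0.14]  # stepwise remission rates quoted in the text
print(round(cumulative_remission(steps), 2))
```

Under the no-dropout assumption, about 65 percent of the cohort would remit by the end of the fourth step. Nearly half of those remissions (0.33 of 0.65) come from the first step.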

The “For Whom” Factors. The third domain that affects outcome involves patient groups for whom the treatment is best. Since depression is heterogeneous with regard to response, no one treatment works for all. While some evidence suggests that medication responses run in families (Stern et al., 1980), the “for whom” question is never addressed in efficacy trials, perhaps in part because efficacy trials enroll symptomatic volunteers with little or no co-morbid psychiatric or general medical pathology, with minimal chronicity and treatment resistance (i.e., prior failed treatment trials) (Table 5-1).

FIGURE 5-9 Time to remission by prior course of illness.

NOTE: CNR = chronic and nonrecurrent; CR = chronic and recurrent course; NCNR = neither chronic nor recurrent; NCR = nonchronic but recurrent.

FIGURE 5-10 Time to relapse by prior course of illness.

NOTE: CNR = chronic and nonrecurrent; CR = chronic and recurrent course; NCNR = neither chronic nor recurrent; NCR = nonchronic but recurrent.

TABLE 5-1 Population Gaps

| Parameter | Symptomatic Volunteers | Typical Patients |
|---|---|---|
| Chronically ill | – | +++ |
| Concurrent Axis I | + | +++ |
| Concurrent Axis III | + | +++ |
| Treatment-resistant | ± | +++ |
| Suicidal | – | ++ |
| Substance abusing | – | ++ |
| Will accept placebo | + | ± |

Narrowly defined efficacy samples arguably enhance internal validity by excluding subjects with concurrent co-morbid disorders that could affect efficacy or tolerability. Such samples, however, cannot address the “for whom” question. To illustrate this point, consider our recent finding (Wisniewski et al., 2007) that only 635 of 2,855 depressed STAR*D participants (22 percent) would have qualified for typical efficacy trials conducted for registration purposes. Remission rates were 35 percent for efficacy trial–eligible patients and 25 percent for efficacy trial–ineligible patients. Similarly, for depressed outpatients with three or more concurrent general medical conditions (GMCs), the odds ratio of remission was 0.47 (for three) and 0.52 (for four or more), as compared to 1.0 for no co-morbid GMCs and 0.83 for one to two co-morbid GMCs (Trivedi et al., 2006b). Similar results were found when depression was accompanied by several anxiety disorders (Fava et al., 2008; Trivedi et al., 2006b). These findings raise questions about the value of antidepressant medication in patients with more GMCs, something that could not be learned from efficacy trials.
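A few lines of arithmetic check the STAR*D eligibility and remission figures and put the odds ratios on an absolute scale. This is a minimal sketch. The text does not report the remission rate for patients without co-morbid GMCs, so the 30 percent reference rate used to translate the odds ratio of 0.47 into a probability is a hypothetical value.

```python
def odds(p):
    """Convert a probability to odds."""
    return p / (1.0 - p)


def odds_ratio(p_group, p_reference):
    """Crude odds ratio comparing two probabilities."""
    return odds(p_group) / odds(p_reference)


def prob_from_odds_ratio(p_reference, or_value):
    """Probability implied by applying an odds ratio to a reference probability."""
    o = odds(p_reference) * or_value
    return o / (1.0 + o)


eligible_share = 635 / 2855                 # share qualifying for efficacy trials
or_ineligible = odds_ratio(0.25, 0.35)      # remission: ineligible vs eligible
three_gmcs = prob_from_odds_ratio(0.30, 0.47)  # hypothetical 30% reference rate

print(round(eligible_share, 2), round(or_ineligible, 2), round(three_gmcs, 2))
```

The 25 versus 35 percent remission rates correspond to a crude odds ratio of about 0.62. With a hypothetical 30 percent reference rate, an odds ratio of 0.47 implies a remission rate of roughly 17 percent. An odds ratio alone does not fix the absolute rate.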

The “In What Settings” Factor. Finally, practice setting or context may well affect outcome. Different settings are associated with different kinds of patients, with different degrees of treatment resistance, different co-morbidities, different levels of social support or stress, different treatment procedures (e.g., visit frequency, dose escalation profiles, etc.), and different prior history. Thus, setting itself is likely a highly relevant outcome parameter as it encompasses several factors that can affect outcomes.

The above conceptual model (illustrated by case examples from depression) is applicable to the treatment of most diseases. The answers to the “how?,” “when?,” “for whom?,” and “in what setting?” questions will better define the best (safest, most effective) treatment for particular patients treated under specific conditions. To develop Clinical Implementation Research, two key issues must be resolved: (1) designing cost-efficient, rapidly executed studies to obtain the answers and (2) developing a consensus by which to prioritize the questions to be answered.

Trial Design Options

Efficacy

Efficacy trials carefully control the parameters of how, whom, when, and in what settings, so that when treatments are randomly assigned and a treatment difference (e.g., drug versus placebo or drug versus drug) is found, one can ascribe the difference in outcomes to the treatments with high certainty. A classic effectiveness trial can be seen as allowing all four parameters to vary. In fact, variance is sought, as are large samples, so that post hoc moderator analyses can be conducted to generate hypotheses about the patient groups for which one treatment is clearly better than another (Kraemer et al., 2002). Alternatively, one can ask whether between-treatment differences are greater in one setting (e.g., primary care) than in another (e.g., psychiatric care). Such effectiveness studies, so-called Practical Clinical Trials, require large samples and simple outcomes (March et al., 2005; Tunis et al., 2003). They usually entail randomization and are most easily conducted across sites with common electronic records and clinicians who routinely use the same primary outcome measures (e.g., blood pressure, or a common depression rating scale such as the 16-item Quick Inventory of Depressive Symptomatology–Self-Report [QIDS-SR16]) (Rush et al., 2003; Trivedi et al., 2004b).
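A post hoc moderator analysis compares the between-treatment difference across levels of a baseline characteristic. This is a minimal sketch on hypothetical counts. The moderator labels ("few_gmcs", "many_gmcs") and the helper names are illustrative, and the sketch computes only point estimates. A formal analysis would typically test a treatment-by-moderator interaction term in a regression model. As the text notes, any moderator found this way is a hypothesis to be tested prospectively.

```python
from collections import defaultdict


def subgroup_effects(records):
    """Remission-rate difference (A minus B) within each level of a baseline moderator.

    records: iterable of (treatment, moderator_level, remitted) tuples, with
    treatment "A" or "B" and remitted 0 or 1. Assumes every cell is nonempty.
    """
    cells = defaultdict(lambda: [0, 0])  # (treatment, level) -> [remitters, n]
    for treatment, level, remitted in records:
        cells[(treatment, level)][0] += remitted
        cells[(treatment, level)][1] += 1
    levels = sorted({level for _, level in cells})
    effects = {}
    for level in levels:
        remit_a, n_a = cells[("A", level)]
        remit_b, n_b = cells[("B", level)]
        effects[level] = remit_a / n_a - remit_b / n_b
    return effects


def make_cell(treatment, level, n, remitters):
    """Build n hypothetical patient records with the given number of remitters."""
    return ([(treatment, level, 1)] * remitters
            + [(treatment, level, 0)] * (n - remitters))


trial = (make_cell("A", "few_gmcs", 100, 40) + make_cell("B", "few_gmcs", 100, 30)
         + make_cell("A", "many_gmcs", 100, 20) + make_cell("B", "many_gmcs", 100, 22))
effects = subgroup_effects(trial)
interaction = effects["few_gmcs"] - effects["many_gmcs"]  # difference of differences
print({k: round(v, 2) for k, v in effects.items()}, round(interaction, 2))
```

In these made-up data, treatment A beats B by 10 points in one subgroup and trails by 2 points in the other. An interaction of 12 points of this kind would flag the moderator for prospective testing.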

Effectiveness

These effectiveness trials have the advantage of “generalizability” and the potential for identifying target populations, settings, preferred treatment procedures, or optimal timing (the “when” issue) for the use of a treatment. Once such moderators are identified, they must be tested prospectively to be validated (Kraemer et al., 2002). If differences between treatments A and B are found in an effectiveness trial, the difference could be due to patient subgroups, different treatment procedures in use, the timing of the use of the treatment, etc., or a combination. If the sample is large, however, randomization should usually balance these parameters across treatment groups, guarding against their confounding the comparison.
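A small simulation shows why a large randomized sample guards against chance imbalance in such parameters. This is a minimal sketch under stated assumptions: 1:1 coin-flip randomization and a hypothetical baseline subgroup (e.g., patients with many GMCs) making up 30 percent of patients. It measures how far the subgroup's share differs between arms.

```python
import random


def arm_imbalance(n, subgroup_share, seed=0):
    """Randomize n hypothetical patients 1:1 to arms A and B and return the
    absolute between-arm difference in the share belonging to a subgroup."""
    rng = random.Random(seed)
    tallies = {"A": [0, 0], "B": [0, 0]}  # arm -> [subgroup members, total]
    for _ in range(n):
        in_subgroup = rng.random() < subgroup_share  # baseline trait, fixed before assignment
        arm = rng.choice("AB")                       # coin-flip randomization
        tallies[arm][0] += in_subgroup
        tallies[arm][1] += 1
    share_a = tallies["A"][0] / tallies["A"][1]
    share_b = tallies["B"][0] / tallies["B"][1]
    return abs(share_a - share_b)


# Average chance imbalance over repeated trials shrinks as the sample grows.
for n in (100, 1_000, 10_000):
    mean_gap = sum(arm_imbalance(n, 0.3, seed) for seed in range(20)) / 20
    print(n, round(mean_gap, 3))
```

The gap shrinks roughly in proportion to one over the square root of the sample size. Randomization balances such parameters in expectation, and a large sample keeps the residual chance imbalance small.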

Hybrid

An alternative to a full effectiveness trial is a “hybrid trial” (Rush, 1999). Hybrid trials allow variance in one or more of the above four Treatment Outcome Relevant Parameters (TORPs) while controlling some or all of the remaining parameters. One can randomize to treatments, to different treatment procedures, or to different populations, etc.

The STAR*D trial (Fava et al., 2003; Rush et al., 2004) was a hybrid trial. The primary question in STAR*D was “what is the next best treatment if the initial, second, or third treatment steps have failed?” In essence, what are the best treatments for treatment-resistant depressions (i.e., depressions that have not benefited from one or several prior treatments)? Results had to be applicable to primary and psychiatric care settings, and generalizable to typical patients in practice (i.e., with common co-morbidities and a level of depression for which medications would typically be used as a first step). Thus, a full range of variance in patients (“for whom”) was allowed, but settings were restricted to primary and psychiatric care (public and private).

We wanted to know what the next best treatment is for depressions that did not benefit after one or several prior adequate treatment trials—not poorly delivered treatment. We, therefore, controlled both for the “how” and “when” parameters. In terms of the “how” parameters, we had to ensure that treatment was well delivered (i.e., to ensure that sufficient doses and durations were used in each treatment step) so that a failure to benefit from a treatment was likely due to the failure of the treatment and not to the failure to deliver the treatment.

We had to control for the “when” parameter to define the number of prior failed treatments and to enroll nonresistant patients (i.e., those with no prior treatment failures) in the first step. Thus, eligible patients were defined at enrollment as not treatment resistant. The first step was a single selective serotonin reuptake inhibitor (SSRI). Then, by using randomized treatment assignment in the second, third, and fourth treatment steps, we could isolate which of several different treatment options would be best for patients for whom one, two, or three prior treatments (each provided in the study itself) had failed.
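The hybrid idea can be written as a small data structure: each of the four Treatment Outcome Relevant Parameters is either controlled or allowed to vary. This is a minimal sketch. The three handling categories and the `is_hybrid` rule are an illustrative reading of the definition given earlier (vary some parameters while controlling others). They are not a classification taken from the source.

```python
from dataclasses import dataclass
from enum import Enum


class Handling(Enum):
    """How a design treats one Treatment Outcome Relevant Parameter (labels are illustrative)."""
    CONTROLLED = "fixed or tightly specified by protocol"
    RESTRICTED = "allowed to vary within stated limits"
    VARIED = "allowed to vary as in practice"


@dataclass(frozen=True)
class TrialDesign:
    name: str
    how: Handling       # dose, duration, visit frequency, dose escalation
    when: Handling      # point in illness course or treatment sequence
    for_whom: Handling  # patient population
    setting: Handling   # practice setting

    def is_hybrid(self) -> bool:
        """Hybrid: at least one parameter controlled and at least one not."""
        handled = {self.how, self.when, self.for_whom, self.setting}
        return Handling.CONTROLLED in handled and len(handled) > 1


C, R, V = Handling.CONTROLLED, Handling.RESTRICTED, Handling.VARIED
efficacy = TrialDesign("efficacy", how=C, when=C, for_whom=C, setting=C)
effectiveness = TrialDesign("effectiveness", how=V, when=V, for_whom=V, setting=V)
# STAR*D as described in the text: delivery and step number controlled, broad
# patient population, settings limited to primary and psychiatric care.
star_d = TrialDesign("STAR*D", how=C, when=C, for_whom=V, setting=R)

for design in (efficacy, effectiveness, star_d):
    print(design.name, design.is_hybrid())
```

Under this rule, an efficacy trial controls everything and a classic effectiveness trial controls nothing, so neither is hybrid. STAR*D, which controlled "how" and "when" but not "for whom", is hybrid.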

Since both primary and psychiatric care settings were involved in the study, there was a risk that setting could affect outcome. We found, however, that both the types of patients and the fidelity to protocol-recommended, guideline-based treatment were similar across the two types of settings. Consequently, outcomes were comparable across settings throughout all four treatment steps in the study.

This sort of hybrid design allows rather clear causal attribution to be made when between-treatment differences occur. For example, some advantage to bupropion-SR versus buspirone augmentation of citalopram was found in the second step. This difference was not due to setting, differences in treatment procedures, or when in the course of treatment these two treatments were used. In addition, hybrid trials of sufficient size can be subjected to moderator analyses (Rush et al., 2008).

Registries

Registries also can provide important information about the how, whom, when, and what setting parameters noted above. Because all STAR*D patients received a single, well-delivered SSRI (citalopram) in the first step, those who did not need a second step formed a large population that was followed for up to a year after this first step. These sorts of registry-like data help to define the long-term course of treated depression, and such registry cohorts also provide safety and tolerability data. For example, Figure 5-10 shows that among depressed patients who do well enough to enter long-term treatment, those with a more chronic or recurrent prior course have the worst prognosis, even with treatment. Registry data also may suggest genetic features relevant to side effects (Laje et al., 2007) or longer term outcomes. As with any observational study, however, replication is essential.
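Time-to-relapse curves like Figure 5-10 summarize registry follow-up in which some patients leave before relapsing. The Kaplan-Meier estimator is a standard way to handle such censored data. The text does not say which method produced the figures, so this is a minimal sketch on hypothetical follow-up data, not a reconstruction of the published analysis.

```python
def kaplan_meier(times, events):
    """Kaplan-Meier estimate of the probability of remaining relapse-free.

    times: weeks of follow-up; events: 1 if relapse was observed at that time,
    0 if follow-up ended without relapse (censored). Returns (time, survival)
    pairs at each distinct relapse time.
    """
    data = sorted(zip(times, events))
    at_risk = len(data)
    survival = 1.0
    curve = []
    i = 0
    while i < len(data):
        t = data[i][0]
        relapses = censored = 0
        while i < len(data) and data[i][0] == t:  # group ties at time t
            if data[i][1]:
                relapses += 1
            else:
                censored += 1
            i += 1
        if relapses:
            survival *= 1.0 - relapses / at_risk
            curve.append((t, survival))
        at_risk -= relapses + censored  # censored patients leave after time t
    return curve


# Hypothetical registry follow-up for six patients.
weeks = [5, 8, 8, 12, 20, 30]
relapsed = [1, 1, 0, 1, 0, 0]
for t, s in kaplan_meier(weeks, relapsed):
    print(t, round(s, 3))
```

Censored patients still count in the risk set up to their last visit, so early dropouts are neither treated as relapses nor simply discarded. Estimating one curve per prior-course group would give curves shaped like those in Figures 5-9 and 5-10.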

Other Designs

Finally, a comment about other study designs is in order, in particular adaptive designs (Murphy et al., 2007; Pineau et al., 2007) and equipoise stratified randomized designs (Lavori et al., 2001). Both designs attempt to mimic practice and allow prospective evaluation of common practice procedures about which there is controversy. For example, adaptive designs can determine whether continuing the same treatment longer, switching to a different treatment, or adding a second treatment to the first is preferred, overall or for certain patient subgroups. As a further illustration, when depressed patients worsen after months of good response to treatment, does one raise the dose, hold and wait, or add an augmenting agent? We currently do not know. While a registry without randomization may identify the common practices for these cases, without randomization we cannot be sure of the next best step.
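Adaptive designs of this kind are often run as sequential multiple-assignment randomized trials (SMARTs). In a SMART, patients are re-randomized at each decision point according to their response so far. This is a minimal sketch of one decision point. The status labels, option names, and equal allocation are assumptions for illustration, and the adaptive designs cited above may differ in detail.

```python
import random

# Hypothetical labels for the options discussed above.
NONRESPONDER_OPTIONS = ("continue_longer", "switch", "augment")


def next_step(status, rng):
    """Second-stage assignment in a two-stage sequential randomized design.

    Responders stay on their current treatment; patients with no or partial
    response are re-randomized with equal probability among the options.
    """
    if status == "responder":
        return "continue_current"
    return rng.choice(NONRESPONDER_OPTIONS)


def assign_stage_two(stage_one_status, seed=0):
    """stage_one_status: dict of patient id -> 'responder', 'partial', or 'none'."""
    rng = random.Random(seed)
    return {pid: next_step(status, rng)
            for pid, status in sorted(stage_one_status.items())}


print(assign_stage_two({"p1": "responder", "p2": "partial", "p3": "none"}))
```

Because the second-stage choice is randomized within each response group, outcomes can be compared causally among patients who reached the same decision point. This is the comparison an unrandomized registry cannot supply.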

Another attempt to mimic practice while retaining randomization is the equipoise stratified randomized design (ESRD) (Lavori et al., 2001), which was used in STAR*D. It allowed patients with inadequate benefit from the first treatment step (citalopram) to eliminate certain treatment strategies in the second step while accepting the remaining strategies, all of which entailed randomization to various treatment options. To illustrate, the second step provided both (1) a switch strategy (citalopram was stopped and patients were randomized to one of four new treatments) and (2) an augmentation strategy (randomization to one of three new treatments added to continuing citalopram). Patients could decline one of these strategies (e.g., eliminate augmentation) while accepting the switch strategy and the subsequent randomization to one of four treatments. This design was based on clinical experience, which suggested that patients who were substantially better with the first treatment—but not entirely well, so that additional treatment would be needed—would decline switching (to avoid losing the benefit from the step 1 treatment). On the other hand, we expected that those with little benefit and/or high side effects from the first treatment would prefer to switch and decline augmentation. This is, in fact, what we found (Wisniewski et al., 2007). The ESRD allowed participants to be randomized to the specific second-step treatments that they were more likely to receive in practice, so that results are generalizable to practice.
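The ESRD assignment step can be sketched as follows. The patient's set of acceptable strategies defines a stratum, and randomization happens only among treatments inside that set. The treatment names below are hypothetical placeholders, because the text gives only the number of options per strategy. The equal-probability allocation across all acceptable options is likewise an assumption for illustration.

```python
import random

# Hypothetical placeholders: the text gives only the number of options per
# strategy (four switch treatments, three augmentation treatments).
STRATEGIES = {
    "switch": ["switch_1", "switch_2", "switch_3", "switch_4"],
    "augment": ["augment_1", "augment_2", "augment_3"],
}


def esrd_assign(acceptable_strategies, rng):
    """Return (stratum, treatment) for one patient.

    The stratum is the set of strategies the patient accepts; the treatment is
    drawn with equal probability from all options within those strategies
    (an assumed allocation rule for illustration).
    """
    stratum = tuple(sorted(acceptable_strategies))  # sorted for reproducibility
    if not stratum:
        raise ValueError("a patient must accept at least one strategy")
    options = [option for strategy in stratum for option in STRATEGIES[strategy]]
    return stratum, rng.choice(options)


rng = random.Random(42)
print(esrd_assign({"switch"}, rng))             # declined augmentation
print(esrd_assign({"augment"}, rng))            # declined switching
print(esrd_assign({"switch", "augment"}, rng))  # accepted both
```

Treatment comparisons are then made within strata. Patients who declined switching differ systematically from those who declined augmentation, as the STAR*D experience described above shows, so pooling across strata would reintroduce confounding.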

Our conclusion is that effectiveness, hybrid, registry, adaptive treatment, and other designs all can inform practice. The key issue is to identify the most important questions to be addressed in Clinical Implementation Research.

Defining the Key Clinical Implementation Questions

The discussion above illustrates that a host of clinically critical questions remain once a new treatment becomes available. These questions can be grouped into four conceptual domains (how, for whom, when, what setting). A range of study designs (registry/cohort studies, effectiveness, hybrid, adaptive, and ESRD) is available. The central challenge, however, is how to identify the most important questions to be addressed by Clinical Implementation Research.

Ideally, all stakeholders would have the same question in mind at the outset, but this is often not the case. In fact, the key questions likely vary based on the disease and the available knowledge about treatment of the disease. For example, for STAR*D we wanted to know the next best treatment if the first (or a subsequent one) failed. For Parkinson’s disease, it could be how to manage the depression or prevent the dementia. For HIV, it could be how to manage lipodystrophy or when to use specific combination treatments.

In addition, the perspectives of various stakeholders differ. Patients may be more concerned with side effects, adherence, or quality of life. For clinicians, the priority may be symptom control. For payers, it may be cost recovery or defining the best way to implement procedures. For family members, it could be how to reduce care burden.

Other parameters that affect selection of the key questions for study include (1) Will the answer change practice? (2) Will the answer change our understanding of the disorder? (3) Will the answer have an enduring shelf life? and (4) Will the answer reduce wide practice variations or resolve common controversies about how to manage the disease? If a procedure is commonly used but supported by little evidence, the importance of evaluating it may be particularly high, especially if it is substantially more or less costly than the alternative (e.g., a diuretic versus an ACE inhibitor for hypertension).

One way to define the key questions is to use disease focus groups, which could be accomplished by Web meetings or in-person meetings or perhaps by convening task forces that report to Councils of specific NIH Institutes. Based on registries or large healthcare use databases or literature reviews—perhaps commissioned by AHRQ—one could identify common practices for which there is wide practice variation (or uncommon practices for which there is great promise), with little evidence for which of these alternatives is most effective, safe, or cost-efficient.

Other information sources that could help to define these key questions could include secondary analyses of available large trials, data from current registries, development of registries to identify common practices or potential changes in practice, and data mining large databases (e.g., HMOs).

I would suggest that each NIH Institute select one to three disease targets based on the public health impact of the diseases and the potential for better prevention or treatment, given current practice, practice variation, cost impact, and knowledge about and availability of the interventions. Each Institute could then hold a consensus conference, with diverse stakeholder participation, to identify the one or two questions of highest priority. From this consensus, requests for applications (RFAs) could be released and contracts let to address these questions in a timely and focused fashion. The relevant institutes should make a significant annual financial commitment to Clinical Implementation Research (T2) as well as to System Implementation Research (T2).

Reengineering Practice for T2 Research

Not only must the key questions for specific diseases be identified, but the practice “system” also needs to be reengineered to facilitate such research. With such reengineering, the cost of this research should go down, the system can learn as it goes, and answers can be provided much more rapidly. Obvious suggestions include (1) registries and EMRs for difficult-to-treat diseases, to raise hypotheses about for whom and when treatments work best or to identify safety/tolerability concerns; (2) agreement on common outcome measures that have both research and clinical relevance; (3) payment to providers to obtain these measures if they are not part of current care (e.g., function at work, absenteeism, role function as parent, student, etc.); (4) training of clinicians who could participate in the basics of clinical research, so that collaboration is facilitated; and (5) payment to clinics in the system for research time and effort if needed.

Conclusion

While major treatment advances have been realized from basic research, it is clear that simply making a new treatment available to clinicians is not sufficient to ensure its optimal, appropriate, and safe use. How, for whom, when, and in what context it is best to use the treatment, and the cost implications of these decisions, deserve greater emphasis in funding and prioritization than they have received. A variety of design options are available. With systems of care now using electronic medical records, large practical clinical trials are feasible. One major hurdle remains: how to select the most important questions for prospective study to ensure that results will change practice, enhance outcomes, improve cost efficiency, and/or make treatments safer.

To define these key questions, one must engage key stakeholders, focus on particular diseases, and engage care systems or develop specialized networks in which the research can be conducted. Then, once the questions are defined, designs must be identified or developed to obtain the answers.

Institute leadership from across the NIH with critical input and collaboration from clinicians, patients, investigators, and payers is a prerequisite. Finally, either additional funding targeted at these questions or a shift in already very restricted resources is called for. Without these commitments, how, when, for whom, and in what setting a treatment is best will remain the “art of medicine,” rather than the science it could be.

ACCOMMODATING GENETIC VARIATION AS A STANDARD FEATURE OF CLINICAL RESEARCH

Isaac Kohane, M.D., Ph.D.

Harvard Medical School

Large numbers of subjects are needed to obtain reproducible results relating disease characteristics to rare events or weak effects such as those measured for common genetic variants. These numbers appear to be much higher than the 3,000–5,000 subjects that were characteristic of such studies only 5 years ago. The costs of assembling, phenotyping, and studying these large populations are substantial, estimated at $3 billion for 500,000 individuals. Fortunately, the informational by-products of routine clinical care can be used to bring phenotyping and sample acquisition to the same high-throughput, commodity price point that genotyping has already reached. The National Center for Biomedical Computing, Informatics for Integrating Biology and the Bedside (i2b2), provides a concrete and freely available demonstration of how such efficiencies in discovery research can be delivered today, without creating an entirely parallel biomedical research infrastructure and at an order of magnitude lower cost.

Although genomics is poised to have a significant impact on clinical care, the medical system is relatively ignorant about genetics. A classic example is the surprising result of a recent survey showing that although 30–40 percent of primary care practitioners had ordered a genetic test for cancer screening in the prior year, this was driven not by expected predictors such as a patient’s family history or the practitioner’s education, but by patient requests for the test (Wideroff et al., 2003). The genomic era poses, in sharper form, all of the questions we are asking about secondary use of data, and as such it is a useful lens through which to examine these problems. Even beyond genetics and genomics, the same issues come up again and again. This brief overview addresses how we might instrument the healthcare system for discovery research in the genomic era.

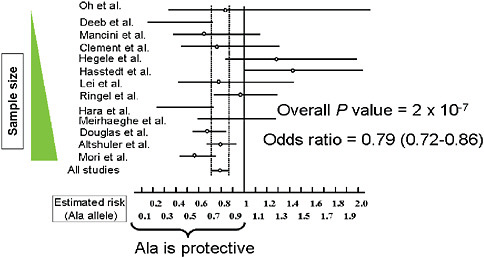

Determining true genetic associations is difficult as illustrated by a meta-analysis done by Hirschhorn of 13 studies of a single nucleotide polymorphism (SNP) that results in an amino acid substitution in the protein PPAR-gamma. This substitution has long been suspected as implicated with Type 2 diabetes susceptibility. The odds ratio reported by each of these individual studies is all over the map, and only when these data are considered in total, is it clear that their polymorphism is actually slightly protective for Type 2 diabetes (Figure 5-11) (Altshuler et al., 2000). This finding illustrates two key issues: (1) research on common variants will need

FIGURE 5-11 Comparison of studies for PPARγ Pro12Ala and Type 2 diabetes susceptibility.

SOURCE: Adapted by permission from Macmillan Publishers, Ltd. Nature Genetics 26(1):76-80. Copyright © 2000.

to include the appropriate sample size. Rather than sample sizes such as 100 or even 1,000 patients, as in these 13 underpowered studies, research will require populations on the order of 10,000 patients; (2) the large number of SNP (current tests incorporate 500,000 SNPs) coupled with relatively inexpensive and fast analyses will likely result in an overwhelming number of misleading findings and associations. The commercialization of genomic sequencing and screenings will likely compound these issues.
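To make the pooling argument concrete, the sketch below combines study-level odds ratios with a fixed-effect, inverse-variance meta-analysis and then estimates how many people an allelic case-control test would need. It is an illustration under stated assumptions, not a reanalysis of Figure 5-11: the five studies are hypothetical, the allele frequency (0.15) and odds ratio (0.8) are illustrative values, and the sample-size formula assumes a two-proportion z-test on allele counts under Hardy-Weinberg equilibrium.

```python
import math
from statistics import NormalDist

# Hypothetical study results as (odds ratio, 95% CI lower, 95% CI upper).
# Illustrative numbers only; these are NOT the 13 studies in Figure 5-11.
STUDIES = [
    (0.60, 0.30, 1.20),
    (1.40, 0.70, 2.80),
    (0.85, 0.55, 1.31),
    (0.75, 0.50, 1.12),
    (1.10, 0.60, 2.02),
]


def pooled_odds_ratio(studies, level=0.95):
    """Fixed-effect, inverse-variance pooling of log odds ratios.

    Returns (pooled OR, CI lower, CI upper).
    """
    z = NormalDist().inv_cdf(0.5 + level / 2)
    total_weight = 0.0
    weighted_sum = 0.0
    for odds_ratio, lower, upper in studies:
        # Back out the standard error of log(OR) from the CI width,
        # assuming each CI was symmetric on the log scale.
        se = (math.log(upper) - math.log(lower)) / (2 * z)
        weight = 1.0 / se ** 2
        total_weight += weight
        weighted_sum += weight * math.log(odds_ratio)
    pooled_log = weighted_sum / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return (math.exp(pooled_log),
            math.exp(pooled_log - z * pooled_se),
            math.exp(pooled_log + z * pooled_se))


def individuals_per_group(control_allele_freq, odds_ratio, alpha=5e-8, power=0.8):
    """Approximate cases needed (with equal controls) for a two-sided allelic test.

    Uses the two-proportion z-test sample-size formula on allele counts and
    assumes Hardy-Weinberg equilibrium, so each person contributes two alleles.
    """
    p0 = control_allele_freq
    case_odds = odds_ratio * p0 / (1 - p0)
    p1 = case_odds / (1 + case_odds)
    p_bar = (p0 + p1) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    root = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
            + z_beta * math.sqrt(p0 * (1 - p0) + p1 * (1 - p1)))
    alleles_per_group = root ** 2 / (p1 - p0) ** 2
    return math.ceil(alleles_per_group / 2)


if __name__ == "__main__":
    print("pooled OR and 95% CI:", pooled_odds_ratio(STUDIES))
    print("per group, alpha=0.05:", individuals_per_group(0.15, 0.8, alpha=0.05))
    print("per group, alpha=5e-8:", individuals_per_group(0.15, 0.8))
```

The pooled interval is narrower than any single study's, which is the point of considering the data in total. Under these assumptions, detecting an odds ratio of 0.8 at a genome-wide threshold of 5 × 10⁻⁸ with 80 percent power takes roughly 6,800 cases and as many controls, and even the nominal 0.05 level needs over a thousand per group.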

A significant threat to genomic medicine, therefore, is the phenomenon of the “incidentalome,” in which a large N combined with a small prior probability of disease, p(D), leads to the discovery of multiple abnormal genomic findings. If all of these findings are pursued without thought, the ramifications for clinicians, patients, and the health system will call into question the overall societal benefit of genomic medicine (Kohane et al., 2006). For example, testing 100,000 individuals with a genetic test that is 100 percent sensitive and 99.99 percent specific will lead to about 10 false positives. If a commercially available DNA chip has 10,000 independent gene tests, roughly 60 percent of the population would be falsely positive on at least one of them. Current genetic tests have lower specificity and sensitivity (perhaps 80 percent and 90 percent, respectively), but because their use in practice is limited and clinicians tend to order them when there is a clinical indication (thereby increasing the prior likelihood that the patient has the disease), we have fortunately not yet been assaulted by a tsunami of false-positive results. However, the emerging commercial approach of running broad population screens and many tests in parallel, without first enriching for risk, threatens to greatly increase the number of spurious findings. And because it is no more difficult to get a 100,000-SNP chip approved by the FDA than a 100-SNP chip, pressures toward the incidentalome (the universe of all possible false-positive findings) are very substantial and will increasingly present significant challenges to providers, patients, and insurers.
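The arithmetic behind these figures can be checked directly. The sketch below assumes independent tests and, for the false-positive count, an essentially disease-free population; the positive predictive value function applies Bayes’ rule to show why the prior probability p(D) matters so much. Function names are illustrative.

```python
def expected_false_positives(n_tested, specificity, prevalence=0.0):
    """Expected false positives when n_tested people each receive one test."""
    disease_free = n_tested * (1 - prevalence)
    return disease_free * (1 - specificity)


def prob_any_false_positive(specificity, n_independent_tests):
    """Chance that a disease-free person is flagged by at least one of k independent tests."""
    return 1 - specificity ** n_independent_tests


def positive_predictive_value(sensitivity, specificity, prior):
    """Bayes' rule: P(disease | positive result) given the prior probability p(D)."""
    true_positive = sensitivity * prior
    false_positive = (1 - specificity) * (1 - prior)
    return true_positive / (true_positive + false_positive)


if __name__ == "__main__":
    print(expected_false_positives(100_000, 0.9999))   # single test, 100,000 people
    print(prob_any_false_positive(0.9999, 10_000))      # 10,000-test chip
    print(positive_predictive_value(1.0, 0.9999, prior=1e-5))  # unselected screen
    print(positive_predictive_value(1.0, 0.9999, prior=1e-2))  # clinically indicated
```

The chip example comes out at about 63 percent. For the same 100 percent sensitive, 99.99 percent specific test, the positive predictive value is about 9 percent at a prior of 1 in 100,000 but about 99 percent at a prior of 1 in 100, which is the quantitative sense in which enriching for risk before testing holds the incidentalome at bay.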

To overcome these problems, the field should focus on approaches and opportunities to assemble a large number (N) of appropriate patients. Efficiently reaching a large N requires three prongs of instrumentation: high-throughput genotyping, high-throughput phenotyping, and high-throughput sample acquisition. The emphasis on efficiency is paramount given resource constraints. In the U.S. Department of Health and Human Services, for example, the Secretary’s Advisory Committee on Genetics, Health and Society (SACGHS) recognizes the significant health value of a study of 500,000 to 1 million subjects to understand the interaction between genes and environment; however, this study is estimated to cost about $3 billion. Likewise, a pediatric study of merely 100,000 individuals launched by the NIH is estimated to cost $1–$2 billion over the next two decades. Given the number of similar large-scale genomic studies that could be initiated in the coming decades, developing efficient and inexpensive approaches to obtaining data of the needed quality and quantity is of utmost importance.

With respect to the three prongs of instrumentation, only high-throughput genotyping is in place, and with commoditization its price is dropping rapidly (currently $250–$500 for 500,000 SNPs). The remainder of my discussion focuses on efforts to bring greater efficiency and affordability to phenotyping and sample acquisition and, in particular, on several new open source tools that aim to help the healthcare enterprise better capture the information and bioproducts generated during clinical care so that they can be used effectively for discovery research.

An important component of any analysis is being able to obtain the “right” populations through phenotyping. To develop an appropriate approach, we have collaborated with computer scientists and software engineers and are working to assess a wide range of phenotypes and diseases, from asthma to major depression, rheumatoid arthritis, essential hypertension, and other common diseases. The following example focuses on efforts to translate genetic findings to improve clinical outcomes in the treatment of asthma. Several colleagues had identified, in populations in Costa Rica and China, a collection of SNPs that moderately distinguished asthmatics who responded to glucocorticoid therapy from those who did not. Determining the relevance of these findings to clinical practice in Boston required identifying the appropriate set of patients to study. How could we identify these patients through our computerized health records? Billing codes are too coarse grained and biased for this type of analysis, so we used automated natural language processing to evaluate the text of doctors’ notes in online health records. Improving this technique to the point that it was useful was quite challenging, but ultimately we were able to quickly, reproducibly, and accurately stratify 96,000 out of 2.5 million patients for disease severity, pharmacoresponsiveness, and exposures. With cases and controls (drawn from the extremes) re-consented and biomaterials obtained, we were then able to identify responders and nonresponders to glucocorticoid therapy. If this type of system can be implemented and used successfully across many systems, high-throughput phenotyping may be achievable at the national level. Indeed, over 15 large academic centers have adopted the i2b2 software (freely available under an open source license), so there is some reason for optimism in this regard.
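The text does not describe the natural language processing pipeline itself, so the following is only a toy, hypothetical illustration of the general idea: flag notes that affirm a diagnosis, skip mentions preceded by a negation cue, and require several affirming notes before labeling a patient a case. The regular expressions, cue list, and two-note threshold are all assumptions. Production systems use much richer vocabularies, section detection, and validated negation algorithms, and they also extract attributes such as severity and medication response, which this sketch ignores.

```python
import re
from collections import defaultdict

# Hypothetical patterns; only negation cues that PRECEDE the mention are handled,
# so phrasings such as "asthma was ruled out" would be missed by this sketch.
ASTHMA = re.compile(r"\basthma(tic)?\b", re.IGNORECASE)
NEGATION = re.compile(r"\b(no|denies|without|negative for)\b", re.IGNORECASE)
SENTENCE_END = re.compile(r"[.;!?]\s*")


def note_affirms_asthma(note_text):
    """True if some sentence mentions asthma with no negation cue before the mention."""
    for sentence in SENTENCE_END.split(note_text):
        match = ASTHMA.search(sentence)
        if match and not NEGATION.search(sentence[:match.start()]):
            return True
    return False


def classify_patients(notes, min_affirming_notes=2):
    """Label each patient a case if enough of their notes affirm asthma.

    `notes` is an iterable of (patient_id, note_text) pairs.
    Returns a dict mapping patient_id -> bool.
    """
    affirming = defaultdict(int)
    patients = set()
    for patient_id, text in notes:
        patients.add(patient_id)
        if note_affirms_asthma(text):
            affirming[patient_id] += 1
    return {pid: affirming[pid] >= min_affirming_notes for pid in patients}
```

Requiring more than one affirming note is a common way to trade a little sensitivity for specificity, which matters when the goal is a clean case group rather than a complete census.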

Another significant barrier lies in obtaining biosamples for any phenotyped population. That is, how do we find samples that match the patients just identified through natural language processing? Initial efforts to obtain samples and consent relied on outreach to patients through their primary care practitioners, a process that was resource and time intensive. The newly developed Crimson system, pioneered by Dr. Bry at the Brigham and Women’s Hospital in Boston, is being tested as an alternative and more efficient way to unite patient phenotype with genotype data. The system takes advantage of the many biosamples that laboratories collect in the routine course of care but ultimately discard after use. Crimson is able to identify when these samples match phenotyped populations (such as the 96,000 asthmatics identified in the previous example). The end result is efficient acquisition of real biological samples, usable for a number of genomic tests and biological assays, matched with a rich set of known phenotypes. We have obtained 8,000 samples to date, with over 5,000 released for analysis. The opportunities presented by these richly annotated biospecimens are substantial, whether through DNA analysis by gene array, genomewide association studies, or SNP analyses; the identification of new serum/plasma markers; auto-antibody studies; testing of new antibiotics or antiviral compounds; or metabolism studies of clinical isolates.
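The matching step that Crimson performs can be pictured as a join between a laboratory’s stream of to-be-discarded specimens and the set of phenotyped patient identifiers. The record layout, field names, and “most recent sample wins” rule below are hypothetical, and the sketch deliberately leaves out the consent, de-identification, and institutional review steps that a real system must enforce.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class DiscardedSample:
    """Hypothetical record for a leftover clinical specimen slated for disposal."""
    sample_id: str
    patient_id: str
    specimen_type: str
    collected: date


def match_samples(samples, cohort_ids, wanted_types=frozenset({"blood"})):
    """Keep the most recent usable sample for each patient in the phenotyped cohort.

    Returns a dict mapping patient_id -> DiscardedSample.
    """
    selected = {}
    for sample in samples:
        if sample.patient_id not in cohort_ids:
            continue  # patient was not identified by phenotyping
        if sample.specimen_type not in wanted_types:
            continue  # specimen unsuitable for the planned assay
        current = selected.get(sample.patient_id)
        if current is None or sample.collected > current.collected:
            selected[sample.patient_id] = sample
    return selected
```

Because the cohort is a set, each membership check is constant time on average, so the join scales linearly with the volume of specimens flowing through the laboratory.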

If these advances lead to high-throughput phenotyping and sample acquisition within the decade, we can decrease the costs of large-scale genomic studies significantly. In contrast to the estimated $3 billion needed for the SACGHS study of 1 million patients, in 3 years we might expect such a study to cost less than $150 million and to take less than one-tenth of the time to execute. Changes of this order of magnitude will substantially change the number of studies that can be done.
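Expressed per subject, and using the 1 million-subject study size from the paragraph above, the projected savings work out as follows:

```latex
\frac{\$3\times 10^{9}}{10^{6}\ \text{subjects}} = \$3{,}000\ \text{per subject},
\qquad
\frac{\$1.5\times 10^{8}}{10^{6}\ \text{subjects}} = \$150\ \text{per subject},
\qquad
\frac{\$3{,}000}{\$150} = 20 .
```

That is a twentyfold reduction in cost per subject, before counting the shorter time to completion.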

Instrumenting the health enterprise has important implications outside of genomic research as well. Existing databases, such as the data mart maintained by Partners HealthCare, can be used for analyses aimed at detecting safety or risk signals, such as the increased cardiovascular risk in patients taking Vioxx (Brownstein et al., 2007). As we move forward, it is therefore important to consider how the healthcare enterprise can be used both for discovery research and for surveillance. Finally, it is worth noting that health is not limited to the provision of healthcare, and personally controlled health records (Kohane and Altman, 2005; Riva et al., 2001; Simons et al., 2005) may provide the tools to instrument the health care that occurs outside the provider-based healthcare system.
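One simple way to look for such a signal in a clinical data mart is to compare incidence rates of an outcome between exposed and unexposed patients. The sketch below computes an incidence rate ratio with a log-scale Wald confidence interval; it is not the method used by Brownstein et al. (2007), and the counts in the usage example are hypothetical.

```python
import math
from statistics import NormalDist


def rate_ratio(events_exposed, person_years_exposed,
               events_unexposed, person_years_unexposed, level=0.95):
    """Incidence rate ratio with a Wald confidence interval on the log scale.

    Returns (rate ratio, CI lower, CI upper). Requires nonzero event counts.
    """
    rate_exposed = events_exposed / person_years_exposed
    rate_unexposed = events_unexposed / person_years_unexposed
    rr = rate_exposed / rate_unexposed
    # Standard error of log(RR) under a Poisson model for each event count.
    se_log = math.sqrt(1 / events_exposed + 1 / events_unexposed)
    z = NormalDist().inv_cdf(0.5 + level / 2)
    return rr, rr * math.exp(-z * se_log), rr * math.exp(z * se_log)


if __name__ == "__main__":
    # Hypothetical counts: same rates, tenfold more follow-up in the second call.
    print(rate_ratio(30, 10_000, 20, 10_000))
    print(rate_ratio(300, 100_000, 200, 100_000))
```

With 30 versus 20 events per 10,000 person-years the ratio is 1.5 but the interval includes 1; with the same rates over ten times the follow-up, the lower bound rises above 1. Larger instrumented populations are what turn a suspicion into a detectable signal.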

In summary, several specific actions will help to accelerate progress. The first is increased investment in healthcare IT; these tools will not only improve the quality of delivered health care but also increase the quality of secondary uses of electronically captured data. Second, increased transparency in both regulation and patient autonomy is needed to resolve the many (often unjustified) worries about HIPAA that prevent the broader implementation of these systems and approaches. With appropriate education, HIPAA should not present an obstacle to research. Third, we need the continued development of an informatics-savvy healthcare research workforce that understands the relationships among health information, genomics, and biology. Finally, and most important, we need to create a safe harbor for methodological “bake-offs” that challenge researchers to experiment with the analysis of large datasets. For example, the protein-folding community has for nearly a decade sponsored contests that pit various methodologies against one another to see which can best predict, computationally, how a given protein sequence will fold. This type of safe harbor has led to innovation in computational methodologies. Yet such challenges and safe harbors do not exist for equally complex areas of clinical medicine, such as predicting the risk of breast cancer recurrence (e.g., the Oncotype or MammaPrint gene expression tests) or improving natural language processing approaches to patient phenotyping. To have an open and transparent discussion about methodological strengths and weaknesses, data should be made available and these biomarkers and studies tested. However, no such test bed is available for methodologists around the world seeking to improve the state of the art. For the safe and meaningful conduct of biomedical research, particularly in genomics, it is essential that we start testing our data, our methodologies, and our findings.
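A bake-off of the kind proposed here needs a shared held-out dataset and a fixed scoring rule. As a hypothetical sketch, suppose each team submits a risk score per patient for a binary outcome such as breast cancer recurrence; the organizer then scores every submission with the area under the ROC curve (AUC), computed below through its Mann-Whitney interpretation. The team names, data, and choice of AUC as the metric are assumptions for illustration.

```python
def auc(labels, scores):
    """Area under the ROC curve via the Mann-Whitney statistic (ties count 1/2).

    labels: 1 for the outcome (e.g., recurrence), 0 otherwise.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative label")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))


def leaderboard(held_out_labels, submissions):
    """Rank submitted score vectors by AUC on a shared held-out label set.

    submissions: dict mapping team name -> list of scores aligned with labels.
    """
    scored = [(name, auc(held_out_labels, preds)) for name, preds in submissions.items()]
    return sorted(scored, key=lambda item: item[1], reverse=True)
```

The pairwise loop costs time proportional to the number of positive-negative pairs, which is fine for a sketch; a rank-based computation scales better for large test sets. Keeping the labels hidden from participants is what makes the comparison fair.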

PHASED INTRODUCTION AND PAYMENT FOR INTERVENTIONS UNDER PROTOCOL

Wade M. Aubry, M.D.

Senior Advisor

Health Technology Center

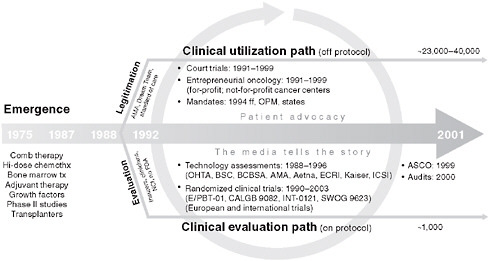

Coverage of health interventions has historically been a binary decision by Medicare and commercial health plans. Over the last two decades, however, the concept of phased introduction and payment for emerging technologies under protocol, or “coverage with evidence development” (CED), has evolved as a flexible or conditional alternative to a complete denial of coverage. An important early example of this approach from the 1990s was the support by commercial payers, such as Blue Cross Blue Shield Plans, of patient care costs in high-priority National Cancer Institute (NCI)-sponsored randomized clinical trials (RCTs) comparing high-dose chemotherapy with autologous bone marrow transplantation (HDC/ABMT) with conventional-dose chemotherapy for the treatment of metastatic breast cancer. Importantly, the Blue Cross Blue Shield Association (BCBSA) facilitated financial support for this investigational treatment contractually, as a “Demonstration Project” operating outside the usual medical necessity provisions of the health plans’ “evidence of coverage” (EOC) documents. Accrual of patients to the RCTs was slow because HDC/ABMT was widely available outside research protocols, which delayed the trials; they would eventually report no benefit from the more toxic high-dose chemotherapy.

Other examples of CED can be found in the Medicare program (IOM, 2000). They include the 1995 interagency agreement between the Health Care Financing Administration (HCFA) (now the Centers for Medicare & Medicaid Services [CMS]) and the FDA allowing coverage of Category B investigational devices (incremental modifications of FDA-approved devices); coverage of lung volume reduction surgery (LVRS) for bullous emphysema under an NIH protocol (1996) (McKenna, 2001); and the Medicare Clinical Trials Policy (CMS, 2000), under which qualifying clinical trials receive Medicare coverage for patient care costs under an approved research protocol. Over the past 4 years, CMS has formalized Medicare CED with a guidance document, a CED policy for implantable cardioverter defibrillators (ICDs) for the prevention of sudden cardiac death, and a Positron Emission Tomography (PET) oncology registry for indications not previously covered by Medicare. In addition, the Medicare federal advisory committee established in 1999 for developing national coverage decisions (NCDs) was renamed the Medicare Evidence Development and Coverage Advisory Committee (MedCAC), emphasizing the importance to CMS of developing better evidence to inform Medicare coverage decisions.