1

Evidence Development for Healthcare Decisions: Improving Timeliness, Reliability, and Efficiency

INTRODUCTION

The rapid growth of medical research and technology development has vastly improved the health of Americans. Nonetheless, a significant knowledge gap affects their care, and it continues to expand: the gap in knowledge about what approaches work best, under what circumstances, and for whom. The dynamic nature of product innovation and the increased emphasis on treatments tailored to the individual—whether tailored for genetics, circumstances, or patient preferences—present significant challenges to our capability to develop clinical effectiveness information that helps health professionals provide the right care at the right time for each individual patient.

Developments in health information technology, study methods, and statistical analysis, and the development of research infrastructure offer opportunities to meet these challenges. Information systems are capturing much larger quantities of data at the point of care; new techniques are being tested and used to analyze these rich datasets and to develop insights on what works for whom; and research networks are being used to streamline clinical trials and conduct studies previously not feasible. An examination of how these innovations might be used to improve understanding of clinical effectiveness of healthcare interventions is central to the Roundtable on Value & Science-Driven Health Care’s aim to help transform how evidence is developed and used to improve health and health care.

EBM AND CLINICAL EFFECTIVENESS RESEARCH

The Roundtable has defined evidence-based medicine (EBM) broadly to mean that, “to the greatest extent possible, the decisions that shape the health and health care of Americans—by patients, providers, payers, and policy makers alike—will be grounded on a reliable evidence base, will account appropriately for individual variation in patient needs, and will support the generation of new insights on clinical effectiveness.” This definition embraces and emphasizes the dynamic nature of the evidence base and the research process, noting not only the importance of ensuring that clinical decisions are based on the best evidence for a given patient, but that the care experience be reliably captured to generate new evidence.

The need to find new approaches to accelerate the development of clinical evidence and to improve its applicability drove discussion at the Roundtable’s workshop on December 12–13, 2007, Redesigning the Clinical Effectiveness Research Paradigm. The issues motivating the meeting’s discussions are noted in Box 1-1, the first of which is the need for a deeper and broader evidence base for improved clinical decision making. But also important are the needs to improve the efficiency and applicability of the process. Underscoring the timeliness of the discussion is recognition of the challenges presented by the expense, time, and limited generalizability of current approaches, as well as of the opportunities presented by innovative research approaches and broader use of electronic health records that make clinical data more accessible. The overall goal of the meeting was to explore these issues, identify potential approaches, and discuss possible strategies

|

BOX 1-1 Issues Motivating the Discussion

|

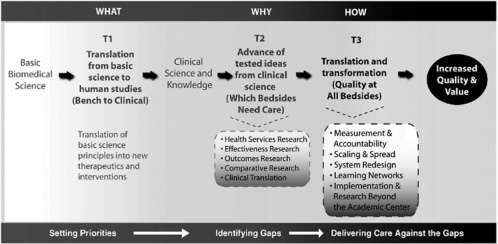

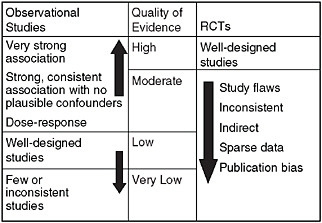

FIGURE 1-1 The classic evidence hierarchy.

SOURCE: DeVoto, E., and B. S. Kramer. 2005. Evidence-Based Approach to Oncology. In Oncology an Evidence-Based Approach. Edited by A. Chang. New York: Springer. Modified and reprinted with permission of Springer SBM.

for their engagement. Key contextual issues covered in the presentations and open workshop discussions are reviewed in this chapter.

Background: Current Research Context

Starting points for the workshop’s discussion reside in the presentation of what has come to be viewed as the traditional clinical research model, depicted as a pyramid in Figure 1-1. In this model, the strongest level of evidence is displayed at the peak of the pyramid: the randomized controlled double blind study. This is often referred to as the “gold standard” of clinical research, and is followed, in a descending sequence of strength or quality, by randomized controlled studies, cohort studies, case control studies, case series, and case reports. The base of the pyramid, the weakest evidence, is reserved for undocumented experience, ideas, and opinions. Noted at the workshop was the fact that, as currently practiced, the randomized controlled and blinded trial is not the gold standard for every circumstance.

The development in recent years of a broad range of clinical research approaches, the identification of problems in generalizing research results to populations broader than those enrolled in tightly controlled trials, and the impressive advances in the potential availability of data through expanded use of electronic health records have all prompted reconsideration of research strategies and opportunities (Kravitz, 2004; Schneeweiss, 2004; Liang, 2005; Lohr, 2007; Rush, 2008).

Table 1-1 provides brief descriptions of the many approaches to clinical effectiveness research discussed during the workshop. These methods can be generally characterized as either experimental or non-experimental. Experimental studies are those in which the choice and assignment of the intervention are under the control of the investigator, and the results of a test intervention are compared to the results of an alternative approach by actively monitoring the respective experience of either individuals or groups receiving the intervention or not. Non-experimental studies are those in which either manipulation or randomization is absent, the choice of an intervention is made in the course of clinical care, and existing data that were collected in the course of the care process are used to draw conclusions about the relative impact of different circumstances or interventions that vary between and among identified groups, or to construct mathematical models that seek to predict the likelihood of events in the future based on variables identified in previous studies. The data used to reach study conclusions can be characterized as primary (generated during the conduct of the study) or secondary (originally generated for other purposes, e.g., administrative or claims data).

While not an exhaustive catalog of methods, Table 1-1 provides a sense of the range of clinical research approaches that can be used to improve understanding of clinical effectiveness. Noted at the workshop was the fact that each method has the potential to advance understanding on different aspects of the many questions that emerge throughout a product or intervention’s lifecycle in clinical practice. The issue is therefore not one of whether internal or external validity should be the overarching priority for research, but rather which approach is most appropriate to the particular need. In each case, careful attention to the design and execution of studies is vital.

Bridging the Research–Practice Divide

A key theme of the meeting was that it is important to draw clinical research closer to practice. Without this capacity, the ability to personalize clinical care will be limited. For example, information on possible heterogeneity of treatment effects in patient populations (due to individual genetics, circumstance, or co-morbidities) is rarely available in a form that is timely, readily accessible, and applicable. To address this issue, the assessment of a healthcare intervention must go beyond determinations of efficacy (whether an intervention can work under ideal circumstances) to an understanding of effectiveness (how an intervention works in practice), which compels grounding of the assessment effort in practice records. To understand effectiveness, feedback is crucial on how well new products and interventions work in broad patient populations, including who those populations are and under what circumstances they are treated.

TABLE 1-1 Selected Examples of Clinical Research Study Designs for Clinical Effectiveness Research

| Approach | Description | Data types | Randomization |
|---|---|---|---|
| Randomized Controlled Trial (RCT) | Experimental design in which patients are randomly allocated to intervention groups (randomized) and analysis estimates the size of difference in predefined outcomes, under ideal treatment conditions, between intervention groups. RCTs are characterized by a focus on efficacy, internal validity, maximal compliance with the assigned regimen, and, typically, complete follow-up. When feasible and appropriate, trials are “double blind” (i.e., patients and trialists are unaware of treatment assignment throughout the study). | Primary, may include secondary | Required |
| Pragmatic Clinical Trial (PCT) | Experimental design that is a subset of RCTs because certain criteria are relaxed with the goal of improving the applicability of results for clinical or coverage decision making by accounting for broader patient populations or conditions of real-world clinical practice. For example, PCTs often have fewer patient inclusion/exclusion criteria, and longer term, patient-centered outcome measures. | Primary, may include secondary | Required |
| Delayed (or Single-Crossover) Design Trial | Experimental design in which a subset of study participants is randomized to receive the intervention at the start of the study and the remaining participants are randomized to receive the intervention after a pre-specified amount of time. By the conclusion of the trial, all participants receive the intervention. This design can be applied to conventional RCTs, cluster randomized designs, and pragmatic designs. | Primary, may include secondary | Required |
| Adaptive Design | Experimental design in which the treatment allocation ratio of an RCT is altered based on collected data. Bayesian or Frequentist analyses are based on the accumulated treatment responses of prior participants and used to inform adaptive designs by assessing the probability or frequency, respectively, with which an event of interest occurs (e.g., positive response to a particular treatment). | Primary, some secondary | Required |
| Cluster Randomized Controlled Trial | Experimental design in which groups (e.g., individuals or patients from entire clinics, schools, or communities), instead of individuals, are randomized to a particular treatment or study arm. This design is useful for a wide array of effectiveness topics but may be required in situations in which individual randomization is not feasible. | Often secondary | Required |
| N of 1 trial | Experimental design in which an individual is repeatedly switched between two regimens. The sequence of treatment periods is typically determined randomly and there is formal assessment of treatment response. These are often done under double blind conditions and are used to determine if a particular regimen is superior for that individual. N of 1 trials of different individuals can be combined to estimate broader effectiveness of the intervention. | Primary | Required |
| Interrupted Time Series | Study design used to determine how a specific event affects outcomes of interest in a study population. This design can be experimental or non-experimental depending on whether the event was planned or not. Outcomes occurring during multiple periods before the event are compared to those occurring during multiple periods following the event. | Primary or secondary | Approach dependent |
| Cohort Registry Study | Non-experimental approach in which data are prospectively collected on individuals and analyzed to identify trends within a population of interest. This approach is useful when randomization is infeasible, for example, if the disease is rare, or when researchers would like to observe the natural history of a disease or real world practice patterns. | Primary | No |
| Ecological Study | Non-experimental design in which the unit of observation is the population or community and that looks for associations between disease occurrence and exposure to known or suspected causes. Disease rates and exposures are measured in each of a series of populations and their relation is examined. | Primary or secondary | No |
| Natural Experiment | Non-experimental design that examines a naturally occurring difference between two or more populations of interest (i.e., instances in which the research design does not affect how patients are treated). Analyses may be retrospective (retrospective data analysis) or conducted on prospectively collected data. This approach is useful when RCTs are infeasible because of ethical concerns or costs, or when the length of a trial would lead to results that are not informative. | Primary or secondary | No |
| Simulation and Modeling | Non-experimental approach that uses existing data to predict the likelihood of outcome events in a specific group of individuals or over a longer time horizon than was observed in prior studies. | Secondary | No |
| Meta Analysis | The combination of data collected in multiple, independent research studies (that meet certain criteria) to determine the overall intervention effect. Meta analyses are useful to provide a quantitative estimate of overall effect size, and to assess the consistency of effect across the separate studies. Because this method relies on previous research, it is only useful if a broad set of studies is available. | Secondary | No |

SOURCE: Adapted, with the assistance of Danielle Whicher of the Center for Medical Technology Policy and Richard Platt from Harvard Pilgrim Healthcare, from a white paper developed by Tunis, S. R., Strategies to Improve Comparative Effectiveness Research Methods and Data Infrastructure, for June 2009 Brookings workshop, Implementing Comparative Effectiveness Research: Priorities, Methods, and Impact.
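As an illustration of the meta analysis entry in Table 1-1, the following is a minimal sketch of how effect estimates from independent studies might be combined and checked for consistency. It assumes a fixed-effect, inverse-variance weighting scheme and Cochran's Q statistic, which are common choices but are not specified in the workshop material; the function names and the study values are hypothetical and chosen only for illustration.

```python
import math


def pooled_effect(estimates, std_errors):
    """Fixed-effect (inverse-variance) pooled estimate and its standard error.

    Assumption: each study reports an effect estimate on a common scale
    together with its standard error.
    """
    if not estimates or len(estimates) != len(std_errors):
        raise ValueError("need one standard error per estimate")
    # Each study is weighted by the inverse of its variance.
    weights = [1.0 / (se ** 2) for se in std_errors]
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


def cochran_q(estimates, std_errors):
    """Cochran's Q: weighted squared deviations of studies from the pooled effect."""
    pooled, _ = pooled_effect(estimates, std_errors)
    return sum(((e - pooled) / se) ** 2 for e, se in zip(estimates, std_errors))


if __name__ == "__main__":
    # Hypothetical effect estimates (e.g., log odds ratios) from three studies.
    effects = [0.20, 0.35, 0.10]
    errors = [0.10, 0.15, 0.20]
    estimate, se = pooled_effect(effects, errors)
    print(f"pooled effect = {estimate:.3f} (SE {se:.3f})")
    print(f"Cochran's Q   = {cochran_q(effects, errors):.3f}")
```

A large Q relative to the number of studies would suggest that the effect is not consistent across studies, in which case a random-effects model may be more appropriate than the fixed-effect sketch shown here.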

Redesigning the Clinical Effectiveness Research Paradigm

Growing opportunities for practice-based clinical research are presented by work to develop information systems and data repositories that enable greater learning from practice. Moreover, there is a need to develop a research approach that can address the questions that arise in the course of practice. As noted in Table 1-1, many research methods can be used to improve understanding of clinical effectiveness, but their use must be carefully tailored to the circumstances. For example, despite the increased external validity offered by observational approaches, the uncertainty inherent in such studies due to bias and confounding often undermines confidence in these approaches. Likewise, the limitations of the randomized controlled trial (RCT) often mute its considerable research value. Those limitations may be a sample size that is too small; a drug dose that is too low to fully assess the drug’s safety; follow-up that is too short to show long-term benefits; underrepresentation or exclusion of vulnerable patient groups, including elderly patients with multiple co-morbidities, children, and young women; conduct of the trial in a highly controlled environment; and/or high cost and time investments. The issue is not one of RCTs versus non-experimental studies but one of which is most appropriate to the particular need.

Retrospective population-level cohorts using administrative data, clinical registries, and longitudinal prospective cohorts have, for example, been valuable in assessing effectiveness and useful in helping payers to make coverage decisions, assessing quality improvement opportunities, and providing more realistic assessments of interventions. Population-based registries, when appropriately funded and constructed with clinician engagement, offer a compromise between the strengths and limitations of, for example, cohort studies, and can assess “real-world” health and economic outcomes to help guide decision making for patient care and policy setting. Furthermore, they are a valuable tool for assessing and driving improvements in the performance of physicians and institutions.

When trials, quasi-experimental studies, and even epidemiologic studies are not possible, researchers may also be able to use simulation methods, if current prototypes prove broadly applicable. Physiology-based models, for example, have the potential to augment knowledge gained from trials and can be used to fill in “gaps” that are difficult or impractical to answer using clinical trial methods. In particular, they will be increasingly useful to provide estimates of key biomarkers and clinical findings. When properly constructed, they replicate the results of the studies used to build them, not only at an outcome level but also at the level of change in biomarkers and clinical findings. Physiology-based modeling has been used to enhance and extend existing clinical trials, to validate RCT results, and to conduct virtual comparative effectiveness trials.

In part, this is a taxonomy and classification challenge. To strengthen these various methods, participants suggested work to define the “state of the art” for their design, conduct, reporting, and validation; improve the quality of data used; and identify strategies to take better advantage of the complementary nature of results obtained. As participants observed, these methods can enhance understanding of an intervention’s value in many dimensions—exploring effects of variation (e.g., practice setting, providers, patients) and extending assessment to long-term outcomes related to benefits, rare events, or safety risks—collectively providing a more comprehensive assessment of the trade-offs between potential risks and benefits for individual patients.

It is also an infrastructure challenge. The efficiency, quality, and reliability of research require infrastructure improvements that allow greater data linkage and collaboration by researchers. Research networks offer a unique opportunity to begin to build an integrated, learning healthcare system. As the research community hones its capacity to collect, store, and study data, enormous untapped capacity for data analysis is emerging. Thus, the mining of large databases has become the focus of considerable interest and enthusiasm in the research community. Researchers can approach such data using clinical epidemiologic methods, potentially using data collected over many years, on millions of patients, to generate insights on real-world intervention use and health outcomes. It was this potential that set the stage for the discussion.

PERSPECTIVES ON CLINICAL EFFECTIVENESS RESEARCH

Keynote addresses opened discussions during the 2-day workshop. Together the addresses and discussions provide a conceptual framework for many of the meeting’s complex themes. IOM President Harvey V. Fineberg provides an insightful briefing on how clinical effectiveness research has evolved over the past 2.5 centuries and offers compelling questions for the workshop to consider. Urging participants to stay focused on better understanding patient needs and to keep the fundamental values of health care in perspective, Fineberg proposes a meta-experimental strategy, advocating for experiments with experiments to better understand their respective utilities, power, and applicability as well as some key elements of a system to support patient care and research. Carolyn M. Clancy, director of the Agency for Healthcare Research and Quality, offers a vision for 21st-century health care in which actionable information is available to clinicians and patients and evidence is continually refined as care is delivered. She provides a thoughtful overview of how emerging methods will expand the research arsenal and can address many key challenges in clinical effectiveness research. Emphasis is also given to the potential gains in quality and effectiveness of care, with greater focus on how to translate research findings into practice.

CLINICAL EFFECTIVENESS RESEARCH: PAST, PRESENT, AND FUTURE

Harvey V. Fineberg, M.D., Ph.D. President, Institute of Medicine

An increasingly important focus of the clinical effectiveness research paradigm is the efficient development of relevant and reliable information on what works best for individual patients. A brief look at the past, present, and future of clinical effectiveness research establishes some informative touchstones on the development and evolution of the current research paradigm, as well as on how new approaches and directions might dramatically improve our ability to generate insights into what works in a clinical context.

Evolution of Clinical Effectiveness Research

Among the milestones in evidence-based medicine, one of the earliest examples of the use of comparison groups in a clinical experiment is laid out in a summary written in 1747 by James Lind detailing what works and what does not work in the treatment of scurvy. With 12 subjects, Lind tried to make a systematic comparison to discern what agents might be helpful to prevent and treat the disease. Through experimentation, he learned that the intervention that seemed to work best to help sailors recover most quickly from scurvy was the consumption of oranges, limes, and other citrus fruits. Many other interventions, including vinegar and sea water, were also tested, but only the citrus fruits demonstrated benefit. What is interesting about that experiment and relevant for our discussions of evidence-based medicine today is that it took the Royal Navy more than a century to adopt a policy to issue citrus to its sailors. When we talk about the delay between new knowledge and its application in practice in clinical medicine, we therefore have ample precedent, going back to the very beginning of systematic comparisons.

Another milestone comes in the middle of the 19th century, with the first systematic use of statistics in medicine. During the Crimean War (1853–1856), Florence Nightingale collected mortality statistics in hospitals and used those data to help discern where the problems were and what might be done to improve performance and outcomes. Nightingale’s tables were the first systematic collection in a clinical setting of extensive data on outcomes in patients that were recorded and then used for the purpose of evaluation.

It was not until the early part of the 20th century that statistics in its modern form began to take hold. The 1920s and 1930s saw the development of statistical methods and accounting for the role of chance in scientific studies. R. A. Fisher (Fisher, 1953) is widely credited as one of the seminal figures in the development of statistical science. His classic work, The Design of Experiments (1935), focused on agricultural comparisons but articulated many of the critical principles in the design of controlled trials that are a hallmark of current clinical trials. It would not be until after World War II that the first clinical trial on a medical intervention would be recorded. A 1948 study by Bradford Hill on the use of streptomycin in the treatment of tuberculosis was the original randomized controlled trial. Interestingly, the contemporary use of penicillin to treat pneumonia was never subjected to similar, rigorous testing, perhaps owing to the therapy’s dramatic benefit to patients.

Over the ensuing decades, trials began to appear in the literature with increased frequency. Along the way they also became codified and almost deified as the standard for care. In 1962, after the thalidomide scandals, efficacy was introduced as a requirement for new drug applications in the Kefauver amendments to the Federal Food, Drug, and Cosmetic Act. But it was not until the early 1970s that a decision of the Sixth Circuit Court certified RCTs as the standard of the Food and Drug Administration (FDA) in its judgments about efficacy. Subsequently, organizations like the Cochrane Collaboration have developed ways of integrating RCT information with data from other types of methods and from multiple sources, codifying and putting these results forward for use in clinical decision making.

The classic evidence hierarchy starts at the bottom with somebody’s opinion (one might call that “eminence-based medicine”) and rises through different methodologies to the pinnacle, randomized controlled double blind trials. If double masked trials were universal, if they were easy, if they were inexpensive, and if their results were applicable to all patient groups, we probably would not have a need for discussion on redesigning the clinical effectiveness paradigm. Unfortunately, despite the huge number of randomized trials being conducted, a number of needs are not being met by the current “randomized trial only” strategy.

Effectiveness Research to Inform Clinical Decision Making

Archie Cochrane, the inspiration for the Cochrane Collaboration, posed three deceptively simple yet critical questions for assessing clinical evidence. First, “Can it work?” Implied in this question is an assumption of ideal conditions. More recently, the Office of Technology Assessment popularized the terms efficacy and effectiveness to distinguish between the effects of an intervention in ideal and real-world conditions, respectively. The question “Can it work?” is a question of an intervention’s efficacy. Cochrane’s second question, “Will it work?,” is one of effectiveness: how and for whom does an intervention work in practice, under usual circumstances, deployed in the field, and utilized under actual clinical care conditions with real patients and providers?

The third of Cochrane’s questions asks, “Is it worth it?” This question can be applied not only to the balance of safety, benefit, and risk to an individual patient, but also to society as a whole, for which the balance of costs and effectiveness also comes into play. This final question introduces important considerations with respect to assessing different approaches and strategies in clinical effectiveness: the purpose of and vantage point from which these questions are being asked. Because of the significant range and number of perspectives involved in healthcare decision making, addressing these issues introduces many additional questions to consider upstream of those posed by Cochrane.

When assessing the vast array of strategies and approaches to evaluation, these prior questions are particularly helpful to put into perspective the roles and contributions of each. Many dimensions of health care deserve to be considered in evaluation, particularly if we start with the idea of prevention and the predictive elements prior to an actual clinical experience. The scope of an evaluation might be at the level of the intervention (e.g., diagnostic, therapeutic, rehabilitative, etc.), the clinician (specialty, training, profession, etc.) or organization of service, institutional performance, and the patient’s role. Thinking critically about what is being evaluated and mapping what is appropriate, effective, and efficient, for the various types of questions, is an ongoing and important challenge in clinical effectiveness research.

Certain clinical questions drive very different design challenges. Evaluation of a diagnostic intervention, for example, involves the consideration of a panoply of factors: the performance of the diagnostic technology in terms of the usual measures of sensitivity and specificity, and the way in which one can reach judgments, make trade-offs, and deal with both false-positive and false-negative results. One also has to think of the whole cascade of events, through clinical intervention and outcomes that may follow. For example, thought should be given to whether results enhance subsequent decisions for therapy, or whether the focus is on patient reassurance or other measurable outcomes, and how these decisions might affect judgments or influence the ultimate outcome of the patient. Considerations such as these are important for evaluating an intervention but are very different from those aimed at assessing system performance (e.g., clinician and health professionals’ performance, organizational approaches to service). These differences apply not only to methodologies and strategies but also to information needs. The kinds of data used to evaluate a targeted, individual, time-specified intervention are not the same as those needed if the goal is to compare various strategies of organization or different classes of providers.
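The measures of diagnostic performance mentioned above can be made concrete with a short calculation. The following is a minimal sketch, assuming test results have already been cross-tabulated against a reference standard; the function name and the counts are hypothetical and serve only to show how false-positive and false-negative results enter each measure.

```python
def diagnostic_summary(true_pos, false_pos, false_neg, true_neg):
    """Return sensitivity, specificity, and predictive values from a 2x2 table.

    Assumption: counts come from comparing a diagnostic test against a
    reference standard in the same group of patients.
    """
    def ratio(numerator, denominator):
        # Return None rather than dividing by zero when a margin is empty.
        return numerator / denominator if denominator else None

    return {
        # Proportion of diseased patients the test correctly flags.
        "sensitivity": ratio(true_pos, true_pos + false_neg),
        # Proportion of disease-free patients the test correctly clears.
        "specificity": ratio(true_neg, true_neg + false_pos),
        # Proportion of positive results that are true positives.
        "positive_predictive_value": ratio(true_pos, true_pos + false_pos),
        # Proportion of negative results that are true negatives.
        "negative_predictive_value": ratio(true_neg, true_neg + false_neg),
    }


if __name__ == "__main__":
    # Hypothetical cohort of 1,000 patients, 100 of whom have the condition.
    summary = diagnostic_summary(true_pos=90, false_pos=45, false_neg=10, true_neg=855)
    for name, value in summary.items():
        print(f"{name}: {value:.3f}")
```

In this hypothetical cohort the predictive values depend on how common the condition is as well as on the test itself, which is one reason the downstream cascade of decisions matters when a diagnostic intervention is evaluated.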

A related set of considerations revolves around this question: For whom, or for what patient group, are we attempting to make an evaluation? The limitations of randomized controlled trials, in terms of external validity, often reduce the relevance of findings for clinical decisions faced by physicians for their patients. For example, the attributes of real-world patients may differ significantly from those of the trial population (e.g., age, sex, other diagnoses, risk factors), with implications for the appropriateness of a given therapy for patients. Early consideration of “for whom” will help to identify those specific methods that can produce needed determinations, or add value by complementing information from clinical trials.

Finally, we must also consider point of view: For any given purpose, from whose point of view are we attempting to carry out this evaluation? For example, is the purpose motivated by one’s interest in a clinical benefit, safety, cost, acceptability, convenience, implementability, or some other factor? Is it representing the patient or the pool of patients, the payers of the services, the clinicians who provide the services, the manufacturers? Does the motivation come from a regulatory need for decisions about permissions or restrictions, and if so, under what circumstances? These perspectives all bear on what kind of method will be suitable for what kind of question in what kind of circumstance. The questions of what it is we are evaluating, for whom, and with whose perspective and purpose in mind are decidedly nontrivial, and in fact they can be important guides as we reflect on the strengths and weaknesses of a variety of approaches and formulate better approaches to clinical effectiveness research.

Several key challenges drive the need for better methods and strategies for developing clinical effectiveness information. First, it is evident to anyone who has spent an hour in a clinic or has attempted to keep up-to-date with current medical practices that the amount of information that may be relevant to a particular patient and the number of potential interventions and technologies can be simply overwhelming. In fact, it is estimated that the number of published clinical trials now exceeds 10,000 per year. A conscientious clinician trying to stay current with RCTs could read three scientific papers a day and at the end of a year would be approximately 2 years behind in reading. This constant flow of information is one side of a central paradox in health care today: Despite the overwhelming abundance of information available, there is an acute shortage of information that is relevant, timely, appropriate, and useful to clinical decision making. This is true for caregivers, payers, regulators, providers, and patients. Reconciling those two seemingly differently vectored phenomena is one of the leading challenges today.

Additional barriers and issues include contending with the dizzying array of new and complex technologies, the high cost of trials as a primary means of information acquisition, as well as a complex matrix of ethical, legal, practical, and economic issues associated with fast-moving developments in genetic information and the rise of personalized medicine. These issues are compounded by the seemingly increased divergence of purpose between manufacturers, regulators, payers, clinicians, and patients. Finally, there is the challenge to improve how knowledge is applied in practice. In part this is related to the culture of medicine, the demands of practice, and information overload, but improvements to the system and incentives will be of critical importance moving forward.

Innovative Strategies and Approaches

Fortunately, researchers are developing many innovative strategies in response to these challenges. Some of these build on, some displace, and some complement the traditional array of strategies in the classic evidence hierarchy. Across the research community, for example, investigators are developing improved data sources—larger and potentially interoperable clinical and administrative databases, clinical registries, and electronic health records and other systems that capture relevant data at the point of care (sometimes called “the point of service”). We are seeing also the evolution of new statistical tools and techniques, such as adaptive designs, simulation and modeling, large database analyses, and data mining. Some of these are adaptations of tools used in other areas; others are truly novel and driven by the peculiar requirements of the human clinical trial. The papers that follow offer insights on adaptive designs, simulation and modeling approaches, and the various analytic approaches that can take adequate account of very large databases.

In particular, discerning meaningfully relevant information in the health context begs for closer attention, and strategies for innovative approaches to data mining are emerging—strategies analogous, if you will, to the way that Internet search engines apply some order to the vast disarray of undifferentiated information spread across the terabyte-laden database of the World Wide Web.

We are also seeing the development of innovative trial and study methodologies that aim to compensate for some of the weaknesses of clinical trials with respect to external validity. Such methods also hold promise for addressing some of the cost-related and logistical challenges in the classic formulation of trials. New approaches to accommodate physiologic and genetic information speak to the emergence of personalized medicine. At the same time, there are emerging networks of research that can amplify and accelerate, in efficiency and time, the gathering and accumulation of relevant information and a variety of models that mix and match these strategies.

Regardless of the approaches taken and the variety of perspectives considered, we must ultimately return to confront the critically central question of clinical care for the individual patient in need, at a moment in time, and determine what works best for this patient. In this regard, we might ideally move toward a system that would combine a point-of-care focus with an electronic record data system that is coupled with systems for assembling evidence in a variety of ways. Such a system would accomplish a quadrafecta, if you will—a four-part achievement of goals important to improving the system as a whole. Component goals would include enabling the evaluation and learning of what works and what does not work for individual patients that includes weighing benefits and costs; providing decision support for the specific patient in front of a clinician—identifying relevant pieces of evidence in the available information, while also contributing to the pool of potentially useable information for future patients; providing meaningful continuing education for health professionals—moving beyond the traditional lecture approach to one that enables learning in place, occurs in real-time, and is practical and applied in the course of clinical care; and finally, collectively providing a foundation for quality improvement systems. If we can achieve this kind of integration—point of service, patient-centered understanding, robust data systems on the clinical side, coupled with relevant analytic elements—we can, potentially, simultaneously, advance on the four critical goals of evaluation, decision support, continuing education, and quality improvement.

As we move in this direction, it is worth considering whether the ultimate goal is to understand what works and what doesn’t work in an “N of 1.” After all, this reflects the ultimate in individualized care. Granted, for those steeped in thinking about probability, Bayesian analysis, and decision theory, this seems a rather extreme notion. But if we consider for a moment that probability is an expression of uncertainty and ignorance, a key consideration becomes: What parts of uncertainty in health care are reducible?

Consider the classic example of the coin toss—flip a coin, the likelihood of it landing heads or tails is approximately 50/50. In such an experiment, what forces are constant and what forces are variable in determining what happens to that coin? Gravity, obviously, is a fairly reliable constant, and the coin’s size, weight, and balance can be standardized. What varies is the exact place where the coin is tossed, the exact height of the toss, the exact force with which the coin is flipped, the exact surface on which it falls, and the force that the impact imparts to the coin. Imagine, therefore, that instead of just taking a coin out of a pocket, flipping it, and letting it fall on the floor, we instead had a vacuum chamber that was precisely a given height, with a perfectly absorptive surface, and that the coin was placed in a special lever in the vacuum chamber with a special device to flip it with exactly the same force in exactly the same location every time it strikes that perfectly balanced coin. Instead of falling 50/50 heads or tails, how the coin falls will be determined almost entirely by how it is placed in the vacuum chamber and tossed by the lever.

If we apply that analogy to individual patients, the expression of uncertainty about what happens to patients and groupings of patients should be resolvable to the individual attribute at the genetic, physiologic, functional, and historical level. If resolution to the level of individual patients is not possible, an appropriate objective might be to understand an intervention’s impact in increasingly refined subgroups of patients. In point of fact, we are already seeing signs of such movement, for example, in the form of different predictive ability for patients who have particular genetic endowments.

As we consider the array of methods and develop strategies for their use in clinical effectiveness research, a guiding notion might be “a meta-experimental strategy” that aids the determination of which new methods and approaches to learning what works, and for what specific purposes; enables the assessment of several strategies, separately and in concert; and develops information on how the various methods and strategies can be deployed successfully. In other words, rather than focusing on how well a particular strategy evaluates a particular kind of problem, in a particular class of patient, from this particular point of view, with these particular endpoints in mind, we might ask what is the array of experimental methods that collectively perform in a manner that enables us to make better decisions for the individual and better decisions for society. What is the experiment of experiments? And how could we structure our future learning opportunity so that as we are learning what works for a particular kind of patient, we are also learning the way in which that strategy of evaluation can be employed to achieve a health system that is driven by evidence and based on value?

THE PATH TO RESEARCH THAT MEETS EVIDENCE NEEDS

Carolyn M. Clancy, M.D.

Agency for Healthcare Research and Quality

All of us share a common passion for developing better evidence so we can improve health care, improve the value of health care, and provide clinicians, patients, and other relevant parties with better information to make health decisions. In that context, this paper explores a central question: How can our approach to clinical effectiveness research take better advantage of emerging tools and study designs to address such challenges as generalizability, heterogeneity of treatment effects, multiple co-morbidities, and translating evidence into practice? This paper focuses on emerging methods in effectiveness research and approaches to turning evidence into action and concludes with some thoughts about health care in the 21st century.

Emerging Methods in Effectiveness Research

Early in the development of evidence-based medicine, discussions of methods were rarely linked to translating evidence into everyday clinical practice. Today, however, that principle is front and center, in part because of the urgency that so many of us feel about bringing evidence more squarely into healthcare delivery. Evidence is a tool for making better decisions, and health care is a process of ongoing decision making. The National Business Group on Health recently issued a survey suggesting that a growing number of consumers—primarily people on the healthier end of the spectrum, but also including some 11 percent of individuals with a chronic illness—say that they turn to sources other than their doctors for information. Some, for example, are even going to YouTube—not yet an outlet for our work, but who knows what the future might bring? If there is a lesson there, it is that evidence that we are developing has to be valid, broadly available, and relevant.

Traditional Hierarchies of Evidence: Randomized Controlled Trials

Traditional hierarchies of evidence have by definition placed the randomized controlled trial (RCT) at the top of the pyramid of evidence, regardless of the skill and precision with which an RCT is conducted. Such hierarchies, however, are inadequate for the variety of today’s decisions in health care and are increasingly being challenged. RCTs obviously remain a very strong tool in our research armamentarium. Yet, we need a much more rigorous look at their appropriate role—as well as more scrutiny of the role of nonrandomized or quasi-experimental evidence. As we talk about the production of better evidence, we must keep the demand side squarely in our sights. Clearly a path to the future lies ultimately in the production of evidence that can be readily embedded in the delivery of care itself—a “Learning Healthcare Organization”—a Holy Grail that is not yet a reality.

The ultimate questions are fairly straightforward for a particular intervention, product, tool, or test: Can it work? Will it work—for this patient, in this setting? Is it worth it? Do the benefits outweigh any harms? Do the benefits justify the costs? Does it offer important advantages over existing alternatives? The last question in particular can be deceptively tricky. In some respects, the discussion is really a discussion of value. Given the increases in health expenditures over the past few years alone, the issue of value cannot be dismissed.

Clinicians know, of course, that clearly what is right for one person does not necessarily work for the next person. The balance of benefits versus harms is influenced by baseline risk, patient preferences, and a variety of other factors. The reality of medicine today is that for many treatment decisions, two or more options are available. What is not so clear is determining with the patient what the right choice is, in a given case, for that particular person. (As an aside, we probably do not give individual patients as much support as we should when they choose to take a path that differs from what we recommend.)

Even the most rigorously designed randomized trial has limitations, as we are all acutely aware. Knowing that an intervention can work is necessary but not sufficient for making a treatment decision for an individual patient or to promote it for a broad population. Additional types of research can clearly shed light on such critical questions as who is most likely to benefit from a treatment and what the important trade-offs are.

Nonrandomized Studies: An Important Complement to RCTs

Nonrandomized studies will never entirely supplant the need for rigorously conducted trials, but they can be a very important complement to RCTs. They can help us examine whether trial results are replicable in community settings; explore sources of differences in safety or effectiveness arising from variation among patients, clinicians, and settings; and produce a more complete picture of the potential benefits and harms of a clinical decision for individual patients or health systems. In short, nonrandomized studies can enrich our understanding of how patient treatments in practice differ from those in trials. A good case in point is a pair of studies published in the 1980s by the Lipid Research Clinics showing that treatment to lower cholesterol can reduce the risk of coronary heart disease in middle-aged men (The Lipid Research Clinics Coronary Primary Prevention Trial Results. I. Reduction in Incidence of Coronary Heart Disease, 1984; The Lipid Research Clinics Coronary Primary Prevention Trial Results. II. The Relationship of Reduction in Incidence of Coronary Heart Disease to Cholesterol Lowering, 1984).

The researchers gave us an invaluable look at middle-aged, mostly white men who had one risk factor for coronary artery disease and were stunningly compliant with very unpleasant medicines, such as cholestyramine. The field had never had such specific data before, and, although the study was informative, patients rarely reflect the study population, at least in my experience, as most come with additional risk factors and infrequently adhere to unpleasant medicines.

My colleague David Atkins recently alluded to an important nuance of RCTs that bears mention here. He observed that “trials often provide the strong strands that create the central structure, but the strength of the completed web relies on a variety of supporting cross strands made up of evidence from a more diverse array of studies” (Atkins, 2007). In other words, if clinical trials are the main strands in a web of evidence, it is important to remember that they are not the entire web.

For example, recent Agency for Healthcare Research and Quality (AHRQ) reports that have relied exclusively on nonrandomized evidence include one on total knee replacement and one on the value of islet cell transplantation. The latter study found that 50–90 percent of those who had the procedure achieved insulin independence, but it raised questions about the duration of effect. Another study on bariatric surgery found the surgery resulted in a 20–30 kilogram weight loss, versus a 2–3 kilogram loss via medicine, but raised questions about safety. Available nonrandomized studies are adequate to demonstrate “it can work,” but may not be able to answer the question, “Is it worth it?”

Nonrandomized studies complement clinical trials in several ways.

Because most trials are fairly expensive, requiring development and implementation of a fairly elaborate infrastructure, their duration is more likely to be short term rather than long term. One finding from the initial Evidence Report on Treatment of Depression—New Pharmacotherapies, which compared older with newer antidepressants, was that at that time the vast majority of studies followed patients for no longer than 3 months (Agency for Healthcare Policy and Research, 2008d). This situation has changed since then thanks to investments made by the National Institute of Mental Health.

Nonrandomized trials also help researchers to pursue the similarities and differences between the trial population and the typical target population, and between trial intervention and typical interventions. Nonrandomized trials enable researchers to examine the heterogeneity of treatment effects in a patient population that in some ways or for some components may not look very much like the trial population. This, in turn, may create a capacity to modify the recommendations as they are implemented. Finally, nonrandomized trials enable researchers to study harm and safety issues in less selective patient populations and subgroups.

One example is the National Emphysema Treatment Trial, the first multicenter clinical trial designed to determine the role, safety, and effectiveness of bilateral lung volume reduction surgery (LVRS) in the treatment of emphysema. An AHRQ technology assessment of LVRS concluded that the data on the risks and benefits of LVRS were too inconclusive to justify unrestricted Medicare reimbursement for the surgery. However, the study also found that some patients benefited from the procedure. This prompted the recommendation that a trial evaluating the effectiveness of the surgery be conducted. The National Emphysema Treatment Trial followed to evaluate the safety and effectiveness of the best available medical treatment alone and in conjunction with LVRS. A number of interesting occurrences transpired within this study. First, people were not randomized to medicine versus surgery—all patients went through a course of maximum medical therapy or state-of-the-art pulmonary rehabilitation, after which they were randomized to continue rehab or to be enrolled in the surgical part of the trial.

With many patients, this was their first experience with very aggressive pulmonary rehab. Because many felt very good after the rehab, at the end of the first course of treatment many patients pulled out of the study. That extended the time it took for study enrollment. Thereafter, some of the study’s basic findings were unexpected. Two or 3 years into the study, a paper in the New England Journal of Medicine effectively identified a high-risk subgroup whose mortality was higher after surgery. The talk of increased mortality further impeded study enrollment rates, which of course further delayed the results. Today, the number of LVRS procedures is very low. The reason for the decline is an open question—perhaps it is a matter of patients’ perspectives or perhaps the trial took so long that one could argue that it was almost anti-innovation. Regardless, the example illustrates both that trials can take a long time and that they may not provide the magic bullet for making specific decisions.

The Challenge of Heterogeneity of Treatment Effects

In terms of the approval of products, clinical trials may not always represent the relevant patient population, setting, intervention, or comparison. Efficacy trials may exaggerate typical benefits, minimize typical harms, or overestimate net benefits. Clearly, external validity becomes a problem or a challenge, and that dilemma has been the subject of many lively debates. As noted by Nicholas Longford, “clinical trials are good experiments but poor surveys.” In a paper a few years ago in the Milbank Quarterly, Richard Kravitz and colleagues suggested that the distribution of specific aspects and treatment effects in any particular trial for approval could result in a very different sense of the expected treatment effect in broader populations (Kravitz et al., 2004).

Discussing the difficulties of applying global evidence to individual patients or groups that might depart from the population average, the paper argues that clinical trials “can be misleading and fail to reveal the potentially complex mixture of substantial benefits for some, little benefit for many, and harm for a few. Heterogeneity of treatment effects reflects patient diversity in risk of disease, responsiveness to treatment, vulnerability to adverse effects, and utility for different outcomes.” By recognizing these factors, the paper suggests, researchers can design studies that better characterize who will benefit from medical treatments, and clinicians and policy makers can make better use of the results.

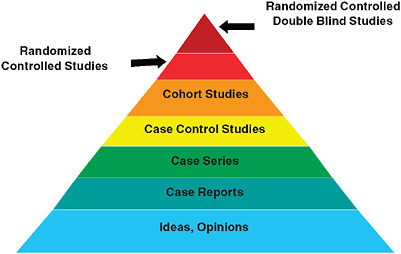

A relevant area of study that has received a great deal of attention in this regard has been the use of carotid artery surgery and endarterectomy. Figure 1-2 shows 30-day mortality in older patients who had undergone an endarterectomy. The vertical values show what happened for Medicare patients in trial hospitals as well as hospitals with high, low, and medium patient volume. The lower horizontal line shows, by contrast, mortality in the Asymptomatic Carotid Artery Surgery (ACAS) trial; the line above it represents mortality in the North American Symptomatic Carotid Endarterectomy Trial (NASCET). Clearly, generalizing the results observed in the trials to the community would have been mistaken in terms of mortality rates.

The limitations of approval trials for individual decision making are well known, such as the previously mentioned LRC trial. In point of fact, a trial may represent neither the specific setting and intervention nor the individual patient. Issues of applicability and external validity really come

FIGURE 1-2 Thirty-day mortality in older patients undergoing endarterectomy (versus trials and by annual volume).

SOURCE: Derived from McGrath et al., 2000.

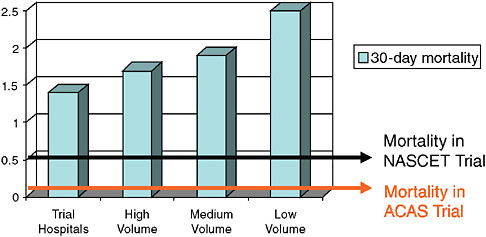

into focus, because essentially what we are reporting from trials is the average effect, and for an individual that may or may not be specifically relevant. In addition, of course, the heterogeneity of treatment effects seen in a trial becomes very important. One can come to very different conclusions depending on the distribution of the net treatment benefit and how narrow that distribution is (see Figure 1-3, for example), and yet we don’t often know that with the first trial that has been done for approval.

An ongoing challenge and debate is the extent to which we can count on subgroup analysis to gain a more complete picture and information that is more relevant to a heterogeneous treatment population. In terms of such analyses, we need to be cautious in individual trials. Subgroup analyses are not reported regularly enough for individual patient metaanalyses. Moreover, we need to look beyond RCTs to inform judgments about the applicability and heterogeneity of treatment effects. Several years ago, AHRQ was asked to produce a systematic review of what was known about the effectiveness of diagnosis and treatment for women with coronary artery disease. A critical body of evidence existed, because it was a requirement of federal trials that women and minorities be enrolled in all clinical studies. We found, however, that it was extremely difficult to combine results across those studies, which underscores how difficult it is to combine data across trials as a basis for meta-analysis or any other quantitative technique.

FIGURE 1-3 Treatment benefit distribution of different sample population subgroups for a clinical trial.

NOTE: Reprinted, with permission, from John Wiley & Sons, Inc., 2008.

SOURCE: Kravitz et al., 2004.
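The point that the same average effect can support very different conclusions, depending on how widely net treatment benefit is spread, can be illustrated with a minimal sketch. It assumes, purely for illustration, that net benefit across patients follows a normal distribution; the function name `share_harmed`, the means, the spreads, and the units are all hypothetical and are not taken from Kravitz et al. or any trial.

```python
from statistics import NormalDist


def share_harmed(mean_benefit: float, spread: float) -> float:
    """Fraction of patients whose net benefit falls below zero.

    Assumes (hypothetically) that net benefit is normally distributed
    with the given mean and standard deviation.
    """
    return NormalDist(mu=mean_benefit, sigma=spread).cdf(0.0)


# Two hypothetical trials reporting the same average net benefit
# (arbitrary units) but with different patient-to-patient spread.
narrow = share_harmed(mean_benefit=1.0, spread=0.5)
wide = share_harmed(mean_benefit=1.0, spread=2.0)

print(f"narrow distribution: {narrow:.1%} of patients with net harm")
print(f"wide distribution:   {wide:.1%} of patients with net harm")
```

Under these assumed numbers the two trials share one average effect, yet roughly 2 percent of patients experience net harm in the narrow case and roughly 31 percent in the wide case. A real distribution of benefit need not be normal, so the sketch shows only the direction of the argument, not a method for estimating harm.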

Practical Clinical Trials: Embedding Research into Care Delivery

Another interesting set of considerations revolves around the differences between practical (or pragmatic) clinical trials (PCTs) versus explanatory clinical trials and how PCTs might move the field closer to the notion of embedding research into care delivery and contending directly with issues confronting clinicians. In PCTs, hypotheses and study design are formulated based on information needed to make a clinical decision; explanatory trials are designed to better understand how and why an intervention works. Also, while practical trials address risks, benefits, and costs of an intervention as they would occur in routine clinical practice, explanatory trials maximize the chance that the biological effect of a new treatment will be revealed by the study (Tunis et al., 2003).

Another example comes from a systematic review of treatments for allergic rhinitis and sinusitis. For patients with either diagnosis there is a considerable body of information. The real challenge, though, is that in primary care settings most patients are not really interested in the kinds of procedures that one needs to make a definitive diagnosis. Thus, clinicians and patients typically do not have a lot of practical information to work with, even with data from the systematic study reviews. Practical clinical trials would address risks, benefits, and costs of interventions as they occur in routine clinical practice rather than trying to determine whether, for example, a particular mechanism actually provides a definitive diagnosis. Comparisons of practical versus explanatory trials date back to the 1960s, perhaps not surprisingly, in the context of the value of various types of psychotherapy. Since then, however, the literature seems to have gone silent on such issues. It would be a useful activity to revisit these questions and actually develop an inventory of what we know about practical clinical trials and how difficult they can be.

Different study designs contribute different effects. Looking at the variety of study designs, we can move down a continuum from efficacy trials, the least biased estimate of effect under ideal conditions, where we have maximum internal validity; to effectiveness trials, which provide a more representative estimate of the benefits and harms in the real world and presumably have increased external validity; to systematic review of trials that have used the same endpoints, outcomes meta-analysis, or other quantitative techniques. The latter types of studies provide the best estimate of overall effect, investigate questions of heterogeneity of treatment effect, and explore uncommon outcomes.

Collaborative Registries

We can also use cohort studies registries, which use risk prediction to target treatment and are effective in reaching underrepresented populations, and case control studies, which can be particularly helpful in detecting relatively rare harms or adverse events that were not known or not expected. There is a substantial, growing interest among a number of physician specialties in creating registries. Registries provide a way to explore longer term outcomes, adverse effects, and practice variations, and they provide an avenue for the investigation of the heterogeneity of a treatment effect. The Society of Thoracic Surgeons is probably the best (and most familiar) model, but other surgical societies also are currently developing registries of their own. It will be a very interesting challenge to determine how, when, and where registries fit into the overall context of study designs, and how information from registries fits within the broader web of evidence-based medicine. AHRQ is using patient registry data as one approach to turning evidence into action, which is discussed in the next section.

Indeed, in the world of evidence-based medicine, two of the key challenges are the translation of evidence into everyday clinical practice, and how to manage situations when existing evidence is insufficient. Virtually every day, AHRQ receives telephone calls that focus on the fact that lack of evidence of effect is not the same thing as saying that a treatment is ineffective. Similarly, what is equivalent for the group is not necessarily equivalent for the individual. Measuring and weighing outcomes such as quality of life and convenience is obviously not a feature of most standardized clinical trials. Moreover, in considerations of such issues, we need to keep in mind the downstream effects of policy applications, such as diffusion of technology, effects on innovation, and unintended consequences. For example, one of the reasons that RCTs are such a poor fit for evaluating devices or any evolving technology is that the devices change all the time. One of the challenges that AHRQ hears regularly is that we invested all of this money and it took years to complete this trial, and we answered a question that is no longer relevant.

Observational Studies

This leads to the question of whether observational studies can reduce the need for randomized trials. Clearly observational studies are a preferred alternative when clinical trials are impractical, not possible, or unethical (we seem to debate that question a lot even though we are not particularly clear about what it means). Last year, a paper defended the value of observational studies. The authors asserted that observational studies can be a very useful tool when one examines something with a stable or predictable background course that is associated with a large, consistent, temporal effect, with rate ratios over 10, and where one can demonstrate dose response, specific effects, and biological plausibility (Glasziou et al., 2007). Observational studies are also of value when the potential for bias is low.
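One of the criteria above, a rate ratio over 10, is a simple calculation, sketched here under stated assumptions. The function names `rate_ratio` and `exceeds_threshold` and all event counts and person-years are hypothetical illustrations; they are not drawn from Glasziou et al. The other criteria named in the text (dose response, specificity, biological plausibility, a stable background course) require judgment and are not captured by this arithmetic.

```python
def rate_ratio(events_a: int, person_years_a: float,
               events_b: int, person_years_b: float) -> float:
    """Ratio of the event rate in group A to the event rate in group B."""
    rate_a = events_a / person_years_a
    rate_b = events_b / person_years_b
    return rate_a / rate_b


def exceeds_threshold(ratio: float, threshold: float = 10.0) -> bool:
    """True when the rate ratio is above the threshold named in the text."""
    return ratio > threshold


# Hypothetical cohort: 60 events in 1,000 person-years without the
# intervention versus 5 events in 1,000 person-years with it.
rr = rate_ratio(60, 1000.0, 5, 1000.0)
print(f"rate ratio = {rr:.1f}, over 10: {exceeds_threshold(rr)}")
```

With these assumed counts the rate ratio is about 12, which would clear the numeric threshold; a real assessment would also need confidence intervals and a check of the remaining criteria.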

The advantages of observational studies are worth considering. First, most clinical trials are not sufficiently powered to detect adverse drug effects. AHRQ felt the effect of this design weakness when a report it sponsored on the benefits and harms of a variety of antidepressants was published. The report concluded that there was insufficient useful information to say anything definitive about the comparative risk profiles of newer and older antidepressants. The fact that the report had nothing to say about side effects drew a flood of protests from passionate constituents. Among other drawbacks, clinical trials clearly are limited by poor external validity in many situations; they may not be generalizable to many subgroups that are of great interest to clinicians. On the other hand, longer term follow-up, not the rule for clinical trials, can be a strong advantage of observational studies. Moreover, observational studies clearly facilitate risk management and risk minimization and may indeed facilitate translation of evidence into practice. There are those who argue that all clinical trials should have an observational component, and in fact the field is starting to see some of that in trials. Comparing surgery to medical treatments for low back pain, for example, the Spine Patient Outcomes Research Trial (SPORT) randomized people if their preferences were neutral. Both patients and clinicians had to be neutral about the value of medicine versus surgery, and those people who were not neutral were followed in a registry.
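The observation that most trials are too small to detect adverse drug effects can be made concrete with a rough calculation. The sketch below is a simplification, not a formal power analysis: it assumes each patient independently experiences the adverse event with the same probability and asks only how likely a study is to observe at least one event. The event rate of 1 in 1,000, the sample sizes, and the function names are hypothetical.

```python
import math


def prob_at_least_one(event_rate: float, n_patients: int) -> float:
    """Chance of seeing one or more events among n independent patients."""
    return 1.0 - (1.0 - event_rate) ** n_patients


def patients_needed(event_rate: float, target_prob: float = 0.95) -> int:
    """Smallest n giving at least target_prob of observing one event."""
    return math.ceil(math.log(1.0 - target_prob) / math.log(1.0 - event_rate))


rate = 1 / 1000  # hypothetical adverse event rate
for n in (500, 3000):
    print(f"n = {n}: P(at least one event) = {prob_at_least_one(rate, n):.2f}")
print(f"patients needed for a 95% chance: {patients_needed(rate)}")
```

Under these assumptions a 500-patient study has well under an even chance of seeing a single event, while a cohort of about 3,000 is needed to be reasonably sure of seeing one. Detecting a difference in such events between two treatments would require considerably more patients, which is one reason large registries and databases are attractive for safety questions.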

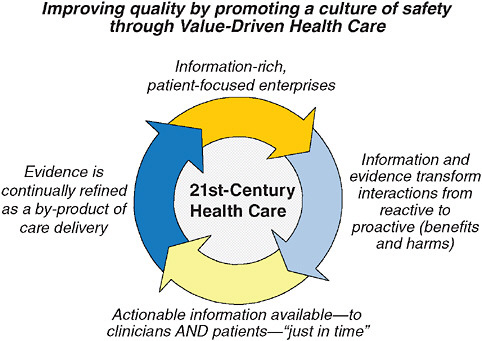

FIGURE 1-4 Evidence levels—Grades of Recommendation, Assessment, Development and Evaluation (GRADE).

Grading Evidence and Recommendations

A notable and exciting development is the GRADE (Grades of Recommendation, Assessment, Development and Evaluation) collaborative, whose goal is to promote a more consistent and transparent approach to grading evidence and recommendations.1 This approach considers that how well a study is done is at least as important as the type of study it is. GRADE evidence levels, as summarized in Figure 1-4, suggest that randomized trials that are flawed in their execution should not be at the top of the pyramid in any hierarchy of evidence. Similarly, observational studies that meet the criteria shown in the figure (and perhaps others as well), and which are done very well, might in some instances be considered better evidence than a randomized trial, if a randomized trial is poorly done. These standards are being adopted by the American College of Physicians, American College of Chest Physicians, National Institute for Clinical Excellence, and World Health Organization, among others.

All of us imagine a near-term future where there is going to be much greater access to high-quality data. However, in order to take full advantage of that, we need to continue to advance work in improving methodological research. Why is this necessary? We need more comprehensive data to guide Medicare coverage decisions and to understand the wider range of outcomes. We need to address the gap when data from results of well-designed RCTs are either not available or incomplete. Finally, there are significant quality, eligibility, and cost implications of coverage decisions (e.g., consider implantable cardioverter defibrillators).

To help advance the agenda for improving methodology, a series of 23 articles on emerging methods in comparative effectiveness and safety was published in October 2007 in a special supplement to the journal Medical Care. These papers are a valuable new resource for scientists who are committed to advancing comparative effectiveness and safety research, and this is an area in which AHRQ intends to continue to push.2

Approaches to Turning Evidence Into Action

The Agency for Healthcare Research and Quality has several programs directed at turning evidence into action. AHRQ’s program on comparative effectiveness was authorized by Congress as part of the Medicare Modernization Act and funded through an appropriation starting in 2005. This Effective Health Care Program (EHCP) is essentially trying to produce evidence for a variety of audiences, based on unbiased information, so that people can make head-to-head comparisons as they endeavor to understand which interventions add value, which offer minimal benefit above current choices, which fail to reach their potential, and which work for some patients but not for others. The overarching goal is to develop and disseminate better evidence about benefits and risks of alternative treatments, which is also important for policy discussions. The statute is silent on cost effectiveness, although it does say that the Medicare program may not use the information to deny coverage. Less clear is whether prescription drug plans can use EHCP information in such a way; again, the statute is silent.

The AHRQ EHCP has three core components. One is synthesizing existing evidence through Evidence-Based Practice Centers (EPCs), which AHRQ has supported since 1997. The purpose is to systematically review, synthesize, and compare existing evidence on treatment effectiveness, and to identify relevant knowledge gaps. (Anyone who has ever conducted a systematic or even casual review knows that if you are searching through a pile of studies, inevitably you will have unanswered questions—questions that are related to but not quite the main focus of the particular search that you are doing.)

The second component is to generate evidence (to develop new scientific knowledge to address knowledge gaps) and to accelerate practical studies. To address critical unanswered questions or to close particular research gaps, AHRQ relies on the DEcIDE (Developing Evidence to Inform Decisions about Effectiveness) network, a group of research partners who work under task-order contracts and who have access to large electronic clinical databases of patient information. The Centers for Education & Research on Therapeutics (CERTs) is a peer-reviewed program that conducts state-of-the-art research to increase awareness of new uses of drugs, biological products, and devices; to improve the effective use of drugs, biological products, and devices; to identify risks of new uses; and to identify risks of combinations of drugs and biological products.

2 All of the articles are available for free download at the website www.effectivehealthcare.ahrq.gov/reports/med-care-report.cfm or can be ordered as Pub. No. OM07-0085 from AHRQ’s clearinghouse.

Finally, AHRQ also works to advance the communication of evidence and its translation into care improvements. Many researchers will recall that our colleague John Eisenberg always talked about telling the story of health services research. Named in his honor, the John M. Eisenberg Clinical Decisions and Communications Science Center, based at Oregon Health & Science University, is devoted to developing tools to help consumers, clinicians, and policy makers make decisions about health care. The Eisenberg Center translates knowledge about effective health care into summaries that use plain, easy-to-understand, and actionable language, which can be used to assess treatments, medications, and technologies. These summary guides are designed to help people use scientific information to maximize the benefits of health care, minimize harm, and optimize the use of healthcare resources. Center activities also focus on decision support and other approaches to getting information to the point of care for clinicians, as well as on making information relevant and useful to patients and consumers.

The Eisenberg Center is developing two new translational guides, the Guide to Comparative Effectiveness Reviews and Effectiveness and Off-Label Use of Recombinant Factor VIIa. In April 2007, AHRQ also published Registries for Evaluating Patient Outcomes: A User’s Guide, co-funded by AHRQ and the Centers for Medicare & Medicaid Services (CMS), the first government-supported handbook for establishing, managing, and analyzing patient registries. This resource is designed so that patient registry data can be used to evaluate the real-life impact of healthcare treatments and can truly be considered a milestone in growing efforts to better understand what treatments actually work best and for whom (Agency for Healthcare Research and Quality, 2008c).

Clearly, there are a variety of problems that no healthcare system is large enough or has sufficient data to address on its own. Many researchers envision creation of a common research infrastructure, a federated network prototype that would support the secure analyses of electronic information across multiple organizations to study risks, effects, and outcomes of various medical therapies. This would not be a centralized database; data would stay with individual organizations. However, through the use of common research definitions and terms, the collaborative would create a large network that would expand capabilities far beyond the capacity of any one individual system.
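The federated idea described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions: the `Record` fields, the drug codes, and the function names are hypothetical and are not drawn from any actual network. Each organization runs the same query against its own records and returns only aggregate counts, which a coordinator then combines.

```python
from dataclasses import dataclass

# Hypothetical common data definition shared by all sites (an assumption,
# not a real standard): every site uses the same field names and codes.
@dataclass(frozen=True)
class Record:
    patient_id: str
    drug_code: str        # shared vocabulary, e.g. "ACE_INHIBITOR"
    adverse_event: bool

def local_summary(records, drug_code):
    """Runs inside one organization and returns aggregate counts only."""
    exposed = [r for r in records if r.drug_code == drug_code]
    return {"exposed": len(exposed),
            "events": sum(r.adverse_event for r in exposed)}

def federated_event_rate(site_datasets, drug_code):
    """Coordinator combines per-site aggregates; patient rows are never pooled."""
    summaries = [local_summary(recs, drug_code) for recs in site_datasets.values()]
    exposed = sum(s["exposed"] for s in summaries)
    events = sum(s["events"] for s in summaries)
    return events / exposed if exposed else None

# Made-up example data for two sites.
sites = {
    "site_a": [Record("a1", "ACE_INHIBITOR", False),
               Record("a2", "ACE_INHIBITOR", True)],
    "site_b": [Record("b1", "ACE_INHIBITOR", False),
               Record("b2", "DIURETIC", True)],
}
rate = federated_event_rate(sites, "ACE_INHIBITOR")
```

In this sketch the shared `Record` definition plays the role of the common research definitions and terms: the query is meaningful across sites only because every site codes its data the same way.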

The long-term goal is a coordinated partnership of multiple research networks that provide information that can be quickly queried and analyzed for conducting comparative effectiveness research. There are enormous opportunities here, but to come to fruition the effort will take considerable difficult work upfront. In that regard, AHRQ has funded contracts to support two important models of distributed research networks. One model being evaluated leverages partnerships of a practice-based research network to study utilization and outcomes of diabetes treatment in ambulatory care. This project is led by investigators from the University of Colorado DEcIDE center and the American Academy of Family Physicians to develop the Distributed Ambulatory Research in Therapeutics Network (DARTNet), using electronic health record data from 8 organizations representing more than 200 clinicians and over 350,000 patients (Agency for Healthcare Research and Quality, 2008a). The second model is established within a consortium of managed care organizations to study therapies for hypertension. This project is led by the HMO Research Network (HMORN) and the University of Pennsylvania DEcIDE centers (Agency for Healthcare Research and Quality, 2008a). It will develop a “Virtual Data Warehouse” to assess the effectiveness and safety of different anti-hypertensive medications used by 5.5 to 6 million individuals cared for by six health plans.

Both projects will be conducted in four phases over a period of approximately 18 months, with quarterly reports posted on AHRQ’s website. These reports will describe the design specifications for each network prototype; the evaluation of the prototype; research findings from the hypertension and diabetes studies; and the major features of each prototype in the format of a prospectus or blueprint so that the model may be replicated and publicly evaluated.

In addition to the AHRQ efforts, others are also supporting activities in this arena. Under the leadership of Mark McClellan, the Quality Alliance Steering Committee at the Engelberg Center for Health Care Reform at the Brookings Institution is engaged in work to effectively aggregate data across multiple health insurance plans for the purposes of reporting on physician performance. In effect, each plan will produce information on a particular physician, and a weighted average of those results will be computed and combined with the same information derived from Medicare data. The strategy is that data would stay with individual plans, but would be accessed using a common algorithm. As recent efforts to aggregate data for the purposes of quality measurement across plans have found, this is truly difficult but important work.
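As a rough illustration of the aggregation just described, the sketch below combines per-plan results for one physician into a single weighted average. The weighting by number of eligible patients, the function names, and the numbers are all assumptions made for the example; the text does not specify how the actual weights are chosen.

```python
def plan_summary(numerator, denominator):
    """Computed inside each plan: share of eligible patients meeting a measure."""
    return {"rate": numerator / denominator, "n": denominator}

def combined_rate(summaries):
    """Weighted average of per-source rates, weighted by eligible patients."""
    total_n = sum(s["n"] for s in summaries)
    return sum(s["rate"] * s["n"] for s in summaries) / total_n

# Made-up results for one physician from two plans and from Medicare data.
plan_a = plan_summary(40, 50)
plan_b = plan_summary(15, 30)
medicare = plan_summary(18, 20)
overall = combined_rate([plan_a, plan_b, medicare])
```

Only the summary from each source leaves that source, which mirrors the strategy that data stay with individual plans while a common algorithm is applied to each.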

Among other efforts, the nonprofit eHealth Initiative Foundation has started a research program designed to improve drug safety for patients. The eHI Connecting Communities for Drug Safety Collaboration is a public- and private-sector effort designed to test new approaches and to develop replicable tools for assessing both the risks and the benefits of new drug treatments through the use of health information technology. Results will be placed in the public domain to accelerate the timeliness and effectiveness of drug safety efforts. Another important ongoing effort is the Food and Drug Administration’s work to link private- and public-sector postmarket safety efforts to create a virtual, integrated, electronic “Sentinel Network.” Such a network would integrate existing and planned efforts to collect, analyze, and disseminate medical product safety information to healthcare practitioners and patients at the point of care. These efforts underscore the commitment by many in the research community to creating better data and linking those data with better methods to translate them into more effective health care.

Health Care in the 21st Century

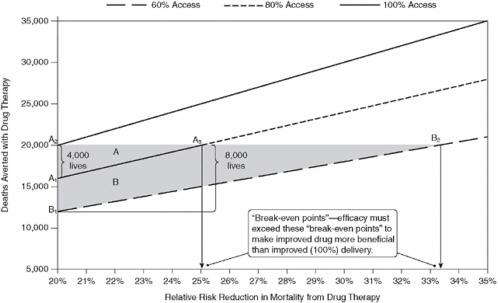

We must make sure that we do not lose sight of the importance of translating evidence into practice. For all of our excitement about current and anticipated breakthroughs leading to a world of personalized health care in the next decade, the larger gain in terms of saving lives and reducing morbidity is likely to come from more effective translation. Steven Woolf and colleagues published interesting observations on this topic in 2005 (Figure 1-5) (Woolf and Johnson, 2005). They showed that if 100,000 patients are destined to die from a disease, a drug that reduces death rates by 20 percent will save 16,000 lives if delivered to 80 percent of the patients; increase the drug delivery to 100 percent of patients and you save an additional 4,000 lives. To match that gain through improved efficacy alone, with delivery still at 80 percent, you would need a drug that is 25 percent more efficacious (a 25 percent rather than a 20 percent reduction in death rates). Thus, in the next decade, translation of the scientific evidence we already have is likely to have a much bigger impact on health outcomes than breakthroughs coming on the horizon.
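The break-even arithmetic in this example can be checked with a short Python sketch. The function names are illustrative only, and the calculation assumes, as the example does, that lives saved equal the number of patients at risk multiplied by the drug's efficacy and by the fraction of patients who receive it.

```python
def lives_saved(at_risk, efficacy, delivery):
    """Lives saved = patients at risk x mortality reduction x fraction treated."""
    return at_risk * efficacy * delivery

def break_even_efficacy(efficacy, current_delivery, target_delivery=1.0):
    """Efficacy needed at current delivery to match the existing drug at target delivery."""
    return efficacy * target_delivery / current_delivery

at_risk = 100_000
at_80_percent = lives_saved(at_risk, 0.20, 0.80)    # about 16,000
at_100_percent = lives_saved(at_risk, 0.20, 1.00)   # about 20,000
additional = at_100_percent - at_80_percent         # about 4,000

needed = break_even_efficacy(0.20, 0.80)            # about 0.25
relative_improvement = needed / 0.20 - 1            # about 0.25, i.e., 25 percent
```

Under these assumptions, raising delivery from 80 to 100 percent is equivalent to raising efficacy from a 20 to a 25 percent mortality reduction, which is the 25 percent relative improvement cited above.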

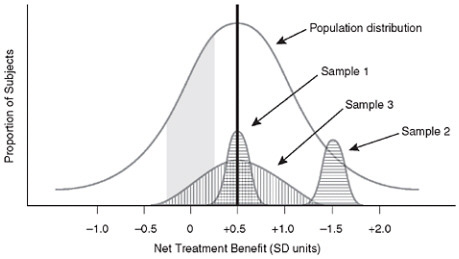

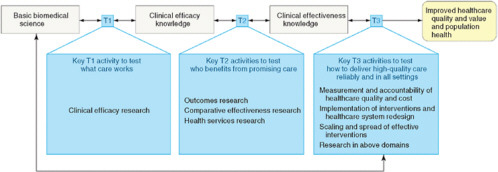

The clinical research enterprise has talked a lot about phase 1 and 2 translation research (T1 and T2). Yet, we need to think about T3: the “how” of high-quality care. We need to transcend thinking about translation as an example of efficacy and think instead about translation as encompassing measurements and accountability, system redesign, scaling and spread, learning networks, and implementation and research beyond the academic center (Dougherty and Conway, 2008). Figure 1-6 outlines the three translational steps that form the 3T’s road map for transforming the healthcare system. Figure 1-7 suggests a progression for the evolution of translational research.

FIGURE 1-5 Potential lives saved through quality improvement—The “break-even point” for a drug that reduces mortality by 20 percent.

SOURCE: Woolf, S. H., and R. E. Johnson. 2005. The break-even point: When medical advances are less important than improving the fidelity with which they are delivered. Annals of Family Medicine 3(6):545-552. Reprinted with permission from American Academy of Family Physicians, Copyright © 2005.

FIGURE 1-6 The 3T’s roadmap.

NOTE: T indicates translation. T1, T2, and T3 represent the three major translational steps in the proposed framework to transform the healthcare system. The activities in each translational step test the discoveries of prior research activities in progressively broader settings to advance discoveries originating in basic science research through clinical research and eventually to widespread implementation through transformation of healthcare delivery. Double-headed arrows represent the essential need for feedback loops between and across the parts of the transformation framework.

SOURCE: Journal of the American Medical Association 299(19):2319-2321. Copyright © 2008 American Medical Association. All rights reserved.

FIGURE 1-8 Model for 21st-century health care.

This area is clearly still under development and in need of more focused attention from researchers.

In closing, we can no doubt all agree that the kind of healthcare system we would want to provide our own care would be information rich but patient focused, in which information and evidence transform interactions from the reactive to the proactive (benefits and harms). Figure 1-8 summarizes a vision for 21st-century health care. In this ideal system, actionable information would be available to clinicians and patients “just in time,” and evidence would be continually refined as a byproduct of healthcare delivery. The goal is not producing better evidence for its own sake, although the challenges and debates about how to do that are sufficiently invigorating on their own that we can almost forget what the real goals are. Achieving an information-rich, patient-focused system is the challenge that is at the core of our work together in the Value & Science-Driven Health Care Roundtable. Where we are ultimately headed, of course, is to establish the notion, discussed widely over the past several years, of a learning healthcare system. This is a system in which evidence is generated as a byproduct of providing care and actually fed back to those who are providing care, so that we become more skilled and smarter over time.

REFERENCES

AcademyHealth. 2008. Health Services Research (HSR) Methods [cited June 15, 2008]. http://www.hsrmethods.org/ (accessed June 21, 2010).

Agency for Healthcare Research and Quality. 2008a. Developing a Distributed Research Network to Conduct Population-based Studies and Safety Surveillance 2009 [cited June 15, 2008]. http://effectivehealthcare.ahrq.gov/index.cfm/search-for-guides-reviews-and-reports/?pageaction=displayproduct&productID=150 (accessed June 21, 2010).

———. 2008b. Distributed Network for Ambulatory Research in Therapeutics [cited June 15, 2008]. http://effectivehealthcare.ahrq.gov/index.cfm/search-for-guides-reviews-and-reports/?pageaction=displayproduct&productID=317 (accessed June 21, 2010).