7

Assembling Evidence and Informing Decisions

KEY MESSAGES

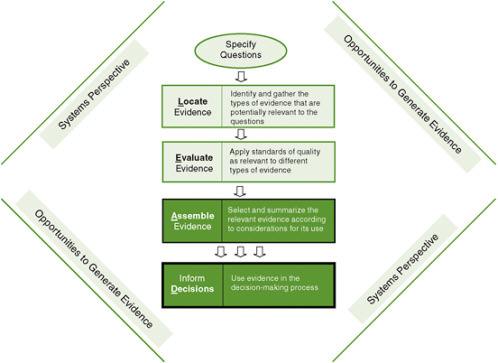

Once the best available evidence has been located (Chapter 5) and evaluated (Chapter 6), it should be assembled and communicated so that it can be used to inform decisions (see Figure 7-1). The goal of such evidence reports is to present past experience in useful, relevant, and readily understood terms to help decision makers choose which programs or policies to implement (i.e., a single intervention or a combination of interventions). Within their own disciplines, researchers, program evaluators, public health officials, and policy makers tend to communicate in a highly specialized shorthand that is useful within each discipline. Reporting evidence in accordance with the L.E.A.D. framework will create a shared understanding of the state of relevant knowledge that will support cross-disciplinary decision making. It is also important to recognize that decision makers grappling with an emergent problem such as obesity may need to decide on a course of action in the relative absence of evidence or on the basis of inconclusive, inconsistent, or incomplete evidence. In some cases, the conclusions that can be drawn from the existing evidence may not appear to fit the population, setting, or circumstances at hand. In this context, transparent and structured processes for assembling and reporting evidence allow for reasoned discussion and for modification as new information comes to light. Finally, once a course of action has been chosen, the decision itself and the knowledge gained in the process need to be incorporated into the context of the organization or system where the decision was made. Such “knowledge integration” is the desired final outcome of the application of the L.E.A.D. framework.

FIGURE 7-1 The Locate Evidence, Evaluate Evidence, Assemble Evidence, Inform Decisions (L.E.A.D.) framework for obesity prevention decision making.

NOTE: The elements of the framework addressed in this chapter are highlighted.

The committee suggests that, to inform decisions using the framework proposed in this volume, the conclusions reported to decision makers be formulated to respond to the three questions shown in Figure 5-2 in Chapter 5: (1) Why should we do something about this problem in our situation? (2) What specifically should we do about this problem? and (3) How do we implement this information for our situation? Translating these questions into the language of evidence-based decision making results in three topics that are addressed in this chapter:

- evidence concerning the characteristics of the problem to be addressed in the population targeted by an intervention (“Why” questions);
- evidence supporting the theoretical underpinnings of each potential intervention, presented as a logic model, and evidence supporting the impact of the intervention (“What” questions); and
- considerations involved in implementation, with an appropriate balance of fidelity and adaptation to achieve optimum results (“How” questions).

While each of these topics has been considered in previous chapters, two major sections form the balance of this chapter: (1) specific guidance for assembling the evidence and other information (theory, professional experience, and local wisdom) to support decision making and (2) a standard template for summarizing the evidence, using the L.E.A.D. framework, to provide information relevant to the needs of decision makers. The third section briefly addresses knowledge integration.

GUIDANCE FOR ASSEMBLING THE EVIDENCE

Why Should We Do Something About This Problem in Our Situation?

An evidence report should begin with a succinct summary of the problem to be addressed in the decision-making context. In the case of local decision making, for example, this problem statement may be developed most readily by conducting a community assessment, as discussed in the Institute of Medicine (IOM) report Local Government Actions to Prevent Childhood Obesity (IOM, 2009). Community assessments begin with a detailed description of the local conditions to be addressed by an intervention. Local academic partners, public health departments, academic settings, or health information exchanges may be able to provide access to available data and necessary epidemiological or statistical support. Community assessments can also examine whether various segments of the community have access to an environment that supports healthy weight, including healthful food and opportunities for physical activity.

The systems thinking discussed in Chapter 4 is important in addressing “why” something should be done about a problem in a particular context. Changes in zoning codes or in agricultural policies, for example, have far-reaching consequences that are best understood by thinking of the larger system implications, often using complex systems models. The information gathered to inform decision makers in those cases would necessarily include both empirical data and models that identify intended effects, dependencies, and potential adverse effects. For these reasons, answers to “Why” questions may require a critique of the model used or of the underlying context.

What Specifically Should We Do About This Problem?

Estimates of the effectiveness or impact of an intervention within a well-defined population or setting are essential for assessing its potential to address a public health problem such as obesity. To this end, the evidence should be synthesized for each potential intervention to answer three questions. First, what is the broader context for the intervention? This question should be addressed using a systems perspective. Second, what does the evidence say about the effects of the intervention? Finally, what will be the overall public health impact of the intervention? Decision makers may need to weigh highly effective interventions that reach only a modest number of people against less effective interventions that reach the entire population at risk; they may decide to bring promising interventions to scale even in the face of incomplete information.

The Systems Perspective: Logic Models and the Complexity of Interventions

Reducing the proportion of the population that is overweight or obese is the ultimate goal of all obesity prevention activities; reducing individual caloric intake and/or increasing individual physical activity among at-risk persons are intermediate outcomes required to achieve this goal. Direct measurement of obesity reduction may be infeasible, or may require the passage of time or the simultaneous adoption of multiple policy and program innovations. As a result, most research studies and program evaluations focus on the intermediate outcomes, based on their logical link to the prevention of obesity.

Program evaluations and other evidence synthesis methods (e.g., a mixed-method realist review, discussed below) typically require evaluation designs that incorporate testable theories, technically referred to as logic models. The simplest logic model involves the direct chain of causation of an individual intervention. Predicting the effect of a population-level behavioral intervention on the incidence of obesity should take into account three causal links. First, does the intervention affect behavior? Second, does the change in behavior—presumably a reduction of caloric intake or an increase in physical activity—lead to the desired outcome? Third, does the change in behavior lead to other outcomes, and are they adverse or favorable? At best, the causal links that define each of these relationships are imperfect, which means that predicting the effect of an intervention will always be an inexact science.
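To make the point about imperfect causal links concrete, the following Python sketch treats a hypothetical logic model as a chain in which each link passes on only a fraction of the units that enter it. It assumes, purely for illustration, that the links are independent and combine multiplicatively, and it covers only the first two links (intervention to behavior, behavior to outcome), not the third link concerning other adverse or favorable outcomes. The `Link` class, `propagate` function, and all proportions are invented, not estimates from any study.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """One causal link in a hypothetical logic model.

    low/high bound the proportion of units entering the link that are
    expected to reach its end (illustrative values only).
    """
    name: str
    low: float
    high: float


def propagate(links):
    """Multiply per-link proportions along the chain (assumes independent,
    multiplicative links) and return cumulative (name, low, high) bounds
    after each link."""
    low, high = 1.0, 1.0
    steps = []
    for link in links:
        low *= link.low
        high *= link.high
        steps.append((link.name, low, high))
    return steps


# Hypothetical numbers, not estimates from any study.
chain = [
    Link("intervention -> behavior change", 0.30, 0.50),
    Link("behavior change -> reduced excess weight gain", 0.40, 0.60),
]

for name, low, high in propagate(chain):
    # The high/low ratio grows along the chain: distal outcomes are less certain.
    print(f"{name}: {low:.2f}-{high:.2f} (high/low ratio {high / low:.2f})")
```

Under these invented bounds, the plausible range for the first link spans less than a factor of two, while the range for the distal outcome spans a factor of 2.5, which mirrors the observation that predicting an intervention's effect on obesity is an inexact exercise.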

Multiple interventions often are required to achieve improvements in population health, and studying the effects of individual interventions in isolation will be inadequate to determine their overall effectiveness. As discussed in Chapter 4, the Foresight Group performed an analysis of the many social, economic, and political changes that have contributed to both increasing caloric intake and declining physical activity in the United Kingdom (UK Government Foresight Programme, 2007). Such analyses support the need to take a systems view to understand the implications of interventions for the outcomes of interest. For the purposes of this chapter, it should be noted that qualitative or mathematical models may help identify key mediating variables, providing decision makers with guidance for developing a portfolio of interventions that may contribute to improvements in population health.

By way of illustration, even the consideration of what appear to be relatively simple interventions, such as those that involve the provision of clear information to the public, can benefit from using a logic model and a systems perspective. Examples include the Arkansas experience in reporting children’s body mass index (BMI) to their parents (Raczynski et al., 2009) and the reporting of calorie content on restaurant menus in New York City (Farley et al., 2009). These interventions matter only if certain other conditions are present: If Arkansas parents are told that their children are overweight, will they make adjustments? How does the public sector ensure that recreational resources are available so parents can encourage their children to engage in more physical activity? In New York, will overweight people change their ordering patterns when they know the calorie content of the food offered in restaurants? Will this public reaction lead fast food chains to decrease the calorie density of the items on their menus? In such cases, the intervention itself is built on a systems model that predicts the answers to these questions and helps decision makers develop coordinated interventions that build on each other. Evidence of effectiveness requires the identification of both immediate and long-term outcomes, with a logic model being used to identify the relationships between them.

Several caveats need to be considered in the use of models to predict the effects of an intervention. Models necessarily mix observations (often limited) with theoretical predictions. The more distal an anticipated outcome, the less certainty may be ascribed to that estimate. An evidence report should include a summary of insights gained by taking a systems perspective in relation to the intervention. Systems summaries will seldom provide precise estimates of effects of a particular intervention or interventions; instead, they may highlight areas of concern or provide decision makers with options for monitoring and evaluating new interventions.

Evaluating the Effectiveness of Interventions

Traditional evidence syntheses emphasize assessment of the effectiveness of potential interventions. Similarly, the L.E.A.D. framework includes a synthesis of the evidence gathered, focused on helping the decision maker understand the likely outcomes of each potential intervention and the degree of certainty with which that projection can be made: What is the likely magnitude of the change to be expected with this intervention alone, or how dependent might the desired change be on combining this intervention with other components in a more comprehensive strategy or policy? How tightly linked are the immediate effects of the intervention to the goals of decreased caloric intake and increased energy expenditure? In addition, careful attention should be paid to the types of information included, as well as the standards used to evaluate each source of evidence, to determine the level of certainty of the causal link between an intervention and the observed outcomes (see Chapters 5 and 6 for further detail).

The relative importance of different types of evidence will depend on the decision-making context. Best and colleagues (2008a) view evidence according to two distinct spectra: an ecological perspective at the individual, organizational, and systems levels and a programmatic perspective within basic/discovery, clinical, and population research settings. The resulting matrix of evidence recognizes both the research setting, or context, in which the evidence was generated and the fact that mechanisms, actors, and actions will differ depending on whether individuals, organizations, or systems are targeted.

In general, the best evidence at the individual level, particularly in the discovery phase of research, will be derived from experimental or quasi-experimental studies (Best et al., 2008b; Rychetnik et al., 2004). These approaches, including the use of statistical meta-analysis techniques, may yield quantitative estimates of the effectiveness of individual-level interventions (see Chapter 5 for more discussion of meta-analysis); however, intent-to-treat analysis of randomized controlled trials (RCTs) is the best way to minimize bias in ascertaining treatment effects in individual studies (Montori and Guyatt, 2001). Questions concerning systems and populations may best be answered using nonexperimental sources, such as descriptive research or qualitative analyses (Best et al., 2008b).
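As an illustration of how meta-analysis pools results from several studies into a single effect estimate, the following is a minimal fixed-effect, inverse-variance sketch in Python. The function name `fixed_effect_pool` and all effect sizes and standard errors are hypothetical; a real synthesis would also assess heterogeneity across studies and would often use a random-effects model instead.

```python
import math


def fixed_effect_pool(effects, std_errors):
    """Inverse-variance fixed-effect meta-analysis.

    Each study is weighted by 1 / SE^2, so more precise studies count more.
    Returns the pooled effect, its standard error, and a 95% confidence interval.
    """
    if not effects or len(effects) != len(std_errors):
        raise ValueError("need at least one study and one standard error per effect")
    weights = [1.0 / se ** 2 for se in std_errors]
    total = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, effects)) / total
    pooled_se = math.sqrt(1.0 / total)
    z = 1.96  # standard normal quantile for a two-sided 95% interval
    return pooled, pooled_se, (pooled - z * pooled_se, pooled + z * pooled_se)


# Hypothetical BMI z-score changes (intervention minus control) from three trials.
effects = [-0.10, -0.05, -0.20]
std_errors = [0.05, 0.04, 0.10]

pooled, se, (lo, hi) = fixed_effect_pool(effects, std_errors)
print(f"pooled effect {pooled:.3f} (SE {se:.3f}), 95% CI {lo:.3f} to {hi:.3f}")
```

The weighting choice reflects the logic of pooling: the pooled estimate sits closest to the most precise study, and its confidence interval is narrower than that of any single study.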

Program evaluation techniques evolve with program growth and complexity. In anticipating the types of data that may be available on interventions, Jacobs (2003) notes that while all programs should involve some sort of evaluation, evaluation capacities and interests are not static. For purposes of the present discussion, decision makers may expect that newer, smaller programs are more likely to have lower-quality evidence, while larger, more established programs are likely to generate higher-quality data more directly relevant to health outcomes.

Decision makers typically adapt or modify interventions to suit local conditions. This adaptation may influence the reported effectiveness of an intervention. One method used to transform information obtained from published reports into meaningful generalized guidance is a realist review (a type of mixed-method analysis used to synthesize evidence) (Pawson et al., 2005). As described by Greenhalgh and colleagues (2007, p. 858) in a recent Cochrane review of school feeding programs, “[a] realist review exposes and articulates the mechanisms by which the primary studies assumed the interventions to work (either explicitly or implicitly); gathers evidence from primary sources about the process of implementing the intervention; and evaluates that evidence so as to judge the integrity with which each theory was actually tested and (where relevant) adjudicate between different theories.” The realist approach allows decision makers to apply prior evidence, even in circumstances where complete fidelity to the original studied intervention (i.e., conducted as planned) may not be practical or possible. In the Greenhalgh and colleagues review, for example, the investigators identify 10 process factors associated with improved efficacy, as well as a number of technical factors that complicate the measurement and assessment of outcomes. In another case, Connelly and colleagues (2007) use the realist review strategy to assess the importance of compulsory aerobic physical education in schools (see Chapter 5 for further discussion).

The intent of evidence synthesis using the L.E.A.D. framework is to build directly on the experience of conducting systematic reviews. As has been described, however, systematic reviews conducted in accordance with this framework will differ from those that rely on quantitative methods for synthesizing the results of experimental data and will gather and evaluate a broader array of available information. The committee’s decision to include evidence derived from many types of studies, qualitative data, and alternative data sources hitherto considered unworthy of inclusion is based on recently published evidence. Examining the inclusion and exclusion of observational studies along with randomized controlled trials (RCTs) in meta-analyses led Shrier and colleagues (2007, p. 1203) to the following conclusions: (1) “including information from observational studies may improve the inference based on only randomized trials”; (2) the estimate of effect is similar for meta-analyses based on observational studies and RCTs; (3) the “advantages of including both … could outweigh the disadvantages…”; (4) “observational studies should not be excluded a priori”; and (5) RCTs have been implemented largely in interventions with individuals, while community-based interventions most frequently use other research methods. Based on technical considerations alone, RCTs are most efficient and reliable when one is considering short-term, readily measured outcomes among individual subjects. While they may be adapted to larger, community-level interventions, doing so greatly increases their cost and complexity and may decrease their reliability (Sege and De Vos, 2008). Chapter 8 presents further detail on the challenges and trade-offs of randomized experiments.

Synthesis of Information from Disparate Sources

The fundamental limitations of even the best available evidence mean that the L.E.A.D. framework needs to provide ways to fill the gaps in the evidence carefully and transparently using several sources, including theory, professional experience, and local wisdom (see Box 7-1). This, indeed, was the experience of tobacco control efforts across the United States in the last third of the 20th century, as the tobacco epidemic was reversed and halved following its peak in 1966 (see Box 7-2). The combining of these sources with the science-based evidence in the process of planning an intervention has been characterized as “matching, mapping, pooling, and patching” (Green and Glasgow, 2006; Green and Kreuter, 2005, pp. 197-203). Appendix E offers additional in-depth guidance for using theory, professional experience, and local wisdom to adapt science-based evidence to local settings, populations, and times. Included are ways to match the evidence with appropriate intervention targets; to map the adaptations of interventions to the characteristics of the setting, population, and time; to use professional consultations to pool the experience of other decision makers in addressing populations and settings similar to those at hand; and to use community involvement to patch the gaps in evidence, theory, and professional experience by engaging local residents to offer their interpretation of the proposed intervention and their perception of its fit with their needs.

Box 7-1 Additional Sources of Information

Theory: the basis for generalizing from evidence by matching the source of the evidence to the circumstances in which it would be applied. Social and behavioral theory specifies the conditions under which stimulus A (an intervention) produces response B (a change in attitude, belief, behavior, or organizational or community conditions). It allows for consideration of whether such conditions are salient in generalizing the intervention effects found in previous studies to the circumstances in which the intervention would be applied in the particular population, setting, and time at hand.

Professional experience: pooling of the experience of those who have addressed the problem in populations or circumstances similar to those at hand. The result is sometimes referred to as tacit knowledge and sometimes as anecdotal evidence. Systematic procedures can make such pooling more reliable (D’Onofrio, 2001).

Local wisdom: engagement of those who have lived with the problem in the planning of interventions.

Box 7-2 Blending Theory, Expert Opinion, and Local Wisdom: Tobacco Control Efforts

In the latter part of the 20th century, innovators in schools, worksites, restaurants, towns, cities, and states enacted clean air policies and conducted smoking prevention and cessation programs, with varying degrees of evidence supporting their actions (Eriksen, 2005; Mercer et al., 2005); similar patterns can be seen in other public health successes of the period, such as injury control (Martin et al., 2007). Those policies and programs that demonstrated effectiveness and sustainability were deemed worthy of note by other jurisdictions. The most notable state tobacco control programs, for example, were those of California and Massachusetts. Those two states, with the support of increased tobacco taxes, had mandates in the early 1990s to undertake and evaluate more comprehensive programs (IOM, 2007). They mobilized aggressively with mass media and programs in schools, worksites, and communities. Evaluation demonstrated first a doubling, then a tripling, of the annual rate of decline in tobacco consumption in California relative to the other 48 states (Siegel et al., 2000). Massachusetts started later than California but eventually achieved a quadrupling of those average 48-state rates of reduced smoking as its program hit its stride in the mid-1990s with higher taxes and greater per capita program expenditures (CDC, 1996). The other states showed more interest in the experience and evaluation data from these two states than in the several thousand randomized controlled trials (RCTs) of smoking cessation and prevention in the scientific literature. This was the case because they perceived the greater relevance and representativeness of the two states’ experience relative to the highly controlled, often artificial circumstances and sampled populations of RCTs.

Evaluating the Impact of Interventions

Estimates of the effectiveness of an intervention within a well-defined population or setting provide important information for policy decisions. It is important to use such estimates to begin to assess the potential of an intervention to address a public health problem such as obesity. Although consensus does not exist on the criteria necessary to confirm that an intervention has produced a significant public health impact, the RE-AIM framework provides a comprehensive model for evaluating the potential impact of a public health intervention. The five dimensions that form the framework’s acronym are reach, effectiveness, adoption, implementation, and maintenance. Reach refers to the size and characteristics of the population affected by an intervention. Public health interventions may be intended to reach all or part of the general population; may be characterized as universal, selective, or indicated based on the targeted population, which is determined on the basis of risk characteristics (as described in Chapter 2); or may be intended to reach those who can help the intended population (e.g., parents of obese children, policy makers). Typically, an intervention will reach only a portion of the intended population. Effectiveness is the impact of the intervention on targeted outcomes and quality of life. Adoption refers to the proportion and representativeness of settings and intervention staff that agree to deliver a program. Implementation is the extent to which the intervention is implemented as intended. Finally, maintenance denotes the extent to which a program is sustained over time within the delivering organization.

Consideration of each of the five dimensions of the RE-AIM framework can help evaluators understand the broad array of issues an effective program needs to address. Glasgow and colleagues (2006) propose several combined impact indices and discuss the strengths and weaknesses of each. They suggest that combining the RE-AIM dimensions, rather than considering each individually, would be most useful for making policy decisions. The metrics involved in calculating the impact indices are beyond the scope of this report but are described by the authors.

Within the three broad categories of intended reach (universal, selective, or indicated populations), each intervention may also be examined to determine uptake (the proportion of the intended population that the intervention will actually reach). Consider, for example, two different universal interventions: tax policies may well reach the entire population, although the effect may vary depending on individual resources, while public awareness campaigns designed to reach an entire community typically reach only a portion of the community, depending on the outreach methods used. A simple intervention that reaches the entire population may have substantial impact; in the case of tobacco control, cigarette taxes proved to have an impact due in large part to their ability to reach all smokers. The impact of other universal tobacco control interventions was achieved with more complex strategies.
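The trade-off between uptake and effectiveness can be made concrete with a deliberately simplified calculation in which population impact is approximated as the size of the intended population times uptake times the average effect among those reached. This is a hypothetical illustration, not one of the combined RE-AIM impact indices proposed by Glasgow and colleagues (2006); the `Intervention` class, the population size, the uptake proportions, and the per-person effects are all invented.

```python
from dataclasses import dataclass


@dataclass
class Intervention:
    name: str
    uptake: float             # proportion of the intended population actually reached (0-1)
    effect_per_person: float  # hypothetical average improvement among those reached (arbitrary units)

    def population_impact(self, population: int) -> float:
        """Simplified impact: people reached multiplied by the average effect among them."""
        return population * self.uptake * self.effect_per_person


population = 100_000  # hypothetical size of the intended population

# Invented values chosen only to illustrate the reach/effectiveness trade-off.
options = [
    Intervention("universal tax policy", uptake=1.00, effect_per_person=0.02),
    Intervention("public awareness campaign", uptake=0.30, effect_per_person=0.05),
    Intervention("intensive program", uptake=0.02, effect_per_person=0.40),
]

# Rank the options from largest to smallest simplified impact.
for option in sorted(options, key=lambda o: o.population_impact(population), reverse=True):
    print(f"{option.name}: impact {option.population_impact(population):,.0f}")
```

The ranking depends entirely on the invented numbers; the sketch shows only how a small per-person effect delivered to nearly everyone can outweigh a much larger effect delivered to a few, as the cigarette tax example suggests. It ignores adoption, implementation, and maintenance, which the full RE-AIM framework would also weigh.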

It is important to point out that some of the early policy victories in tobacco control did not have a large impact, but they paved the way for subsequent policy actions that had a much greater impact and thus were critical to the success of those actions. For example, school policies prohibiting smoking on school property by teachers and staff affected small numbers of smokers, but set an example for subsequent clean air legislation, smoke-free worksites, and eventually smoke-free restaurants. The policies also helped denormalize smoking in the eyes of school-age children. Another example is the mounting of mass media campaigns that initially had limited impact on smokers themselves, but helped create an informed electorate that would support ballot initiatives and legislative acts for higher tobacco taxes, more clean air controls, and more constraints on advertising and the sale of tobacco to minors. Understanding the context of an intervention and the preliminary actions that preceded it can be key in determining its effectiveness and potential impact.

How Do We Implement This Information for Our Situation?

When making choices about how to address public health problems such as obesity, decision makers should consider whether the relationships identified in research studies will hold up in their own state, locality, or setting. This is especially so if the data on which best-practice evidence is based come from academic settings, college towns, university-affiliated hospitals or clinics, or artificially simulated settings, as is often the case with published evidence. Decision makers should also consider whether the resources and oversight of interventions in the evidence-producing studies are reproducible in their local settings, and whether the best-practice interventions can be taken to scale with larger numbers of organizations or staff lacking the training and close supervision that typically characterize the protocols of scientific studies. Were the extensive training and close supervision of those conducting the intervention related only to the research functions, or are they essential to successful replication of the intervention’s effectiveness in any setting? Was the formally controlled trial artificially complex for research purposes, or is its protocol intrinsically high maintenance? In addition, decision makers need to consider what degree of latitude their policies, program plans, or instructions and guidelines can allow in applying the tested interventions to the variety of subpopulations or individual cases that may be encountered. Although the scientific view of many evidence-based guidelines is that they should be applied faithfully and rigorously as in the protocol tested in efficacy trials or effectiveness studies, that is, applied with “fidelity,” deviations from such strict adherence to protocol may be necessary for adaptation to local circumstances. Yet if an intervention then does not work (if the results in the application fall short of those in the scientific trials), practitioners are likely to be blamed for deviating from the protocol when other factors, such as a different culture in the target population, may be responsible.

The reality that most who use evidence to support obesity prevention policy or practice are likely to face is that the target population for an obesity prevention intervention will be more diverse than the populations in the original studies (Cohen et al., 2008). This situation often leads to the fidelity problem because users need to adapt the protocol, but it also raises issues related to generalizability that characterize much research; in particular, the implementation of the intervention is highly controlled, and the study populations, settings, procedures, and circumstances often are not representative of the typical settings or target populations at large.

The generalizability problem relates in part to a distinction between function and form with respect to each component of an intervention (Hawe et al., 2004). Functional components are those regarded as essential to successful implementation wherever and with whomever the intervention might be applied, whereas those components of the intervention that are a matter of form usually lend themselves to successful adaptation to different populations, settings, and circumstances. While form should in general follow function, it is necessary to decide which components of an intervention are essential for implementation and which can bear modification. Published studies usually do not offer guidance on these decisions. The research community needs to provide more guidance to implementers who are faced with the need to tailor components of an intervention to individuals or to various population segments (cultures, genders, age groups, etc.), settings, and times.

Decision makers should also consider whether the research process, including recruitment and informed consent procedures, screening, and attrition, led to an unrepresentative pool of subjects on which the studies’ conclusions were based. This issue is akin to that of the representativeness of the cases on which the conclusions were based. It stems, however, not from the homogeneity or unrepresentativeness of the original population sampled. Rather, sampling is altered by the experimental conditions; the study protocol; and/or dissatisfaction with questionnaires, blood draws, weighing, or other research procedures—all of which could produce an unrepresentative final sample after the initial representative population was properly sampled.

Finally, decision makers should consider that obesity is unlike many of the health-related problems to which the usual canons of evidence-based medicine apply, especially those problems that have a singular causal agent, a straightforward mechanism of causation, or a relatively consistent result for all who would receive a standardized intervention. Obesity should be seen as belonging in a class of problems that are influenced by a constellation of complex social and political factors, some of which change during the process of solving the problem. Such a problem is likely to be viewed differently by different populations, practitioners, and vendors of services and products that influence it, depending on the perspectives and biases of those with a stake in the problem (Kreuter et al., 2004). Therefore, such problems are less amenable to the usual methods of randomized experiments than most of the more strictly biomedical points of intervention tested for evidence-based medicine. This is precisely why a systems perspective is core to the L.E.A.D. framework (see Chapter 4).

In a medical setting, resource evaluation for a potential intervention may be straightforward and based simply on the marginal cost of administering the intervention within an existing structure. For example, the Grading of Recommendations Assessment, Development, and Evaluation (GRADE) criteria for developing recommendations in a medical setting include resource considerations. The illustrative example offered in a recent description of GRADE assesses the costs of administering magnesium sulfate to prevent pre-eclampsia and provides separate cost/benefit analyses for high-, medium-, and low-income countries (Guyatt et al., 2008a).

The RE-AIM criteria discussed above shift the focus of evaluation toward issues of generalizability and reproducibility by setting explicit standards for reporting the resources used to implement and maintain interventions (Jilcott et al., 2007). The authors caution that studies often, either by design or inadvertently, involve additional implementation resources that may not be available outside of a research setting. Armstrong and colleagues (2008) suggest that public health agencies should report data on the resources necessary for program implementation and sustainability, as well as the effectiveness results of pilot interventions.

Other considerations have been proposed for assessing the available evidence with respect to implementation. A notable example is the “filter criteria” for decision makers delineated by Glasziou and Longbottom (1999) and Swinburn and colleagues (2005).

Existing Tools for Assembling Evidence

In most situations, the available data may differ from the decision maker’s circumstances in the details of the intervention or in the context or population in which it would be implemented. In these circumstances, the evidence review should attempt to bridge this gap. Table 7-1 provides brief descriptions of, and references for, existing tools that can be used to assemble the evidence while taking into account the key considerations described above.

A TEMPLATE FOR SUMMARIZING THE EVIDENCE

Evidence summaries should use a uniform language and structure for several reasons. A uniform language for drawing and describing conclusions signals that the authors used a consistent set of procedures to evaluate and synthesize the evidence. A uniform language also facilitates communication among different disciplines during policy discussions and decision making. Achieving uniformity may sacrifice some nuance; on balance, however, it improves the clarity of communication. Clarity is particularly important in complex public health arenas, such as obesity prevention, that necessarily involve experts from many different disciplines, each with its own jargon and standards for communication. Use of a uniform language and structure to report the process and conclusions of evidence synthesis makes the information easier for decision makers to understand as they consider each potential intervention or set of interventions that combine individual actions or strategies (hereafter, both are referred to as a “potential intervention”).

TABLE 7-1 Existing Tools for Assembling Evidence

| Tool | Purpose | Criteria Specific to Assembling Evidence |
|---|---|---|
| Realist reviews | Synthesis of evidence using mixed-method analysis to combine qualitative and quantitative studies | Criteria relate to the effectiveness of interventions |
| Meta-analysis | Pooling of results of experimental and quasi-experimental designs to determine effect size | Criteria relate to the effectiveness of interventions |
| Grading of Recommendations Assessment, Development, and Evaluation (GRADE) | Classification of the quality of evidence and strength of recommendations in medical settings | Factors that affect the strength of recommendations (Guyatt et al., 2008b) |
| Guide to Community Preventive Services: Systematic Reviews and Evidence-Based Recommendations | Process for systematically reviewing evidence and translating it into recommendations | Criteria for translating evidence into recommendations (Briss et al., 2000) |
| Reach, Effectiveness, Adoption, Implementation, Maintenance (RE-AIM) | Framework for systematically considering strengths and weaknesses of interventions to guide program planning | Criteria for translating research into practice (Kaiser Permanente Institute for Health Research, 2010) |
| Health Canada framework for identifying, assessing, and managing health risks | Framework for developing program-specific implementation procedures involved in risk management | Criteria for analyzing potential risk management options (Health Canada, 2000) |
| Obesity prevention evidence framework | Framework for evidence-based obesity prevention decision making | Criteria for selecting a portfolio of policies, programs, and actions (Swinburn et al., 2005) |
| Matching, mapping, pooling, and patching | Combining theory, professional experience, and local wisdom with science-based evidence in planning an intervention | Criteria for identifying program components and interventions (Green and Kreuter, 2005) |

As these reports on many different interventions and combinations of interventions begin to proliferate and become broadly available, they will serve as starting points for others facing similar decisions in similar environments. They also are likely to promote productive discussion and debate among decision makers and others, as well as assist researchers and research funders as they decide which kinds of studies are most needed for obesity prevention decision making.

Elements of the Reporting Template

The L.E.A.D. framework calls for summarizing the conclusions of evidence synthesis for each potential intervention by using a uniform reporting template that carefully follows the main tenets of the framework. These reports will most likely be developed by the decision maker’s intermediaries: his/her own staff; staff from a particular government department, such as public health; or a group that specializes in gathering and synthesizing evidence. The report should follow the template presented in Box 7-3. The following subsections briefly describe each element of this template and offer specific examples to illustrate its purpose within the decision-making context, starting with a brief example of a L.E.A.D. framework report (Box 7-4).

Box 7-3 Elements of the Reporting Template: Using the L.E.A.D. Framework to Inform Decisions

Question Asked by the Decision Maker

As described in Chapter 1, there is a wide variety of decision scenarios related to obesity prevention policies and programs. A decision maker may face a decision about a specific, populationwide policy change with a potential direct impact on the physical activity or other weight-related behavior of adults or children. For example, a school board member may ask staff to help decide whether to vote for a measure to send parents reports of their children’s BMI levels based on annual weight and height screening. Or a decision maker may need to decide whether to undertake or retain a specific program aimed at increasing access to food or physical activity options in a community. Or the director of a youth service organization may ask staff to help select a family-oriented nutrition and fitness program for nationwide implementation. Finally, a decision maker may need to select one of a set of potential interventions that would decrease obesity-promoting environmental influences; for instance, an employer may ask staff to help determine which wellness services should be included in next year’s employee benefit package.

Identifying the question posed by the decision maker is the essential first step in producing the evidence report. This question informs what approach is taken to selecting, implementing, and evaluating interventions; why an intervention is needed; what should be done; how the intervention can work in the given context; and how changes in well-established policies and practices can be justified.

Box 7-4 Example L.E.A.D. Framework Evidence Report

This list may include much more evidence from many sources; these are just examples.

The entries under a, b, and c above are merely examples. A summary for decision makers is likely to include many more examples and more extensive explanatory information. The purpose of this brief model is to give the reader a concrete sense of what a L.E.A.D. evidence report would look like. Such a report should be prepared for each potential intervention to be considered by the decision maker.

Strategy for Locating Evidence

As described in detail in Chapter 5, the strategy for locating evidence should begin with answering the three questions that frame the discussion in this chapter: (1) Why should we do something about this problem in our situation? (2) What specifically should we do about this problem? and (3) How do we implement this information for our situation? Collecting evidence in accordance with this typology will help expand the perspective on the forms of evidence that are potentially relevant to answering the obesity prevention question asked by the decision maker and on the potential sources from which to gather that evidence. The strategy used to locate evidence for each potential intervention depends on the context of the question being asked, as well as the intermediary’s knowledge of and access to study designs and methodologies in diverse fields; therefore, it is important to describe this strategy clearly in the report. (For a list of selected information sources, see Appendix D.)

Evidence Table

As described in Chapter 6, once the evidence for each potential intervention has been located, it should be evaluated. Each type of evidence should be evaluated according to established criteria for assessing the quality of that type of evidence, including the level of certainty and generalizability. In addition, the relative value of each source of evidence depends on the decision-making context. Table 7-2 offers a template for an evidence table that should be included in the evidence report for each potential intervention. The table should be divided into three parts based on the above three questions. Thus the first part of the table should summarize the evidence that answers “Why should we do something about this problem in our situation?” As described further in Chapter 5, this evidence, sometimes called needs assessment, helps the decision maker understand the scope and severity of the public health problem in the local context. The second part of the table should summarize the evidence that answers “What specifically should we do about this problem?” This evidence describes the effectiveness and impact of potential interventions and helps the decision maker select which one to implement. Finally, the third part of the table should summarize the evidence that answers “How do we implement this information for our situation?” This evidence helps the decision maker assess how a potential intervention should be implemented to achieve its desired effects in the local context. As illustrated by Table 7-2, each source of evidence and the issue it addresses should be identified, as well as the type of study design or methodology, its quality, and its major outcomes or findings. Guidance on this information can be found in Chapters 5 and 6 of this report.

TABLE 7-2 Evidence Table Template

Example Decision Maker Question: Should our schools change the contents of vending machines to help prevent obesity among our students?

| Issue/Potential Intervention | Source | Type | Quality | Outcomes/Findings |
|---|---|---|---|---|
| Part A: Why should we do something about this problem in our situation? | | | | |
| All needs assessment evidence for the particular problem (e.g., health burden, frequency/incidence, social determinants, trends, health disparities, monetary and social costs) should be described in this section. One example: | | | | |
| Childhood obesity rates in our area (2008 data) | Department of Health Expanded Immunization Registry | Quantitative report using existing database | High | A map of our community showing childhood obesity rates by neighborhood is provided. |
| Part B: What specifically should we do about this problem? | | | | |
| All evidence associated with the effectiveness and impact of a particular intervention (e.g., causal pathways, outside influences, sustained effects, unintended consequences) should be described in this section. One example: | | | | |
| School nutrition policy changes | Study citation | Descriptive, quantitative | High | Policy changes in one state’s public schools significantly reduced calorie consumption among its youth during the school day. |
| Part C: How do we implement this information for our situation? | | | | |
| All evidence associated with the relevance and implementation of a particular intervention (e.g., generalizability, sustainability, cost-effectiveness, cost feasibility, strategic planning, implementation policies, potential challenges) should be described in this section. One example: | | | | |
| Removal of sugar-sweetened beverages from all vending machines on school campuses | Study citation | Quantitative report using revenue statements for vending machines in schools | High | When replaced with water, juice, and milk, sugar-sweetened beverages can feasibly be removed from a cost perspective. |
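For intermediaries who keep evidence reports in a spreadsheet or database, the three-part evidence table can be thought of as a small data structure. The sketch below is hypothetical: the names `Part`, `EvidenceEntry`, `EvidenceTable`, and `missing_parts` are illustrative assumptions rather than part of the L.E.A.D. framework, and the rows paraphrase the Table 7-2 examples. Flagging an empty part is one way to notice that, for instance, no implementation evidence has yet been located for a potential intervention.

```python
from dataclasses import dataclass, field
from enum import Enum


class Part(Enum):
    # The three questions that divide the evidence table (hypothetical encoding).
    A_WHY = "Why should we do something about this problem in our situation?"
    B_WHAT = "What specifically should we do about this problem?"
    C_HOW = "How do we implement this information for our situation?"


@dataclass
class EvidenceEntry:
    # One row: the issue addressed plus its source, type, quality, and findings.
    part: Part
    issue: str
    source: str
    study_type: str
    quality: str
    findings: str


@dataclass
class EvidenceTable:
    # Hypothetical container for one potential intervention's evidence table.
    question: str
    entries: list[EvidenceEntry] = field(default_factory=list)

    def add(self, entry: EvidenceEntry) -> None:
        self.entries.append(entry)

    def by_part(self) -> dict[Part, list[EvidenceEntry]]:
        # Group rows under Parts A, B, C in order; empty parts are kept so
        # that gaps in the evidence remain visible.
        grouped: dict[Part, list[EvidenceEntry]] = {p: [] for p in Part}
        for entry in self.entries:
            grouped[entry.part].append(entry)
        return grouped

    def missing_parts(self) -> list[Part]:
        return [p for p, rows in self.by_part().items() if not rows]


table = EvidenceTable(
    question="Should our schools change the contents of vending machines "
             "to help prevent obesity among our students?"
)
table.add(EvidenceEntry(Part.A_WHY, "Childhood obesity rates in our area (2008 data)",
                        "Department of Health Expanded Immunization Registry",
                        "Quantitative report using existing database", "High",
                        "Map of childhood obesity rates by neighborhood"))
table.add(EvidenceEntry(Part.B_WHAT, "School nutrition policy changes", "Study citation",
                        "Descriptive, quantitative", "High",
                        "Reduced calorie consumption during the school day"))
print([p.name for p in table.missing_parts()])  # ['C_HOW']
```

Keeping the question alongside the rows mirrors the template's emphasis that every evidence table answers a specific decision maker's question.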

Summary of Evidence

Once the potentially relevant evidence for each potential intervention has been located and evaluated as called for by the L.E.A.D. framework, it should be synthesized and summarized to help inform the decision based on the question asked. Evidence and other sources of information, such as theory, professional experience, and local wisdom, are synthesized using the tools described earlier in this chapter. The following subsections address the formulation and reporting of this summary.

Why Should We Do Something About This Problem in Our Situation?

The first part of the summary should describe the local conditions to be addressed by the potential intervention. It might include, for example, the number of people affected by obesity; the rate of increase in obesity; the number of people affected by obesity-related diseases, such as type 2 diabetes, and whether certain age, racial, or ethnic groups are disproportionately affected; and the associated health care costs. All of this information should be specific to the particular setting.

What Specifically Should We Do About This Problem?

The second part of the summary should describe the potential intervention and the rationale for it. The synthesis of this evidence should consider the broader context for the intervention from a systems perspective, how effective the intervention might be, and its public health impact. Table 7-1, presented earlier, lists tools that can be used in assembling this evidence. Decision makers may need to weigh highly effective interventions that reach only a modest number of people against less effective interventions that reach the entire population at risk. Or they may need to consider interventions for which little evidence of effectiveness or impact is available but that enjoy community acceptance or are recommended by experts in the field on the basis of their experience and knowledge. Guidance is offered below for summarizing the three types of evidence that can inform the selection of an intervention: (1) the broader context of the potential intervention from a systems perspective (i.e., rationale); (2) how effective the intervention is likely to be; and (3) its likely reach and impact.

The Broader Context from a Systems Perspective (i.e., rationale). The summary should clearly describe the theory used to link the outcome(s) of the intervention to obesity prevention, or the systems view taken to understand the implications of the intervention for the outcome of interest. Logic models can help predict what effect an intervention will have on behavior, whether it will lead to the desired outcome, or whether it will lead to other outcomes. Systems views highlight areas of concern and provide decision makers with options for evaluating new interventions (see Chapter 4).

Reporting Likely Effectiveness. As discussed previously, greater certainty about the causal relationship between an intervention and the observed outcomes, and greater generalizability (the extent to which the research results can be translated to the current situation), both increase confidence that the intervention will yield favorable results. The degree of certainty needed to recommend an intervention is not absolute: low-cost/low-risk interventions may be recommended with less certainty than higher-cost or higher-risk interventions. Interventions that require substantial capital or ongoing expenditures or that carry higher potential risks will need stronger evidence before being recommended (Cohen and Neumann, 2008).

In accordance with the L.E.A.D. framework, the report should present decision makers with a summary of the estimated effectiveness of the potential intervention, following the approach of the Washington State Department of Health (2005), which was subsequently cited and applied by the Texas Department of State Health Services (2006). In addition, to present the full spectrum of possible conclusions, the committee suggests adding a fourth category, “no likely benefit,” to the Washington State and Texas approach. As a result, the committee suggests the following categories of effectiveness:

- Effective—One or more well-designed studies in similar contexts have shown that a potential intervention has had the desired effect on nutrition, physical activity, or obesity rate.
- Promising—Evidence suggests likely effectiveness in achieving intervention goals. A potential intervention is adapted from a proven intervention, with substantial alterations that (1) have a scientific basis; (2) are based on evidence from evaluation studies or reports showing meaningful, positive health or behavioral outcomes; or (3) are supported by written program evaluations that include evidence of effectiveness, formative evaluation data, and/or results consistent with theory in the case of high-reach, low-cost, replicable interventions (Brownson et al., 2009).
- Untested—A potential intervention has shown an effect only in another context; it has a strong theoretical basis and some supporting data, but key data are missing; or the evidence shows effects on intermediate outcomes only, particularly if the link between these intermediate outcomes and obesity has not yet been firmly established.
- No likely benefit—Well-conducted studies fail to show a positive effect for a potential intervention, or there is evidence of harm.
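Because the four categories form an ordered scale, the earlier point that the certainty required depends on cost and risk (Cohen and Neumann, 2008) can be sketched as a simple threshold. This is a hypothetical illustration only: reducing cost and risk to yes/no flags, and the specific rule that low-cost/low-risk interventions may proceed on “Promising” evidence while others need “Effective,” are assumptions made for the example, not rules proposed by the committee.

```python
from enum import IntEnum


class Effectiveness(IntEnum):
    # Ordered so that a higher value means stronger evidence of benefit.
    NO_LIKELY_BENEFIT = 0
    UNTESTED = 1
    PROMISING = 2
    EFFECTIVE = 3


def minimum_evidence(high_cost: bool, high_risk: bool) -> Effectiveness:
    # Hypothetical threshold: higher-cost or higher-risk interventions need an
    # "Effective" rating; low-cost/low-risk ones may proceed on "Promising".
    if high_cost or high_risk:
        return Effectiveness.EFFECTIVE
    return Effectiveness.PROMISING


def may_recommend(rating: Effectiveness, high_cost: bool, high_risk: bool) -> bool:
    # "Untested" and "No likely benefit" fall below both thresholds.
    return rating >= minimum_evidence(high_cost, high_risk)


print(may_recommend(Effectiveness.PROMISING, high_cost=False, high_risk=False))  # True
print(may_recommend(Effectiveness.PROMISING, high_cost=True, high_risk=False))   # False
```

In practice a decision maker would weigh cost and risk on a continuum, alongside reach, community acceptance, and expert judgment; the sketch shows only the direction of the relationship.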

An evolution has occurred in the categorical presentation of summary conclusions regarding the evidence base for public health interventions. The committee gave special weight to the classification systems used by the Washington State and Texas public health departments and noted their congruence with current academic assessment schemas, particularly those of Brownson and colleagues (2009) and a paper commissioned for this report on the current review of environmental and policy interventions for childhood obesity prevention undertaken by Transtria (see Appendix B for more information on this project). Although there are differences in nuance among the various proposed schemas, as noted earlier, clear communication among professionals requires the adoption of a uniform lexicon for reporting.

When possible, the summary of evidence concerning likely effectiveness should also provide an estimate of the magnitude of the effect. In the case of quantitative data, specific statistical tools will help in describing the uncertainty surrounding these estimates. In the case of qualitative data or data that are less directly applicable to the decision-making context, the range of likely results will be more difficult to describe. In this case, qualitative descriptions of prior experiences in similar settings, which are always valuable adjuncts to research results, may be the only basis for decision making.
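As one example of the statistical tools mentioned above, the sketch below reports an estimated difference in proportions together with an approximate 95% confidence interval, so that decision makers see a plausible range rather than a single number. The choice of a Wald (normal-approximation) interval, the function name `risk_difference_ci`, and the school counts are all hypothetical; other effect measures and interval methods may suit a given body of evidence better.

```python
import math


def risk_difference_ci(events_a: int, n_a: int, events_b: int, n_b: int,
                       z: float = 1.96) -> tuple[float, float, float]:
    """Difference in proportions (a minus b) with a Wald confidence interval.

    z = 1.96 gives an approximate 95% interval. The normal approximation
    assumes reasonably large samples and proportions not close to 0 or 1.
    """
    p_a, p_b = events_a / n_a, events_b / n_b
    diff = p_a - p_b
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    return diff, diff - z * se, diff + z * se


# Hypothetical data: students classified as obese after one year in
# intervention schools (a) versus comparison schools (b).
diff, low, high = risk_difference_ci(90, 600, 120, 600)
print(f"difference = {diff:.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

An interval that lies entirely below zero, as it does for these made-up counts, suggests a reduction; its width tells the decision maker how precise that estimate is.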

Reporting Likely Reach and Impact. An intervention should be categorized as universal, selective, or indicated. Within the universal category, evidence for the reach and uptake of the intervention should be summarized, in quantitative terms when possible. In some cases, widespread reach may allow for significant impact from interventions that are of relatively low absolute effectiveness. An example is distributing guidance on healthful eating through the health care or education system, which may be inexpensive, involve minimal risk, and be capable of reaching a large number of community members. Presented with evidence for a sizable potential impact, decision makers may adopt interventions of this sort as part of a larger portfolio.
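The trade-off between reach and effectiveness can be made concrete with a simplified impact calculation in the spirit of the RE-AIM summary measures (Glasgow et al., 2006): expected impact is approximated as the number of people reached multiplied by the effect per person reached. The class `Option`, the function `expected_beneficiaries`, and all numbers below are hypothetical, and the model deliberately ignores adoption, implementation fidelity, and maintenance.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    people_reached: int       # number of people the intervention actually reaches
    effect_per_person: float  # assumed probability of benefit per person reached


def expected_beneficiaries(option: Option) -> float:
    # Simplified impact = reach x effectiveness.
    return option.people_reached * option.effect_per_person


options = [
    Option("intensive program", people_reached=500, effect_per_person=0.30),
    Option("healthful-eating guidance", people_reached=50_000, effect_per_person=0.01),
]
# Rank options by expected impact, largest first.
for opt in sorted(options, key=expected_beneficiaries, reverse=True):
    print(f"{opt.name}: about {expected_beneficiaries(opt):.0f} people benefit")
```

Under these made-up figures the low-effectiveness, high-reach option benefits more people overall, which is the pattern the paragraph above describes; real estimates would carry the uncertainty discussed under likely effectiveness.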

How Do We Implement This Information for Our Situation?

The final part of the summary should address the implementation of the potential intervention. This section, which will most commonly be in narrative form, should describe (1) the personnel and other resources deployed in the studies examined; (2) if applicable, the relationship between the size of the program staff and the population reached; (3) the institutional relationships required for implementation; and (4) any other resource requirements that would aid decision makers in understanding how best to apply the results of previous efforts to planning this intervention.

In many cases, interventions may need to be adapted to fit the local context. Community participation in this process may lead to a better understanding of the local context for implementation. When sufficient data are available, realist reviews (Pawson et al., 2005) may be undertaken to guide decision makers in making adaptations to local circumstances that are likely to preserve the effectiveness that previous programs achieved in their own contexts.

KNOWLEDGE INTEGRATION

As noted earlier, the new knowledge obtained in following the framework needs to be incorporated into the context of the organization or system where decisions are being made, such as a public health department, a mayor’s office, a health care system, or a school system. Rather than an active step in the framework, knowledge integration is the desired final outcome of the process. Knowledge integration may also generate new questions and feed back into the steps of the framework. The cyclic nature of knowledge generation, use of evidence, integration of relevant evidence into systems, and feedback is thus completed.

The concept of knowledge integration derives from the evolution of thinking about how new knowledge from research is used (Best et al., 2008b). Earlier terms such as “knowledge transfer” and “knowledge uptake” captured the need to move new knowledge generated by research into the hands of users but reflected one-way, linear thinking, with researchers simply producing new knowledge. Experience has suggested that these models do not work (Davis et al., 2003; Grimshaw et al., 2001). Other terms, such as “knowledge translation,” suggest more of a relationship model incorporating the necessary interactions among individuals and the importance of social networks (Canadian Institutes of Health Research, 2008). “Knowledge integration” takes the process of translating evidence to practice a step further, indicating that relationships themselves are shaped by the organizations and systems in which they are embedded and that a system has particular dynamics, priorities, time scales, modes of communication, and expectations (Best et al., 2008a). In a similar manner, the recent emphasis of the National Institutes of Health (NIH) on dissemination and implementation research has encouraged investigators and decision makers to focus their research and programmatic questions on the integration of knowledge within systems (Kerner et al., 2005).

The concept of knowledge integration is closely linked to an appreciation for a study’s generalizability, discussed throughout this report. An understanding of the context in which evidence will be used is clearly part of successful knowledge integration. The challenges of addressing a study’s generalizability, such as recognizing the ecologically complex nature of communities, organizations, and health care systems as opposed to the more tightly (and artificially) controlled conditions of RCTs, have been described (Green and Glasgow, 2006).

Knowledge integration places specific emphasis on systems thinking in health sciences, which has evolved out of scientific inquiry in such fields as mathematics, biology, engineering, ecology, and management science (see Chapter 4). Examples of systems thinking come from the fields of weather forecasting, prevention of pandemics, and tobacco control (Leischow et al., 2008). In the control of obesity, multiple systems or organizations are clearly involved. The management of systems knowledge and its successful integration will therefore require an infrastructure to store, disseminate, and communicate this information to various stakeholders within a systems environment (Leischow et al., 2008) (see Chapter 10).

REFERENCES

Armstrong, R., E. Waters, L. Moore, E. Riggs, L. G. Cuervo, P. Lumbiganon, and P. Hawe. 2008. Improving the reporting of public health intervention research: Advancing TREND and CONSORT. Journal of Public Health 30(1):103-109.

Best, A., R. A. Hiatt, and C. D. Norman. 2008a. Knowledge integration: Conceptualizing communications in cancer control systems. Patient Education and Counseling 71(3):319-327.

Best, A., W. K. Trochim, J. Haggerty, G. Moor, and C. Norman. 2008b. Systems thinking for knowledge integration: New models for policy-research collaboration. In Organizing and reorganizing: Power and change in health care organizations, edited by E. Ferlie, P. Hyde, and L. McKee. London: Routledge.

Briss, P. A., S. Zaza, M. Pappaioanou, J. Fielding, L. Wright-De Aguero, B. I. Truman, D. P. Hopkins, P. D. Mullen, R. S. Thompson, S. H. Woolf, V. G. Carande-Kulis, L. Anderson, A. R. Hinman, D. V. McQueen, S. M. Teutsch, and J. R. Harris. 2000. Developing an evidence-based Guide to Community Preventive Services—methods. American Journal of Preventive Medicine 18(1, Supplement 1):35-43.

Brownson, R. C., J. E. Fielding, and C. M. Maylahn. 2009. Evidence-based public health: A fundamental concept for public health practice. Annual Review of Public Health 30(15):1-27.

Canadian Institutes of Health Research. 2008. Knowledge translation—definition. http://www.cihr-irsc.gc.ca/e/39033.html#Exchange (accessed January 4, 2010).

CDC (Centers for Disease Control and Prevention). 1996. Cigarette smoking before and after an excise tax increase and an antismoking campaign—Massachusetts, 1990-1996. Morbidity and Mortality Weekly Report 45(44):966-970.

Cohen, D. J., B. F. Crabtree, R. S. Etz, B. A. Balasubramanian, K. E. Donahue, L. C. Leviton, E. C. Clark, N. F. Isaacson, K. C. Stange, and L. W. Green. 2008. Fidelity versus flexibility: Translating evidence-based research into practice. American Journal of Preventive Medicine 35(5, Supplement):S381-S389.

Cohen, J., and P. Neumann. 2008. Using decision analysis to better evaluate pediatric clinical guidelines. Health Affairs 27(5):1467-1475.

Connelly, J. B., M. J. Duaso, and G. Butler. 2007. A systematic review of controlled trials of interventions to prevent childhood obesity and overweight: A realistic synthesis of the evidence. Public Health 121(7):510-517.

Cullen, K. W., and K. B. Watson. 2009. The impact of the Texas public school nutrition policy on student food selection and sales in Texas. American Journal of Public Health 99(4):706-712.

Davis, D., M. Evans, A. Jadad, L. Perrier, D. Rath, D. Ryan, G. Sibbald, S. Straus, S. Rappolt, M. Wowk, and M. Zwarenstein. 2003. The case for knowledge translation: Shortening the journey from evidence to effect. British Medical Journal 327(7405):33-35.

D’Onofrio, C. N. 2001. Pooling information about prior interventions: A new program planning tool. In Handbook of program development for health behavior research and practice, edited by S. Sussman. Thousand Oaks, CA: Sage Publications. Pp. 158-203.

Eriksen, M. 2005. Lessons learned from public health efforts and their relevance to preventing childhood obesity. In Preventing childhood obesity: Health in the balance, edited by J. Koplan, C. Liverman, and V. Kraak. Washington, DC: The National Academies Press. Pp. 343-375.

Farley, T. A., A. Caffarelli, M. T. Bassett, L. Silver, and T. R. Frieden. 2009. New York City’s fight over calorie labeling. Health Affairs 28(6):w1098-w1109.

Glasgow, R. E., L. M. Klesges, D. A. Dzewaltowski, P. A. Estabrooks, and T. M. Vogt. 2006. Evaluating the impact of health promotion programs: Using the RE-AIM framework to form summary measures for decision making involving complex issues. Health Education Research 21(5):688-694.

Glasziou, P., and H. Longbottom. 1999. Evidence-based public health practice. Australian and New Zealand Journal of Public Health 23(4):436-440.

Green, L. W., and R. E. Glasgow. 2006. Evaluating the relevance, generalization, and applicability of research: Issues in external validation and translation methodology. Evaluation & the Health Professions 29(1):126-153.

Green, L. W., and M. W. Kreuter. 2005. Health program planning: An educational and ecological approach. 4th ed. New York: McGraw-Hill.

Greenhalgh, T., E. Kristjansson, and V. Robinson. 2007. Realist review to understand the efficacy of school feeding programmes. British Medical Journal 335(7626):858-861.

Grimshaw, J. M., L. Shirran, R. Thomas, G. Mowatt, C. Fraser, L. Bero, R. Grilli, E. Harvey, A. Oxman, and M. A. O’Brien. 2001. Changing provider behavior: An overview of systematic reviews of interventions. Medical Care 39(8, Supplement 2):II-2-II-45.

Guyatt, G. H., A. D. Oxman, R. Kunz, R. Jaeschke, M. Helfand, A. Liberati, G. E. Vist, and H. J. Schunemann. 2008a. Incorporating considerations of resources use into grading recommendations. British Medical Journal 336(7654):1170-1173.

Guyatt, G. H., A. D. Oxman, G. E. Vist, R. Kunz, Y. Falck-Ytter, P. Alonso-Coello, and H. J. Schunemann. 2008b. GRADE: An emerging consensus on rating quality of evidence and strength of recommendations. British Medical Journal 336(7650):924-926.

Hawe, P., A. Shiell, and T. Riley. 2004. Complex interventions: How “out of control” can a randomised controlled trial be? British Medical Journal 328(7455):1561-1563.

Health Canada. 2000. Health Canada decision-making framework for identifying, assessing, and managing health risks. Ontario: Health Canada.

IOM (Institute of Medicine). 2007. Nutrition standards for schools: Leading the way toward healthier youth. Edited by V. A. Stallings and A. L. Yaktine. Washington, DC: The National Academies Press.

IOM. 2009. Local government actions to prevent childhood obesity. Edited by L. Parker, A. C. Burns, and E. Sanchez. Washington, DC: The National Academies Press.

Jacobs, F. 2003. Child and family program evaluation: Learning to enjoy complexity. Applied Developmental Science 7(2):62-75.

Jilcott, S., A. Ammerman, J. Sommers, and R. E. Glasgow. 2007. Applying the RE-AIM framework to assess the public health impact of policy change. Annals of Behavioral Medicine 34(2):105-114.

Kaiser Permanente Institute for Health Research. 2010. What is RE-AIM? http://www.re-aim.org/whatisREAIM/index.html (accessed March 9, 2010).

Kerner, J., B. Rimer, and K. Emmons. 2005. Introduction to the special section on dissemination—dissemination research and research dissemination: How can we close the gap? Health Psychology 24(5):443-446.

Kreuter, M. W., C. De Rosa, E. H. Howze, and G. T. Baldwin. 2004. Understanding wicked problems: A key to advancing environmental health promotion. Health Education and Behavior 31(4):441-454.

Leischow, S. J., A. Best, W. M. Trochim, P. I. Clark, R. S. Gallagher, S. E. Marcus, and E. Matthews. 2008. Systems thinking to improve the public’s health. American Journal of Preventive Medicine 35(2, Supplement 1):S196-S203.

Martin, J. B., L. W. Green, and A. C. Gielen. 2007. Potential lessons from public health and health promotion for the prevention of child abuse. Journal of Prevention and Intervention in the Community 34(1-2):205-222.

Mercer, S. L., L. K. Khan, L. W. Green, A. C. Rosenthal, R. Nathan, C. G. Husten, and W. H. Dietz. 2005. Drawing possible lessons for obesity prevention and control from the tobacco control experience. In Obesity prevention and public health, edited by D. Crawford and R. W. Jeffery. London: Oxford University Press, Inc. Pp. 231-251.

Montori, V. M., and G. H. Guyatt. 2001. Intention-to-treat principle. Canadian Medical Association Journal 165(10):1339-1341.

Pawson, R., T. Greenhalgh, G. Harvey, and K. Walshe. 2005. Realist review—a new method of systematic review designed for complex policy interventions. Journal of Health Services Research and Policy 10(Supplement 1):21-34.

Raczynski, J. M., J. W. Thompson, M. M. Phillips, K. W. Ryan, and H. W. Cleveland. 2009. Arkansas Act 1220 of 2003 to reduce childhood obesity: Its implementation and impact on child and adolescent body mass index. Journal of Public Health Policy 30(Supplement 1):S124-S140.

Rychetnik, L., P. Hawe, E. Waters, A. Barratt, and M. Frommer. 2004. A glossary for evidence based public health. Journal of Epidemiology and Community Health 58(7):538-545.

Sege, R. D., and E. De Vos. 2008. Care for children and evidence-based medicine. Pediatric Annals 37(3):168-172.

Shrier, I., J. F. Boivin, R. J. Steele, R. W. Platt, A. Furlan, R. Kakuma, J. Brophy, and M. Rossignol. 2007. Should meta-analyses of interventions include observational studies in addition to randomized controlled trials? A critical examination of underlying principles. American Journal of Epidemiology 166(10):1203-1209.

Siegel, M., P. D. Mowery, T. P. Pechacek, W. J. Strauss, M. W. Schooley, R. K. Merritt, T. E. Novotny, G. A. Giovino, and M. P. Eriksen. 2000. Trends in adult cigarette smoking in California compared with the rest of the United States, 1978-1994. American Journal of Public Health 90(3):372-379.

Swinburn, B., T. Gill, and S. Kumanyika. 2005. Obesity prevention: A proposed framework for translating evidence into action. Obesity Reviews 6(1):23-33.

Texas Department of State Health Services. 2006. Texas obesity policy portfolio. Austin, TX: Center for Policy and Innovation.

UK Government Foresight Programme. 2007. Tackling obesities: Future choices—qualitative modelling of policy options. London, UK: Crown.

Washington State Department of Health. 2005. Nutrition and physical activity: A policy resource guide. http://www.dshs.state.tx.us/obesity/pdf/WAnutritionphysicalactivityguide.pdf (accessed December 1, 2009).