Summary of the Symposium

In 2007, a committee of the National Research Council (NRC) proposed a vision that embraced recent scientific advances and set a new course for toxicity testing (NRC 2007a). The committee envisioned a new paradigm in which biologically important perturbations in key toxicity pathways would be evaluated with new methods in molecular biology, bioinformatics, computational toxicology, and a comprehensive array of in vitro tests based primarily on human biology. Although some view the vision as too optimistic with respect to the promise of the new science and debate the time required to implement the vision, no one can deny that a revolution in toxicity testing is under way. New approaches are being developed, and data are being generated. As a result, the U.S. Environmental Protection Agency (EPA) expects a large influx of data that will need to be evaluated. EPA also is faced with tens of thousands of chemicals on which toxicity information is incomplete and emerging chemicals and substances that will need risk assessment and possible regulation. Therefore, the agency asked the NRC Standing Committee on Risk Analysis Issues and Reviews to convene a symposium to stimulate discussion on the application of the new approaches and data in risk assessment.

The standing committee was established in 2006 at the request of EPA to plan and conduct a series of public workshops that could serve as a venue for discussion of issues critical for the development and review of objective, realistic, and scientifically based human health risk assessment. An ad hoc planning committee was formally appointed under the oversight of the standing committee to organize and conduct the symposium. The biographies of the standing committee and planning committee members are provided in Appendixes A and B, respectively.

The symposium was held on May 11-13, 2009, in Washington, DC, and included presentations and discussion sessions on pathway-based approaches for hazard identification, applications of new approaches to mode-of-action analyses, the challenges to and opportunities for risk assessment in the changing paradigm, and future directions. The symposium agenda, speaker and panelist biographies, and presentations are provided in Appendixes C, D, and E, respectively. The symposium also included a poster session to showcase examples of how new technologies might be applied to quantitative and qualitative aspects of risk assessment. The poster abstracts are provided in Appendix F. This summary provides the highlights of the presentations and discussions at the symposium. Any views expressed here are those of the individual committee members, presenters, or other symposium participants and do not reflect any findings or conclusions of the National Academies.

A PARADIGM CHANGE ON THE HORIZON

Warren Muir, of the National Academies, welcomed the audience to the symposium and stated that the environmental-management paradigm of the 1970s is starting to break down with recent scientific advances and the exponential growth of information and that the symposium should be seen as the first of many discussions on the impact of advances in toxicology on risk assessment. He introduced Bernard Goldstein, of the University of Pittsburgh, chair of the Standing Committee on Risk Analysis Issues and Reviews, who stated that although the standing committee does not make recommendations, symposium participants should feel free to suggest how to move the field forward and to make research recommendations. Peter Preuss, of EPA, concluded the opening remarks and emphasized that substantial changes are on the horizon for risk assessment. The agency will soon be confronted with enormous quantities of data from high-throughput testing and as a result of the regulatory requirements of the REACH (Registration, Evaluation, Authorisation and Restriction of Chemicals) program in Europe that requires testing of thousands of chemicals. He urged the audience to consider the question, What is the future of risk assessment?

Making Risk Assessment More Useful in an Era of Paradigm Change

E. Donald Elliott, of Yale Law School and Willkie Farr & Gallagher LLP, addressed issues associated with acceptance and implementation of the new pathway approaches that will usher in the paradigm change. He emphasized that simply building a better mousetrap does not ensure its use, and he provided several examples in which innovations, such as movable type and the wheel, were not adopted until centuries later. He felt that ultimately innovations must win the support of a user community to be successful, so the new tools and approaches should be applied to problems that the current paradigm has difficulty in addressing. Elliott stated that the advocates of pathway-based toxicity testing should illustrate how it can address the needs of a user community, such as satisfying data requirements for REACH; providing valuable information on sensitive populations; evaluating materials, such as nanomaterials, that are not easily evaluated in typical animal models; and demonstrating that fewer animal tests are needed if the approaches are applied. He warned, however, that the new approaches will not be as influential if they are defined as merely less expensive screening techniques.

Elliott continued by saying that the next steps needed to effect the paradigm change will be model evaluation and judicial acceptance. NRC (2007b) and Beck (2002) set forth a number of questions to consider in evaluating a model, such as whether the results are accurate and represent the system being modeled. The standards for judicial acceptance in agency reviews and private damage cases are different. The standards for agency reviews are much more lenient than those in private cases, in which a judge must determine whether an expert’s testimony is scientifically valid and applicable. Accordingly, the best approach for judicial acceptance would be to have a record established on the basis of judicial review of agency decisions, in which a court generally defers to the agency when decisions involve determinations at the frontiers of science. Elliott stated that the key issue is to create a record showing that the new approach works as well as or better than existing methods in a particular regulatory application. He concluded, however, that the best way to establish acceptance might be for EPA to use its broad rule-making authority under Section 4 of the Toxic Substances Control Act to establish what constitutes a valid testing method in particular applications.

Emerging Science and Public Health

Lynn Goldman, of Johns Hopkins Bloomberg School of Public Health, a member of the standing committee and the planning committee, discussed the public-health aspects of the emerging science and potential challenges. She agreed with Elliott that a crisis is looming, given the number of chemicals that need to be evaluated and the perception that the process for ensuring that commercial chemicals are safe is broken and needs to be re-evaluated. The emerging public-health issues are compounding the sense of urgency in that society will not be able to take 20 years to make decisions. Given the uncertainties surrounding species extrapolation, dose extrapolation, and evaluation of sensitive populations today, the vision provided in the NRC report Toxicity Testing in the 21st Century: A Vision and a Strategy offers tremendous promise. However, Goldman used the example of EPA’s Endocrine Disruptor Screening Program as a cautionary tale. In 1996, Congress passed two laws, the Food Quality Protection Act and the Safe Drinking Water Act, that directed EPA to develop a process for screening and testing chemicals for endocrine-disruptor potential. Over 13 years, while three advisory committees have been formed, six policy statements have been issued, and screening tests have been modified four times, no tier 2 protocols have been approved, and only one list of 67 pesticides to be screened has been generated. One of the most troubling aspects is that most of the science is now more than 15 years old. EPA lacked adequate funding, appropriate expertise, enforceable expectations by Congress, and the political will to push the program forward. The fear that a chemical would be blacklisted on the basis of a screening test and the “fatigue factor,” in which supporters eventually tire and move on to other issues, compounded the problems. Goldman suggested that the following lessons should be learned from the foregoing example: support is needed from stakeholders, administration, and Congress for long-term investments in people, time, and resources to develop and implement new toxicity-testing approaches and technologies; strong partnerships within the agency and with other agencies, such as the National Institutes of Health (NIH), are valuable; new paradigms will not be supported unless there are convincing proof-of-concept and verification studies; and new processes are needed to move science into regulatory science more rapidly. Goldman concluded that the new approaches and technologies have many potential benefits, including improvement in the ability to identify chemicals that have the greatest potential for risk, the generation of more scientifically relevant data on which to base decisions, and improved strategies of hazard and risk management. However, she warned that resources are required to implement the changes: not only funding but highly trained scientists will be needed, and the pipeline of scientists who will be qualified and capable of doing the work needs to be addressed.

Toxicity Testing in the 21st Century

Kim Boekelheide, of Brown University, who was a member of the committee responsible for the report Toxicity Testing in the 21st Century: A Vision and a Strategy, reviewed the report and posed several questions to consider throughout the discussion in the present symposium. The committee was formed when frustration with toxicity-testing approaches was increasing. Boekelheide cited various problems with toxicity-testing approaches, including low throughput, high cost, questionable relevance to actual human risks, use of conservative defaults, and reliance on animals. Thus, the committee was motivated by the following design criteria for its vision: to provide the broadest possible coverage of chemicals, end points, and life stages; to reduce the cost and time of testing; to minimize animal use and suffering; and to develop detailed mechanistic and dose-response information for human health risk assessment. The committee considered several options, which are summarized in Table 1. Option I was essentially the status quo, option II was a tiered approach, and options III and IV were fundamental shifts in the current approaches. Although the committee acknowledged option IV as the ultimate goal for toxicity testing, it chose option III to represent the vision for the next 10-20 years. That approach is a fundamental shift: one that is based primarily on human biology, covers a broad range of doses, is mostly high-throughput, is less expensive and time-consuming, uses substantially fewer animals, and focuses on perturbations of critical cellular responses.

TABLE 1 Options for Future Toxicity-Testing Strategies Considered by the NRC Committee on Toxicity Testing and Assessment of Environmental Agents

| Option I In Vivo | Option II Tiered In Vivo | Option III In Vitro and In Vivo | Option IV In Vitro |
|---|---|---|---|
| Animal biology | Animal biology | Primarily human biology | Primarily human biology |
| High doses | High doses | Broad range of doses | Broad range of doses |
| Low throughput | Improved throughput | High and medium throughput | High throughput |
| Expensive | Less expensive | Less expensive | Less expensive |
| Time-consuming | Less time-consuming | Less time-consuming | Less time-consuming |
| Relatively large number of animals | Fewer animals | Substantially fewer animals | Virtually no animals |
| Apical end points | Apical end points | Perturbations of toxicity pathways | Perturbations of toxicity pathways |
| | Some in silico and in vitro screens | In silico screens possible | In silico screens |

Source: Modified from NRC 2007a. K. Boekelheide, Brown University, presented at the symposium.

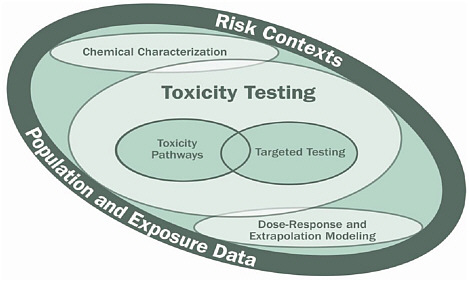

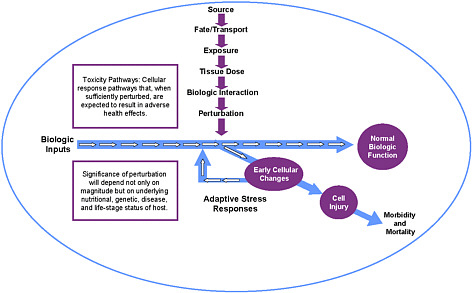

Boekelheide described the components of the vision, which are illustrated in Figure 1. The core component is toxicity testing, in which toxicity-pathway assays play a dominant role. The committee defined a toxicity pathway as a cellular-response pathway that, when sufficiently perturbed, is expected to result in an adverse health effect (see Figure 2), and it envisioned a toxicity-testing system that evaluates biologically important perturbations in key toxicity pathways by using new methods in computational biology and a comprehensive array of in vitro tests based on human biology. Boekelheide noted that since release of the report, rapid progress in human stem-cell biology, better accessibility to human cells, and development of bioengineered tissues have made the committee’s vision more attainable. He also noted that the toxicity-pathway approach moves away from extrapolation from high dose to low dose and from animals to humans but involves extrapolation from in vitro to in vivo and between levels of biologic organization. Thus, there will be a need to build computational systems-biology models of toxicity-pathway circuitry and pharmacokinetic models that can predict human blood and tissue concentrations under specific exposure conditions.

FIGURE 1 Components of the vision described in the report, Toxicity Testing in the 21st Century: A Vision and a Strategy. Source: NRC 2007a. K. Boekelheide, Brown University, presented at the symposium.

FIGURE 2 Perturbation of cellular response pathway, leading to adverse effects. Source: Modified from NRC 2007a. K. Boekelheide, Brown University, modified from symposium presentation.

Boekelheide stated that the vision offers a toxicity-testing system more focused on human biology with more dose-relevant testing and the possibility of addressing many of the frustrating problems in the current system. He listed some challenges with the proposed vision, including development of assays for the toxicity pathways, identification and testing of metabolites, use of the results to establish safe levels of exposure, and training of scientists and regulators to use the new science. Boekelheide concluded by asking several questions for consideration throughout the symposium program: How long will it take to implement the new toxicity-testing paradigm? How will adaptive responses be distinguished from adverse responses? Is the proposed approach a screening tool or a stand-alone system? How will the new paradigm be validated? How will new science be incorporated? How will regulators handle the transition in testing?

Symposium Issues and Questions

Lorenz Rhomberg, of Gradient Corporation, a member of the standing committee and chair of the planning committee, closed the first session by providing an overview of issues and questions to consider throughout the symposium. Rhomberg stated that the new tools will enable and require new approaches. Massive quantities of multivariate data are being generated, and this poses challenges for data handling and interpretation. The focus is on “normal” biologic control and processes and the effects of perturbations on those processes, and a substantial investment will be required to improve understanding in fundamental biology. More important, our frame of reference has shifted dramatically: traditional toxicology starts with the whole organism, observes apical effects, and then tries to explain the effects by looking at changes at lower levels of biologic organization, whereas the new paradigm looks at molecular and cellular processes and tries to explain what the effects on the whole organism will be if the processes are perturbed.

People have different views on the purposes and applications of the new tools. For example, some want to use them to screen out problematic chemicals in drug, pesticide, or product development; to identify chemicals for testing and the in vivo testing that needs to be conducted; to establish testing priorities for data-poor chemicals; to identify biomarkers or early indicators of exposure or toxicity in the traditional paradigm; or to conduct pathway-based evaluations of causal processes of toxicity. Using the new tools will pose challenges, such as distinguishing between causes and effects, dissecting complicated networks of pathways to determine how they interact, and determining which changes are adverse effects rather than adaptive responses. However, the new tools hold great promise, particularly for examining how variations in the population affect how people react to various exposures.

Rhomberg concluded with some overarching questions to be considered throughout the symposium: What are the possibilities of the new tools, and how do we realize them? What are the pitfalls, and how can we avoid them? How is the short-term use of the new tools different from the ultimate vision? When should the focus be on particular pathways rather than on interactions, variability, and complexity? How is regulatory and public acceptance of the new paradigm to be accomplished?

THE NEW SCIENCE

An Overview

John Groopman, of Johns Hopkins Bloomberg School of Public Health, began the discussion of the new science by providing several examples of how it has been used. He first discussed the Keap1-Nrf2 signaling pathway, which is sensitive to a variety of environmental stressors. Keap1-Nrf2 signaling pathways have been investigated by using knockout animal models, and the investigations have provided insight into how the pathways modulate disease outcomes. Research has shown that different stressors in Nrf2 knockout mice affect different organs; that is, one stressor might lead to a liver effect, and another to a lung effect. Use of knockout animals has allowed scientists to tease apart some of the pathway integration and has shown that the signaling pathways can have large dose-response curves—in the 20,000-fold range—in response to activation.

Groopman stated, however, that some of the research has provided cautionary tales. For example, when scientists evaluated the value of an aflatoxin-albumin biomarker to predict which rats were at risk for hepatocellular carcinoma, they found that the biomarker concentration tracked with the disease at the population level but not in the individual animals. Thus, one may need to be wary of the predictive value of a single biomarker for a complex disease. In another case, scientists thought that overexpression of a particular enzyme in a signaling pathway would lead to risk reduction, but they found that transgene overexpression had no effect on tumor burden. Overall, the research suggests that a reductionist approach might not work for complex diseases. Groopman acknowledged substantial increases in the sensitivity of mass spectrometry over the last 10 years but noted that the throughput in many cases has not increased, and this is often an underappreciated and underdiscussed aspect of the new paradigm.

Groopman concluded by discussing the recent data on cancer genomes. Sequence analysis of cancer genomes has shown that different types of cancer, such as breast cancer and colon cancer, are not the same disease, and although there are common mutations within the same cancer type, the disease differs among individuals. Through sequence analysis, the number of confirmed genetic contributors to common human diseases has increased dramatically since 2000. Genome-wide association studies have shown that many alleles have modest

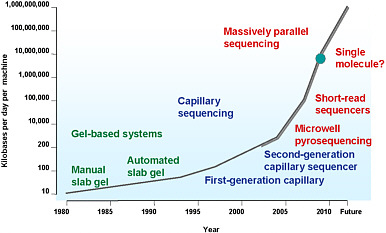

effects in disease outcomes, that many genes are involved in each disease, that most genes that have been shown to be involved in human disease were not predicted on the basis of current biologic understanding, and that many risk factors are in noncoding regions of the genome. Sequencing methods and technology have improved dramatically, and researchers who once dreamed of sequencing the human genome in a matter of years can now complete the task in a matter of days (see Figure 3). Groopman concluded by stating that the sequencing technology needs to be extended to experimental models so that questions about the concordance between effects observed in people and those observed in experimental models can be answered.
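The "matter of days" claim can be checked against the numbers quoted in the Figure 3 caption (1-2 billion bases per machine per day, 3 billion bases in the human genome). The following is a rough sketch only: it assumes a single machine reading the genome exactly once, whereas real sequencing projects read each base many times over, so the actual machine time would be correspondingly longer. The function and variable names are illustrative.

```python
# Back-of-envelope check of the sequencing rates quoted in the Figure 3 caption.
GENOME_BASES = 3e9          # human genome size quoted in the caption
OUTPUT_RANGE = (1e9, 2e9)   # bases per machine per day quoted in the caption


def days_for_one_pass(genome_bases: float, bases_per_day: float) -> float:
    """Days for one machine to read the genome once (assumes single-pass coverage)."""
    return genome_bases / bases_per_day


slowest = days_for_one_pass(GENOME_BASES, OUTPUT_RANGE[0])
fastest = days_for_one_pass(GENOME_BASES, OUTPUT_RANGE[1])
print(f"{fastest:.1f} to {slowest:.1f} machine-days per single pass")
```

Under these assumptions a single pass takes between 1.5 and 3 machine-days, which is consistent with the order of magnitude described in the text.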

Gene-Environment Interactions

George Leikauf, of the University of Pittsburgh, discussed the current understanding of gene-environment interactions. In risk assessment, human variability and susceptibility are considered, and an uncertainty factor of 10 has traditionally been used to account for these factors. However, new tools available today are helping scientists to elucidate gene-environment interactions, and this research may provide a more scientific basis for evaluating human variability and susceptibility in the context of risk assessment. Leikauf noted that genetic disorders, such as sickle-cell anemia and cystic fibrosis, and environmental disorders, such as asbestosis and pneumoconiosis, cause relatively few deaths compared with complex diseases that are influenced by many genetic and environmental factors. Accordingly, it is the interaction between genome and environment that needs to be elucidated in the case of complex diseases.

FIGURE 3 DNA sequencing output. Current output is 1-2 billion bases per machine per day. The human genome contains 3 billion bases. Source: Stratton et al. 2009. Reprinted with permission; copyright 2009, Nature. J. Groopman, Johns Hopkins Bloomberg School of Public Health, presented at the symposium.

Leikauf continued, saying that evaluating genetic factors that affect pharmacokinetics or pharmacodynamics can provide valuable information for risk assessment. For example, genetic variations that lead to differences in carrier or transporter proteins can affect chemical absorption rates and determine the magnitude of a chemical’s effect on the body. Genetic variations that lead to differences in metabolism may also alter a chemical’s effect on the body. For example, if someone’s metabolism is such that a chemical is quickly converted to a reactive intermediate and then slowly eliminated, the person may be at greater risk because of the longer residence time of the reactive intermediate in the body. Thus, the relative rates of absorption and metabolism can be used to evaluate variability and susceptibility and can provide some scientific basis for selection of uncertainty factors. Leikauf noted, however, that determining the physiologic and pharmacologic consequences of the many genetic polymorphisms is difficult. He discussed several challenges to using genetic information to predict outcome. For example, not all genes are expressed or cause a given phenotype even if they are expressed. Thus, knowing one particular polymorphism does not mean knowing the likelihood of an outcome. Leikauf concluded, however, that the next step in genetics is to use the powerful new tools to understand the complexity and how it leads to diversity.
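The point about a reactive intermediate that forms quickly but is eliminated slowly can be illustrated with a very simple kinetic sketch. It assumes a two-step chain (parent chemical to intermediate to eliminated product) in which both steps are first-order and all of the parent is converted; the rate constants and the two "individuals" are invented for illustration and do not describe any real chemical or genotype.

```python
import math


def intermediate_amount(t, dose, k_form, k_elim):
    """Amount of reactive intermediate at time t.

    Assumed model: parent -> intermediate -> eliminated, both steps first-order.
    Requires k_form != k_elim.
    """
    scale = dose * k_form / (k_elim - k_form)
    return scale * (math.exp(-k_form * t) - math.exp(-k_elim * t))


def intermediate_exposure(dose, k_elim):
    """Area under the intermediate's amount-time curve (total exposure)."""
    return dose / k_elim


# Two hypothetical individuals given the same unit dose (rates per hour, invented).
people = {
    "fast formation, slow elimination": {"k_form": 2.0, "k_elim": 0.1},
    "slow formation, fast elimination": {"k_form": 0.5, "k_elim": 1.0},
}
for label, rates in people.items():
    exposure = intermediate_exposure(1.0, rates["k_elim"])
    residence = 1.0 / rates["k_elim"]  # mean residence time of the intermediate
    print(f"{label}: exposure={exposure:.1f}, residence time={residence:.1f} h")
```

In this simplified model the total exposure to the intermediate depends only on how slowly it is eliminated, while the formation rate shapes how early and how high the peak is. A physiologically based pharmacokinetic model would add compartments, saturation, and competing pathways that this sketch ignores.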

Tools and Technologies for Pathway-Based Research

Ivan Rusyn, of the University of North Carolina at Chapel Hill, discussed various tools and technologies that are now available for pathway-based research. He noted that the genomes of more than 180 organisms have been sequenced since 1995 and that although determining genetic sequence is important, understanding how we are different from one another may be more important. High-throughput sequencing—some of which can provide information on gene regulation and control by incorporating transcriptome analysis—has enabled the genome-wide association studies already discussed at the symposium and has provided valuable information on experimental models, both whole-animal and in vitro systems.

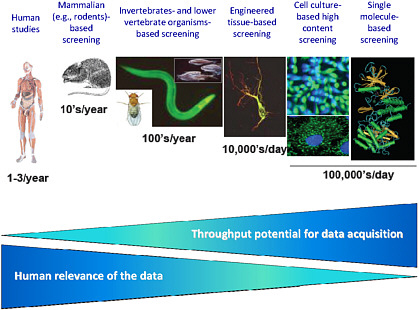

Rusyn described various tools and technologies available at the different levels of biologic organization and noted that the throughput potential for data acquisition diminishes as data relevance increases (see Figure 4). Single-molecule-based screening can involve cell-free systems or cell-based systems. In the case of cell-free systems, many of the concepts have been known for decades, but technologic advances have enabled researchers to evaluate classes of proteins, transporters, nuclear receptors, and other molecules and to screen hundreds of chemicals in a relatively short time. Miniaturization of cell-based systems has allowed researchers to create high-throughput formats that allow evaluation of P450 inhibition, metabolic stability, cellular toxicity, and enzyme induction. Screening with cell cultures has advanced rapidly as a result of robotic technologies and high-content plate design, and concentration-response profiles on multiple phenotypes can now be generated quickly. Much effort is being invested in developing engineered tissue assays, some of which are being used by the pharmaceutical industry as screening tools. Finally, screening that uses invertebrates, such as Caenorhabditis elegans, and lower vertebrates, such as zebrafish, has been used for years, but scientists now have the ability to generate transgenic animals and to screen environmental chemicals in high-throughput or medium-throughput formats to evaluate phenotypes.

Rusyn described seminal work with knock-out strains of yeast that advanced pathway-based analysis (see, for example, Begley et al. 2002; Fry et al. 2005). High-throughput screens were used to identify pathways involved in response to chemicals that damaged DNA. Since then, multiple transcription-factor analyses have further advanced our knowledge of important pathways and have allowed scientists to rediscover “old” biology with new tools and technology. Rusyn noted, however, that it is difficult to go from gene expression to a whole pathway. A substantial volume of data is being generated, and the major challenge is to integrate all the data—chemical, traditional toxicologic, –omics, and high-throughput screening data—to advance our biologic understanding. Rusyn concluded that the complexity of science today creates an urgent need to train new scientists and develop new interdisciplinary graduate programs.

FIGURE 4 Throughput potential for data acquisition as related to levels of biologic organization. As human relevance increases, the throughput potential decreases. Source: NIEHS, unpublished data. I. Rusyn, University of North Carolina at Chapel Hill, presented at the symposium.

PATHWAY-BASED APPROACHES FOR HAZARD IDENTIFICATION

ToxCast: Redefining Hazard Identification

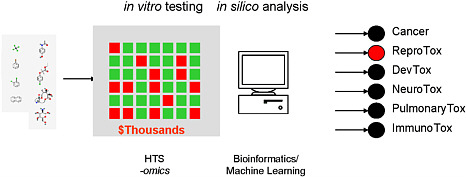

Robert Kavlock, of EPA, opened the afternoon session by discussing ToxCast, an EPA research program. He stated that a substantial problem is the lack of data on chemicals. In a recent survey (Judson et al. 2009), EPA identified about 10,000 high-priority chemicals in EPA’s program offices; found huge gaps in data on cancer, reproductive toxicity, and developmental toxicity; and found no evidence in the public domain of safety or hazard data on more than 70% of the identified chemicals. Kavlock noted that this problem is not restricted to the United States; a better job must also be done internationally to eliminate the chemical information gap. He emphasized that at this stage, priorities must be set for testing of the chemicals. The options include conducting more animal studies, using exposure as a priority-setting metric, using structure-activity models, and using bioactivity profiling, which would screen chemicals by using high-throughput technologies (see Figure 5). The ToxCast program was designed to implement the fourth option, and the name attempts to capture the key goals of the program: to cast a broad net to capture the bioactivity of the chemicals and to try to forecast the toxicity of the chemicals. The ToxCast program is part of EPA’s contribution to the Tox21 Consortium (Collins et al. 2008), a partnership of the NIH Chemical Genomics Center (NCGC), EPA’s Office of Research and Development, and the National Toxicology Program (NTP) to advance the vision proposed in the NRC report (NRC 2007a). Kavlock also noted that EPA responded to that report by issuing a strategic plan for evaluating the toxicity of chemicals that included three goals: identifying toxicity pathways and using them in screening, using toxicity pathways in risk assessment, and making an institutional transition to incorporate the new science.

FIGURE 5 Illustration of bioactivity profiling using high-throughput technologies to screen chemicals. Source: EPA 2009. R. Kavlock, U.S. Environmental Protection Agency, presented at the symposium.

Kavlock provided further details on the ToxCast program. It is a research program that was started by the National Center for Computational Toxicology and was developed to address the chemical screening and priority-setting needs for inert pesticide components, antimicrobial agents, drinking-water contaminants, and high- and medium-production-volume chemicals. The ToxCast program is currently the most comprehensive use of high-throughput technologies, at least in the public domain, to elucidate predictive chemical signatures. It is committed to stakeholder involvement and public release of the data generated. The program components are identifying toxicity pathways, developing high-throughput assays for them, screening chemical libraries, and linking the results to in vivo effects. Each component involves challenges, such as incorporating metabolic capabilities into the assays, determining whether to link assay results to effects found in rodent toxicity studies or to human toxicity, and predicting effective in vivo concentrations from effective in vitro concentrations. Kavlock described the three phases of the program (see Table 2) and noted that it is completing the first phase, proof-of-concept, and preparing for the second phase, which involves validation. He mentioned that it has developed a relational database, ToxRefDB, that contains animal toxicology data that will serve as the in vivo “anchor” for the ToxCast predictions.

Kavlock stated that 467 biochemical and cellular assays (see Table 3) are being used to evaluate chemicals, but the expectation is that a larger number of assays will eventually be used. Multiple assays and technologies are used to evaluate each end point, and initial results have been positive in that the results agree with what is known about the chemicals being tested. Kavlock concluded that the future of screening is here, and the challenge is to interpret all the data being generated. He predicted that the first application will be use of the data to set priorities among chemicals for targeted testing and that application to risk assessment will follow as more knowledge is gained from its initial use.
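To make the idea of using screening data to set testing priorities concrete, the sketch below ranks chemicals by how many assays they are active in and, as a tie-breaker, by their most potent response. This is not the ToxCast method; the scoring rule, the chemical and assay names, and every number are invented for illustration, and a real scheme would also weigh exposure, assay reliability, and pathway relevance.

```python
from typing import Dict, List, Optional

# Hypothetical screening results: an activity concentration (uM) per assay,
# or None when the chemical was inactive. Names and values are invented.
RESULTS: Dict[str, Dict[str, Optional[float]]] = {
    "chem_A": {"assay_1": 3.0, "assay_2": None, "assay_3": 12.0},
    "chem_B": {"assay_1": None, "assay_2": None, "assay_3": 45.0},
    "chem_C": {"assay_1": 0.8, "assay_2": 5.0, "assay_3": 2.0},
}


def rank_for_testing(results: Dict[str, Dict[str, Optional[float]]]) -> List[str]:
    """Order chemicals from highest to lowest priority for targeted testing.

    Assumed rule: more active assays first; ties broken by the lowest
    (most potent) activity concentration.
    """
    def sort_key(chemical: str):
        active = [v for v in results[chemical].values() if v is not None]
        most_potent = min(active) if active else float("inf")
        return (-len(active), most_potent)

    return sorted(results, key=sort_key)


print(rank_for_testing(RESULTS))  # ['chem_C', 'chem_A', 'chem_B']
```

The ranking is only as meaningful as the rule behind it, which is why the text stresses that interpretation of the data, not its generation, is the main challenge.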

Practical Applications: Pharmaceuticals

William Pennie, of Pfizer, discussed screening approaches in the pharmaceutical industry and provided several examples of their use. Pennie noted that implementing new screening paradigms and using in silico and in vitro approaches may be easier in the pharmaceutical industry because of the ultimate purpose—screening out unpromising drug candidates early in the research phase as opposed to screening in environmental chemicals whose toxicity needs to be evaluated. Huge challenges are still associated with using these approaches in the pharmaceutical industry, and Pennie emphasized that academe, regulatory agencies, and industries need to collaborate to build the infrastructure needed. Otherwise, only incremental change will be made in developing and implementing pathway-based approaches.

TABLE 2 Phased Development of ToxCast Program

| Phase^a | Number of Chemicals | Chemical Criteria | Purpose | Number of Assays | Cost per Chemical | Target Date |
|---|---|---|---|---|---|---|
| Ia | 320 | Data-rich (pesticides) | Signature development | >500 | $20,000 | FY 2007-2008 |
| Ib | 15 | Nanomaterials | Pilot | 166 | $10,000 | FY 2009 |
| IIa | >300 | Data-rich chemicals | Validation | >400 | ~$20,000-25,000 | FY 2009 |
| IIb | >100 | Known human toxicants | Extrapolation | >400 | ~$20,000-25,000 | FY 2009 |
| IIc | >300 | Expanded structure and use diversity | Extension | >400 | ~$20,000-25,000 | FY 2010 |
| IId | >12 | Nanomaterials | PMN | >200 | ~$15,000-20,000 | FY 2009-2010 |
| III | Thousands | Data-poor | Prediction and priority-setting | >300 | ~$15,000-20,000 | FY 2011-2012 |

^a Since the symposium, phases IIa, IIb, and IIc have been merged into a single endeavor.

Source: R. Kavlock, U.S. Environmental Protection Agency, presented at the symposium.

TABLE 3 Types of ToxCast Assays

| Biochemical Assays | Cellular Assays |
|---|---|
| GPCR; NR; Kinase; Phosphatase; Protease; Other enzyme; Ion channel; Transporter | HepG2 human hepatoblastoma; A549 human lung carcinoma; HEK 293 human embryonic kidney |
| | Human endothelial cells; Human monocytes; Human keratinocytes; Human fibroblasts; Human renal proximal tubule cells; Human small-airway epithelial cells |
| Radioligand binding; Enzyme activity; Coactivator recruitment | |
| | Primary rat hepatocytes; Primary human hepatocytes |
| | Cytotoxicity; Reporter gene; Gene expression; Biomarker production; High-content imaging for cellular phenotype |

Source: R. Kavlock, U.S. Environmental Protection Agency, presented at the symposium.

Pennie stated that some of the pathway-based approaches have been applied more successfully in the later stages of drug development than in the early, drug-discovery phase. One problem in developing the new approaches is that scientists often focus on activation of one pathway rather than considering the complexity of the system. Pennie stated that pathway knowledge should be added to a broader understanding of the biology; thus, the focus should be on a combination of properties rather than on one specific feature. Although the pharmaceutical industry is currently using in vitro assays that are typically functional end-point assays, Pennie noted that there is no reason why those assays could not be supplemented or replaced with pathway-based assays, given a substantial investment in validation. He said that the industry is focusing on using batteries of in vitro assays to predict in vivo outcomes, similar to the ToxCast program, and described an effort at Pfizer to develop a single-assay platform that would evaluate multiple end points simultaneously and provide a single predictive score for hepatic injury. Seven assays were evaluated by using 500 compounds that spanned the classes of hepatic toxicity. Researchers were able to develop a multiparameter optimization model that determined the combination of assays that would yield the most predictive power. On the basis of that analysis, they identified a combination of assays (a general cell-viability assay followed by an optimized imaging-based hepatic platform that measured several end points) that resulted in over 60% sensitivity and about 90% specificity. Pennie emphasized the value of integrating the pathway information into the testing cascade. If an issue is identified with a chemical, that knowledge can guide the in vivo testing and, instead of a fishing expedition, scientists can test a hypothesis. Pfizer has also developed a multiparameter optimization model that uses six physicochemical properties to characterize permeability, clearance, and safety and that helps to predict the success of a drug candidate. Pennie concluded by saying that the future challenge is to develop prediction models that combine data from multiple sources (that is, structural-alert data, physicochemical data, in vitro test data, and in vivo study data) to provide a holistic view of compound safety.
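For readers less familiar with the performance measures quoted for the hepatic-injury assay combination, the following minimal sketch shows how sensitivity and specificity are computed from a screening outcome table. The function name and the counts are hypothetical and chosen only so that the result matches the approximate figures reported above; they are not Pfizer data.

```python
def sensitivity_specificity(true_pos, false_neg, true_neg, false_pos):
    """Return (sensitivity, specificity) for a binary screening assay.

    sensitivity = fraction of truly toxic compounds flagged by the assay
    specificity = fraction of truly nontoxic compounds cleared by the assay
    """
    sensitivity = true_pos / (true_pos + false_neg)
    specificity = true_neg / (true_neg + false_pos)
    return sensitivity, specificity


# Hypothetical counts for illustration only (not data from the symposium):
# 100 known hepatotoxicants and 100 known nontoxicants.
sens, spec = sensitivity_specificity(true_pos=62, false_neg=38,
                                     true_neg=90, false_pos=10)
print(f"sensitivity = {sens:.2f}, specificity = {spec:.2f}")
```

With these illustrative counts, roughly four in ten true hepatotoxicants would be missed by the screen while only one in ten safe compounds would be wrongly flagged, which is one way to read a profile of "over 60% sensitivity and about 90% specificity."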

Practical Applications: Consumer Products

George Daston, of Procter and Gamble, discussed harnessing the available computational power to support new approaches to toxicology to solve some problems in the consumer-products industry. He noted that the new paradigm is a shift from outcome-driven toxicology, in which models are selected to evaluate a particular disease state without knowledge about the events from exposure to outcome, to mechanism-driven toxicology, in which scientists seek answers to several questions: How does the chemical interact with the system? What is the mechanism of action? How can we predict what the outcome would be on the basis of the mechanism? The transition to mechanism-driven toxicology will be enabled by the 50 years of data from traditional toxicology, the ability to do high-throughput and high-content biology, and the huge computational power currently available.

Daston provided two examples of taking advantage of today’s computational power. First, his company needed a system to evaluate chemicals without testing every new chemical entity to make initial predictions about safety. A chemical database was developed to search chemical substructures to identify analogues that might help to predict the toxicity of untested chemicals. A process was then developed in which first a chemist reviews a new compound and designs a reasonable search strategy, then the computer is used to search enormous volumes of data for specific patterns, and finally the output is evaluated according to expert rules based on physical chemistry, metabolism, reactivity, and toxicity to support testing decisions. Daston mentioned several public databases (DSSTox, ACTOR, and ToxRefDB) that are available for conducting similar searches and emphasized the importance of public data-sharing.

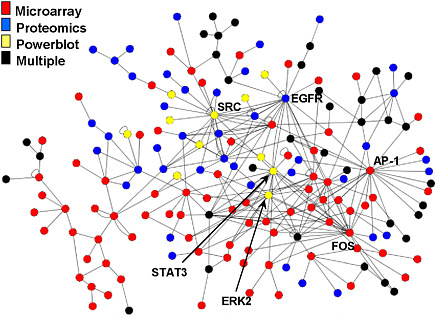

His second example involved analysis of high-content datasets from microarrays in which all potential mechanisms of action of a particular chemical are evaluated as changes in gene expression. That approach complements the one discussed by Kavlock for the ToxCast program in that it is a detailed analysis of one assay rather than a scan of multiple types of assays. Daston and others focused on using steroid-hormone mechanisms to evaluate whether gene-expression analysis (that is, genomics) could predict those mechanisms. Steroid hormone mechanisms were chosen because research has shown that effects regulated by estrogen, androgen, and other steroid hormones depend on gene expression. That is, a chemical binds to a receptor; the receptor complex migrates to the nucleus, binds to specific sites on the DNA, and causes upregulation or downregulation of specific genes; and this change in gene expression causes the observed cellular and tissue response. They found not only that chemicals that act by the same mechanism of action affect the same genes in the same direction but that the magnitude of the changes is the same as long as the chemicals are matched for pharmacologic activity. Thus, they found that genomics could be used quantitatively to improve dose-response assessments. Daston stated that genomics can be used to accelerate the mechanistic understanding, and the information gained can be used to determine whether similar kinds of effects can be modeled in an in vitro system. One surprising discovery was how extrapolatable the results were, not only from in vivo to in vitro but from species to species. Daston concluded by saying that once the critical steps in a toxicologic process are known, quantitative models can be built to predict behavior at various levels of organization.

Practical Applications: Mixtures

John Groten, of Schering-Plough, discussed current approaches and possible applications of the new science to mixtures risk assessment. He noted that especially in toxicology research in the pharmaceutical industry (but also in the food and chemical industry) there is an increasing need for parallel and efficient processes to assess compound classes, more alternatives to animal testing, tiered approaches that link toxicokinetics and toxicodynamics, enhanced use of systems biology in toxicology, and an emphasis on understanding interactions, combined action, and mixtures risk assessment. Today, risk assessments in the food, drug, and chemical industries attempt to evaluate and incorporate mixture effects, but the processes for doing so are case-driven and relatively simplistic. For example, adding hazard quotients to ensure that a sum does not exceed a threshold might be a beginning, but the likelihood of joint exposure and the possibility that compounds affect the same target system need to be assessed qualitatively and, preferably, quantitatively. Although research has been conducted on toxicokinetics and toxicodynamics of mixtures, most publications have dealt with toxicokinetic interactions. Groten stated that toxicokinetics should be used to correct for differences in exposure to mixture components but, because of a lack of mechanistic understanding in the toxicodynamic phase, not to predict toxic interactions. He noted that empirical approaches are adequate as a starting point but that in many cases these models depend on mathematical laws rather than biologic laws, and he recommended that mechanistic understanding be used to fine-tune experiments and to test or support empirical findings.
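The simple additive screen that Groten described as "a beginning" can be sketched as follows. This is a minimal illustration, assuming the common convention that each hazard quotient is an exposure divided by a reference dose and that the sum is compared with a threshold of 1; the function name, the threshold, and all numerical values are hypothetical and were not presented at the symposium.

```python
def hazard_index(exposures, reference_doses):
    """Sum of hazard quotients (exposure / reference dose) for a mixture.

    Both sequences must use the same units (for example, mg/kg-day)
    and list the mixture components in the same order.
    """
    if len(exposures) != len(reference_doses):
        raise ValueError("one reference dose is needed per component")
    return sum(e / r for e, r in zip(exposures, reference_doses))


# Hypothetical three-component mixture (illustrative values only).
exposures = [0.02, 0.10, 0.005]       # mg/kg-day
reference_doses = [0.10, 0.50, 0.05]  # mg/kg-day

hi = hazard_index(exposures, reference_doses)
THRESHOLD = 1.0  # assumed screening threshold
print(f"hazard index = {hi:.2f}; exceeds threshold: {hi > THRESHOLD}")
```

The calculation treats every component as acting additively on the same target, which is exactly the simplification Groten cautioned about: it says nothing about whether joint exposure is likely or whether the components share a target system.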

Groten listed several challenges for mixtures research, including the difficulty of using empirical models and conventional toxicology to show the underlying sequence of events in joint action, the adequacy (or inadequacy) of conventional toxicity end points to provide a wide array of testable responses at the no-observed-adverse-effect level, and the inability of current models to distinguish kinetic and dynamic interactions. He concluded by noting that the health effects of chemical mixtures are mostly related to specific interactions at the molecular level and that the application of functional genomics (sequencing, genotyping, transcriptomics, proteomics, and metabolomics) will provide new insights and advance the risk assessment of mixtures. He echoed the need that previous speakers raised for the use of multidisciplinary teams with statisticians, bioinformaticians, molecular biologists, and others to conduct future research in this field.

Pathway-Based Approaches: A European Perspective

Thomas Hartung, of the Center for Alternatives to Animal Testing, provided a European perspective on pathway-based approaches and reviewed the status of the European REACH program. He noted that regulatory toxicology is a business; toxicity testing with animals in the European Union is an $800 million/year business that employs about 15,000 people. The data generated, however, are not always helpful for reaching conclusions about toxicity. For example, one study examined 29 risk assessments of trichloroethylene and found that four concluded that it was a carcinogen, 19 were equivocal, and six concluded that it was not a carcinogen (Rudén 2001). Hartung stated that one problem is that the system today is a patchwork to which every health scare over decades has added a patch. For example, the thalidomide disaster resulted in a requirement for reproductive-toxicity testing. Many patches are 50-80 years old, and there is no way to remove a patch because international guidelines have been created and are extremely difficult to change once they have been agreed on. Another problem is that animal models are limited—humans are not 70-kg rats. However, cell cultures are also limited; metabolism and defense mechanisms are lacking, the fate of test compounds is unknown, and dedifferentiation is favored by the growth conditions. Thus, the current system is far from perfect.

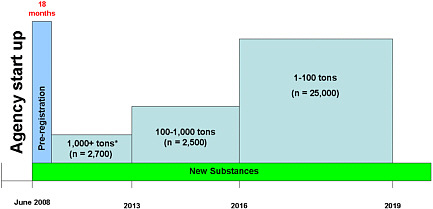

Hartung discussed the REACH initiative and noted that it constitutes the biggest investment in consumer safety ever undertaken. The original projection was that REACH would involve 27,000 companies in Europe (one-third of the world market) but affect the entire global market in that it also affects imported chemicals and that it would result in the assessment of at least 30,000 chemicals

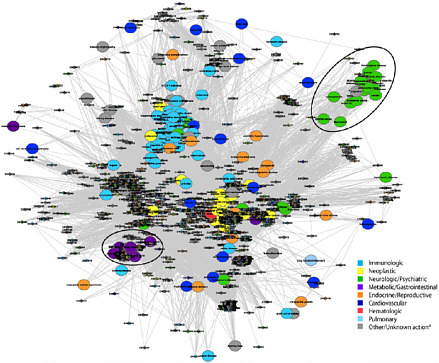

(see Figure 6 for an overview of the chemical registration process). Given that about 5,000 chemicals have been assessed in 25 years, the program goal is quite ambitious. REACH, however, is much bigger than originally expected. By December 2008, 65,000 companies have submitted over 2.7 million preregistrations on 144,000 substances. The feasibility of REACH is now being reassessed.

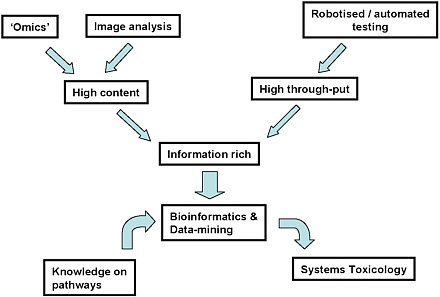

Alternative methods clearly will be needed to provide the necessary data. REACH, however, requires companies to review all existing information on a chemical and to make optimal use of in vitro systems and in silico modeling. Animal testing is considered a last resort and can be conducted only with authorization by the European Chemicals Agency. Hartung stated that one problem is to determine how to validate new testing approaches. It is not known how predictive the existing animal tests are for human health effects, so it does not make sense to validate truly novel approaches against animal tests. Hartung said that the key problem for REACH will be the need for reproductive-toxicity testing, which will represent 70% of the costs of REACH and involve more than 80% of the animals that will be used. That problem will mushroom because few facilities are capable of conducting the testing. The bigger challenges, however, are the number of false positives that will result from the testing and the need to determine which chemicals truly represent a threat to humans. Hartung concluded that a revolution, construction of something new, is needed rather than an evolution, that is, replacement of parts or pieces one by one. The worst mistake would be to integrate small advances into the existing system. The new technologies offer tremendous opportunities for a new system (see Figure 7) that can overcome the problems that we face today.

FIGURE 6 Overview of chemical registration for REACH. *Registration includes chemicals that are suspected of being carcinogens, mutagens, or reproductive toxicants and have production volumes of at least 1 ton and chemicals that are considered persistent and have production volumes of at least 100 tons. Source: Modified from EC 2007. Reprinted with permission; copyright 2007, European Union. T. Hartung, Johns Hopkins University, modified from symposium presentation.

FIGURE 7 Integration of new approaches for toxicology. Source: Hartung and Leist 2008. Reprinted with permission; copyright 2008, ALTEX. T. Hartung, Johns Hopkins University, presented at the symposium.

Panel Discussion

The afternoon session closed with a panel discussion that focused on data gaps, pitfalls, and research needs. Kavlock commented that scientists need data to validate the systems, such as data from the pharmaceutical industry, which has extensive human and animal toxicology data on pharmaceutical agents. David Jacobson-Kram, of the Food and Drug Administration (FDA), stated that FDA will soon be joining the efforts of other federal agencies on high-throughput screening and pathway profiling and may be able to provide extensive data. He continued by saying that developing a battery of short-term tests that uses changes in gene-expression patterns to predict human carcinogenic potential will revolutionize toxicology. He cautioned, however, that the tests need to be validated; negative results may simply represent the lack of metabolic activation in a system or the inability of water-insoluble compounds to reach their target. Charles Auer, retired from EPA, emphasized the substantial resources needed for such an effort.

Leikauf stated that a critical problem will be interpretation of the data. Kavlock noted that the goal of ToxCast is to determine the probability that a chemical will cause a particular adverse health effect. The data must be generated and provided to scientists so that they can evaluate them and determine whether the system is working. Hartung agreed with Kavlock that scientists will deal with probabilities rather than bright lines (that is, point estimates). Frederic Bois, a member of the standing committee, reminded the audience that determining the probability of whether a chemical causes an effect is hazard assessment, not risk assessment; risk assessment requires a dose-response component, which is where the issue of metabolism becomes critically important. Goldstein noted that although probabilities might be generated, regulators will draw bright lines.

Kavlock stated that a key difference between the current system and the new approaches is the scale of information. Substantially more information will be generated with the new approaches, and it is hoped that that information will drive intelligent targeted testing that allows interpretation of data for risk assessment. Several symposium participants emphasized that the discussion on data interpretation and probability highlighted the need to educate the public on the new science and its implications. Linking upstream biomarkers or effects with downstream effects will be critical.

APPLICATION TO MODE-OF-ACTION ANALYSIS

What Is Required for Acceptance?

John Bucher, of the NTP, opened the morning session of the second day by exploring the relationship between toxicity pathways and modes of action and questions surrounding validation. He noted that the concept of mode of action arose from frustration over the inability to describe the biologic pathway of an outcome at the molecular level. Instead, mode of action describes a series of “key events” that lead to an outcome; key events are measurable effects in experimental studies and can be compared among studies. Bucher stated that toxicity pathways are the contents of the “black boxes” described by the modes of action and that key toxicity pathways will be identified with the help of toxicogenomic data and genetic-association studies that examine relationships between genetic alterations and human diseases. He contrasted toxicity pathways and mode of action: mode of action accommodates a less-than-complete mechanistic understanding, allows and requires considerable human judgment, and provides for conceptual cross-species extrapolation; toxicity pathways accommodate unbiased discovery, can provide integrated dose-response information, may allow more precise mechanistic “binning,” and can reveal a spectrum of responses. Bucher stated that acceptance of modes of action and toxicity pathways is complicated by various “inconvenient truths.” For mode of action, it is not a trivial task to lay out the key events for an outcome, and inconsistencies sometimes plague associations, for example, in the case of hepatic tumors in PPAR-alpha knockout mice that have been exposed to peroxisome-proliferating agents. For toxicity pathways, scientists are evaluating the worst-case scenario; chemicals are applied to cells that have lost their protective mechanism, so the chances of positive results are substantially increased. Furthermore, cells begin to deteriorate quickly; if an effect requires time to be observed, a cellular assay may not be conducive to detecting it.

Bucher stated that Tox21—a collaboration of EPA, NTP, and NCGC—has been remarkably successful, with each group bringing its own strengths to the effort; this collaboration should make important contributions to the advancement of the new science. However, many goals will need to be met for acceptance of the toxicity-pathway approach as the new paradigm, and until some of the goals have been reached, the scientific community cannot adequately know what will be needed for acceptance. Bucher noted that the NTP Interagency Center for the Evaluation of Alternative Toxicological Methods (NICEATM) and the Interagency Coordinating Committee on Validation of Alternative Methods (ICCVAM) were established in 2000 to facilitate development, scientific review, and validation of alternative toxicologic test methods and were charged to ensure that new and revised test methods are validated to meet the needs of federal agencies. The law that created NICEATM and ICCVAM set a high hurdle for validation of new or revised methods, but NICEATM and ICCVAM have put forth a 5-year plan to evaluate high-throughput approaches and facilitate development of alternative test methods. Bucher concluded, saying that “at some point toxicologists will have to decide when our collective understanding of adverse biological responses in…in vitro assays…has advanced to the point that data from these assays would support decisions that are as protective of the public health as are current approaches relying on the results of the two-year rodent bioassay” (Bucher and Portier 2004).

Environmental Disease: Evaluation at the Molecular Level

Kenneth Ramos, of University of Louisville, described a functional-genomics approach to unraveling the molecular mechanisms of environmental disease and used his research on polycyclic aromatic hydrocarbons (PAHs), specifically benzo[a]pyrene (BaP), as a case study. Ramos noted that a challenge for elucidating chemical toxicity is that chemicals can interact in multiple ways to cause toxicity, so the task is not defining key events but understanding the inter-relationships and interactions of all the key events. BaP is no exception in causing toxicity potentially through multiple mechanisms; it is a prototypical PAH that binds to the aryl hydrocarbon receptor (AHR), deregulates gene expression, and is metabolized by CYP450 to intermediates that cause DNA damage and oxidative stress. Ramos stated that his laboratory has focused on using computational approaches to understand genomic data and construct biologic networks, which will provide clues to BaP toxicity. He interjected that the notion of pathway-based toxicity may be problematic because intersecting pathways all contribute to the ultimate biologic outcome, so the focus should be on understanding networks.

He said that taking advantage of genomics allowed his laboratory to identify three major molecular events: reactivation of L1 retroelement (Lu et al. 2000), activation of inflammatory signaling (Johnson et al. 2003), and inhibition of genes involved in the immune response (Johnson et al. 2004). The researchers then began to investigate the observation that BaP activated repetitive genetic sequences known as retrotransposons. Retrotransposons are mobile elements in the genome, propagate through a copy-and-paste mechanism, and use reverse transcriptase and RNA intermediates. L1s are the most characterized and abundant retrotransposons, make up about 17% of mammalian DNA by mass, and mediate genome-wide changes via insertional and noninsertional mechanisms. They may cause a host of adverse effects in humans and animals because their ability to copy themselves allows them to insert themselves randomly throughout the genome.

Ramos described the work on elucidating L1 regulatory networks by using genomics and stated that the key was identifying nodes where multiple pathways appeared to overlap. He and his co-workers used silencing RNA approaches to knock down specific proteins, such as the AHR, so that they could investigate the effect on the biologic network, and they concluded that the repetitive sequences are an important molecular target for PAHs. His laboratory has now turned to trying to understand the epigenetic basis of regulation of repetitive sequences and how PAHs might affect those regulatory control mechanisms in cells. The idea that biologic outcomes are affected by disruption of epigenetic events adds another layer of complexity to the story of environmental disease. It means that in addition to the direct actions of the chemical, the state of regulation of endogenous systems is important for understanding the biologic response. Ramos concluded that L1 is linked to many human diseases, such as chronic myeloid leukemia, Duchenne muscular dystrophy, colon cancer, and atherosclerosis, and that research has shown that environmental agents, such as BaP, regulate the cellular expression of L1 by transcriptional mechanisms, DNA methylation, and histone covalent modifications. Thus, the molecular machinery involved in silencing and reactivating retroelements not only is important in environmental responses but might be playing a prominent role in defining disease outcomes.

Dioxin: Evaluation of Pathways at the Molecular Level

Alvaro Puga, of the University of Cincinnati College of Medicine, used dioxin as an example to discuss molecular pathways in disease outcomes. Dioxin (2,3,7,8-tetrachlorodibenzo-p-dioxin [TCDD]) is a contaminant of Agent Orange, a herbicide used during the Vietnam War, that has been linked with numerous health effects. Some effects are characterized as antiproliferative, such as the antiestrogenic, antiandrogenic, and immunosuppressive effects; others are proliferative, such as cancer; and the remainder are characterized as effects on differentiation and development, such as birth defects. The effects of dioxin are primarily receptor-dependent. Dioxin binds to the AHR, a ligand-activated transcription factor. The resulting complex translocates to the nucleus and binds with the AHR nuclear translocator to form a complex that then binds to DNA-responsive elements on the genome; that binding induces gene expression. Dioxin is not the only ligand to bind to the AHR, and that raises the question of whether results from one ligand can be extrapolated to all ligands. That has essentially been done for several classes of halogenated aromatic hydrocarbons (polychlorinated dibenzo-p-dioxins, polychlorinated dibenzofurans, and polychlorinated biphenyls) with dioxin as the reference compound. AHR ligands have substantially different potencies to activate AHR-dependent gene expression, and their ability to produce toxicity appears to depend on their metabolic stability.

Puga stated that most toxic effects of dioxin are mediated by the AHR. For example, research has shown that AHR-deficient mice are resistant to dioxin-induced cytotoxicity and teratogenicity and that AHR-deficient zebrafish are resistant to dioxin-induced cardiac edema. The AHR has been implicated in many signaling pathways, and thus dioxin has the potential for disrupting other signaling pathways, such as MAP-kinase pathways and pathways associated with the cell cycle. Research indicates that cellular conditions determine whether the effects will be proliferative or antiproliferative. Puga stated that his laboratory is working to map the AHR regulatory network by varying the genotype of the cells (that is, using cells that have a wild-type receptor and cells that have a point mutation in the receptor that prevents binding to DNA) and asking the question, What is the target of the AHR at the whole-genome level? He said that recent research indicates that dioxin causes massive deregulation of homeobox and differentiation genes, so scientists should be critically investigating the developmental outcomes associated with dioxin exposure.

Systems-Level Approaches for Understanding Nanomaterial Biocompatibility

Brian Thrall, of the Pacific Northwest National Laboratory, discussed the challenges in evaluating mode of action and conducting hazard assessment of nanomaterials, and he provided examples of approaches from his laboratory to address the challenges. Nanotechnology will soon affect all aspects of society; current estimates are that sales from products that incorporate nanotechnology will reach $3 trillion by 2015. Although there are no documented cases of human toxicity of or disease caused by nanomaterials, concern has arisen because other types of particles and fibers have been linked to human disease, and comparisons have recently been made between asbestos fibers and carbon nanotubes. Thrall noted that if hazard assessments of the nanoproducts currently on the market were conducted using chronic bioassays, it could cost over $1 billion and take 30-50 years. Clearly, rapid screening approaches that lead to a small number of chronic bioassays or other in vivo testing would dramatically reduce the cost and time required to test the products.

Thrall stated that nanomaterials are difficult to evaluate because they are engineered materials that are made on the scale of biologic molecules and could

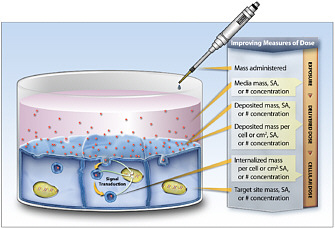

therefore interact with biologic pathways in many complex ways. Critical challenges in nanotoxicology revolve around addressing fundamental questions of exposure, dose, and mode of action. Focusing on dose, Thrall stated that a number of reports have indicated that the toxicity of particles depends on size: smaller particles tend to be more toxic on an equal-mass basis. However, Oberdorster et al. (2005) showed that using mass as the basis of comparison may not reveal much about chemical potency and biologic reactivity. The question then becomes what dose metric—mass, particle number, or surface area—is the most informative. Thrall stated that research with amorphous silica in his laboratory showed that surface area was the most appropriate dose metric in experiments evaluating cytotoxicity (Waters et al. 2009). Using genomics, he and co-workers also showed that the predominant dose-response pattern for gene expression depends on surface area and is independent of particle size. Furthermore, reverse transcription polymerase chain reaction (RT-PCR) showed that for more than 75% of the genes identified, the magnitude of expression correlated better with nominal surface area than with mass or particle number.
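The relationship among the three candidate dose metrics can be illustrated with a short calculation. The sketch below assumes ideal, monodisperse, nonporous spheres and an assumed particle density of 2.2 g/cm³ for amorphous silica; the function name, the density, and the 10-µg mass dose are illustrative assumptions and are not values taken from the studies cited above.

```python
import math


def dose_metrics(mass_ug, diameter_nm, density_g_cm3=2.2):
    """Convert a mass dose of spherical particles to other dose metrics.

    Returns (particle_number, total_surface_area_cm2). Assumes ideal,
    monodisperse spheres; the default density is an assumed value.
    """
    mass_g = mass_ug * 1e-6
    diameter_cm = diameter_nm * 1e-7                      # 1 nm = 1e-7 cm
    particle_volume_cm3 = math.pi * diameter_cm ** 3 / 6.0
    particle_mass_g = density_g_cm3 * particle_volume_cm3
    number = mass_g / particle_mass_g
    surface_area_cm2 = number * math.pi * diameter_cm ** 2
    return number, surface_area_cm2


# The same mass dose delivered as 10-nm and as 500-nm particles.
n_small, area_small = dose_metrics(mass_ug=10.0, diameter_nm=10.0)
n_large, area_large = dose_metrics(mass_ug=10.0, diameter_nm=500.0)

area_ratio = area_small / area_large    # scales as 1/diameter
number_ratio = n_small / n_large        # scales as 1/diameter**3
print(f"surface-area ratio: {area_ratio:.0f}, number ratio: {number_ratio:.0f}")
```

Under these assumptions, an equal mass of 10-nm particles carries 50 times the surface area and 125,000 times the particle count of 500-nm particles, which is consistent with the observation that smaller particles appear more toxic on an equal-mass basis even when the response per unit surface area is similar.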

Thrall then asked whether any biologic processes can be attributed to size dependence; that is, do the chemical and physical properties that make nanomaterials commercially attractive cause unique biologic responses? His laboratory investigated that question by conducting gene-set enrichment analyses—a statistical approach in which the ontologic attributes of a gene set are compared. He and co-workers found that the major cellular processes affected by 10-nm and 500-nm silica were identical; none of over 1,000 biologic processes identified was statistically different as a function of particle size. So for amorphous silica, there was no compelling evidence that new biologic processes arise as a function of size at the nanoscale. His research on amorphous silica also showed how high-content data can be used to address some fundamental questions concerning nanomaterials.

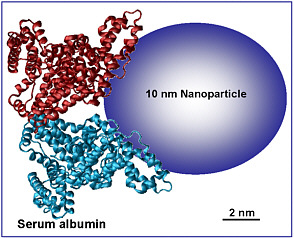

Thrall concluded his presentation by noting a few other challenges that arise with nanomaterials. First, engineered particles are not as simple as soluble chemicals because such physical forces as gravity, diffusion, and convection act on them, particularly in cell-culture systems, and can influence dose and changes in dose (see Figure 8). For example, the dose measured for a particle that settles slowly or hardly at all could differ significantly from the dose measured for a dense particle that settles quickly in the culture. Dosimetry models for the in vitro systems need to be validated so that mode-of-action studies can be anchored by biologic dose. Second, nanomaterials adsorb proteins in biologic systems (see Figure 9), and this could have an important effect on disposition of nanoparticles and biologic response to the nanoparticles. Structure-based modeling might provide insight on principles that guide protein interaction with nanomaterials and might serve in the future as a screening tool for evaluating relationships between alterations in surface chemistry and toxicity. Thrall noted the importance of developing hybrid quantitative structure-activity relationship models that integrate structural, chemical, and biologic data and stated that recent work has shown that specific aspects of surface area, such as the presence of polar functional groups, rather than total surface area alone are important for toxicity. He said that although this symposium is not focused on exposure, there is a need for more information on potential exposure to set priorities among and validate nanotoxicity studies.
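The influence of gravity and diffusion on in vitro dose, noted above, can be illustrated with a back-of-envelope calculation for idealized spheres using Stokes' law for settling and the Stokes-Einstein relation for diffusion. The sketch is not the dosimetry model referenced in Figure 8; the particle density, medium density, viscosity, and temperature are assumed round values, and agglomeration and convection are ignored.

```python
import math

G = 9.81            # gravitational acceleration, m/s^2
K_B = 1.380649e-23  # Boltzmann constant, J/K

def stokes_settling_velocity(diameter_m, particle_density,
                             medium_density=1000.0, viscosity=7.0e-4):
    """Terminal settling velocity (m/s) of a sphere from Stokes' law.

    Densities are in kg/m^3 and viscosity in Pa*s; the defaults are
    assumed values for a water-like culture medium near 37 C.
    """
    return (particle_density - medium_density) * G * diameter_m ** 2 / (18.0 * viscosity)

def stokes_einstein_diffusivity(diameter_m, temperature_k=310.15, viscosity=7.0e-4):
    """Diffusion coefficient (m^2/s) of a sphere from the Stokes-Einstein relation."""
    return K_B * temperature_k / (3.0 * math.pi * viscosity * diameter_m)

SILICA_DENSITY = 2000.0  # kg/m^3, assumed value for amorphous silica

for diameter_nm in (10, 500):
    d = diameter_nm * 1e-9
    v = stokes_settling_velocity(d, SILICA_DENSITY)
    diff = stokes_einstein_diffusivity(d)
    print(f"{diameter_nm:>4} nm: settling {v:.3e} m/s, diffusivity {diff:.3e} m^2/s")
```

Because settling velocity scales with the square of diameter and diffusivity scales inversely with diameter, particles of different sizes administered at the same nominal concentration can reach the cells at quite different rates.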

FIGURE 8 Dosimetry considerations in cell systems. Source: Teeguarden et al. 2007. Reprinted with permission; copyright 2007, Society of Toxicology. B. Thrall, Pacific Northwest National Laboratory, presented at the symposium.

FIGURE 9 What do cells see? Protein adsorption by nanomaterials is a universal phenomenon in biologic systems. Source: B. Thrall, unpublished data, Pacific Northwest National Laboratory, presented at the symposium. Reprinted with permission; copyright 2007, Pacific Northwest National Laboratory.

Panel Discussion

The morning session closed with a panel discussion that focused on the use of the new science in regulatory decision-making. Thrall commented that nanomaterials may not be a good case study because there are still relatively few data on them, and he emphasized the need to collect exposure data particularly to determine the magnitude of exposure and the materials to which people are being exposed. That information is needed to allow priority-setting for research. Bucher stated that dioxin and dioxin-like compounds may be good examples for incorporating the new science and noted that recent research has supported the toxic equivalency factors established by the World Health Organization for predicting carcinogenic outcome. He added that research has shown that it will not be sufficient simply to evaluate pathways and that other information on timing and persistence will need to be integrated with pathway data.

Jonathan Wiener, of Duke University, addressed policy and legal aspects of incorporating the new science in agency decision-making. He considered the scenario in which EPA based a decision, such as a decision to regulate a chemical, on the new toxicity-pathway-based science or on a combination of traditional and new testing methods. He first noted that the agency would face internal review in the executive branch in the Office of Management and Budget Office of Information and Regulatory Affairs (OIRA) and possibly in the Office of Science and Technology Policy (OSTP). Wiener suggested that the field may be open for some new and interesting approaches to guide agency science and decision-making, in light of recent actions of President Obama’s administration, such as his call for a new executive order on regulatory oversight by OIRA and his memorandum on improving agency science and strengthening OSTP’s role.

After internal review within the executive branch, Wiener observed, the next hurdle would be judicial review. He argued that, although courts can be skeptical of new scientific methods in civil tort liability lawsuits, judicial review of agency science may be more deferential, especially when agencies are acting “at the frontiers of science.” Several regulatory statutes now call for agencies to use the “best available science” or the “latest scientific knowledge,” and a court could be convinced that toxicity-pathway approaches constitute the best and latest science. Furthermore, the Supreme Court has recently held that if the agency provides a persuasive reason for changing its policy or its basis of decision-making, the courts will be receptive to the change even if the reason is not the one that a court would have given. Thus, an agency seeking to rely in whole or in part on toxicity-pathway-based approaches for making regulatory decisions ought to give a good explanation of why these new methods are valuable.

Finally, Wiener pointed out that the question of what constitutes an “adverse effect” may be pivotal. Yet, as others have discussed during this symposium, responses observed in toxicity-pathway studies may not always indicate an adverse effect as opposed to, for example, an adaptive effect. Wiener’s research indicates that the term adverse effect has been used in hundreds of federal statutes and thousands of judicial opinions since 1970, but it is almost never defined. Wiener suggested that EPA try to provide a thorough and tractable interpretation of what an adverse effect is and how the new toxicity-pathway testing methods can demonstrate such an effect.

The question of whether a framework that would facilitate the use of new data could be developed or whether it was too soon to use the new data was discussed. Thrall noted that the development of a framework and advancing the new science would depend on fields outside toxicology, such as improving computational abilities to handle various approaches and assumptions. Ramos, however, stated that there is now technology that allows a pathway-based approach to classification, to increase understanding of modes of action, to gain insight into biologic outcome, and ultimately to predict safety. He warned, however, that one has to temper that optimism with reality and recognize that a system that has checks and balances to minimize error must be built because we do not yet know whether we can make predictions on the basis of the new science with a given level of certainty. Elliott agreed, emphasizing that the issue should not be framed as an all-or-nothing decision, and suggested that the agency should take a relatively simple, well-understood system, establish the pathway-based approach for it, and then build on that precedent. Ramos stated that there are some chemicals, such as arsenic and PAHs, with which that approach could be taken. Wiener underscored Elliott’s point and stated that in the near term toxicity-pathway-based approaches should be combined with whole-animal tests and human epidemiology so that reviewing bodies, such as OIRA and the courts, become comfortable with the information as providing a fuller picture rather than as a replacement at this stage. Other symposium participants echoed the idea of pushing forward and using and applying the data that have been collected, and one noted that no single assay is going to give a yes or no answer for a risk assessment or substantive decision. We will need to integrate all the information and use the best interpretive skills and scientific judgment to answer the important questions.

CHALLENGES AND OPPORTUNITIES FOR RISK ASSESSMENT IN THE CHANGING PARADIGM

Dose and Temporal Response

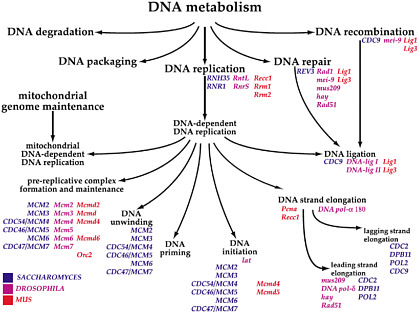

Elaine Faustman, of the University of Washington, opened the afternoon session by discussing datasets and tools available to examine dose and temporal response and what is needed to move forward. Faustman discussed the creation of gene ontologies and noted the paper by Ashburner et al. (2000) as critical in advancing the field. Three categories have been defined: biologic process (goal or objective), molecular function (elemental activity or task), and cellular component (location or complex). Each category has a structured, controlled vocabulary. Figure 10 provides an example of a gene ontology and shows the equivalent genes for three species for a specific biologic process. For risk assessment, gene ontologies provide an outstanding opportunity to use genomic information for cross-species comparisons.

FIGURE 10 Example of gene ontology for DNA metabolism, a biologic process. Similar ontologies can be built for molecular function and cellular component. Source: Ashburner et al. 2000. Reprinted with permission; copyright 2000, Nature Genetics. E. Faustman, University of Washington, presented at the symposium.
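The structure described above, in which a term belongs to one of three categories and carries the equivalent genes of several species, can be sketched as a small data structure. The sketch is illustrative only: the class names, species labels, and gene symbols are hypothetical placeholders and are not taken from Figure 10 or from the Gene Ontology database.

```python
from dataclasses import dataclass, field
from enum import Enum

class Category(Enum):
    BIOLOGIC_PROCESS = "biologic process"
    MOLECULAR_FUNCTION = "molecular function"
    CELLULAR_COMPONENT = "cellular component"

@dataclass
class OntologyTerm:
    name: str
    category: Category
    # Maps a species label to the genes annotated to this term in that species.
    genes_by_species: dict = field(default_factory=dict)

    def shared_across(self, species):
        """True if every listed species has at least one gene annotated to the term."""
        return all(self.genes_by_species.get(s) for s in species)

# Hypothetical annotations; the gene symbols are placeholders.
dna_metabolism = OntologyTerm(
    name="DNA metabolism",
    category=Category.BIOLOGIC_PROCESS,
    genes_by_species={
        "species_A": ["geneA1", "geneA2"],
        "species_B": ["geneB1"],
        "species_C": ["geneC1", "geneC2"],
    },
)

print(dna_metabolism.shared_across(["species_A", "species_B", "species_C"]))
```

A term that is annotated in every species of interest is a candidate anchor for the cross-species comparisons mentioned above.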

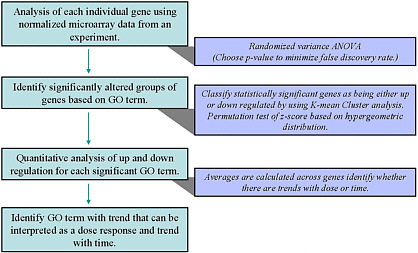

Several years ago, Faustman and co-workers recognized that decision rules were needed to evaluate dose- and time-dependent genomic data. Consequently, a system-based framework to interpret those data was developed (Yu et al. 2006). That framework (see Figure 11) has been used to identify potential signaling pathways versus single genes significantly changed after exposure. Once biologic processes are linked to pathway changes, one can begin to evaluate deviations from the normal patterns of gene expression that result from chemical exposure (Yu et al. 2006). That approach has been applied to metals, phthalates, and sulfur mustard (Robinson et al. 2010; Yu et al. 2006, 2009, 2010).

Faustman noted the report Scientific Frontiers in Developmental Toxicology and Risk Assessment (NRC 2000) and summarized the three main points of the report: signaling is used in almost every developmental event, about 17 pathways of cell-cell signaling are responsible for all of development, and the 17 pathways are highly conserved among metazoa. The report was valuable because it identified pathways involved in early, middle, and late development and laid the foundation for future work. Faustman commented that when evaluating pathways, one needs to consider not only whether the pathway is present but what the signal means (that is, a signaling pathway in one organism may have roles different from those in another organism). Furthermore, not only whether a pathway is expressed but when it is expressed and how it is expressed makes a difference. Like other speakers, Faustman emphasized the inter-relatedness of some of the pathways and the relationship of the network to general function.

Returning to the topic of risk assessment, Faustman noted observations from studies in her laboratory on the effects of metal exposure on mouse development. The studies found that metals affect genes involved in the Wnt signaling pathway, that multiple transcription-factor families are affected by metals, and that more than 50% of the genes affected were uncharacterized at the pathway level; the last finding indicates that much work still needs to be done to determine the link between gene changes and pathways and the importance of the gene changes. Faustman closed by listing several needs, including new tools for evaluating quantitative genomic response at multiple levels of biologic organization, kinetic and dynamic models that can allow for integration at various organization levels, better characterization of variability in genomic data, discussion of and consensus on how responses and changes in responses should be considered for effect-level assessment, and discussion of approaches to evaluate responses to early low-dose exposures vs responses at increasing complexity and decreased specificity.

FIGURE 11 Framework for interpretation of dose- and time-dependent genomic data. Source: Yu et al. 2006. Reprinted with permission; copyright 2006, Toxicological Sciences. E. Faustman, University of Washington, presented at the symposium.

Application of Genomic Dose-Response Data to Define Mode of Action and Low-Dose Behavior of Chemical Toxicants

Russell Thomas, of the Hamner Institutes for Health Sciences, provided a series of practical applications of genomic data to risk assessment. He noted several aspects of genomics that make it applicable to risk assessment. First, gene-expression microarray technology is more than a decade old, and multiple studies have demonstrated the sensitivity and reproducibility of the current generation of microarrays. Second, genomic technology is capable of broadly evaluating transcriptional changes (genome-wide) and of focusing on changes in individual genes and pathways. Third, gene-expression microarray analysis can provide insight into the dose required to affect cellular processes and the underlying biology of dose-dependent transitions. Thomas commented, however, that there is still no consensus on how to use genomic information in risk assessment.

In his first example, Thomas described an experiment from his laboratory in which the dose-response changes in gene expression of several chemical carcinogens, previously tested by NTP, were evaluated with transcriptomic data, and the results were compared with tumor-incidence data. For the experiment, groups of female B6C3F1 mice were exposed to one of five doses of a given carcinogen for 90 days, the transcriptional changes in target tissues were evaluated with whole-genome microarrays from Affymetrix, and the dose-response changes in gene expression were examined with a pathway-based approach. Specifically, the researchers calculated benchmark doses (BMDs) for individual genes on the basis of their inherent variability; grouped genes on the basis of biologic function, such as their role in proliferation, apoptosis, and metabolism; and finally calculated a summary value for the particular pathway. They found good correlation not only between the BMDs for the pathway-based transcriptional responses, such as cell proliferation and DNA damage response, and tumor incidence but between overall changes in gene expression and tumor incidence. They also found that the BMD for the most sensitive biologic process was always less than the BMD for the tumor response. Thomas concluded that transcriptomic dose-response alterations correlate with tumor incidence and that BMD values for the most sensitive pathways are protective.
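The pathway-based steps described above (a BMD for each gene, grouping of genes by biologic function, and a summary value for each group) can be sketched as follows. The sketch is a simplified illustration rather than the procedure used by Thomas and co-workers: the median is assumed as the summary statistic, and the gene names, BMD values, gene groupings, and tumor BMD are all hypothetical.

```python
from statistics import median

def pathway_bmd_summary(gene_bmds, pathway_genes):
    """Summarize gene-level BMDs by pathway, using the median (an assumed choice).

    gene_bmds     -- dict mapping gene name to its benchmark dose
    pathway_genes -- dict mapping pathway name to a list of gene names
    Genes without a fitted BMD are skipped; pathways with none are omitted.
    """
    summary = {}
    for pathway, genes in pathway_genes.items():
        values = [gene_bmds[g] for g in genes if g in gene_bmds]
        if values:
            summary[pathway] = median(values)
    return summary

# Hypothetical gene-level BMDs (arbitrary dose units).
gene_bmds = {"g1": 12.0, "g2": 18.0, "g3": 30.0, "g4": 45.0, "g5": 60.0, "g6": 25.0}
pathway_genes = {
    "cell proliferation": ["g1", "g2", "g3"],
    "apoptosis": ["g4", "g5"],
    "metabolism": ["g3", "g6", "g7"],  # g7 has no fitted BMD and is skipped
}
tumor_bmd = 40.0  # hypothetical BMD for tumor incidence

summary = pathway_bmd_summary(gene_bmds, pathway_genes)
most_sensitive = min(summary, key=summary.get)
is_protective = summary[most_sensitive] < tumor_bmd

print(summary)
print(most_sensitive, is_protective)
```

In this toy dataset the most sensitive pathway has a summary BMD below the tumor BMD, which is the pattern that Thomas described as protective.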

In the second example, Thomas described how to use cross-species differences in transcriptional dose-response data to evaluate mode of action. Thomas and co-workers exposed groups of rats and mice to chloroprene for 5 or 15 days. Chloroprene is metabolized to epoxide metabolites, and the rate of metabolism and of generation of the epoxide metabolites is about 10 times higher in mice than in rats. Accordingly, they used a physiologically based pharmacokinetic (PBPK) model to try to normalize the doses so that mice and rats received about the same internal dose. The BMD analysis of the genomic data indicated that at 5 days glutathione metabolism was perturbed and at 15 days DNA repair genes were affected and that the mouse was substantially more sensitive than the rat, although some differences disappeared when the comparison was based on internal dose. Overall, the results indicated the importance of the generation of the reactive epoxide metabolites in the proposed mode of action of chloroprene. Thomas concluded that pathway-based transcriptomic dose-response data can provide insights into the mode of action.