7

Making Use of Assessment Information

At the heart of any plan for improving assessment is the goal of obtaining information about what students have and have not learned that can be used to help them improve their learning, to guide their teachers, and to support others who make decisions about their education.

USING ASSESSMENTS TO GUIDE INSTRUCTION

Linda Darling-Hammond explored how assessment information can be used to guide instruction—for example, by providing models of good instruction and high-quality student work, diagnostic information, and evidence about the effectiveness of curricula and instruction. As she had discussed earlier, when rich assessment tasks are embedded in the curriculum, they can serve multiple purposes more effectively than can current accountability tests and can influence instruction in positive ways. She provided several examples of tasks that engage students in revealing their thinking and reasoning and that elicit complex knowledge and skills.

A performance task developed for an Ohio end-of-course exam, for example, requires mathematical analysis and modeling, as well as a sophisticated understanding of ratio and proportion. It presents a scenario in which a woman needs to calculate how much money she saved in heating bills after purchasing insulation, taking into account variation in weather from year to year. To answer the questions, students must carry out tasks that include conducting Internet research to gain contextual information, calculating the cost-effectiveness of the insulation, and writing a summary of their findings and conclusions. They are also asked to devise a generalized method of comparison using set formulas and to create a pamphlet for gas company customers explaining this tool. Some of the questions that are part of this assessment are shown in Box 7-1.

This sort of task, Darling-Hammond explained, is engaging for students in part because it elicits complex knowledge and skills. It also reveals to teachers the sorts of thinking and reasoning of which each student is capable. It does so in part because it was developed based on an understanding of the learning progressions characteristic of this area of study and of students’ cognitive development. Because of these characteristics of the assessment, scoring and analyzing the results are valuable learning opportunities for teachers.1 Darling-Hammond stressed that in several other places (e.g., Finland, Sweden, and the Canadian province of Alberta) teachers are actively engaged in the development of assessment tasks, as well as in scoring and analysis, which makes it easier for them to see and forge the links between what is assessed and what they teach. In general, she suggested, “the conversation about curriculum and instruction in this country is deeply impoverished in comparison to the conversation that is going on in other countries.” For example, the idea that comparing test scores obtained at two different points in time is sufficient to identify student growth is simplistic, in her view. Much more useful would be a system that identifies numerous benchmarks along a vertical scale (a learning continuum) and incorporates thoughtful means of using it to measure students’ progress. These data could then be combined with other data about students and teachers to provide a richer picture of instruction and learning.
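Darling-Hammond's contrast between a simple two-point score difference and progress along a continuum of benchmarks can be illustrated with a short sketch. It assumes a single vertical scale with named benchmark cut scores (all values and labels here are hypothetical, not drawn from any assessment discussed at the workshop) and reports which benchmarks a student newly reached between two administrations, alongside the raw gain.

```python
from bisect import bisect_right

# Hypothetical benchmarks on a vertical (cross-grade) scale: (cut score, label).
# Values and labels are illustrative assumptions only.
BENCHMARKS = [
    (200, "Emerging"),
    (250, "Developing"),
    (300, "Approaching proficiency"),
    (350, "Proficient"),
    (400, "Advanced"),
]
CUT_SCORES = [cut for cut, _ in BENCHMARKS]


def benchmark_index(score: float) -> int:
    """Index of the highest benchmark reached, or -1 if the score is below every cut."""
    return bisect_right(CUT_SCORES, score) - 1


def benchmarks_reached(earlier: float, later: float) -> list[str]:
    """Benchmarks newly reached between two administrations (empty if none, or if the score fell)."""
    start, end = benchmark_index(earlier), benchmark_index(later)
    return [label for _, label in BENCHMARKS[start + 1 : end + 1]]


if __name__ == "__main__":
    # Similar raw gains can correspond to different movement along the continuum.
    for fall, spring in [(240, 310), (330, 399)]:
        print(spring - fall, benchmarks_reached(fall, spring))
```

Here gains of 70 and 69 points look alike as raw differences, but one student moved past two benchmarks and the other past one. A real system would rest its benchmarks on research-based learning progressions rather than on arbitrary cut scores.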

Darling-Hammond noted that technology greatly expands the opportunities for this sort of assessment. In addition to delivering the assessments and providing rapid feedback, it can provide links to instructional materials and other resources tied to the standards being assessed. It can be used to track data about students’ problem-solving strategies or other details of their responses and make it easy to aggregate results in different ways for different purposes. It can also facilitate human scoring, in part by making it possible for teachers to participate without meeting in a central location. Technology can also make it possible for students to compile digital records of their performance on complex tasks that could be used to demonstrate their progress or readiness for further study in a particular area or in a postsecondary institution. Such an assessment system could also make it easier for policy makers to understand student performance: for example, they could see not just abstract scores but exemplars of student work at the classroom, school, or district level.

1 For detailed descriptions of learning progressions in English/language arts, mathematics, and other subjects, Darling-Hammond pointed participants to the website of England’s Qualifications and Curriculum Authority (http://www.qcda.gov.uk/ [accessed June 2010]). Assessment tasks are developed from these descriptions, and teachers use them to identify how far students have progressed on various dimensions, to report students’ progress to others, and to plan instruction.

BOX 7-1 Ohio Performance Assessment Project “Heating Degree Days” Task

Based on Ms. Johnson’s situation and some initial information, begin to research “heating degree days” on the Internet:

SOURCE: Reprinted with permission from Linda Darling-Hammond on behalf of Stanford University School Redesign Network, Using Assessment to Guide Instruction: “Ohio Performance Assessment Project ‘Heating Degree Days’ Task.” Copyright 2009 by Ohio Department of Education.

SUPPORTING TEACHERS

Many teachers seem to have difficulty using assessment information to plan instruction, Margaret Heritage observed, but if they don’t know how to do this, “they are really not going to have the impact on student learning that is the goal of all this investment on effort.” A number of studies have documented this problem, examining teachers’ use of assessments designed to inform instruction in reading, mathematics, and science (Herman et al., 2006; Heritage et al., 2009; Heritage, Jones, and White, 2010; Herman, Osmundson, and Silver, 2010).

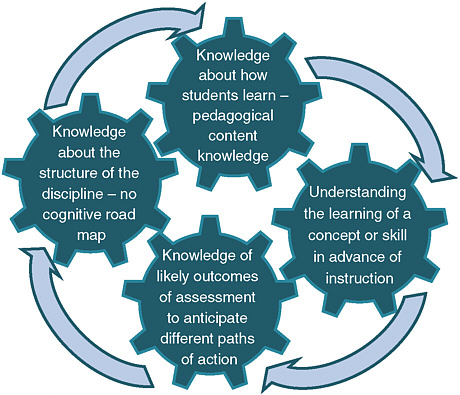

There are a number of possible reasons for this difficulty, Heritage noted. Perhaps most important is that preservice education in the United States does not, as a rule, leave teachers with an expectation that assessment is a tool to support their work. Figure 7-1 illustrates the kinds of professional knowledge that teachers need in order to make use of the rich information that sophisticated assessments can provide, presented as a set of interacting cogs.

First, teachers need to understand the structure of the discipline they are teaching well enough to have a clear cognitive road map into which they can fit assessment information. This understanding of how ideas develop from rudimentary forms into more sophisticated conceptual frameworks is the foundation for the learning maps discussed earlier (see Chapter 2) and is also key to teachers’ work. Many teachers do have this knowledge, Heritage stressed, but many more do not. Teachers also need sufficient knowledge of how students learn within a domain, that is, pedagogical content knowledge. An understanding of how a particular concept or skill is learned can help teachers plan instruction that meets their students’ needs. Finally, teachers need to have both the time and the experience to consider in advance the likely outcomes of an assessment so that they can plan possible courses of action.

FIGURE 7-1 Knowledge that teachers need to utilize assessments.

SOURCE: Heritage (2010, slide #3).

Another way of thinking about how teachers might use high-quality assessment results is to consider what both learners and teachers ought to do with the results, Heritage said. Research on the differences between novices and experts (see National Research Council, 2001) shows that experts not only possess more information, but also have complex structures for their knowledge that guide them in assimilating new information and using their knowledge in a variety of ways. Thus, learners need not only to accumulate discrete knowledge, but also to develop increasingly sophisticated conceptual frameworks for the knowledge and skills they are acquiring. They also need to use metacognitive strategies to guide their own learning, that is, to monitor and assess their own progress and to develop strategies for making further progress.

How can a teacher support this learning? One way is to structure each student’s classroom experiences so that they focus on learning goals that fit the progress that student has already made; Heritage stressed how important it is for teachers to understand that students do not “all learn at the same pace, in the same way, at the same time.” Ideally, teachers will be equipped to recognize “ripening structures,” areas where students are just ready to take a next step. Feedback is the means by which teachers guide students, and assessment information can supply the material for very precise feedback. Particularly important, Heritage said, is that assessment results can provide not just retrospective views of what students have already learned, but clear, specific pointers to where they should go next. Table 7-1 shows some of the responses students and teachers might have to rich assessment information, as well as some ways that assessments can support those responses.

How, then, might the public school system best support teachers? Heritage noted that all aspects of the education system in the United Kingdom, where she spent much of her career, provide better support for teachers than do the national, state, and local systems in the United States, though it is worth remembering that the United States is much larger and delegates most educational control to local districts. Nevertheless, in her view, the first place to look for changes is higher education. Too many graduating U.S. teachers are not well prepared, particularly in the ways described above, and Heritage highlighted several kinds of knowledge teachers need:

- models of how students’ thinking and skills develop within a discipline and across disciplines;
- understanding of the kinds of challenges to learning students face in a discipline;
- understanding of the interdependence of teaching and learning and emerging developmental processes;
- deep pedagogical content knowledge; and
- understanding of ways to involve students in learning and assessment, and of how to gather evidence of their understanding in the course of a lesson, along with strategies for responding to what they find.

Teachers also need a variety of resources in order to work effectively, Heritage noted. First, they need sound assessments that are “worth teaching to” and that cover a range of performance. Descriptions of students’ learning trajectories developed through research (such as the cognitive maps discussed earlier; see Chapter 2) are critical, in Heritage’s view, and from her perspective the Common Core standards are “skeletal” in this respect. If clear descriptions of learning progressions were available, assessments could be linked to clear descriptions of performance goals and of what particular levels of competence look like. Teachers also need an array of resources that support them in interpreting information and acting on it. England’s Qualifications and Curriculum Authority (see footnote 1) is one example; other countries also provide specific resources built around the assessment and the curriculum. “It amazes me that we don’t have a Smithsonian of exemplary teacher practices,” she added.

Heritage said that U.S. teachers are also not given nearly enough time for the reflection and planning that are essential to using information thoughtfully and planning instruction. U.S. teachers spend an average of 1,130 contract hours in the classroom, she noted; in contrast, the average for countries in the Organisation for Economic Co-operation and Development is 803 hours for primary teachers and 674 hours for upper secondary teachers. But perhaps most important, she said, is that U.S. teachers are consistently asked to implement programs that have been devised by someone else, rather than to study the practice of their vocation.

TABLE 7-1 Possible Student and Teacher Responses to Assessment Information

| Learner | Teacher | Assessment to Support Teacher Learning |
|---|---|---|
| Construct new concepts based on current and prior knowledge. | Structure new experiences within the zone of proximal development (ZPD) that build on previous “ripening” learning. | Indicate actual and potential development (retrospective and prospective). |
| Develop integrated knowledge structures (schema). | | |
| | Embody learning practices (assessment as teaching). | |
| | Engage students in interactions and activity to create networks structured around key ideas. | |
| Apply knowledge to new situations. | Integrate cognition and context. | |
| Use metacognitive strategies. | Make students’ thinking visible. | |
| | Provide feedback. | |
| | Support metacognitive activity and self-regulation. | Locate learning status in the larger landscape. |

Karin Hess drew on her involvement with several research programs to sketch strategies for supporting teachers in using assessment results. One, the Center for Collaborative Education (see http://www.cce.org/ [accessed June 2010]), has focused on ways to incorporate performance assessment into accountability programs for Massachusetts middle and high schools. Its professional development approach includes workshop time for teachers to participate in developing a standards-based assessment and to analyze student work. Another, the Hawaii Progress Maps, has focused on documenting learning progressions and formative assessment practices (Hess, Kurizaki, and Holt, 2009). As part of the project, researchers asked teachers to identify struggling students and to track their strategies with those students throughout the year. Teachers had access to a profile showing the benchmarks and learning progressions for each grade, which they could use in documenting the students’ progress.

From these and other projects that have explored the use of learning progressions in formative assessment, Hess drew several observations. First, she emphasized that learning progressions are very specific, research-based descriptions of how students develop particular skills in a specified domain. She noted that scope-and-sequence documents and other curricular materials intended to serve the same purpose are often not true learning progressions, in that they do not describe the way students develop competence in a discipline. For example, she noted, it is all too easy for a curriculum document to include skills that may be important but that are beyond the capacity of most students at the level at which they are included.

Hess said she has also found that teachers who engage in this work alter their perceptions of students and their own teaching practice, and that they come to better understand the standards to which they are teaching. Teachers in the Hawaii project, for example, found that they had to get to know their students better in order to place them properly on a learning continuum, and they incorporated that thinking into their assessment designs. The teachers also said that they began to focus on what their students could do, rather than on what they could not do, in part because they had a clearer understanding of the “big picture”: a more complete sense of what proficiency in a particular area would look like.

PRACTITIONERS’ PERSPECTIVES

Teri Siskind offered some of the lessons educators in South Carolina have learned through several projects focused on improving teacher quality. In one project, the state experimented with using trained assessment coaches to provide personalized feedback to teachers to help improve their use of assessment data; however, the results were similar to those achieved with untrained facilitators. In general, the teachers did improve in their ability to develop tasks and interpret the results, but there was no clear evidence that these improvements led to improved student achievement. That study also showed that teachers did not understand the state’s standards well, although the reason may lie more with the standards themselves than with the teachers.

Another project was a pilot science assessment using hands-on tasks developed by WestEd (a nonprofit agency that develops assessment materials and provides technical assistance to districts, states, and other entities) that was tailored to South Carolina’s standards. The project showed that the teachers’ participation in scoring the tasks was very beneficial, because it both improved their understanding of student work and engaged them in thinking about the science concepts the standards were targeting. The project also found that teachers were willing and able to develop assessments of this type. However, as with the other project, Siskind noted, there was no subsequent evidence of effects on student performance.

South Carolina is also collaborating with the Dana Center and the education company Agile Mind on a 3-year, classroom-embedded training effort called the Algebra project. This project, which is being developed incrementally, involves summer training for teachers and support during the school year in mathematics and science. The project is so small that Siskind described its effects thus far as “little ripples in a vast ocean,” but she emphasized that the state hopes to develop better systems for evaluating these sorts of projects. She hopes such systems will improve the state’s capacity to examine measures of teacher performance, fidelity of implementation, sustainability, and results, and also to better track which students have been exposed to teachers who have received particular supports. Unfortunately, the state has lost funding for many of these programs.

South Carolina’s Department of Education also requested funding from the legislature to support the development and implementation of a comprehensive formative assessment system. However, the legislation that resulted diverged significantly from what had been requested, though it incorporated some elements of the request. Siskind said districts have had mixed reactions to some of the changes suggested, noting that “some things that are lovingly embraced when they are voluntary turn evil when they are mandated.”

Peg Cagle, a long-time middle school mathematics teacher in California, began with the observation that “a good assessment system would buttress, not batter, classroom teachers.” She noted that she was extremely impressed by the visionary ideas presented at the workshop, but that “the most visionary design coupled with myopic implementation is not going to improve teaching or learning.” At present, she said, the California state assessment system has very high stakes for teachers but not for students, so, in effect, teachers are rewarded for their success at persuading students to care about the results. Teachers and principals resort to stunts to capture students’ attention, which is hardly the purpose of assessment, she cautioned. If an assessment system is easily gamed, she added, it really is not a measure of what students know, but of what they have learned to show.

Cagle also contrasted the optimism of the workshop discussions about the potential of innovative assessments with the current state of teacher morale around the country. In her view, the punitive nature of assessment is a significant factor in teachers’ low morale. While she strongly favors looking for ways to make sure the teaching force is of the highest quality, she believes that current means of judging teachers do not reflect the complexity of their work. The hardest part of the job, she added, is identifying the misconceptions that are impeding students’ progress in order to address them effectively. Current assessments rarely provide that kind of information, but it is what teachers need most. Moreover, the veil of secrecy surrounding the state’s assessment does not serve teachers or students. Cagle sees the assessments her students take only if a student is absent, leaving a test booklet free for her to examine during the test, and even that examination is officially prohibited. The limited number of items released after testing is not sufficient to help her understand what students did not understand or why.

A good assessment system, Cagle said, would focus less on statistical information and more on opportunities for teachers to engage in thinking about student work. Current assessments, by and large, she argued, reflect “a tragically impoverished view of what public education is supposed to provide.”

AGGREGATING INFORMATION FROM DIFFERENT SOURCES

Laurie Wise returned to the idea of a coherent system, a term that implies disparate, but interrelated, parts that work together. He focused on how it might be possible to aggregate disparate elements to provide summative information that meets a range of purposes.

He turned first to why it is important to aggregate separate pieces, rather than just relying on an end-of-the-year test (a point stressed repeatedly across the two workshops). He summarized much of the discussion by noting that a system of assessments that captures information of different kinds at multiple points during instruction can provide deeper, more timely information that can be used formatively as well as summatively and can establish a much closer link among curriculum, instruction, and assessment. It also offers opportunities for the assessment of complex, higher-order thinking and perhaps for measuring teacher and school effectiveness.

It is still important to sum up this information, though, for at least three reasons. In addition to obtaining rich profiles of diagnostic information, educators need information they can use to make decisions about students, such as whether they are ready to advance to the next grade or course or to graduate from high school. Schools and districts also need input regarding teacher performance, and evidence of student learning is an important kind of input, though Wise cautioned that other sorts of information are also very important. Systems also need this sort of information in order to make decisions about schools.

Given that aggregation is useful, there are a number of ways it might be done, Wise suggested. Simplest, perhaps, would be to administer the same assessment several times during the year and assign students their highest score, though this approach would be limited in the content it could cover, among other disadvantages. Through-course assessment, in which different tests are administered at several points during the year, is another possibility, and it has several versions. One version uses end-of-unit tests, which allow for deeper coverage of the material in each part of the year’s instruction. This approach could be supplemented by an end-of-course assessment. Another possibility is cumulative tests, in which each test addresses all of the content covered up to that point. This model would allow students to demonstrate that they have overcome weaknesses (e.g., lack of knowledge) that were evident in earlier assessments.
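The aggregation rules Wise described can be expressed as simple functions. This is a minimal sketch, assuming every test is reported on a common 0-100 scale; the function names, the 25 percent end-of-course weight, and the rule that the latest cumulative score supersedes earlier ones are illustrative assumptions rather than features of any proposed design.

```python
from statistics import mean


def highest_score(scores):
    """Same assessment given several times; the student is credited with the best result."""
    return max(scores)


def through_course(unit_scores, end_of_course=None, eoc_weight=0.25):
    """Average the end-of-unit tests, optionally blended with an end-of-course exam.

    eoc_weight is an assumed share of the final score given to the end-of-course exam.
    """
    unit_average = mean(unit_scores)
    if end_of_course is None:
        return unit_average
    return (1 - eoc_weight) * unit_average + eoc_weight * end_of_course


def cumulative_latest(scores):
    """Each test covers all content to date, so (by assumption) the latest result supersedes earlier ones."""
    return scores[-1]


if __name__ == "__main__":
    scores = [62, 70, 85, 78]  # hypothetical results from four administrations
    print(highest_score(scores))
    print(through_course(scores))
    print(through_course(scores, end_of_course=80))
    print(cumulative_latest(scores))
```

With the same four scores, the rules give different summative results (85 for the highest score, 73.75 for the unit average, 78 for the latest cumulative test), which illustrates why the choice of rule matters as much as the tests themselves.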

Considering the nature of reading comprehension suggests another sort of model, Wise said. Reading is not taught in discrete chunks, but rather developed over time with increasingly complex stimuli and challenges, so the separate component model does not fit this domain well. (Wise noted that the same might be true of other domains.) Instead, the goal for assessment would be to test skill levels with increasing subtlety at several points during the year. Doing so would make it possible to measure growth through the year and also provide a summative measure at the end. As with the other models, it would be possible to assign differing weights to different components of the assessment in calculating the summative score. The design of the assessment and the weighting should reflect the nature of the subject, the grade level, and other factors, Wise added.
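Under the reading model, the same skill is measured at several checkpoints with increasingly demanding tasks, so one set of results can yield both a growth estimate and a weighted summative score. The sketch below assumes the checkpoints are reported on a common scale and that later checkpoints carry more weight; the scale values and the 1:2:3 weighting are hypothetical.

```python
def growth(checkpoints):
    """Change from the first to the last checkpoint on the (assumed) common scale."""
    if len(checkpoints) < 2:
        raise ValueError("need at least two checkpoints to measure growth")
    return checkpoints[-1] - checkpoints[0]


def weighted_summative(checkpoints, weights):
    """Weighted average of checkpoint scores; later checkpoints can be given more weight."""
    if len(checkpoints) != len(weights):
        raise ValueError("one weight is needed per checkpoint")
    return sum(s * w for s, w in zip(checkpoints, weights)) / sum(weights)


if __name__ == "__main__":
    fall_winter_spring = [410, 435, 470]  # hypothetical scale scores
    print(growth(fall_winter_spring))
    print(round(weighted_summative(fall_winter_spring, [1, 2, 3]), 1))
```

For fall, winter, and spring scores of 410, 435, and 470, growth is 60 points and the weighted summative score is about 448.3. Shifting more weight to the final checkpoint moves the summative score closer to end-of-year performance, one of the design choices Wise suggested should reflect the subject and grade level.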

There is some tension between the formative and summative purposes of testing, even in these models. For example, Wise noted, having students score their own work and having teachers score their students’ work is an advantage in formative assessment but a disadvantage in summative assessment. These models could be devised to permit some of both, thus allowing teachers to see how closely their formative results map onto summative results. For example, each of the tests administered throughout the year could include both summative and formative portions, some of which might be scored by the teacher and some of which might be scored externally.

Another approach is the one common in many college courses, in which a final grade is based on a final exam, a midterm exam, and a paper, with a different weight for each. More broadly, Wise noted, this approach could encompass any combination of tests plus a portfolio of work scored according to a common rubric. Relative weights could be determined based on a range of factors. Testing could also include group tasks, for example, in order to measure skills valued by employers, such as collaboration. In this approach, group scores would be combined with individual scores, and Wise noted that technology might offer options for scoring individual contributions to group results. Results could also be collected at the school level, for other purposes.
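A college-style composite of the kind Wise described can be sketched as a weighted average over components, with the group task score itself blending the group's rubric score and a rating of the student's individual contribution. The component names, the weights, and the 50/50 blend are hypothetical choices for illustration; as Wise noted, relative weights would in practice depend on a range of factors.

```python
# Hypothetical component weights; a real system would set these locally.
COMPONENT_WEIGHTS = {
    "final_exam": 0.40,
    "midterm": 0.20,
    "portfolio": 0.25,   # assumed to be scored against a common rubric
    "group_task": 0.15,
}


def group_task_score(group_score, individual, share=0.5):
    """Blend the group's rubric score with a rating of the student's own contribution.

    share is the assumed fraction of the component that comes from the group score.
    """
    return share * group_score + (1 - share) * individual


def composite(scores, weights=COMPONENT_WEIGHTS):
    """Weighted average of component scores; raises if a weighted component is missing."""
    missing = weights.keys() - scores.keys()
    if missing:
        raise ValueError(f"missing component scores: {sorted(missing)}")
    total_weight = sum(weights.values())
    return sum(weights[name] * scores[name] for name in weights) / total_weight


if __name__ == "__main__":
    student = {
        "final_exam": 80,
        "midterm": 70,
        "portfolio": 88,
        "group_task": group_task_score(group_score=90, individual=70),
    }
    print(round(composite(student), 2))
```

For example, a student whose group earned 90 on the group task but whose own contribution was rated 70 would receive 80 for that component; combined with a final exam score of 80, a midterm score of 70, and a portfolio score of 88, the composite is 80.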

Wise also considered the way alternate models of testing might be used to provide information about teacher effectiveness. In the current school-level accountability system, teachers are highly motivated to work together to make sure that all students in the school succeed because the school is evaluated on the basis of how all students do. If, instead, data were aggregated by teacher, there might be a perverse incentive for greater competition among teachers—which is not likely to be good for students. One solution would be to incorporate other kinds of information about teachers, as is done in many other employment settings. For example, teacher ratings could include not only student achievement data, but also principal and peer ratings on such factors as contributions to the school as a whole and the learning environment, innovations, and so forth. Such a system might also be used diagnostically, to help identify areas in which teachers need additional support and development.

Wise had three general recommendations for assessment:

- Assessments should closely follow, but also lead, the design of instruction.
- Assessments should provide timely, actionable information, as well as summative information needed for evaluation.
- Aggregation of summative information should support the validity of intended interpretations. With a complex system, as opposed to a single assessment, it is possible to meet multiple purposes in a valid manner.

“There is potentially great value to a more continuous assessment system incorporating different types of measures—even to the extremes of portfolios and group tasks,” Wise concluded, “and the flexibility such an approach offers for meeting a variety of goals is perhaps its greatest virtue.”