Reference Guide on Multiple Regression

Daniel L. Rubinfeld, Ph.D., is Robert L. Bridges Professor of Law and Professor of Economics Emeritus, University of California, Berkeley, and Visiting Professor of Law at New York University Law School.

CONTENTS

II. Research Design: Model Specification

A. What Is the Specific Question That Is Under Investigation by the Expert?

B. What Model Should Be Used to Evaluate the Question at Issue?

1. Choosing the dependent variable

2. Choosing the explanatory variable that is relevant to the question at issue

3. Choosing the additional explanatory variables

4. Choosing the functional form of the multiple regression model

5. Choosing multiple regression as a method of analysis

III. Interpreting Multiple Regression Results

A. What Is the Practical, as Opposed to the Statistical, Significance of Regression Results?

1. When should statistical tests be used?

2. What is the appropriate level of statistical significance?

3. Should statistical tests be one-tailed or two-tailed?

B. Are the Regression Results Robust?

1. What evidence exists that the explanatory variable causes changes in the dependent variable?

2. To what extent are the explanatory variables correlated with each other?

3. To what extent are individual errors in the regression model independent?

4. To what extent are the regression results sensitive to individual data points?

5. To what extent are the data subject to measurement error?

A. Who Should Be Qualified as an Expert?

B. Should the Court Appoint a Neutral Expert?

V. Presentation of Statistical Evidence

A. What Disagreements Exist Regarding Data on Which the Analysis Is Based?

Appendix: The Basics of Multiple Regression

1. Specifying the regression model

C. Interpreting Regression Results

D. Determining the Precision of the Regression Results

1. Standard errors of the coefficients and t-statistics

3. Sensitivity of least squares regression results

Multiple regression analysis is a statistical tool used to understand the relationship between or among two or more variables.1 Multiple regression involves a variable to be explained—called the dependent variable—and additional explanatory variables that are thought to produce or be associated with changes in the dependent variable.2 For example, a multiple regression analysis might estimate the effect of the number of years of work on salary. Salary would be the dependent variable to be explained; the years of experience would be the explanatory variable.

Multiple regression analysis is sometimes well suited to the analysis of data about competing theories for which there are several possible explanations for the relationships among a number of explanatory variables.3 Multiple regression typically uses a single dependent variable and several explanatory variables to assess the statistical data pertinent to these theories. In a case alleging sex discrimination in salaries, for example, a multiple regression analysis would examine not only sex, but also other explanatory variables of interest, such as education and experience.4 The employer-defendant might use multiple regression to argue that salary is a function of the employee’s education and experience, and the employee-plaintiff might argue that salary is also a function of the individual’s sex. Alternatively, in an antitrust cartel damages case, the plaintiff’s expert might utilize multiple regression to evaluate the extent to which the price of a product increased during the period in which the cartel was effective, after accounting for costs and other variables unrelated to the cartel. The defendant’s expert might use multiple

1. A variable is anything that can take on two or more values (e.g., the daily temperature in Chicago or the salaries of workers at a factory).

2. Explanatory variables in the context of a statistical study are sometimes called independent variables. See David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section II.A.1, in this manual. The guide also offers a brief discussion of multiple regression analysis. Id., Section V.

3. Multiple regression is one type of statistical analysis involving several variables. Other types include matching analysis, stratification, analysis of variance, probit analysis, logit analysis, discriminant analysis, and factor analysis.

4. Thus, in Ottaviani v. State University of New York, 875 F.2d 365, 367 (2d Cir. 1989) (citations omitted), cert. denied, 493 U.S. 1021 (1990), the court stated:

In disparate treatment cases involving claims of gender discrimination, plaintiffs typically use multiple regression analysis to isolate the influence of gender on employment decisions relating to a particular job or job benefit, such as salary.

The first step in such a regression analysis is to specify all of the possible “legitimate” (i.e., nondiscriminatory) factors that are likely to significantly affect the dependent variable and which could account for disparities in the treatment of male and female employees. By identifying those legitimate criteria that affect the decisionmaking process, individual plaintiffs can make predictions about what job or job benefits similarly situated employees should ideally receive, and then can measure the difference between the predicted treatment and the actual treatment of those employees. If there is a disparity between the predicted and actual outcomes for female employees, plaintiffs in a disparate treatment case can argue that the net “residual” difference represents the unlawful effect of discriminatory animus on the allocation of jobs or job benefits.

regression to suggest that the plaintiff’s expert had omitted a number of price-determining variables.

More generally, multiple regression may be useful (1) in determining whether a particular effect is present; (2) in measuring the magnitude of a particular effect; and (3) in forecasting what a particular effect would be, but for an intervening event. In a patent infringement case, for example, a multiple regression analysis could be used to determine (1) whether the behavior of the alleged infringer affected the price of the patented product, (2) the size of the effect, and (3) what the price of the product would have been had the alleged infringement not occurred.
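The three uses listed above can be illustrated with a minimal sketch. Every figure is hypothetical and is not drawn from any case. The sketch assumes a single explanatory variable (unit cost) and a least squares line fitted only to periods before the alleged infringement, which is just one of several ways an expert might construct a but-for price; the helper name `fit_line` is likewise an assumption made for illustration.

```python
# Hypothetical data on the patented product; all numbers are invented.
pre_cost = [4.0, 6.0, 8.0, 10.0]       # unit cost before the alleged infringement
pre_price = [16.5, 18.5, 21.5, 25.5]   # price before the alleged infringement
inf_cost = [6.0, 8.0]                  # unit cost during the alleged infringement
inf_price = [17.0, 20.0]               # price actually observed in those periods


def fit_line(x, y):
    """Return the least squares (intercept, slope) for y = a + b * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    return y_bar - slope * x_bar, slope


intercept, slope = fit_line(pre_cost, pre_price)

# Use (3): forecast the price that would have prevailed but for the infringement.
but_for_price = [intercept + slope * c for c in inf_cost]

# Uses (1) and (2): a nonzero gap suggests an effect; its size is the magnitude.
effect = [actual - predicted for actual, predicted in zip(inf_price, but_for_price)]

print(but_for_price)
print(effect)
```

With these invented figures the fitted line is price = 10 + 1.5 × cost, so the but-for prices are 19 and 22, and the observed prices lie 2 below them in each period. Whether a gap of that size is statistically meaningful is a separate question that the sketch does not address.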

Over the past several decades, the use of multiple regression analysis in court has grown widely. Regression analysis has been used most frequently in cases of sex and race discrimination,5 antitrust violations,6 and cases involving class certification (under Rule 23).7 However, there is a range of other applications, including census undercounts,8 voting rights,9 the study of the deterrent effect of the death penalty,10 rate regulation,11 and intellectual property.12

5. Discrimination cases using multiple regression analysis are legion. See, e.g., Bazemore v. Friday, 478 U.S. 385 (1986), on remand, 848 F.2d 476 (4th Cir. 1988); Csicseri v. Bowsher, 862 F. Supp. 547 (D.D.C. 1994) (age discrimination), aff’d, 67 F.3d 972 (D.C. Cir. 1995); EEOC v. General Tel. Co., 885 F.2d 575 (9th Cir. 1989), cert. denied, 498 U.S. 950 (1990); Bridgeport Guardians, Inc. v. City of Bridgeport, 735 F. Supp. 1126 (D. Conn. 1990), aff’d, 933 F.2d 1140 (2d Cir.), cert. denied, 502 U.S. 924 (1991); Bickerstaff v. Vassar College, 196 F.3d 435, 448–49 (2d Cir. 1999) (sex discrimination); McReynolds v. Sodexho Marriott, 349 F. Supp. 2d 1 (D.D.C. 2004) (race discrimination); Hnot v. Willis Group Holdings Ltd., 228 F.R.D. 476 (S.D.N.Y. 2005) (gender discrimination); Carpenter v. Boeing Co., 456 F.3d 1183 (10th Cir. 2006) (sex discrimination); Coward v. ADT Security Systems, Inc., 140 F.3d 271, 274–75 (D.C. Cir. 1998); Smith v. Virginia Commonwealth Univ., 84 F.3d 672 (4th Cir. 1996) (en banc); Hemmings v. Tidyman’s Inc., 285 F.3d 1174, 1184–86 (9th Cir. 2000); Mehus v. Emporia State University, 222 F.R.D. 455 (D. Kan. 2004) (sex discrimination); Guiterrez v. Johnson & Johnson, 2006 WL 3246605 (D.N.J. Nov. 6, 2006) (race discrimination); Morgan v. United Parcel Service, 380 F.3d 459 (8th Cir. 2004) (racial discrimination). See also Keith N. Hylton & Vincent D. Rougeau, Lending Discrimination: Economic Theory, Econometric Evidence, and the Community Reinvestment Act, 85 Geo. L.J. 237, 238 (1996) (“regression analysis is probably the best empirical tool for uncovering discrimination”).

6. E.g., United States v. Brown Univ., 805 F. Supp. 288 (E.D. Pa. 1992) (price fixing of college scholarships), rev’d, 5 F.3d 658 (3d Cir. 1993); Petruzzi’s IGA Supermarkets, Inc. v. Darling-Delaware Co., 998 F.2d 1224 (3d Cir.), cert. denied, 510 U.S. 994 (1993); Ohio v. Louis Trauth Dairy, Inc., 925 F. Supp. 1247 (S.D. Ohio 1996); In re Chicken Antitrust Litig., 560 F. Supp. 963, 993 (N.D. Ga. 1980); New York v. Kraft Gen. Foods, Inc., 926 F. Supp. 321 (S.D.N.Y. 1995); Freeland v. AT&T, 238 F.R.D. 130 (S.D.N.Y. 2006); In re Pressure Sensitive Labelstock Antitrust Litig., 2007 U.S. Dist. LEXIS 85466 (M.D. Pa. Nov. 19, 2007); In re Linerboard Antitrust Litig., 497 F. Supp. 2d 666 (E.D. Pa. 2007) (price fixing by manufacturers of corrugated boards and boxes); In re Polypropylene Carpet Antitrust Litig., 93 F. Supp. 2d 1348 (N.D. Ga. 2000); In re OSB Antitrust Litig., 2007 WL 2253418 (E.D. Pa. Aug. 3, 2007) (price fixing of Oriented Strand Board, also known as “waferboard”); In re TFT-LCD (Flat Panel) Antitrust Litig., 267 F.R.D. 583 (N.D. Cal. 2010).

For a broad overview of the use of regression methods in antitrust, see ABA Antitrust Section, Econometrics: Legal, Practical and Technical Issues (John Harkrider & Daniel Rubinfeld, eds. 2005). See also Jerry Hausman et al., Competitive Analysis with Differenciated Products, 34 Annales D’Économie et de Statistique 159 (1994); Gregory J. Werden, Simulating the Effects of Differentiated Products Mergers: A Practical Alternative to Structural Merger Policy, 5 Geo. Mason L. Rev. 363 (1997).

7. In antitrust, the circuits are currently split as to the extent to which plaintiffs must prove that common elements predominate over individual elements. E.g., compare In Re Hydrogen Peroxide Litig., 552 F.3d 305 (3d Cir. 2008) with In Re Cardizem CD Antitrust Litig., 391 F.3d 812 (6th Cir. 2004). For a discussion of the use of multiple regression in evaluating class certification, see Bret M. Dickey & Daniel L. Rubinfeld, Antitrust Class Certification: Towards an Economic Framework, 66 N.Y.U. Ann. Surv. Am. L. 459 (2010) and John H. Johnson & Gregory K. Leonard, Economics and the Rigorous Analysis of Class Certification in Antitrust Cases, 3 J. Competition L. & Econ. 341 (2007).

8. See, e.g., City of New York v. U.S. Dep’t of Commerce, 822 F. Supp. 906 (E.D.N.Y. 1993) (decision of Secretary of Commerce not to adjust the 1990 census was not arbitrary and capricious), vacated, 34 F.3d 1114 (2d Cir. 1994) (applying heightened scrutiny), rev’d sub nom. Wisconsin v. City of New York, 517 U.S. 565 (1996); Carey v. Klutznick, 508 F. Supp. 420, 432–33 (S.D.N.Y. 1980) (use of reasonable and scientifically valid statistical survey or sampling procedures to adjust census figures for the differential undercount is constitutionally permissible), stay granted, 449 U.S. 1068 (1980), rev’d on other grounds, 653 F.2d 732 (2d Cir. 1981), cert. denied, 455 U.S. 999 (1982); Young v. Klutznick, 497 F. Supp. 1318, 1331 (E.D. Mich. 1980), rev’d on other grounds, 652 F.2d 617 (6th Cir. 1981), cert. denied, 455 U.S. 939 (1982).

9. Multiple regression analysis was used in suits charging that at-large areawide voting was instituted to neutralize black voting strength, in violation of section 2 of the Voting Rights Act, 42 U.S.C. § 1973 (1988). Multiple regression demonstrated that the race of the candidates and that of the electorate were determinants of voting. See Williams v. Brown, 446 U.S. 236 (1980); Rodriguez v. Pataki, 308 F. Supp. 2d 346, 414 (S.D.N.Y. 2004); United States v. Vill. of Port Chester, 2008 U.S. Dist. LEXIS 4914 (S.D.N.Y. Jan. 17, 2008); Meza v. Galvin, 322 F. Supp. 2d 52 (D. Mass. 2004) (violation of VRA with regard to Hispanic voters in Boston); Bone Shirt v. Hazeltine, 336 F. Supp. 2d 976 (D.S.D. 2004) (violations of VRA with regard to Native American voters in South Dakota); Georgia v. Ashcroft, 195 F. Supp. 2d 25 (D.D.C. 2002) (redistricting of Georgia’s state and federal legislative districts); Benavidez v. City of Irving, 638 F. Supp. 2d 709 (N.D. Tex. 2009) (challenge of city’s at-large voting scheme). For commentary on statistical issues in voting rights cases, see, e.g., Statistical and Demographic Issues Underlying Voting Rights Cases, 15 Evaluation Rev. 659 (1991); Stephen P. Klein et al., Ecological Regression Versus the Secret Ballot, 31 Jurimetrics J. 393 (1991); James W. Loewen & Bernard Grofman, Recent Developments in Methods Used in Vote Dilution Litigation, 21 Urb. Law. 589 (1989); Arthur Lupia & Kenneth McCue, Why the 1980s Measures of Racially Polarized Voting Are Inadequate for the 1990s, 12 Law & Pol’y 353 (1990).

10. See, e.g., Gregg v. Georgia, 428 U.S. 153, 184–86 (1976). For critiques of the validity of the deterrence analysis, see National Research Council, Deterrence and Incapacitation: Estimating the Effects of Criminal Sanctions on Crime Rates (Alfred Blumstein et al. eds., 1978); Richard O. Lempert, Desert and Deterrence: An Assessment of the Moral Bases of the Case for Capital Punishment, 79 Mich. L. Rev. 1177 (1981); Hans Zeisel, The Deterrent Effect of the Death Penalty: Facts v. Faith, 1976 Sup. Ct. Rev. 317; and John Donohue & Justin Wolfers, Uses and Abuses of Statistical Evidence in the Death Penalty Debate, 58 Stan. L. Rev. 787 (2005).

11. See, e.g., Time Warner Entertainment Co. v. FCC, 56 F.3d 151 (D.C. Cir. 1995) (challenge to FCC’s application of multiple regression analysis to set cable rates), cert. denied, 516 U.S. 1112 (1996); Appalachian Power Co. v. EPA, 135 F.3d 791 (D.C. Cir. 1998) (challenging the EPA’s application of regression analysis to set nitrous oxide emission limits); Consumers Util. Rate Advocacy Div. v. Ark. PSC, 99 Ark. App. 228 (Ark. Ct. App. 2007) (challenging an increase in nongas rates).

12. See Polaroid Corp. v. Eastman Kodak Co., No. 76-1634-MA, 1990 WL 324105, at *29, *62–63 (D. Mass. Oct. 12, 1990) (damages awarded because of patent infringement), amended by No.

Multiple regression analysis can be a source of valuable scientific testimony in litigation. However, when inappropriately used, regression analysis can confuse important issues while having little, if any, probative value. In EEOC v. Sears, Roebuck & Co.,13 in which Sears was charged with discrimination against women in hiring practices, the Seventh Circuit acknowledged that “[m]ultiple regression analyses, designed to determine the effect of several independent variables on a dependent variable, which in this case is hiring, are an accepted and common method of proving disparate treatment claims.”14 However, the court affirmed the district court’s findings that the “E.E.O.C.’s regression analyses did not ‘accurately reflect Sears’ complex, nondiscriminatory decision-making processes’” and that the “‘E.E.O.C.’s statistical analyses [were] so flawed that they lack[ed] any persuasive value.’”15 Serious questions also have been raised about the use of multiple regression analysis in census undercount cases and in death penalty cases.16

The Supreme Court’s rulings in Daubert and Kumho Tire have encouraged parties to raise questions about the admissibility of multiple regression analyses.17 Because multiple regression is a well-accepted scientific methodology, courts have frequently admitted testimony based on multiple regression studies, in some cases over the strong objection of one of the parties.18 However, on some occasions courts have excluded expert testimony because of a failure to utilize a multiple regression methodology.19 On other occasions, courts have rejected regression

76-1634-MA, 1991 WL 4087 (D. Mass. Jan. 11, 1991); Estate of Vane v. The Fair, Inc., 849 F.2d 186, 188 (5th Cir. 1988) (lost profits were the result of copyright infringement), cert. denied, 488 U.S. 1008 (1989); Louis Vuitton Malletier v. Dooney & Bourke, Inc., 525 F. Supp. 2d 576, 664 (S.D.N.Y. 2007) (trademark infringement and unfair competition suit). The use of multiple regression analysis to estimate damages has been contemplated in a wide variety of contexts. See, e.g., David Baldus et al., Improving Judicial Oversight of Jury Damages Assessments: A Proposal for the Comparative Additur/Remittitur Review of Awards for Nonpecuniary Harms and Punitive Damages, 80 Iowa L. Rev. 1109 (1995); Talcott J. Franklin, Calculating Damages for Loss of Parental Nurture Through Multiple Regression Analysis, 52 Wash. & Lee L. Rev. 271 (1997); Roger D. Blair & Amanda Kay Esquibel, Yardstick Damages in Lost Profit Cases: An Econometric Approach, 72 Denv. U. L. Rev. 113 (1994). Daniel Rubinfeld, Quantitative Methods in Antitrust, in 1 Issues in Competition Law and Policy 723 (2008).

13. 839 F.2d 302 (7th Cir. 1988).

14. Id. at 324 n.22.

15. Id. at 348, 351 (quoting EEOC v. Sears, Roebuck & Co., 628 F. Supp. 1264, 1342, 1352 (N.D. Ill. 1986)). The district court commented specifically on the “severe limits of regression analysis in evaluating complex decision-making processes.” 628 F. Supp. at 1350.

16. See David H. Kaye & David A. Freedman, Reference Guide on Statistics, Sections II.A.3, B.1, in this manual.

17. Daubert v. Merrell Dow Pharms., Inc., 509 U.S. 579 (1993); Kumho Tire Co. v. Carmichael, 526 U.S. 137, 147 (1999) (expanding Daubert’s application to nonscientific expert testimony).

18. See Newport Ltd. v. Sears, Roebuck & Co., 1995 U.S. Dist. LEXIS 7652 (E.D. La. May 26, 1995). See also Petruzzi’s IGA Supermarkets, supra note 6, 998 F.2d at 1240, 1247 (finding that the district court abused its discretion in excluding multiple regression-based testimony and reversing the grant of summary judgment to two defendants).

19. See, e.g., In re Executive Telecard Ltd. Sec. Litig., 979 F. Supp. 1021 (S.D.N.Y. 1997).

studies that did not have an adequate foundation or research design with respect to the issues at hand.20

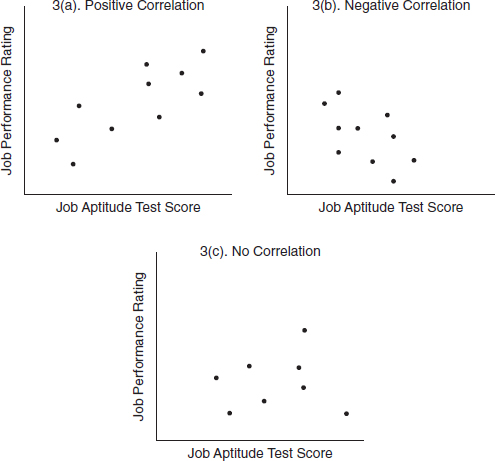

In interpreting the results of a multiple regression analysis, it is important to distinguish between correlation and causality. Two variables are correlated—that is, associated with each other—when the events associated with the variables occur more frequently together than one would expect by chance. For example, if higher salaries are associated with a greater number of years of work experience, and lower salaries are associated with fewer years of experience, there is a positive correlation between salary and number of years of work experience. However, if higher salaries are associated with less experience, and lower salaries are associated with more experience, there is a negative correlation between the two variables.

A correlation between two variables does not imply that one event causes the second. Therefore, in making causal inferences, it is important to avoid spurious correlation.21 Spurious correlation arises when two variables are closely related but bear no causal relationship because they are both caused by a third, unexamined variable. For example, there might be a negative correlation between the age of certain skilled employees of a computer company and their salaries. One should not conclude from this correlation that the employer has necessarily discriminated against the employees on the basis of their age. A third, unexamined variable, such as the level of the employees’ technological skills, could explain differences in productivity and, consequently, differences in salary.22 Or, consider a patent infringement case in which increased sales of an allegedly infringing product are associated with a lower price of the patented product.23 This correlation would be spurious if the two products have their own noncompetitive market niches and the lower price is the result of a decline in the production costs of the patented product.
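The age discrimination example can be made concrete with a minimal sketch. The eight employees and all of their figures are invented, and the data were constructed so that salary depends on skill alone. The sketch compares the raw correlation between age and salary with the correlation that remains after the part of each variable explained by skill has been removed (a partial correlation); the helper names are assumptions made for illustration.

```python
# Hypothetical employees; every figure is invented for illustration.
skill = [9, 8, 7, 6, 5, 4, 3, 2]             # technological skill score
age = [26, 26, 30, 38, 42, 42, 46, 54]       # years
salary = [87, 78, 77, 68, 63, 62, 53, 52]    # thousands of dollars


def correlation(x, y):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    s_xx = sum((a - x_bar) ** 2 for a in x)
    s_yy = sum((b - y_bar) ** 2 for b in y)
    return s_xy / (s_xx * s_yy) ** 0.5


def residuals(y, x):
    """What is left of y after a least squares fit of y on x."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    s_xy = sum((a - x_bar) * (b - y_bar) for a, b in zip(x, y))
    s_xx = sum((a - x_bar) ** 2 for a in x)
    slope = s_xy / s_xx
    return [b - (y_bar + slope * (a - x_bar)) for a, b in zip(x, y)]


# Raw association between age and salary, ignoring skill.
raw = correlation(age, salary)

# Association between age and salary once skill is accounted for.
partial = correlation(residuals(age, skill), residuals(salary, skill))

print(round(raw, 2), round(partial, 2))
```

In this constructed example the raw correlation is strongly negative (about -0.96), yet the partial correlation is zero: older employees earn less only because, in these invented data, they have lower skill scores. Real data will rarely separate so cleanly.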

Pointing to the possibility of a spurious correlation will typically not be enough to dispose of a statistical argument. It may be appropriate to give little weight to such an argument absent a showing that the correlation is relevant. For example, a statistical showing of a relationship between technological skills

20. See City of Tuscaloosa v. Harcros Chemicals, Inc., 158 F.3d 548 (11th Cir. 1998), in which the court ruled plaintiffs’ regression-based expert testimony inadmissible and granted summary judgment to the defendants. See also American Booksellers Ass’n v. Barnes & Noble, Inc., 135 F. Supp. 2d 1031, 1041 (N.D. Cal. 2001), in which a model was said to contain “too many assumptions and simplifications that are not supported by real-world evidence,” and Obrey v. Johnson, 400 F.3d 691 (9th Cir. 2005).

21. See David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section V.B.3, in this manual.

22. See, e.g., Sheehan v. Daily Racing Form Inc., 104 F.3d 940, 942 (7th Cir.) (rejecting plaintiff’s age discrimination claim because statistical study showing correlation between age and retention ignored the “more than remote possibility that age was correlated with a legitimate job-related qualification”), cert. denied, 521 U.S. 1104 (1997).

23. In some particular cases, there are statistical tests that allow one to reject claims of causality. For a brief description of these tests, which were developed by Jerry Hausman, see Robert S. Pindyck & Daniel L. Rubinfeld, Econometric Models and Economic Forecasts § 7.5 (4th ed. 1997).

and worker productivity might be required in the age discrimination example, above.24

Causality cannot be inferred by data analysis alone; rather, one must infer that a causal relationship exists on the basis of an underlying causal theory that explains the relationship between the two variables. Even when an appropriate theory has been identified, causality can never be inferred directly. One must also look for empirical evidence that there is a causal relationship. Conversely, the fact that two variables are correlated does not guarantee the existence of a relationship; it could be that the model—a characterization of the underlying causal theory—does not reflect the correct interplay among the explanatory variables. In fact, the absence of correlation does not guarantee that a causal relationship does not exist. Lack of correlation could occur if (1) there are insufficient data, (2) the data are measured inaccurately, (3) the data do not allow multiple causal relationships to be sorted out, or (4) the model is specified wrongly because of the omission of a variable or variables that are related to the variable of interest.

There is a tension between any attempt to reach conclusions with near certainty and the inherently uncertain nature of multiple regression analysis. In general, the statistical analysis associated with multiple regression allows for the expression of uncertainty in terms of probabilities. The reality that statistical analysis generates probabilities concerning relationships rather than certainty should not be seen in itself as an argument against the use of statistical evidence, or worse, as a reason to not admit that there is uncertainty at all. The only alternative might be to use less reliable anecdotal evidence.

This reference guide addresses a number of procedural and methodological issues that are relevant in considering the admissibility of, and weight to be accorded to, the findings of multiple regression analyses. It also suggests some standards of reporting and analysis that an expert presenting multiple regression analyses might be expected to meet. Section II discusses research design—how the multiple regression framework can be used to sort out alternative theories about a case. The guide discusses the importance of choosing the appropriate specification of the multiple regression model and raises the issue of whether multiple regression is appropriate for the case at issue. Section III accepts the regression framework and concentrates on the interpretation of the multiple regression results from both a statistical and a practical point of view. It emphasizes the distinction between regression results that are statistically significant and results that are meaningful to the trier of fact. It also points to the importance of evaluating the robustness

24. See, e.g., Allen v. Seidman, 881 F.2d 375 (7th Cir. 1989) (judicial skepticism was raised when the defendant did not submit a logistic regression incorporating an omitted variable—the possession of a higher degree or special education; defendant’s attack on statistical comparisons must also include an analysis that demonstrates that comparisons are flawed). The appropriate requirements for the defendant’s showing of spurious correlation could, in general, depend on the discovery process. See, e.g., Boykin v. Georgia Pac. Co., 706 F.2d 1384 (1983) (criticism of a plaintiff’s analysis for not including omitted factors, when plaintiff considered all information on an application form, was inadequate).

of regression analyses, i.e., seeing the extent to which the results are sensitive to changes in the underlying assumptions of the regression model. Section IV briefly discusses the qualifications of experts and suggests a potentially useful role for court-appointed neutral experts. Section V emphasizes procedural aspects associated with use of the data underlying regression analyses. It encourages greater pretrial efforts by the parties to attempt to resolve disputes over statistical studies.

Throughout the main body of this guide, hypothetical examples are used as illustrations. Moreover, the basic “mathematics” of multiple regression has been kept to a bare minimum. To achieve that goal, the more formal description of the multiple regression framework has been placed in the Appendix. The Appendix is self-contained and can be read before or after the text. The Appendix also includes further details with respect to the examples used in the body of this guide.

II. Research Design: Model Specification

Multiple regression allows the testifying economist or other expert to choose among alternative theories or hypotheses and assists the expert in distinguishing correlations between variables that are plainly spurious from those that may reflect valid relationships.

A. What Is the Specific Question That Is Under Investigation by the Expert?

Research begins with a clear formulation of a research question. The data to be collected and analyzed must relate directly to this question; otherwise, appropriate inferences cannot be drawn from the statistical analysis. For example, if the question at issue in a patent infringement case is what price the plaintiff’s product would have been but for the sale of the defendant’s infringing product, sufficient data must be available to allow the expert to account statistically for the important factors that determine the price of the product.

B. What Model Should Be Used to Evaluate the Question at Issue?

Model specification involves several steps, each of which is fundamental to the success of the research effort. Ideally, a multiple regression analysis builds on a theory that describes the variables to be included in the study. A typical regression model will include one or more dependent variables, each of which is believed to be causally related to a series of explanatory variables. Because we cannot be certain that the explanatory variables are themselves unaffected by, or independent of, the influence of the dependent variable (at least at the point of initial study), the explanatory variables are often termed covariates. Covariates are known to have an association with the dependent or outcome variable, but causality remains an open question.

For example, the theory of labor markets might lead one to expect salaries in an industry to be related to workers’ experience and the productivity of workers’ jobs. A belief that there is job discrimination would lead one to create a model in which the dependent variable was a measure of workers’ salaries and the list of covariates included a variable reflecting discrimination in addition to measures of job training and experience.

In a perfect world, the analysis of the job discrimination (or any other) issue might be accomplished through a controlled “natural experiment,” in which employees would be randomly assigned to a variety of employers in an industry under study and asked to fill positions requiring identical experience and skills. In this observational study, where the only difference in salaries could be a result of discrimination, it would be possible to draw clear and direct inferences from an analysis of salary data. Unfortunately, the opportunity to conduct observational studies of this kind is rarely available to experts in the context of legal proceedings. In the real world, experts must do their best to interpret the results of real-world “quasi-experiments,” in which it is impossible to control all factors that might affect worker salaries or other variables of interest.25

Models are often characterized in terms of parameters—numerical characteristics of the model. In the labor market discrimination example, one parameter might reflect the increase in salary associated with each additional year of prior job experience. Another parameter might reflect the reduction in salary associated with a lack of current on-the-job experience. Multiple regression uses a sample, or a selection of data, from the population (all the units of interest) to obtain estimates of the values of the parameters of the model. An estimate associated with a particular explanatory variable is an estimated regression coefficient.
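The distinction between a population parameter and its estimate from a sample can be sketched as follows. The ten workers and their salaries are invented, the model is simplified to a single explanatory variable (years of prior experience), and the helper name `fit_line` is an assumption made for illustration.

```python
# Hypothetical population of ten workers; all figures are invented.
experience = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]          # years of prior experience
salary = [33, 33, 35, 39, 41, 41, 43, 47, 48, 50]      # thousands of dollars


def fit_line(x, y):
    """Return the least squares (intercept, slope) for y = a + b * x."""
    n = len(x)
    x_bar = sum(x) / n
    y_bar = sum(y) / n
    s_xx = sum((xi - x_bar) ** 2 for xi in x)
    s_xy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = s_xy / s_xx
    return y_bar - slope * x_bar, slope


# Parameter: the slope computed from the entire population.
pop_intercept, pop_slope = fit_line(experience, salary)

# Sample: every other worker. The slope fitted to the sample is the
# estimated regression coefficient for experience.
sample_x = experience[::2]
sample_y = salary[::2]
est_intercept, est_slope = fit_line(sample_x, sample_y)

print(pop_slope, est_slope)
```

In the full population each additional year of experience is associated with a salary that is 2 (thousand) higher; the five-worker sample yields an estimate of 1.9. The gap between the two is the kind of sampling variation that standard errors are meant to quantify.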

Failure to develop the proper theory, failure to choose the appropriate variables, or failure to choose the correct form of the model can substantially bias the statistical results—that is, create a systematic tendency for an estimate of a model parameter to be too high or too low.
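The bias that results from failing to choose the appropriate variables can be shown with a minimal sketch. The eight workers are hypothetical, and the salaries were constructed to depend on experience only, with no effect of sex. The sketch uses the closed-form least squares solution for a model with two explanatory variables; the variable and helper names are assumptions made for illustration.

```python
# Hypothetical workers; salaries were constructed to depend on experience only.
female = [0, 0, 0, 0, 1, 1, 1, 1]              # 1 = female, 0 = male
experience = [10, 12, 14, 16, 4, 6, 8, 10]     # years
salary = [51, 53, 57, 63, 39, 41, 45, 51]      # thousands of dollars


def centered(values):
    """Subtract the mean from every value."""
    mean = sum(values) / len(values)
    return [v - mean for v in values]


def dot(u, v):
    """Sum of products of paired elements."""
    return sum(a * b for a, b in zip(u, v))


f, x, y = centered(female), centered(experience), centered(salary)

# Misspecified model: salary explained by sex alone (experience omitted).
short_sex = dot(f, y) / dot(f, f)

# Fuller model: salary explained by sex and experience together.
det = dot(f, f) * dot(x, x) - dot(f, x) ** 2
long_sex = (dot(x, x) * dot(f, y) - dot(f, x) * dot(x, y)) / det
long_exp = (dot(f, f) * dot(x, y) - dot(f, x) * dot(f, y)) / det

print(short_sex, long_sex, long_exp)
```

When experience is omitted, the coefficient on sex is -12, which would suggest a large salary shortfall for women. Once experience is included, the coefficient on sex is 0 and each year of experience is associated with a salary that is 2 (thousand) higher. The shortfall in the first model reflects only the fact that, in these invented data, the women have less experience. An omitted variable can equally bias an estimate in the opposite direction.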

1. Choosing the dependent variable

The variable to be explained, the dependent variable, should be the appropriate variable for analyzing the question at issue.26 Suppose, for example, that pay discrimination among hourly workers is a concern. One choice for the dependent variable is the hourly wage rate of the employees; another choice is the annual salary. The distinction is important, because annual salary differences may in part result from differences in hours worked. If the number of hours worked is the product of worker preferences and not discrimination, the hourly wage is a good choice. If the number of hours worked is related to the alleged discrimination, annual salary is the more appropriate dependent variable to choose.27

25. In the literature on natural and quasi-experiments, the explanatory variables are characterized as “treatments” and the dependent variable as the “outcome.” For a review of natural experiments in the criminal justice arena, see David P. Farrington, A Short History of Randomized Experiments in Criminology, 27 Evaluation Rev. 218–27 (2003).

26. In multiple regression analysis, the dependent variable is usually a continuous variable that takes on a range of numerical values. When the dependent variable is categorical, taking on only two or three values, modified forms of multiple regression, such as probit analysis or logit analysis, are

2. Choosing the explanatory variable that is relevant to the question at issue

The explanatory variable that allows the evaluation of alternative hypotheses must be chosen appropriately. Thus, in a discrimination case, the variable of interest may be the race or sex of the individual. In an antitrust case, it may be a variable that takes on the value 1 to reflect the presence of the alleged anticompetitive behavior and the value 0 otherwise.28
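A variable that takes on the values 1 and 0 is often called an indicator or dummy variable. The following minimal sketch shows one way such variables might be constructed; the quarters, the alleged cartel period, and the employee records are all hypothetical.

```python
# Hypothetical antitrust example: quarterly observations and an alleged
# cartel period. All labels and dates are invented for illustration.
quarters = ["2004Q1", "2004Q2", "2004Q3", "2004Q4", "2005Q1", "2005Q2"]
cartel_start, cartel_end = "2004Q3", "2005Q1"

# 1 while the alleged anticompetitive behavior is present, 0 otherwise.
# Plain string comparison works only because these labels sort chronologically.
cartel = [1 if cartel_start <= q <= cartel_end else 0 for q in quarters]

# Hypothetical discrimination example: sex recorded as text for each employee.
sex = ["F", "M", "F", "M"]
female = [1 if s == "F" else 0 for s in sex]

print(cartel)
print(female)
```

Here `cartel` is `[0, 0, 1, 1, 1, 0]` and `female` is `[1, 0, 1, 0]`. In a regression, the coefficient on such a variable measures the average difference in the dependent variable between the two groups of observations, holding the other explanatory variables constant.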

3. Choosing the additional explanatory variables

An attempt should be made to identify additional known or hypothesized explanatory variables, some of which are measurable and may support alternative substantive hypotheses that can be accounted for by the regression analysis. Thus, in a discrimination case, a measure of the skills of the workers may provide an alternative explanation—lower salaries may have been the result of inadequate skills.29

appropriate. For an example of the use of the latter, see EEOC v. Sears, Roebuck & Co., 839 F.2d 302, 325 (7th Cir. 1988) (EEOC used logit analysis to measure the impact of variables, such as age, education, job-type experience, and product-line experience, on the female percentage of commission hires).

27. In job systems in which annual salaries are tied to grade or step levels, the annual salary corresponding to the job position could be more appropriate.

28. Explanatory variables may vary by type, which will affect the interpretation of the regression results. Thus, some variables may be continuous and others may be categorical.

29. In James v. Stockham Valves, 559 F.2d 310 (5th Cir. 1977), the Court of Appeals rejected the employer’s claim that skill level rather than race determined assignment and wage levels, noting the circularity of defendant’s argument. In Ottaviani v. State University of New York, 679 F. Supp. 288, 306–08 (S.D.N.Y. 1988), aff’d, 875 F.2d 365 (2d Cir. 1989), cert. denied, 493 U.S. 1021 (1990), the court ruled (in the liability phase of the trial) that the university showed that there was no discrimination in either placement into initial rank or promotions between ranks, and so rank was a proper variable in multiple regression analysis to determine whether women faculty members were treated differently than men.

However, in Trout v. Garrett, 780 F. Supp. 1396, 1414 (D.D.C. 1991), the court ruled (in the damage phase of the trial) that the extent of civilian employees’ prehire work experience was not an appropriate variable in a regression analysis to compute back pay in employment discrimination. According to the court, including the prehire level would have resulted in a finding of no sex discrimination, despite a contrary conclusion in the liability phase of the action. Id. See also Stuart v. Roache, 951 F.2d 446 (1st Cir. 1991) (allowing only 3 years of seniority to be considered as the result of prior

Not all possible variables that might influence the dependent variable can be included if the analysis is to be successful; some cannot be measured, and others may make little difference.30 If a preliminary analysis shows the unexplained portion of the multiple regression to be unacceptably high, the expert may seek to discover whether some previously undetected variable is missing from the analysis.31

Failure to include a major explanatory variable that is correlated with the variable of interest in a regression model may cause an included variable to be credited with an effect that actually is caused by the excluded variable.32 In general, omitted variables that are correlated with the dependent variable reduce the probative value of the regression analysis. The importance of omitting a relevant variable depends on the strength of the relationship between the omitted variable and the dependent variable and the strength of the correlation between the omitted variable and the explanatory variables of interest. Other things being equal, the greater the correlation between the omitted variable and the variable of interest, the greater the bias caused by the omission. As a result, the omission of an important variable may lead to inferences made from regression analyses that do not assist the trier of fact.33
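The mechanics of omitted-variable bias can be illustrated with a small simulation. The sketch below is not drawn from any case; the variable names, coefficient values, and sample size are all assumptions chosen for illustration. It fits the same least-squares regression twice, once with and once without a variable that is correlated with the variable of interest.

```python
import numpy as np

rng = np.random.default_rng(0)  # fixed seed so the illustration is reproducible
n = 20_000                      # hypothetical sample size

# Hypothetical data: 'omitted' is correlated with 'interest' and also affects the outcome.
interest = rng.integers(0, 2, size=n).astype(float)   # 0/1 variable of interest
omitted = 1.0 - 0.8 * interest + rng.normal(0.0, 1.0, size=n)
outcome = 10.0 - 0.5 * interest + 2.0 * omitted + rng.normal(0.0, 1.0, size=n)


def ols(y, *columns):
    """Return least-squares coefficients for y on an intercept plus the given columns."""
    X = np.column_stack([np.ones_like(y), *columns])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


full = ols(outcome, interest, omitted)   # both variables included
short = ols(outcome, interest)           # correlated variable left out

print(f"coefficient on variable of interest, full model:  {full[1]:.2f}")
print(f"coefficient on variable of interest, short model: {short[1]:.2f}")
```

In this constructed example the true coefficient on the variable of interest is -0.5. The short regression credits that variable with the effect that actually operates through the omitted variable, so its estimate moves toward roughly -0.5 + 2.0 × (-0.8) = -2.1.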

discrimination), cert. denied, 504 U.S. 913 (1992). Whether a particular variable reflects “legitimate” considerations or itself reflects or incorporates illegitimate biases is a recurring theme in discrimination cases. See, e.g., Smith v. Virginia Commonwealth Univ., 84 F.3d 672, 677 (4th Cir. 1996) (en banc) (suggesting that whether “performance factors” should have been included in a regression analysis was a question of material fact); id. at 681–82 (Luttig, J., concurring in part) (suggesting that the failure of the regression analysis to include “performance factors” rendered it so incomplete as to be inadmissible); id. at 690–91 (Michael, J., dissenting) (suggesting that the regression analysis properly excluded “performance factors”); see also Diehl v. Xerox Corp., 933 F. Supp. 1157, 1168 (W.D.N.Y. 1996).

30. The summary effect of the excluded variables shows up as a random error term in the regression model, as does any modeling error. See Appendix, infra, for details. But see David W. Peterson, Reference Guide on Multiple Regression, 36 Jurimetrics J. 213, 214 n.2 (1996) (review essay) (asserting that “the presumption that the combined effect of the explanatory variables omitted from the model are uncorrelated with the included explanatory variables” is “a knife-edge condition…not likely to occur”).

31. A very low R-squared (R2) is one indication of an unexplained portion of the multiple regression model that is unacceptably high. However, the inference that one makes from a particular value of R2 will depend, of necessity, on the context of the particular issues under study and the particular dataset that is being analyzed. For reasons discussed in the Appendix, a low R2 does not necessarily imply a poor model (and vice versa).

32. Technically, the omission of explanatory variables that are correlated with the variable of interest can cause biased estimates of regression parameters.

33. See Bazemore v. Friday, 751 F.2d 662, 671–72 (4th Cir. 1984) (upholding the district court’s refusal to accept a multiple regression analysis as proof of discrimination by a preponderance of the evidence, the court of appeals stated that, although the regression used four variable factors (race, education, tenure, and job title), the failure to use other factors, including pay increases that varied by county, precluded their introduction into evidence), aff’d in part, vacated in part, 478 U.S. 385 (1986).

Note, however, that in Sobel v. Yeshiva University, 839 F.2d 18, 33, 34 (2d Cir. 1988), cert. denied, 490 U.S. 1105 (1989), the court made clear that “a [Title VII] defendant challenging the validity of

Omitting variables that are not correlated with the variable of interest is, in general, less of a concern, because the parameter that measures the effect of the variable of interest on the dependent variable is estimated without bias. Suppose, for example, that the effect of a policy introduced by the courts to encourage husbands to pay child support has been tested by randomly choosing some cases to be handled according to current court policies and other cases to be handled according to a new, more stringent policy. The effect of the new policy might be measured by a multiple regression using payment success as the dependent variable and a 0 or 1 explanatory variable (1 if the new program was applied; 0 if it was not). Failure to include an explanatory variable that reflected the age of the husbands involved in the program would not affect the court’s evaluation of the new policy, because men of any given age are as likely to be affected by the old policy as they are by the new policy. Randomly choosing the court’s policy to be applied to each case has ensured that the omitted age variable is not correlated with the policy variable.
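The child-support example can be sketched numerically. The simulation below uses invented numbers throughout (the payment measure, the size of the policy effect, and the age effect are all assumptions); it shows only that, when the policy is assigned at random, leaving age out of the regression leaves the estimated policy effect essentially unchanged.

```python
import numpy as np

rng = np.random.default_rng(1)  # fixed seed for reproducibility
n = 20_000                      # hypothetical number of cases

age = rng.uniform(20.0, 60.0, size=n)
new_policy = rng.integers(0, 2, size=n).astype(float)  # assigned at random, so unrelated to age
# Hypothetical outcome: share of ordered support actually paid.
paid_share = 0.20 + 0.15 * new_policy + 0.005 * age + rng.normal(0.0, 0.1, size=n)


def ols(y, *columns):
    """Return least-squares coefficients for y on an intercept plus the given columns."""
    X = np.column_stack([np.ones_like(y), *columns])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


with_age = ols(paid_share, new_policy, age)
without_age = ols(paid_share, new_policy)

print(f"policy effect, age included: {with_age[1]:.3f}")
print(f"policy effect, age omitted:  {without_age[1]:.3f}")
```

Both estimates should lie close to the assumed effect of 0.15, because random assignment keeps the omitted age variable uncorrelated with the policy variable.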

Bias caused by the omission of an important variable that is related to the included variables of interest can be a serious problem.34 Nonetheless, it is possible for the expert to account for bias qualitatively if the expert has knowledge (even if not quantifiable) about the relationship between the omitted variable and the explanatory variable. Suppose, for example, that the plaintiff’s expert in a sex discrimination pay case is unable to obtain quantifiable data that reflect the skills necessary for a job, and that, on average, women are more skillful than men. Suppose also that a regression analysis of the wage rate of employees (the dependent variable) on years of experience and a variable reflecting the sex of each employee (the explanatory variable) suggests that men are paid substantially more than women with the same experience. Because differences in skill levels have not been taken into account, the expert may conclude reasonably that the wage difference measured by the regression is a conservative estimate of the true discriminatory wage difference.

a multiple regression analysis [has] to make a showing that the factors it contends ought to have been included would weaken the showing of salary disparity made by the analysis,” by making a specific attack and “a showing of relevance for each particular variable it contends…ought to [be] includ[ed]” in the analysis, rather than by simply attacking the results of the plaintiffs’ proof as inadequate for lack of a given variable. See also Smith v. Virginia Commonwealth Univ., 84 F.3d 672 (4th Cir. 1996) (en banc) (finding that whether certain variables should have been included in a regression analysis is a question of fact that precludes summary judgment); Freeland v. AT&T, 238 F.R.D. 130, 145 (S.D.N.Y. 2006) (“Ordinarily, the failure to include a variable in a regression analysis will affect the probative value of the analysis and not its admissibility”).

Also, in Bazemore v. Friday, the Court, declaring that the Fourth Circuit’s view of the evidentiary value of the regression analyses was plainly incorrect, stated that “[n]ormally, failure to include variables will affect the analysis’ probativeness, not its admissibility. Importantly, it is clear that a regression analysis that includes less than ‘all measurable variables’ may serve to prove a plaintiff’s case.” 478 U.S. 385, 400 (1986) (footnote omitted).

34. See also David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section V.B.3, in this manual.

The precision of the measure of the effect of a variable of interest on the dependent variable is also important.35 In general, the more complete the explained relationship between the included explanatory variables and the dependent variable, the more precise the results. Note, however, that the inclusion of explanatory variables that are irrelevant (i.e., not correlated with the dependent variable) reduces the precision of the regression results. This can be a source of concern when the sample size is small, but it is not likely to be of great consequence when the sample size is large.

4. Choosing the functional form of the multiple regression model

Choosing the proper set of variables to be included in the multiple regression model does not complete the modeling exercise. The expert must also choose the proper form of the regression model. The most frequently selected form is the linear regression model (described in the Appendix). In this model, the magnitude of the change in the dependent variable associated with the change in any of the explanatory variables is the same no matter what the level of the explanatory variables. For example, one additional year of experience might add $5000 to salary, regardless of the previous experience of the employee.

In some instances, however, there may be reason to believe that changes in explanatory variables will have differential effects on the dependent variable as the values of the explanatory variables change. In these instances, the expert should consider the use of a nonlinear model. Failure to account for nonlinearities can lead to either overstatement or understatement of the effect of a change in the value of an explanatory variable on the dependent variable.
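The contrast between the linear form and a nonlinear alternative can be written out explicitly. The equations below are an illustrative sketch only: the quadratic specification is one of many possible nonlinear forms, and the symbols (the coefficients and the error term) are notation assumed here for illustration.

```latex
% Linear form: each additional year of experience adds the same amount, \beta_1.
\[
\text{Salary} = \beta_0 + \beta_1\,\text{Experience} + \varepsilon,
\qquad
\frac{\partial\,\text{Salary}}{\partial\,\text{Experience}} = \beta_1
\]

% One possible nonlinear form (quadratic): the effect depends on the level of experience.
\[
\text{Salary} = \beta_0 + \beta_1\,\text{Experience} + \beta_2\,\text{Experience}^2 + \varepsilon,
\qquad
\frac{\partial\,\text{Salary}}{\partial\,\text{Experience}} = \beta_1 + 2\beta_2\,\text{Experience}
\]
```

In the linear form with a coefficient of 5000 on experience, every additional year adds $5000 to predicted salary. In the quadratic form, a negative coefficient on the squared term would mean that each additional year adds less than the one before.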

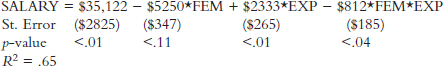

One particular type of nonlinearity involves the interaction among several variables. An interaction variable is the product of two other variables that are included in the multiple regression model. The interaction variable allows the expert to take into account the possibility that the effect of a change in one variable on the dependent variable may change as the level of another explanatory variable changes. For example, in a salary discrimination case, the inclusion of a term that interacts a variable measuring experience with a variable representing the sex of the employee (1 if a female employee; 0 if a male employee) allows the expert to test whether the sex differential varies with the level of experience. A significant negative estimate of the parameter associated with the sex variable suggests that inexperienced women are discriminated against, whereas a significant negative estimate of the interaction parameter suggests that the extent of discrimination increases with experience.36

35. A more precise estimate of a parameter is an estimate with a smaller standard error. See Appendix, infra, for details.

Note that insignificant coefficients in a model with interactions may suggest a lack of discrimination, whereas a model without interactions may suggest the contrary. It is especially important to account for interaction terms that could affect the determination of discrimination; failure to do so may lead to false conclusions concerning discrimination.
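A regression with an interaction term can be sketched as follows. The data are simulated, and every number (base salary, the experience effect, the sex coefficient, the interaction coefficient, the noise level) is an assumption made for illustration, not an estimate from any case. The sketch shows how the estimated sex differential at a given level of experience is assembled from the sex coefficient and the interaction coefficient.

```python
import numpy as np

rng = np.random.default_rng(3)  # fixed seed for reproducibility
n = 5_000                       # hypothetical number of employees

experience = rng.uniform(0.0, 20.0, size=n)
female = rng.integers(0, 2, size=n).astype(float)  # 1 if female, 0 if male

# Hypothetical salary process with a sex effect that grows with experience.
salary = (40_000 + 5_000 * experience - 2_000 * female
          - 500 * female * experience + rng.normal(0.0, 3_000.0, size=n))

# Design matrix: intercept, experience, sex, and the sex-by-experience interaction.
X = np.column_stack([np.ones(n), experience, female, female * experience])
coef, *_ = np.linalg.lstsq(X, salary, rcond=None)
b0, b_exp, b_female, b_inter = coef


def sex_differential(years):
    """Estimated female-minus-male salary difference at a given level of experience."""
    return b_female + b_inter * years


print(f"differential at 0 years:  {sex_differential(0):,.0f}")
print(f"differential at 10 years: {sex_differential(10):,.0f}")
```

A regression that left out the interaction column would report a single average differential and could not show whether the gap widens with experience.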

5. Choosing multiple regression as a method of analysis

There are many multivariate statistical techniques other than multiple regression that are useful in legal proceedings. Some statistical methods are appropriate when nonlinearities are important;37 others apply to models in which the dependent variable is discrete, rather than continuous.38 Still others have been applied predominantly to respond to methodological concerns arising in the context of discrimination litigation.39

It is essential that a valid statistical method be applied to assist with the analysis in each legal proceeding. Therefore, the expert should be prepared to explain why any chosen method, including multiple regression, was more suitable than the alternatives.

36. For further details concerning interactions, see the Appendix, infra. Note that in Ottaviani v. State University of New York, 875 F.2d 365, 367 (2d Cir. 1989), cert. denied, 493 U.S. 1021 (1990), the defendant relied on a regression model in which a dummy variable reflecting gender appeared as an explanatory variable. The female plaintiff, however, used an alternative approach in which a regression model was developed for men only (the alleged protected group). The salaries of women predicted by this equation were then compared with the actual salaries; a positive difference would, according to the plaintiff, provide evidence of discrimination. For an evaluation of the methodological advantages and disadvantages of this approach, see Joseph L. Gastwirth, A Clarification of Some Statistical Issues in Watson v. Fort Worth Bank and Trust, 29 Jurimetrics J. 267 (1989).

37. These techniques include, but are not limited to, piecewise linear regression, polynomial regression, maximum likelihood estimation of models with nonlinear functional relationships, and autoregressive and moving-average time-series models. See, e.g., Pindyck & Rubinfeld, supra note 23, at 117–21, 136–37, 273–84, 463–601.

38. For a discussion of probit analysis and logit analysis, techniques that are useful in the analysis of qualitative choice, see id. at 248–81.

39. The correct model for use in salary discrimination suits is a subject of debate among labor economists. As a result, some have begun to evaluate alternative approaches, including urn models (Bruce Levin & Herbert Robbins, Urn Models for Regression Analysis, with Applications to Employment Discrimination Studies, Law & Contemp. Probs., Autumn 1983, at 247) and, as a means of correcting for measurement errors, reverse regression (Delores A. Conway & Harry V. Roberts, Reverse Regression, Fairness, and Employment Discrimination, 1 J. Bus. & Econ. Stat. 75 (1983)). But see Arthur S. Goldberger, Redirecting Reverse Regressions, 2 J. Bus. & Econ. Stat. 114 (1984); Arlene S. Ash, The Perverse Logic of Reverse Regression, in Statistical Methods in Discrimination Litigation 85 (D.H. Kaye & Mikel Aickin eds., 1986).

III. Interpreting Multiple Regression Results

Multiple regression results can be interpreted in purely statistical terms, through the use of significance tests, or they can be interpreted in a more practical, nonstatistical manner. Although an evaluation of the practical significance of regression results is almost always relevant in the courtroom, tests of statistical significance are appropriate only in particular circumstances.

A. What Is the Practical, as Opposed to the Statistical, Significance of Regression Results?

Practical significance means that the magnitude of the effect being studied is not de minimis—it is sufficiently important substantively for the court to be concerned. For example, if the average wage rate is $10.00 per hour, a wage differential between men and women of $0.10 per hour is likely to be deemed practically insignificant because the differential represents only 1% ($0.10/$10.00) of the average wage rate.40 That same difference could be statistically significant, however, if a sufficiently large sample of men and women was studied.41 The reason is that statistical significance is determined, in part, by the number of observations in the dataset.

As a general rule, the statistical significance of the magnitude of a regression coefficient increases as the sample size increases. Thus, a $1.00 per hour wage differential between men and women that was determined to be insignificantly different from zero with a sample of 20 men and women could be highly significant if the sample size were increased to 200.
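The dependence of statistical significance on sample size can be seen in a simple calculation. The sketch below makes simplifying assumptions that are not in the text: a plain comparison of two group means (no other explanatory variables), equal numbers of men and women, and a common standard deviation of hourly wages of $2.50, a value invented for illustration.

```python
import math


def t_for_difference(diff, sd, n_total):
    """t-statistic for a difference in two group means.

    Assumes equal group sizes and a common standard deviation `sd`
    (simplifying assumptions made for this illustration).
    """
    n_group = n_total / 2
    standard_error = sd * math.sqrt(2 / n_group)
    return diff / standard_error


t_20 = t_for_difference(diff=1.00, sd=2.50, n_total=20)
t_200 = t_for_difference(diff=1.00, sd=2.50, n_total=200)

print(f"t-statistic with 20 workers:  {t_20:.2f}")
print(f"t-statistic with 200 workers: {t_200:.2f}")
```

Under these assumptions the same $1.00 differential yields a t-statistic below the conventional threshold of about 2 with 20 workers and well above it with 200, because the standard error shrinks with the square root of the sample size.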

Often, results that are practically significant are also statistically significant.42 However, it is possible with a large dataset to find statistically significant coefficients that are practically insignificant. Similarly, it is also possible (especially when the sample size is small) to obtain results that are practically significant but fail to achieve statistical significance. Suppose, for example, that an expert undertakes a damages study in a patent infringement case and predicts “but-for sales”—what sales would have been had the infringement not occurred—using data that predate the period of alleged infringement. If data limitations are such that only 3 or 4 years of preinfringement sales are known, the difference between but-for sales and actual sales during the period of alleged infringement could be practically significant but statistically insignificant. Alternatively, with only 3 or 4 data points, the expert would be unable to detect an effect, even if one existed.

40. There is no specific percentage threshold above which a result is practically significant. Practical significance must be evaluated in the context of a particular legal issue. See also David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section IV.B.2, in this manual.

41. Practical significance also can apply to the overall credibility of the regression results. Thus, in McCleskey v. Kemp, 481 U.S. 279 (1987), coefficients on race variables were statistically significant, but the Court declined to find them legally or constitutionally significant.

42. In Melani v. Board of Higher Education, 561 F. Supp. 769, 774 (S.D.N.Y. 1983), a Title VII suit was brought against the City University of New York (CUNY) for allegedly discriminating against female instructional staff in the payment of salaries. One approach of the plaintiff’s expert was to use multiple regression analysis. The coefficient on the variable that reflected the sex of the employee was approximately $1800 when all years of data were included. Practically (in terms of average wages at the time) and statistically (in terms of a 5% significance test), this result was significant. Thus, the court stated that “[p]laintiffs have produced statistically significant evidence that women hired as CUNY instructional staff since 1972 received substantially lower salaries than similarly qualified men.” Id. at 781 (emphasis added). For a related analysis involving multiple comparison, see Csicseri v. Bowsher,

1. When should statistical tests be used?

A test of a specific contention, a hypothesis test, often assists the court in determining whether a violation of the law has occurred in areas in which direct evidence is inaccessible or inconclusive. For example, an expert might use hypothesis tests in race and sex discrimination cases to determine the presence of a discriminatory effect.

Statistical evidence alone never can prove with absolute certainty the worth of any substantive theory. However, by providing evidence contrary to the view that a particular form of discrimination has not occurred, for example, the multiple regression approach can aid the trier of fact in assessing the likelihood that discrimination has occurred.43

Tests of hypotheses are appropriate in a cross-sectional analysis, in which the data underlying the regression study have been chosen as a sample of a population at a particular point in time, and in a time-series analysis, in which the data being evaluated cover a number of time periods. In either analysis, the expert may want to evaluate a specific hypothesis, usually relating to a question of liability or to the determination of whether there is measurable impact of an alleged violation. Thus, in a sex discrimination case, an expert may want to evaluate a null hypothesis of no discrimination against the alternative hypothesis that discrimination takes a particular form.44 Alternatively, in an antitrust damages proceeding, the expert may want to test a null hypothesis of no legal impact against the alternative hypothesis that there was an impact. In either type of case, it is important to realize that rejection of the null hypothesis does not in itself prove legal liability. It is possible to reject the null hypothesis and believe that an alternative explanation other than one involving legal liability accounts for the results.45

862 F. Supp. 547, 572 (D.D.C. 1994) (noting that plaintiff’s expert found “statistically significant instances of discrimination” in 2 of 37 statistical comparisons, but suggesting that “2 of 37 amounts to roughly 5% and is hardly indicative of a pattern of discrimination”), aff’d, 67 F.3d 972 (D.C. Cir. 1995).

43. See International Brotherhood of Teamsters v. United States, 431 U.S. 324 (1977) (the Court inferred discrimination from overwhelming statistical evidence by a preponderance of the evidence); Ryther v. KARE 11, 108 F.3d 832, 844 (8th Cir. 1997) (“The plaintiff produced overwhelming evidence as to the elements of a prima facie case, and strong evidence of pretext, which, when considered with indications of age-based animus in [plaintiff’s] work environment, clearly provide sufficient evidence as a matter of law to allow the trier of fact to find intentional discrimination.”); Paige v. California, 291 F.3d 1141 (9th Cir. 2002) (allowing plaintiffs to rely on aggregated data to show employment discrimination).

Often, the null hypothesis is stated in terms of a particular regression coefficient being equal to 0. For example, in a wage discrimination case, the null hypothesis would be that there is no wage difference between sexes. If a negative difference is observed (meaning that women are found to earn less than men, after the expert has controlled statistically for legitimate alternative explanations), the difference is evaluated as to its statistical significance using the t-test.46 The t-test uses the t-statistic to evaluate the hypothesis that a model parameter takes on a particular value, usually 0.
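The t-statistic described above can be written compactly. The notation is assumed here for illustration: b is the estimated coefficient, SE(b) is its standard error, and β* is the value of the parameter under the null hypothesis.

```latex
\[
t = \frac{b - \beta^{*}}{\mathrm{SE}(b)},
\qquad \text{and when } \beta^{*} = 0, \quad t = \frac{b}{\mathrm{SE}(b)}
\]
```

A large absolute value of t indicates that the estimated coefficient lies many standard errors away from the hypothesized value, which is evidence against the null hypothesis.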

2. What is the appropriate level of statistical significance?

In most scientific work, the level of statistical significance required to reject the null hypothesis (i.e., to obtain a statistically significant result) is set conventionally at 0.05, or 5%.47 The significance level measures the probability that the null hypothesis will be rejected incorrectly, that is, rejected when it is in fact true. In general, the lower the percentage required for statistical significance, the more difficult it is to reject the null hypothesis; therefore, the lower the probability that one will err in doing so. Although the 5% criterion is typical, reporting of more stringent 1% significance tests or less stringent 10% tests can also provide useful information.

In doing a statistical test, it is useful to compute an observed significance level, or p-value. The p-value associated with the null hypothesis that a regression coefficient is 0 is the probability that a coefficient of this magnitude or larger could have occurred by chance if the null hypothesis were true. If the p-value were less than or equal to 5%, the expert would reject the null hypothesis in favor of the alternative hypothesis; if the p-value were greater than 5%, the expert would fail to reject the null hypothesis.48

44. Tests are also appropriate when comparing the outcomes of a set of employer decisions with those that would have been obtained had the employer chosen differently from among the available options.

45. See David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section IV.C.5, in this manual.

46. The t-test is strictly valid only if a number of important assumptions hold. However, for many regression models, the test is approximately valid if the sample size is sufficiently large. See Appendix, infra, for a more complete discussion of the assumptions underlying multiple regression.

47. See, e.g., Palmer v. Shultz, 815 F.2d 84, 92 (D.C. Cir. 1987) (“‘the .05 level of significance…[is] certainly sufficient to support an inference of discrimination’” (quoting Segar v. Smith, 738 F.2d 1249, 1283 (D.C. Cir. 1984), cert. denied, 471 U.S. 1115 (1985))); United States v. Delaware, 2004 U.S. Dist. LEXIS 4560 (D. Del. Mar. 22, 2004) (stating that .05 is the normal standard chosen).

3. Should statistical tests be one-tailed or two-tailed?

When the expert evaluates the null hypothesis that a variable of interest has no linear association with a dependent variable against the alternative hypothesis that there is an association, a two-tailed test, which allows for the effect to be either positive or negative, is usually appropriate. A one-tailed test would usually be applied when the expert believes, perhaps on the basis of other direct evidence presented at trial, that the alternative hypothesis is either positive or negative, but not both. For example, an expert might use a one-tailed test in a patent infringement case if he or she strongly believes that the effect of the alleged infringement on the price of the infringed product was either zero or negative. (The sales of the infringing product competed with the sales of the infringed product, thereby lowering the price.) By using a one-tailed test, the expert is in effect stating that prior to looking at the data it would be very surprising if the data pointed in the direction opposite to the one posited by the expert.

Because a one-tailed test produces p-values that are one-half the size of the corresponding two-tailed p-values (when the estimated effect lies in the hypothesized direction), the choice of a one-tailed test makes it easier for the expert to reject a null hypothesis. Correspondingly, the choice of a two-tailed test makes null hypothesis rejection less likely. Because there is some arbitrariness involved in the choice of an alternative hypothesis, courts should avoid relying solely on sharply defined statistical tests.49 Reporting the p-value or a confidence interval should be encouraged because it conveys useful information to the court, whether or not a null hypothesis is rejected.
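The relationship between one-tailed and two-tailed p-values can be checked with a short calculation. The sketch below uses a standard normal approximation to the t-distribution, which is an assumption that is reasonable only for large samples, and a test statistic of 1.8 chosen purely for illustration. The function name is hypothetical.

```python
import math


def p_values(t_stat):
    """Return (one_tailed, two_tailed) p-values under a standard normal approximation.

    The one-tailed value is for the upper tail, i.e., the alternative
    hypothesis that the coefficient is positive.
    """
    one_tailed = 0.5 * math.erfc(t_stat / math.sqrt(2))
    two_tailed = math.erfc(abs(t_stat) / math.sqrt(2))
    return one_tailed, two_tailed


one_tailed, two_tailed = p_values(1.8)

print(f"one-tailed p-value: {one_tailed:.3f}")
print(f"two-tailed p-value: {two_tailed:.3f}")
print("reject at 5% (one-tailed):", one_tailed <= 0.05)
print("reject at 5% (two-tailed):", two_tailed <= 0.05)
```

With this test statistic the one-tailed p-value falls below 5% while the two-tailed p-value does not, so the same estimate is "significant" under one choice and not under the other. That sensitivity is one reason to report the p-value itself.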

48. The use of 1%, 5%, and, sometimes, 10% levels for determining statistical significance remains a subject of debate. One might argue, for example, that when regression analysis is used in a price-fixing antitrust case to test a relatively specific alternative to the null hypothesis (e.g., price fixing), a somewhat lower level of confidence (a higher level of significance, such as 10%) might be appropriate. Otherwise, when the alternative to the null hypothesis is less specific, such as the rather vague alternative of “effect” (e.g., the price increase is caused by the increased cost of production, increased demand, a sharp increase in advertising, or price fixing), a high level of confidence (associated with a low significance level, such as 1%) may be appropriate. See, e.g., Vuyanich v. Republic Nat’l Bank, 505 F. Supp. 224, 272 (N.D. Tex. 1980) (noting the “arbitrary nature of the adoption of the 5% level of [statistical] significance” to be required in a legal context); Cook v. Rockwell Int’l Corp., 2006 U.S. Dist. LEXIS 89121 (D. Colo. Dec. 7, 2006).

49. Courts have shown a preference for two-tailed tests. See, e.g., Palmer v. Shultz, 815 F.2d 84, 95–96 (D.C. Cir. 1987) (rejecting the use of one-tailed tests, the court found that because some appellants were claiming overselection for certain jobs, a two-tailed test was more appropriate in Title VII cases); Moore v. Summers, 113 F. Supp. 2d 5, 20 (D.D.C. 2000) (reiterating the preference for a two-tailed test). See also David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section IV.C.2, in this manual; Csicseri v. Bowsher, 862 F. Supp. 547, 565 (D.D.C. 1994) (finding that although a one-tailed test is “not without merit,” a two-tailed test is preferable).

B. Are the Regression Results Robust?

The issue of robustness—whether regression results are sensitive to slight modifications in assumptions (e.g., that the data are measured accurately)—is of vital importance. If the assumptions of the regression model are valid, standard statistical tests can be applied. However, when the assumptions of the model are violated, standard tests can overstate or understate the significance of the results.

The violation of an assumption does not necessarily invalidate a regression analysis, however. In some instances in which the assumptions of multiple regression analysis fail, there are other statistical methods that are appropriate. Consequently, experts should be encouraged to provide additional information that relates to the issue of whether regression assumptions are valid, and if they are not valid, the extent to which the regression results are robust. The following questions highlight some of the more important assumptions of regression analysis.

1. What evidence exists that the explanatory variable causes changes in the dependent variable?

In the multiple regression framework, the expert often assumes that changes in explanatory variables affect the dependent variable, but changes in the dependent variable do not affect the explanatory variables—that is, there is no feedback.50 In making this assumption, the expert draws the conclusion that a correlation between a covariate and the dependent outcome variable results from the effect of the former on the latter and not vice versa. If causality were reversed, so that the outcome variable affected the covariate and not vice versa, spurious correlation would be likely to cause the expert and the trier of fact to reach the wrong conclusion. Finally, it is possible in some cases that the outcome variable and the covariate each affect the other; if the expert does not take this more complex relationship into account, the regression coefficient on the variable of interest could be either too high or too low.51

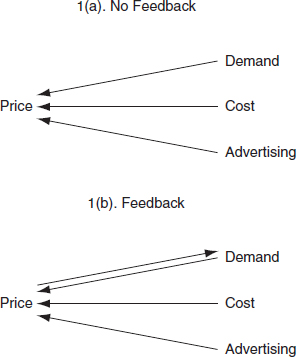

Figure 1 illustrates this point. In Figure 1(a), the dependent variable, price, is explained through a multiple regression framework by three covariate explanatory variables—demand, cost, and advertising—with no feedback. Each of the three covariates is assumed to affect price causally, while price is assumed to have no effect on the three covariates. However, in Figure 1(b), there is feedback, because price affects demand, and demand, cost, and advertising affect price. Cost and advertising, however, are not affected by price. In this case both price and demand are jointly determined; each has a causal effect on the other.

50. The assumption of no feedback is especially important in litigation, because it is possible for the defendant (if responsible, for example, for price fixing or discrimination) to affect the values of the explanatory variables and thus to bias the usual statistical tests that are used in multiple regression.

51. When both effects occur at the same time, this is described as “simultaneity.”

Figure 1. Feedback.
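The effect of ignoring feedback can be illustrated with a small simulation. The sketch below is not drawn from any case; it assumes a hypothetical two-equation system in which demand raises price (true coefficient 0.5) while price simultaneously lowers demand. The function names, the parameter values, and the normally distributed errors are all assumptions chosen for illustration.

```python
import random

def ols_slope(x, y):
    """Least squares slope from a regression of y on x (with an intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def simulate_feedback(n=20000, d=0.5, b=1.0, seed=0):
    """Hypothetical system with feedback (all numbers are assumptions):
         price  = 1  + d * demand + v    (demand raises price)
         demand = 10 - b * price  + u    (price lowers demand)
    """
    rng = random.Random(seed)
    price, demand = [], []
    for _ in range(n):
        u = rng.gauss(0, 1)
        v = rng.gauss(0, 1)
        # Solve the two equations jointly for price, then back out demand.
        p = (1 + d * 10 + d * u + v) / (1 + d * b)
        q = 10 - b * p + u
        price.append(p)
        demand.append(q)
    return price, demand

price, demand = simulate_feedback()
slope = ols_slope(demand, price)
print(f"true effect of demand on price: 0.50; single-equation estimate: {slope:.2f}")
```

Under these assumed parameters, the single-equation estimate should settle near -0.25 rather than 0.5, so even the sign of the estimated effect is wrong. The direction and size of the distortion depend entirely on the assumed system; with other parameters the coefficient could just as easily be too high.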

As a general rule, there are no direct statistical tests for determining the direction of causality; rather, the expert, when asked, should be prepared to defend his or her assumption based on an understanding of the underlying behavioral evidence relating to the businesses or individuals involved.52

Although there is no single approach that is entirely suitable for estimating models when the dependent variable affects one or more explanatory variables, one possibility is for the expert to drop the questionable variable from the regression to determine whether the variable’s exclusion makes a difference. If it does not, the issue becomes moot. Another approach is for the expert to expand the multiple regression model by adding one or more equations that explain the relationship between the explanatory variable in question and the dependent variable.

Suppose, for example, that in a salary-based sex discrimination suit the defendant’s expert considers employer-evaluated test scores to be an appropriate explanatory variable for the dependent variable, salary. If the plaintiff were to provide information that the employer adjusted the test scores in a manner that penalized women, the assumption that salaries were determined by test scores and not that test scores were affected by salaries might be invalid. If it is clearly inappropriate, the test-score variable should be removed from consideration. Alternatively, the information about the employer’s use of the test scores could be translated into a second equation in which a new dependent variable (test score) is related to workers’ salary and sex. A test of the hypothesis that salary and sex affect test scores would provide a suitable test of the absence of feedback.

52. There are statistical time-series tests for particular formulations of causality; see Pindyck & Rubinfeld, supra note 23, § 9.2.

2. To what extent are the explanatory variables correlated with each other?

It is essential in multiple regression analysis that the explanatory variable of interest not be correlated perfectly with one or more of the other explanatory variables. If there were perfect correlation between two variables, the expert could not separate out the effect of the variable of interest on the dependent variable from the effect of the other variable. In essence, there are two explanations for the same pattern in the data. Suppose, for example, that in a sex discrimination suit, a particular form of job experience is determined to be a valid source of high wages. If all men had the requisite job experience and all women did not, it would be impossible to tell whether wage differentials between men and women were the result of sex discrimination or differences in experience.

When two or more explanatory variables are correlated perfectly—that is, when there is perfect collinearity—one cannot estimate the regression parameters. The existing dataset does not allow one to distinguish between alternative competing explanations of the movement in the dependent variable. However, when two or more variables are highly, but not perfectly, correlated—that is, when there is multicollinearity—the regression can be estimated, but some concerns remain. The greater the multicollinearity between two variables, the less precise are the estimates of individual regression parameters, and an expert is less able to distinguish among competing explanations for the movement in the outcome variable (even though there is no problem in estimating the joint influence of the two variables and all other regression parameters).53
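The loss of precision can be seen in a repeated-sampling experiment. The following is a minimal sketch under assumed conditions: two hypothetical explanatory variables, each with a true coefficient of 1, normally distributed errors, and samples of 100 observations. It compares how much the estimate of the first coefficient, and the estimate of the sum of the two coefficients (their joint influence), varies from sample to sample when the variables are uncorrelated and when their correlation is 0.95.

```python
import random
import statistics

def two_regressor_ols(x1, x2, y):
    """Return (b1, b2) from least squares of y on x1 and x2 with an intercept."""
    n = len(y)
    m1, m2, my = sum(x1) / n, sum(x2) / n, sum(y) / n
    s11 = sum((a - m1) ** 2 for a in x1)
    s22 = sum((b - m2) ** 2 for b in x2)
    s12 = sum((a - m1) * (b - m2) for a, b in zip(x1, x2))
    s1y = sum((a - m1) * (c - my) for a, c in zip(x1, y))
    s2y = sum((b - m2) * (c - my) for b, c in zip(x2, y))
    det = s11 * s22 - s12 ** 2   # equals zero under perfect collinearity
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return b1, b2

def spread_of_estimates(rho, reps=300, n=100, seed=1):
    """Sample-to-sample standard deviation of b1 and of b1 + b2."""
    rng = random.Random(seed)
    b1_values, sum_values = [], []
    for _ in range(reps):
        x1 = [rng.gauss(0, 1) for _ in range(n)]
        # Construct x2 so that its correlation with x1 is rho (an assumption).
        x2 = [rho * a + (1 - rho ** 2) ** 0.5 * rng.gauss(0, 1) for a in x1]
        y = [1.0 * a + 1.0 * b + rng.gauss(0, 1) for a, b in zip(x1, x2)]
        b1, b2 = two_regressor_ols(x1, x2, y)
        b1_values.append(b1)
        sum_values.append(b1 + b2)
    return statistics.stdev(b1_values), statistics.stdev(sum_values)

sd_b1_low, sd_sum_low = spread_of_estimates(rho=0.0)
sd_b1_high, sd_sum_high = spread_of_estimates(rho=0.95)
print(f"uncorrelated:     sd(b1) = {sd_b1_low:.3f}, sd(b1 + b2) = {sd_sum_low:.3f}")
print(f"correlation 0.95: sd(b1) = {sd_b1_high:.3f}, sd(b1 + b2) = {sd_sum_high:.3f}")
```

In this setup the individual coefficient should be roughly three times less precise when the correlation is 0.95, while the sum of the two coefficients remains precisely estimated. If the correlation were exactly 1, the quantity `det` would be zero and the individual coefficients could not be computed at all.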

Fortunately, the reported regression statistics take into account any multicollinearity that might be present.54 It is important to note as a corollary, however, that a failure to find a strong relationship between a variable of interest and a dependent variable need not imply that there is no relationship.55 A relatively small sample, or even a large sample with substantial multicollinearity, may not provide sufficient information for the expert to determine whether there is a relationship.

53. See Griggs v. Duke Power Co., 401 U.S. 424 (1971) (The court argued that an education requirement was one rationalization of the data, but racial discrimination was another. If you had put both race and education in the regression, it would have been asking too much of the data to tell which variable was doing the real work, because education and race were so highly correlated in the market at that time.).

54. See Denny v. Westfield State College, 669 F. Supp. 1146, 1149 (D. Mass. 1987) (The court accepted the testimony of one expert that “the presence of multicollinearity would merely tend to overestimate the amount of error associated with the estimate…. In other words, p-values will be artificially higher than they would be if there were no multicollinearity present.”) (emphasis added); In re High Fructose Corn Syrup Antitrust Litig., 295 F.3d 651, 659 (7th Cir. Ill. 2002) (refusing to second-guess district court’s admission of regression analyses that addressed multicollinearity in different ways).

3. To what extent are individual errors in the regression model independent?

If the expert calculated the parameters of a multiple regression model using as data the entire population, the estimates might still measure the model’s population parameters with error. Errors can arise for a number of reasons, including (1) the failure of the model to include the appropriate explanatory variables, (2) the failure of the model to reflect any nonlinearities that might be present, and (3) the inclusion of inappropriate variables in the model. (Of course, further sources of error will arise if a sample, or subset, of the population is used to estimate the regression parameters.)

It is useful to view the cumulative effect of all of these sources of modeling error as being represented by an additional variable, the error term, in the multiple regression model. An important assumption in multiple regression analysis is that the error term and each of the explanatory variables are independent of each other. (If the error term and an explanatory variable are independent, they are not correlated with each other.) To the extent this is true, the expert can estimate the parameters of the model without bias; the magnitude of the error term will affect the precision with which a model parameter is estimated, but will not cause that estimate to be consistently too high or too low.

The assumption of independence may be inappropriate in a number of circumstances. In some instances, failure of the assumption makes multiple regression analysis an unsuitable statistical technique; in other instances, modifications or adjustments within the regression framework can be made to accommodate the failure.

The independence assumption may fail, for example, in a study of individual behavior over time, in which an unusually high error value in one time period is likely to lead to an unusually high value in the next time period. For example, if an economic forecaster underpredicted this year’s Gross Domestic Product, he or she is likely to underpredict next year’s as well; the factor that caused the prediction error (e.g., an incorrect assumption about Federal Reserve policy) is likely to be a source of error in the future.

55. If an explanatory variable of concern and another explanatory variable are highly correlated, dropping the second variable from the regression can be instructive. If the coefficient on the explanatory variable of concern becomes significant, a relationship between the dependent variable and the explanatory variable of concern is suggested.

Alternatively, the assumption of independence may fail in a study of a group of firms at a particular point in time, in which error terms for large firms are systematically higher than error terms for small firms. For example, an analysis of the profitability of firms may not accurately account for the importance of advertising as a source of increased sales and profits. To the extent that large firms advertise more than small firms, the regression errors would be large for the large firms and small for the small firms. A third possibility is that the dependent variable varies at the individual level, but the explanatory variable of interest varies only at the level of a group. For example, an expert might be viewing the price of a product in an antitrust case as a function of a variable or variables that measure the marketing channel through which the product is sold (e.g., wholesale or retail). In this case, errors within each of the marketing groups are likely not to be independent. Failure to account for this could cause the expert to overstate the statistical significance of the regression parameters.
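The third possibility, in which the explanatory variable varies only at the group level, can be illustrated by simulation. The sketch below is hypothetical: it assumes 20 groups of 50 observations, half of the groups in each of two marketing channels, an error component shared by everyone in a group, and a true channel effect of zero. It compares the conventional standard error, which treats all 1,000 observations as independent, with the actual sample-to-sample variation of the estimated coefficient.

```python
import random
import statistics

def slope_and_naive_se(x, y):
    """Least squares slope and its conventional standard error,
    computed as if every observation were independent."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sxx
    intercept = my - slope * mx
    rss = sum((b - intercept - slope * a) ** 2 for a, b in zip(x, y))
    se = (rss / (n - 2) / sxx) ** 0.5
    return slope, se

def simulate_grouped_errors(groups=20, per_group=50, reps=200, seed=2):
    rng = random.Random(seed)
    slopes, naive_ses = [], []
    for rep in range(reps):
        x, y = [], []
        for g in range(groups):
            channel = g % 2            # assumed coding: 0 = wholesale, 1 = retail
            shared = rng.gauss(0, 1)   # error common to every sale in the group
            for i in range(per_group):
                x.append(channel)
                y.append(shared + rng.gauss(0, 1))   # true channel effect is zero
        slope, se = slope_and_naive_se(x, y)
        slopes.append(slope)
        naive_ses.append(se)
    # Actual variability of the estimate versus the average reported standard error.
    return statistics.stdev(slopes), statistics.mean(naive_ses)

actual_sd, naive_se = simulate_grouped_errors()
print(f"actual sd of estimate: {actual_sd:.3f}; average conventional se: {naive_se:.3f}")
```

With these assumed settings the conventional standard error should understate the true variability several times over, which is how a nonexistent channel effect can appear statistically significant. How large the understatement is depends on how much of the error is shared within groups.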

In some instances, there are statistical tests that are appropriate for evaluating the independence assumption.56 If the assumption has failed, the expert should ask first whether the source of the lack of independence is the omission of an important explanatory variable from the regression. If so, that variable should be included when possible, or the potential effect of its omission should be estimated when inclusion is not possible. If there is no important missing explanatory variable, the expert should apply one or more procedures that modify the standard multiple regression technique to allow for more accurate estimates of the regression parameters.57
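One widely used test for correlation of errors over time is the Durbin-Watson test. A minimal sketch of the statistic follows. For simplicity it is applied directly to simulated error series rather than to residuals from a fitted regression, and the series length, the correlation of 0.8, and the function names are assumptions made for illustration.

```python
import random

def durbin_watson(residuals):
    """Sum of squared changes in successive residuals divided by the sum of
    squared residuals. Values near 2 suggest no first-order serial correlation;
    values well below 2 suggest positive serial correlation."""
    num = sum((residuals[t] - residuals[t - 1]) ** 2
              for t in range(1, len(residuals)))
    den = sum(e ** 2 for e in residuals)
    return num / den

def ar1_errors(n, rho, seed):
    """Simulated errors in which each value carries over a fraction rho
    of the previous period's value."""
    rng = random.Random(seed)
    errors = [rng.gauss(0, 1)]
    for _ in range(n - 1):
        errors.append(rho * errors[-1] + rng.gauss(0, 1))
    return errors

dw_independent = durbin_watson(ar1_errors(2000, rho=0.0, seed=3))
dw_correlated = durbin_watson(ar1_errors(2000, rho=0.8, seed=3))
print(f"independent errors: DW = {dw_independent:.2f}")
print(f"correlated errors:  DW = {dw_correlated:.2f}")
```

The statistic is approximately 2(1 - r), where r is the correlation between successive errors, so the independent series should give a value near 2 and the correlated series a value near 0.4. In practice the statistic is compared against tabulated critical values, which this sketch does not reproduce.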

4. To what extent are the regression results sensitive to individual data points?

Estimated regression coefficients can be highly sensitive to particular data points. Suppose, for example, that one data point deviates greatly from its expected value, as indicated by the regression equation, while the remaining data points show little deviation. It would not be unusual in this situation for the coefficients in a multiple regression to change substantially if the data point in question were removed from the sample.

56. In a time-series analysis, the correlation of error values over time, the “serial correlation,” can be tested (in most instances) using a number of tests, including the Durbin-Watson test. The possibility that some error terms are consistently high in magnitude and others are systematically low (heteroscedasticity) can also be tested in a number of ways. See, e.g., Pindyck & Rubinfeld, supra note 23, at 146–59. When serial correlation and/or heteroscedasticity are present, the standard errors associated with the estimated coefficients must be modified. For a discussion of the use of such “robust” standard errors, see Jeffrey M. Wooldridge, Introductory Econometrics: A Modern Approach, ch. 8 (4th ed. 2009).

57. When serial correlation is present, a number of closely related statistical methods are appropriate, including generalized differencing (a type of generalized least squares) and maximum likelihood estimation. When heteroscedasticity is the problem, weighted least squares and maximum likelihood estimation are appropriate. See, e.g., id. All these techniques are readily available in a number of statistical computer packages. They also allow one to perform the appropriate statistical tests of the significance of the regression coefficients.

Evaluating the robustness of multiple regression results is a complex endeavor. Consequently, there is no agreed set of tests for robustness that analysts should apply. In general, it is important to explore the reasons for unusual data points. If the source is an error in recording data, the appropriate corrections can be made. If all the unusual data points have certain characteristics in common (e.g., they all are associated with a supervisor who consistently gives high ratings in an equal pay case), the regression model should be modified appropriately.

One generally useful diagnostic technique is to determine to what extent the estimated parameter changes as each data point in the regression analysis is dropped from the sample. An influential data point—a point that causes the estimated parameter to change substantially—should be studied further to determine whether mistakes were made in the use of the data or whether important explanatory variables were omitted.58
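The drop-one-point diagnostic can be sketched in a few lines for a regression with a single explanatory variable. The ten data points below are invented for illustration: the first nine lie close to a line with slope 2, and the last is an unusual point that departs from that pattern.

```python
def ols_slope(x, y):
    """Least squares slope from a regression of y on x (with an intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def leave_one_out_changes(x, y):
    """Change in the estimated slope when each data point is dropped in turn."""
    full = ols_slope(x, y)
    changes = []
    for i in range(len(x)):
        x_rest = x[:i] + x[i + 1:]
        y_rest = y[:i] + y[i + 1:]
        changes.append(ols_slope(x_rest, y_rest) - full)
    return full, changes

# Invented data: the last point is far from the pattern set by the others.
x = [1, 2, 3, 4, 5, 6, 7, 8, 9, 20]
y = [2.1, 3.9, 6.2, 7.8, 10.1, 12.0, 13.9, 16.2, 18.0, 10.0]

full_slope, changes = leave_one_out_changes(x, y)
most_influential = max(range(len(x)), key=lambda i: abs(changes[i]))
print(f"slope using all points: {full_slope:.2f}")
for i, change in enumerate(changes):
    print(f"drop point {i}: slope changes by {change:+.2f}")
print(f"most influential point: {most_influential}")
```

In this example the slope estimated from all ten points is well below 1, and dropping the last point moves it back to about 2, while dropping any other point changes it far less. The diagnostic only flags the point; whether it reflects a recording error or an omitted explanatory variable still has to be investigated.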

5. To what extent are the data subject to measurement error?

In multiple regression analysis it is assumed that variables are measured accurately.59 If there are measurement errors in the dependent variable, estimates of regression parameters will be less accurate, although they will not necessarily be biased. However, if one or more independent variables are measured with error, the corresponding parameter estimates are likely to be biased, typically toward zero (and other coefficient estimates are likely to be biased as well).

To understand why, suppose that the dependent variable, salary, is measured without error, and the explanatory variable, experience, is subject to measurement error. (Seniority or years of experience should be accurate, but the type of experience is subject to error, because applicants may overstate previous job responsibilities.) As the measurement error increases, the estimated parameter associated with the experience variable will tend toward zero; that is, the regression will eventually show no relationship between salary and experience.
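The tendency toward zero can be demonstrated with a simulation. The sketch below assumes a hypothetical salary equation in which each unit of true experience raises salary by 2, and then regresses salary on reported experience, which equals true experience plus random error. The specific numbers, the normal distributions, and the function names are assumptions made for illustration.

```python
import random

def ols_slope(x, y):
    """Least squares slope from a regression of y on x (with an intercept)."""
    n = len(x)
    mx = sum(x) / n
    my = sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

def estimated_experience_effect(noise_sd, n=20000, seed=4):
    """Slope from regressing salary on experience measured with error."""
    rng = random.Random(seed)
    true_experience = [rng.gauss(10, 1) for _ in range(n)]
    # Salary depends on TRUE experience; the true coefficient is 2 (assumed).
    salary = [30 + 2.0 * e + rng.gauss(0, 1) for e in true_experience]
    # The analyst observes only experience as reported, with random error.
    reported = [e + rng.gauss(0, noise_sd) for e in true_experience]
    return ols_slope(reported, salary)

results = {sd: estimated_experience_effect(sd) for sd in (0.0, 0.5, 1.0, 2.0)}
for sd, slope in results.items():
    print(f"measurement error sd = {sd}: estimated coefficient = {slope:.2f}")
```

With purely random error of this kind, the estimate is expected to shrink by the ratio of the variance of true experience to the variance of reported experience, so the coefficient should fall from about 2 with no error to about 1 when the error is as variable as experience itself. Measurement error that is systematic rather than random, such as consistent overstatement by one group, can bias the estimate in other ways that this sketch does not cover.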

It is important for any source of measurement error to be carefully evaluated. In some circumstances, little can be done to correct the measurement-error prob-

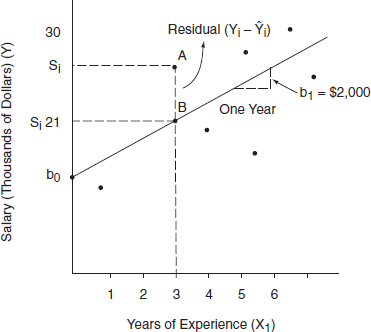

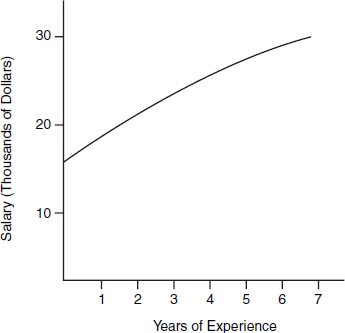

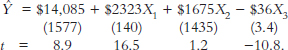

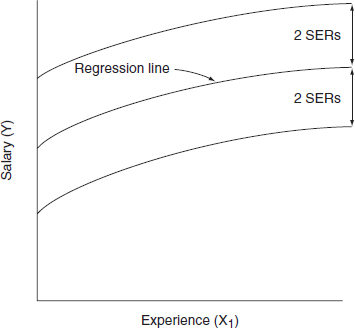

58. A more complete and formal treatment of the robustness issue appears in David A. Belsley et al., Regression Diagnostics: Identifying Influential Data and Sources of Collinearity 229–44 (1980). For a useful discussion of the detection of outliers and the evaluation of influential data points, see R.D. Cook & S. Weisberg, Residuals and Influence in Regression (Monographs on Statistics and Applied Probability No. 18, 1982). For a broad discussion of robust regression methods, see Peter J. Rousseeuw & Annick M. Leroy, Robust Regression and Outlier Detection (2004).