Reference Guide on Epidemiology

Michael D. Green, J.D., is Bess & Walter Williams Chair in Law, Wake Forest University School of Law, Winston-Salem, North Carolina.

D. Michal Freedman, J.D., Ph.D., M.P.H., is Epidemiologist, Division of Cancer Epidemiology and Genetics, National Cancer Institute, Bethesda, Maryland.

Leon Gordis, M.D., M.P.H., Dr.P.H., is Professor Emeritus of Epidemiology, Johns Hopkins Bloomberg School of Public Health, and Professor Emeritus of Pediatrics, Johns Hopkins School of Medicine, Baltimore, Maryland.

CONTENTS

II. What Different Kinds of Epidemiologic Studies Exist?

A. Experimental and Observational Studies of Suspected Toxic Agents

B. Types of Observational Study Design

C. Epidemiologic and Toxicologic Studies

III. How Should Results of an Epidemiologic Study Be Interpreted?

D. Adjustment for Study Groups That Are Not Comparable

IV. What Sources of Error Might Have Produced a False Result?

A. What Statistical Methods Exist to Evaluate the Possibility of Sampling Error?

B. What Biases May Have Contributed to an Erroneous Association?

C. Could a Confounding Factor Be Responsible for the Study Result?

1. What techniques can be used to prevent or limit confounding?

2. What techniques can be used to identify confounding factors?

3. What techniques can be used to control for confounding factors?

V. General Causation: Is an Exposure a Cause of the Disease?

A. Is There a Temporal Relationship?

B. How Strong Is the Association Between the Exposure and Disease?

C. Is There a Dose–Response Relationship?

D. Have the Results Been Replicated?

E. Is the Association Biologically Plausible (Consistent with Existing Knowledge)?

F. Have Alternative Explanations Been Considered?

G. What Is the Effect of Ceasing Exposure?

H. Does the Association Exhibit Specificity?

I. Are the Findings Consistent with Other Relevant Knowledge?

VI. What Methods Exist for Combining the Results of Multiple Studies?

VII. What Role Does Epidemiology Play in Proving Specific Causation?

Epidemiology is the field of public health and medicine that studies the incidence, distribution, and etiology of disease in human populations. The purpose of epidemiology is to better understand disease causation and to prevent disease in groups of individuals. Epidemiology assumes that disease is not distributed randomly in a group of individuals and that identifiable subgroups, including those exposed to certain agents, are at increased risk of contracting particular diseases.1

Judges and juries are regularly presented with epidemiologic evidence as the basis of an expert’s opinion on causation.2 In the courtroom, epidemiologic research findings are offered to establish or dispute whether exposure to an agent3

1. Although epidemiologists may conduct studies of beneficial agents that prevent or cure disease or other medical conditions, this reference guide refers exclusively to outcomes as diseases, because they are the relevant outcomes in most judicial proceedings in which epidemiology is involved.

2. Epidemiologic studies have been well received by courts deciding cases involving toxic substances. See, e.g., Siharath v. Sandoz Pharms. Corp., 131 F. Supp. 2d 1347, 1356 (N.D. Ga. 2001) (“The existence of relevant epidemiologic studies can be a significant factor in proving general causation in toxic tort cases. Indeed, epidemiologic studies provide ‘the primary generally accepted methodology for demonstrating a causal relation between a chemical compound and a set of symptoms or disease.’” (quoting Conde v. Velsicol Chem. Corp., 804 F. Supp. 972, 1025–26 (S.D. Ohio 1992))), aff’d, 295 F.3d 1194 (11th Cir. 2002); Berry v. CSX Transp., Inc., 709 So. 2d 552, 569 (Fla. Dist. Ct. App. 1998). Well-conducted studies are uniformly admitted. 3 Modern Scientific Evidence: The Law and Science of Expert Testimony § 23.1, at 187 (David L. Faigman et al. eds., 2007–08) [hereinafter Modern Scientific Evidence]. Since Daubert v. Merrell Dow Pharmaceuticals, 509 U.S. 579 (1993), the predominant use of epidemiologic studies is in connection with motions to exclude the testimony of expert witnesses. Cases deciding such motions routinely address epidemiology and its implications for the admissibility of expert testimony on causation. Often it is not the investigator who conducted the study who is serving as an expert witness in a case in which the study bears on causation. See, e.g., Kennedy v. Collagen Corp., 161 F.3d 1226 (9th Cir. 1998) (physician is permitted to testify about causation); DeLuca v. Merrell Dow Pharms., Inc., 911 F.2d 941, 953 (3d Cir. 1990) (a pediatric pharmacologist expert’s credentials are sufficient pursuant to Fed. R. Evid. 702 to interpret epidemiologic studies and render an opinion based thereon); Medalen v. Tiger Drylac U.S.A., Inc., 269 F. Supp. 2d 1118, 1129 (D. Minn. 2003) (holding toxicologist could testify to general causation but not specific causation); Burton v. R.J. Reynolds Tobacco Co., 181 F. Supp. 2d 1256, 1267 (D. Kan. 2002) (a vascular surgeon was permitted to testify to general causation); Landrigan v. Celotex Corp., 605 A.2d 1079, 1088 (N.J. 1992) (an epidemiologist was permitted to testify to both general causation and specific causation); Trach v. Fellin, 817 A.2d 1102, 1117–18 (Pa. Super. Ct. 2003) (an expert who was a toxicologist and pathologist was permitted to testify to general and specific causation).

3. We use the term “agent” to refer to any substance external to the human body that potentially causes disease or other health effects. Thus, drugs, devices, chemicals, radiation, and minerals (e.g., asbestos) are all agents whose toxicity an epidemiologist might explore. A single agent or a number of independent agents may cause disease, or the combined presence of two or more agents may be necessary for the development of the disease. Epidemiologists also conduct studies of individual characteristics, such as blood pressure and diet, which might pose risks, but those studies are rarely of interest in judicial proceedings. Epidemiologists also may conduct studies of drugs and other pharmaceutical products to assess their efficacy and safety.

caused a harmful effect or disease.4 Epidemiologic evidence identifies agents that are associated with an increased risk of disease in groups of individuals, quantifies the amount of excess disease that is associated with an agent, and provides a profile of the type of individual who is likely to contract a disease after being exposed to an agent. Epidemiology focuses on the question of general causation (i.e., is the agent capable of causing disease?) rather than that of specific causation (i.e., did it cause disease in a particular individual?).5 For example, in the 1950s, Doll and Hill and others published articles about the increased risk of lung cancer in cigarette smokers. Doll and Hill’s studies showed that smokers who smoked 10 to 20 cigarettes a day had a lung cancer mortality rate that was about 10 times higher than that for nonsmokers.6 These studies identified an association between smoking cigarettes and death from lung cancer that contributed to the determination that smoking causes lung cancer.

However, it should be emphasized that an association is not equivalent to causation.7 An association identified in an epidemiologic study may or may not be

4. E.g., Bonner v. ISP Techs., Inc., 259 F.3d 924 (8th Cir. 2001) (a worker exposed to organic solvents allegedly suffered organic brain dysfunction); Burton v. R.J. Reynolds Tobacco Co., 181 F. Supp. 2d 1256 (D. Kan. 2002) (cigarette smoking was alleged to have caused peripheral vascular disease); In re Bextra & Celebrex Mktg. Sales Practices & Prod. Liab. Litig., 524 F. Supp. 2d 1166 (N.D. Cal. 2007) (multidistrict litigation over drugs for arthritic pain that caused heart disease); Ruff v. Ensign-Bickford Indus., Inc., 168 F. Supp. 2d 1271 (D. Utah 2001) (chemicals that escaped from an explosives manufacturing site allegedly caused non-Hodgkin’s lymphoma in nearby residents); Castillo v. E.I. du Pont De Nemours & Co., 854 So. 2d 1264 (Fla. 2003) (a child born with a birth defect allegedly resulting from mother’s exposure to a fungicide).

5. This terminology and the distinction between general causation and specific causation are widely recognized in court opinions. See, e.g., Norris v. Baxter Healthcare Corp., 397 F.3d 878 (10th Cir. 2005); In re Hanford Nuclear Reservation Litig., 292 F.3d 1124, 1129 (9th Cir. 2002) (“‘Generic causation’ has typically been understood to mean the capacity of a toxic agent…to cause the illnesses complained of by plaintiffs. If such capacity is established, ‘individual causation’ answers whether that toxic agent actually caused a particular plaintiff’s illness.”); In re Rezulin Prods. Liab. Litig., 369 F. Supp. 2d 398, 402 (S.D.N.Y. 2005); Soldo v. Sandoz Pharms. Corp., 244 F. Supp. 2d 434, 524–25 (W.D. Pa. 2003); Burton v. R.J. Reynolds Tobacco Co., 181 F. Supp. 2d 1256, 1266–67 (D. Kan. 2002). For a discussion of specific causation, see infra Section VII.

6. Richard Doll & A. Bradford Hill, Lung Cancer and Other Causes of Death in Relation to Smoking: A Second Report on the Mortality of British Doctors, 2 Brit. Med. J. 1071 (1956).

7. See Soldo v. Sandoz Pharms. Corp., 244 F. Supp. 2d 434, 461 (W.D. Pa. 2003) (Hill criteria [see infra Section V] developed to assess whether an association is causal); Miller v. Pfizer, Inc., 196 F. Supp. 2d 1062, 1079–80 (D. Kan. 2002); Magistrini v. One Hour Martinizing Dry Cleaning, 180 F. Supp. 2d 584, 591 (D.N.J. 2002) (“[A]n association is not equivalent to causation.” (quoting the second edition of this reference guide)); Zandi v. Wyeth a/k/a Wyeth, Inc., No. 27-CV-06-6744, 2007 WL 3224242, at *11 (D. Minn. Oct. 15, 2007).

Association is more fully discussed infra Section III. The term is used to describe the relationship between two events (e.g., exposure to a chemical agent and development of disease) that occur more frequently together than one would expect by chance. Association does not necessarily imply a causal effect. Causation is used to describe the association between two events when one event is a necessary link in a chain of events that results in the effect. Of course, alternative causal chains may exist that do not include the agent but that result in the same effect. For general treatment of causation in tort law

causal.8 Assessing whether an association is causal requires an understanding of the strengths and weaknesses of the study’s design and implementation, as well as a judgment about how the study findings fit with other scientific knowledge. It is important to emphasize that all studies have “flaws” in the sense of limitations that add uncertainty about the proper interpretation of the results.9 Some flaws are inevitable given the limits of technology, resources, the ability and willingness of persons to participate in a study, and ethical constraints. In evaluating epidemiologic evidence, the key questions, then, are the extent to which a study’s limitations compromise its findings and permit inferences about causation.

A final caveat is that employing the results of group-based studies of risk to make a causal determination for an individual plaintiff is beyond the limits of epidemiology. Nevertheless, a substantial body of legal precedent has developed that addresses the use of epidemiologic evidence to prove causation for an individual litigant through probabilistic means, and the law developed in these cases is discussed later in this reference guide.10

The following sections of this reference guide address a number of critical issues that arise in considering the admissibility of, and weight to be accorded to, epidemiologic research findings. Over the past several decades, courts frequently have confronted the use of epidemiologic studies as evidence and have recognized their utility in proving causation. As the Third Circuit observed in DeLuca v. Merrell Dow Pharmaceuticals, Inc.: “The reliability of expert testimony founded on reasoning from epidemiologic data is generally a fit subject for judicial notice; epidemiology is a well-established branch of science and medicine, and epidemiologic evidence has been accepted in numerous cases.”11 Indeed,

and that for factual causation to exist an agent must be a necessary link in a causal chain sufficient for the outcome, see Restatement (Third) of Torts: Liability for Physical Harm § 26 (2010). Epidemiologic methods cannot deductively prove causation; indeed, all empirically based science cannot affirmatively prove a causal relation. See, e.g., Stephan F. Lanes, The Logic of Causal Inference in Medicine, in Causal Inference 59 (Kenneth J. Rothman ed., 1988). However, epidemiologic evidence can justify an inference that an agent causes a disease. See infra Section V.

8. See infra Section IV.

9. See In re Phenylpropanolamine (PPA) Prods. Liab. Litig., 289 F. Supp. 2d 1230, 1240 (W.D. Wash. 2003) (quoting this reference guide and criticizing defendant’s “ex post facto dissection” of a study); In re Orthopedic Bone Screw Prods. Liab. Litig., MDL No. 1014, 1997 U.S. Dist. LEXIS 6441, at *26–*27 (E.D. Pa. May 5, 1997) (holding that despite potential for several biases in a study that “may…render its conclusions inaccurate,” the study was sufficiently reliable to be admissible); Joseph L. Gastwirth, Reference Guide on Survey Research, 36 Jurimetrics J. 181, 185 (1996) (review essay) (“One can always point to a potential flaw in a statistical analysis.”).

10. See infra Section VII.

11. 911 F.2d 941, 954 (3d Cir. 1990); see also Norris v. Baxter Healthcare Corp., 397 F.3d 878, 882 (10th Cir. 2005) (an extensive body of exonerative epidemiologic evidence must be confronted and the plaintiff must provide scientifically reliable contrary evidence); In re Meridia Prods. Liab. Litig., 328 F. Supp. 2d 791, 800 (N.D. Ohio 2004) (“Epidemiologic studies are the primary generally accepted methodology for demonstrating a causal relation between the chemical compound and a set of symptoms or a disease….” (quoting Conde v. Velsicol Chem. Corp., 804 F. Supp. 972,

much more difficult problems arise for courts when there is a paucity of epidemiologic evidence.12

Three basic issues arise when epidemiology is used in legal disputes, and the methodological soundness of a study and its implications for resolution of the question of causation must be assessed:

- Do the results of an epidemiologic study or studies reveal an association between an agent and disease?

- Could this association have resulted from limitations of the study (bias, confounding, or sampling error), and, if so, from which?

- Based on the analysis of limitations in the second question above, and on other evidence, how plausible is a causal interpretation of the association?

Section II explains the different kinds of epidemiologic studies, and Section III addresses the meaning of their outcomes. Section IV examines concerns about the methodological validity of a study, including the problem of sampling error.13 Section V discusses general causation, considering whether an agent is capable of causing disease. Section VI deals with methods for combining the results of multiple epidemiologic studies and the difficulties entailed in extracting a single global measure of risk from multiple studies. Additional legal questions that arise in most toxic substances cases are whether population-based epidemiologic evidence can be used to infer specific causation, and, if so, how. Section VII addresses specific causation—the matter of whether a specific agent caused the disease in a given plaintiff.

1025–26 (S.D. Ohio 1992))); Brasher v. Sandoz Pharms. Corp., 160 F. Supp. 2d 1291, 1296 (N.D. Ala. 2001) (“Unquestionably, epidemiologic studies provide the best proof of the general association of a particular substance with particular effects, but it is not the only scientific basis on which those effects can be predicted.”).

12. See infra note 181.

13. For a more in-depth discussion of the statistical basis of epidemiology, see David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section II.A, in this manual, and two case studies: Joseph Sanders, The Bendectin Litigation: A Case Study in the Life Cycle of Mass Torts, 43 Hastings L.J. 301 (1992); Devra L. Davis et al., Assessing the Power and Quality of Epidemiologic Studies of Asbestos-Exposed Populations, 1 Toxicological & Indus. Health 93 (1985). See also References on Epidemiology and References on Law and Epidemiology at the end of this reference guide.

II. What Different Kinds of Epidemiologic Studies Exist?

A. Experimental and Observational Studies of Suspected Toxic Agents

To determine whether an agent is related to the risk of developing a certain disease or an adverse health outcome, we might ideally want to conduct an experimental study in which the subjects would be randomly assigned to one of two groups: one group exposed to the agent of interest and the other not exposed. After a period of time, the study participants in both groups would be evaluated for the development of the disease. This type of study, called a randomized trial, clinical trial, or true experiment, is considered the gold standard for determining the relationship of an agent to a health outcome or adverse side effect. Such a study design is often used to evaluate new drugs or medical treatments and is the best way to ensure that any observed difference in outcome between the two groups is likely to be the result of exposure to the drug or medical treatment.

Randomization minimizes the likelihood that there are differences in relevant characteristics between those exposed to the agent and those not exposed. Researchers conducting clinical trials attempt to use study designs that are placebo controlled, which means that the group not receiving the active agent or treatment is given an inactive ingredient that appears similar to the active agent under study. They also use double blinding where possible, which means that neither the participants nor those conducting the study know which group is receiving the agent or treatment and which group is given the placebo. However, ethical and practical constraints limit the use of such experimental methodologies to assess the value of agents that are thought to be beneficial to human beings.14
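The balancing effect of randomization can be illustrated with a small simulation. The sketch below is only an illustration under stated assumptions: the participants, their ages, the group sizes, and the function name `randomize` are all hypothetical and do not come from any study discussed in this guide.

```python
import random
import statistics


def randomize(participants, seed):
    """Randomly split participants into two equal-sized groups.

    Hypothetical helper: shuffles a copy of the list with a seeded
    generator and cuts it in half.
    """
    rng = random.Random(seed)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Invented ages standing in for a characteristic that could affect outcome.
age_rng = random.Random(0)
ages = [age_rng.randint(20, 80) for _ in range(2000)]

treated, placebo = randomize(ages, seed=1)

# With a large enough sample, the two group means should be close,
# although chance differences remain possible in any single trial.
print(statistics.mean(treated), statistics.mean(placebo))
```

Because assignment depends only on the random shuffle, characteristics such as age tend to be distributed similarly in both groups, whether or not the investigator measured them.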

When an agent’s effects are suspected to be harmful, researchers cannot knowingly expose people to the agent.15 Instead epidemiologic studies typically

14. Although experimental human studies cannot intentionally expose subjects to toxins, they can provide evidence that a new drug or other beneficial intervention also has adverse effects. See In re Bextra & Celebrex Mktg. Sales Practices & Prod. Liab. Litig., 524 F. Supp. 2d 1166, 1181 (N.D. Cal. 2007) (the court relied on a clinical study of Celebrex that revealed increased cardiovascular risk to conclude that the plaintiff’s experts’ testimony on causation was admissible); McDarby v. Merck & Co., 949 A.2d 223 (N.J. Super. Ct. App. Div. 2008) (explaining how clinical trials of Vioxx revealed an association with heart disease).

15. Experimental studies in which human beings are exposed to agents known or thought to be toxic are ethically proscribed. See Glastetter v. Novartis Pharms. Corp., 252 F.3d 986, 992 (8th Cir. 2001); Brasher v. Sandoz Pharms. Corp., 160 F. Supp. 2d 1291, 1297 (N.D. Ala. 2001). Experimental studies can be used where the agent under investigation is believed to be beneficial, as is the case in the development and testing of new pharmaceutical drugs. See, e.g., McDarby v. Merck & Co., 949 A.2d 223, 270 (N.J. Super. Ct. App. Div. 2008) (an expert witness relied on a clinical trial of a new drug to find the adjusted risk for the plaintiff); see also Gordon H. Guyatt, Using Randomized Trials in

“observe”16 a group of individuals who have been exposed to an agent of interest, such as cigarette smoke or an industrial chemical, and compare them with another group of individuals who have not been exposed. Thus, the investigator identifies a group of subjects who have been exposed17 and compares their rate of disease or death with that of an unexposed group. In contrast to clinical studies in which potential risk factors can be controlled, epidemiologic investigations generally focus on individuals living in the community, for whom characteristics other than the one of interest, such as diet, exercise, exposure to other environmental agents, and genetic background, may distort a study’s results. Because these characteristics cannot be controlled directly by the investigator, the investigator addresses their possible role in the relationship being studied by considering them in the design of the study and in the analysis and interpretation of the study results (see infra Section IV).18 We emphasize that the Achilles’ heel of observational studies is the possibility of differences in the two populations being studied with regard to risk factors other than exposure to the agent.19 By contrast, experimental studies, in which subjects are randomized, generally avoid this problem.

B. Types of Observational Study Design

Several different types of observational epidemiologic studies can be conducted.20 Study designs may be chosen because of suitability for investigating the question of interest, timing constraints, resource limitations, or other considerations.

Most observational studies collect data about both exposure and health outcome in every individual in the study. The two main types of observational studies are cohort studies and case-control studies. A third type of observational study is a cross-sectional study, although cross-sectional studies are rarely useful in identifying toxic agents.21 A final type of observational study, one in which data about

Pharmacoepidemiology, in Drug Epidemiology and Post-Marketing Surveillance 59 (Brian L. Strom & Giampaolo Velo eds., 1992). Experimental studies also may be conducted that entail the discontinuation of exposure to a harmful agent, such as studies in which smokers are randomly assigned to one of a variety of smoking cessation programs or to no cessation program.

16. Classifying these studies as observational in contrast to randomized trials can be misleading to those who are unfamiliar with the area, because subjects in a randomized trial are observed as well. Nevertheless, the use of the term “observational studies” to distinguish them from experimental studies is widely employed.

17. The subjects may have voluntarily exposed themselves to the agent of interest, as is the case, for example, for those who smoke cigarettes, or subjects may have been exposed involuntarily or even without knowledge to an agent, such as in the case of employees who are exposed to chemical fumes at work.

18. See David A. Freedman, Oasis or Mirage? 21 Chance 59, 59–61 (Mar. 2008).

19. Both experimental and observational studies are subject to random error. See infra Section IV.A.

20. Other epidemiologic studies collect data about the group as a whole, rather than about each individual in the group. These group studies are discussed infra Section II.B.4.

21. See infra Section II.B.3.

individuals are not gathered, but rather population data about exposure and disease are used, is an ecological study.22

The difference between cohort studies and case-control studies is that cohort studies measure and compare the incidence of disease in the exposed and unexposed (“control”) groups, while case-control studies measure and compare the frequency of exposure in the group with the disease (the “cases”) and the group without the disease (the “controls”). In a case-control study, the rates of exposure in the cases and the rates in the controls are compared, and the odds of having the disease when exposed to a suspected agent can be compared with the odds when not exposed. The critical difference between cohort studies and case-control studies is that cohort studies begin with exposed people and unexposed people, while case-control studies begin with individuals who are selected based on whether they have the disease or do not have the disease and their exposure to the agent in question is measured. The goal of both types of studies is to determine if there is an association between exposure to an agent and a disease and the strength (magnitude) of that association.

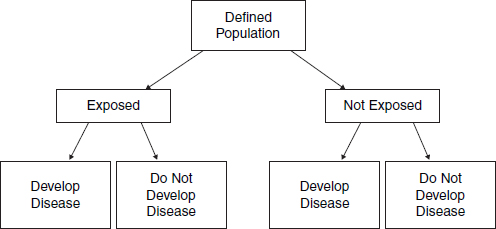

In cohort studies,23 researchers define a study population without regard to the participants’ disease status. The cohort may be defined in the present and followed forward into the future (prospectively) or it may be constructed retrospectively as of sometime in the past and followed over historical time toward the present. In either case, the researchers classify the study participants into groups based on whether they were exposed to the agent of interest (see Figure 1).24 In a prospective study, the exposed and unexposed groups are followed for a specified length of time, and the proportions of individuals in each group who develop the disease of interest are compared. In a retrospective study, the researcher will determine the proportion of individuals in the exposed group who developed the disease from available records or evidence and compare that proportion with the proportion of another group that was not exposed.25 Thus, as illustrated in Table 1,

22. For thumbnail sketches on all types of epidemiologic study designs, see Brian L. Strom, Study Designs Available for Pharmacoepidemiology Studies, in Pharmacoepidemiology 17, 21–26 (Brian L. Strom ed., 4th ed. 2005).

23. Cohort studies also are referred to as prospective studies and followup studies.

24. In some studies, there may be several groups, each with a different magnitude of exposure to the agent being studied. Thus, a study of cigarette smokers might include heavy smokers (>3 packs a day), moderate smokers (1 to 2 packs a day), and light smokers (<1 pack a day). See, e.g., Robert A. Rinsky et al., Benzene and Leukemia: An Epidemiologic Risk Assessment, 316 New Eng. J. Med. 1044 (1987).

25. Sometimes in retrospective cohort studies the researcher gathers historical data about exposure and disease outcome of a cohort. Harold A. Kahn, An Introduction to Epidemiologic Methods 39–41 (1983). Irving Selikoff, in his seminal study of asbestotic disease in insulation workers, included several hundred workers who had died before he began the study. Selikoff was able to obtain information about exposure from union records and information about disease from hospital and autopsy

Figure 1. Design of a cohort study.

Table 1. Cross-Tabulation of Exposure by Disease Status

| | No Disease | Disease | Totals | Incidence Rates of Disease |
|---|---|---|---|---|
| Not exposed | a | c | a + c | c/(a + c) |
| Exposed | b | d | b + d | d/(b + d) |

a researcher would compare the proportion of unexposed individuals with the disease, c/(a + c), with the proportion of exposed individuals with the disease, d/(b + d). If the exposure causes the disease, the researcher would expect a greater proportion of the exposed individuals to develop the disease than the unexposed individuals.26
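The comparison described in Table 1 can be worked through numerically. The following is a minimal sketch using the cell labels a, b, c, and d from Table 1; the class name `CohortTable`, its method names, and the counts are hypothetical and chosen only for illustration. The ratio of the two incidence proportions is included as one simple way of expressing the comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CohortTable:
    """Counts laid out as in Table 1."""
    a: int  # not exposed, no disease
    b: int  # exposed, no disease
    c: int  # not exposed, disease
    d: int  # exposed, disease

    def incidence_unexposed(self) -> float:
        # Proportion of unexposed individuals with the disease: c/(a + c)
        return self.c / (self.a + self.c)

    def incidence_exposed(self) -> float:
        # Proportion of exposed individuals with the disease: d/(b + d)
        return self.d / (self.b + self.d)

    def incidence_ratio(self) -> float:
        # A value above 1 means disease was more frequent among the
        # exposed in this sample; it does not by itself show causation.
        return self.incidence_exposed() / self.incidence_unexposed()


# Hypothetical counts, invented for illustration only.
table = CohortTable(a=980, b=940, c=20, d=60)
print(table.incidence_unexposed())  # 20 of 1000 unexposed
print(table.incidence_exposed())    # 60 of 1000 exposed
print(table.incidence_ratio())
```

In this invented example the exposed group develops the disease at about three times the proportion of the unexposed group, which is the kind of difference a researcher would then examine for sampling error, bias, and confounding.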

One advantage of the cohort study design is that the temporal relationship between exposure and disease can often be established more readily than in other study designs, especially a case-control design, discussed below. By tracking people who are initially not affected by the disease, the researcher can determine the time of disease onset and its relation to exposure. This temporal relationship is critical to the question of causation, because exposure must precede disease onset if exposure caused the disease.

As an example, in 1950 a cohort study was begun to determine whether uranium miners exposed to radon were at increased risk for lung cancer as compared

records. Irving J. Selikoff et al., The Occurrence of Asbestosis Among Insulation Workers in the United States, 132 Ann. N.Y. Acad. Sci. 139, 143 (1965).

26. Researchers often examine the rate of disease or death in the exposed and control groups. The rate of disease or death entails consideration of the number developing disease within a specified period. All smokers and nonsmokers will, if followed for 100 years, die. Smokers will die at a greater rate than nonsmokers in the earlier years.

with nonminers. The study group (also referred to as the exposed cohort) consisted of 3400 white, underground miners. The control group (which need not be the same size as the exposed cohort) comprised white nonminers from the same geographic area. Members of the exposed cohort were examined every 3 years, and the degree of this cohort’s exposure to radon was measured from samples taken in the mines. Ongoing testing for radioactivity and periodic medical monitoring of lungs permitted the researchers to examine whether disease was linked to prior work exposure to radiation and allowed them to discern the relationship between exposure to radiation and disease. Exposure to radiation was associated with the development of lung cancer in uranium miners.27

The cohort design is used often in occupational studies such as the one just discussed. Because the design is not experimental, and the investigator has no control over what other exposures a subject in the study may have had, an increased risk of disease among the exposed group may be caused by agents other than the exposure of interest. A cohort study of workers in a certain industry that pays below-average wages might find a higher risk of cancer in those workers. This may be because they work in that industry, or, among other reasons, because low-wage groups are exposed to other harmful agents, such as environmental toxins present in higher concentrations in their neighborhoods. In the study design, the researcher must attempt to identify factors other than the exposure that may be responsible for the increased risk of disease. If data are gathered on other possible etiologic factors, the researcher generally uses statistical methods28 to assess whether a true association exists between working in the industry and cancer. Evaluating whether the association is causal involves additional analysis, as discussed in Section V.
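The low-wage industry example can be made concrete with invented numbers. The sketch below assumes one simple approach, stratifying the cohort by the other suspected factor (here, neighborhood exposure to environmental toxins) and comparing risks within each stratum; it is not presented as the method any particular study used, and every count and name in it is hypothetical.

```python
# Hypothetical (cancer cases, group size) pairs, invented for illustration.
strata = {
    "high neighborhood exposure": {"industry": (80, 800), "other": (20, 200)},
    "low neighborhood exposure": {"industry": (4, 200), "other": (16, 800)},
}


def risk(cases: int, total: int) -> float:
    """Proportion of a group that developed the disease."""
    return cases / total


def risk_ratio(industry, other) -> float:
    """Risk among industry workers divided by risk among other workers."""
    return risk(*industry) / risk(*other)


def pooled(group: str):
    """Add up cases and group sizes across all strata for one group."""
    cases = sum(s[group][0] for s in strata.values())
    total = sum(s[group][1] for s in strata.values())
    return cases, total


# Crude comparison that ignores neighborhood exposure.
crude = risk_ratio(pooled("industry"), pooled("other"))
print("crude ratio:", crude)

# Comparison within each stratum of neighborhood exposure.
stratum_ratios = {
    name: risk_ratio(groups["industry"], groups["other"])
    for name, groups in strata.items()
}
for name, ratio in stratum_ratios.items():
    print(name, "ratio:", ratio)
```

With these invented counts the crude comparison suggests that industry workers have more than twice the risk, yet within each neighborhood stratum the risks are identical. The apparent association arises only because industry workers are concentrated in the high-exposure neighborhoods.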

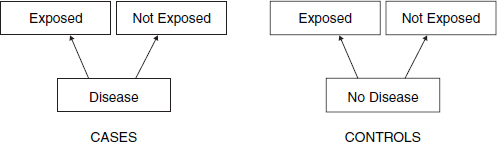

In case-control studies,29 the researcher begins with a group of individuals who have a disease (cases) and then selects a similar group of individuals who do not have the disease (controls). (Ideally, controls should come from the same source population as the cases.) The researcher then compares the groups in terms of past exposures. If a certain exposure is associated with or caused the disease, a higher proportion of past exposure among the cases than among the controls would be expected (see Figure 2).
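The comparison of past exposure among cases and controls can be sketched as a short calculation. The function names and counts below are hypothetical, chosen only for illustration. The sketch computes the odds of exposure in each group and their ratio; algebraically, this ratio equals the ratio of the odds of disease among the exposed to the odds of disease among the unexposed.

```python
def exposure_odds(exposed: int, unexposed: int) -> float:
    """Odds of past exposure within one group (cases or controls)."""
    return exposed / unexposed


def odds_ratio(cases_exposed: int, cases_unexposed: int,
               controls_exposed: int, controls_unexposed: int) -> float:
    """Odds of exposure among cases divided by odds among controls."""
    return (exposure_odds(cases_exposed, cases_unexposed)
            / exposure_odds(controls_exposed, controls_unexposed))


# Hypothetical counts, invented for illustration only.
result = odds_ratio(cases_exposed=40, cases_unexposed=60,
                    controls_exposed=20, controls_unexposed=80)
print(result)
```

A ratio above 1 indicates that past exposure was more common among the cases than among the controls. This naive sketch raises a `ZeroDivisionError` when a cell is zero (for example, when no controls were exposed), a situation that calls for other analytic methods.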

27. This example is based on a study description in Abraham M. Lilienfeld & David E. Lilienfeld, Foundations of Epidemiology 237–39 (2d ed. 1980). The original study is Joseph K. Wagoner et al., Radiation as the Cause of Lung Cancer Among Uranium Miners, 273 New Eng. J. Med. 181 (1965).

28. See Daniel L. Rubinfeld, Reference Guide on Multiple Regression, Section II.B, in this manual; David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section V.D, in this manual.

29. Case-control studies are also referred to as retrospective studies, because researchers gather historical information about rates of exposure to an agent in the case and control groups.

Figure 2. Design of a case-control study.

Thus, for example, in the late 1960s, doctors in Boston were confronted with an unusual number of young female patients with vaginal adenocarcinoma. Those patients became the “cases” in a case-control study (because they had the disease in question) and were matched with “controls,” who did not have the disease. Controls were selected based on their being born in the same hospitals and at the same time as the cases. The cases and controls were compared for exposure to agents that might be responsible, and researchers found maternal ingestion of DES (diethylstilbestrol) in all but one of the cases but none of the controls.30

An advantage of the case-control study is that it usually can be completed in less time and with less expense than a cohort study. Case-control studies are also particularly useful in the study of rare diseases, because if a cohort study were conducted, an extremely large group would have to be studied in order to observe the development of a sufficient number of cases for analysis.31 A number of potential problems with case-control studies are discussed in Section IV.B.

A third type of observational study is a cross-sectional study. In this type of study, individuals are interviewed or examined, and the presence of both the exposure of interest and the disease of interest is determined in each individual at a single point in time. Cross-sectional studies determine the presence (prevalence) of both exposure and disease in the subjects and do not determine the development of disease or risk of disease (incidence). Moreover, because both exposure and disease are determined in an individual at the same point in time, it is not possible to establish the temporal relation between exposure and disease, that is, that the exposure preceded the disease, which would be necessary for drawing any causal inference. Thus, a researcher may use a cross-sectional study to determine the connection between a personal characteristic that does not change over time, such as blood type, and existence of a disease, such as aplastic anemia, by examining individuals and determining their blood types and whether they suffer from aplastic anemia. Cross-sectional studies are infrequently used when the exposure of interest is an environmental toxic agent (current smoking status is a poor measure of an individual’s history of smoking), but these studies can provide valuable leads to further directions for research.32

30. See Arthur L. Herbst et al., Adenocarcinoma of the Vagina: Association of Maternal Stilbestrol Therapy with Tumor Appearance, 284 New Eng. J. Med. 878 (1971).

31. Thus, for example, to detect a doubling of disease caused by exposure to an agent where the incidence of disease is 1 in 100 in the unexposed population would require sample sizes of 3100 for the exposed and nonexposed groups for a cohort study, but only 177 for the case and control groups in a case-control study. Harold A. Kahn & Christopher T. Sempos, Statistical Methods in Epidemiology 66 (1989).

Up to now, we have discussed studies in which data on both exposure and health outcome are obtained for each individual included in the study.33 In contrast, studies that collect data only about the group as a whole are called ecological studies.34 In ecological studies, information about individuals is generally not gathered; instead, overall rates of disease or death for different groups are obtained and compared. The objective is to identify some difference between the two groups, such as diet, genetic makeup, or alcohol consumption, that might explain differences in the risk of disease observed in the two groups.35 Such studies may be useful for identifying associations, but they rarely provide definitive causal answers.36 The difficulty is illustrated below with an ecological study of the relationship between dietary fat and cancer.

32. For more information (and references) about cross-sectional studies, see Leon Gordis, Epidemiology 195–98 (4th ed. 2009).

33. Some individual studies may be conducted in which all members of a group or community are treated as exposed to an agent of interest (e.g., a contaminated water system) and disease status is determined individually. These studies should be distinguished from ecological studies.

34. In Cook v. Rockwell International Corp., 580 F. Supp. 2d 1071, 1095–96 (D. Colo. 2006), the plaintiffs’ expert conducted an ecological study in which he compared the incidence of two cancers among those living in a specified area adjacent to the Rocky Flats Nuclear Weapons Plant with other areas more distant. (The likely explanation for relying on this type of study is the time and expense of a study that gathered information about each individual in the affected area.) The court recognized that ecological studies are less probative than studies in which data are based on individuals but nevertheless held that limitation went to the weight of the study. Plaintiff’s expert was permitted to testify to causation, relying on the ecological study he performed.

In Renaud v. Martin Marietta Corp., 749 F. Supp. 1545, 1551 (D. Colo. 1990), aff’d, 972 F.2d 304 (10th Cir. 1992), the plaintiffs attempted to rely on an excess incidence of cancers in their neighborhood to prove causation. Unfortunately, the court confused the role of epidemiology in proving causation with the issue of the plaintiffs’ exposure to the alleged carcinogen and never addressed the evidentiary value of the plaintiffs’ evidence of a disease cluster (i.e., an unusually high incidence of a particular disease in a neighborhood or community). Id. at 1554.

35. David E. Lilienfeld & Paul D. Stolley, Foundations of Epidemiology 12 (3d ed. 1994).

36. Thus, the emergence of a cluster of adverse events associated with use of heparin, a longtime and widely prescribed anticoagulant, led to suspicions that some specific lot of heparin was responsible. These concerns led the Centers for Disease Control to conduct a case-control study that concluded

If a researcher were interested in determining whether a high dietary fat intake is associated with breast cancer, he or she could compare different countries in terms of their average fat intakes and their average rates of breast cancer. If a country with a high average fat intake also tends to have a high rate of breast cancer, the finding would suggest an association between dietary fat and breast cancer. However, such a finding would be far from conclusive, because it lacks particularized information about an individual’s exposure and disease status (i.e., whether an individual with high fat intake is more likely to have breast cancer).37 In addition to the lack of information about an individual’s intake of fat, the researcher does not know about the individual’s exposures to other agents (or other factors, such as a mother’s age at first birth) that may also be responsible for the increased risk of breast cancer. This lack of information about each individual’s exposure to an agent and disease status detracts from the usefulness of the study and can lead to an erroneous inference about the relationship between fat intake and breast cancer, a problem known as an ecological fallacy. The fallacy is assuming that, on average, the individuals in the study who have suffered from breast cancer consumed more dietary fat than those who have not suffered from the disease. This assumption may not be true. Nevertheless, the study is useful in that it identifies an area for further research: the fat intake of individuals who have breast cancer as compared with the fat intake of those who do not. Researchers who identify a difference in disease or death in an ecological study may follow up with a study based on gathering data about individuals.
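The ecological fallacy can be made concrete with a small sketch. The counts below are invented purely for illustration (they are not real dietary or cancer data), and the country labels and function names are hypothetical. The sketch constructs three countries in which the share of high-fat eaters and the overall cancer rate rise together, yet within each country individuals with high fat intake have exactly the same risk as those with low fat intake.

```python
# Hypothetical counts, invented for illustration only (not real dietary or cancer data).
# For each country: (cases among high-fat eaters, number of high-fat eaters,
#                    cases among low-fat eaters,  number of low-fat eaters)
countries = {
    "A": (20, 2_000, 80, 8_000),
    "B": (100, 5_000, 100, 5_000),
    "C": (240, 8_000, 60, 2_000),
}


def group_level_summary(counts):
    """What an ecological study sees: share with high fat intake and overall cancer rate."""
    hf_cases, hf_total, lf_cases, lf_total = counts
    population = hf_total + lf_total
    return hf_total / population, (hf_cases + lf_cases) / population


def within_country_relative_risk(counts):
    """What individual-level data would show: risk in high-fat vs. low-fat eaters."""
    hf_cases, hf_total, lf_cases, lf_total = counts
    return (hf_cases / hf_total) / (lf_cases / lf_total)


for name, counts in countries.items():
    share, rate = group_level_summary(counts)
    rr = within_country_relative_risk(counts)
    print(f"Country {name}: high-fat share {share:.0%}, "
          f"cancer rate {rate:.1%}, individual-level RR {rr:.2f}")
```

In this constructed example the country-level comparison suggests an association, while the individual-level relative risk within every country is 1.0. Some other factor that differs among the countries, and not individual fat intake, accounts for the pattern, which is why an ecological finding is best treated as a lead for studies of individuals.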

Another epidemiologic approach is to compare disease rates over time and focus on disease rates before and after a point in time when some event of interest took place.38 For example, thalidomide’s teratogenicity (capacity to cause birth defects) was discovered after Dr. Widukind Lenz found a dramatic increase in the incidence of limb reduction birth defects in Germany beginning in 1960. Yet, other than with such powerful agents as thalidomide, which increased the incidence of limb reduction defects by several orders of magnitude, these secular-trend studies (also known as time-line studies) are less reliable and less able to detect modest causal effects than the observational studies described above. Other factors that affect the measurement or existence of the disease, such as improved diagnostic techniques and changes in lifestyle or age demographics, may change over time. If those factors can be identified and measured, it may be possible to control for them with statistical methods. Of course, unknown factors cannot be controlled for in these or any other kind of epidemiologic studies.

that contaminated heparin manufactured by Baxter was responsible for the outbreak of adverse events. See David B. Blossom et al., Outbreak of Adverse Event Reactions Associated with Contaminated Heparin, 359 New Eng. J. Med. 2674 (2008); In re Heparin Prods. Liab. Litig. 2011 WL 2971918 (N.D. Ohio July 21, 2011).

37. For a discussion of the data on this question and what they might mean, see David Freedman et al., Statistics (4th ed. 2007).

38. In Wilson v. Merrell Dow Pharmaceuticals, Inc., 893 F.2d 1149, 1152–53 (10th Cir. 1990), the defendant introduced evidence showing total sales of Bendectin and the incidence of birth defects during the 1970–1984 period. In 1983, Bendectin was removed from the market, but the rate of birth defects did not change. The Tenth Circuit affirmed the lower court’s ruling that the time-line data were admissible and that the defendant’s expert witnesses could rely on them in rendering their opinions. Similar evidence was relied on in cases involving cell phones and the drug Parlodel, which was alleged to cause postpartum strokes in women who took the drug to suppress lactation. See Newman v. Motorola, Inc., 218 F. Supp. 2d 769, 778 (D. Md. 2002); Siharath v. Sandoz Pharms. Corp., 131 F. Supp. 2d 1347, 1358 (N.D. Ga. 2001).

C. Epidemiologic and Toxicologic Studies

In addition to observational epidemiology, toxicology models based on live animal studies (in vivo) may be used to determine toxicity in humans.39 Animal studies have a number of advantages. They can be conducted as true experiments, and researchers control all aspects of the animals’ lives. Thus, they can avoid the problem of confounding,40 which epidemiology often confronts. Exposure can be carefully controlled and measured. Refusals to participate in a study are not an issue, and loss to followup very often is minimal. Ethical limitations are diminished, and animals can be sacrificed and their tissues examined, which may improve the accuracy of disease assessment. Animal studies often provide useful information about pathological mechanisms and play a complementary role to epidemiology by assisting researchers in framing hypotheses and in developing study designs for epidemiologic studies.

Animal studies have two significant disadvantages, however. First, animal study results must be extrapolated to another species—human beings—and differences in absorption, metabolism, and other factors may result in interspecies variation in responses. For example, one powerful human teratogen, thalidomide, does not cause birth defects in most rodent species.41 Similarly, some known teratogens in animals are not believed to be human teratogens. In general, it is often difficult to confirm that an agent known to be toxic in animals is safe for human beings.42 The second difficulty with inferring human causation from animal studies is that the high doses customarily used in animal studies require consideration of the dose–response relationship and whether a threshold no-effect dose exists.43 Those matters are almost always fraught with considerable, and currently unresolvable, uncertainty.44

39. For an in-depth discussion of toxicology, see Bernard D. Goldstein & Mary Sue Henifin, Reference Guide on Toxicology, in this manual.

40. See infra Section IV.C.

41. Phillip Knightley et al., Suffer the Children: The Story of Thalidomide 271–72 (1979).

42. See Ian C.T. Nesbit & Nathan J. Karch, Chemical Hazards to Human Reproduction 98–106 (1983); Int’l Agency for Research on Cancer (IARC), Interpretation of Negative Epidemiologic Evidence for Carcinogenicity (N.J. Wald & Richard Doll eds., 1985) [hereafter IARC].

43. See infra Section V.C & note 119.

44. See Soldo v. Sandoz Pharms. Corp., 244 F. Supp. 2d 434, 466 (W.D. Pa. 2003) (quoting this reference guide in the first edition of the Reference Manual); see also General Elec. Co. v. Joiner, 522 U.S. 136, 143–45 (1997) (holding that the district court did not abuse its discretion in excluding expert testimony on causation based on expert’s failure to explain how animal studies supported expert’s opinion that agent caused disease in humans).

Toxicologists also use in vitro methods, in which human or animal tissue or cells are grown in laboratories and are exposed to certain substances. The problem with this approach is also extrapolation: whether one can generalize the findings from the artificial setting of tissues in laboratories to whole human beings.45

Often toxicologic studies are the only or best available evidence of toxicity.46 Epidemiologic studies are difficult, time-consuming, expensive, and sometimes, because of limited exposure or the infrequency of disease, virtually impossible to perform.47 Consequently, they do not exist for a large array of environmental agents. Where both animal toxicologic and epidemiologic studies are available, no universal rules exist for how to interpret or reconcile them.48 Careful assessment

45. For a further discussion of these issues, see Bernard D. Goldstein & Mary Sue Henifin, Reference Guide on Toxicology, Section III.A, in this manual.

46. IARC, a well-regarded international public health agency, evaluates the human carcinogenicity of various agents. In doing so, IARC obtains all of the relevant evidence, including animal studies as well as any human studies. On the basis of a synthesis and evaluation of that evidence, IARC publishes a monograph containing that evidence and its analysis of the evidence and provides a categorical assessment of the likelihood the agent is carcinogenic. In a preamble to each of its monographs, IARC explains what each of the categorical assessments means. Solely on the basis of the strength of animal studies, IARC may classify a substance as “probably carcinogenic to humans.” International Agency for Research on Cancer, Human Papillomaviruses, 90 Monographs on the Evaluation of Carcinogenic Risks to Humans 9–10 (2007), available at http://monographs.iarc.fr/ENG/Monographs/vol90/index.php; see also Magistrini v. One Hour Martinizing Dry Cleaning, 180 F. Supp. 2d 584, 600 n.18 (D.N.J. 2002). When IARC monographs are available, they are generally recognized as authoritative. Unfortunately, IARC has conducted evaluations of only a fraction of potentially carcinogenic agents, and many suspected toxic agents cause effects other than cancer.

47. Thus, in a series of cases involving Parlodel, a lactation suppressant for mothers of newborns, efforts to conduct an epidemiologic study of its effect on causing strokes were stymied by the infrequency of such strokes in women of child-bearing age. See, e.g., Brasher v. Sandoz Pharms. Corp., 160 F. Supp. 2d 1291, 1297 (N.D. Ala. 2001). In other cases, a plaintiff’s exposure to an overdose of a drug may be unique or nearly so. See Zuchowicz v. United States, 140 F.3d 381 (2d Cir. 1998).

48. See IARC, supra note 41 (identifying a number of substances and comparing animal toxicology evidence with epidemiologic evidence); Michele Carbone et al., Modern Criteria to Establish Human Cancer Etiology, 64 Cancer Res. 5518, 5522 (2004) (National Cancer Institute symposium concluding that “There should be no hierarchy [among different types of scientific methods to determine cancer causation]. Epidemiology, animal, tissue culture and molecular pathology should be seen as integrating evidences in the determination of human carcinogenicity.”)

A number of courts have grappled with the role of animal studies in proving causation in a toxic substance case. One line of cases takes a very dim view of their probative value. For example, in Brock v. Merrell Dow Pharmaceuticals, Inc., 874 F.2d 307, 313 (5th Cir. 1989), the court noted the “very limited usefulness of animal studies when confronted with questions of toxicity.” A similar view is reflected in Richardson v. Richardson-Merrell, Inc., 857 F.2d 823, 830 (D.C. Cir. 1988), Bell v. Swift Adhesives, Inc., 804 F. Supp. 1577, 1579–80 (S.D. Ga. 1992), and Cadarian v. Merrell Dow Pharmaceuticals, Inc., 745 F. Supp. 409, 412 (E.D. Mich. 1989).

Other courts have been more amenable to the use of animal toxicology in proving causation. Thus, in Marder v. G.D. Searle & Co., 630 F. Supp. 1087, 1094 (D. Md. 1986), aff’d sub nom. Wheelahan v. G.D. Searle & Co., 814 F.2d 655 (4th Cir. 1987), the court observed: “There is a range of scientific

of the methodological validity and power49 of the epidemiologic evidence must be undertaken, and the quality of the toxicologic studies and the questions of interspecies extrapolation and dose–response relationship must be considered.50

methods for investigating questions of causation—for example, toxicology and animal studies, clinical research, and epidemiology—which all have distinct advantages and disadvantages.” In Milward v. Acuity Specialty Products Group, Inc., 639 F.3d 11, 17-19 (1st Cir. 2011), the court endorsed an expert’s use of a “weight-of-the-evidence” methodology, holding that the district court abused its discretion in ruling inadmissible an expert’s testimony about causation based on that methodology. As a corollary to recognizing weight of the evidence as a valid scientific technique, the court also noted the role of judgment in making an appropriate inference from the evidence. While recognizing the legitimacy of the methodology, the court also acknowledged that, as with any scientific technique, it can be improperly applied. See also Metabolife Int’l, Inc. v. Wornick, 264 F.3d 832, 842 (9th Cir. 2001) (holding that the lower court erred in per se dismissing animal studies, which must be examined to determine whether they are appropriate as a basis for causation determination); In re Heparin Prods. Liab. Litig. 2011 WL 2971918 (N.D. Ohio July 21, 2011) (holding that animal toxicology in conjunction with other non-epidemiologic evidence can be sufficient to prove causation); Ruff v. Ensign-Bickford Indus., Inc., 168 F. Supp. 2d 1271, 1281 (D. Utah 2001) (affirming animal studies as sufficient basis for opinion on general causation.); cf. In re Paoli R.R. Yard PCB Litig., 916 F.2d 829, 853–54 (3d Cir. 1990) (questioning the exclusion of animal studies by the lower court). The Third Circuit in a subsequent opinion in Paoli observed:

[I]n order for animal studies to be admissible to prove causation in humans, there must be good grounds to extrapolate from animals to humans, just as the methodology of the studies must constitute good grounds to reach conclusions about the animals themselves. Thus, the requirement of reliability, or “good grounds,” extends to each step in an expert’s analysis all the way through the step that connects the work of the expert to the particular case.

In re Paoli R.R. Yard PCB Litig., 35 F.3d 717, 743 (3d Cir. 1994); see also Cavallo v. Star Enter., 892 F. Supp. 756, 761–63 (E.D. Va. 1995) (courts must examine each of the steps that lead to an expert’s opinion), aff’d in part and rev’d in part, 100 F.3d 1150 (4th Cir. 1996).

One explanation for these conflicting lines of cases may be that when there is a substantial body of epidemiologic evidence that addresses the causal issue, animal toxicology has much less probative value. That was the case, for example, in the Bendectin cases of Richardson, Brock, and Cadarian. Where epidemiologic evidence is not available, animal toxicology may be thought to play a more prominent role in resolving a causal dispute. See Michael D. Green, Expert Witnesses and Sufficiency of Evidence in Toxic Substances Litigation: The Legacy of Agent Orange and Bendectin Litigation, 86 Nw. U. L. Rev. 643, 680–82 (1992) (arguing that plaintiffs should be required to prove causation by a preponderance of the available evidence); Turpin v. Merrell Dow Pharms., Inc., 959 F.2d 1349, 1359 (6th Cir. 1992); In re Paoli R.R. Yard PCB Litig., No. 86–2229, 1992 U.S. Dist. LEXIS 16287, at *16 (E.D. Pa. 1992). For another explanation of these cases, see Gerald W. Boston, A Mass-Exposure Model of Toxic Causation: The Control of Scientific Proof and the Regulatory Experience, 18 Colum. J. Envtl. L. 181 (1993) (arguing that epidemiologic evidence should be required in mass-exposure cases but not in isolated-exposure cases); see also IARC, supra note 41; Bernard D. Goldstein & Mary Sue Henifin, Reference Guide on Toxicology, Section I.F, in this manual. The Supreme Court, in General Electric Co. v. Joiner, 522 U.S. 136, 144–45 (1997), suggested that there is no categorical rule for toxicologic studies, observing, “[W]hether animal studies can ever be a proper foundation for an expert’s opinion [is] not the issue…. The [animal] studies were so dissimilar to the facts presented in this litigation that it was not an abuse of discretion for the District Court to have rejected the experts’ reliance on them.”

49. See infra Section IV.A.3.

50. See Ellen F. Heineman & Shelia Hoar Zahm, The Role of Epidemiology in Hazard Evaluation, 9 Toxic Substances J. 255, 258–62 (1989).

III. How Should Results of an Epidemiologic Study Be Interpreted?

Epidemiologists are ultimately interested in whether a causal relationship exists between an agent and a disease. However, the first question an epidemiologist addresses is whether an association exists between exposure to the agent and disease. An association between exposure to an agent and disease exists when they occur together more frequently than one would expect by chance.51 Although a causal relationship is one possible explanation for an observed association between an exposure and a disease, an association does not necessarily mean that there is a cause–effect relationship. Interpreting the meaning of an observed association is discussed below.

This section begins by describing the ways of expressing the existence and strength of an association between exposure and disease. It reviews ways in which an incorrect result can be produced because of the sampling methods used in all observational epidemiologic studies and then examines statistical methods for evaluating whether an association is real or the result of a sampling error.

The strength of an association between exposure and disease can be stated in various ways,52 including as a relative risk, an odds ratio, or an attributable risk.53 Each of these measurements of association examines the degree to which the risk of disease increases when individuals are exposed to an agent.

A commonly used approach for expressing the association between an agent and disease is relative risk (“RR”). It is defined as the ratio of the incidence rate (often referred to as incidence) of disease in exposed individuals to the incidence rate in unexposed individuals:

$$
\text{RR} = \frac{\text{incidence rate in the exposed}}{\text{incidence rate in the unexposed}}
$$

51. A negative association implies that the agent has a protective or curative effect. Because the concern in toxic substances litigation is whether an agent caused disease, this reference guide focuses on positive associations.

52. Another outcome measure is a risk difference. A risk difference is the difference between the proportion of disease in those exposed to the agent and the proportion of disease in those who were unexposed. Thus, in the relative risk example in the text below, the proportion of disease in those exposed is 40/100 and the proportion of disease in the unexposed is 20/200, or 10/100. The risk difference is 30/100.

53. Numerous courts have employed these measures of the strength of an association. See, e.g., In re Bextra & Celebrex Mktg. Sales Practices & Prod. Liab. Litig., 524 F. Supp. 2d 1166, 1172–74 (N.D. Cal. 2007); Cook v. Rockwell Int’l Corp., 580 F. Supp. 2d 1071, 1095 (D. Colo. 2006) (citing the second edition of this reference guide); In re W.R. Grace & Co., 355 B.R. 462, 482–83 (Bankr. D. Del. 2006).

The incidence rate of disease is defined as the number of cases of disease that develop during a specified period of time divided by the number of persons in the cohort under study.54 Thus, the incidence rate expresses the risk that a member of the population will develop the disease within a specified period of time.

For example, a researcher studies 100 individuals who are exposed to an agent and 200 who are not exposed. After 1 year, 40 of the exposed individuals are diagnosed as having a disease, and 20 of the unexposed individuals also are diagnosed as having the disease. The relative risk of contracting the disease is calculated as follows:

- The incidence rate of disease in the exposed individuals is 40 cases per year per 100 persons (40/100), or 0.4.

- The incidence rate of disease in the unexposed individuals is 20 cases per year per 200 persons (20/200), or 0.1.

- The relative risk is calculated as the incidence rate in the exposed group (0.4) divided by the incidence rate in the unexposed group (0.1), or 4.0.

A relative risk of 4.0 indicates that the risk of disease in the exposed group is four times as high as the risk of disease in the unexposed group.55
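The arithmetic of this example can be checked with a brief sketch. The function names are hypothetical and chosen only for illustration. The calculation follows the definitions given above, with incidence taken as new cases divided by persons followed over the stated period.

```python
def incidence_rate(new_cases: int, persons: int) -> float:
    """Cases arising during the follow-up period divided by persons followed."""
    if persons <= 0:
        raise ValueError("persons must be positive")
    return new_cases / persons


def relative_risk(exposed_cases: int, exposed_total: int,
                  unexposed_cases: int, unexposed_total: int) -> float:
    """Ratio of the incidence rate in the exposed to that in the unexposed."""
    unexposed_rate = incidence_rate(unexposed_cases, unexposed_total)
    if unexposed_rate == 0:
        raise ZeroDivisionError("no cases among the unexposed; RR is undefined")
    return incidence_rate(exposed_cases, exposed_total) / unexposed_rate


# The example in the text: 40 of 100 exposed and 20 of 200 unexposed develop disease.
exposed = incidence_rate(40, 100)      # 0.4
unexposed = incidence_rate(20, 200)    # 0.1
rr = relative_risk(40, 100, 20, 200)

print(f"incidence (exposed)   = {exposed:.2f}")
print(f"incidence (unexposed) = {unexposed:.2f}")
print(f"relative risk         = {rr:.1f}")
```

The same two incidence rates also yield the risk difference, 0.4 − 0.1 = 0.3, which expresses the excess in absolute rather than relative terms.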

In general, the relative risk can be interpreted as follows:

- If the relative risk equals 1.0, the risk in exposed individuals is the same as the risk in unexposed individuals.56 There is no association between exposure to the agent and disease.

- If the relative risk is greater than 1.0, the risk in exposed individuals is greater than the risk in unexposed individuals. There is a positive association between exposure to the agent and the disease, which could be causal.

- If the relative risk is less than 1.0, the risk in exposed individuals is less than the risk in unexposed individuals. There is a negative association, which could reflect a protective or curative effect of the agent on risk of disease. For example, immunizations lower the risk of disease. The results suggest that immunization is associated with a decrease in disease and may have a protective effect on the risk of disease.

Although relative risk is a straightforward concept, care must be taken in interpreting it. Whenever an association is uncovered, further analysis should be conducted to assess whether the association is real or a result of sampling error, confounding, or bias.57 These same sources of error may mask a true association, resulting in a study that erroneously finds no association.

54. Epidemiologists also use the concept of prevalence, which measures the existence of disease in a population at a given point in time, regardless of when the disease developed. Prevalence is expressed as the proportion of the population with the disease at the chosen time. See Gordis, supra note 32, at 43–47.

55. See DeLuca v. Merrell Dow Pharms., Inc., 911 F.2d 941, 947 (3d Cir. 1990); Magistrini v. One Hour Martinizing Dry Cleaning, 180 F. Supp. 2d 584, 591 (D.N.J. 2002).

56. See Magistrini, 180 F. Supp. 2d at 591.

The odds ratio (“OR”) is similar to a relative risk in that it expresses in quantitative terms the association between exposure to an agent and a disease.58 It is a convenient way to estimate the relative risk in a case-control study when the disease under investigation is rare.59 The odds ratio approximates the relative risk when the disease is rare.60

In a case-control study, the odds ratio is the ratio of the odds that a case (one with the disease) was exposed to the odds that a control (one without the disease) was exposed. In a cohort study, the odds ratio is the ratio of the odds of developing a disease when exposed to a suspected agent to the odds of developing the disease when not exposed.
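A short numerical sketch may help show why rarity matters. It uses the cohort definition of the odds ratio just given and assumes, purely for illustration, an exposure that doubles the risk of disease (a relative risk of 2.0) at several hypothetical baseline risks. The function names are likewise hypothetical.

```python
def odds(probability: float) -> float:
    """Convert a probability (risk) into odds."""
    return probability / (1.0 - probability)


def cohort_odds_ratio(risk_exposed: float, risk_unexposed: float) -> float:
    """Odds of disease when exposed divided by odds of disease when not exposed."""
    return odds(risk_exposed) / odds(risk_unexposed)


# Hypothetical baseline risks in the unexposed; exposure is assumed to double the risk.
for baseline in (0.20, 0.05, 0.01, 0.001):
    exposed = 2 * baseline
    rr = exposed / baseline
    oratio = cohort_odds_ratio(exposed, baseline)
    print(f"baseline risk {baseline:>6.1%}: RR = {rr:.2f}, OR = {oratio:.3f}")
```

At a baseline risk of 20 percent the odds ratio is about 2.67 although the relative risk is 2.0; at a baseline risk of 0.1 percent the odds ratio is about 2.002. The rarer the disease, the closer the two measures become.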

Consider a case-control study, with results as shown schematically in a 2 × 2 table (Table 2):

Table 2. Cross-tabulation of cases and controls by exposure status

| | Cases (with disease) | Controls (no disease) |
| --- | --- | --- |
| Exposed | a | b |
| Not exposed | c | d |

In a case-control study,

$$
\text{OR} = \frac{\text{odds that a case was exposed}}{\text{odds that a control was exposed}}
$$

57. See infra Sections IV.B–C.

58. A relative risk cannot be calculated for a case-control study, because a case-control study begins by examining a group of persons who already have the disease. That aspect of the study design prevents a researcher from determining the rate at which individuals develop the disease. Without a rate or incidence of disease, a researcher cannot calculate a relative risk.

59. If the disease is not rare, the odds ratio is still valid to determine whether an association exists, but interpretation of its magnitude is less intuitive.

60. See Marcello Pagano & Kimberlee Gauvreau, Principles of Biostatistics 354 (2d ed. 2000). For further detail about the odds ratio and its calculation, see Kahn & Sempos, supra note 31, at 47–56.

Looking at Table 2, this ratio can be calculated as

$$
\text{OR} = \frac{a/c}{b/d}
$$

This works out to ad/bc. Because we are multiplying two diagonal cells in the table and dividing by the product of the other two diagonal cells, the odds ratio is also called the cross-products ratio.

Consider the following hypothetical study: A researcher identifies 100 individuals with a disease who serve as “cases” and 100 people without the disease who serve as “controls” for her case-control study. Forty of the 100 cases were exposed to the agent and 60 were not. Among the control group, 20 people were exposed and 80 were not. The data can be presented in a 2 × 2 table (Table 3):

Table 3. Case-Control Study Outcome

| | Cases (with disease) | Controls (no disease) |
| --- | --- | --- |
| Exposed | 40 | 20 |
| Not exposed | 60 | 80 |

The calculation of the odds ratio would be:

$$
\text{OR} = \frac{ad}{bc} = \frac{40 \times 80}{20 \times 60} = \frac{3200}{1200} \approx 2.67
$$

If the disease is relatively rare in the general population (about 5% or less), the odds ratio is a good approximation of the relative risk, which means that there is almost a tripling of the disease in those exposed to the agent.61
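The cross-products calculation for the hypothetical study can be reproduced in a few lines. This is a minimal sketch: the function name is hypothetical, and the cell labels a, b, c, and d follow Table 2 (a and b are the exposed cases and controls, c and d the unexposed cases and controls).

```python
def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Cross-products ratio ad/bc for a 2 x 2 case-control table.

    a = exposed cases,   b = exposed controls,
    c = unexposed cases, d = unexposed controls.
    """
    if b == 0 or c == 0:
        raise ZeroDivisionError("odds ratio is undefined when b or c is zero")
    return (a * d) / (b * c)


# Hypothetical study from the text: 40 of 100 cases and 20 of 100 controls were exposed.
result = odds_ratio(a=40, b=20, c=60, d=80)
print(round(result, 2))  # 2.67
```

Computing the odds separately gives the same answer: the odds of exposure among cases are 40/60 and among controls 20/80, and their ratio is also about 2.67.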

61. The odds ratio is usually marginally greater than the relative risk. As the disease in question becomes more common, the difference between the odds ratio and the relative risk grows.

The reason why the odds ratio approximates the relative risk when the incidence of disease is small can be demonstrated by referring to Table 2. The odds ratio, as stated in the text, is ad/bc. The relative risk for such a study would compare the incidence of disease in the exposed group, or a/(a + b), with the incidence of disease in the unexposed group or c/(c + d). The relative risk would be:

$$
\text{RR} = \frac{a/(a+b)}{c/(c+d)}
$$

When the incidence of disease is low, a and c will be small in relation to b and d, and the relative risk will then approximate the odds ratio of ad/bc. See Leon Gordis, Epidemiology 208–09 (4th ed. 2009).

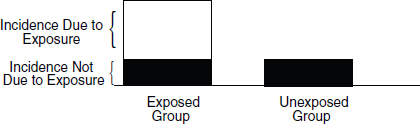

A frequently used measurement of risk is the attributable risk (“AR”). The attributable risk represents the amount of disease among exposed individuals that can be attributed to the exposure. It also can be expressed as the proportion of the disease among exposed individuals that is associated with the exposure (also called the “attributable proportion of risk,” the “etiologic fraction,” or the “attributable risk percent”). The attributable risk reflects the maximum proportion of the disease that can be attributed to exposure to an agent and consequently the maximum proportion of disease that could be potentially prevented by blocking the effect of the exposure or by eliminating the exposure.62 In other words, if the association is causal, the attributable risk is the proportion of disease in an exposed population that might be caused by the agent and that might be prevented by eliminating exposure to that agent (see Figure 3).63

Figure 3. Risks in exposed and unexposed groups.

To determine the proportion of a disease that is attributable to an exposure, a researcher would need to know the incidence of the disease in the exposed group and the incidence of disease in the unexposed group. The attributable risk is

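$$
\text{AR} = \frac{(\text{incidence in the exposed}) - (\text{incidence in the unexposed})}{\text{incidence in the exposed}}
$$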

62. Kenneth J. Rothman et al., Modern Epidemiology 297 (3d ed. 2008); see also Landrigan v. Celotex Corp., 605 A.2d 1079, 1086 (N.J. 1992) (illustrating that a relative risk of 1.55 conforms to an attributable risk of 35%, that is, (1.55 − 1.0)/1.55 = .35, or 35%).

63. Risk is not zero for the control group (those not exposed) when there are other causal chains that cause the disease that do not require exposure to the agent. For example, some birth defects are the result of genetic sources, which do not require the presence of any environmental agent. Also, some degree of risk in the control group may be the result of background exposure to the agent being studied. For example, nonsmokers in a control group may have been exposed to passive cigarette smoke, which is responsible for some cases of lung cancer and other diseases. See also Ethyl Corp. v. EPA, 541 F.2d 1, 25 (D.C. Cir. 1976). There are some diseases that do not occur without exposure to an agent; these are known as signature diseases. See infra note 177.

The attributable risk can be calculated using the example described in Section III.A. Suppose a researcher studies 100 individuals who are exposed to a substance and 200 who are not exposed. After 1 year, 40 of the exposed individuals are diagnosed as having a disease, and 20 of the unexposed individuals are also diagnosed as having the disease.

- The incidence of disease in the exposed group is 40 persons out of 100 who contract the disease in a year.

- The incidence of disease in the unexposed group is 20 persons out of 200 (or 10 out of 100) who contract the disease in a year.

- The proportion of disease that is attributable to the exposure is therefore (40 − 10)/40, that is, 30 persons out of 40, or 75%.

This means that 75% of the disease in the exposed group is attributable to the exposure. We should emphasize here that “attributable” does not necessarily mean “caused by.” Up to this point, we have only addressed associations. Inferring causation from an association is addressed in Section V.
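The arithmetic in this example can be reproduced in a few lines. This is a minimal sketch: the function names are illustrative, and the second function uses the equivalent relative-risk form, (RR − 1)/RR, that appears in note 62.

```python
def attributable_risk(exposed_cases, exposed_total, unexposed_cases, unexposed_total):
    """Proportion of disease among the exposed that is attributable to exposure."""
    incidence_exposed = exposed_cases / exposed_total
    incidence_unexposed = unexposed_cases / unexposed_total
    return (incidence_exposed - incidence_unexposed) / incidence_exposed

def attributable_risk_from_rr(rr):
    """Equivalent form in terms of the relative risk: (RR - 1) / RR."""
    return (rr - 1.0) / rr

# The example from the text: 40 of 100 exposed and 20 of 200 unexposed develop disease.
ar = attributable_risk(40, 100, 20, 200)
rr = (40 / 100) / (20 / 200)

print(f"AR from incidences: {ar:.0%}")
print(f"AR from a relative risk of {rr:.1f}: {attributable_risk_from_rr(rr):.0%}")
print(f"AR for a relative risk of 1.55 (note 62): {attributable_risk_from_rr(1.55):.0%}")
```

Both routes give 75% for the example, and a relative risk of 1.55 gives approximately 35%, matching the figure quoted in note 62.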

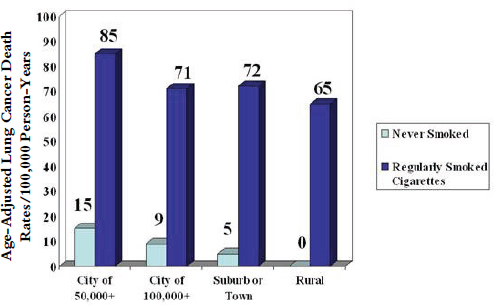

D. Adjustment for Study Groups That Are Not Comparable

Populations often differ in characteristics that relate to disease risk, such as age, sex, and race. Those who live in Florida have a much higher death rate than those who live in Alaska.64 Is sunshine dangerous? Perhaps, but the Florida population is much older than the Alaska population, and some adjustment must be made for the differences in age distribution in the two states in order to compare disease or death rates between populations. The technique used to accomplish this is called adjustment, and two types of adjustment are used—direct and indirect. In direct adjustment (e.g., when based on age), overall disease/death rates are calculated for each population as though each had the age distribution of another standard, or reference, population, using the age-specific disease/death rates for each study population. We can then compare these overall rates, called age-adjusted rates, knowing that any difference between these rates cannot be attributed to differences in age, since both age-adjusted rates were generated using the same standard population.
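Direct adjustment can be sketched as follows. All figures are hypothetical: the two populations are constructed to have identical age-specific death rates but very different age distributions, and the age bands and standard population are assumptions made for illustration only.

```python
# Hypothetical data: age band -> (number of people, number of deaths).
# Both populations have the same age-specific death rates (0.1%, 0.5%, 5%).
younger_population = {"0-39": (80_000, 80), "40-64": (15_000, 75), "65+": (5_000, 250)}
older_population = {"0-39": (40_000, 40), "40-64": (30_000, 150), "65+": (30_000, 1_500)}

# Hypothetical standard (reference) population: age band -> number of people.
standard = {"0-39": 60_000, "40-64": 25_000, "65+": 15_000}

def crude_rate(population):
    """Overall death rate, ignoring age."""
    people = sum(n for n, _ in population.values())
    deaths = sum(d for _, d in population.values())
    return deaths / people

def directly_adjusted_rate(population, standard):
    """Apply the study population's age-specific rates to the standard age distribution."""
    expected_deaths = sum(
        standard[band] * (deaths / people)
        for band, (people, deaths) in population.items()
    )
    return expected_deaths / sum(standard.values())

for label, pop in [("younger", younger_population), ("older", older_population)]:
    print(
        f"{label}: crude = {crude_rate(pop):.5f}, "
        f"age-adjusted = {directly_adjusted_rate(pop, standard):.5f}"
    )
```

In this constructed example the crude death rate of the older population is more than four times that of the younger one, yet the age-adjusted rates are identical, because the entire difference is due to age distribution.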

Indirect adjustment is used when the age-specific rates for a study population are not known. In that case, the overall disease/death rate for the standard/reference population is recalculated based on the age distribution of the population of interest using the age-specific rates of the standard population. Then, the actual number of disease cases/deaths in the population of interest can be compared with the number in the reference population that would be expected if the reference population had the age distribution of the population of interest.

64. See Lilienfeld & Stolley, supra note 35, at 68–70 (the mortality rate in Florida is approximately three times what it is in Alaska).

The ratio of the observed number to the expected number is called the standardized mortality ratio (SMR). When the outcome of interest is disease rather than death, it is called the standardized morbidity ratio.65 If the ratio equals 1.0, the observed number of deaths equals the expected number of deaths, and the mortality rate of the population of interest is no different from that of the reference population. If the SMR is greater than 1.0, the population of interest has a higher mortality rate than that of the reference population, and if the SMR is less than 1.0, the population of interest has a lower mortality rate than that of the reference population.
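Indirect adjustment and the resulting SMR can be sketched in the same way. The reference rates, the age distribution of the population of interest, and the observed death count below are all hypothetical, and the function names are illustrative.

```python
# Hypothetical age-specific death rates in the reference population.
reference_rates = {"0-39": 0.001, "40-64": 0.005, "65+": 0.05}

# Hypothetical population of interest: only its age distribution and its
# total number of deaths are known, not its age-specific rates.
study_population = {"0-39": 2_000, "40-64": 5_000, "65+": 1_000}
observed_deaths = 100

def expected_deaths(reference_rates, population):
    """Deaths expected if the reference rates applied to this age distribution."""
    return sum(reference_rates[band] * count for band, count in population.items())

def standardized_mortality_ratio(observed, expected):
    """Observed deaths divided by expected deaths."""
    return observed / expected

expected = expected_deaths(reference_rates, study_population)
smr = standardized_mortality_ratio(observed_deaths, expected)
print(f"expected deaths = {expected:.1f}, SMR = {smr:.2f}")
```

Here 77 deaths would be expected, so 100 observed deaths give an SMR of about 1.30, meaning mortality in this hypothetical population is roughly 30% higher than in the reference population after accounting for age.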

Thus, age adjustment provides a way to compare populations while in effect holding age constant. Adjustment is used not only for comparing mortality rates in different populations but also for comparing rates in different groups of subjects selected for study in epidemiologic investigations. Although this discussion has focused on adjusting for age, it is also possible to adjust for any number of other variables, such as gender, race, occupation, and socioeconomic status. It is also possible to adjust for several factors simultaneously.66

IV. What Sources of Error Might Have Produced a False Result?

Incorrect study results occur in a variety of ways. A study may find a positive association (relative risk greater than 1.0) when there is no true association. Or a study may erroneously find no association when in reality there is one. A study may also find an association when one truly exists, but the association found may be greater or less than the real association.

Three general categories of phenomena can cause an association found in a study to be erroneous: chance, bias, and confounding. Before any inferences about causation are drawn from a study, the possibility of these phenomena must be examined.67

65. See Taylor v. Airco, Inc., 494 F. Supp. 2d 21, 25 n.4 (D. Mass. 2007) (explaining SMR and its relationship with relative risk). For an example of adjustment used to calculate an SMR for workers exposed to benzene, see Robert A. Rinsky et al., Benzene and Leukemia: An Epidemiologic Risk Assessment, 316 New Eng. J. Med. 1044 (1987).

66. For further elaboration on adjustment, see Gordis, supra note 32, at 73–78; Philip Cole, Causality in Epidemiology, Health Policy, and Law, 27 Envtl. L. Rep. 10,279, 10,281 (1997).

67. See Cole, supra note 66, at 10,285. In DeLuca v. Merrell Dow Pharmaceuticals, Inc., 911 F.2d 941, 955 (3d Cir. 1990), the court recognized and discussed random sampling error. It then went on to refer to other errors (e.g., systematic bias) that create as much or more error in the outcome of a study. For a similar description of error in study procedure and random sampling, see David H. Kaye & David A. Freedman, Reference Guide on Statistics, Section IV, in this manual.

The findings of a study may be the result of chance (or random error). In designing a study, the size of the sample can be increased to reduce (but not eliminate) the likelihood of random error. Once a study has been completed, statistical methods (discussed in Section IV.A) permit an assessment of the extent to which the results of a study may be due to random error.

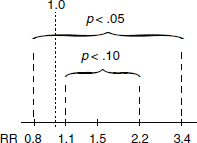

The two main techniques for assessing random error are statistical significance and confidence intervals. A study that is statistically significant has results that are unlikely to be the result of random error, although any criterion for “significance” is somewhat arbitrary. A confidence interval provides both the relative risk (or other risk measure) found in the study and a range (interval) within which the risk likely would fall if the study were repeated numerous times. These two techniques (which are closely related) are explained in Section IV.A.

We should emphasize a matter that those unfamiliar with statistical methodology frequently find confusing: That a study’s results are statistically significant says nothing about the importance of the magnitude of any association (i.e., the relative risk or odds ratio) found in a study or about the biological or clinical importance of the finding.68 “Significant,” as used with the adjective “statistically,” does not mean important. A study may find a statistically significant relationship that is quite modest—perhaps it increases the risk only by 5%, which is equivalent to a relative risk of 1.05.69 An association may be quite large—the exposed cohort might be 10 times more likely to develop disease than the control group—but the association is not statistically significant because of the potential for random error given a small sample size. In short, statistical significance is not about the size of the risk found in a study.
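The distinction between statistical significance and the size of an association can be illustrated numerically. The sketch below rests on several assumptions that are not drawn from the text: it uses a 95% confidence interval computed with a common large-sample approximation on the logarithm of the relative risk, it treats an interval that excludes 1.0 as "statistically significant," and both sets of counts are hypothetical. The approximation is rough for very small counts, so the small-study interval is illustrative only.

```python
import math

def relative_risk_with_ci(exposed_cases, exposed_total,
                          unexposed_cases, unexposed_total, z=1.96):
    """Relative risk with an approximate 95% confidence interval (log method)."""
    rr = (exposed_cases / exposed_total) / (unexposed_cases / unexposed_total)
    # Approximate standard error of ln(RR); an assumption of this sketch.
    se_log_rr = math.sqrt(
        1 / exposed_cases - 1 / exposed_total
        + 1 / unexposed_cases - 1 / unexposed_total
    )
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper

# Hypothetical large study: modest association (RR = 1.05), 100,000 per group.
large_study = relative_risk_with_ci(10_500, 100_000, 10_000, 100_000)

# Hypothetical small study: large association (RR = 10), only 55 subjects.
small_study = relative_risk_with_ci(1, 5, 1, 50)

for label, (rr, lower, upper) in [("large", large_study), ("small", small_study)]:
    significant = lower > 1.0 or upper < 1.0
    print(f"{label} study: RR = {rr:.2f}, 95% CI = ({lower:.2f}, {upper:.2f}), "
          f"excludes 1.0: {significant}")
```

Under these assumptions the modest relative risk of 1.05 has an interval that excludes 1.0, while the relative risk of 10 from the small study has an interval that includes 1.0, which mirrors the point that significance reflects the potential for random error rather than the magnitude of the risk.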

Bias (or systematic error) also can produce error in the outcome of a study. Epidemiologists attempt to minimize bias through their study design, including data collection protocols. Study designs are developed before data gathering begins. However, even the best designed and conducted studies have biases, which may be subtle. Consequently, after data collection is completed, analytical tools are often used to evaluate potential sources of bias. Sometimes, after bias is identified, the epidemiologist can determine whether the bias would tend to inflate or dilute any association that may exist. Identification of the bias may permit the epidemiologist to make an assessment of whether the study’s conclusions are valid. Epidemiologists may reanalyze a study’s data to correct for a bias identified in a completed study or to validate the analytical methods used.70 Common biases and how they may produce invalid results are described in Section IV.B.

68. See Modern Scientific Evidence, supra note 2, § 6.36 at 358 (“Statisticians distinguish between ‘statistical’ and ‘practical’ significance….”); Cole, supra note 66, at 10,282. Understandably, some courts have been confused about the relationship between statistical significance and the magnitude of the association. See Hyman & Armstrong, P.S.C. v. Gunderson, 279 S.W.3d 93, 102 (Ky. 2008) (describing a small increased risk as being considered statistically insignificant and a somewhat larger risk as being considered statistically significant); In re Pfizer Inc. Sec. Litig., 584 F. Supp. 2d 621, 634–35 (S.D.N.Y. 2008) (confusing the magnitude of the effect with whether the effect was statistically significant); In re Joint E. & S. Dist. Asbestos Litig., 827 F. Supp. 1014, 1041 (S.D.N.Y. 1993) (concluding that any relative risk less than 1.50 is statistically insignificant), rev’d on other grounds, 52 F.3d 1124 (2d Cir. 1995).

69. In general, small effects that are statistically significant require larger sample sizes. When effects are larger, generally fewer subjects are required to produce statistically significant findings.

Finally, a study may reach incorrect conclusions about causation because, although the agent and disease are associated, the agent is not a true causal factor. Rather, the agent may be associated with another agent that is the true causal factor, and this latter factor confounds the relationship being examined in the study. Confounding is explained in Section IV.C.

A. What Statistical Methods Exist to Evaluate the Possibility of Sampling Error?71

Before detailing the statistical methods used to assess random error (which we use as synonymous with sampling error), we explain two concepts that are central to epidemiology and statistical analysis. Understanding these concepts should facilitate comprehension of the statistical methods.

Epidemiologists often refer to the true association (also called “real association”), which is the association that really exists between an agent and a disease and that might be found by a perfect (but nonexistent) study. The true association is a concept that is used in evaluating the results of a given study even though its value is unknown. By contrast, a study’s outcome will produce an observed association, which is known.

Formal procedures for statistical testing begin with the null hypothesis, which posits that there is no true association (i.e., a relative risk of 1.0) between the agent and disease under study. Data are gathered and analyzed to see whether they disprove72 the null hypothesis. The data are subjected to statistical testing to assess the plausibility that any association found is a result of random error or whether it supports rejection of the null hypothesis. The use of the null hypothesis for this testing should not be understood as the a priori belief of the investigator. When epidemiologists investigate an agent, it is usually because they hypothesize that the agent is a cause of some outcome. Nevertheless, epidemiologists prepare their study designs and test the plausibility that any association found in a study was the result of random error by using the null hypothesis.73

70. E.g., Richard A. Kronmal et al., The Intrauterine Device and Pelvic Inflammatory Disease: The Women’s Health Study Reanalyzed, 44 J. Clin. Epidemiol. 109 (1991) (a reanalysis of a study that found an association between the use of IUDs and pelvic inflammatory disease concluded that IUDs do not increase the risk of pelvic inflammatory disease).

71. For a bibliography on the role of statistical significance in legal proceedings, see Sanders, supra note 13, at 329 n.138.

72. See, e.g., Daubert v. Merrell Dow Pharms., Inc., 509 U.S. 579, 593 (1993) (scientific methodology involves generating and testing hypotheses).

1. False positives and statistical significance

When a study results in a positive association (i.e., a relative risk greater than 1.0), epidemiologists try to determine whether that outcome represents a true association or is the result of random error.74 Random error is illustrated by a fair coin (i.e., not modified to produce more heads than tails [or vice versa]). On average, for example, we would expect that coin tosses would yield half heads and half tails. But sometimes, a set of coin tosses might yield an unusual result, for example, six heads out of six tosses,75 an occurrence that would result, purely by chance, in less than 2% of a series of six tosses. In the world of epidemiology, sometimes the study findings, merely by chance, do not reflect the true relationships between an agent and outcome. Any single study—even a clinical trial—is in some ways analogous to a set of coin tosses, being subject to the play of chance. Thus, for example, even though the true relative risk (in the total population) is 1.0, an epidemiologic study of a particular study population may find a relative risk greater than (or less