Opportunities to Build a Safer System for Health IT

Health IT supports a safety-critical system: its design, implementation, and use can either provide a substantial improvement in the quality and safety of patient care or pose serious risks to patients. In any sociotechnical system, consideration of the interactions of the people, processes, and technology forms the baseline for ensuring successful system performance. Evidence suggests that existing health IT products in actual use may not yet be consistently producing the anticipated benefits, indicating that health IT products, in some cases, can contribute to unintended risks of harm.

To improve safety, health IT needs to optimize the interaction between people, technology, and the rest of the sociotechnical system. Sociotechnical theory, as described in Chapter 3, advocates for direct involvement of end users in system design. It shifts the paradigm for software development from technical development done in isolation by software and systems engineers to a process that is inclusive and iterative, engaging end users in design, deployment, and integration of the software product into workflow to enhance satisfaction and effectiveness.

Adhering to well-developed practices for design, training, and use can minimize safety risks. Building safer health IT involves exploring both real and potential hazards so that hazards are minimized or eliminated. Health IT can be viewed as having two related but distinct life cycles, with one relating to the design and development of health IT and the other associated with the implementation and use of health IT. Vendors and implementing organizations have specific roles in all phases of both life cycles and ought to coordinate their efforts for ensuring safety. The size, complexity, and resources available to large and small clinician practices and health care organizations may affect their abilities to fully realize the benefits of health IT products intended to facilitate safer care. This chapter reflects as much as possible the literature, experiences of key stakeholders, and the committee’s expert opinion.

Technology does not exist in isolation from its operator. As such, the design and use of health IT are interdependent. The design and development of products affect their safe performance and the extent to which clinician users will accept or reject the technology. To the end user, a safely functioning health IT product is one that includes

• Easy retrieval of accurate, timely, and reliable native and imported data;

• A system the user wants to interact with;

• Simple and intuitive data displays;

• Easy navigation;

• Evidence at the point of care to aid decision making;

• Enhancements to workflow, automating mundane tasks, and streamlining work, never increasing physical or cognitive workload;

• Easy transfer of information to and from other organizations and providers; and

• No unanticipated downtime.

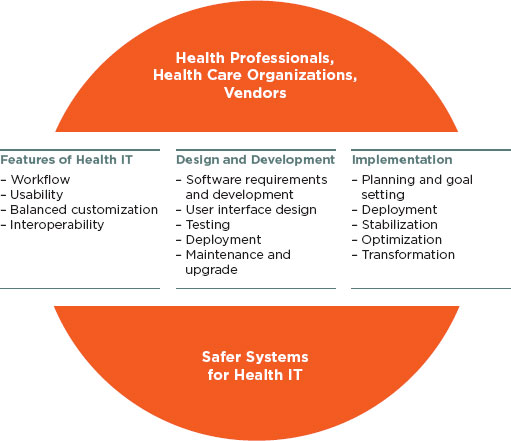

Investing in health IT products aims to make care safer and improve health professional workflow while not introducing harm or risks. Key features such as enhanced workflow, usability, balanced customization, and interoperability affect whether or not clinician users enjoy successful interactions with the product and achieve these aims. Effective design and development drive the safe functioning of the products as well as determine some aspects of safe use by health professionals. Collaboration among users and vendors across the continuum of technology design, including embedding products into clinical workflow and ongoing product optimization, represents a dynamic process characterized by frequent feedback and joint accountability to promote safer health IT. The combination of these activities can result in building safer systems for health IT, as summarized in Figure 4-1.

Safer Systems for Health IT Seamlessly Support Cognitive and Clinical Workflows

The cognitive work of clinicians is substantial. Clinicians must rapidly integrate large amounts of data to make decisions in unstable and complex settings. The use of health IT is intended to aid in performing technical work that is also cognitive work, such as coordinating resources for a procedure, assembling patient data for action, or supporting a decision that requires knowledge of resource availability. However, creating a graphical representation of the information needed to support the complex processes clinicians use to collect and analyze data elements, consider alternative choices, and then make a definitive decision is challenging.

FIGURE 4-1

Interdependent activities for building a safer system for health IT.

The introduction of health IT sometimes changes clinical workflows in unanticipated ways; these changes may be detrimental to patient safety. Although some templates may be very useful to providers, a rigid template for recording the “history of present illness,” for example, may alter the conversation between physician and patient in such a way that important historical clues are not conveyed or received. An inflexible order sequence may require the provider to hold important orders in mind while navigating through mandatory screens, increasing the cognitive workload of communicating patient care orders and adding to the possibility that intended orders are forgotten. A time-consuming process for locating laboratory or radiographic data presents a barrier to retrieval. In addition, the time spent on cumbersome data retrieval and data remodeling is time taken away from other clinical demands, requiring shortcuts in other aspects of care. Evaluation of the impact of introducing health IT on the cognitive workload of clinicians is important to determine unintended consequences and the potential for distraction, delays in care, and increased workload in general.

The greatest threats to safety arise during initial implementation, when workflow is new, a steep learning curve threatens previous practice, and nonperformance of any aspect of a technology causes the user to seek an immediate alternate pathway to achieve a particular functionality, otherwise called a workaround. Conversely, users of mature health IT products are at risk for habituation and overreliance on a technology, requiring vigilant attention to alerts or other notifications so that safety features are not ignored. When use of health IT impedes workflow, there must be a way to identify not only the faulty process that results but also any potential increase in workload for clinicians.

Workarounds, common in health IT environments, are often a symptom of suboptimal design. When workarounds circumvent built-in safety features of a product, patient safety may be compromised. Integrating health IT within real-world clinical workflows requires attention to in situ use to ensure appropriate use of safety features (Koppel et al., 2008).

For example, coping mechanisms such as “paste forward” (or “copy forward”), a practice of copying portions of previously entered documentation and reusing the text in a new note, may be understood as compensatory survival strategies where the electronic environment does not support an efficient clinician workflow. However, this function may encourage staff to repeat an earlier evaluation rather than consider whether it is still accurate. In addition, the problem list in some electronic health records (EHRs) is limited to structured International Classification of Diseases (ninth revision) (ICD-9) entries, which may not capture the relevant clinical information required for optimal care. Paste forward is then employed as a means of bringing forward important, longitudinal data, such as richly detailed descriptions of the prior evaluation and medical thinking for each of a patient’s multiple medical problems, that otherwise are not accommodated in the EHR. Yet, if done without exquisite attention to detail, these workarounds themselves can create risk. The optimal design and implementation of EHRs should include a deep understanding of and response to the clinician-initiated workarounds.
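One way an implementing organization might begin to study paste forward is to measure how much of a new note repeats the previous one. The sketch below is only an illustration under stated assumptions: the function names and the 0.9 threshold are hypothetical, notes are treated as plain text, and a high score signals only that a note deserves a second look, not that it is wrong.

```python
from difflib import SequenceMatcher


def copy_forward_ratio(previous_note: str, new_note: str) -> float:
    """Return a 0..1 similarity score between two notes, compared word by word."""
    prev_words = previous_note.split()
    new_words = new_note.split()
    return SequenceMatcher(None, prev_words, new_words).ratio()


def needs_review(previous_note: str, new_note: str, threshold: float = 0.9) -> bool:
    """Flag a note whose text is almost entirely carried over from the prior note.

    The default threshold is an arbitrary illustrative value, not a validated cutoff.
    """
    return copy_forward_ratio(previous_note, new_note) >= threshold
```

A measure like this says nothing about why the text was copied; as the paragraph above notes, the workaround often compensates for information the EHR cannot otherwise carry forward.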

Usability Is a Key Driver of Safety

Health professionals work in complex, high-risk, and frequently chaotic environments fraught with interruptions, time pressures, and incomplete, disorganized, and overwhelming amounts of information. Health professionals require technologies that make this work easier and safer, rather than more difficult. Health IT products are needed that promote efficiency and ease of use while minimizing the likelihood of error.

Many health information systems used today provide poor support for the cognitive tasks and workflow of clinicians (NRC, 2009). This can lead to clinicians spending time unnecessarily identifying the most relevant data for clinical decision making, potentially selecting the wrong data, and missing important information that may increase patient safety risks. If the design of the software disrupts an efficient workflow or presents a cumbersome user interface, the potential for harm rises (see Box 4-1). Software design and its effect on workflow, as well as an effective user interface, are key determinants of usability.

The committee expressed concerns that poor usability, such as the example in Box 4-1, is one of the greatest threats to patient safety. On the other hand, once improved, usability can be an effective promoter of patient safety.

The common expectation is that health IT should make “the right thing to do the easy thing to do” as facilitated by effective design. Evaluation of the impact of health IT on usability and on cognitive workload is important to determine unintended consequences and the potential for distraction, delays in care, and increased workload in general.

Usability guidelines and principles focused on improving safety need to be put into practice. Research over the past several decades supports a number of usability guidelines and principles. For example, there are a finite number of styles with which a user may interact with a computer system: direct manipulation (e.g., moving objects on a screen), menu selection, form fill-in, command language, and natural language. Each of these styles has known advantages and disadvantages, and one (or perhaps a blend of two or more) may well be more appropriate for a specific application from a usability standpoint.

The National Institute of Standards and Technology (NIST) has been developing guidelines and standards for usability design and evaluation. One report, NIST Guide to the Processes Approach for Improving the Usability of Electronic Health Records, introduces the basic concepts of usability, common principles of good usability design, methods for usability evaluation and improvement, processes of usability engineering, and the importance of organizational commitment to usability (NIST, 2010b). The second report, Customized Common Industry Format Template for Electronic Health Record Usability Testing, is not only a template for reporting usability evaluation but also a guideline for what and how usability evaluation should be conducted (NIST, 2010a). NIST released draft guidance on design evaluation and human user performance testing for usability issues related to patient safety, Technical Evaluation, Testing and Evaluation of the Usability of Electronic Health Records. NIST will publish its final guidance based on constructive technical feedback received during a public comment process for the draft report (NIST, 2011).

BOX 4-1

Opportunities for Unintended Consequences

Health IT that is not designed to facilitate common tasks can result in unintended consequences.

• The most common ordering sequence is frequently not the most prominent sequence presented to the clinician, increasing the chance of an inadvertent error. For example, in the hospital setting, where anticoagulation is being initiated or where patient characteristics are in flux, the required dose of Coumadin varies from day to day. When the selections for “___ mg of Coumadin daily” appear at the top of the list of choices, there is an increased chance clinicians will inadvertently select “5 mg of Coumadin daily” rather than scrolling down to the bottom of the page and finding “5 mg of Coumadin today.” This design-workflow mismatch may result in patients receiving unintended Coumadin doses or similarly may affect other medications requiring daily dose adjustment.

• When a patient’s medications are listed alphabetically or randomly rather than grouped by type, users are forced through several pages of medications and mentally knit together the therapeutic program for each individual condition. In this situation, the cognitive workload of understanding of the patient’s diabetic regimen, for example, is made unnecessarily complex, and a clinician may easily miss one of the patient’s five diabetic medications, scattered among the patient’s 24 medications displayed across three different pages. Likewise, a patient’s congestive heart failure medications may be dispersed across the same several pages, interspersed with medications for other conditions, again increasing the mental workload. In one example, “furosemide 80 mg q am” was toward the top of the list and then, separated by many intervening medications and on the next page, the clinician later found an entry for “furosemide 40 mg q pm.” Such data disorganization contributes to the possibility of clinical error.

Even if clinicians are aware of these issues and become more diligent, health IT products that are not designed for users’ needs create additional cognitive workload, which, over time, may cause the clinician to be more susceptible to making mistakes.

Personal communication, Christine A. Sinsky, August 11, 2011.
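The medication-list example in Box 4-1 can be made concrete with a small sketch that groups a list by condition instead of presenting it in arbitrary order. Everything here is illustrative: the `Medication` type, its `indication` field, and the sample entries are assumptions, and real medication records may not carry an indication at all.

```python
from collections import defaultdict
from typing import NamedTuple


class Medication(NamedTuple):
    name: str
    sig: str          # dose and schedule as free text
    indication: str   # hypothetical field; not every record will have one


def group_by_indication(meds):
    """Group medications by condition so each regimen can be read in one place."""
    grouped = defaultdict(list)
    for med in meds:
        grouped[med.indication].append(med)
    # Sort by name within each group so split doses of one drug sit together.
    return {
        indication: sorted(items, key=lambda m: m.name)
        for indication, items in sorted(grouped.items())
    }


# Illustrative sample data only.
SAMPLE = [
    Medication("furosemide", "80 mg q am", "heart failure"),
    Medication("metformin", "500 mg bid", "diabetes"),
    Medication("atorvastatin", "20 mg daily", "hyperlipidemia"),
    Medication("furosemide", "40 mg q pm", "heart failure"),
]

for indication, items in group_by_indication(SAMPLE).items():
    print(indication)
    for med in items:
        print(f"  {med.name} {med.sig}")
```

With this grouping, the two furosemide entries appear next to each other rather than separated by intervening medications.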

The National Center for Cognitive Informatics and Decision Making in Healthcare (NCCD) has developed the Rapid Usability Assessment process to assess the usability of EHRs on specific meaningful use objectives and to provide detailed and actionable feedback to vendors to help improve their systems. The Rapid Usability Assessment process is based on two established methodologies. The first is the use of well-established usability principles to identify usability problems that are targets for potential improvements (Nielsen, 1994; Zhang et al., 2003). This evaluation is performed by usability experts. The usability problems identified in the process are documented, rated for severity by the experts, and communicated to the vendors.

The second phase of the Rapid Usability Assessment involves the use of a technique known as the “keystroke-level model” (Card et al., 1983; Kieras, unpublished). Using this method, it is possible to estimate the time and steps required to complete specific tasks. This method makes the assumption that an expert user would be using the system and therefore provides the optimal or fastest time to complete the task. The Rapid Usability Assessment uses a software tool, CogTool, to enhance the accuracy and reliability of the keystroke-level model (John et al., 2004). The program calculates the amount of time an expert user will use to complete the task and steps involved in that task. A confidential report is provided to participating vendors, which includes objective measures of the usability of the system, actionable results, and opportunities for further consultation with the usability evaluation team. It is important to note that although usability is integral to safe systems, sometimes safe practices require taking more time to perform a task to do it safely.
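To show the idea behind the keystroke-level model, the sketch below sums per-operator times for a sequence of steps. The operator durations are commonly cited approximations from the keystroke-level model literature and are assumptions here; they are not taken from this chapter, and CogTool uses a more detailed model than this simple sum.

```python
# Approximate operator times in seconds (assumed, commonly cited values).
OPERATOR_SECONDS = {
    "K": 0.28,  # keystroke or button press
    "P": 1.10,  # point to a target with a mouse
    "H": 0.40,  # move hands between keyboard and mouse
    "M": 1.35,  # mental preparation
}


def estimate_task_seconds(operators: str) -> float:
    """Estimate expert task time by summing the time of each operator code."""
    try:
        return sum(OPERATOR_SECONDS[op] for op in operators)
    except KeyError as exc:
        raise ValueError(f"unknown operator: {exc.args[0]!r}") from exc


# Hypothetical task: think, point at a button, click it; repeated three times.
print(round(estimate_task_seconds("MPK" * 3), 2))
```

Because the model assumes an error-free expert, an estimate like this is a lower bound on task time and does not capture the case noted above, where a safe practice deliberately takes longer.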

In addition to the Rapid Usability Assessment, the NCCD also developed a unified framework for EHR usability, called TURF, which stands for the four major factors for usability: task, user, representation, and function (Zhang and Walji, 2011). TURF is a theory that describes, explains, and predicts usability differences across EHR systems. It is also a framework that defines and measures EHR usability systematically and objectively. The NCCD is currently developing and testing software tools to automate a subset of the features of TURF, but these tests are still laboratory based.

A dynamic tension exists between the need for design standards development and vendor competitive differentiation, which is discussed further in the next section. As a result, dissemination of best practices for EHR design has been restrained (McDonnell et al., 2010). Without a comprehensive set of standards for EHR-specific functionalities, general software usability design practices are used knowing that modification will likely be needed to meet the needs of health professionals.

User-centered design and usability testing take into account knowledge, preferences, workflow, and human factors associated with the complex information needs of varied providers in diverse settings. Many new product enhancements address functionalities desired by users that facilitate or improve workflow and improve on current health IT products. One such example is the electronic capture of gestures observed in an operating room, which are then recorded as activities without interrupting the clinician’s work within the sterile field. To support usability within EHRs, Shneiderman has identified eight heuristically and experientially derived “golden rules” for interface design (Shneiderman et al., 2009) (see Table 4-1).

Achieving the Right Balance Between Customization and Standardization

Current health IT products do not arrive as finished products ready for out-of-the-box or turnkey deployment, but rather often require substantial completion on site. Many smaller organizations do not have the resources for such onsite “customization” and must get by without the products being user ready. For example, when a large institution recognized the need for a diabetic flow sheet that was not supplied by the vendor, it created its own diabetic flow sheet locally. A smaller organization, using the same EHR product and with the same need for a diabetic flow sheet, did not have this capability and its clinicians reverted to a paper workaround using a handwritten flow sheet.

Widespread institution-specific customization presents challenges to maintenance, upgrades, sharing of best practices, and interoperability across multiple-user organizations. Some standardization is necessary, but too much standardization can unnecessarily restrict an organization. In some instances, the implementing organization needs to customize and adapt innovation—the product being integrated—in order to better adopt the innovation (Berwick, 2003). The committee believes there is value in standardization and expressed the need for judicious use of customization when appropriate. Vendors are encouraged to provide more complete, responsive, and resilient health IT products as a preferred way to decrease the need for extensive customization.

TABLE 4-1

Eight Golden Rules for Interface Design

| Principles | Characteristics |
| --- | --- |
| Strive for consistency | Similar tasks ought to have similar sequences of action to perform, for example: identical terminology in prompts and menus; consistent screen appearance. Any exceptions should be understandable and few. |
| Cater to universal usability | Users span a wide range of expertise and have different desires, for example: expert users may want shortcuts; novices may want explanations. |
| Offer informative feedback | Systems should provide feedback for every user action to: reassure the user that the appropriate action has been or is being done; instruct the user about the nature of an error if one has been made. Infrequent or major actions call for substantial responses, while frequent or minor actions require less feedback. |
| Design dialogs to yield closure | Have a beginning, middle, and end to action sequences. Provide informative feedback when a group of actions has been completed. Signal that it is okay to drop contingency plans. Indicate the need for preparing the next group of actions. |
| Prevent errors | Systems should be designed so that users cannot make serious errors, for example: do not display menu items that are not appropriate in a given context; do not allow alphabetic characters in numeric entry fields. User errors should be detected and instructions for recovery offered. Errors should not change the system state. |
| Permit easy reversal of actions | When possible, actions (and sequences of actions) should be reversible. |
| Support internal locus of control | Surprises or changes should be avoided in familiar behaviors and complex data-entry sequences. |
| Reduce short-term memory load | Interfaces should be avoided if they require users to remember information from one screen for use in connection with another screen. |

Interoperability

Increased interoperability (i.e., the ability to exchange health information between health IT products and across organizational boundaries) can improve patient safety (Kaelber et al., 2008). Multiple levels of interoperability exist and are needed for different levels of communication (see Table 4-2). Currently, laboratory data are relatively easy to exchange because good standards, such as Logical Observation Identifiers Names and Codes (LOINC), exist and are widely accepted. However, important information such as problem lists and medication lists (which exist in some health IT products) is not easily transmitted and understood by the receiving health IT product because existing standards have not been uniformly adopted. Standards need to be further developed to support interoperability throughout all health IT products.

The committee believes interoperability must extend throughout the continuum of care, including pharmacies, laboratories, ambulatory, acute, post-acute, home, and long-term care settings. For all these organizations to safely coordinate care, health IT products must use common nomenclatures, encoding formats, and presentation formats. Interoperability with personal health record systems and other patient engagement tools is also desirable, both in delivering data to patients and in collecting information from any patient-operated systems.

Failure to achieve interoperability has considerable risks for patient safety. Without the ability of different health IT products to exchange data, information must be transferred by hand or electronic means outside the primary method (e.g., facsimile). Every time information is copied or transmitted by hand, there is a risk of error or loss of data. Incomplete and erroneous records may cause delays in care and result in harm.

Imported data must be timely, accurate, accessible, and displayed in a user-friendly fashion. Patient safety can be at risk even among products that have achieved some level of interoperability. For example, when a data value expected as a number arrives as a string, it can be misinterpreted, resulting in a wrong display. Also, electrocardiogram tracings can be displayed on a screen split in parts and rotated 90 degrees. The extra time required to mentally process such data into what is familiar delays care and increases the chance of error.
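The number-arriving-as-a-string case can be illustrated with a small defensive check on the receiving side. This is a minimal sketch, assuming imported values arrive as Python strings or numbers; the function name is hypothetical, and a real interface would also need to handle units, comparators such as “<5”, and reference ranges.

```python
import math
from typing import Optional, Union


def parse_numeric_result(raw: Union[str, int, float]) -> Optional[float]:
    """Return the value as a finite float, or None if it is not a plain number.

    Returning None lets the caller show the raw text and flag it for review
    instead of silently displaying a misread value.
    """
    if isinstance(raw, bool):
        return None  # booleans are ints in Python but are not results
    if isinstance(raw, (int, float)):
        value = float(raw)
    else:
        try:
            value = float(raw.strip())
        except (ValueError, AttributeError):
            return None
    return value if math.isfinite(value) else None
```

The design choice here is to reject rather than guess: a value such as “<5” is left for a human or a more specific parser, since coercing it to 5 would change its clinical meaning.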

TABLE 4-2

Aspects of Interoperability and Their Impact on Patient Safety

| Aspects of Interoperability | Definition | Impact on Patient Safety |
| --- | --- | --- |
| Ability to exchange the physical data stream of bits (Hoekstra et al., 2009) that represent relevant information | Allows for electronic communication between software components | Software components that cannot communicate with each other force users to reenter data manually, which detracts from time better used attending to patient safety and increases opportunities to enter misinformation |
| Ability to exchange data without loss of semantic content | Semantic content refers to information that allows software to understand the electronic bits | The loss of meaning in received data compromises patient safety |
| Accept “plug-ins” seamlessly | Ability for software system to properly work when modules from different vendors are “plugged in” | The inability to use multiple modules within one organization decreases the likelihood that users can provide their patient information to another health IT product. Lack of “plug-in” interoperability means that the user does not have the ability to select modules from multiple vendors that may perform a specific function more safely |
| Display similar information in the same way | Different health IT products display similar information in similar ways. Systems are consistent in matters such as screen position of fields, color, and units | When information is displayed inconsistently across organizations, the user must reconcile the different representations of the information mentally, which requires an increased cognitive effort that could be better used toward safe care and increases the chance that a user may make a mistake |

The nationwide exchange of data is intended to support portability and immediate access to one’s health information. However, the competitive marketplace today provides few incentives for vendors themselves to support portability. The committee believes conformance tests ought to be available to clinicians so they can ensure their data are exchangeable. Independent entities need to be supported to develop “stress tests” that can be applied to validate whether medical record interoperability can be achieved. These should impose requirements that will ensure both completeness of the record (e.g., a drug administration should include dosage and time) and interoperability (e.g., the drug name and units should be available and displayable by the receiving system). Suitable organizations to develop conformance tests might be entities such as the ECRI Institute or the Certification Commission for Healthcare Information Technology. Smaller clinics are in particular need of testing to ensure interoperability. Vendors could facilitate this process by publicly documenting their product and identifying the conformance tests they ran successfully.
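A conformance test of the kind described above could start with a simple completeness check on each exchanged record. The sketch below is an assumption-laden illustration: the field names are hypothetical and do not come from any standard, and a real test suite would also validate coded values and formats.

```python
# Hypothetical field names for one drug administration record.
REQUIRED_FIELDS = ("drug_name", "dose", "units", "administered_at")


def missing_fields(record: dict) -> list:
    """List the required fields that are absent or empty in an exchanged record."""
    return [
        field for field in REQUIRED_FIELDS
        if record.get(field) in (None, "")
    ]


# Illustrative record that omits units, so the dose cannot be interpreted safely.
example = {"drug_name": "furosemide", "dose": 40, "administered_at": "2011-08-11T08:00"}
print(missing_fields(example))
```

An empty result means only that the record is complete by this checklist; it does not show that the receiving system can display the drug name and units correctly, which would need a separate test.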

Making data exchangeable is primarily the responsibility of the vendors who produce the code. The committee believes guidelines need to be developed to support safe interoperability (see Box 4-2).

Safety Considerations for EHR Implementation in Small Practices and Small Hospitals

In examining the current state of health IT, it is important to recognize that experiences often differ for care providers in small settings as compared to others. This difference is often not well captured in the literature but instead recounted in personal experiences, which are the basis for this section. An emphasis on safety specific to small practices and hospitals (as well as rural providers and federal health centers) is particularly important because they provide a large fraction of the services delivered by the health care system.¹ These providers are often unfamiliar with system safety as an office concept, and they tend to lack champions and administrative support for a broader vision of how health IT fits into the overall picture of health care delivery. As a result, small providers can be at greater risk for not using health IT effectively and, more importantly, can fail to recognize and abate risks even after sentinel events.

BOX 4-2

Potential Guidelines for Safe Interoperability

The committee believes guidelines for safe interoperability are needed, such as the following:

• All health IT products should be able to export data in structured and standard formats, ready for use in other modules or systems, without loss of semantic content.

• All health IT products should provide their documentation without a fee and in an easily read form. All products should publicize their adherence to relevant standards.

• All health IT products should be able to display imported data in a way compatible with data generated internally, so that users need not exert additional mental effort to deal with data arriving from other software packages.

• Use of standards for data representation should be strongly encouraged, as should support for both the completion of important standards still being developed, such as the RxNorm drug nomenclature effort at the National Library of Medicine, and the adoption of standards that exist but lack adequate adherence, such as SNOMED (Systematized Nomenclature for Medicine).

Small practices and hospitals may often be more nimble than their larger counterparts in making changes to workflow processes and procedures, but they tend to lag in health IT implementation compared to large integrated delivery or hospital-based systems (see Box 4-3). Compared to their larger counterparts, small practices and hospitals generally have:

• Less administrative capacity or support to undertake the complex new process of health IT implementation and use (and no skills acquisition in this area during their medical training), especially with respect to workflow redesign to optimize the use of health IT (e.g., introduction of an e-prescribing system may require 26 separate mouse clicks to refill a prescription when only a few of those clicks may actually require a physician);

• Less redundancy in staff, which makes training more challenging and burdensome;

• Less capacity to support and train patients in use of new health IT products (e.g., these providers wind up becoming “help desks” for their patients using their IT such as secure email);

• Less access to technical support to keep their systems running reliably;

• Less ability to appreciate, purchase, and afford the amount of training and/or vendor support needed for an optimal implementation;

• Less capacity to monitor for and recognize new failure modes associated with implementation of health IT; and

• An absence of standard recommendations on a variety of key implementation issues such as what to do with paper, and whether to implement as a “big bang” or in phases.


1 Ninety-three percent of all primary care physicians work in organizations of 10 physicians or fewer; of those, about half practice in organizations with 1 to 2 physicians (Bodenheimer and Pham, 2010).

BOX 4-3

A Small Provider’s Experience with Electronic Health Records (EHRs)

It was mostly on my initiative to implement an EHR. The process itself, which included five round-trip flights to Anchorage and 8 days off of work, was pricey and incredibly disappointing. Most EHRs were too expensive for small offices.

Of all my years in practice, the last year and a half has been my worst. Information is so poorly organized and difficult to access that I now have to remember that Ethel Smorley had an aorta that measured 4.2 cm on the ultrasound, which we did to follow up on her RUQ [right upper quadrant] abdominal pain. At the same time, I must remember that Thomas Richardson needs a RIBA [recombinant immunoblot assay], if and when the reagent becomes available, to sort out the meaning of his hepatitis C antibody.

For one patient I filed, a slightly elevated calcium with inappropriately normal PTH [parathyroid hormone] gets filed under “laboratory.” I even took the time to label it “Ca + PTH-abnl-need short term f/u” so it stands out. Several months later when I look back at the lab-tab to review, that calcium and PTH are buried among all of the subsequent labs the patient has had and the date is off to the right of the “real estate” and without that all-important lateral scroll I can’t tell if the lab was from a week ago or 4 years ago. To review the lab I have to click open one lab—wait one Mississippi, two Mississippi, three Mississippi—look, close, open the next— one Mississippi, two Mississippi, three Mississippi—look, close, open the next—one Mississippi, two Mississippi, three Mississippi—look, and close. It is easy to get lost.

For this patient I made an addendum to the chart noting the patient’s complaint of depression and fatigue as well as the abnormal calcium and PTH and the need to follow up. But this addendum is in the virtual basement of the note and if you don’t do a descending scroll down down down past the Assessment and Plan and the deceptive blank space beneath, the addendum isn’t visible. You have to know it is there to find it, but if you know it is there you don’t need to find it.

I copied a message from another patient’s confidential and encrypted email and pasted it into his chart. I don’t know how it happened but somehow it was also copied to a woman’s chart. Now, according to my EHR, she is suffering from the same symptoms as the previous patient: scrotal pain, decreased urinary stream, and dysuria. It was tucked in the screen pocket out of sight and I immortalized it when I coded and closed the note. Now it lives there forever: A woman with scrotal pain.

If not for my familiarity with long-term patients, the luxury to schedule fewer patients in my day and survive the resultant financial hit, and the resilience of patients, I truly believe that bad outcomes could have happened. As I tell patients, I used to be a doctor but now I am a typist. I am fed up and frustrated and frightened of really missing something bad.

Personal communication, Elizabeth A. Kohnen, M.D., M.P.H., FACP, August 5, 2011.

Central to safe clinical implementation and use of health IT is adoption of redesigned workflows. With the adoption of health IT, each member of the office team or the staff within a small hospital setting needs to approach his job differently and use new tools, while also breaking up tasks and aligning them differently among team members. While this is true for all settings, the challenges of these processes are exacerbated in small practices and hospitals, which often have little to no experience in workflow redesign. New technologies simply inserted in old frameworks and workflows are likely to create new risks for patients.

Challenges also exist in both small practices and small hospitals with the integration of multiple health IT products from boutique niche software systems to legacy software systems. This is due in part to the proprietary nature of some products and the lack of adoption of data standards in other health IT products. The challenges to and costs of switching out vendors are prohibitive for small practices and hospitals, thus creating an obstacle for embracing new and improved technologies.

The great variability in implementation of health IT products in small provider settings is a major reason the literature is limited. Further research is needed to better understand the requirements specific to small practices and hospitals to enable safer deployment and use of health IT, such as the necessary resources and skills that facilitate the change-management process employed during health IT product implementation and adoption. In addition, small practices need self-assessment tools to evaluate the safety of their operational EHR systems.

Small Office Practices

Primary care physicians in small practices face particular challenges. They need to access, integrate, and interpret a large amount of data from multiple sources into a comprehensive picture of a patient. Many of these data are generated outside their offices (e.g., by hospitals, specialists, laboratories, and pathology laboratories) in information systems that are less likely to be integrated into their own record-keeping system.

In contrast, nonprimary care physicians may focus on more limited aspects of the patient’s condition and are likely to generate much of the clinical data on which they need to act within their offices (e.g., a cardiac imaging machine produces structured data about relevant clinical issues such as left ventricular ejection fraction). Nonprimary care providers may also be more likely than primary care providers to have clinical support staff who assist with data entry, data aggregation, and data extraction in the routine course of patient care.

Small Hospitals

Small hospitals face many of the same challenges as small office practices. Typically there is limited expertise in health IT implementation, workflow redesign, and training, which is compounded by the fact that some small hospitals are struggling to implement the processes and technology needed to meet Health Information Technology for Economic and Clinical Health regulations. There can also be heavy dependence on vendor-supplied services and expertise. Specific challenges include the following:

• Technology-related costs, in addition to the major investment needed for organizational and process change, challenge small hospitals that are already strapped for the resources needed to maintain financial viability.

• Individuals with the required implementation process management expertise are either not present in sufficient numbers or are too immersed in other activities to devote the required time to a safe implementation.

• The small hospital has a huge challenge to assemble and organize the relatively large number of professionals required to ensure a successful implementation while at the same time ensuring that these participants’ other responsibilities are met.

• Day-to-day participation by practicing physicians is especially problematic because small hospitals generally lack the complement of available clinical champions, physician administrators, hospital-based practitioners, intensivists, clinic directors, and training directors who often play critical roles in implementing clinical systems at larger institutions (Frisse and Metzer, 2005).

OPPORTUNITIES TO IMPROVE THE DESIGN AND DEVELOPMENT OF TECHNOLOGIES

Software development is an important determinant of patient safety in all types of health IT. Opportunities exist for vendors, aided by users, to improve safety in the different phases or activities of the software design and development life cycle. Although in theory these activities are identifiable, in practice the boundaries between them are not well defined, and the activities require varying levels of intensity to complete.

Health IT Vendor Design Activities

The key activities in the software life cycle are identifying requirements, software development and design, and testing (see Table 4-3).2

Software Requirements and Development Activities

Traditionally, the software development process begins with the explicit statement of what the software is intended to do and the circumstances under which such behavior is appropriate, otherwise known as the performance requirements. Clinicians communicate their needs in detailed statements based on evidence of safe practices whenever possible. Clinicians and software developers need to communicate safety needs and expectations for the clinical environment and the health IT product.

Traditional writing of requirements, although precise, can be a tedious task. When requirements become very complex, it is difficult to be confident that they actually describe what the users want. At times, a sequence of prototypes can be an alternative that allows users to see what a proposed version of the software would actually do. User input can provide for a more effective and accurate product. However, in a safety-critical area, even if the software task is defined by a sequence of prototypes, it will still be necessary to define the task sufficiently rigorously to permit adequate testing. The software functionality, whether developed from requirements or from experiments with prototypes, has to be understood as part of the process of delivering care and ought to reflect the desired changes to that process when it is revised and adapted in the light of operational experience.

Articulating requirements is often difficult. A critical path for identifying and validating requirements for software functionality includes assessment of current-state workflow, mapping the current state to the desired future state, and devising a plan to identify and address the gaps between the two. A process like this includes observation and documentation of the real-life workflow as well as interviews with the clinician. Validation that the software meets redesigned workflow needs is accomplished in multiple phases of testing to obtain iterative feedback from users, as discussed later in this chapter.

Many organizations purchasing health IT products define their requirements only once, immediately prior to purchasing the product off the shelf. Compared to organizations that can specify their requirements to their vendors, organizations purchasing products off the shelf face a somewhat different challenge: that of assessing whether an existing product is suitable for their organization.

2 Traditionally, these activities are called “phases” (e.g., requirements phase, design phase); this report adopts the “activities” terminology to emphasize the point that, although these activities are conceptually distinct, some of them may be occurring simultaneously from time to time.

TABLE 4-3

Health IT Vendor Design Activities

| Activities/Phases | Features | Opportunities to Improve Safety |
| --- | --- | --- |
| Requirements Activity | • Developers articulate what the software must do and the circumstances under which such behavior is appropriate<br>• Developers articulate what the software must not do when safety is an issue<br>• End users of the software must be intimately involved in all aspects of the requirements activity | • Clinicians communicate their needs<br>• Clinicians identify data that must be captured or imported, with any requirements for conversion and validation such as full text entries<br>• Prototype testing<br>• Safety analyses |
| Software Development | • Involves actual programming or coding that reflects the software design<br>• Software development is often undertaken iteratively with testing<br>• Results from testing are used to inform another round of software development | • Iterative testing identifies unintended consequences for early revisions and informs the next round of development |
| Design of User Interface Activity | • Designers define the structure of the software<br>• Software engineers decide on the appropriate technical approaches and solve problems conceptually | • Clinicians give feedback to designers about effectiveness or improvements needed for usability testing |
| Testing Activity | • Examines the code that results from software development<br>• Examines code functionality and the extent that the code complies with the requirements<br>• Testing is often split into three separate subactivities: unit testing, where individual software modules are tested; integration testing, where individual modules are assembled into an integrated whole and the whole assembly is tested; and acceptance testing, where the entire assembly is tested to determine compliance with the requirements | • Involving clinician superusers can reveal code functionality<br>• Involving clinicians in the testing process provides an avenue for identification and correction of code defects and workflow risks<br>• Dress rehearsals (real use by real users with actual data under realistic conditions) allow clinicians opportunities to identify previously invisible flaws |

NOTE: The design of user interfaces illustrates the concurrent nature of some of the activities of software development: users have an important stake in the design of user interfaces, and their needs must be expressed in the requirements activity.

Best practices for developing software emphasize systematicity and quality control. Software developers should identify and record significant risks of failure in development or in performance and articulate a plan to reduce them. They also need to have an explicit definition of the quality criteria for the software, to develop and follow version control procedures, and to track reported bugs and index them. Performing a complete safety analysis requires a comprehensive inventory of the possible clinical harms that might befall a patient and an understanding of how the health IT software might contribute to those harms.
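As one illustration of the record keeping described above, the following minimal Python sketch shows how identified risks might be recorded, indexed by software component, and ranked so that risks still lacking a reduction plan surface first. The class names, the 1-to-5 scales, the severity-times-likelihood score, and the sample entries are assumptions made for illustration; they are not drawn from any particular vendor's process.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Risk:
    """One identified risk of failure in development or in performance."""
    risk_id: str
    description: str
    component: str
    severity: int         # hypothetical scale: 1 (minor) to 5 (could contribute to patient harm)
    likelihood: int       # hypothetical scale: 1 (rare) to 5 (frequent)
    mitigation: str = ""  # plan to reduce the risk; empty until one is articulated

    @property
    def score(self) -> int:
        # Severity times likelihood is a common risk-matrix convention, assumed here.
        return self.severity * self.likelihood


class RiskRegister:
    """Records risks and indexes them by software component."""

    def __init__(self) -> None:
        self._risks = {}
        self._by_component = defaultdict(list)

    def record(self, risk: Risk) -> None:
        if risk.risk_id in self._risks:
            raise ValueError(f"duplicate risk id: {risk.risk_id}")
        self._risks[risk.risk_id] = risk
        self._by_component[risk.component].append(risk.risk_id)

    def for_component(self, component: str) -> list:
        return [self._risks[i] for i in self._by_component.get(component, [])]

    def without_plan(self) -> list:
        """Risks that still lack a mitigation plan, highest score first."""
        open_risks = [r for r in self._risks.values() if not r.mitigation]
        return sorted(open_risks, key=lambda r: r.score, reverse=True)


# Illustrative entries only.
register = RiskRegister()
register.record(Risk("R-1", "Lab date column hidden without lateral scroll", "results-view", 4, 3))
register.record(Risk("R-2", "Pasted text can land in the wrong chart", "charting", 5, 2,
                     mitigation="Confirm patient identity before saving pasted text"))
register.record(Risk("R-3", "Slow load when opening individual lab results", "results-view", 2, 5))

for risk in register.without_plan():
    print(risk.risk_id, risk.score, risk.description)
```

A real safety analysis would need a far richer inventory of possible clinical harms than this sketch holds; the point is only that recorded risks become useful when they can be indexed and reviewed systematically.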

Design of User Interface

Although definitive evidence is limited, the committee believes poor user-interface design is a threat to patient safety (Thimbleby, 2008; Thimbleby and Cairns, 2010). The user interface is one of the most important factors influencing the willingness of clinicians to interact with EHRs and to follow the intended use that is assumed to promote safe habits. The more functional the user interface, the more it enhances usability of the product. Inadequate user interfaces can lead to error and failure. The interface is intended to facilitate a desired clinical task; when the clinician cannot readily locate data or perceives the amount of time required to perform a function is too long, the user interface needs to be evaluated. Poor interface design that detracts from clinician efficiency and affinity for the system will likely lead to underuse or misuse of the system (Franzke, 1995).

The goals of user-centered design are to create an efficient, effective, and satisfying interaction with the user. The interface design starts with an understanding of human behaviors and familiar work patterns. Human nature is such that busy clinicians will trade thoroughness for efficiency, or they will modify their behavior to achieve efficiency. Shneiderman has identified eight heuristically and experientially derived “golden rules” for interface design that, if followed, support the principles of EHR usability (Shneiderman et al., 2009), including consistency for similar tasks; universal applicability for a wide range of expertise; feedback for every user action; communicating closure of an action sequence; design to prevent errors; allowing easy reversal of actions; avoiding complex data entry and retrieval sequences; and reducing memory load (see Table 4-1). Although it is important to develop and follow principles for safe design interfaces, it is equally important that designers do not follow a formulaic checklist. Instead, designers need to continually interact with users to discover and address the safety issues unique to each clinical environment. Formal usability testing during development is essential.

In Vivo Testing: Uncovering Use Error Versus User Error

Often, miscommunications between developers and users leave critical ambiguities that can only be discovered through testing. Therefore, it is critical to test health IT during all stages of development to determine whether user requirements have been translated into software that actually does what the user wants. An important source of information about whether clinician needs are being met is operational prototype testing. The first versions of software rarely meet clinician needs fully, and observing how a clinician uses a software prototype will yield a great deal of information about what the clinician actually does and does not find useful and appropriate. Such observations are fed back to software developers, who then revise the software so that its performance better reflects user needs and safe practices as expressed in an operational context.

However, testing cannot be oriented solely to determining if software does what it is supposed to do when users follow all of the proper steps. Because users do make mistakes, a significant portion of testing must be devoted to seeing if the software responds properly when the user does something unexpected. For example, the user may enter data in an unexpected format. Testing is also a longer-term process in which the experiences of users, based on reported events and workarounds, are considered as part of a postmarketing program designed to improve the design of software. To maximize the user-designer feedback loop, EHR products might include a “report here now” button on each screen wherein the user can indicate that a display was confusing, that a workflow was cumbersome, or that the design otherwise did not support optimal clinical care.
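The point about unexpected input can be made concrete with a small example. The sketch below, in Python, pairs a hypothetical dose-entry parser with checks for both the expected path and a set of malformed entries. The function names and the input rule (a positive number, optionally followed by "mg") are assumptions chosen for illustration, not a clinical standard or any product's actual behavior.

```python
import re

# Hypothetical input rule for illustration: a positive number, optionally followed by "mg".
_DOSE_PATTERN = re.compile(r"^\s*(\d+(?:\.\d+)?)\s*(?:mg)?\s*$", re.IGNORECASE)


def parse_dose_mg(text):
    """Return the dose in milligrams, or raise ValueError with a clear message."""
    if not isinstance(text, str):
        raise ValueError("dose must be entered as text")
    match = _DOSE_PATTERN.match(text)
    if match is None:
        # Refuse rather than guess: "5 g" or "5,0" must never be silently read as 5 mg.
        raise ValueError(f"unrecognized dose format: {text!r}")
    value = float(match.group(1))
    if value <= 0:
        raise ValueError("dose must be greater than zero")
    return value


def rejects(text):
    """True when the parser refuses the input instead of guessing."""
    try:
        parse_dose_mg(text)
    except ValueError:
        return True
    return False


# Expected-path checks: the user follows the proper steps.
assert parse_dose_mg("5 mg") == 5.0
assert parse_dose_mg("0.25") == 0.25

# Unexpected-input checks: the user does something the designer did not anticipate.
for bad in ["", "five", "5 g", "5,0", "-5", "0", "5 mg mg", None]:
    assert rejects(bad), bad
```

The second group of checks is the portion of testing the paragraph above calls for: it verifies that the software responds with an explicit error, rather than a plausible-looking value, when the entry does not match what was anticipated.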

Software Implementation and Postdeployment Activities

Several portions of the software life cycle that vendors lead in partnership with users influence the safer use of health IT, such as training prior to implementation, addressing problems that appear during testing and implementation of software, and planning for the ongoing maintenance and upgrade activities that directly impact users. These activities occur not only as part of software development but also during implementation and subsequent phases of use in an organization (see Table 4-4).

Software Implementation Activity

After the technology package is deemed ready for delivery to the user, it is transmitted to the user’s organization for initial use. Prior to using health IT products in the care of patients, extensive training must be done for the specific product and the specific organizational setting. It is customary for organizations to set expectations for training that require documentation of learning modules and demonstrated competency. Resources to support initial and ongoing training are essential components of planned implementation activities. The period of initial use in an operational environment is fraught with patient safety risks, because it is during this period that many problems are most likely to appear. Some of these problems will result from users who have not received adequate training—this will be true even if the technology is designed to require minimal training. Other problems will result from operating the technology with the health of real patients at stake rather than in an artificial environment that is well controlled and does not reflect an actual health care setting.

TABLE 4-4

Health IT Vendor Implementation and Postdeployment Activities

| Activities/Phases | Features | Opportunities to Improve Safety |
| --- | --- | --- |
| Software Implementation Activity | • Installing the software in the users’ organization<br>• Users are trained to use the software | • Initial planning involves end users prior to deployment<br>• Training is provider- or user-specific to achieve desired learning |
| Maintenance Activity | • Fixing problems that appear after deployment, including errors that may have occurred during software implementation (i.e., programming errors), requirements (i.e., incorrect elicitation of performance requirements from users), or design (e.g., a dysfunctional architecture) | • Mechanism for rapid identification of needed maintenance will avoid perpetuating flaws<br>• Performing episodic maintenance maintains trust by users<br>• Planned maintenance is best practice |
| Upgrade Activity | • Modifying the software to meet new requirements that may emerge over time, such as enabling the software to work with new hardware | • Planning for upgrades with adequate backup systems is a requirement<br>• Planned safety testing of operational system on routine ongoing basis |

NOTE: Traditionally, these activities are called “phases” (e.g., requirements phase, design phase); this report adopts the “activities” terminology to emphasize the point that, although these activities are conceptually distinct, some of them may be occurring simultaneously from time to time.

An organization typically selects one of two approaches to implementing the technology: either a big bang strategy (i.e., the technology is implemented for use throughout the entire organization at the same time or nearly so) or an incremental approach (i.e., the technology is first deployed for use on a small scale within the organization and then, as operating experience is acquired, it is deployed to other parts of the organization in a gradual, staged manner).

Experience with the implementation of large-scale technology applications suggests that, over time, success is possible with either approach. Nevertheless, a health care organization should plan on the simultaneous operation of both the new and old technologies (even if the old technology is paper based) for some transitional period, so that failures in the new technology do not cripple the organization. Backup and contingency plans are necessary to help anticipate and protect against a wide range of failures and problems with the newly implemented technology and are an essential part of implementation planning.

Maintenance and Upgrade Activities

Maintenance and upgrade refer to activities carried out after software is initially deployed to keep it operational, to support ongoing use, or to add new functionality to the software. Because upgrading a software package involves many of the same considerations as maintenance, the discussion that follows will speak simply of “maintenance” with the understanding that the term also includes upgrades. During maintenance, health IT products are continuously subject to a variety of activities and interventions by vendors intended to correct defects, introduce new features, optimize performance, or adapt to changing user environment or technologies (Canfora et al., 2010).

Maintenance activities paradoxically can make products increasingly defect-laden and more difficult to understand and maintain in the future (Parnas, 1994). Maintenance activities inadvertently tend to degrade software system structure, increase source code complexity, and produce a net negative effect on system design for a variety of reasons, such as production pressures, limited resource allocation, and the lack of a disciplined process. Maintenance also increases the complexity of health IT, increasing the likelihood for error.

End users and purchasers of health IT are not always aware of the specific risks associated with the maintenance phase. Installation of a patch (code upgrade) can disrupt existing functionality by introducing previously absent dependencies, and functionality can be lost by installing a patch. Maintenance work often requires taking software offline for a number of hours, and requires advance planning and notification of users to minimize disruptions in care. Maintenance also requires that actively engaged users be involved in the testing process prior to the implementation of a patch or upgrade into the production environment.
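One way to check for lost functionality before a patch reaches the production environment is to run the same set of cases against the current and the patched versions and flag every difference for review. The Python sketch below illustrates the idea; the function names, the weight-conversion example, and the hypothetical patch are all assumptions made for illustration.

```python
def find_regressions(cases, current, patched):
    """Compare patched behavior with current behavior on the same inputs.

    Returns a list of (case name, current result, patched result) for every
    case where the patch changed the output or raised an error.
    """
    regressions = []
    for name, args in cases.items():
        expected = current(*args)
        try:
            actual = patched(*args)
        except Exception as exc:
            regressions.append((name, expected, f"raised {type(exc).__name__}"))
            continue
        if actual != expected:
            regressions.append((name, expected, actual))
    return regressions


def current_kg(pounds):
    """Current behavior: convert pounds to kilograms, one decimal place."""
    return round(pounds * 0.45359237, 1)


def patched_kg(pounds):
    """Hypothetical patch that rounds to whole kilograms, silently changing output."""
    return round(pounds * 0.45359237)


# Cases would ideally be chosen with actively engaged clinical users.
cases = {"adult": (150,), "infant": (7.5,)}

for name, before, after in find_regressions(cases, current_kg, patched_kg):
    print(f"{name}: was {before}, now {after}")
```

In this illustration the adult case happens to produce the same value under both versions, so only the infant case exposes the change. That is one reason the choice of test cases benefits from clinician involvement: a case set drawn from a single patient population could miss a loss of precision that matters for another.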

OPPORTUNITIES TO IMPROVE SAFETY IN THE USE OF HEALTH IT

Safer use is intimately linked to safer design. For example, safer use of an EHR evolves from effective planning and deployment with testing and management of human-computer interface issues, to optimization of tools and processes to improve the application of the system. Users receive education and skill development to learn the utility of the system and accept responsibility to report conditions that could detract from or enhance EHR

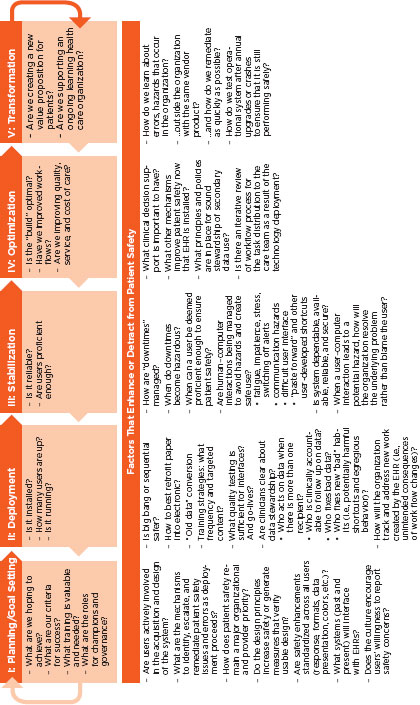

functionality. Observation, measurement, and synthesis of lessons learned about the impact of EHRs on desired work patterns and outputs will help address deficiencies in the design, usability, and clinical behavior related to EHR products. Ensuring safer use relies upon evaluation of workload impact—ergonomic, cognitive, and data comprehension—and its effect on each clinician and the care team. There is a need for metrics to describe accurately user interactions with EHRs and other health IT devices that enable care (Armijo et al., 2009). For users, the opportunities to improve safety of health IT can be divided into the following phases: acquisition, clinical implementation (which includes planning and goal setting, deployment, stabilization, optimization, and transformation), and maintenance activities (see Table 4-5). These stages create a continuous cycle that can be applied throughout the life cycle of health IT. The safety of health IT is contingent on each of these stages. An ongoing commitment to implementation is needed to realize safer, more effective care. It is an iterative process that requires ongoing learning and improvement based on the experience of users.

Acquisition

The first activity for users is the decision to move from an existing environment or status quo to one that better serves the business needs and/or functionalities of the organization or practice. Delineation of business needs and drivers is the foundation of the IT strategy that propels the decision to acquire a system and initiates the acquisition process. Acquiring health IT requires a decision and commitment to change workflows within the organization. Designing new and acceptable workflows, training staff, and dealing with consequential changes will be major drivers of cost and potential risk. Implementing a new health IT product is not an IT project; it is a quality improvement and business change project facilitated by IT.

A needs assessment can help evaluate the current status and the future needs in relation to the health IT solutions targeted to close gaps in clinical, operational, and financial goals. After a need is identified, timelines for acquiring and implementing ought to be developed and an imperative for change be created. Change management is complex and requires organizations to be aware of the potential downstream misalignments between the perceived functionality of health IT and the needs of the organization.

Acquisition requires a deliberative process to select and attain a system that will connect data from clinical and other related IT and support an organization’s clinical and administrative workflows. The organization wants to ensure an effective, safe implementation and not favor speed over quality; the number of systems being integrated may also affect the depth and volume of implementation support required. Similarly, the cost of a health IT product is often a major driver in the decision-making process, but cost has to be balanced with quality of the product. Maintenance costs also need to be recognized. Unconstrained growth of requirements can result in changing project goals that can lead to frustration and schedule overruns.

TABLE 4-5

Health IT User Activities

| Activities/Phases | Features | Opportunities to Improve Safety |
| --- | --- | --- |
| Acquisition | • Selecting and attaining a system that will connect data from clinical and other related IT and support an organization’s clinical and administrative workflows | • Perform self-assessments and strategic planning before the decision to purchase health IT<br>• Ensure that the resources needed to support adoption and implementation of health IT products are available |
| Clinical Implementation | • Planning and goal setting: assessing needs; selecting systems based on functionality; testing quality before go-live<br>• Deployment: training and demonstrating competence of users; completing data conversions prior to go-live; minimizing mix of electronic and paper functions; planning orderly implementation<br>• Stabilization: evaluating human-computer interactions for effective design and interface; correcting functions that disrupt workflow; minimizing downtime; planning maintenance<br>• Optimization: engaging clinical decision support; retraining for proper and best use; readdressing changes needed for workflow improvements<br>• Transformation: measuring improved clinical and efficiency outcomes | • Analyze existing workflow, envision the optimal workflow, and select the automated system that achieves the optimal automated workflow<br>• Establish mechanisms and metrics to identify, escalate, and remediate patient safety issues<br>• Test locally to verify safety, interoperability, security, and effectiveness<br>• Monitor and measure the dependability, reliability, and security of the installed system<br>• Take steps to resolve any potential hazards<br>• Learn and improve patient safety by utilizing data generated by the health IT system |
| Maintenance Activities | • Activities are carried out to keep a system operational and to support ongoing use | • Schedule any needed downtime and upgrades in advance to minimize disruptions in workflow<br>• Establish workflow procedures for scheduled and/or unexpected downtime |

Organizations should perform self-assessments and strategic planning before the decision to purchase health IT. Organizations need to consider their innovation temperance, or their tolerance for risk, as well as the stability of the current process. Organizational infrastructure also needs to be considered to ensure that the resources needed to support adoption and implementation of health IT products are available. Not only are the personnel resources and monetary resources important but also the technical resources needed to adopt the proposed technology, such as the ability to test the product and ensure functionality including interoperability with secure exchange of data. The characteristics of having a culture of safety, being a learning organization, having strong staff morale, and having adequate resources are critical to the successful adoption of health IT. These are especially important for smaller organizations with fewer resources to consider in the adoption and decision-making processes. Much of the responsibility for these characteristics occurs at the senior leadership level, but driving change requires both administrative and clinical staff taking on delineated roles.

These antecedents to acquisition will help an organization prepare for both acquisition and implementation of health IT and optimize outcomes in subsequent phases of the implementation life cycle. Unfortunately, sometimes the due process of strategic planning is in place, but the evaluative decision-making model is inadequate. For example, everyone’s opinions and feedback are acquired by the organization leaders, but, in the final decision, critical information from the clinicians may be weighted the least (Keselman et al., 2004).

Some of the generalizable traits organizations must demonstrate for achieving safer patient care have applicability to the use of health IT. Experience in sociotechnical industries such as aviation, nuclear power, chemical, road transportation, and health care points to five systemic needs that health care providers must satisfy to maximize safe performance, which are the needs to:

• Limit the discretion of workers,

• Reduce worker autonomy,

• Transition from a craftsmanship mindset to that of equivalent actors,

• Develop system-level (senior leadership) arbitration to optimize safety strategies, and

• Strive for simplification (Amalberti et al., 2005).

At times, these restraining forces are at odds with traditional values promoting autonomy, creativity, academic expression, safety, and exercising professional judgment. They do, however, demand careful consideration to ensure the very individuals entrusted to provide safe care are not contributing barriers to safe care delivery.

An organization’s readiness to adopt health IT can impact the safety, suitability, and performance of the technology. Managing end-user expectations from the beginning will also aid implementation. Clinicians expect technology to be perfect and deliver efficient and effective information just in time, every time, with little effort. Although most systems are able to perform at these levels, there will be times, such as initial implementation or unanticipated downtimes, that will challenge user patience. These factors need to be considered before implementation both to reduce burdens to the organization and to protect the organization from harming patients in the later phases.

Clinical Implementation

Successful deployment of a health IT product and its effective use are intended to achieve seamless internal information flow as well as to enhance performance in safety, quality, service, and cost. Safe implementation of health IT is a complex, dynamic process that requires continual feedback to vendors and investment by health care organizations. Merely installing health information technologies in health care organizations will not result in improvements in care.

Because each health care organization is different, each organization has different needs and makes choices resulting in varied, customized implementation. Poor implementation can result in the development of processes different from the intended use of a system, otherwise known as use error.

As shown in Figure 4-2, implementation includes the stages of planning and goal setting, deployment, stabilization, optimization, and transformation.

Planning and Goal Setting

In planning and goal setting, organizations target improvements in quality, safety, and efficiency when automating processes. For example, automating orders or medication administration targets improvements of systems composed of nonlinear, complex workflows. The first step is similar to the acquisition phase: determining the organization’s needs and identifying the resources required to meet them. Technological and organizational change need to be aligned and managed together (Majchrzak and Meshkati, 2001). The organization needs to analyze existing workflow, envision the optimal workflow, and select the automated system that achieves the optimal automated workflow. This may involve customization of the purchased system. If workflow analysis and redesign are not completed before implementation, automation may create unanticipated safety risks. For example, the introduction of a new lab system may require new work; if the right person to take on that work has not been identified, it may fall to the physician or others at the cost of other clinical activities.

Users need to be actively involved in the planning and goal-setting stage. Mechanisms to identify, escalate, and remediate patient safety issues need to be in place as the organization proceeds to the deployment stage. Metrics to be considered at this stage include ensuring that organization leaders have identified objectives, teams, and resources committed to the implementation.

Deployment

When organizations deploy a selected health IT product, they make assumptions that vendors have made safety a primary goal in specification and design, that their products support high-reliability processes, and that health IT standards (e.g., content, vocabulary, transport) have evolved to address safety, safe use, and value (McDonnell et al., 2010). At the same time, strategies are needed to address potential patient-safety events that can arise from decisions such as whether the organization should take a big bang or sequential approach and how to manage partial paper and electronic systems that can create opportunities for missing data and communication lapses.

FIGURE 4-2 Implementation life cycle.

SOURCE: Adapted from Kaiser Permanente experience.

Collaboration between user organizations and vendors to improve patient safety is critical and requires a specific and immediate information loop between the parties, allowing detailed information to be exchanged to quickly address safety issues or potential safety issues. Attention to collateral impact in a multivendor environment is also necessary to identify any other corrective actions. For example, in the event a safety incident occurs, rapid notification of health IT stakeholders should be accompanied by rapid correction of system-safety flaws, including vendor notification and collaboration to change software, process, or policy as indicated. Most mechanisms impose a large burden on users to specify what was happening when a problem occurred (e.g., a system error, an instance of inconvenient use, an instance of avoidable provider error). The organization should consider developing mechanisms for providing feedback from users to vendors in an easy-to-use way, such as a “report problem here” button on every screen.
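One way to lighten the reporting burden described above is to have the system capture context automatically, so that the user supplies only a category and an optional comment. The following is a minimal Python sketch under stated assumptions, not a description of any vendor's product: the `ProblemReport` fields, the category names (borrowed from the examples in the text), and the `session_context` keys are all hypothetical.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone

# Hypothetical categories, mirroring the examples given in the text.
CATEGORIES = ("system_error", "inconvenient_use", "avoidable_provider_error")


def _utc_now() -> str:
    """Return the current UTC time as an ISO 8601 string."""
    return datetime.now(timezone.utc).isoformat()


@dataclass
class ProblemReport:
    """A single user-submitted problem report (illustrative structure only)."""
    category: str
    user_comment: str = ""
    screen_id: str = "unknown"          # filled in from the session, not by the user
    software_version: str = "unknown"   # filled in from the session, not by the user
    reported_at: str = field(default_factory=_utc_now)

    def __post_init__(self) -> None:
        if self.category not in CATEGORIES:
            raise ValueError(f"unknown category: {self.category!r}")


def build_report(session_context: dict, category: str,
                 user_comment: str = "") -> ProblemReport:
    """Create a report, copying context from the (assumed) session dictionary."""
    return ProblemReport(
        category=category,
        user_comment=user_comment,
        screen_id=session_context.get("screen_id", "unknown"),
        software_version=session_context.get("software_version", "unknown"),
    )


def to_vendor_payload(report: ProblemReport) -> str:
    """Serialize a report as JSON for exchange with a vendor."""
    return json.dumps(asdict(report), sort_keys=True)
```

In this sketch the user makes one choice and may type a comment; the screen identifier, software version, and timestamp are attached without further effort, which is the kind of detail the vendor needs to reproduce a problem.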

Local testing is needed to verify safety, interoperability, security, and effectiveness, particularly at interfaces with other software systems during the go-live event. Policies to define data stewardship need to address accountability for following up on data when there is more than one recipient, development of processes for correcting incorrect data, and ways to identify and avoid potentially harmful shortcuts or behaviors that result in unintended use of the system. Ongoing monitoring to assure secure exchange of data as well as adherence to predetermined security performance expectations is essential; users must have confidence that data are secure at all times. General metrics for evaluating deployment include failure rates, quality assurance rates for each interface, percentage of users trained, and checklists of essential functions.
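The general deployment metrics named above can be tallied in a few lines. The sketch below is illustrative only: the function names, the input shapes, and the example interface and checklist names are assumptions, and it deliberately reports raw figures rather than applying any go-live threshold, since the text does not specify one.

```python
def percent(part: int, whole: int) -> float:
    """Return part/whole as a percentage, validating the inputs."""
    if whole <= 0:
        raise ValueError("whole must be positive")
    if not 0 <= part <= whole:
        raise ValueError("part must be between 0 and whole")
    return 100.0 * part / whole


def deployment_summary(users_trained: int, users_total: int,
                       interface_tests: dict, checklist: dict) -> dict:
    """Summarize deployment metrics.

    interface_tests maps an interface name to (tests_passed, tests_run).
    checklist maps an essential function to True once it has been verified.
    """
    return {
        "percent_users_trained": percent(users_trained, users_total),
        "interface_pass_rates": {
            name: percent(passed, run)
            for name, (passed, run) in interface_tests.items()
        },
        # Essential functions that still need verification.
        "unchecked_functions": sorted(
            name for name, verified in checklist.items() if not verified
        ),
    }


summary = deployment_summary(
    users_trained=45,
    users_total=60,
    interface_tests={"lab": (18, 20), "pharmacy": (10, 10)},
    checklist={"order entry": True, "downtime forms": False},
)
```

Here `summary["percent_users_trained"]` is 75.0, the lab interface pass rate is 90.0, and "downtime forms" is listed as the one essential function not yet verified.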

Clinicians using multiple EHRs also experience challenges of retaining information about how to use different EHR systems. This affects professionals in training as well as those on staff who care for patients in multiple settings or organizations.

Stabilization

Following deployment, health IT enters a stage of stabilization. During stabilization, potentially hazardous human-computer interactions such as alert fatigue, communication hazards, and workarounds such as “paste forward” must be managed. During this stage, the organization ought to be monitoring the dependability, reliability, and security of the installed system and taking steps to resolve any potential hazards. More specific measures of these system characteristics will guide actions for clinician retraining, software modification, and the need for additional guidance and policies. As with the maintenance activity in the design and development of technology, stabilization also provides time to evaluate how downtime is managed. Organizations can measure the stability of a system by assessing user proficiency (e.g., reduction in helpdesk calls, higher percentage of e-prescriptions), the percentage of time the system is available, and trends in identified errors for a given timeframe.
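Two of the stability measures above, system availability and the trend in identified errors, reduce to simple arithmetic. The following Python sketch shows one possible calculation; the function names are hypothetical, and the use of a least-squares slope to summarize a weekly trend is an assumption made for illustration, not a method prescribed by the text.

```python
def availability_percent(scheduled_minutes: float, downtime_minutes: float) -> float:
    """Percentage of scheduled time during which the system was available."""
    if scheduled_minutes <= 0:
        raise ValueError("scheduled_minutes must be positive")
    if not 0 <= downtime_minutes <= scheduled_minutes:
        raise ValueError("downtime_minutes must be between 0 and scheduled_minutes")
    return 100.0 * (scheduled_minutes - downtime_minutes) / scheduled_minutes


def weekly_trend(counts: list) -> float:
    """Least-squares slope of weekly counts (change per week).

    A negative value means the count (for example, errors identified in the
    field, or helpdesk calls) is falling from week to week.
    """
    n = len(counts)
    if n < 2:
        raise ValueError("need at least two weeks of data")
    mean_x = (n - 1) / 2
    mean_y = sum(counts) / n
    numerator = sum((i - mean_x) * (c - mean_y) for i, c in enumerate(counts))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


# One week is 10,080 minutes; 504 minutes of downtime leaves 95% availability.
week_availability = availability_percent(10080, 504)

# Error counts of 40, 30, 20, 10 over four weeks fall by 10 per week.
error_slope = weekly_trend([40, 30, 20, 10])
```

The same `weekly_trend` function can be applied to helpdesk call volumes as a rough proxy for user proficiency.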

Optimization

In the optimization stage, the organization analyzes how well it is using functions such as decision support, as well as the safety effects of computerized provider order entry (CPOE) and other functions. Revisiting redesigned workflows to measure achievement of intended changes can reveal improvements or degradation in quality and service. Assessing task distribution to the care team can help evaluate the impact of the health IT. Further evaluation is important to assess changes in quality measures over time as well as whether the health IT is being used in a meaningful manner. One way to measure optimization is to track quality over time and the level of an organization’s reliance on paper. Self-assessment tools are an important adjunct approach to assessing aspects of EHR use such as clinical decision support performance (Metzger et al., 2010). A sample set of concepts for metrics to track a successful implementation across the life cycle of an EHR appears in Table 4-6.

Transformation: The Learning Health System

Transformation is the future state of an organization that has extracted learning from the system itself and from application of knowledge. It can be evaluated by identifying changes in practice derived from aggregate data analysis and application of new knowledge that result in improved outcomes. Proactive monitoring for new failure modes created by the implementation and use of health IT will be necessary. Proactive monitoring can also help define how such failures occur and what forces contribute to them. In the small practice and hospital setting this is particularly challenging because there is limited or no experience with these methods or approaches.

A learning health care organization creates a new value proposition by improving quality and value of care. Ultimately the transformation achieved through optimal use of health IT will improve outcomes over time and achieve a safer system. Continuous evaluation and improvement occur over the dynamic and iterative life cycle of health IT products (Walker et al., 2008).

Maintenance Activities

Maintenance begins after implementation when activities are carried out to keep a system operational and to support ongoing use. It is a period when there is shared responsibility between the vendor and the organization. The cost and effort needed to maintain an IT system are influenced by the underlying complexity of the system and design choices made by both vendors and users. A relationship exists between the complexity of the system and the error-proneness of a system immediately after installation. Complexity also increases the overall lifetime effort and cost associated with maintenance activities.

TABLE 4-6 Measure Concepts for Successful Implementation

| Stage | Measure concepts |
|---|---|
| I Planning and Goal Setting | IT, medical, and operations leaders identified and in agreement on objectives; teams identified; money and resources made available |
| II Deployment | System up; percentage of users trained; percentage of users logged in; number and nature of errors identified in the field per week; quality assurance stats for each interface; quality assurance user acceptance testing stats for system; “shakedown cruise” stats: review of functionality in initial period post go-live |
| III Stabilization | Percentage of time system is available; user proficiency measures; trend of errors identified in the field per week; alert overrides; event reports |
| IV Optimization | Ongoing health IT patient safety with analysis of reporting and remediation of safety issue; tracking of quality measures over time; quality improvement (QI) projects and results; system passes ongoing safety tests after all upgrades, crashes, or new application implementations |
| V Transformation | Show improved outcomes over time; health care is safer based on identified measures; care processes redesigned; continuous improvement of new steady state |

Contingency planning for downtime procedures and data loss is necessary to address both short- and long-term occurrences. Scheduled downtime for maintenance and upgrades typically occurs at the organization’s discretion to minimize work disruption. Downtime procedures for planned outages as well as emergency procedures are necessary to protect security and are to include measures to prevent data loss; these measures will differ based on the type of EHR architecture. Planning for obsolescence and eventual system replacement also requires a contingency plan that includes safeguarding of data (American Academy of Family Physicians, 2011). Any disruption, no matter how small, can present safety risks resulting from unfamiliarity with manual backup systems, delays in care, and data loss. Power interruptions and other unexpected events can result in unavoidable downtime. Procedures should address immediate communication and deployment of contingency plans as well as reentry to normal functioning and subsequent recovery actions. Advance planning, education, training, and practice for downtime can aid in successful performance during planned or unplanned outages.

MINIMIZING RISKS OF HEALTH IT TO PROMOTE SAFER CARE

Although not everything is known about the risks of health IT, there is some evidence to suggest there will be failures, design flaws, and user behaviors that thwart safe performance and application of these systems. To better understand these failures, more research, training, and education will be needed. Specifically, measures of safe practices need to be developed to assess health IT safety. Vendors, health care providers, and organizations could benefit from following a proven set of general safe practices representing the best evidence about design, implementation strategies, usability features, and human-computer interactions for optimizing safe use. Vendors take primary responsibility for the design and development of technologies with iterative feedback from users. Users assume responsibility for safe implementation and work with vendors through the health IT life cycle. The mutual exchange of ideas and feedback regarding any actual or potential failures or unintended consequences can also inform safer design and use of health IT products.

Because of the variations in health IT products and their implementation, a set of development requirements that stipulates consistent criteria known to produce safer design and user interactions would be beneficial. Consistent testing procedures could then be applied to ensure the safety of health IT products. Inclusion of appropriate requirements and iterative testing can contribute to effective practices for safe design and implementation.

With growing experience of health IT and EHR deployment, gleaning best practices for implementation from case reports and reviews is possible. Testing for safe design, functioning, and usability can reduce deployment errors and enhance safety in user adoption. Continual testing and retesting will be needed after any change, such as when upgrades are installed. As high-prevalence and high-impact EHR-related patient safety risks are identified, these should be incorporated into pre- and postdeployment testing. Feedback from testing, learning from event reports, and detection of workarounds are also important parts of the iterative process of continually improving health IT.

The partnership to design, develop, implement, and optimize systems extends beyond a single vendor and single organization. Many public- and private-sector groups have a stake in the safety of health IT to ensure the very systems intended to help improve quality of care are performing without creating risk of harm. Indeed, such a public-private partnership already exists in this area through the National Quality Forum’s safe practices, one of which is focused on CPOE and includes in its standard the routine use of a postdeployment test of the safety of operational CPOE systems in hospitals (Classen et al., 2010). Ensuring this outcome will entail additional requirements for public and private agencies, vendors, and users across the health IT life cycle.