Learning About Evolution: The Evidence Base

Biological evolution is a difficult concept to learn, as several people at the convocation emphasized. It involves complex biological mechanisms and time periods far beyond human experience. Even when students have finished a high school or college biology course, there is much more to learn about the subject.

The difficulty of teaching evolution both complicates and invigorates research on evolution education. To present what is known and not known about the teaching and learning of evolution—which is a standard feature of convening events organized by the Academies—Ross Nehm, associate professor of science education at Ohio State University, gave an overview of the research literature on evolution education and then talked in more detail about his own research.

The literature on teaching and learning about evolution is extensive. In 2006 Nehm reviewed 200 of more than 750 papers published thus far about evolution education, identifying both strengths and limitations of the approaches taken in those studies (Nehm, 2006). This literature demonstrates that the general public, high school students, undergraduates, biology majors, science teachers, and medical students all have low levels of knowledge and many misconceptions about evolution (Nehm and Schonfeld, 2007). Furthermore, as with other areas of science, many of the same misconceptions persist in all of these populations. “They don’t go away,” said Nehm. “Whatever instruction is happening at early levels, it’s not ameliorating the problems that we have.”

In education, the only way to make robust causal claims is through a randomized controlled trial (RCT), but no such trials have been conducted for evolution education. “If you want to make causal claims, there is no causal literature to refer to,” Nehm said.

Fortunately, other research tools can be used with educational interventions to draw conclusions that can guide policy. A group receiving an intervention can be compared with a group not receiving the intervention. Interventions can be done without a comparison group—for example, by looking at a group before and after an intervention. Survey research can yield associations, although survey research cannot determine whether these associations are causal. Finally, case studies, interviews, and other forms of qualitative research can reveal new variables and possible associations.
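As an illustration of what separates a randomized controlled trial from the comparison-group designs described above, the following minimal Python sketch shows the one step they do not share: assigning participants to conditions by chance. The function name, the participant labels, and the fixed seed are assumptions made for this example and are not drawn from any of the studies discussed here.

```python
import random


def randomly_assign(participants, seed=None):
    """Split participants into two groups of (nearly) equal size by chance.

    Hypothetical helper for illustration only; real trials often use more
    elaborate schemes, such as assigning whole classrooms at once.
    """
    rng = random.Random(seed)        # local generator so the split is reproducible
    shuffled = list(participants)    # copy, so the caller's list is not modified
    rng.shuffle(shuffled)
    midpoint = len(shuffled) // 2
    return {
        "intervention": shuffled[:midpoint],
        "comparison": shuffled[midpoint:],
    }


# Made-up participant labels, not real data.
students = [f"student_{i:02d}" for i in range(1, 21)]
groups = randomly_assign(students, seed=42)
print(len(groups["intervention"]), len(groups["comparison"]))
```

Because chance alone decides who receives the intervention, later differences between the two groups are less likely to reflect preexisting differences among the students, which is what allows a causal interpretation.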

Nehm’s 2006 review of the literature found no intervention studies with randomized control groups, 6 intervention studies with comparison groups, and 24 other studies that employed various intervention techniques. Also, some of the interventions were quite brief—just one to three weeks—a period during which substantial changes are unlikely to occur, given the difficulties of teaching evolution. One conclusion is obvious, Nehm said: “We need to do some randomized controlled trials to see what works causally in terms of evolution education.”

Nehm also pointed out that documenting learning outcomes is critically important in education research. According to the report Knowing What Students Know: The Science and Design of Educational Assessment (National Research Council, 2001), “assessments need to examine how well students engage in communicative practices appropriate to a domain of knowledge and skill, what they understand about those practices, and how well they use the tools appropriate to that domain.” Yet most tests today, including those that dominate biology curricula, assess isolated knowledge fragments using multiple choice tests. Students may be learning about evolution, “but if we can’t measure that progress, we can’t show that what we’re doing has any positive effect. So we need assessments that can measure the way people actually think.”

The problems caused by inadequate metrics are particularly obvious in the literature on teacher knowledge of evolution, Nehm said. Only five intervention studies exist, and three of them assess teachers’ knowledge of evolution using a multiple choice or Likert scale test (Baldwin et al., 2012). This lack of careful metrics “is really concerning,” said Nehm. Evolution assessments must be developed that meet quality control standards established by the educational measurement community, or robust claims, causal or otherwise, cannot be made.

In summary, research has established key variables that should be investigated and many possible beneficial interventions. But the research literature on evolution education lacks robust, causal, generalizable claims relating to particular pedagogical strategies and interventions. It also lacks measurement instruments that meet basic quality control standards and capture authentic disciplinary practices. Finally, the research lacks consistent application of measurement instruments across different populations. “This is a call to action,” said Nehm. “We need to gather and do a national randomized controlled trial of some of the most likely and agreed upon variables and test their causal impact on students’ learning of evolution.”

In his own research, Nehm and his colleagues have been studying how different groups, from novice to expert, think about problems.1 Using performance-based measures in which research participants are asked to solve evolutionary problems, they have looked at 400 people—including non-majors who have completed an introductory biology course, students who have completed a course in evolution, students who have completed an evolution course as well as more advanced coursework, and a group of biology Ph.D. students, assistant professors, associate professors, and full professors (Nehm and Ha, in preparation).

The study measured people’s ability to explain evolutionary change across a variety of contexts, not through multiple choice questions. In general, this technique revealed many more gaps in evolutionary understanding than would simpler assessments. For example, students have a harder time explaining evolutionary change (in writing or orally) than recognizing accurate scientific elements of an explanation when presented in a multiple choice test (Nehm and Schonfeld, 2008). Or, as Nehm put it, knowing the parts and tools needed to assemble furniture does not mean that you can build it. Students may have a lot of knowledge about evolution but not be able to use that knowledge to create a functional explanation. “This is a tough competency,” explained Nehm. “If you asked any of your students, and I encourage you to do this, ‘Can you explain how evolutionary change occurs?’ you will be startled at their inability to articulate their understanding because they are never asked to do that.”

In addition, people have a tendency to mix naïve and scientific information together in their explanations. Naïve ideas include, for example, the notions that the needs of an organism drive evolutionary change or

_________

1 A summary of the general research on differences between novices and experts can be found in National Research Council (2000).

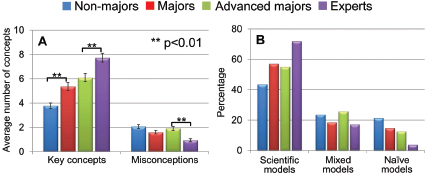

FIGURE 3-1 Misconceptions decrease with educational level but never entirely disappear (left), while mixed models of evolutionary change remain as common in advanced biology majors as in non-majors (right). The vertical scale on the left measures the numbers of key concepts and misconceptions used by respondents, while the vertical scale on the right measures the percentage of respondents using different kinds of explanatory models. SOURCE: Nehm and Ha, in preparation.

that putting pressure on animals will cause them to evolve. The mixing of naïve and scientific ideas is difficult to measure with multiple choice tests, but open response explanations can reveal the relative contributions of each category of information.

Of 428 people (107 from each group), the experts (the combined group of Ph.D. students, assistant professors, associate professors, and full professors) knew more key concepts and had fewer misconceptions (Figure 3-1). Some Ph.D. students, and occasionally a professor, still held naïve ideas about evolution, although this was uncommon. People learn more about evolution as they take more courses, but a surprising number do not get rid of their misconceptions.

Moreover, as shown in Figure 3-1, up to 25 percent of the advanced majors who have taken an evolution course and other advanced courses still construct mixed models of evolutionary explanations that combine naïve and scientific ideas. The use of exclusively scientific models increases with educational level, but this use never gets above 60 percent of students in Nehm’s research. Furthermore, many students have only naïve ideas, although this percentage declines with educational level.

Research shows that novices tend to get tripped up by surface features of problems, such as the context, format, or details of a problem, rather than grasping a problem’s underlying structure. They think that similar problems framed in different ways are actually different problems, whereas experts see the similarities that are not apparent on the surface of those problems. Nehm and Ridgway (2011) applied this analysis to evolutionary biologists and to non-majors who had taken an introductory biology course and found the same thing. For example, elements of natural selection were linked consistently by experts but haphazardly by novices.

An especially intriguing finding was that students tend to draw on different misconceptions in trying to solve different types of problems. In problems involving plants, animals, or bacteria, for example, their misconceptions tend to differ based on the type of organism they are being asked to consider. Thus, in teaching evolution, the types of misconceptions that teachers think they are tackling are correlated with the kind of problem they give students. For example, even though teachers may think they are describing a problem involving natural selection, a particular surface feature of the problem may keep students from recognizing the connection. Likewise, in assessments, the type of misconception being assessed is correlated with the type of problem students are trying to solve. “That’s a big implication,” said Nehm. Students see natural selection in a cheetah and in a bacterium as completely different processes. This is one way in which they glue new information onto preexisting naïve ideas.

The importance of surface features has received almost no attention in evolution education, Nehm observed. Introductory biology textbooks use a variety of contexts but never alert students that bacterial resistance to antibiotics is no different from the many other examples of natural selection being described. “We never help students see those parallels.” Even after completing an evolution course, only 50 percent of students have “expert-like” perceptions of evolutionary problems. As students progress through biology, their courses do little to help them reason across cases.

Novices’ Thinking About Evolution

What are the problems that novices have in thinking about evolution? People have many different kinds of knowledge, including conceptual resources, analytical resources, and factual resources. According to traditional models of problem solving, people draw information from these different kinds of resources and put it in working memory to tackle problems.

Nehm has been testing this concept for evolution. In one experiment, more than 200 participants solved problems in which just one feature was manipulated at a time (Nehm and Ha, 2011). The experiment looked at which surface features are problematic for learners, including scale (intraspecific or interspecific), polarity (trait gain versus trait loss), taxon (plant or animal), and familiarity. The experiment measured the accuracy of participants’ scientific thinking.
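The idea of manipulating one surface feature at a time can be sketched in a few lines of Python. This is only an illustration of the design principle, not the instrument used by Nehm and Ha (2011). The feature levels come from the description above, while the dictionary layout, the function name, and the choice of baseline item are assumptions made for the example.

```python
# Feature levels named in the text; the data layout is an assumption.
FEATURES = {
    "scale": ("intraspecific", "interspecific"),
    "polarity": ("trait gain", "trait loss"),
    "taxon": ("animal", "plant"),
    "familiarity": ("familiar", "unfamiliar"),
}


def one_feature_variants(baseline):
    """Return every item that differs from the baseline in exactly one feature."""
    variants = []
    for feature, levels in FEATURES.items():
        for level in levels:
            if level != baseline[feature]:
                variant = dict(baseline)   # copy, then change a single feature
                variant[feature] = level
                variants.append(variant)
    return variants


# Hypothetical baseline item chosen for illustration.
baseline_item = {
    "scale": "intraspecific",
    "polarity": "trait gain",
    "taxon": "animal",
    "familiarity": "familiar",
}

for item in one_feature_variants(baseline_item):
    changed = [name for name in FEATURES if item[name] != baseline_item[name]]
    print(changed[0], "->", item[changed[0]])
```

Comparing responses to the baseline item with responses to each variant isolates the effect of a single surface feature, since everything else about the problem is held constant.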

The results of this experiment show that students have more trouble reasoning about the loss of traits than the gain of traits. They also have greater difficulty reasoning about the loss of traits between species than within species. But in reasoning about the gain of traits, there is no difference between the interspecific and intraspecific situations. Similarly, students have more misconceptions about the loss and gain of traits between species than within species. The hardest problem for students to solve, said Nehm, is the loss of traits between species. “If students can handle that, that’s the highest level of competency. But do we ask those questions? No.”

Also, students use more key concepts in solving problems involving familiar animals than unfamiliar animals, but this trend is not seen for plants, all of which seem to strike students as unfamiliar.

These surface features have a remarkably powerful influence, said Nehm. “If you want to show your class is doing great, I can design an assessment for you. If you want to show your students are failing, I can design an assessment for you. All I have to do is manipulate surface features because students’ reasoning is so tied to these features. And yet we pay no attention to this in any textbook or in any assessment.”

The bottom line is that “surface features matter, and we need to be more precise in our instructional strategies to deal with these.” Because misconceptions are surface-feature specific, instructional examples must be carefully chosen. Furthermore, assessments of competency must include authentic production tasks, such as explaining how evolutionary change occurs, not just fragmented knowledge selection tasks.

EVOLUTION ACROSS THE CURRICULUM

In 2007, Nehm reported on an introductory biology course that was changed so that every topic included evolution, while a parallel course was taught using a traditional curriculum (Nehm and Reilly, 2007). The outcomes were not substantially different. “It’s an awful downer at this conference,” he admitted.

However, a single study is not enough to draw broad conclusions. For one thing, students have difficulty learning evolution, so teaching more of it in the same way is probably not going to lead to progress. “If you have a problem with A and you give lots more A, the chances are it’s not going to lead to a substantial improvement.”

Also, as students work through the biology curriculum, they move from naïve models to mixed models to scientific models, but progress is very slow: up to 25 percent of students who have completed a course on evolution and additional coursework still use mixed models.

Determining the conditions under which students can effectively learn about evolution will require truly randomized controlled trials, Nehm concluded. Developing such trials will be difficult, he acknowledged. “But my perspective (which, again, is only my personal perspective and may be wrong) is that if people can do it in medicine, where people are dying, we should be able to do it in education.”

Baldwin, B. C., Ha, M., and Nehm, R. H. 2012. The Impact of a Science Teacher Professional Development Program on Evolution Knowledge, Misconceptions, and Acceptance. Proceedings of the National Association for Research in Science Teaching (NARST) Annual Conference, Indianapolis, IN, March 25-March 28.

National Research Council. 2000. How People Learn: Brain, Mind, Experience, and School: Expanded Edition. Washington, DC: National Academy Press.

National Research Council. 2001. Knowing What Students Know: The Science and Design of Educational Assessment. Washington, DC: National Academy Press.

Nehm, R. H. 2006. Faith-based evolution education? Bioscience 56(8):638-639.

Nehm, R. H. 2007. Teaching evolution and the nature of science. Focus on Microbiology Education 13(3):5-9.

Nehm, R. H., and Ha, M. 2011. Item feature effects in evolution assessment. Journal of Research in Science Teaching 48(3):237-256.

Nehm, R. H., and Reilly, L. 2007. Biology majors’ knowledge and misconceptions of natural selection. Bioscience 57(3):263-272.

Nehm, R. H., and Ridgway, J. 2011. What do experts and novices “see” in evolutionary problems? Evolution: Education and Outreach 4:666-679.

Nehm, R. H., and Schonfeld, I. 2007. Does increasing biology teacher knowledge about evolution and the nature of science lead to greater advocacy for teaching evolution in schools? Journal of Science Teacher Education 18(5):699-723.

Nehm, R. H., and Schonfeld, I. 2008. Measuring knowledge of natural selection: A comparison of the CINS, an open-response instrument, and an oral interview. Journal of Research in Science Teaching 45(10):1131-1160.
