4

Evaluating Program Access and Participation Trends

In addition to evaluating food and nutrient intake and the barriers and facilitators to providing nutritious meals and snacks, another key research recommendation in the Child and Adult Care Food Program (CACFP) report (IOM, 2011) was to gather more information on program access and participation trends. For example, how many providers and participants are in CACFP? What is the demand from eligible providers to participate? What are the barriers and facilitators to program access (for both providers and participants)? Workshop participants explored methods for evaluating program access and participation trends, beginning with a general examination of the use of administrative data and then proceeding to more detailed examinations of methodological approaches to assessing program access (both providers and participants). This chapter summarizes that exploration. Major overarching themes of the discussion included the wealth of relevant data that already exist in administrative and other databases, with Rupa Datta describing those data as a “gold mine to be tapped”; lessons learned from previous studies about how to collect and analyze program access and participation trend data; and the significance and challenge of defining and identifying comparison groups (i.e., eligible but nonparticipating providers and children) to include in analyses.

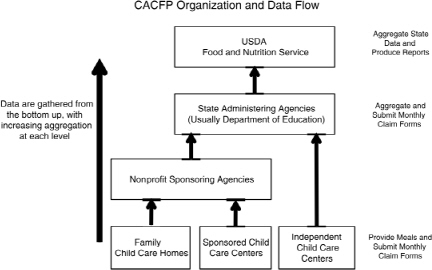

Although most of the discussion focused on the actual child care providers and participating children, Fred Glantz reminded the workshop audience that there are several levels of CACFP participation: children, outlets (the child care centers and homes), sponsors, state agencies, and the U.S. Department of Agriculture (USDA). He described how raw child-level data (who is participating, meals eaten during child care, etc.) are aggregated at three points. The first is when outlets send their monthly reports to sponsors (or directly to state agencies in the case of self-sponsored centers). The data are aggregated again when sponsors send their reports to state agencies, and a third time when state agencies send their reports to USDA. As a result, it is difficult to analyze anything but state-level and national trends. Glantz opined that it would be tremendously helpful if a nationally representative study of CACFP could access some of those raw child-level, outlet-level, and sponsor-level administrative data.

Drawing on lessons learned from a series of studies on the Child Care and Development Fund (CCDF) voucher program, Gina Adams and Monica Rohacek discussed key factors likely to shape provider participation (e.g., providers’ individual characteristics and CACFP policies and implementation practices) and ways to measure those factors. Past research by the Urban Institute on the child care voucher system has shown that a similar set of factors affects both participation (“Are you in?”) and the quality of participation (“If you are in, can you do what you are supposed to be doing?”). As many speakers did throughout the day, Rohacek emphasized the importance of keeping the end in mind, that is, knowing the outcome(s) of interest. For example, is the goal simply to measure participation rates, or to measure the quality of participation? Other things to keep in mind include:

- the value of both quantitative and qualitative methodology (i.e., both serve important roles);
- the importance of knowing whom to survey (i.e., the respondent population); and
- the reality of heterogeneity (i.e., there is no single child care system but rather a range of diverse systems).

Arguably one of the most important factors to consider when designing a national study of CACFP is the comparison group, that is, the group of eligible but nonparticipating providers (or participants) to whom the CACFP representative sample of providers (or participants) will be compared. Rupa Datta explained the important role that comparison group data serve in two key quantitative measures of program access and participation: saturation and participation rates. Based on work she has done with the National Survey of Early Care and Education (NSECE), she discussed the anticipated challenge of collecting data not just for the comparison group, but also for CACFP providers. Because of the variable nature of child care providers (centers, licensed homes, unlicensed homes, etc.) and state variability in licensing regulations, the greatest challenge for NSECE has been building a database of providers.

Again, a major theme of not just this session but also the workshop at large was the potential relevance of existing data. Susan Jekielek discussed the relevance of existing data for two Administration for Children and Families (ACF) early childhood programs that overlap with CACFP: Head Start (and Early Head Start) and the Child Care Subsidy Program. Neither program collects CACFP-specific data, but both collect data that might inform a nationally representative study of CACFP.

USE OF CACFP ADMINISTRATIVE DATA1

Administrative data collected and accumulated by CACFP providers can be very useful for understanding the effects of public policy on dietary intake. The challenge is access to those data. Fred Glantz described how raw child-level data collected by CACFP providers are aggregated as they move up from the provider level.

There are several levels of CACFP participation:

- At the top is USDA, which sets rules based on legislation.
- Next is the state, usually the state department of education, which administers the program and monitors compliance with federal regulations.
- Below the state are the nonprofit agencies that sponsor centers and homes. It is the sponsor, not the home or center, that enters into an agreement with the state government. The sponsor is also legally and fiscally responsible for the providers it sponsors. Family day care homes must be sponsored; child care centers must either be sponsored by another agency or be self-sponsored.
- Below the sponsors are the “outlets,” that is, the child care centers and homes where the meals served are reimbursed by CACFP.
- Finally, at the “bottom” are the children.

Glantz remarked that all children attending a CACFP center or home participate in CACFP. This is true regardless of family income and regardless of whether they or their parents know that they are participating.

As the Data Flow Up, They Aggregate

Outlets collect raw child-level data, such as:

- who is participating;
- the hours and days of the week that they participate;
- meals that the children eat while in child care; and
- what those meals contain.

Glantz said, “At that bottom level there is a wealth of information if you can get access to it. And right now, you can’t.” Those data are aggregated as soon as the outlets submit their monthly reimbursement claim forms to either the sponsor or the state agency (in the case of self-sponsored child care centers) (see Figure 4-1). Sponsors then aggregate the information received from providers before submitting it to their state agencies. State agencies, in turn, aggregate the information they receive before submitting their reports to USDA. Because of this cumulative aggregation, child-level data are unidentifiable at the state and federal levels, and so are outlet- and sponsor-level data. This makes it impossible to conduct analyses with children, outlets, or sponsors as the unit of analysis.
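The roll-up described above can be illustrated with a small sketch. It assumes a simplified hierarchy in which every outlet reports to a sponsor, with a self-sponsored center treated as its own sponsor. The records and meal counts are hypothetical, not actual CACFP claim fields. Each level receives only the totals produced by the level below it. Once the state reports are built, individual homes, centers, and children can no longer be told apart.

```python
from collections import defaultdict

# Hypothetical child-level records kept by outlets:
# (state, sponsor, outlet, child) -> meals claimed in one month.
child_level = {
    ("NM", "Sponsor A", "Home 1", "child-01"): 40,
    ("NM", "Sponsor A", "Home 1", "child-02"): 38,
    ("NM", "Sponsor A", "Home 2", "child-03"): 42,
    ("NM", "Sponsor B", "Center 1", "child-04"): 60,
    ("TX", "Sponsor C", "Center 2", "child-05"): 55,
}


def aggregate(report, keep):
    """Collapse a report to its first `keep` identifiers, summing meal counts.

    Identifiers beyond position `keep` are discarded, which is what makes
    lower-level units unrecoverable from the higher-level report.
    """
    totals = defaultdict(int)
    for ids, meals in report.items():
        totals[ids[:keep]] += meals
    return dict(totals)


outlet_claims = aggregate(child_level, 3)      # outlets -> sponsors
sponsor_reports = aggregate(outlet_claims, 2)  # sponsors -> state agencies
state_reports = aggregate(sponsor_reports, 1)  # state agencies -> USDA
national_total = aggregate(state_reports, 0)   # single national figure

print(state_reports)   # {('NM',): 180, ('TX',): 55}
print(national_total)  # {(): 235}
```

Nothing in `state_reports` shows that New Mexico's 180 meals came from three outlets under two sponsors. That information exists only in the lower-level records, which is why Glantz stressed gaining access to them.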

The Unreliability of Monthly Data

The challenge of data analysis is compounded by the fact that reimbursement claim forms are submitted on a monthly basis. Many programs do not operate every month (for example, during the summer months) and therefore do not submit claim forms every month. In addition, programs sometimes submit claim forms late or revise them later. As a result, according to Glantz, data collected for any given month are not reliable. They are also not necessarily representative of what a program looks like over the course of the year. USDA uses the October and March monthly data submissions in its analyses, with the understanding that those months serve only as proxies for the entire year. One could aggregate monthly data into annual data. However, estimates of year-to-year changes in participation are sometimes confounded by state-level changes in eligibility or registration requirements for subsidized child care.

FIGURE 4-1 CACFP data (e.g., meals eaten during child care, what those meals contain) flow up, aggregating at every level, with child-, outlet-, and sponsor-level data not accessible to researchers.

SOURCE: Glantz, 2012.

1This section summarizes the presentation of Fred Glantz from Kokopelli Associates.
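A small arithmetic sketch shows why a single month can mislead. The monthly figures are invented for a hypothetical program that closes from June through August. Scaling the average of October and March by 12 is an assumption made for illustration; it is not a description of how USDA actually uses those months.

```python
# Hypothetical monthly meal claims for one program that closes June-August.
monthly_claims = {
    "Jan": 900, "Feb": 880, "Mar": 910, "Apr": 905, "May": 890, "Jun": 0,
    "Jul": 0, "Aug": 0, "Sep": 870, "Oct": 920, "Nov": 900, "Dec": 860,
}


def annual_total(claims):
    """Sum all twelve monthly claims."""
    return sum(claims.values())


def snapshot_estimate(claims, months=("Oct", "Mar")):
    """Project an annual figure from selected months.

    Illustrative assumption: the average of the snapshot months is treated
    as typical of every month in the year.
    """
    average = sum(claims[m] for m in months) / len(months)
    return average * 12


actual = annual_total(monthly_claims)         # 8035
estimate = snapshot_estimate(monthly_claims)  # 10980.0
print(actual, estimate, round(estimate / actual, 2))  # 8035 10980.0 1.37
```

Because this made-up program is closed in summer, the snapshot-based projection overstates its annual activity by more than a third. Late or revised claims would add further noise to any single month.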

The Challenge of Defining a Comparison Group

More important than the lack of reliable monthly data is the challenge of defining a comparison group for use in an analysis of participation. With respect to outlet participation, for example, comparison groups vary from state to state and can vary even within a state. In New Mexico, a home does not need to be licensed unless it provides care for five or more children. If it serves fewer than five children, it has the option to register and, if it does, must then participate in CACFP. As a result, countless family day care homes in New Mexico (i.e., unregistered homes serving fewer than five children) are not listed anywhere. No data are available on a regular basis for the universe of eligible nonparticipating sponsors or outlets. Consequently, one cannot conduct comparative analyses of participating versus nonparticipating eligible providers.

National Data

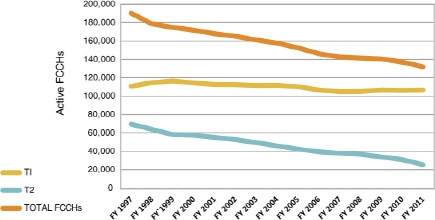

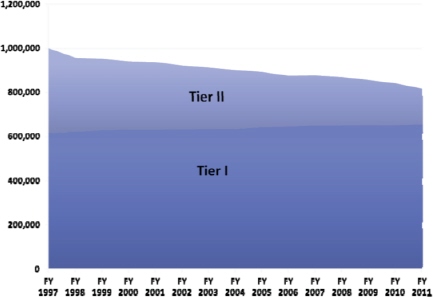

In Glantz’s opinion, the best available administrative data are national trend data. Examples include the number of child care centers participating in CACFP and the proportion of participating centers that are for-profit versus nonprofit or sponsored versus independent. National trend data can show the impact that policy changes can have on provider participation. For example, there were virtually no for-profit centers participating in CACFP in the 1970s and 1980s. But when welfare reform went into effect in 1997, the number of participating for-profit centers increased. Glantz’s interpretation of the shift has two parts. First, welfare reform dramatically increased parent co-payments. Second, the two-tier payment system that went into effect that same year2 lowered reimbursement rates for a large segment of the CACFP population, forcing providers to raise their care rates. As a result, parents started looking for more affordable care from other sources.

After tiering was initiated, the number of family day care homes that participated in CACFP dropped precipitously, from 190,000 in 1997 to 132,000 in 2011 (see Figure 4-2). Of those initial 190,000, about 111,000 were classified at that time as Tier 1 homes and 80,000 as Tier 2. The number of Tier 1 homes remained relatively constant between 1997 and 2011, but the number of Tier 2 homes dropped by 25,000 over the same period. As shown in Figure 4-3, the number of children participating in Tier 1 homes also stayed fairly constant between 1997 and 2011, while the number of children participating in Tier 2 homes decreased.


2For a description of the two-tier payment system, see Chapter 2, Footnote 4.

FIGURE 4-2 Number of active family child care homes by tier level, fiscal years 1997–2011. After tiering, FCCHs serving middle-income children dropped out of CACFP but were not replaced by FCCHs serving low-income children.

NOTES: FCCH, family child care home; FY, fiscal year; T1, Tier 1; T2, Tier 2.

SOURCE: Glantz, 2012.

FIGURE 4-3 Average daily attendance in Tier 1 and Tier 2 family child care homes, fiscal years 1997–2011.

SOURCE: Glantz, 2012.

LESSONS LEARNED: FACTORS SHAPING PROVIDER PARTICIPATION IN CACFP AND METHODOLOGICAL CONSIDERATIONS3

Many lessons on provider participation can be drawn from previous work conducted by the Urban Institute. Gina Adams remarked that the same lessons could be applied to research on sponsor participation. She referred to three studies in particular, all on the CCDF voucher program:4

- The first was a 1999 qualitative study of provider involvement with CCDF during welfare reform. It was based on focus group and interview data collected from providers, parents, subsidy workers, administrators, and experts at 17 sites across 12 states (Adams et al., 2003).
- The second was a 2003–2004 mixed-methods study. It involved a representative survey of centers and family child care homes in five counties across four states. It also had a qualitative component involving focus groups and interviews with providers, subsidy staff, administrators, and experts (Adams et al., 2008; Rohacek et al., 2008; Snyder et al., 2008).
- The third study involved in-depth interviews with center directors about factors shaping their ability to provide high-quality care (Rohacek et al., 2010).

The first two studies were designed to examine providers’ participation in, and experiences with, the voucher system.

Based on these three studies, Urban Institute researchers have identified five clusters of factors that shape provider participation. The same set of factors shapes both participation (“Are you in?”) and the quality of participation (“If you are in, can you do what you are supposed to be doing?”). Depending on the research question and the study population, Adams noted, some factors or clusters may be more relevant than others:

1. Provider individual characteristics (i.e., the person making decisions)

a. Motivation (Why are they doing this?)

b. Personality (Are they flexible? Do they like change?)

c. Skills/capacity (Are they literate? Do they speak English? Do they have any business capacity? Do they know how to fill out paperwork?)

d. Beliefs/values (What are their beliefs and values? Are they mission-driven? Do they believe that the government has a role? Do they believe that agency people should be coming into their homes?)


3This section summarizes the joint presentation by Gina Adams and Monica Rohacek from the Urban Institute.

4For more information on CCDF, visit http://www.casyonline.org/CCDF.html.

e. Beliefs about CACFP (What are their perceptions of or experiences with CACFP?)

2. Provider program characteristics

a. Type (Is it a center or family child care?)

b. Funding/resource supports (Who supports the program? Parents? Public sources? Philanthropy? Religious affiliate?)

c. Clientele (What proportion of clientele is eligible for free or reduced price meals and snacks or Tier 1 reimbursements?)

d. Auspice (What is the profit status? Is it public/private/school-based?)

e. Decision-making structure (Who is making decisions? A board? A church? A chain?)

f. Size/staffing (What is the administrative capacity?)

3. Community characteristics

a. Client demand (What do clients care about? Level of resources? Sense of other options? Nutritional preferences?)

b. Supply of care (What is going on with competitors? Are competitors lowering their prices such that you have to lower your prices and seek CACFP assistance?)

c. Resources (Who in the community is supporting the program beyond parent fees? Parents? Public sources? Philanthropy? Religious affiliate?)

4. Policy/services context

a. Federal/state/local early care and education policies, programs and requirements (How do other policies, programs, and requirements, such as CCDF or kindergarten programs, impact participation?)

b. Licensing (What are the state licensing exemptions, enforcement patterns, nutrition standards, etc.?)

c. Child care resource and referral functions (What other levels and kinds of support are being provided? How does that support intersect with CACFP?)

d. Quality supports (What kind of support is being provided for training and technical assistance? How does CACFP interact with Quality Rating and Improvement System [QRIS] initiatives?)

e. Tax policy (Are there tax disincentives? For example, is it easier to deduct food costs than participate in CACFP?)

5. CACFP policies and implementation practices

a. Outreach (Do providers know about the program? Is what they know accurate, or is it word of mouth about how the program used to be?)

b. Actual reimbursement (What do providers actually receive in payment [as contrasted with what they are supposed to receive]?)

c. Paperwork, both enrollment and reimbursement forms (Do they do the paperwork correctly? Is it done on time? How difficult is it and how long does it take?)

d. Ease of working with funding entity (How much time do you spend on the phone? Can you resolve payment disputes? Can you get help? How are you treated?)

e. Nutrition requirements (How easy or difficult is it to comply? How similar or different are requirements compared to what providers believe are appropriate or what their clients want?)

f. Monitoring/support (How much monitoring support is “carrot” and how much “stick”? What is the relationship between the provider and the individual who enters the home to do the monitoring?)

g. Role/nature of sponsor

Adams emphasized the importance of the fifth cluster of factors, especially those related to implementation. She said, “The real effect of a program is how it is experienced by the provider.” The better one understands how providers experience a program, the clearer it becomes which pieces of policy need to be adjusted. Yet she cautioned that all five clusters play important roles. Which factors are most important, and how the factors interact with each other, vary greatly from provider to provider. A benefit for one provider could be a cost to another. Also, for any given provider, the benefit-cost relationships among the various factors can change over time.

Methodological Considerations

Lessons learned from Urban Institute research extend beyond what types of factors to consider when evaluating provider participation in CACFP. They also offer valuable methodological lessons about how to collect those data. Monica Rohacek identified four main methodological issues to consider when designing a national study of CACFP provider participation:

(1) What is the outcome of interest? What is the question? Which aspects of participation are important? For example, one outcome is simply participation, that is, whether or not the provider is participating in the program. But the extent of involvement varies, which raises further questions. What percentage of children in a program is income-eligible for CACFP reimbursement? What percentage of meals served in a program is reimbursed by CACFP? What is the quality of participation (e.g., quality of nutritional offerings, child nutrition outcomes)?

(2) Is a quantitative survey sufficient? Rohacek remarked that many research questions listed in the CACFP report (IOM, 2011) could be addressed with a quantitative survey (e.g., Does the program improve participants’ daily or weekly nutrient intake?). But the “why” questions (e.g., Why does the program improve nutrient intake?) are probably better served by a mixed-methods approach (i.e., one combining qualitative and quantitative methods). For example, the first Urban Institute study of provider involvement in CCDF took a qualitative approach. Its focus groups identified some key challenges and facilitators that providers face when working with voucher programs (Adams et al., 2003). Information from those focus groups was used to design the quantitative survey used in a second, mixed-methods study of provider involvement in CCDF (Adams et al., 2008; Rohacek et al., 2008; Snyder et al., 2008). In addition to the quantitative survey, the second study followed up with focus groups to gather additional details about what the quantitative data revealed.

(3) Which respondents are relevant? Rohacek agreed with Adams that any assessment of a program’s effects should consider providers’ experiences. How do providers experience the program? What does the program look like to nonparticipants (both past participants and those who have never participated)? Other people who might be useful to speak with, to understand what CACFP looks like on the ground, include:

- staff at state CACFP agencies and sponsoring organizations;
- parents; and
- other key informants.

(4) Accounting for heterogeneity in the field. Although the term “child care system” is common, Rohacek remarked that in fact there is no single child care system. Rather, there is a range of “systems” (e.g., centers versus homes), as well as differences in local implementation practices. When designing a study on provider participation, it is important to keep this heterogeneity in mind.
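The participation outcomes raised under the first question above can be expressed as simple provider-level measures. The sketch below is a hypothetical illustration: the field names and the example provider’s counts are assumptions, not variables from any existing CACFP dataset.

```python
from dataclasses import dataclass


@dataclass
class ProviderRecord:
    """Hypothetical provider-level counts for one reporting period."""
    participates: bool             # is the provider in CACFP at all?
    children_enrolled: int
    children_income_eligible: int  # e.g., free/reduced-price or Tier 1 eligible
    meals_served: int
    meals_reimbursed: int          # meals claimed and reimbursed through CACFP


def share(part, whole):
    """Return part/whole, or 0.0 when the denominator is zero."""
    return part / whole if whole else 0.0


def involvement_measures(p):
    """Extend the yes/no participation flag with the extent of involvement."""
    eligible = share(p.children_income_eligible, p.children_enrolled)
    reimbursed = share(p.meals_reimbursed, p.meals_served)
    return {
        "participates": p.participates,
        "pct_children_income_eligible": 100 * eligible,
        "pct_meals_reimbursed": 100 * reimbursed,
    }


example = ProviderRecord(True, 40, 10, 2000, 1500)
print(involvement_measures(example))
# {'participates': True, 'pct_children_income_eligible': 25.0, 'pct_meals_reimbursed': 75.0}
```

Quality of participation (nutritional offerings, child nutrition outcomes) would require additional data and is not captured by these counts.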

Rohacek concluded with what she called “stray” thoughts. First, she emphasized the importance of asking effective questions when designing “satisfaction” surveys (i.e., surveys designed to determine program satisfaction). For example, she came across a study conducted in Oregon in which 97 percent of respondents said that they would recommend CACFP to others. Such a high rate of endorsement may indicate that CACFP is working very well, but it might not reveal the extent of any problems. Second, she emphasized the value of building on work in related fields. For example, considerable work has been done in the early childhood field at large that might provide some insights into provider participation in CACFP. Finally, she emphasized the challenge of engaging providers in this type of research, especially family child care home providers. Urban Institute researchers have found it useful to explain to providers that implementation research is very different from compliance monitoring and that the ultimate goal is to help providers.

DESIGNING SURVEY QUESTIONS FOR ESTIMATING TWO KEY CACFP RATES: PARTICIPATION AND SATURATION5

Rupa Datta echoed Fred Glantz’s remarks about the challenge of defining comparison groups for analyzing participation in CACFP. With respect to provider participation, not only do state licensing requirements vary tremendously for center-based care, and even more so for family-based care, but the quality of lists (of licensed facilities) also varies. In some states, unlicensed providers may not be on any list at all. These uncertainties raise several questions about eligibility. What defines an eligible provider? Is it any licensed provider or all providers? Defining participant eligibility is equally difficult. One important factor of eligibility is income, but what other factors need to be considered? Are eligible children only those in licensed care, or are all children in any kind of care considered eligible? By limiting the universe of eligible children to those participating in licensed care, one misses the largest source of nonparental care, that is, family, friends, and neighbors. This is especially true of the youngest age groups (i.e., 0–2 years). Also, because many unlisted providers serve low-income families, one would be missing a large source of data on child care for children from low-income families. For both providers and participants, added to the challenge of defining who is eligible is the challenge of actually finding those outlets and people for data collection.

Comparison group data are useful for calculating two key CACFP rates: saturation and participation. Saturation rate is the number of providers participating in CACFP, divided by the number of eligible providers (i.e., the number of participating providers plus the number of eligible nonparticipating providers). Participation rate is the number of children receiving meals through CACFP (i.e., the number of participants) divided by the total number of eligible children (i.e., the number of participants plus the number of eligible nonparticipants).
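Written out, both rates have the same structure: units in the program divided by units in the program plus eligible units outside it. The sketch below encodes the two definitions as given above. The counts in the usage lines are hypothetical, chosen only to show how sensitive the saturation rate is to eligible nonparticipants who are missing from provider lists.

```python
def coverage_rate(in_program, eligible_not_in_program):
    """Share of the eligible universe that is in the program."""
    eligible = in_program + eligible_not_in_program
    if eligible == 0:
        raise ValueError("eligible universe is empty")
    return in_program / eligible


def saturation_rate(participating_providers, eligible_nonparticipating_providers):
    """Participating providers / (participating + eligible nonparticipating)."""
    return coverage_rate(participating_providers, eligible_nonparticipating_providers)


def participation_rate(participants, eligible_nonparticipants):
    """Participating children / (participants + eligible nonparticipants)."""
    return coverage_rate(participants, eligible_nonparticipants)


# Hypothetical counts: 600 participating providers, 400 eligible
# nonparticipants on state lists, and 500 more eligible providers on no list.
listed_only = saturation_rate(600, 400)          # 0.6
with_unlisted = saturation_rate(600, 400 + 500)  # 0.4
print(listed_only, with_unlisted)
print(participation_rate(3000, 2000))            # 0.6
```

In this made-up case, leaving out the unlisted providers inflates the apparent saturation rate from 40 to 60 percent. This is the comparison-group problem that Datta and Glantz described.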

National Survey of Early Care and Education

A national study of CACFP could draw on information gathered and lessons learned from the NSECE, a study funded by the Office of Planning, Research and Evaluation (OPRE) in ACF. The goal of NSECE is to document the national supply of nonparental care. It also documents the needs, constraints, and preferences of families as they seek and use nonparental care for their children. Datta described it as an “enormous data collection effort.” Data are being collected on:

(1) center-based providers (Head Start, school- and community-based prekindergarten programs, and other community-based centers);
(2) home-based providers from state lists;
(3) workforce members (home-based providers or center-based staff who are working directly with children);
(4) households with children under the age of 13 years; and
(5) informal home-based providers (providers not on any state list).

Datta remarked that the proportion of home-based providers who are informal (i.e., not on any list) varies by state. Some states have virtually no nonlicensed care.

5This section summarizes the presentation of Rupa Datta from NORC at the University of Chicago.

The greatest challenge for the NSECE has been constructing a database of existing child care providers. Datta said that, prior to the study, “Nobody really even knew beyond an order of magnitude how many centers there might be in this country.” The researchers collected child care provider lists from every state and every state department with such lists (usually licensing agencies, but also education and other departments). They then took four steps:

- used information on the lists to construct a universe of “listable” providers;
- identified the exact location of those providers;
- segmented providers into those in low-income versus non-low-income areas; and
- selected a set of respondents for interviewing.

At the time of the workshop, the survey had sampled 22,000 providers. These included both center-based and licensed home-based providers, but excluded informal home-based providers, who were sampled from another source. Even with that number, Datta said that they expect to generate information about infant care only at the national level because of sample size limitations. She cautioned that a nationally representative study of CACFP might come up against the same challenge, especially for the 0- to 5-month and 6- to 11-month age groups.
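The list-building step can be sketched in a few lines. This is a hypothetical illustration, not the NSECE’s actual procedure. Three elements are assumptions: the field names; exact-match deduplication on normalized name and address, whereas the NSECE identified each provider’s exact location; and a 20 percent poverty threshold for defining a “low-income area.”

```python
import re


def normalize(text):
    """Lowercase and collapse punctuation/whitespace so near-duplicates match."""
    return re.sub(r"[^a-z0-9]+", " ", text.lower()).strip()


def build_frame(state_lists, area_poverty, threshold=0.20):
    """Merge provider lists from several agencies into one deduplicated frame.

    state_lists: {source_agency: [{"name", "address", "area"}, ...]}
    area_poverty: {area_id: share of residents below poverty} (hypothetical)
    threshold: poverty share at or above which an area counts as
        low-income (an assumed cutoff, for illustration only)
    """
    frame = {}
    for source, rows in state_lists.items():
        for row in rows:
            key = (normalize(row["name"]), normalize(row["address"]))
            entry = frame.setdefault(key, {**row, "sources": set()})
            entry["sources"].add(source)
    for entry in frame.values():
        rate = area_poverty.get(entry["area"])
        if rate is None:
            entry["stratum"] = "unknown"
        elif rate >= threshold:
            entry["stratum"] = "low-income"
        else:
            entry["stratum"] = "non-low-income"
    return list(frame.values())


lists = {
    "licensing": [
        {"name": "Sunny Days Center", "address": "12 Main St.", "area": "A"},
        {"name": "Little Oaks Home", "address": "5 Elm Ave", "area": "B"},
    ],
    "education": [
        {"name": "SUNNY DAYS CENTER", "address": "12 Main St", "area": "A"},
    ],
}
frame = build_frame(lists, {"A": 0.32, "B": 0.08})
for entry in frame:
    print(entry["name"], sorted(entry["sources"]), entry["stratum"])
```

The same center appears on both the licensing and education lists but enters the frame once. A real effort would need fuzzier matching than this exact comparison.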

The NSECE captures CACFP participation only in combination with other government programs, so there is no separate measure of CACFP participation (although CACFP participation is the largest factor in an “other” category). Still, Datta opined that the NSECE could generate valuable information for a national study. Notably, providers can be matched with child enrollment numbers to estimate the number of children being reached through CACFP. Providers can also be matched with the income level of their location and with household data on usage of care. Together, the provider and household data could be used to identify potential participants and whether those children are within or outside the reach of CACFP.
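The provider-household linkage Datta described can be sketched as follows. Everything here is hypothetical. The identifiers and counts are invented, and the `cacfp` flag assumes a provider-level CACFP indicator that, as noted above, the NSECE does not record separately from other government programs. Treating low-income households as the pool of potential participants is also a simplifying assumption.

```python
# Hypothetical provider records: CACFP status and number of children enrolled.
providers = {
    "P1": {"cacfp": True, "enrollment": 45},
    "P2": {"cacfp": False, "enrollment": 30},
    "P3": {"cacfp": True, "enrollment": 25},
}

# Hypothetical household records linked to the provider each uses
# (None stands for care from someone on no list, e.g., a relative).
households = [
    {"children_in_care": 2, "provider": "P1", "low_income": True},
    {"children_in_care": 1, "provider": "P2", "low_income": True},
    {"children_in_care": 1, "provider": None, "low_income": True},
    {"children_in_care": 3, "provider": "P3", "low_income": False},
]


def children_enrolled_in_cacfp(providers):
    """Provider-side estimate: total enrollment at CACFP providers."""
    return sum(p["enrollment"] for p in providers.values() if p["cacfp"])


def reach_among_low_income(households, providers):
    """Household-side estimate: low-income children inside vs. outside reach."""
    counts = {"within_reach": 0, "outside_reach": 0}
    for hh in households:
        if not hh["low_income"]:
            continue
        provider = providers.get(hh["provider"])
        in_cacfp = provider is not None and provider["cacfp"]
        key = "within_reach" if in_cacfp else "outside_reach"
        counts[key] += hh["children_in_care"]
    return counts


print(children_enrolled_in_cacfp(providers))          # 70
print(reach_among_low_income(households, providers))  # {'within_reach': 2, 'outside_reach': 2}
```

The two functions answer different questions. The first counts children at participating providers. The second asks how many children in the target households use care that CACFP does or does not reach.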

In conclusion, Datta suggested that a national study of CACFP do something similar to what the NSECE did with respect to linking provider location data with demographic data (e.g., census data) as well as with food availability and other relevant data. She also suggested exploring child care usage data from some of the ongoing national household studies such as the Survey of Income and Program Participation (SIPP), conducted by the Census Bureau, and the National Household Education Surveys, conducted by the National Center for Education Statistics.

USING DATA COLLECTED BY THE ADMINISTRATION FOR CHILDREN AND FAMILIES TO INFORM CACFP PARTICIPATION AND SATURATION RATES6

ACF, in the Department of Health and Human Services, manages two early childhood programs that overlap with CACFP: Head Start (and Early Head Start) and the Child Care Subsidy Program. Head Start provides grants to local public and private for-profit and nonprofit agencies to provide comprehensive child development services to economically disadvantaged children and families. Unlike Head Start, the Child Care Subsidy Program, also known as CCDF, does not directly make child care available. Rather, it provides subsidies to help low-income families afford child care while the parents are working or engaged in work-related activities. An important characteristic of CCDF is its emphasis on permitting parents to choose their own type of child care provider (e.g., center-based care, family day care home). Susan Jekielek explored administrative and other data available for each program and their potential relevance to a national study of child care. While neither Head Start nor CCDF collects CACFP-specific data, both programs collect data that might be informative.

Also of potential value to a national study, the ACF Office of Child Care will soon be collecting quality of care and other data on providers (e.g., asking providers whether they participate in their state’s QRIS). Finally, other possible sources of relevant data include the ACF Children’s Bureau (which serves adoption and foster care), the ACF Family and Youth Services Bureau (which serves runaway and homeless youth), and QRIS.

Relevant Data from Head Start

Even though Head Start grantees are encouraged to participate in CACFP, there is no systematic collection of data on CACFP participation among those grantees. Most Head Start data are administrative Program Information Report (PIR) data, which are collected from the grantees annually. These data include:

- the number of children enrolled (in 2009, 904,153 children, with approximately 44,000 enrolled in family-based programs);
- some age categories (in 2009, the number of children under 3, the number of 3-year-olds, the number of 4-year-olds, and the number of children 5 years and older);
- the number of grantees (in 2009, 1,591 grantees); and
- the number of classrooms (in 2009, 49,200 classrooms).

Jekielek pointed out that nutritional intake and other dietary data are difficult to collect at the grantee level. She added that the PIR is extensive enough without those types of additional questions (although grantees are asked about participation in the Special Supplemental Nutrition Program for Women, Infants, and Children).

6This section summarizes the presentation of Susan Jekielek from the OPRE in ACF.

Head Start has other, nonadministrative data that may be of interest. Jekielek mentioned two datasets in particular. First, the Family and Child Experiences Survey is a nationally representative survey of grantees (n = 60). Data have been collected on multiple cohorts, with each cohort being followed for 3 years. The survey includes questions about family dietary practices, but not classroom dietary practices. Some of those data may be of interest. Jekielek noted that the survey was undergoing a redesign and engaging an expert panel to provide advice. Second, Head Start has engaged ACF in a representative study of Head Start health managers that will involve interviewing health managers at the grantee and lower levels.

The Child Care Subsidy Program

The Child Care Subsidy Program, again also known as CCDF, does not collect CACFP participation data. However, as with Head Start, it does collect some information that may be of interest:

- enrollment (in 2009, 1,629,300 children were enrolled);
- type of setting (in 2009, 63 percent of the children were enrolled in a center, 26 percent in a family home, 5 percent in a child’s home, 5 percent in a group home, and 1 percent unreported); and
- licensing (in 2009, 78 percent of providers were licensed and 21 percent were legal but unregulated).

Jekielek noted that a large percentage of children receive care in settings that are difficult to track (e.g., an unregulated family home). Many of those difficult-to-track settings probably overlap with CACFP. The states themselves may have more information about CCDF providers (e.g., whether they participate in CACFP), but those data are not available at the federal level.

DISCUSSION

During the question-and-answer period at the end of this session, the main topic of discussion was the challenge of defining and identifying comparison groups. Fred Glantz described the challenge as “the 800-pound gorilla that is sitting on the table.” The believability of a study depends on the validity of the comparison group. The situation in New Mexico described above illustrates the challenge. An audience member urged CACFP researchers to look to the states for relevant state-level data on eligible nonparticipants. Many states have data that could be useful and that are not reported to USDA. The challenge, of course, is that state-level data look very different from state to state. (A more in-depth discussion of the value of state-level data took place later during the workshop. A summary of that discussion is included in Chapter 5.)

REFERENCES

Adams, G., K. Snyder, and K. Tout. 2003. Essential but often ignored: Child care providers in the subsidy system. Occasional Paper Number 63. Washington, DC: The Urban Institute. http://www.urban.org/UploadedPDF/310613_OP63.pdf (accessed April 17, 2012).

Adams, G., M. Rohacek, and K. Snyder. 2008. Child care voucher programs: Provider experiences in five counties. Washington, DC: The Urban Institute. http://www.urban.org/UploadedPDF/411667_provider_experiences.pdf (accessed April 2, 2012).

Glantz, F. 2012. Understanding and using CACFP administrative data. Presented at the Institute of Medicine Workshop on Review of the Child and Adult Care Food Program: Future Research Needs, Washington, DC, February 7.

IOM (Institute of Medicine). 2011. Child and Adult Care Food Program: Aligning dietary guidance for all. Washington, DC: The National Academies Press.

Rohacek, M., G. Adams, and K. Snyder. 2008. Child care centers, child care vouchers, and faith-based organizations. Washington, DC: The Urban Institute. http://www.urban.org/UploadedPDF/411666_faith-based-organizations.pdf (accessed April 2, 2012).

Rohacek, M., G. Adams, and E. Kisker. 2010. Understanding quality in context: Child care centers, communities, markets, and public policy. Washington, DC: The Urban Institute. http://www.urban.org/uploadedpdf/412191-understand-quality.pdf (accessed April 2, 2012).

Snyder, K., S. Bernstein, and G. Adams. 2008. Child care vouchers and unregulated family, friend, and neighbor care. Washington, DC: The Urban Institute. http://www.urban.org/UploadedPDF/411665_child_care_vouchers.pdf (accessed April 2, 2012).
