This chapter reviews eight frameworks that are currently used to assess the value of community-based prevention: benefit–cost analysis, cost-effectiveness analysis, Congressional Budget Office scoring, PRECEDE–PROCEED, RE-AIM, health impact assessment, the Community Preventive Services Task Force (CPSTF) guidelines, and the Canadian Health Services Research Foundation (Lomas) Model. The committee concluded that existing frameworks are inadequate for assessing the value of community-based prevention because none meet all of the most important criteria outlined in Chapter 3 and this chapter. Most lack community well-being measures other than health, some do not assess the value of the community processes by which prevention activities are planned and undertaken, many do not consider costs, and some do not give sufficient attention to the individual community context.

WHAT IS A FRAMEWORK FOR ASSESSING VALUE?

The committee concluded that a framework for assessing value is a structure for gathering and processing information to aid intelligent decision making and, more specifically, to help decide whether an activity or intervention is worthwhile. (Frameworks for implementation are different: They focus on how best to implement a program. See Chapter 2 for a description of the most important frameworks for the implementation of community-based prevention.)

A framework for assessing value can aid decision making by

- requiring that goals be stated clearly;

- integrating incomplete and sometimes conflicting information and beliefs;

- avoiding decision making based on arbitrary impressions or self-interest;

- clarifying trade-offs;

- promoting transparency; and

- exposing legitimate sources of disagreement and helping to work through them.

Frameworks for assessing value can be geared toward prospective or retrospective assessments of value. A prospective assessment of value is performed before an intervention takes place and is designed to help policy makers decide whether to undertake the intervention. An example of a prospective assessment is a cost estimate produced by the Congressional Budget Office. Program evaluations are concurrent or retrospective assessments of value: What can the evaluators say about an intervention’s value while it is being implemented or after it has occurred (Stufflebeam, 1999)? Benefit–cost analysis, cost-effectiveness analysis, and some other valuation frameworks may be either prospective or retrospective (Nash et al., 1975).

The committee concluded that a framework for assessing value should include the following elements:

- A decision-making context: Who are the decision makers, what are the decisions they are making, and what are the formal and informal mechanisms by which assessments of value feed into the decision-making process?

- A list of valued outcomes: What does the user of the framework care about? What should the user of the framework care about?

- A list of admissible sources of evidence: What information does the user of the framework use to build the model of causation that links interventions to valued outcomes?

- A method for weighting and summarizing: How is information on all the valued outcomes boiled down and made digestible for decision makers?

A Framework for Assessing Value Is Embedded Within a Decision-Making Context

Frameworks have evolved to feed into specific decision-making contexts. Examples of decision-making contexts include Congress deciding whether to enact a piece of legislation, a local health department deciding how to allocate its budget to specific health promotion activities, and a community group deciding whether to organize its volunteers to undertake a specific health-related project. A framework that is appropriate and helpful in one decision-making context may not be helpful in another. As a result, the description of a framework must take into account the decision-making context in which it is or will be used.

Different decision makers come from different perspectives and emphasize different factors. Possible factors to consider include legal and ethical issues, the nature of the condition, resource availability, administrative factors, and idiosyncratic factors. These can sometimes be taken into account in the valuation framework. At other times, decision makers must consider them outside of—and in addition to—the assessment of value.

For example, while family-planning activities are legal and may be a valued outcome, they can be constrained by ethical attitudes toward abortion and contraception. Differing ethical and religious views in the community may need to be considered outside the valuation framework. The nature of the health condition may also need to be considered separately from valuation. Some conditions, such as conditions that affect young children, may evoke more sympathy and a greater sense of urgency than others.

Another factor that may need to be considered separately from the valuation is resource availability. Lack of the right resources may interfere with the adoption of an intervention, even when its assessed value is high relative to its costs; the right facilities and people may not be available. The availability of administrative mechanisms that can enhance the acceptability of an intervention is another factor that is considered in decision making but that is outside the valuing framework. For example, the Supplemental Nutrition Assistance Program (SNAP, formerly the Food Stamp Program), with its eligibility requirements to assure that benefits reach the intended population, could serve as the administrative base of a community intervention to improve the nutrition of low-income people. Finally, idiosyncratic factors, such as powerful advocates or vested interests, can outweigh assessments of value that the larger community places on interventions.

All of these factors must be taken into account in actual decisions. A framework for valuation provides a way of focusing attention on the valued outcomes and costs of interventions in a systematic way and helps make those outcomes and costs clear to the larger community in a way that promotes better choices.

A Framework Includes a List of Valued Outcomes

To provide effective support for decision making it is critical to list all the valued outcomes and to show, perhaps in a table, how much each intervention contributes to each outcome. That means that there must be some measure of how each outcome is affected by the intervention. For example, health might be measured in years of life gained or quality-adjusted life years gained. Community participation might be measured by the number of people who attend events or the hours of work volunteered in a year. Reductions in crime or in health risk factors might be represented by the statistics already established by police systems or by disease registries or health surveys.

If decision makers are choosing among a number of possible interventions, it helps if measures of valued outcomes can be devised that work across interventions so that the outcomes of the different interventions can be compared. That is, it helps if each health outcome can be measured in the same way for all interventions, if each community process outcome can be measured in the same way for all interventions, and so on. If each program has its own measures that are different from those of every other, it becomes more difficult to compare interventions.

Program costs (i.e., the value of the resources used) should also be measured. All resources should be measured, whether or not they are purchased. The time donated by community volunteers is a major example of a resource used in community-based programs, often in large quantities, that is rarely counted as a cost when choices are evaluated. The true cost of volunteer time, as with any other resource, is that if it is used for one program, it is not then available for other programs or for other activities that the volunteer might engage in. So the program chosen should be a worthwhile use of that time, preferably the best use.
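To make the point about volunteer time concrete, here is a minimal Python sketch that counts donated hours as a resource cost alongside purchased resources. The hour count, the purchased-cost figure, and the shadow wage used to value one donated hour (for example, a local wage rate for comparable work) are all hypothetical assumptions; choosing the shadow wage is itself a judgment.

```python
# Hypothetical inputs for illustration only; not figures from this report.
VOLUNTEER_HOURS_PER_YEAR = 2_000   # donated hours across all volunteers
SHADOW_WAGE_PER_HOUR = 25.0        # assumed value of one donated hour ($)
PURCHASED_COSTS = 40_000.0         # staff, supplies, and space actually paid for ($)


def program_cost(purchased: float, volunteer_hours: float, shadow_wage: float) -> dict:
    """Return purchased, donated, and total resource costs for one year."""
    donated = volunteer_hours * shadow_wage  # opportunity cost of donated time
    return {"purchased": purchased, "donated": donated, "total": purchased + donated}


costs = program_cost(PURCHASED_COSTS, VOLUNTEER_HOURS_PER_YEAR, SHADOW_WAGE_PER_HOUR)
print(costs)
```

Leaving out the donated component would make this hypothetical program look a little under half as costly as it really is, which is the distortion the paragraph above warns about.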

A Framework Includes a List of Sources of Evidence and a Standard for Admissible Evidence

Every assessment of value is built on a model of causation, i.e., a theory of how the world works. Those models of causation can be built up from many different sources and types of evidence. Some frameworks make explicit the sources of evidence that are taken into consideration and the standards that each source of evidence must meet. The Community Preventive Services Guide, for example, includes a clear description of the sources from which the task force draws evidence and the standards that are used to grade the quality of different pieces of evidence (Carande-Kulis et al., 2000). Other frameworks, such as benefit–cost analysis, have clear criteria for what is to be counted and what is not, but the execution of the analysis must still rely on the analyst’s judgment (Nash et al., 1975; Weisbrod, 1983).

A Framework Includes a Method for Weighting and Summarizing

Ultimately, after the users of a framework have listed all the outcomes they value and have measured program outcomes in those terms, they must choose among the programs. That can be hard to do when the valued outcomes take different forms and the strength and weight of evidence supporting them vary across outcomes. Intervention A may provide safer streets and community participation among parents and children; intervention B may provide meals and social interaction for isolated elderly people; interventions C, D, and E may offer still other things of value. Which programs are most valuable? Which should be done if not all can be? Which should be done first?

If there are only a few interventions and only a few outcomes, listing the contributions of each intervention to each outcome can be sufficient to allow people to choose among them. But the more interventions and the more outcomes, the more difficult the choice becomes. In that case people can end up focusing on one outcome, such as health, and ignoring the others, simply because it becomes too difficult to know how to take them all into account. An overall summary measure can help prevent this narrowing of focus, although this is not always possible.
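A minimal Python sketch of the two steps just described: first a table of each intervention’s contribution to each valued outcome, measured in the same units for every intervention, then one possible summary measure, a weighted sum. All outcome figures and weights are hypothetical. The weights stand for the value decision makers place on one unit of each outcome; setting them is a value judgment, not a calculation, and a weighted sum is only one way to build a summary measure.

```python
# Hypothetical outcomes per intervention, measured in the same units for all
# interventions so they can be compared (illustrative numbers, not data).
outcomes = {
    "A: safer streets": {"qalys": 12.0, "event_attendees": 600, "crimes_averted": 40},
    "B: meals for elderly": {"qalys": 20.0, "event_attendees": 300, "crimes_averted": 0},
}

# Hypothetical weights: value of one unit of each outcome on a common scale.
weights = {"qalys": 1.0, "event_attendees": 0.01, "crimes_averted": 0.1}


def summary_score(measures: dict, weights: dict) -> float:
    """Weighted sum of one intervention's outcomes (one possible summary measure)."""
    return sum(weights[name] * value for name, value in measures.items())


# The outcome table, one row per intervention.
for name, measures in outcomes.items():
    print(name, measures)

# Interventions ranked by summary score, highest first.
ranked = sorted(outcomes, key=lambda n: summary_score(outcomes[n], weights), reverse=True)
for name in ranked:
    print(f"{name}: {summary_score(outcomes[name], weights):.1f}")
```

With these weights B scores slightly above A; a modestly larger weight on crimes averted would reverse the order. That sensitivity is why the weights should be stated openly rather than left implicit.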

How Do We Know if a Framework Works?

A framework works if it supports an intelligent decision-making process, that is, a process that clarifies trade-offs, reminds decision makers of the things that are important, and helps decision makers explore and work through, rather than gloss over, disagreements. Of course, a particular decision may seem intelligent to one person, while it seems an awful mistake to another. Disagreements on ultimate decisions are inevitable. One sign that a framework works well is if it is perceived as valid and useful by people who disagree vehemently about what decision should be made.

The committee has identified eight existing frameworks that have been used to assess the value of community-based prevention:

1. benefit–cost analysis,

2. cost-effectiveness analysis,

3. Congressional Budget Office (CBO) scoring,

4. the PRECEDE–PROCEED framework,

5. the RE-AIM framework,

6. the Health Impact Assessment (HIA) framework,

7. the Community Preventive Services Task Force (CPSTF) guidelines, and

8. the Canadian Health Services Research Foundation (Lomas) Model.

Three of the existing frameworks emerged from the field of economics (benefit–cost analysis, cost-effectiveness analysis, and CBO scoring), while the rest have their roots in the field of public health planning and promotion (PRECEDE–PROCEED, RE-AIM, HIA, CPSTF, and the Lomas model).

The committee’s task in analyzing these frameworks is to identify whether they work well for assessing the value of community-based prevention and, if not, why not. The following sections discuss each framework in terms of its decision-making context, its list of valued outcomes, its criteria for admissible evidence, its method for weighting and summarizing, and its limitations.

Benefit–Cost Analysis

Unless otherwise noted, information in the following section was obtained from Carlson et al. (2011) and Weisbrod (1983). The benefit–cost analysis (BCA) framework grew out of the belief that society’s problems can be solved systematically through the rigorous application of quantitative scientific principles. BCA was originally developed to guide decisions regarding large-scale government infrastructure projects, such as dam building and flood control projects, and it is geared toward deciding whether a major capital investment is worthwhile (Subcommittee on Evaluation Standards, 1958). (See Box 4-1 for a thorough description of the BCA methodology.)

Decision-Making Context

In the United States BCA has been used mainly by executive branch agencies of the federal government to guide decisions on whether or not to implement infrastructure projects, job training programs, and other social programs. It has also been applied in regulatory impact analyses to decisions such as setting limits on toxins in drinking water.

List of Valued Outcomes

In principle BCA takes a societal perspective, meaning that it takes into account all the things that all the individuals in society care about. This completeness of perspective is BCA’s core strength. Ideally the list of valued outcomes includes market-traded goods and services that can be easily expressed in dollars as well as things like fairness and risk avoidance that are either difficult or impossible to express in dollars. In practice analysts find it difficult to document and quantify the value of things like fairness. Generally, a sound BCA will describe the fairness effects in a separate section, sometimes under the label of “intangibles.”

Criteria for Admissible Evidence

In general, it is up to the analyst to decide what evidence to include in measuring the quantitative effects of an intervention and the value of those effects. The evidence that is most clearly admissible is high-quality published quantitative evidence on the effect of the policy on some outcome of relevance. Price data for market-traded goods and services that are program outcomes are also admissible. A difficult problem occurs when an important outcome that is affected by the intervention is not traded in markets and, therefore, has no price. In this case, the analyst must rely on systematic reviews of published academic literature on such topics as willingness to pay as well as on expert opinion and on his or her personal judgments. That flexible approach is essential given the comprehensive list of valued outcomes in BCA and the very wide scope of projects to which BCA can be applied. As with some other frameworks for assessing value, this flexibility inevitably requires users to apply judgments regarding the reliability of estimates of benefits and costs.

Weighting and Summarizing

BCA uses a single metric, dollars (or other currency), to summarize the good and bad effects of an intervention and the resources used to undertake the intervention. Dollar values are assigned in a straightforward way to market-traded goods and services, but they are also assigned to things, such as extended life expectancy, that are not traded directly in markets. The concept of “willingness to pay” is used to provide a dollar value for such things as the chance of a better health outcome due to a proposed intervention. All future benefits of a project are summarized in present-value dollars, as are all future costs. The present value of a cost or benefit that occurs in the future is obtained by discounting it to the present using a discount factor.
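The discounting step can be sketched in a few lines of Python. The program below is hypothetical (a $100 cost now and $30 of benefits in each of the next four years), and the 3 percent annual rate is an assumption chosen only for illustration; year 0 is undiscounted. The sketch reports both common BCA summaries: net present value (present value of benefits minus present value of costs) and the benefit–cost ratio.

```python
def present_value(flows: list[float], rate: float) -> float:
    """Discount a stream of annual amounts (year 0 first) to present value."""
    return sum(amount / (1 + rate) ** t for t, amount in enumerate(flows))


# Hypothetical program: $100 cost now, $30 of benefits in each of years 1-4.
benefits = [0.0, 30.0, 30.0, 30.0, 30.0]
costs = [100.0, 0.0, 0.0, 0.0, 0.0]
rate = 0.03  # assumed annual discount rate

pv_b = present_value(benefits, rate)
pv_c = present_value(costs, rate)
print(f"PV benefits = {pv_b:.2f}, PV costs = {pv_c:.2f}")
print(f"NPV = {pv_b - pv_c:.2f}, B/C = {pv_b / pv_c:.3f}")
```

Undiscounted, the benefits would total $120; discounting reduces them to about $111.51, so the net present value is roughly $11.51 rather than $20.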

The BCA technique uses methods for measuring and describing the degree of uncertainty in the assessment of value. One prominent technique is called Monte Carlo simulation analysis, in which the value of an intervention is assessed repeatedly, each time using assumptions that are drawn randomly (hence “Monte Carlo”) from a range of possible values defined by the analyst. This enables the user to see the range of possible outcomes under various combinations of assumptions as well as the likelihood that they will be realized (Savvides, 1994).

BOX 4-1

Benefit–Cost Analysis: Theoretical Basis and Practical Considerations

Benefit–cost analysts seek to identify both private and public decisions in which the benefits (or outputs) are greater than the costs (or inputs).

Market prices should be used to measure the value of both benefits and costs, except where market prices do not exist or when there is a good reason to believe that market prices do not accurately reflect true value. Among the reasons for questioning the appropriateness of observed prices are the existence of monopoly power in particular sectors, economies of scale, a serious lack of information, or external effects (or spillovers) not reflected in market values.

Where the prices of inputs or outputs do not exist, analysts strive to construct values that reflect people’s valuation of inputs and outcomes. (These are known as shadow values or shadow prices.)

Although economic efficiency should be regarded as the primary objective, when decisions have important equity (and other) effects, these should be recorded and, if possible, entered into the benefit–cost analysis itself. An alternative way of describing the efficiency criterion is to state that projects should be designed to maximize total (or per capita) national economic welfare, which is often assumed to be equivalent to national income. To do this the project should maximize the net benefits that it generates.

Benefits (or costs) that will not occur until sometime into the future should be valued (weighted) as less important (per dollar) than benefits or costs expected to be incurred immediately because (and only if) this reflects how people feel about future benefits and costs relative to present ones. The process employed for making benefits and costs that occur at different points in time commensurable is called discounting and requires the use of a discount (or interest) rate to reflect the diminished value today of benefits or costs not expected to occur until some future time period. For projects that generate a stream of future benefits or costs, the benefit–cost ratio (B/C) is

$$\frac{B}{C} = \frac{\text{Total discounted value of future expected benefits}}{\text{Total discounted value of future expected costs}} = \frac{PV_B}{PV_C},$$

and the net present value (NPV) of the program is

$$\text{NPV} = PV_B - PV_C,$$

where

$$PV_B = \sum_{t=0}^{T} \frac{B_t}{(1+r)^t}, \qquad PV_C = \sum_{t=0}^{T} \frac{C_t}{(1+r)^t},$$

with $B_t$ and $C_t$ the expected benefits and costs in year $t$, $r$ the discount rate, and $T$ the final year of the project.

An intervention has externality, or spillover, effects if it affects individuals who do not directly participate. Externalities, which can be positive or negative, should be accounted for in a benefit–cost analysis, even if observed market prices are not available for the valuation of the effect.

The concept of “benefit” (which when negative becomes a “cost”) underlies all benefit–cost analyses; a clear understanding of the meaning of “benefit” is the fundamental requirement for undertaking any sound benefit–cost study of public activities. It is useful to think of the benefits of a public intervention as the extent to which the program produces desirable results. What is or is not desirable depends, in turn, on the goals or objectives of the program. That is to say, the first step in the process of project evaluation or policy analysis should be a statement of goals. The second step should be an attempt to state these goals in operationally measurable form. The third step in the analysis is the development of a set of weights that reflect judgments about the comparative importance of progress toward each of the goals (the goal trade-offs).

Valued outcomes in BCA can be grouped into two principal categories: (1) those related to economic allocative efficiency and (2) those related to distributional equity.

Allocative efficiency as an economic goal reflects the fact that it is sometimes possible to reallocate resources, perhaps increasing or decreasing the amount of resources used in any expenditure program, in ways that will bring about an increase in the net value of output produced by those resources. For such reallocations the increase in the value of the output of the good whose production is expanded must be greater than the decrease in the value of the output of the good whose production is decreased. Insofar as benefit–cost analysis is directed at allocative efficiency, it can be viewed as an attempt to replicate for the public sector the decisions that would be made if private markets worked satisfactorily (Haveman and Weisbrod, 1977). However, allocative efficiency ignores considerations of which particular people are made better off or worse off. The issue of how alternative resource allocations affect the well-being of particular people is captured by the distributional (or equity) goals. These goals can and should be incorporated into benefit–cost analysis, but this must be done explicitly. One way of examining distributional effects is through sensitivity analysis, which varies the values of certain inputs to determine their influence on output.

More Details and Examples

Among the many published BCA studies, two of the most comprehensive are Weisbrod’s (1983) analysis of non-institutional care for the mentally ill and Carlson et al.’s (2011) analysis of Section 8 housing subsidies.
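The Monte Carlo simulation analysis described in this section can be sketched in Python. This is a minimal illustration under hypothetical assumptions: a program with a $100 up-front cost and equal annual benefits for four years, where the annual benefit is drawn uniformly from $20 to $40 and the discount rate from 1 to 7 percent. Real analyses choose input distributions from the evidence and often model many more uncertain parameters.

```python
import random
import statistics


def npv(annual_benefit: float, upfront_cost: float, years: int, rate: float) -> float:
    """NPV of a one-time cost in year 0 and equal annual benefits in years 1..years."""
    pv_benefits = sum(annual_benefit / (1 + rate) ** t for t in range(1, years + 1))
    return pv_benefits - upfront_cost


def simulate_npv(draws: int = 10_000, seed: int = 1) -> list[float]:
    """Recompute NPV many times, drawing each uncertain assumption at random."""
    rng = random.Random(seed)  # fixed seed makes the run reproducible
    results = []
    for _ in range(draws):
        annual_benefit = rng.uniform(20.0, 40.0)  # hypothetical range ($)
        rate = rng.uniform(0.01, 0.07)            # hypothetical discount-rate range
        results.append(npv(annual_benefit, upfront_cost=100.0, years=4, rate=rate))
    return results


sims = simulate_npv()
cuts = statistics.quantiles(sims, n=20)  # 19 cut points: 5th, 10th, ..., 95th percentiles
share_positive = sum(v > 0 for v in sims) / len(sims)
print(f"mean NPV: {statistics.mean(sims):.1f}")
print(f"middle 90% of draws: {cuts[0]:.1f} to {cuts[-1]:.1f}")
print(f"share of draws with NPV > 0: {share_positive:.2f}")
```

The output gives the user both a range of plausible values and an estimate of how likely the program is to come out ahead, which a single point estimate cannot provide.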

Shortcomings of BCA When Applied to Assessing the Value of Community-Based Prevention

BCA requires a monetary assessment of how a community trades one outcome for another. However, some outcomes, such as social cohesion or civic participation, are not readily monetized, and there are other outcomes, such as years of human life, that can be (and have been) monetized, but for which monetization is controversial. An example of the latter can be seen in the value of a year of human life assigned in the regulatory impact analyses conducted by the Environmental Protection Agency (IOM, 2006).

Cost-Effectiveness Analysis

Cost-effectiveness analysis (CEA) begins with many of the core elements of the BCA framework, but it is tailored to the assessment of medical and health interventions. The key difference between CEA and BCA is that CEA focuses on health as the valued outcome and measures health by methods that avoid the use of dollars (Donaldson, 1998; Gold et al., 1996). The measures of health often used in CEA include cases of disease, life-years, and health-adjusted life expectancy. The core question that CEA answers is how much it costs to produce an additional unit of health using one particular intervention versus a second intervention with which it is compared (Bleichrodt and Quiggin, 1999; Donaldson, 1998; Drummond et al., 2005; Gold et al., 1996).

The answer to this question is given by what is termed the “cost-effectiveness ratio.” An intervention’s cost-effectiveness ratio is, in effect, the dollars spent for an additional unit of health. The health measure that is the standard of good practice is quality-adjusted life years (QALYs), so the cost-effectiveness of an intervention is expressed as the additional cost to achieve an additional QALY (Gold et al., 1996). A cost-effectiveness ratio, sometimes called an incremental cost-effectiveness ratio, is not a fixed or single number associated with an intervention. It is instead defined with reference to some alternative intervention, and thus its value depends on what the alternative is. For example, vaccinating children once in early childhood may be compared with not vaccinating them but instead treating the disease when it occurs. Or providing a single vaccination could be compared with providing multiple vaccinations over time, perhaps including a booster in adulthood. The cost-effectiveness of vaccinating once will differ depending on which comparison is chosen.
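The dependence on the comparator can be shown with a small Python sketch of the incremental ratio, (cost of A − cost of B) / (QALYs of A − QALYs of B). The discounted totals per 1,000 children for the three vaccination strategies are hypothetical numbers invented for illustration.

```python
def icer(cost_a: float, qalys_a: float, cost_b: float, qalys_b: float) -> float:
    """Incremental cost per QALY of option A relative to comparator B."""
    return (cost_a - cost_b) / (qalys_a - qalys_b)


# Hypothetical discounted totals per 1,000 children (illustrative only).
no_vaccination = {"cost": 300_000.0, "qalys": 24_900.0}  # treat disease when it occurs
single_dose = {"cost": 400_000.0, "qalys": 24_950.0}
multiple_doses = {"cost": 450_000.0, "qalys": 24_960.0}  # includes an adult booster

vs_none = icer(single_dose["cost"], single_dose["qalys"],
               no_vaccination["cost"], no_vaccination["qalys"])
vs_multi = icer(single_dose["cost"], single_dose["qalys"],
                multiple_doses["cost"], multiple_doses["qalys"])
print(f"single dose vs. no vaccination: ${vs_none:,.0f} per QALY gained")
print(f"single dose vs. multiple doses: ${vs_multi:,.0f} saved per QALY forgone")
```

The same single-dose strategy yields $2,000 per QALY gained against no vaccination but $5,000 saved per QALY forgone against the multi-dose schedule, which is cheaper but less effective. The sign convention therefore has to be read together with the comparator.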

Decision-Making Context

CEA is geared toward maximizing the health improvements achieved among a target population and to analyzing the resources or costs likely to be required to achieve those health improvements. An example of this sort of decision-making context is provided by the Department of Veterans Affairs, which operates a health system on a fixed budget with the goal of improving the health of its enrolled population. CEA can help the administrator of that program prioritize technology adoption and choose the treatment guidelines that produce health improvements most efficiently. Cost-effectiveness analysis is not used explicitly in the development of coverage policy and practice guidelines in the United States.

In other countries cost-effectiveness is often explicitly used to help set standards of care and coverage. The UK National Institute for Health and Clinical Excellence (NICE) uses cost-effectiveness analysis as one element in deciding whether the National Health Service (NHS) will pay for new technologies; its purpose is to help ensure that everyone in the country has access to proven medical care (Steinbrook, 2008). NICE sometimes uses cost-effectiveness analysis to negotiate prices with manufacturers who can improve the cost-effectiveness of their product by reducing its price (Kanis et al., 2008; Steinbrook, 2008). Australia’s Pharmaceutical Benefits Advisory Committee (PBAC) uses cost-effectiveness analysis to develop guidance for the Minister of Health on the medications that should be covered by the national pharmacy benefits plan (Department of Health and Aging, 2007; Henry et al., 2005).

List of Valued Outcomes

Health is the primary valued outcome in CEA. CEA takes into account the health improvements and adverse effects from the intervention that occur over a specific time horizon, often the lifetime of patients, to calculate the net health benefits, which are defined as improvements minus adverse effects. As noted, health improvements include both longer life and better quality of life. CEA calculates the resources used to produce the health improvements separately. Resources include market-traded items, such as physician labor, hospital care, and pharmaceuticals, as well as non-market-traded items, such as patients’ time and the time of unpaid caregivers. The health improvements and costs of an intervention are then compared with those of an alternative (for example, vaccination versus waiting and treating illness, or annual screening versus less frequent screening) in order to arrive at the net addition to health and the net addition to costs (or savings) of the intervention compared to the alternative. The cost-effectiveness ratio is the net costs divided by the net addition to health (Gold et al., 1996; Weinstein and Stason, 1977).

Criteria for Admissible Evidence

In general the modeling team uses the best available evidence, with preference given to published peer-reviewed literature (Gold et al., 1996). When published data are not available, the team is expected to use judgment and expert opinion to fill in key parameters. In the United States the standards for admissible evidence have moved rapidly in the direction of requiring systematic reviews of the literature, published and unpublished (Harris et al., 2001; U.S. Preventive Services Task Force, 2012). If many studies are available in the literature, a meta-analysis is used to summarize them and arrive at the best estimate of the effectiveness of an intervention. The move toward systematic reviews has followed the trend in other countries set by the Cochrane Collaboration, headquartered in the United Kingdom. The Cochrane Collaboration draws on experts in more than 100 countries to prepare and make available on its website systematic reviews of the medical, public health, and related applied sciences literature on thousands of health topics (Cochrane Collaboration, 2012). The standards it has developed for such reviews are increasingly used around the world.
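As an illustration of how a meta-analysis combines studies, here is a minimal Python sketch of one standard approach, fixed-effect inverse-variance pooling, in which each study is weighted by the inverse of its squared standard error. The three effect estimates are hypothetical. This is not presented as the method any particular review group uses; when studies differ substantially, random-effects models are often preferred.

```python
import math


def fixed_effect_pool(estimates: list[float], std_errors: list[float]) -> tuple[float, float]:
    """Fixed-effect (inverse-variance) pooled estimate and its standard error."""
    weights = [1.0 / se**2 for se in std_errors]  # more precise studies get more weight
    pooled = sum(w * est for w, est in zip(weights, estimates)) / sum(weights)
    pooled_se = math.sqrt(1.0 / sum(weights))
    return pooled, pooled_se


# Hypothetical effect estimates (e.g., reductions in a risk factor) from three studies.
effects = [0.10, 0.05, 0.08]
ses = [0.02, 0.04, 0.02]
pooled, pooled_se = fixed_effect_pool(effects, ses)
low, high = pooled - 1.96 * pooled_se, pooled + 1.96 * pooled_se
print(f"pooled effect {pooled:.3f} (95% CI {low:.3f} to {high:.3f})")
```

The imprecise second study (standard error 0.04) receives one-quarter the weight of each of the others, so the pooled estimate of about 0.086 sits close to the two more precise studies.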

Weighting and Summarizing

Changes in health status are usually summarized using QALYs, which reflect both length of a life and its quality (e.g., a year of perfect health counts as 1.0 QALY, while years of less-than-perfect health are given scores between 0 and 1 depending on the severity of illness [Gold et al., 1996]). The health effects of an intervention are given by the sum of all the changes in QALYs, good and bad, compared to an alternative intervention. Here, as with BCA, sensitivity analysis can be instructive as to distributional aspects of the proposed intervention. It can be difficult, however, to build equity considerations into the analysis (IOM, 2006). Future changes are discounted so that QALYs are expressed in present value. Resource use—that is, costs—is also summarized in discounted (present-value) dollars. The incremental cost-effectiveness ratio (ICER) compares the discounted QALYs and costs of the intervention with those of alternative interventions, as described, and might be thought of as a price tag to use to prioritize interventions: How much would it cost to produce one healthy year using this intervention rather than the one with which it is compared?
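The QALY arithmetic just described can be sketched in Python: each year’s quality weight is discounted to present value and summed, and the incremental cost is divided by the discounted QALY gain. The health-state weights (0.7 without the intervention, 0.9 with it, over five years), the $2,000 incremental cost, and the 3 percent discount rate are all hypothetical assumptions; year 0 is undiscounted.

```python
def discounted_qalys(quality_weights: list[float], rate: float) -> float:
    """Present value of a QALY stream: one quality weight per year, year 0 undiscounted."""
    return sum(q / (1 + rate) ** t for t, q in enumerate(quality_weights))


RATE = 0.03                       # assumed annual discount rate
without_intervention = [0.7] * 5  # hypothetical quality weights, years 0-4
with_intervention = [0.9] * 5
incremental_cost = 2000.0         # hypothetical discounted extra cost ($)

qaly_gain = (discounted_qalys(with_intervention, RATE)
             - discounted_qalys(without_intervention, RATE))
icer = incremental_cost / qaly_gain
print(f"QALYs gained: {qaly_gain:.3f}; ICER: ${icer:,.0f} per QALY")
```

Without discounting the gain would be exactly 1.0 QALY (0.2 × 5 years); discounting lowers it to about 0.943, which raises the ICER from $2,000 to roughly $2,120 per QALY.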

More Details and Examples

Hundreds of CEAs are published each year by medical and health journals and by advisory groups such as NICE and the Australian PBAC. The authoritative description of CEA methodology is by Gold et al. (1996); Appendixes B and C of that report describe two examples of CEA—the cost-effectiveness of interventions to prevent neural tube defects (Appendix B) and the cost-effectiveness of interventions to reduce cholesterol in adults (Appendix C).

Shortcomings of CEA When Applied to Assessing the Value of Community-Based Prevention

The primary shortcoming of CEA as a method for valuing community-based prevention is that it focuses solely on the aggregation of individual health outcomes. It does not include—and has not developed methodologies to measure—the effects of an intervention on community well-being or community process and does not usually take into consideration the differences among communities (Birch and Gafni, 2003).

Congressional Budget Office Scoring

The Congressional Budget Office (CBO) is a nonpartisan congressional support agency that analyzes existing federal programs and proposed legislation to provide budget, economic, and other information for Congress. The agency staff is made up of economists and research staff who develop cost estimates, reports, and other products. Cost estimates, often called CBO “scores,” are projections of the federal budget impact of a piece of legislation being considered by the Congress.

Some of the legislation that CBO scores relates to prevention. Often the scores have been criticized for failing to fully recognize the benefits of prevention (Woolf et al., 2009). But CBO’s framework for assessing value is designed to aid in the federal budget process—it is not designed to provide a comprehensive assessment of the value of prevention activities. It is, therefore, unsurprising that advocates for prevention activities feel ill-served by CBO’s assessments.

Decision-Making Context

CBO scores play a formal role in the federal budget process. Because the scores are designed to help the House and Senate budget committees ensure that legislation fits within a larger budget framework, CBO details the budget impact of proposed policies. A consistent framework for presenting information—defined in law1 and through formal agreements between the House and Senate budget committees—helps lawmakers consider the absolute and relative budget costs of different policies and programs, from health to income security and defense.

____________________

1 Budget Enforcement Act of 1990. Public Law 101-508, 101st Cong., 2nd sess. (November 5, 1990).

List of Valued Outcomes

CBO scoring emphasizes one outcome: changes in the federal deficit over the next 10 years. To measure that outcome, cost estimates detail projected changes in federal revenues and federal outlays. Written cost estimates sometimes include information about health impact and other outcomes of interest (for example, expected changes in smoking rates from amendments to the Food, Drug, and Cosmetic Act or expected changes in insurance coverage under the Affordable Care Act), but discussion of these non-budget outcomes is limited. CBO scores must also include a statement indicating whether proposed legislation would impose an “unfunded mandate” on either the private sector or on state and local governments.

Criteria for Admissible Evidence

In general, modelers at CBO use the best available evidence, including published academic literature, expert opinion, and the modeler’s judgment (Kling, 2011). CBO cannot decline to produce an estimate due to lack of evidence, so casting a broad net for evidence is essential.

Weighting and Summarizing

The primary measure for CBO scoring—the estimated 10-year change in the federal deficit—is presented in total and is also broken down to detail various submeasures, which include changes in revenues, changes in outlays, annual changes in revenues and outlays, changes in outlays for mandatory programs (such as Medicare), and changes in outlays for discretionary programs (such as CDC). Details about the submeasures fulfill the needs of the federal budget process, where revenue, spending, and other budget categories must be tracked separately.
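The arithmetic behind the headline number can be illustrated with a minimal sketch. It assumes a simplified score that tracks only revenues plus mandatory and discretionary outlays for each year of the 10-year window; the class and function names, the dollar units, and the figures are hypothetical, and actual CBO estimates track more budget categories than this. The one fixed relationship is that added outlays increase the deficit while added revenues reduce it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class YearChange:
    """Hypothetical projected change in one fiscal year ($ billions)."""
    year: int
    revenue: float        # change in federal revenues
    mandatory: float      # change in mandatory outlays (e.g., Medicare)
    discretionary: float  # change in discretionary outlays (e.g., CDC)

    @property
    def outlays(self) -> float:
        return self.mandatory + self.discretionary

    @property
    def deficit(self) -> float:
        # Added outlays increase the deficit; added revenues reduce it.
        return self.outlays - self.revenue


def ten_year_score(changes: list[YearChange]) -> dict[str, float]:
    """Total the annual changes over a 10-year budget window."""
    if len(changes) != 10:
        raise ValueError("expected exactly 10 years of projected changes")
    return {
        "revenues": sum(c.revenue for c in changes),
        "outlays": sum(c.outlays for c in changes),
        "mandatory_outlays": sum(c.mandatory for c in changes),
        "discretionary_outlays": sum(c.discretionary for c in changes),
        "deficit": sum(c.deficit for c in changes),
    }


# Illustrative bill: $10B/yr in discretionary grants, $2B/yr lower
# mandatory spending, and $5B/yr in new revenue.
bill = [YearChange(2013 + i, revenue=5.0, mandatory=-2.0, discretionary=10.0)
        for i in range(10)]
print(ten_year_score(bill)["deficit"])  # 30.0
```

Keeping the submeasures alongside the total mirrors the budget process's need to track revenues, mandatory spending, and discretionary spending separately, even when the bottom line is a single deficit figure.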

More Details and Examples

For an example of a cost estimate of health-related legislation, see CBO’s cost estimate for the Family Smoking Prevention and Tobacco Control Act (CBO, 2008). For a general discussion of CBO’s use of evidence and approach to scoring, see Kling (2011).

Shortcomings of CBO Scoring When Applied to Assessing the Value of Community-Based Prevention

The most obvious shortcoming of CBO’s framework is its focus, as required by legislation, on changes in the federal deficit. By design, CBO’s framework does not emphasize the inherent value of health improvements or other improvements in well-being from community-based prevention. Policy makers can and do take such non-budget factors into account when making decisions, even when CBO analyses do not address them.

Another shortcoming is the use of the 10-year budget window, which can be too short to pick up some important outcomes, such as long-term health improvements from policies or programs that reduce childhood obesity. Finally, the process of selecting policies for scoring is very limited—formal cost estimates are produced only for pieces of legislation that have been reported out of a committee of Congress.

The PRECEDE–PROCEED Framework

As noted in Chapter 2, the PRECEDE–PROCEED model is a widely applied framework for decision making in the planning and evaluation of health-promotion and disease-prevention programs and services. The ecological approach of that framework has particular relevance to community- or population-level efforts (Green and Kreuter, 2005). PRECEDE is an acronym for predisposing, reinforcing, and enabling constructs in educational/ecological diagnosis and evaluation, while PROCEED similarly refers to other anchors in the model: policy, regulatory, and organizational constructs in educational and ecological development. The combination of these elements in planning and evaluation constitutes a framework that serves both as a logic model and as a procedural model.

Decision-Making Context

This model is used extensively in courses on planning and evaluation in schools of public health and other graduate programs in the health sciences. It is widely applied by program planners, community health advisory boards, and practitioners, and more than 1,000 of those applications have been published (Green, 2012c).

List of Valued Outcomes

In general, the valued outcomes include health and quality of life, but the outcomes used to evaluate a specific program will depend on the program’s goals or, among those, the specific objectives that the community planners select for evaluation. Quality of life can encompass a broad array of community-level indicators of well-being. The framework puts heavy emphasis on the process of planning, developing, and carrying out a health promotion program, and the inclusion of community members and stakeholders in the development of a program is a valued outcome in and of itself (Harvey and O’Brien, 2011; Watson et al., 2001).

Criteria for Admissible Evidence

PRECEDE–PROCEED emphasizes the blending of evidence matched with each of several ecological levels, with theory applicable to each of those levels, with professional experience, and with community perspectives derived from a participatory process of planning and research (Green and Kreuter, 2005).

Weighting and Summarizing

The PRECEDE–PROCEED approach is not designed to produce a weighted summary measure of the expected impact of an intervention, but it does include procedures for weighing the relative importance that professionals and the public attach to each intended outcome.

More Details and Examples

Many of the more than 1,000 published applications, tests, reviews, and reflections relating to PRECEDE–PROCEED have focused on developing, valuing, or evaluating interventions, programs, and policies in specific community settings, such as school health promotion or worksite wellness programs, or have examined specific components such as the mass media component of a program or the policy impact of a new law or regulation (Buta et al., 2011; Green, 2012a). Some are adaptations of more comprehensive applications of previously tested or mandated programs for mass immunization or screening, which makes them community-placed programs. The full application of the model produces a community-based program as it engages the community more actively in setting priorities and blending components of the policies, programs, settings, strategies, and tactics to be included and monitored.

Shortcomings of PRECEDE–PROCEED When Applied to Prospective Valuing of Community-Based Prevention

The participatory orientation of PRECEDE–PROCEED is its strength, but it also limits the framework’s usefulness for a funding agency that wants to assess the value of many different possible interventions. In the PRECEDE–PROCEED framework, the value of an intervention (and indeed the nature of the intervention itself) depends on local conditions and on the input and guidance of the community. This framework does not assess the resources used to conduct an intervention, and so it cannot be used to weigh costs against benefits.

The Reach, Effectiveness, Adoption, Implementation, and Maintenance (RE-AIM) Framework

Decision-Making Context

The RE-AIM framework has been widely used in the community health promotion field to assess whether a specific intervention is likely to have a positive impact on health (Glasgow et al., 1999).

List of Valued Outcomes

The only valued outcome in the RE-AIM framework is an improvement in population health, although that outcome will be specified differently depending on the intervention. RE-AIM does not measure resource use as an outcome.

Criteria for Admissible Evidence

In general, the modeler uses the best available evidence, including expert opinion and the modeler’s judgment.

Weighting and Summarizing

An intervention is summarized on five dimensions: reach (the share of the population reached by the intervention), effectiveness (of those reached, the share who get a positive result), adoption (the share of the population served by organizations that adopt the program), implementation (the share of adopting organizations that implement the program), and maintenance (the share of implementing organizations that maintain the program). These five elements of a program or intervention can be seen as multiplicative, insofar as the impact of each on health depends on the level of the others (Glasgow et al., 1999). Thus, if each has a yield of 0.5 for a particular program, the result will be 0.5 × 0.5 × 0.5 × 0.5 × 0.5 ≈ 0.031, or about 3.1 percent of the eligible population getting a positive result.
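The multiplicative reading of RE-AIM can be expressed as a short function. This is a minimal sketch that assumes, as the paragraph does, that each dimension is a proportion between 0 and 1 and that the overall yield is simply their product; the function name `reaim_yield` is hypothetical and is not part of any published RE-AIM tool.

```python
from math import prod


def reaim_yield(reach: float, effectiveness: float, adoption: float,
                implementation: float, maintenance: float) -> float:
    """Share of the eligible population expected to get a positive result,
    treating the five RE-AIM dimensions as multiplicative proportions."""
    dimensions = {
        "reach": reach,
        "effectiveness": effectiveness,
        "adoption": adoption,
        "implementation": implementation,
        "maintenance": maintenance,
    }
    for name, value in dimensions.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be a proportion between 0 and 1")
    return prod(dimensions.values())


print(f"{reaim_yield(0.5, 0.5, 0.5, 0.5, 0.5):.1%}")  # 3.1%
```

Because the terms multiply, a weak score on any one dimension caps the overall result. In this example, raising reach alone from 0.5 to 1.0 only doubles the yield, to 6.25 percent.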

More Details and Examples

The website http://www.RE-AIM.org offers a growing list of publications that have tested the RE-AIM components or applied RE-AIM in the prospective valuing and retrospective evaluation of programs (NCI, 2012). RE-AIM is sometimes combined with PRECEDE–PROCEED and other planning models in health promotion and clinical programs. One application of RE-AIM that is particularly relevant to valuing interventions is its use in developing guidelines for assessing the external validity, or generalizability, of the results of experimental and other evaluation studies (Green and Glasgow, 2006; Green et al., 2009). These guidelines have been adopted or recommended by several journals as guidelines for authors (Green, 2012b).

Shortcomings of RE-AIM When Applied to Assessing the Value of Community-Based Prevention

RE-AIM does not specify individual or community-level health measures except insofar as they are used as the measure of “effectiveness.” Ideally, calculations of effectiveness in RE-AIM rely on health measures, but they sometimes use health risk behaviors or changes in community health risk conditions (Glasgow et al., 1999).

Health Impact Assessment

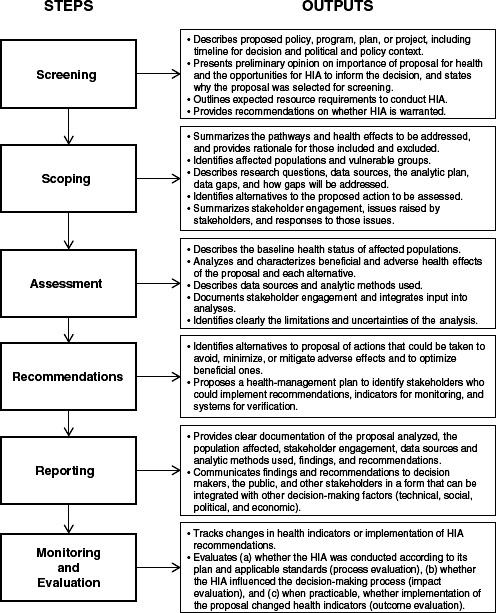

The Health Impact Assessment (HIA) is a framework for assessing the health impacts of interventions, primarily in non-health sectors, for the purpose of mitigating potential harms or enhancing potential benefits. HIAs can be used to examine policies, such as living wage laws, zoning restrictions to reduce sensitive use development around high-use roadways, or agricultural subsidies, as well as to assess projects, such as the development of a subway system or the introduction of a farmers’ market. HIAs are used as a way to introduce health considerations into all policies (i.e., “health in all policies” or HiAP) (IOM, 2011). The steps in conducting an HIA are shown in Figure 4-1.

Decision-Making Context

HIAs are used to provide decision makers, usually in non-health sectors, with information on the health effects of policies and projects. In addition, they provide information about how to modify policies or projects so that health impacts can be improved, that is, the harms reduced or the benefits enhanced. The HIA has been more widely used in Europe than in the United States, but its use has increased in the last 10 years, and the approach has gained visibility through capacity building supported by the Pew Charitable Trusts and the Robert Wood Johnson Foundation (Pew Charitable Trusts, 2009; RWJF, 2009). A recent report, Improving Health in the United States: The Role of Health Impact Assessment (NRC, 2011), provided guidance intended to bring more standardization to HIAs.

FIGURE 4-1 Framework for a Health Impact Assessment, illustrating steps and outputs.

SOURCE: NRC, 2011.

List of Valued Outcomes

HIAs do not have a specific list of health outcomes to evaluate. Rather, a scoping process, including literature reviews and expert consultation, is used to identify the potential health impacts that are of importance to the affected stakeholders. The level of stakeholder participation varies with the type of policy or project. For example, the development of new residential and commercial infrastructure would engage those currently living in the area as well as individuals in the business and other communities. Considerations might include the effects of displacement, the disruption of social networks and cohesion, increased housing cost, changes in access to public transportation, and changes in job prospects as well as the impact of commercial development (London’s Health, 2000; UCLA HIA-CLIC, 2012).

Criteria for Admissible Evidence

In general, the best available information is used to assess the health impact. Evidence may be qualitative or quantitative. The degree of rigor often depends on the nature of the project, the analytic resources available, the urgency of the decision makers, and the time available (Snowdon et al., 2010).

Weighting and Summarizing

Changes in health status are usually displayed in natural health units and are sometimes summarized using QALYs.
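To show how health changes can be rolled up into QALYs (quality-adjusted life years), here is a minimal sketch. It assumes each period of life is assigned a quality weight between 0.0 (death) and 1.0 (full health), so that QALYs equal years multiplied by weight, summed over periods; it ignores discounting and weights below zero. The class and function names, the weights, and the example person are all hypothetical, chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class HealthState:
    years: float   # time spent in this state
    weight: float  # quality weight: 1.0 = full health, 0.0 = death


def qalys(states: list[HealthState]) -> float:
    """Quality-adjusted life years: each period's length times its weight."""
    for s in states:
        if s.years < 0 or not 0.0 <= s.weight <= 1.0:
            raise ValueError("years must be >= 0 and weight in [0, 1]")
    return sum(s.years * s.weight for s in states)


def qalys_gained(with_policy: list[HealthState],
                 without_policy: list[HealthState]) -> float:
    """Difference in QALYs between the policy and no-policy scenarios."""
    return qalys(with_policy) - qalys(without_policy)


# Hypothetical person: 20 years at weight 0.5 without the policy; with it,
# 18 of those years are lived at weight 0.75.
baseline = [HealthState(20, 0.5)]
with_policy = [HealthState(18, 0.75), HealthState(2, 0.5)]
print(qalys_gained(with_policy, baseline))  # 4.5
```

An HIA that reports results in natural units (for example, asthma cases avoided) would need an additional, explicitly stated step to map those changes onto quality weights before a QALY summary like this could be computed.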

More Details and Examples

The 2011 NRC report Improving Health in the United States provides a summary of the purposes, methods, and uses of HIAs.

Shortcomings of HIA When Applied to Assessing the Value of Community-Based Prevention

The primary shortcoming of HIA is that it does not capture the costs associated with an intervention. In addition, because analyses are adapted to the specific interventions and stakeholders, they can vary significantly from one HIA to another (Kemm, 2003).

Community Preventive Services Task Force Guidelines

The Community Preventive Services Task Force (CPSTF) is an independent, nonfederal, volunteer body with members appointed by the Director of the Centers for Disease Control and Prevention (CDC). Those members represent a broad range of research, practice, and policy expertise in community preventive services, public health, health promotion, and disease prevention (Community Guide, 2012d). The CPSTF prioritization process is described in Box 4-2.

Decision-Making Context

The CPSTF recommendations influence decisions of the CDC and of other funders regarding which activities to fund. Local health departments, community groups, and health systems also use the recommendations to decide which interventions to undertake (Community Guide, 2012b).

BOX 4-2

The CPSTF Prioritization Process

The task force prioritization committee is responsible for overseeing the process of prioritizing topics. The process begins with formally requesting stakeholders to suggest high-priority topics. The task force then collects and evaluates information on each potential topic using the following criteria:

- potential magnitude of preventable morbidity, mortality, and health care burden for the U.S. population as a whole based on estimated reach, impact, and feasibility;

- potential to reduce health disparities across varied populations based on age, gender, race/ethnicity, income, disability, setting, context, and other factors;

- degree and immediacy of interest expressed by major Community Guide audiences and constituencies, including public health and health care practitioners, community decision makers, the public, and policy makers;

- alignment with other strategic community prevention initiatives, including, but not limited to, Healthy People 2020, the National Prevention Strategy, the County Health Rankings, and America’s Health Rankings;

- synergies with topically related recommendations from the U.S. Preventive Services Task Force and Advisory Committee on Immunization Practices;

- availability of sufficient research to support informative systematic evidence reviews; and

- the need to balance reviews and recommendations across health topics, risk factors, and types of services, settings, and populations.

SOURCE: Community Guide, 2011.

List of Valued Outcomes

The CPSTF evaluates the effectiveness of interventions (programs and policies). The key valued outcome is the health of a population, assessed as the sum of the health of individuals. The CPSTF guidelines also assess how effective interventions are in different populations (distributional and equity effects), as well as their generalizability and acceptability (Community Guide, 2012c). For interventions with evidence of effectiveness, resource use and cost-effectiveness are assessed where studies are available, although these findings are not used in making the primary recommendation (Hahn et al., 2004).

Criteria for Admissible Evidence

The CPSTF guidelines are based on systematic reviews of the published academic literature. The admissibility of published studies is determined according to the appropriateness of the study design for the intervention being examined and the quality of execution. The criteria recognize that randomized controlled trials may not be the most appropriate study design and that they are often impractical for assessment of community-level interventions. Thus, well-done observational studies are often included (Norris et al., 2002).

Weighting and Summarizing

The findings from the literature review are summarized on two dimensions: Is the evidence strong enough to draw a conclusion (i.e., are there enough studies of suitable design and execution)? And, if so, does the evidence indicate that the intervention improves health outcomes? (Community Guide, 2012a).
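The two-step logic of that summary can be sketched as a simple decision rule. This is a deliberately simplified illustration, not the Task Force’s actual procedure: the `Study` fields, the threshold `min_studies=2`, the rule that qualifying studies must agree, and the three `Finding` labels are all hypothetical assumptions. The real review process weighs additional factors, such as effect size and consistency, in more detail.

```python
from dataclasses import dataclass
from enum import Enum


class Finding(Enum):
    INSUFFICIENT_EVIDENCE = "insufficient evidence"
    RECOMMENDED = "recommended"
    RECOMMENDED_AGAINST = "recommended against"


@dataclass(frozen=True)
class Study:
    suitable_design: bool   # design appropriate for the intervention
    good_execution: bool    # adequate quality of execution
    improves_health: bool   # study found improved health outcomes


def summarize_evidence(studies: list[Study], min_studies: int = 2) -> Finding:
    # Dimension 1: are there enough studies of suitable design and execution?
    qualifying = [s for s in studies if s.suitable_design and s.good_execution]
    if len(qualifying) < min_studies:
        return Finding.INSUFFICIENT_EVIDENCE
    # Dimension 2: does the qualifying evidence show improved health outcomes?
    results = {s.improves_health for s in qualifying}
    if results == {True}:
        return Finding.RECOMMENDED
    if results == {False}:
        return Finding.RECOMMENDED_AGAINST
    # Conflicting results are treated as too inconsistent to conclude.
    return Finding.INSUFFICIENT_EVIDENCE
```

The ordering matters: the sketch never asks whether an intervention works until the first question, whether the evidence base is adequate, has been answered.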

More Details and Examples

For a detailed description of CPSTF’s methodology see Briss et al. (2000) and Carande-Kulis et al. (2000). The CPSTF’s recommendations are available at http://www.thecommunityguide.org/index.html.

Shortcomings of the CPSTF Guidelines When Applied to Assessing the Value of Community-Based Prevention

The CPSTF guidelines have several shortcomings: The list of valued outcomes focuses only on outcomes associated with health; the process for determining which interventions are studied is highly centralized; and conclusions do not consider the trade-off between benefits and costs, although where cost-effectiveness information is available, that information is summarized.

The Canadian Health Services Research Foundation Model

The Canadian Health Services Research Foundation (CHSRF) model—also referred to as the Lomas model after Jonathan Lomas, the first chief executive officer of the CHSRF—provides a conceptual framework for combining evidence of different types to inform health system decision making. It is not a full-fledged framework for assessing value because it does not specify a list of valued outcomes. Instead, it enumerates the different types of evidence used by different decision makers (Lomas et al., 2005).

Decision-Making Context

The model focuses on the use of evidence in real-world decision making and recognizes that a broad range of information, correct or incorrect, is used by decision makers. The primary focus is on how those who formulate guidance use different types of evidence in making their recommendations, setting their targets, and providing guidance (Lomas et al., 2005).

List of Valued Outcomes

The Lomas model does not specify a set of valued outcomes.

Criteria for Admissible Evidence

The model describes different types of evidence, but it does not have specific criteria for what may be included. In general, all types of evidence are admissible, in keeping with the generally accepted practices of the relevant disciplines and decision makers.

The Lomas model distinguishes three types of evidence: scientific evidence, social science scientific evidence, and colloquial evidence. Scientific evidence is considered context-independent; that is, the information is knowable and broadly true. It provides information about whether an intervention can work. The efficacy result of a randomized controlled trial is a typical example. Social science scientific evidence is considered context-dependent; that is, the information is knowable, but the result depends on the context in which it occurs. Such evidence provides information about whether an intervention does work in a given community. Effectiveness studies are an example. The final type of evidence, colloquial evidence, “can usefully be divided into evidence about resources, expert and professional opinion, political judgment, values, habits and traditions, lobbyists and pressure groups, and the particular pragmatics and contingencies of the situation” (Lomas et al., 2005, p. 1).

Weighting and Summarizing

The Lomas model does not prescribe a single approach for weighting or summarizing the various pieces of evidence, and it recognizes the importance of all types of evidence and the lack of a technical solution to making the best choice. This framework incorporates a deliberative process of relevant stakeholders to consider and weigh all the different types of information.

More Details and Examples

See Lomas et al. (2005).

Shortcomings of the Lomas Model When Applied to Assessing the Value of Community-Based Prevention

The Lomas model does not specify a list of valued outcomes or a method for weighting and summarizing an intervention’s impacts. The Lomas model is more descriptive of the decision-making process and is not meant to be prescriptive or normative (Lomas et al., 2005).

VALUING COMMUNITY-BASED PREVENTION: IS A NEW FRAMEWORK NEEDED?

This chapter has identified eight existing frameworks for assessing value. Given the profusion of frameworks, is it really necessary to define another one? The answer depends on how well each of the eight frameworks addresses the special characteristics of community-based prevention described in Chapters 2 and 3.

The committee concluded that a framework for evaluating community preventive programs and policies should meet at least three criteria:

1. The framework should account for benefits and harms in three domains: health, community well-being, and community process (see Chapter 3). Community-based prevention can create value not only through improvements in the health of individuals but also by increasing the investment individuals are willing and able to make in themselves, in their families and neighbors, and in their environment. Furthermore, community-based prevention, by definition, involves decisions among groups of people about how to live in society, how the physical environment should be built, what food should be served in schools, and so on. Thus, the process by which interventions are decided upon and undertaken needs to be treated as a valued outcome. If a community decides to tell people what they can or cannot do or what they should or should not do, the decisions need to have the legitimacy (the added value) that comes from an open and inclusive group decision-making process.

2. The framework should consider the resources used and compare benefits and harms with those resources. To make that comparison, and to compare different interventions with each other, it is essential not only to know that some benefit is likely, but also to be aware of the magnitude of the benefits and of the costs associated with each intervention.

3. The framework needs to be sensitive to differences among communities and to take them into account in valuing community-based prevention. In part this reflects the reality that, because communities vary so much in their characteristics, the causal links between interventions and valued outcomes may be different for different communities.

None of the eight frameworks meets all three criteria—that is, accounts for benefits and harms in all three domains identified in Chapter 3, compares benefits with costs, and is sensitive to differences among communities (see Table 4-1). Only three of the eight are comprehensive in accounting for benefits and can thus assess value in all three domains of health, community well-being, and community process. Only three estimate costs as a matter of course. Four are moderate or high in their attention to differences among communities. Benefit–cost analysis, which is comprehensive in accounting for benefits and always estimates costs, does not routinely consider the unique characteristics of the decision-making context and the community. PRECEDE–PROCEED, which measures health and community process benefits and takes the unique characteristics of the community into account, does not require that costs be estimated.

The committee concluded that a new framework is necessary to guide the assessment of value for community-based prevention, one that measures benefits comprehensively, compares benefits with costs, and takes into account the differences among and within communities. Chapter 5 describes such a new framework.

TABLE 4-1 Eight Frameworks Summarized

| Framework | Includes Comprehensive Set of Valued Outcomes | Compares Benefits with Costs | Accounts for Differences Among Communities |
|---|---|---|---|
| Benefit–cost analysis (BCA) | Yes, can account for all benefits | Yes | Low; can account for context |
| Cost-effectiveness analysis | No, health only | Yes | Low; can account for context |
| Congressional Budget Office scoring | No, only federal spending and revenue | Yes | Low; designed for Congressional budget process |
| PRECEDE–PROCEED framework | No, although it includes both health and community process | No | High; used in communities |
| RE-AIM framework | No, health only | No | High; used by evaluators |
| Health Impact Assessment framework | No, health only | No | High; used in communities |
| Community Preventive Services Task Force guidelines | No, health only | No | Moderate; focus on community |
| Lomas model | No, valued outcomes not specified | No | Moderate; focus on decision-making process |

REFERENCES

Birch, S., and A. Gafni. 2003. Economics and the evaluation of health care programmes: Generalisability of methods and implications for generalisability of results. Health Policy 64(2):207-219.

Bleichrodt, H., and J. Quiggin. 1999. Life-cycle preferences over consumption and health: When is cost-effectiveness analysis equivalent to cost–benefit analysis? Journal of Health Economics 18(6):681-708.

Briss, P. A., S. Zaza, M. Pappaioanou, J. Fielding, L. Wright-De Agüero, B. I. Truman, D. P. Hopkins, P. D. Mullen, R. S. Thompson, and S. H. Woolf. 2000. Developing an evidence-based guide to community preventive services: Methods. The Task Force on Community Preventive Services. American Journal of Preventive Medicine 18(1 Suppl):35-43.

Buta, B., L. Brewer, D. L. Hamlin, M. W. Palmer, J. Bowie, and A. Gielen. 2011. An innovative faith-based healthy eating program: From class assignment to real-world application of PRECEDE–PROCEED. Health Promotion Practice 12(6):867-875.

Carande-Kulis, V. G., M. V. Maciosek, P. A. Briss, S. M. Teutsch, S. Zaza, B. I. Truman, M. L. Messonnier, M. Pappaioanou, J. R. Harris, and J. Fielding. 2000. Methods for systematic reviews of economic evaluations for the Guide to Community Preventive Services. American Journal of Preventive Medicine 18:75-91.

Carlson, D., R. Haveman, T. Kaplan, and B. Wolfe. 2011. The benefits and costs of the Section 8 housing subsidy program: A framework and estimates of first year effects. Journal of Policy Analysis and Management 30(2):233-255.

CBO (Congressional Budget Office). 2008. Family Smoking Prevention and Tobacco Control Act. Washington, DC: Congressional Budget Office.

Cochrane Collaboration. 2012. About us: The Cochrane Collaboration. http://www.cochrane.org/about-us (accessed May 21, 2012).

Community Guide. 2011. Community Preventive Services Task Force first annual report to Congress. Atlanta, GA: Community Preventive Services Task Force.

Community Guide. 2012a. The Community Guide—systematic review methods. http://www.thecommunityguide.org/about/methods.html (accessed May 22, 2012).

Community Guide. 2012b. The Community Preventive Services Task Force. http://www.thecommunityguide.org/about/task-force-members.html (accessed May 22, 2012).

Community Guide. 2012c. The guide to community preventive services. http://www.thecommunityguide.org/index.html (accessed May 22, 2012).

Community Guide. 2012d. What is the Community Preventive Services Task Force? http://www.thecommunityguide.org/about/aboutTF.html (accessed May 22, 2012).

Department of Health and Aging. 2007. Pharmaceutical Benefits Advisory Committee. http://www.health.gov.au/internet/main/publishing.nsf/content/health-pbs-general-listing-committee3.htm (accessed May 21, 2012).

Donaldson, C. 1998. The (near) equivalence of cost-effectiveness and cost-benefit analyses: Fact or fallacy? Pharmacoeconomics 13(4):389-396.

Drummond, M., M. Sculpher, G. Torrance, B. O’Brien, and G. Stoddart. 2005. Methods for the economic evaluation of health care programmes, 3rd ed. New York: Oxford University Press.

Glasgow, R. E., T. M. Vogt, and S. M. Boles. 1999. Evaluating the public health impact of health promotion interventions: The RE-AIM framework. American Journal of Public Health 89(9):1322-1327.

Gold, M., J. Siegel, L. Russell, and M. Weinstein. 1996. Cost-effectiveness in health and medicine. New York: Oxford University Press.

Green, L. W. 2012a. Bibliographies. http://lgreen.net/bibliog.htm (accessed May 22, 2012).

Green, L. W. 2012b. Furthering dissemination and implementation research: The need for more attention to external validity. In Dissemination and implementation research in health: Translating science to practice, edited by R. C. Brownson, G. A. Colditz, and E. K. Proctor. New York: Oxford University Press. Pp. 305-326.

Green, L. W. 2012c. PRECEDE applications. http://lgreen.net/precede%20apps/preapps-NEW.htm (accessed May 22, 2012).

Green, L. W., and R. E. Glasgow. 2006. Evaluating the relevance, generalization, and applicability of research. Evaluation and the Health Professions 29(1):126-153.

Green, L. W., and M. W. Kreuter. 2005. Health program planning: An educational and ecological approach, 4th ed. New York: McGraw-Hill.

Green, L. W., R. E. Glasgow, D. Atkins, and K. Stange. 2009. Making evidence from research more relevant, useful, and actionable in policy, program planning, and practice: Slips “twixt cup and lip”. American Journal of Preventive Medicine 37(6):S187-S191.

Hahn, R. A., J. Lowy, O. Bilukha, S. Snyder, P. Briss, A. Crosby, and P. Corso. 2004. Therapeutic foster care for the prevention of violence. Morbidity and Mortality Weekly Report 53(RR10):1-8.

Harris, R. P., M. Helfand, S. H. Woolf, K. N. Lohr, C. D. Mulrow, S. M. Teutsch, and D. Atkins. 2001. Current methods of the U.S. Preventive Services Task Force: A review of the process. American Journal of Preventive Medicine 20(3 Suppl):21-35.

Harvey, I., and M. O’Brien. 2011. Addressing health disparities through patient education: The development of culturally tailored health education materials at Puentes de Salud. Journal of Community Health Nursing 28(4):181-189.

Haveman, R., and B. A. Weisbrod. 1977. Public expenditure and policy analysis: An overview. In Public expenditure and policy analysis, edited by R. Haveman and J. Margolis. Skokie, IL: Rand McNally College. Pp. 1-24.

Henry, D. A., S. R. Hill, and A. Harris. 2005. Drug prices and value for money. JAMA 294(20):2630-2632.

IOM (Institute of Medicine). 2006. Valuing health for regulatory cost-effectiveness analysis. Washington, DC: The National Academies Press.

IOM. 2011. For the public’s health: Revitalizing law and policy to meet new challenges. Washington, DC: The National Academies Press.

Kanis, J. A., J. Adams, F. Borgström, C. Cooper, B. Jönsson, D. Preedy, P. Selby, and J. Compston. 2008. The cost-effectiveness of alendronate in the management of osteoporosis. Bone 42(1):4-15.

Kemm, J. 2003. Perspectives on health impact assessment. Bulletin of the World Health Organization 81(6):387-387.

Kling, J. R. 2011. CBO’s use of evidence in analysis of budget and economic policies. http://www.cbo.gov/sites/default/files/cbofiles/attachments/11-03-APPAM-Presentation_0.pdf (accessed May 21, 2012).

Lomas, J., T. Culyer, C. McCutcheon, L. McAuley, and S. Law. 2005. Conceptualizing and combining evidence for health system guidance. Ottawa, Ontario: Canadian Health Services Research Foundation.

London’s Health. 2000. A short guide to health impact assessment: Informing healthy decisions. London: NHS Executive London.

Nash, C., D. Pearce, and J. Stanley. 1975. An evaluation of cost-benefit analysis criteria. Scottish Journal of Political Economy 22(2):121-134.

NCI (National Cancer Institute). 2012. RE-AIM publications. http://publications.cancer.gov/dipubs/reaim.aspx (accessed May 22, 2012).

Norris, S. L., P. J. Nichols, C. J. Caspersen, R. E. Glasgow, M. M. Engelgau, L. Jack, S. R. Snyder, V. G. Carande-Kulis, G. Isham, and S. Garfield. 2002. Increasing diabetes self-management education in community settings: A systematic review. American Journal of Preventive Medicine 22(4):39-66.

NRC (National Research Council). 2011. Improving health in the United States: The role of health impact assessment. Washington, DC: The National Academies Press.

Pew Charitable Trusts. 2009. Overview: Health impact project. http://www.pewtrusts.org/news_room_detail.aspx?id=55601 (accessed May 22, 2012).

RWJF (Robert Wood Johnson Foundation). 2009. RWJF, the Pew Charitable Trusts launch health impact project. http://www.rwjf.org/publichealth/product.jsp?id=50088 (accessed May 22, 2012).

Savvides, S. 1994. Risk analysis in investment appraisal. Project Appraisal Journal 9(1):3-18.

Snowdon, W., J.-L. Potter, B. Swinburn, J. Schultz, and M. Lawrence. 2010. Prioritizing policy interventions to improve diets? Will it work, can it happen, will it do harm? Health Promotion International 25(1):123-133.

Steinbrook, R. 2008. Saying no isn’t nice: The travails of Britain’s National Institute for Health and Clinical Excellence. New England Journal of Medicine 359(19):1977-1981.

Stufflebeam, D. L. 1999. Foundational models for 21st Century program evaluation. Kalamazoo, MI: Evaluation Center, Western Michigan University.

Subcommittee on Evaluation Standards. 1958. Proposed practices for economic analysis of river basin projects. Washington, DC: Inter-Agency Committee on Water Resources.

UCLA HIA-CLIC (University of California, Los Angeles, Health Impact Assessment-Clearinghouse Learning and Information Center). 2012. Phases of HIA: 2. Scoping. http://www.hiaguide.org/methods-resources/methods/phases-hia-2-scoping (accessed May 22, 2012).

U.S. Preventive Services Task Force. 2012. Procedure manual (USPSTF). http://www.uspreventiveservicestaskforce.org/uspstf08/methods/procmanual3.htm (accessed May 21, 2012).

Watson, M. R., A. M. Horowitz, I. Garcia, and M. T. Canto. 2001. A community participatory oral health promotion program in an inner-city Latino community. Journal of Public Health Dentistry 61(1):34-41.

Weinstein, M. C., and W. B. Stason. 1977. Foundations of cost-effectiveness analysis for health and medical practices. New England Journal of Medicine 296(13):716-721.

Weisbrod, B. A. 1983. A guide to benefit-cost analysis, as seen through a controlled experiment in treating the mentally ill. Journal of Health Politics, Policy and Law 7(4):808-845.

Woolf, S. H., C. G. Husten, L. S. Lewin, J. Marks, J. Fielding, and E. Sanchez. 2009. The economic argument for disease prevention: Distinguishing between value and savings. Washington, DC: Partnership for Prevention.