Implications of Cognitive Psychology for Measuring Job Performance

Robert Glaser, Alan Lesgold, and Sherrie Gott

INTRODUCTION

In comparison to a well-developed technology for aptitude measurement and selection testing, the measurement of learned occupational proficiency is underdeveloped. The problem is especially severe for the Services because of the many highly technical jobs involved and the short periods of enlistment in which both training and useful performance must take place. To increase the effectiveness of both formal training and on-the-job learning, we need forms of assessment that provide clear indicators of the content and reliability of new knowledge. Since many of the military's jobs have a major cognitive component, the needed measurement methodology must be able to deal with cognitive skills.

Fundamentally, the measurement of job performance should be driven by modern cognitive theory that conceives of learning as the acquisition of structures of integrated conceptual and procedural knowledge. We now realize that someone who has learned the concepts and skills of a subject matter has acquired a large collection of schematic knowledge structures. These structures enable understanding of the relationships necessary for skilled performance. We also know that someone who has learned to solve problems and to be skillful in a job domain has acquired a set of cognitive procedures attached to knowledge structures, enabling actions that influence goal setting, planning, procedural skill, flexibility, and learning from further experience.

This paper has not been cleared by the Air Force Human Resources Laboratory and does not necessarily reflect their views.

At various stages of learning there exist different integrations of knowledge, different degrees of procedural skill, differences in rapid memory access, and differences in the mental representations of tasks to be performed. Proficiency measurement, then, must be based on the assessment of these knowledge structures, information processing procedures, and mental representations. Advancing expertise or possible impasses in the course of learning will be signaled by cognitive differences of these types.

The usual forms of achievement test scores generally do not provide the level of detail necessary for making appropriate instructional decisions. Sources of difficulty need to be identified that are diagnostic of problems in learning and performance. An array of subject matter subtests differing in difficulty is not enough. Tests should permit trainees to demonstrate the limits of their knowledge and the degrees of their expertise. The construction of tests that are diagnostic of different levels of competence in subject matter fields is a difficult task, but recent developments, including cognitive task analysis and research on the functional differences between experts and novices in a field, provide a good starting point for a theory to underpin proficiency measurement.

Until recently the field of psychological measurement has proceeded with primary emphasis on the statistical part of the measurement task, assuming that both predictor and criterion variables can be generated through rational and behavioral analysis and perhaps some intuitions about cognitive processing. This has worked quite well when the fundamental criteria are truly behavioral, where the valued capability is a specific behavior in response to a specific type of event. However, when the fundamental performance of value is cognitive, as in diagnosing an engine failure or selecting a battlefield tactic to match a determined strategy, more is needed. The problem is particularly apparent when the true goal is readiness for a situation that cannot be fully simulated, or when the goal is to decide what specific additional training is required to assure readiness.

We are impressed by the fact that much of the technology of testing has been designed to occur after test items are constructed. The analysis of item difficulty, discrimination indices, scaling and norming procedures, and the analysis of test dimensions or factors take place after the item is written. In contrast, we suggest that more theory is required before and during item design. We must use what we know about the cognitive properties of acquired proficiency and the mental processes and structures that develop as individuals acquire job skills. The nature of acquired competence and the indicators that might signal difficulties in learning are not apparent from a curriculum analysis of the facts and algorithms being taught.

Proficiency measurement should be designed to assess not only algorithmic knowledge but also cognitive strategies, mental models, and knowledge organization. It should be cast in terms of levels of acquisition and should produce not only assessments of job capability but also qualitative indicators of needed further training or remediation. In this regard, cognitive psychology has produced a variety of methods that can be sources of a new set of measurement methodologies. During the past 2 years, we have been applying these techniques in developing a cognitive task analysis procedure for technical occupations in the Air Force. The conclusions in this paper are based on our experience in this project.

The three sections that follow present a cognitive account of the components of skill, discuss the specific measurement procedures we have employed, and then consider which aspects of measurement in the Services can best use these approaches.

COGNITIVE COMPONENTS OF SKILL

There are three essential elements in cognitive tasks:

- The contents of technical skills: the procedures of which they are composed.
- The context in which technical skills are exercised: the declarative knowledge needed to assure that skill is applied appropriately and with successful effect.
- The mental models or intermediate representations that serve as an interface between procedural and declarative knowledge.

These three essential aspects of job proficiency are emphasized throughout this paper.

Procedural Content

A starting point for specifying the procedural content of a task is the GOMS model proposed by Card et al. (1983) in their studies of the acquisition of skill. This model has been further elaborated in work on formal procedures for representing the complexity of machine interfaces for users (Kieras and Polson, 1982). The GOMS model splits technical knowledge into four components: goals, operators, methods, and selection rules. We have adopted a similar split with a few differences from GOMS. Each component is not only a subset of the total knowledge a technical expert must have, but is also a reminder to consider certain issues in attempting to understand the expertise.

Goal Structure

Any task can be represented as a hierarchy of subgoals, and experts usually think of tasks this way. Generally, such goal structures become very elaborate for complex tasks. The overall goal is decomposed into several nearly independent subgoals, and they in turn are subdivided repeatedly. In many cases, the particular subdivision of a goal depends on tests that are performed and decisions that are made as part of the procedure that accomplishes the goal. Something like the following example¹ is quite common:

TO TRAVEL-TO :X
  SUBGOAL CHECK-OUT-POSSIBILITIES
  IF :X WITHIN 50 MILES THEN SUBGOAL DRIVE-TO :X
  ELSE SUBGOAL FLY-TO :X
END

Because of this sort of contingent branching, an overall subgoal structure for a particular goal may not exist explicitly. Rather, it may be assembled as the goal is being achieved—it is implicit in part.
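The contingent branching can be sketched in Python. This is a loose, hypothetical translation of the TRAVEL-TO example rather than a model from this project; the function name, the trace list, and the sample distances are invented, and WITHIN 50 MILES is read as "at most 50 miles." The realized subgoal sequence differs between the two calls because it is assembled from a test made while the goal is being pursued.

```python
# Hypothetical sketch of the TRAVEL-TO goal with contingent subgoaling.
# No complete subgoal tree exists in advance; each subgoal is recorded
# only when it is actually posted during execution.

def travel_to(destination, distance_miles, trace):
    """Expand TRAVEL-TO, appending (subgoal, argument) pairs as they are posted."""
    trace.append(("TRAVEL-TO", destination))
    trace.append(("CHECK-OUT-POSSIBILITIES", destination))
    # The branch depends on a test performed while the goal is being achieved.
    if distance_miles <= 50:  # assumed reading of "WITHIN 50 MILES"
        trace.append(("DRIVE-TO", destination))
    else:
        trace.append(("FLY-TO", destination))
    return trace


near = travel_to("town-a", 40, [])    # invented distance
far = travel_to("city-b", 1300, [])   # invented distance
print([goal for goal, _ in near])  # ['TRAVEL-TO', 'CHECK-OUT-POSSIBILITIES', 'DRIVE-TO']
print([goal for goal, _ in far])   # ['TRAVEL-TO', 'CHECK-OUT-POSSIBILITIES', 'FLY-TO']
```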

Without substantial prior experience, it can be difficult to separate a complex task into subgoals that are readily achieved in a coherent manner and that do not interact. A novice, even if intelligent, may separate a task into pieces that cannot be done independently. Consider the following example of a goal structure:

TO HAWAII-VACATION
  SUBGOAL GET-TICKETS
  SUBGOAL RESERVE-CAR
  SUBGOAL RESERVE-HOTEL
  SUBGOAL GET-THERE
  SUBGOAL PAINT-TOWN-RED
END

Suppose that a person adopts this goal structure. Some problems could develop. For example, he may have a budget limit. If in solving the GET-TICKETS subgoal he uses up too much money, he then will not be able to solve other subgoals. We say the subgoals interact. Also, it is possible that a package deal can solve all three initial subgoals, so subdividing them as shown is unnatural and may divert the novice from a successful approach.
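The interaction can be made concrete with a small Python sketch. The prices, the budget, and both functions are invented for illustration: `solve_in_order` treats the subgoals as independent and solves them one at a time, while `solve_jointly` searches combinations of options against the shared budget.

```python
from itertools import product

# Hypothetical prices (dollars) for three of the HAWAII-VACATION subgoals.
options = {
    "GET-TICKETS": [("first-class", 2500), ("coach", 900)],
    "RESERVE-CAR": [("rental", 400)],
    "RESERVE-HOTEL": [("resort", 1200), ("motel", 500)],
}
subgoals = ["GET-TICKETS", "RESERVE-CAR", "RESERVE-HOTEL"]

def solve_in_order(subgoals, options, budget):
    """Novice strategy: solve each subgoal in turn, taking its first affordable option."""
    spent, plan = 0, {}
    for goal in subgoals:
        affordable = [(name, cost) for name, cost in options[goal]
                      if spent + cost <= budget]
        if not affordable:
            return plan, goal  # stuck: earlier choices used up the shared budget
        name, cost = affordable[0]
        plan[goal] = name
        spent += cost
    return plan, None

def solve_jointly(subgoals, options, budget):
    """Treat the subgoals as interacting: search combinations against the whole budget."""
    for combo in product(*(options[g] for g in subgoals)):
        if sum(cost for _, cost in combo) <= budget:
            return {g: name for g, (name, _) in zip(subgoals, combo)}
    return None

print(solve_in_order(subgoals, options, 3000))
# ({'GET-TICKETS': 'first-class', 'RESERVE-CAR': 'rental'}, 'RESERVE-HOTEL')
print(solve_jointly(subgoals, options, 3000))
# {'GET-TICKETS': 'coach', 'RESERVE-CAR': 'rental', 'RESERVE-HOTEL': 'resort'}
```

Solved one at a time, the first-class choice for GET-TICKETS leaves too little money for RESERVE-HOTEL; treated jointly, the same options admit a feasible plan. `solve_jointly` returns the first combination that fits, not the best one, and exhaustive search of this kind would not scale to realistic option sets.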

There is another form of novice goal setting that is almost the opposite. This is the use of subgoals that are defined by the methods the novice knows rather than by the overall goal to be achieved. Again, this is often a case of intelligent behavior by those not completely trained. For example, a rather inexperienced engineer given the task of designing a conditioned power source for a large computer was heard to say: “What you need is a UPS [uninterruptible power source] without the batteries.” The engineer happened to have learned about uninterruptible power sources and knew that such systems provided clean power. However, he knew nothing about how, in general, clean power can economically be provided. By looking only at designs for UPS systems, he missed some cost-effective designs that work fine except when battery back-up is also required.

¹ The example is stated using some of the formalisms of LOGO.

In analyzing a technical specialty, it is necessary to establish what goal structures are held by experts. Sessions in which the expert describes how a task is carried out are very helpful for this purpose, and we have made heavy use of them. Expert-novice comparisons are also helpful. Also, information about novice goal structures can sometimes reveal training problems that could be corrected by specifically targeted instruction.

Basic and Prerequisite Abilities (Operators)

Card et al. (1983) spoke of the basic operators for a task domain, borrowing from the earlier Newell and Simon (1972) approach of specifying elementary information processes from which more complex procedures would be composed. We have unpacked this idea a bit. For any given technical specialty, there are certain basic capabilities that the novice is assumed to have prior to beginning training. For example, one might assume that the ability to use a ruler (at least with partial success) might be a prerequisite for work as an engine mechanic. Ruler use is hardly a primitive mental operation in any general sense, but with respect to subsequent training in an engine specialty, it could be considered as such. Similarly, the ability to torque a bolt correctly might be thought of as a basic entering ability for new three-level airmen coming to their first operational assignments. What is important is that every training approach makes these assumptions concerning prerequisites and that some such assumptions are incorrect. Often, for example, a training approach will assume a highly automated capability but will only pretest for the bare presence of that capability.

In essence, we are asserting that the components of a skill should be subdivided into two relevant parts: those that are prerequisite to acquisition of a particular level of skill and those that are part of the target level. What is prerequisite at one level may be a target component at a lower level. In doing a cognitive task analysis, one must examine the performance of successful novices to determine what the real skill prerequisites are and what new procedures are being acquired. Such an analysis must take account not only of nominal capability but also of the speed and efficiency of prerequisite performance capabilities. Care must be taken to avoid declaring too many skills as prerequisites. A common fault of educational and training systems is to declare many aspects of a skill to be prerequisite and then bemoan the lack of adequate instruction of those prerequisites in lower level schools.
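One way to operationalize the difference between the bare presence of a prerequisite and its efficient, automated use is sketched below. This is an assumption-laden illustration, not a procedure from this project: the function name, the 80% accuracy cut, and the 3-second latency cut are hypothetical and would have to be set empirically for each skill.

```python
from statistics import median

def classify_prerequisite(trials, accuracy_cut=0.8, latency_cut_s=3.0):
    """Classify one prerequisite skill from pretest trials.

    trials: list of (correct: bool, seconds: float) pairs.
    Both cutoffs are hypothetical defaults, not established norms.
    """
    accuracy = sum(correct for correct, _ in trials) / len(trials)
    if accuracy < accuracy_cut:
        return "absent"
    # Judge fluency from the median latency of correct trials only.
    typical = median(s for correct, s in trials if correct)
    return "automated" if typical <= latency_cut_s else "present-not-automated"

# Invented ruler-reading pretests for two trainees.
fluent = [(True, 2.1), (True, 1.8), (True, 2.5), (True, 2.0), (False, 4.0)]
slow = [(True, 6.0), (True, 5.5), (True, 7.2), (True, 6.1)]
print(classify_prerequisite(fluent))  # automated
print(classify_prerequisite(slow))    # present-not-automated
```

A pretest that checked only accuracy would pass both trainees; the latency check is what separates them.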

Procedures (Methods)

At the core of any task analysis is an analysis of the procedures that are carried out in doing the task. This remains the case with cognitive task analyses. A major part of our current work on cognitive task analysis of avionics equipment repair skills is to catalog the procedures that an airman must know in order to perform tasks within the job specialty being studied. This is done in a variety of ways and is probably the aspect of cognitive task analysis that is closest to traditional rational task analysis approaches. We examine technical orders, expert and novice descriptions of tasks, and other similar data. While the resulting procedural descriptions are likely to be similar to those achieved by earlier approaches, they are distinguished by the following new components: (1) we are attending explicitly to the enabling conditions, such as conceptual support (see below), for successful procedure execution, and (2) separate attention is paid to goal structures and selection rules. Further, we use a variety of techniques to verify our analyses empirically.

Procedural descriptions of cognitive tasks will tend to emphasize the domain-specific aspects of performance. However, it will sometimes be appropriate to include certain self-regulatory skills of a more general character in the analyses. It is critical to avoid basing cognitive analyses on the ability of people with strong self-regulatory and other meta-cognitive skills to handle many novel tasks. When a task requires these more general skills in addition to easily trainable specific capabilities, then this should be noted. In general, it can be assumed that a continual supply of trainees with strong self-regulatory skills or other high levels of aptitude cannot be guaranteed, and such skills are not quickly taught.

Selection Rules

The progress of modern psychology has been marked by a slow movement from concern with stimulus-response mappings to a concern with mappings between mental events (including both perceptions and the products of prior mental activity) and mental operations or physical actions. To the extent that they stuck to the earlier methodologies evolved from stimulus-response approaches, trainers knew only that certain physical responses must be tied to certain stimuli. They had only indirect ability to teach by rewarding correct responses and punishing errors. Now, new methodologies are being developed for verifying mappings between internal (mental) events and mental operations. While they still have pitfalls for the unwary, they make possible an interpretation of tasks that comes closer to being useful for instruction.

In particular, we know that the knowledge of experts is highly procedural. Facts, routines, and job concepts are bound to rules for their application, and to conditions under which this knowledge is useful. As indicated, the functional knowledge of experts is related strongly to their knowledge of the goal structure of a problem. Experts and novices may be equally competent at recalling small specific items of domain-related information, but proficient people are much better at relating these items in cause-and-effect sequences that reflect the goal structures of task performance and problem solution. When we assume that some training can be accomplished by telling people things, we are, in essence, assuming that what the student really needs to know are procedures and selection rules for deciding when to invoke those procedures.

In conducting a cognitive task analysis, it is important to attend specifically to a trainee's knowledge of the conditions under which specific procedures should be performed. Combined with goal structure knowledge, selection rules are an important part of what is cognitive about cognitive task analyses.
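Selection rules can be pictured as condition-method pairs attached to a goal, in the spirit of the GOMS selection rules mentioned earlier. In this Python sketch the situation features and method names are hypothetical, and first-match ordering is an assumed conflict-resolution policy rather than a claim about how experts resolve competing rules.

```python
# Hypothetical selection rules for a troubleshooting goal: each pairs a
# condition on the current situation with the method to invoke.
SELECTION_RULES = [
    (lambda s: s["fault_isolated_by_test_software"], "SWAP-INDICATED-BOARD"),
    (lambda s: s["intermittent"], "CHECK-CONNECTIONS"),
    (lambda s: True, "FOLLOW-TECHNICAL-ORDER-FAULT-TREE"),  # default method
]

def select_method(situation, rules=SELECTION_RULES):
    """Return the method of the first rule whose condition holds (first match wins)."""
    for condition, method in rules:
        if condition(situation):
            return method
    return None

print(select_method({"fault_isolated_by_test_software": False, "intermittent": True}))
# CHECK-CONNECTIONS
```

A trainee who knows all three methods but not the conditions attached to them would be missing exactly the knowledge that this kind of analysis is meant to probe.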

Conceptual Knowledge

In performing cognitive task analyses, it is important to consider how deep or superficial the knowledge and performance are. Many skills have the property that they can be learned either in a relatively rote manner or can be heavily supported by conceptual knowledge. For example, one can perform addition without understanding the nature of the number system, so long as one knows the exact algorithm needed. Similarly, one can repair electronics hardware without deep electronics knowledge, so long as the diagnostic software that tells one which board to swap can completely handle the fault at hand. However, it appears that the ability to handle unpredicted problems, which is a form of transfer, depends on conceptual support for procedural knowledge.
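The addition example can be made concrete. The sketch below is a hypothetical illustration: it contrasts a column-addition recipe with a hard-wired carry at 10 against the same recipe with the base of the number system as an explicit parameter (bases 2 through 10 only). Both give identical answers on ordinary decimal problems; only the second transfers to an unpracticed case such as octal.

```python
def rote_add(a: str, b: str) -> str:
    """Column addition as a fixed recipe: write the ones digit, carry at 10."""
    a, b = a.zfill(len(b)), b.zfill(len(a))  # pad both numerals to equal length
    digits, carry = [], 0
    for x, y in zip(reversed(a), reversed(b)):
        total = int(x) + int(y) + carry
        digits.append(str(total % 10))
        carry = total // 10
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))

def place_value_add(a: str, b: str, base: int = 10) -> str:
    """Same recipe, but the carry rule follows from the base (2 <= base <= 10)."""
    a, b = a.zfill(len(b)), b.zfill(len(a))
    digits, carry = [], 0
    for x, y in zip(reversed(a), reversed(b)):
        total = int(x, base) + int(y, base) + carry
        digits.append(str(total % base))
        carry = total // base
    if carry:
        digits.append(str(carry))
    return "".join(reversed(digits))

print(rote_add("47", "38"), place_value_add("47", "38"))  # 85 85
print(rote_add("7", "1"), place_value_add("7", "1", 8))   # 8 10
```

In base 8, `rote_add` produces "8", which is not even a valid octal numeral, while the version that represents place value handles the new case without further instruction.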

The difficult issue (as with automation of skill) will be the separation of conceptual knowledge evidenced by experts and quick learners into that which is a mere correlate of their experience and that which is necessary to their expertise. This is still very much an issue of basic research, but one on which some good starting points can be specified. The sections below detail three types of conceptual supporting knowledge to which cognitive task analyses should be sensitive.

Task Structure

Some, but not all, experts retain detailed knowledge of the structure of tasks that they perform. We usually call these people the good teachers. Part of their knowledge results from having explicit, rather than implicit, goal structures. A serious question to be addressed in any analysis is whether possession of certain explicit task structure knowledge is necessary to successful skill acquisition. A corollary issue is whether those who do not possess or have trouble acquiring such explicit knowledge tend to acquire their skill in a different manner from those who are more “high verbal.” This is part of the “basic research” aspect of cognitive analyses at this time, because general domain-independent answers to such questions have not yet been established.

Background Knowledge

The same set of questions applies to a second form of conceptual support knowledge, namely background knowledge. In electronics troubleshooting, for example, it is conceivable that a bright person could perform many (but not all) of the tasks much of the time by simply following the directions in the printed technical orders. While this might take a long time, it seems at least possible. What is added to performance capability by knowing about basic electrical laws or about how solid state devices of various types work? The cognitive task analyst must attempt to determine the role played by such knowledge in successful performance. The knowledge to be examined includes scientific laws and principles as well as more informal background, such as crude mental models and metaphors for processes that are directly relevant to job tasks (e.g., what happens when a circuit is shorted).

Context of Use

Related to this second type of conceptual knowledge is contextual knowledge. For example, a novice jet engine technician whom we studied resorted to knowledge of air flow through a jet engine to answer some of our questions about engine function. The immediate question this raises for us is whether successful module replacement (a role that includes no diagnosis) depends on any knowledge of how a jet plane works, what the pilot sees or experiences, or what other roles are involved in servicing a plane besides module replacement. Again, while we have found that our better subjects know a lot about all of these contexts, the chore of the cognitive task analyst is to determine the extent to which this knowledge is necessary for successful skill development (or for one success track in skill development).

Critical Mental Models

Expertise is generally guided by several kinds of mental models. One kind is the model of the problem space, as one might see in a chess player, who has a rich representation of the board positions to which he can anchor various interpretations and planned actions. A related kind of mental model is the model of a critical referent domain, such as the model of the patient's anatomy that is maintained by a radiologist. Such a model is not identical to the problem space but rather is an important projection of the problem. Finally, there are critical device and component models that guide understanding and performance, such as the electronics technician's model of a capacitor or of a filtered power supply. Each type of model can be critical to problem solving, and the task of the cognitive task analyst is to discover which models of any type play an important role in expertise.

There are several ways in which this can be done. For some domains, such as electronics troubleshooting, there is an existing literature because cognitive scientists have used the domain to study expertise, have been designing tutoring systems for the domain, and have been building expert systems to supplement human expertise in the domain. Another approach is to ask experts and less-expert workers to think out loud while solving problems in a domain. In our laboratories, we have done this, for example, with radiologists (Lesgold, 1984; Lesgold et al., 1985). Analysis of the verbal protocols from these physicians (taken while they made film diagnoses) led us to a clearer understanding of the specifics of the mental model of the patient's anatomy that seems to be the focal point of much expert reasoning in this domain. In our more recent work, we have developed much more objective and practical approaches to getting and analyzing protocol data.

A critical finding coming out of the radiology work is that experts have pre-existing schemata that are triggered early in a diagnosis. These schemata tune the patient-anatomy representation and also pose a series of questions that the expert addresses while trying to fit the schema to the specific case. In a sense, then, each disease schema can be thought of as a prototype representation of patient anatomy, and the expert's task is to integrate the schema, the knowledge he has of the patient, and the features of the specific film he is examining. Such schema- and representation-driven processing also occurs in such domains as electronics. For example, good technicians have, among many others, a broad schema for connection failures. When the technical orders fail to provide a basis for a diagnosis, or when computer-based diagnosis fails, schemata such as the connection-failure schema are applied, if possible.

Associated with such schemata in some cases are series of tests that must be performed either to verify or to disprove the fit of schema to fault. In the case of electronics, for example, simply knowing that connection failures cause bizarre and difficult-to-diagnose failures is insufficient. The expert also must be able to build a plan for finding the specific connection that has failed in the piece of gear he is troubleshooting. Detailed study of the technical orders in collaboration with a subject matter expert can be helpful in developing an understanding of the critical schemata for a domain and the content of those schemata. The subject matter expert will often describe the schemata he would apply in a given case if adequately prompted.
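The trigger-then-verify pattern can be sketched as follows. The schema names, cue labels, and tests are invented placeholders rather than content drawn from technical orders, and `run_test` stands in for whatever observation the technician actually makes.

```python
# Hypothetical schemata: early cues trigger a schema, and each schema
# carries an ordered series of tests that can verify or disprove its fit.
SCHEMATA = {
    "connection-failure": {
        "triggers": {"intermittent-fault", "technical-orders-exhausted"},
        "tests": ["wiggle-test-harness", "continuity-check-connectors"],
    },
    "power-supply-failure": {
        "triggers": {"all-outputs-dead"},
        "tests": ["measure-supply-voltages"],
    },
}

def triggered(cues, schemata=SCHEMATA):
    """Names of schemata with at least one trigger among the observed cues."""
    return [name for name, s in schemata.items() if s["triggers"] & cues]

def check_fit(name, run_test, schemata=SCHEMATA):
    """Run the schema's tests in order; stop at the first one that disproves it.

    run_test(test_name) should return True if the result fits the schema.
    """
    for test in schemata[name]["tests"]:
        if not run_test(test):
            return False, test
    return True, None

cues = {"intermittent-fault"}
results = {"wiggle-test-harness": True, "continuity-check-connectors": True}  # invented
for name in triggered(cues):
    print(name, check_fit(name, results.get))  # connection-failure (True, None)
```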

Knowledge Engineering is One Aspect of Cognitive Task Analysis

Subject matter experts do not develop clear accounts of expertise on their own. This is why a whole new job category, knowledge engineer, has arisen in the field of expert systems development. Knowledge engineers have, mostly independently of psychologists, developed their own methodology for extracting knowledge from subject matter experts. For example, a standard, perhaps the standard, method is to have the expert critique the performance of a novice (sometimes the knowledge engineer plays the role of novice). The idea is that the knowledge engineer can follow the reasoning of the novice but not yet that of the expert. By asking the expert to critique a level of performance he understands, the knowledge engineer is essentially asking for a repair of his own understanding. Carrying out this process iteratively is a very effective way to learn the domain and also an appropriate tactic for a cognitive task analyst.

Our own exploratory efforts in cognitive task analysis lead us to make two important cautionary statements about this aspect of cognitive task analysis in particular and the entire approach in general:

- A cognitive task analysis stands or falls partly on the level of expertise in the target domain that is assimilated by the analysis team. This is not a chore for dilettantes.
- Subject matter experts cannot do cognitive task analyses on their own. Because expertise is largely automated, they do not always realize all of the knowledge that goes into their own thinking.

Levels of Acquisition

In addition to specifying the kinds of knowledge needed to do a job well, the cognitive task analyst attempts to understand the level of acquisition that is required. It is important to recognize that knowledge is initially precarious, requiring conscious attention, and relatively verbal. With practice, aspects of skill become sufficiently automated to permit overall performance that is facile and precise. John Anderson (1982) has proposed a theory of skill acquisition that builds upon earlier work on the nature of human thinking and memory (Anderson, 1976). This theory, which elaborates earlier work that was driven by concerns with perceptual-motor skills (Fitts, 1964), provides a useful starting point for analyses of technical skill domains, and it has been incorporated into our approach (Lesgold, 1986; Lesgold and Perfetti, 1978). A number of other researchers (many cited in Anderson, 1982) have anticipated aspects of the approach.

There are qualitative stages in the course of learning a skill. Initially, a skill is heavily guided by declarative (verbal) knowledge. We follow formulae that we have been told. For example, new drivers will often verbally rehearse the steps involved in starting a car on a hill, while experts seem to do the right thing without consciously thinking about it. A second stage occurs when knowledge has been proceduralized, when it has become automatic. Finally, the knowledge becomes more flexible and at the same time more specific; it is tuned to the range of situations in which it must be applied.

Declarative Knowledge

The measurement of declarative knowledge about technical skills is perhaps the chore that traditional test items handle best. Measures that involve telling how a task is performed are ideal for this purpose. Declarative knowledge can, of course, be partitioned into categories such as goal structure, procedures, selection rules, and conceptually supporting information. When analyzing verbal protocols, it is also important to distinguish between verbal protocol content that provides a trace of declarative knowledge of a task and content that reveals the mental representation(s) that guide performance even after it is automated.

A significant aspect of a skill is the ability to maintain mental representations of the task situation that support performance. For example, in diagnosing a failure of a complex electronic system, a technician sometimes has to have a model of what that system is doing and how information and/or current flows. Such a model, because it is anchored in permanent memory, helps the performer overcome temporary memory limits brought on by a heavy job load and helps preserve memory for the current status of a complex goal structure.

Skill Automation

While it seems essential to successful overall performance, the automation of process components of skill has been difficult to measure adequately. We have used some response speed measures, but speed measures depend heavily on norms for their interpretation, and such norms are seldom available or easily established. Also, and perhaps more important, speed is a characteristic outcome of increasing performance facility, whether or not it is a cause of that facility. This issue of causal relationships between subprocessing automaticity and overall performance has been addressed elsewhere (e.g., Lesgold et al., 1985), but the only solution proposed is longitudinal study, which is incompatible with most military measurement needs. (There may be new work soon, from researchers such as Walter Schneider (1985), on this problem.)
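The dependence of speed measures on norms can be seen in a minimal sketch: a response time is interpretable only relative to a norm group, and with no usable norms the function below declines to score it. The function name and the sign convention (positive means faster than the norm group) are assumptions. A high score says nothing about whether speed causes better overall performance, which is the causal issue raised above.

```python
from statistics import mean, stdev

def speed_z(response_s, norm_times_s):
    """Standardized speed relative to a norm group (positive = faster), or None.

    Returns None when norms are missing or degenerate, since a raw
    response time cannot be interpreted on its own.
    """
    if len(norm_times_s) < 2:
        return None  # stdev needs at least two norm observations
    m, sd = mean(norm_times_s), stdev(norm_times_s)
    if sd == 0:
        return None
    return (m - response_s) / sd

print(speed_z(3.0, [4.0, 5.0, 6.0]))  # 2.0
print(speed_z(3.0, []))               # None
```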

One possible approach is to embed the concern over automaticity into all tasks in a test battery, continually watching for evidence of the extent of skill automaticity and of the extent to which skill shortcomings seem related to lack of automaticity. For example, one can determine the extent to which various external cues, such as diagrams of jet engine layouts, are essential to task performance. Also, one can observe whether the goal structure of a subject exists independent of technical orders that can be referred to. A subject who tells us how to do a task without reference to technical orders must have much of his or her knowledge automated.
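A simple way to quantify dependence on an external cue is to score matched items with and without the aid. The sketch assumes hypothetical matched item sets and reports only the drop in proportion correct; the function name is invented, and this is not a procedure reported for this project.

```python
def aid_dependence(with_aid, without_aid):
    """Drop in proportion correct when an external aid is withdrawn.

    Each argument is a list of booleans (item answered correctly?) for
    matched items, e.g., with and without a jet engine layout diagram.
    """
    if len(with_aid) != len(without_aid) or not with_aid:
        raise ValueError("need matched, non-empty item sets")
    p_with = sum(with_aid) / len(with_aid)
    p_without = sum(without_aid) / len(without_aid)
    return p_with - p_without

print(aid_dependence([True, True, True, False], [True, False, False, False]))  # 0.5
```

A value near zero is consistent with knowledge that does not rely on the aid; a large value suggests the aid is carrying much of the performance.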

Skill Refinement

Finally, one can also look for specific evidence that skills have been refined to the point where there is sensitivity to small but critical situational differences. Flexibility of skilled performance shows up as rapid access to a changed representation of the situation when relevant new data become available.

Summary

Our analysis of job performance highlights the following components of skill:

- knowledge of the goal structure of a task
- skill and knowledge prerequisites for successive levels of performance
- procedural skills and the rules for deciding when to apply them
- conceptual knowledge and metaphors that support performance
- mental models and task representations
- levels of learning, from declarative to proceduralized knowledge and from rigid algorithms to flexible strategies

In general, this approach to job performance is intended to avoid using performance correlates as the basic units of analysis and instead to base measurement and evaluation on an analysis of the specific cognitive procedures and conceptual representations that produce successful performance.

METHODS FOR COGNITIVE TASK ANALYSIS MEASUREMENT

Appropriate methods for cognitive task analysis and for extending cognitive task analysis to the creation of diagnostic test items are continually evolving as more is learned about expertise and the acquisition of proficiency. In this section, we survey several current methods in order to provide a sense of what is possible. The list is by no means exhaustive. It should also be noted that we do not assert that existing military personnel data should be ignored. On the contrary, existing occupational survey data in the Air Force, for example, were extremely useful in focusing our attention on problem areas that merited the expensive cognitive procedures we were developing.

While it is important to be aware of the data already being collected, it is also important to understand their limitations with respect to cognitive analyses. Much of the data are gathered in the course of selecting recruits for specific billets. An instrument might be very effective at picking the right people to be taught a job without being particularly good at specifying how those who make the selection cutoff will differ in either their ability to learn or their post-training performance. In essence, when looking at the incumbents within a specific military job specialty, one is looking at a group whose members were chosen because the available selection tests classified them all in the same manner: as appropriate trainees. There may be further information in the test data, but the tests were designed to serve only as selection instruments. A major purpose of cognitive analyses is to go further than this: to identify the kinds of skill and knowledge, acquired in school and through on-the-job experience, that are basic to the development of job competence. Assessment of these basic skills at the end of training or during the first term on the job might also further inform the selection process.
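Range restriction is one well-known statistical reason a selection instrument can say little about differences among the people it admits. The simulation below is a hypothetical illustration, not data from this project: the test validity (0.5), the cutoff (roughly the top third of applicants), the sample size, and the random seed are all invented. It compares the test-performance correlation among all applicants with the correlation among those above the cutoff.

```python
import random

def pearson(xs, ys):
    """Pearson product-moment correlation of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / (sxx * syy) ** 0.5

def simulate(n=20000, validity=0.5, cutoff=0.43, seed=1):
    """Return (r among all applicants, r among applicants at or above the cutoff)."""
    rng = random.Random(seed)
    test, perf = [], []
    for _ in range(n):
        t = rng.gauss(0, 1)
        # Later performance correlates about `validity` with the test score.
        p = validity * t + (1 - validity ** 2) ** 0.5 * rng.gauss(0, 1)
        test.append(t)
        perf.append(p)
    selected = [(t, p) for t, p in zip(test, perf) if t >= cutoff]
    sel_test, sel_perf = zip(*selected)
    return pearson(test, perf), pearson(sel_test, sel_perf)

full_r, selected_r = simulate()
print(f"all applicants: r = {full_r:.2f}; selected trainees: r = {selected_r:.2f}")
```

With these settings the correlation among selected trainees comes out noticeably lower than among all applicants, even though the test is doing exactly what it was built to do.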

Procedure Ordering Tasks

For procedural tasks, perhaps the most obvious question one can ask about performance is whether it is carried out correctly. However, it is not always easy to have the target performances actually carried out in a testing situation, nor is it clear how such performances should be scored. Stopping short of actual performance of the target task, one can ask the subject to describe how the task is done or to reproduce from memory the steps in the task and their order; alternatively, one can develop tasks in which the steps are displayed to the subject, who must put them into the correct order. This latter approach has the advantage of being less dependent on verbal communication and memory skills. However, it still leaves the scoring problem.

When the experimenter tells the subject which steps are included and the subject needs only to order those steps, it appears as if all the hard work is being done by the experimenter. After all, isn't the problem remembering what to do in the first place? As it turns out, there are many cases (perhaps the cases of greatest interest, since they represent the harder, less uniformly mastered skill components) in which specifying the order of steps is quite difficult even when the possible steps are shown.

From another point of view, sequencing of procedures should not be a problem, because technical orders are available that specify exactly how a complex procedure should be done, and military personnel are supposed to follow those orders exactly. Unfortunately, this is not always possible and certainly not always optimal. In assembly/disassembly tasks, the technical orders generally assume a completely disassembled device to start with, but in practice devices are often only partially disassembled, and the order of reassembly steps shown in the technical orders may not work. For example, if one crosses out the steps already carried out and simply does the rest in the order listed, problems can arise. Sometimes an earlier step in a technical order cannot physically be carried out if a later step has already been done (e.g., one may have to remove one part to reattach another). On other occasions, a particular ordering is physically possible but will not preserve calibrations that are necessary to overall device function. Thus, there are cases in which the ability to adapt the order of steps in a procedure to specific circumstances not anticipated in training or in work aids is a good indicator of depth of procedural knowledge and of procedural adaptability.

These cases seem to involve (1) the possibility that the steps in the procedure could be carried out in several different orders and (2) constraints on ordering that would not be regulated by feedback the subject receives in the course of actually carrying out the procedure (that is, incorrect orders might not result in immediately observable consequences). In our analyses of jet engine mechanics, for example, we found that specifying the order of steps in carrying out certain rigging (calibration) operations was not something every subject did well. Further, there were systematic relationships between error rates and the ratings subjects received from their supervisors for job effectiveness. In addition, the errors subjects made could, in fact, be neatly classified as conceptual errors or procedural errors. Procedural errors were errors that would have led to an impasse in the course of doing the assembly. Conceptual errors would not have blocked the assembly, but the plane would not have functioned properly afterwards.
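The two conditions above suggest one way to score an ordering task without committing to a single reference sequence: encode only the precedence constraints that actually matter, tag each as procedural (violating it would lead to an impasse) or conceptual (the assembly completes but the device would not function properly), and count violations of each kind. Any order that satisfies every constraint is then scored as correct, which accommodates procedures whose steps can be carried out in several different orders. The sketch below is a minimal illustration under that assumption; the `Constraint` type, the `classify_errors` function, and the rigging step names are hypothetical and not taken from the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Constraint:
    before: str  # step that must be done first
    after: str   # step that must be done later
    kind: str    # "procedural" (violation blocks the task) or "conceptual" (task completes, device misbehaves)


def classify_errors(order, constraints):
    """Count violated precedence constraints in a subject's ordering, by error type.

    Constraints involving a step the subject did not include are skipped, so a
    partially completed procedure can be scored on its remaining steps only.
    """
    position = {step: i for i, step in enumerate(order)}
    counts = {"procedural": 0, "conceptual": 0}
    for c in constraints:
        if c.before in position and c.after in position and position[c.before] > position[c.after]:
            counts[c.kind] += 1
    return counts


# Hypothetical rigging constraints, for illustration only.
CONSTRAINTS = [
    Constraint("remove_cover", "attach_linkage", "procedural"),
    Constraint("set_rig_pin", "adjust_rod", "conceptual"),
    Constraint("adjust_rod", "remove_rig_pin", "conceptual"),
]

subject_order = ["remove_cover", "attach_linkage", "adjust_rod", "set_rig_pin", "remove_rig_pin"]
print(classify_errors(subject_order, CONSTRAINTS))  # {'procedural': 0, 'conceptual': 1}
```

Per-type error counts of this kind are what could then be related to supervisor ratings; how the constraints themselves would be elicited is outside this sketch.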

Sorting Tasks

Sorting tasks are an important exploratory tool for cognitive task analysis. Indeed, under such names as “Q sort,” they have a long history of accepted use in a number of areas of psychology. Initially, the method was used in areas such as social psychology, personality psychology, and advertising research. However, in recent years, the approach has also been used widely in studies of the organization of memory and of expertise (cf. Chi et al., 1982).

The basic theory underlying the approach is that concepts are defined in the mind by a set of characteristic features.2 When asked to sort pictures or words into piles of things that “go together,” subjects draw on these features because they are the most available information in memory that can be used for such a purpose. However, most concepts have many associated features, and the subject in a sorting task must decide which of them are the most appropriate basis for partitioning the items into separate groups. It is these decisions that seem to vary with expertise.

2 This account is greatly simplified in order to convey the essence of the approach. In fact, psychologists studying concept formation argue over whether all concepts are characterized this way, whether the features for a concept include defining features shared by all instances as well as typical or characteristic features that are not universal over all instances, and so on. These arguments do not affect the validity of the sorting procedure as an exploratory method, which has repeatedly been shown to be effective in elucidating differences between skill levels in domains of expertise.

The general method involves having subjects sort the items, usually cards bearing words, phrases, or pictures, into separate piles on a tabletop. A record is made of which items ended up in which piles; this is easily done if there are code numbers on the backs of the cards. For large-scale administration, bar coding the cards and scoring with a portable data entry device fitted with a bar code reader would be very straightforward. Sometimes, after doing an initial sort, subjects are asked to divide their piles into subpiles or to collapse piles into a smaller number. This permits more refined scoring.

When only one level of sorting is used, the number of piles should range between three and eight. For the one-level case, the result of scoring for any one subject is a matrix $A$, in which $a_{ij}$ is 0 if items $i$ and $j$ were not in the same pile and 1 if they were. When piles are further subdivided or collapsed, $a_{ij}$ is 1 if the two items were together only at the grossest level of the sort, 2 if they were together at the next more refined level, and so on. Scaling and clustering techniques are used to combine the sorts of a group of subjects into a single picture of their cognitive structure. A subject can then be scored by measuring how far his sorts depart from the prototypical expert scaling solution.
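The scoring just described can be sketched in a few lines. The sketch assumes each subject's sort is recorded as a path of pile labels per item, coarsest level first, so that $a_{ij}$ is simply the number of leading levels at which two items share a pile; a one-level sort is the special case of paths of length one. Averaging matrices stands in for the group step (a scaling or clustering routine would take such a matrix as input, but none is implemented here), and the mean absolute difference from the expert matrix is an assumed, simple stand-in for the departure-of-fit measure, not the authors' statistic. All function names and items are hypothetical.

```python
from itertools import combinations


def cooccurrence(paths, items):
    """Build one subject's matrix A from pile paths (coarsest level first).

    a[i][j] = 0 if items i and j were never in the same pile, 1 if together only
    at the grossest level, 2 if also together at the next level, and so on.
    """
    n = len(items)
    a = [[0] * n for _ in range(n)]
    for i, j in combinations(range(n), 2):
        shared = 0
        for p, q in zip(paths[items[i]], paths[items[j]]):
            if p != q:
                break
            shared += 1
        a[i][j] = a[j][i] = shared
    return a


def mean_matrix(matrices):
    """Average several subjects' matrices into a group proximity matrix."""
    n = len(matrices[0])
    return [[sum(m[i][j] for m in matrices) / len(matrices) for j in range(n)] for i in range(n)]


def departure(subject, reference):
    """Mean absolute difference over item pairs (0 = identical to the reference)."""
    pairs = list(combinations(range(len(subject)), 2))
    return sum(abs(subject[i][j] - reference[i][j]) for i, j in pairs) / len(pairs)


ITEMS = ["fuel pump", "fuel nozzle", "oil pump", "oil filter"]
# Hypothetical one-level sorts: the expert groups by functional system,
# the novice by shared terms in the part names.
expert = cooccurrence({"fuel pump": ("fuel",), "fuel nozzle": ("fuel",),
                       "oil pump": ("lube",), "oil filter": ("lube",)}, ITEMS)
novice = cooccurrence({"fuel pump": ("pumps",), "oil pump": ("pumps",),
                       "fuel nozzle": ("other",), "oil filter": ("other",)}, ITEMS)
reference = mean_matrix([expert])
print(departure(novice, reference))
```

With real data the reference would be averaged over many expert sorts; a single expert is used here only to keep the example small.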

Sorting tasks may be particularly sensitive to the restriction of range problems discussed above. That is, while experts and novices show strikingly different sorting solutions, we have not found very striking differences between higher and lower performers within a training cohort. Such differences as have been found seem to involve very small numbers of items that have specific ambiguities of nomenclature that only the better performers are sensitive to.

Characteristically, novices put things together in a sorting task on the basis of their superficial characteristics, while experts sort more on the basis of deeper meaning, especially meaning relevant to the kind of mental models or schemas that drive expert performance. For example, novice mechanics seem to treat parts with the same terms in their names as belonging together, while experts group more on the basis of the functional systems of which the sorted objects may be parts. This difference is domain specific: experts are not less superficial in their general world knowledge, only in the domain of their expertise.

When the technique is used to examine differences between people at the same level of formal training who have different levels of actual competence, it affords an opportunity to discover which specific aspects of deeper understanding are not being picked up by the less able learner. This in turn can inform the design of improved instructional procedures.

Realistic Troubleshooting Tasks

In job domains that involve substantial amounts of diagnosis or other problem solving, some of the most revealing tasks used in cognitive task analyses are those that provide controlled opportunities for the subjects to actually do the difficult parts of their jobs. We have only begun to work on this approach, but a few possibilities already present themselves, particularly with respect to metacognitive skills of problem solving. To give a sense of our work, we trace the history of our efforts to analyze the performance of electronics technicians when they attempt to troubleshoot complex electronic circuitry. The complex cases are of particular interest because they are the ones where metacognitive skills are needed to organize processes that, in simple cases, might automatically lead to problem solution.

In our first attack on this problem, Drew Gitomer, at the time a graduate student at the Learning Research and Development Center, developed a troubleshooting task and simply collected protocols of subjects attempting to solve it. He then examined the protocols and attempted to count a variety of activities that seemed relevant to metacognitive as well as domain-specific skills. While the results, published in his thesis (Gitomer, 1984), were of great interest, we wanted to move toward a testing approach that was less dependent on the skills and training of an experienced cognitive psychologist. That, after all, is a large part of what test development is about: rendering explicit the procedures that insightful researchers first apply in their laboratories to study learning and thinking.

Our breakthrough came not so much from deep cognitive thinking but rather from our interactions with an electronics expert who had extensive experience watching novice troubleshooting performances. He pointed out that it was not a big chore to specify all of the steps that an expert would take as well as all of the steps that any novice was at all likely to take in solving even very complex troubleshooting problems. That is, even when the task was to find the source of a failure in a test station that contained perhaps 40 feet3 of printed circuit boards, cables, and connectors, various specific aspects of the job situation constrained the task sufficiently that the effective problem space could be mapped out.

3 We are grateful to Gary Eggan for his many insights in this work.

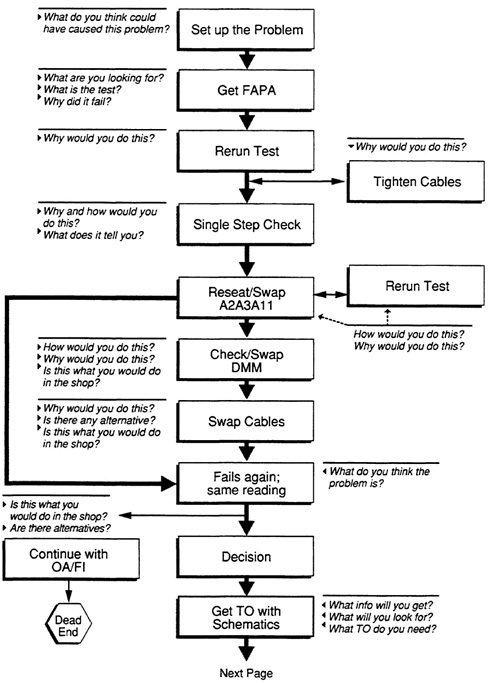

This then created the possibility that we could specify in advance a set of probe questions that would get us the information we wanted about subjects' planning and other meta-cognitive activity in the troubleshooting task. In this most complex troubleshooting task, there are perhaps 55 to 60 different nodes in the problem space, and we have specific meta-cognitive probe questions for perhaps 45. Figure 1 provides an example of a small piece of the problem space and the questions we have developed for it.

An examination of the questions in the figure reveals that some are aimed at very specific knowledge (e.g., “How do you do this?”), while others help elaborate the subject's plan for troubleshooting (consider “Why would you do this?” or “What do you plan to do next?”). Combined with information about the order in which the subject worked in different parts of the problem space, this probe information permits reconstruction of the subject's plan for finding the fault in the circuit and even provides some information about the points along the way at which different aspects of the planning occurred.
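A minimal way to represent this arrangement is a map of the effective problem space with probe questions attached to some of its nodes; replaying a subject's path through the space then yields the interview schedule, with each probe tied to the step at which it was asked. The sketch below assumes that structure. The `ProblemSpace` class and the node names are hypothetical; the three probe questions are the ones quoted above.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ProblemSpace:
    edges: dict[str, list[str]]  # node -> nodes a subject could plausibly move to next
    probes: dict[str, list[str]] = field(default_factory=dict)  # node -> probe questions

    def nodes(self) -> set[str]:
        return set(self.edges) | {m for nxt in self.edges.values() for m in nxt}

    def coverage(self) -> float:
        """Fraction of nodes that have at least one probe question."""
        all_nodes = self.nodes()
        return sum(1 for n in all_nodes if self.probes.get(n)) / len(all_nodes)

    def interview_schedule(self, trace: list[str]) -> list[tuple[int, str, str]]:
        """Pair each step of a subject's path with the probes attached to that node."""
        return [(step, node, q) for step, node in enumerate(trace) for q in self.probes.get(node, [])]


space = ProblemSpace(
    edges={
        "check_power_supply": ["measure_signal_at_board"],
        "measure_signal_at_board": ["swap_board", "inspect_cable"],
    },
    probes={
        "measure_signal_at_board": ["How do you do this?", "Why would you do this?"],
        "swap_board": ["What do you plan to do next?"],
    },
)
print(space.coverage())
for step, node, question in space.interview_schedule(["check_power_supply", "measure_signal_at_board", "swap_board"]):
    print(step, node, question)
```

In the task described above, roughly 45 of the 55 to 60 nodes carry probes, which corresponds to a coverage of about 0.75 to 0.82.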

Some of the scoring criteria that can be applied to the results of this blend of protocol analysis and structured interview are the following:4

- Did the subject find the fault? How close did he come?
- What kinds of methods did the subject use? It may be possible to compare histograms of how experts and novices are distributed over the effective problem space to arrive at specific criteria for these decisions. For example, if there are points in the problem space that are reached only by experts, then getting to those points indicates expertise. Similarly, if there are sequences of steps that are present only in experts' protocols, the presence of such a sequence in the troubleshooting protocol of a person being tested might be counted positively.
- Was the subject explicitly planning and generating hypotheses about the nature of the problem? Did the subject's comments indicate specific goals when carrying out sets of actions?
- Did subjects understand what they were doing? Did they have an answer to the question “Why did you do that?”
- Did the subject use available methods to constrain the problem space? Do planning and understanding components help the subject constrain the search?
- Can the subject use available tools and printed aids? Can he use a schematic?
- How much information had to be given to the subject to enable him to continue with the problem? How long did it take the subject to complete subsections of the problem space?

4 Debra Logan generated the first version of these criteria.

We are currently working on techniques for rating and scoring the responses to these individual components of the troubleshooting task and assessing how well they are integrated.
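The second criterion in the list above (points or step sequences reached only by experts) lends itself to a simple computation. The sketch below assumes each protocol has been coded as an ordered list of problem-space nodes, treats a "sequence" as a pair of consecutive steps, and uses presence or absence rather than full histograms. The function names and node labels are hypothetical.

```python
def expert_markers(expert_traces, novice_traces):
    """Return nodes and consecutive-step pairs found in expert protocols but in no novice protocol."""
    def nodes(traces):
        return {n for t in traces for n in t}

    def step_pairs(traces):
        return {(a, b) for t in traces for a, b in zip(t, t[1:])}

    return (nodes(expert_traces) - nodes(novice_traces),
            step_pairs(expert_traces) - step_pairs(novice_traces))


def expertise_score(trace, expert_nodes, expert_pairs):
    """Count expert-only nodes reached plus expert-only step pairs used in one protocol."""
    reached = set(trace) & expert_nodes
    used = set(zip(trace, trace[1:])) & expert_pairs
    return len(reached) + len(used)


# Hypothetical coded protocols (letters stand for problem-space nodes).
experts = [["A", "B", "D"], ["A", "D", "E"]]
novices = [["A", "C", "B"], ["C", "B", "D"]]
expert_nodes, expert_pairs = expert_markers(experts, novices)
print(expertise_score(["A", "D", "E"], expert_nodes, expert_pairs))  # 3
print(expertise_score(["A", "C", "B"], expert_nodes, expert_pairs))  # 0
```

Weighting markers by how often experts and novices reach them would move this closer to the histogram comparison the criterion mentions.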

Connection Specification Tasks

A critical general skill for problem solving is the ability to break down a complex problem into smaller components and then tackle each component in turn. Unfortunately, there are sometimes interactions between components that preclude dealing with each one separately. For example, troubleshooting a device with five components by troubleshooting each component in turn will only work if the problem does not involve interconnections between components. If the problem is that the connector that joins two components is bad, the componential analysis approach based only on those components will fail. Knowledge of interactions between parts of a system, parts of a procedure, or parts of a problem is a critical component of expertise. In the case of system knowledge, this information can be extracted very directly: give the subject a sheet with all the components shown on it and ask them to show the interconnections among the components.
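Responses to such a sheet can be compared directly with the actual interconnections. The sketch below assumes connections are undirected pairs of component names and reports correct, missed, and spurious connections separately, so that missing and extra connections can be examined on their own. The function name and the components are hypothetical.

```python
def score_connections(drawn, actual):
    """Compare a subject's drawn interconnections with the true ones (undirected pairs)."""
    def normalize(edges):
        return {frozenset(e) for e in edges}

    def as_sorted_pairs(edge_set):
        return sorted(tuple(sorted(e)) for e in edge_set)

    d, a = normalize(drawn), normalize(actual)
    return {
        "correct": len(d & a),
        "missed": as_sorted_pairs(a - d),
        "spurious": as_sorted_pairs(d - a),
    }


ACTUAL = [("power supply", "amplifier"), ("amplifier", "filter"), ("filter", "output stage")]
drawn = [("amplifier", "power supply"), ("filter", "output stage"), ("power supply", "filter")]
print(score_connections(drawn, ACTUAL))
```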

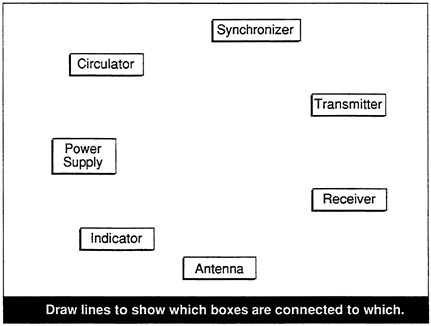

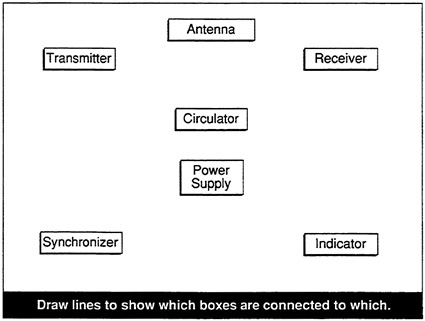

There are two ways such a problem can be presented. In one approach, the items are distributed randomly so that the test form conveys no structural information; ideally, they should be placed at random points on the edge of a circle. Figure 2 shows such a display. Often, however, there is a superficial level of organization that all subjects are likely to share. In such cases, this organization might be given to subjects, to minimize the extent to which searching the test form becomes part of the problem. An example is shown in Figure 3.
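For the first presentation, component positions can be generated so that neither position nor neighborhood hints at structure. The sketch below is one way to do this, assuming evenly spaced points (so labels do not crowd together), with the components assigned to them in shuffled order and the whole ring given a random rotation. `circle_layout` is a hypothetical helper, and the `seed` parameter simply makes a given test form reproducible.

```python
import math
import random


def circle_layout(components, radius=1.0, seed=None):
    """Place components at evenly spaced points on a circle, in random order."""
    rng = random.Random(seed)
    order = list(components)
    rng.shuffle(order)  # random assignment of components to positions
    n = len(order)
    offset = rng.uniform(0, 2 * math.pi)  # random rotation, so no position is privileged
    return {
        name: (radius * math.cos(offset + 2 * math.pi * k / n),
               radius * math.sin(offset + 2 * math.pi * k / n))
        for k, name in enumerate(order)
    }


for name, (x, y) in circle_layout(["pump", "valve", "sensor", "relay", "motor"], seed=7).items():
    print(f"{name:>6}: ({x:+.2f}, {y:+.2f})")
```

Using a different seed for each printed form would vary the arrangement across subjects, which may help keep any particular spatial arrangement from cueing particular connections.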

What-How-Why Tasks

Another task we have found very useful measures several basic kinds of knowledge about circuit components, tools, or other important job artifacts. This task comes closest to overlapping traditional item types. Specifically, we can look at “what” knowledge, the ability to identify an object, to tell what it is. We can also look at “why” knowledge: what the object is used for. In addition, we can look at “how” knowledge: how it works. We have been quite successful asking such questions very directly and recording the answers. We prefer this to multiple-choice items, which would also be possible, because sometimes the most interesting data for assessing level of competence are not whether an answer is right or wrong but the terms in which a definition or specification of function is couched.

AREAS OF THE MILITARY WHERE COGNITIVE TECHNIQUES HAVE PROMISE

Given the present high cost of cognitive task analyses and the need to use highly trained personnel because cognitive task analysis methods are still incompletely specified and validated,5 such analyses should be restricted to situations in which a major investment of effort is appropriate. There are certain situations in which task analyses are likely to be productive even with these current limitations. These are areas in which rational task analysis has not been able to supply adequate direction to those who design training systems because of a specific lack of understanding of the job difficulties that arise from the nature and limitations of human cognitive function.

In commenting on the specific situations in which cognitive approaches can be useful, we address three related topics. First is the work environment as it has been impacted by the hardware of modern technology. Next, we consider the interface between the worker and the work environment and examine various cultural influences on that union. Third, we consider some of the questions that decision makers in military systems must confront as they make selection, training, promotion, and job design decisions for complex tasks.

In conceiving of the sciences of the artificial, Simon (1981) characterized human performance (and learning) as moving across environments of varying complexity in pursuit of particular goals. With this conception, intelligent performance can mean, among other things, simplifying (and thus mastering) one's environment. Military work environments have grown steadily in complexity in recent decades as weapon systems, maintenance equipment, and other hardware used in the business of national defense have proliferated.

Interacting with complex machines in one's job is now the rule for military workers; however, the nature of intelligent performance in those interactions is not well understood. As a result, measurement of worker performance is often misguided because of the vagueness surrounding what it means to be skilled in a complex technical domain. Formal institutional attempts at simplifying the new high-technology work environments consist mainly of thicker instructional manuals and technical documents, which suggests a belief that details about complex systems are important for competence building. However, when we carefully analyze skilled performers to learn how they actually do their work, what we find are not detailed memorial replicas of dense technical data but rather streamlined mental representations, or models, of the workings of the systems about which all the words are written. As skilled workers learn what their duties demand of them, they economically and selectively construct and refine their domain knowledge and procedural skill. That is one way they simplify their jobs. This suggests that the determinants of competence are not always revealed by the surface characteristics of either the worker's performance or the environment in which that performance takes place. Complex job environments require deeper cognitive analyses that can ferret out the conceptions and thinking that lurk behind observable behaviors.

5 These methods have yet to be validated. Although there is no reason to believe that existing cognitive task analysis methods will produce misleading results, there is some uncertainty in predicting the extent to which a particular method applied to a particular situation will produce an analysis that is a clear improvement over traditional methods.

For the military sector, understanding the influence of the machine on work and the dimensions of intelligent human performance in work settings is very important. Such understanding is crucial to decisions about

- the kind of intellectual talent needed for particular jobs (selection),
- the ways jobs and work environments could be constituted to optimize the use of available talent (classification),
- the instruction needed to build requisite skills (training),
- the basis for promotion,
- the optimal form for job performance aids and technical documentation, and
- the ways technical skills may be transferred across occupations (reclassification and retraining).

The complexity of the military workplace increases the difficulty of assessment to support these decisions. Targets of assessment are elusive in these settings.

Problem solving performance presents an interesting example. In the complex conditions we have been alluding to, problem solving cannot be fully programmed in advance because of the imprecise nature and generally ill-structured state of the problems (Simon, 1965). Even with the mounds of technical data that exist, it is impossible to prespecify every problem solving scenario. Thus the assessment dilemma, if one is to capture true problem solving skill, is to measure nonprogrammed decision making, that is, to capture the intuitive reasoning that characterizes this form of expertise.

Recent work of cognitive psychologists interested in expert problem solving has advanced our understanding of reasoning and envisioning processes. For example, Larkin et al. (1980) have shown the rich sets of schemata indexed by large numbers of patterns that underlie the quickness of mind and insightful views of expert intuitive problem solvers, those who can fill in a sketchy representation with just the right pieces of information. Performance measures directed at such networks of influential knowledge in military job experts would be quite informative as predictors of competent problem solving.

A complex work environment further complicates performance assessment because of the inherent variability in human information processing. In a complex environment where so much is to be apprehended, encoded, and represented in memory, individual differences in cognition assume considerable importance. The interactions between a worker's cognitive apparatus (including all-important prior knowledge) and the many features of the complex systems encountered in the workplace are considerable. They seem as resistant to prespecification as the problem solving scenarios just discussed.

Even when a group of people enters the workplace after an apparently uniform initial job training experience, each person brings his or her own set of conceptions about the domain just studied. If performance assessment is directed toward the measurement of individual skill for purposes of improving performance, i.e., if the goal is diagnosis to prescribe instruction, then individual differences in cognition are worth some attention. A cognitive analysis that examines complex human performance in depth may uncover uniformities as well as common misconceptions or bugs that affect the learning process. This can lead to better design and development of the kind of adaptive training that can significantly facilitate learning in complex domains.

There are sources of influence external to the workplace that interact with it to affect even further a worker's performance. These influences have implications for both cognitive analysis and performance assessment. First of all, there are the pressures of the military culture. The apprentice is pressed to learn the job quickly in order to become a contributing member of the work force as soon as possible. Typically, it is the cadre of apprentices who are relied on as the critical mass or core capability of a military operational unit. Simultaneously, however, the demand characteristics of a military unit can be quite severe, which is to say that putting planes in the air, for example, takes precedence over on-the-job training sessions. Learning the job quickly is thus frequently impeded because of the demand to get the work out at all costs. Opportunities to practice and refine skills are characteristically nonexistent, particularly for the worker of average skill or below.

Another potential influence on learning and job competence arises from the modern tactical philosophies currently favored by some of the Services. To disperse weapon systems and maintenance teams so that concentrated resources do not present inviting targets, actions are being considered to make weapon system maintenance occupations less specialized. By assigning broader responsibilities to a given class of worker, and so transforming technicians from specialists into generalists, fewer technicians would be required at field locations to perform maintenance functions. Such a policy would necessitate dramatic changes in instructional practice if broader domain knowledge and more flexible reasoning skills are to be realistic targets of training.

Already training practices have been weakened under the weight of complex subject matter and formidable workplace machines. An argument that has sometimes prevailed is that smarter machines mean reduced cognitive loads on workers and that consequently less training is required. Of course, machines capable of automating certain workplace tasks, i.e., the relatively easy portions of the jobs, do not in reality appreciably reduce the cognitive workload. Rather, what the machines do is take responsibility for the lower order or programmed tasks, reducing the apprentice to a passive observer who is called into action only when nonprogrammed problem solving is required. In other words, the apprentice loses opportunities to learn by doing some of the routine workplace tasks but is expected to somehow acquire the ability to solve problems either when the machine breaks down or when the problem is beyond the machine's capabilities.

The complex machines also pose logistics problems for the training community, which has had the formidable task of evaluating the increasingly complex workplaces of the military to determine instructional goals. Tough questions about the fidelity of the training place vis-à-vis the workplace have been vigorously debated. The training place typically has low priority for the expensive machines that populate the workplace; one unfortunate consequence is that during training, hands-on learning opportunities are often replaced by theoretical abstractions that cannot be tied to concrete experience.

All of this translates into the following kind of scenario for the typical apprentice: initial technical training is customarily patterned on an academic model of teaching complex subject matter. Students are told about a work domain instead of receiving practice in it. The academically-trained apprentice is met at the workplace by high expectations and by demand characteristics that simultaneously increase the pressure to learn and eliminate many learning opportunities. The implications for the interplay of performance assessment and cognitive analysis in this context of labored apprenticeship learning can be summarized in the following points:

- Inventive testing informed by cognitive analyses could conceivably begin to shift the emphasis in technical training away from academic models of learning facts and toward experiential models of learning procedures. Frederiksen (1984) has reported precedents in military training where changing the test meant instructional reform. Present assessment focuses on declarative knowledge for a reason familiar in psychology: what usually gets measured is what is easy to measure (e.g., the formula for Ohm's Law rather than facility in tracing signal flow). Results of cognitive analyses of procedural knowledge provide a rich basis for constructing items that do more than test recognition skill.
- Cognitive testing approaches are characterized by the methodology employed in creating test items and not necessarily by the form of those items. We believe that cognitive theory now poses important issues to be considered in evaluating a particular approach to testing. Specifically, the approach we favor is to identify the critical mental models, conceptual knowledge, and specific mental procedures involved in competent performance and then to ask whether a given test allows one to reliably assess the extent of those aspects of competence. This suggests that traditional paper-and-pencil formats may have to be supplemented by hands-on testing to be sure that procedural skills are well established, but it also suggests that even competent performance on the job may predict neither transfer capability nor the ability to work well with nonstandard problems or work conditions. Thus, cognitive approaches may require that direct demonstrations of competence on “fair” problems under safe and standard conditions be supplemented by computer-based testing that can simulate unsafe, expensive, and otherwise nonstandard problem solving contexts.
- Computer delivery of diagnostic items affords opportunities for testing environments to double as adaptive learning environments. Intelligent simulation environments are also feasible, in which work instruments can be represented for learner exploration, manipulation, and even simplification. This kind of microworld approach is an interesting way to cope with the absence of real systems in the training place. Likewise, the computer microworld can move to the workplace to provide a constant source of on-the-job training and practice experiences.
- Finally, the prospect of broadening a worker's technical purview presents the dual challenge of uncovering knowledge and skill components that cut across existing specialized occupations and of devising instruction that generates transfer. Both parts of the challenge entail performance assessment demands. Cognitive theory-based work following the expert-novice paradigm has amassed some evidence suggesting commonalities in expertise across domains such as physics, electricity, and radiology (e.g., deep versus surface structure in problem representation, knowledge in highly proceduralized form; Chi et al., 1981; Gentner and Gentner, 1983; Lesgold et al., 1988). Components of skill like these, which span multiple domains, are logical foci for instruction and assessment where movement across domains is of interest.

REFERENCES

Anderson, J.R. 1976 Language, Memory, and Thought. Hillsdale, N.J.: Lawrence Erlbaum Associates.

1982 Acquisition of cognitive skill. Psychological Review 89:369-406.

Card, S.K., T.P. Moran, and A. Newell 1983 The Psychology of Human-Computer Interaction. Hillsdale, N.J.: Lawrence Erlbaum Associates.

Chi, M.T.H., P. Feltovich, and R. Glaser 1981 Categorization and representation of physics problems by experts and novices. Cognitive Science 5:121-152.

Chi, M.T.H., R. Glaser, and E. Rees 1982 Expertise in problem solving. In R. Sternberg, ed., Advances in the Psychology of Human Intelligence, Vol. 1. Hillsdale, N.J.: Lawrence Erlbaum Associates.

Fitts, P.M. 1964 Perceptual-motor skill learning. In A.W. Melton, ed., Categories of Human Learning. New York: Academic Press.

Frederiksen, N. 1984 The real test bias: influences of testing on teaching and learning. American Psychologist 39(3):193-202.

Gentner, D., and D. Gentner 1983 Flowing waters or teeming crowds: mental models of electricity. In D. Gentner and A.L. Stevens, eds., Mental Models. Hillsdale, N.J.: Lawrence Erlbaum Associates.

Gitomer, D. 1984 A cognitive analysis of a complex troubleshooting task. Unpublished dissertation, University of Pittsburgh.

Kieras, D.E., and P.G. Polson 1982 An approach to the formal analysis of user complexity. Working Paper 2, Project on User Complexity of Devices and Systems, University of Arizona and University of Colorado.

Larkin, J.H., J. McDermott, D.P. Simon, and H.A. Simon 1980 Expert and novice performance in solving physics problems. Science 208:1335-1342.

Lesgold, A.M. 1984 Acquiring expertise. In J.R. Anderson and S.M. Kosslyn, eds., Tutorials in Learning and Memory: Essays in Honor of Gordon Bower. San Francisco: W.H. Freeman.

1986 Problem solving. In R.J. Sternberg and E.E. Smith, eds., The Psychology of Human Thought. Cambridge, Eng.: Cambridge University Press.

Lesgold, A.M., and C.A. Perfetti 1978 Interactive processes in reading comprehension. Discourse Processes 1:323-336.

Lesgold, A.M., L.B. Resnick, and K. Hammond 1985 Learning to read: a longitudinal study of word skill development in two curricula. Pages 107-138 in T.G. Waller and G.E. MacKinnon, eds., Reading Research: Advances in Theory and Practice, Vol. 4. New York: Academic Press.

Lesgold, A.M., H. Rubinson, P.J. Feltovich, R. Glaser, D. Klopfer, and Y. Wang 1988 Expertise in a complex skill: diagnosing X-ray pictures. In M.T.H. Chi, R. Glaser, and M. Farr, eds., The Nature of Expertise. Hillsdale, N.J.: Lawrence Erlbaum Associates.

Newell, A., and H.A. Simon 1972 Human Problem Solving. New York: Prentice-Hall.

Schneider, W. 1985 Training high-performance skills: fallacies and guidelines. Human Factors 27(3):285-300.

Simon, H.A. 1965 The Shape of Automation. New York: Harper and Row.

1981 The Sciences of the Artificial. Cambridge, Mass.: MIT Press.