Introduction to Part 1

Alfred V. Aho

The telecommunications/information infrastructure in the United States has been evolving steadily for more than a century. Today, sweeping changes are taking place in the underlying technology, in the structure of the industry, and in how people are using the new infrastructure to redefine how the nation's business is conducted.

The two keynote papers in this section of the report set the stage by outlining the salient features of the present infrastructure and examining the forces that have led to the technology, industry, and regulatory policies that we have today.

Robert Lucky discusses how the nation's communications infrastructure evolved technically to its current form. He outlines the major scientific and engineering developments in the evolution of the public switched telecommunications network and looks at the current technical and business forces shaping tomorrow's network. Of particular interest are Lucky's comments contrasting the legislative and regulatory policies that have guided the creation of today's telephone network with the rather chaotic policies of the popular and rapidly growing Internet.

Charles Firestone outlines the regulatory paradigms that have molded the current information infrastructure and goes on to suggest why these paradigms might be inadequate for tomorrow's infrastructure. He looks at the current infrastructure from three perspectives (the production, electronic distribution, and reception of information) and proposes broad goals based on democratic values for the regulatory policies of the different segments of the new information infrastructure.

The four papers that follow examine the use of the telecommunications infrastructure in several key application areas, identify major obstacles to the fullest use of the emerging infrastructure, and discuss how the infrastructure and concomitant regulatory policies need to evolve to maximize the benefits to the nation.

Colin Crook discusses how the banking and financial services industries rely on the telecommunications infrastructure to serve their customers on a global basis. He notes that immense sums of money are moved electronically on a daily basis around the world and that the banking industry cannot survive without a reliable worldwide communications network. He underscores the importance of an advanced public telecommunications/information infrastructure to the nation's continued economic growth and global competitiveness.

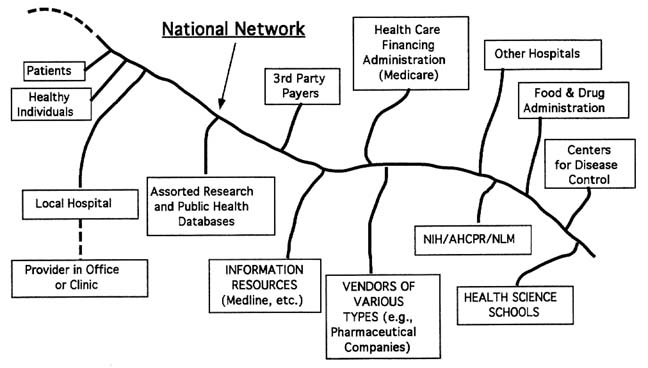

Edward Shortliffe notes that the use of computers and communications is not as advanced in health care as in the banking industry. He gives examples of how the use of information technology can both enhance the quality of health care and reduce waste. He stresses the importance of demonstration projects to help prove the cost-effectiveness and benefits of the new technology to the health care industry.

Robert Pearlman notes that, at present, K-12 education lacks an effective information infrastructure at all levels—national, state, school district, and school site. He presents numerous examples of how new learning activities and educational services on an information superhighway have the potential for improving education. He outlines the major barriers that need to be overcome to create a suitable information infrastructure for effective K-12 schooling in the 21st century.

In the final paper, Clifford Lynch looks at the future role of libraries in providing access to information resources via a national information infrastructure. He examines the benefits of, and barriers to, universal access to electronic information. He notes that a ubiquitous information infrastructure will cause major changes in the entire publishing industry and that intellectual property rights to information remain a major unresolved issue.

The Evolution of the Telecommunications Infrastructure

Robert L. Lucky

I have been asked to talk about the telecommunications infrastructure—how we got here, where we are, and where we are going. I don't think I am going to talk quite so much about where we are going but rather about the problems. I will discuss what stops us from going further, and then I will make some observations about the future networking environment.

First, I will review the history of how we got where we are in the digitization of telecommunications today, and then I would like to address two separate issues that focus on the problems and the opportunities in the infrastructure today. The first issue is the bottleneck in local loop access, which is where I think the challenge really is. Then I will discuss two forces that together are changing the paradigm for communications, producing what I think of as the "packetizing" of communications. The two forces that are doing this are asynchronous transfer mode (ATM) in the telecommunications community and the Internet from the computer community. The Internet will be the focus of many of the subsequent talks in this session, since it seems to be the building block for the national information infrastructure (NII).

HOW THE TELECOMMUNICATIONS NETWORK BECAME DIGITAL

It is not as if someone decided that there should be an NII, and it has taken 100 years to build it. The history of the NII is quite a tangled story.

First, there was the Big Bang that created the universe, and then Bell invented the telephone. If you read Bell's patent, it actually says that you will use a voltage proportional to the air pressure in speech. Speech is, after all, analog, so it makes a great deal of sense that you need an analog signal to carry it. In the end Bell's patent is all about analog. Since that invention, it has taken us over 100 years to get away from the idea of analog.

A long time went by and the country became wired. In 1939, Reeves of ITT invented pulse code modulation (PCM), but it was 22 years before it became a part of the telephone plant, because nobody understood why it was a good thing to do. Even in the early 1960s a lot of people did not understand why digitizing something that was inherently analog was a good idea. No one had thought of an information infrastructure. Computer communications was not a big deal. The world was run by voice. But since then the telephone network has been digitized. As it happened, this was accomplished for the purposes of voice, not for computer communication.

So in 1960 we had analog voice and it fit in a 4-kilohertz channel, and we stacked about 12 of these together like AM radio and sent them over an open wire. That was the way communication was done. But if you take this 4-kilohertz voice channel and digitize it, it is 10 times bigger in bandwidth. Why do we do that? That seems like a really dumb idea. But you gain something, and that is the renewability of the digital form. When the signal is analog and accumulates distortion and noise, it is like Humpty Dumpty: you can't put it back together again. You suffer these degradations quietly.

The digits can always be reconstructed, and so in exchange for widening the bandwidth, you get the ability to renew it, to regenerate it, so that you do not have to go 1,000 miles while trying to keep the distortion and noise manageable. You only have to go about 1 mile, and then you can regenerate it—clean it up and start afresh. So that was the whole concept of PCM, this periodic regeneration. It takes a lot more bandwidth, but now you can stack a lot more voice signals, because you don't have to go very far.
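The trade described above, extra bandwidth in exchange for regeneration, can be illustrated with a toy simulation. It is a sketch under assumed conditions (Gaussian noise of a made-up size added at each of 1,000 repeater spans, and a 0/1 pulse with a 0.5 decision threshold), not a model of any real carrier system: the analog sample drifts further with every span, while each digital regenerator re-decides the bit and starts afresh.

```python
import random

def send_analog(sample: float, spans: int, noise_std: float, rng: random.Random) -> float:
    """Analog repeaters amplify signal and noise alike, so noise accumulates span by span."""
    value = sample
    for _ in range(spans):
        value += rng.gauss(0.0, noise_std)
    return value

def send_digital(bit: int, spans: int, noise_std: float, rng: random.Random) -> int:
    """Each regenerator decides 0 or 1 and retransmits a clean pulse."""
    level = float(bit)
    for _ in range(spans):
        received = level + rng.gauss(0.0, noise_std)
        level = 1.0 if received > 0.5 else 0.0  # regenerate: threshold decision
    return int(level)

if __name__ == "__main__":
    rng = random.Random(1)
    spans, noise_std = 1000, 0.05  # assumed values, for illustration only
    analog_err = sum(abs(send_analog(0.7, spans, noise_std, rng) - 0.7) for _ in range(200)) / 200
    bits = [rng.randint(0, 1) for _ in range(200)]
    bit_errors = sum(send_digital(b, spans, noise_std, rng) != b for b in bits)
    print(f"mean analog error after {spans} spans: {analog_err:.2f}")
    print(f"digital bit errors after {spans} spans: {bit_errors} of {len(bits)}")
```

With these assumed numbers, the analog error grows roughly with the square root of the number of spans, while the regenerated bits come through intact because no single span's noise is large enough to cross the threshold.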

The reason that the network is transformed is not necessarily to make it more capable but to make it cheaper. PCM made transmission less expensive, since 24 voice channels could be carried, whereas in the previous analog systems only 6 voice channels could be carried. So digital carriers went into metropolitan areas starting in the early 1960s.
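The channel rates behind this paragraph, and the 64-kilobit channel and 1.5-megabit T-1 rate that come up later in the talk, follow from the standard North American PCM parameters: 8,000 samples per second (twice the 4-kilohertz voice band), 8 bits per sample, and 24 channels per frame plus one framing bit. These are the standard T1 values rather than figures stated in the talk; the sketch just multiplies them out.

```python
SAMPLE_RATE_HZ = 8_000      # at least twice the ~4 kHz voice band (Nyquist)
BITS_PER_SAMPLE = 8
CHANNELS_PER_T1 = 24
FRAMING_BITS_PER_FRAME = 1  # one framing bit per 24-channel frame

def pcm_channel_rate() -> int:
    """Bit rate of one digitized voice channel, in bits per second."""
    return SAMPLE_RATE_HZ * BITS_PER_SAMPLE

def t1_line_rate() -> int:
    """Bit rate of a 24-channel T1 carrier, in bits per second."""
    bits_per_frame = CHANNELS_PER_T1 * BITS_PER_SAMPLE + FRAMING_BITS_PER_FRAME
    return bits_per_frame * SAMPLE_RATE_HZ  # one frame per sampling interval

print(pcm_channel_rate())  # 64000
print(t1_line_rate())      # 1544000
```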

At that time we had digital carriers starting to link the analog switches in the bowels of the network. More and more the situation was that digits were coming into switches designed to switch analog signals. It was necessary to change the digits to analog in order to switch them. Engineers were skeptical. Why not just reshuffle the bits around to switch them?

THE ADVENT OF DIGITAL SWITCHING

So the first digital switches were designed. The electronic switching system (ESS) number four went out into the center of the network where there were lots of bits and all you had to do was time shifting to effect switching. That seemed natural because all the inputs were digital anyway. It was some time before we got around to the idea that maybe the local switches could be digital, too, because the problem in the local switches is that, unlike the tandem switches, they have essentially all of their input signals in analog form.
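Switching by "time shifting" is usually called time-slot interchange: each incoming frame of digitized voice samples is written into memory and read back out in a different slot order, so a sample that arrived in one caller's slot leaves in another's. The sketch shows only that principle on a single frame with hypothetical slot assignments; it is not a description of any real switch's design.

```python
from typing import Sequence

def time_slot_interchange(frame: Sequence[int], connection_map: dict[int, int]) -> list[int]:
    """Switch one TDM frame by reordering its time slots.

    connection_map[out_slot] = in_slot: the sample that arrived in in_slot
    is written to memory, then read out during out_slot.
    """
    memory = list(frame)                 # write phase: store samples in arrival order
    out = [0] * len(frame)               # unconnected slots carry 0 in this sketch
    for out_slot, in_slot in connection_map.items():
        out[out_slot] = memory[in_slot]  # read phase: the reordering is the switching
    return out

frame = [11, 22, 33, 44]  # one 8-bit sample per caller in slots 0-3
# Hypothetical calls: slot 0 <-> slot 2 and slot 1 <-> slot 3.
print(time_slot_interchange(frame, {0: 2, 2: 0, 1: 3, 3: 1}))  # [33, 44, 11, 22]
```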

I was personally working on digital switching in 1976. I did not start that work, but I was put in charge of it at that time. I remember a meeting in 1977 with the vice president in charge of switching development at AT&T. It was a very memorable meeting: we were going to sell him the idea that the next local switch should be digital.

We demonstrated a research prototype of a digital local switch. We tried to explain why the next switch in development should be digital like ours, but we failed. They said to us that anything we could do with digits, they could do with analog and it would be cheaper, and they were right on both scores. Where we all went wrong was in failing to see that it was going to become cheaper to do it digitally; with more foresight we would have seen that intelligence and processing would keep getting cheaper. But even though we all knew intellectually that transistor costs were steadily shrinking, we failed to realize the impact that this would have on our products. Only a few short years later those digital local switches did everything the analog switches did, and they did more, and they did it more cheaply.

The engineers who developed the analog switches pointed to their little mechanical relays. They made those by the millions for pennies apiece, and those relays were able to switch an entire analog channel. Why would anyone change the signals to digits? So the development of analog switching went ahead at that time, but what happened quickly was the advent of competition enabled by the new digital technology. There was a window of opportunity where the competition could come in and build digital switches, so AT&T was soon forced to build a digital switch of its own, even though it had a big factory that made those nice little mechanical relays.

The next major event was that fiber came along in 1981. Optical fiber was inherently digital, in the sense that you really could not at that time send analog signals over optical fiber without significant distortion. Cable TV is doing it now, but in 1980 we did not know how to send analog signals without getting cross-talk between channels. So from the start optical fiber was considered digital, and we began putting in fiber systems because they were cheaper. You could go further without regenerating signals than was possible with other transmission media, so even though the huge capacity of optical systems was not needed at first, it went in because it was a cheaper system. It went in quickly beginning in 1981, and in the late 1980s AT&T wrote off its entire analog plant. The network was declared to be digital.

This was incredible because in 1984—at divestiture—we at AT&T believed that nobody could challenge us. It had taken us 100 years to build the telephone plant the way it was. Who could duplicate that? But what happened was that in the next few years AT&T built a whole new network, and so did at least two other companies! And, in fact, just a few years ago, you would not have guessed that the company with the third most fiber in the country was a gas company—Wiltel, or the Williams Gas Company. So everybody could build a network. All of a sudden it was cheap to build a long-distance network and a digital one, inherently digital because of the fiber.

In the area of digital networking we have been working for many years on integrated services digital network (ISDN). People in the field trade stories about when they went to their first ISDN meeting. I once told a colleague, "Well, I went to one 25 years ago," and he said, "27," so he had me. ISDN is one of those things that still may happen, but in the meantime another revolution is coming along beyond the basic digitization of the network: the packetizing of communication through ATM and the Internet.

Internet began growing in the 1970s, and now we think of it as exploding. Between Internet and ATM something is happening out there that is doing away with our fundamental concept for wired communications. First we did away with analog, and then we had streams of bits, but now we are doing away with the idea of a connection itself. Instead of a circuit with a continuous channel connecting sender and receiver, we have packets floating around disjointedly in the network, shuttling between switching nodes as they seek their separate destinations. This packetization transforms the notion of communication in ways that I don't think we have really come to grips with yet.

Where we stand in 1993 is this. All interexchange transmission is digital and optical. So is undersea transmission, which is currently the strongest traffic growth area: between nations we have increasing digital capability and much cheaper prices. The majority of the switches in the network are now digital, including both the local and the tandem switches. But there is a very important point here. In these digital switches a voice channel is equated with a 64-kilobit-per-second stream. There is no infinite reservoir for multimedia switching and high-bandwidth applications, because in these switches the channel is 64 kilobits per second. They are not broadband switches in terms of either capacity or flexibility.

Another important conceptual revision that has occurred during the digital revolution involves network intelligence. The ESS number five, AT&T's local switch, has a 10-million-instructions-per-second (MIPS) processor as its central intelligence. That was a big processor at the time the switch was designed, but now the switch finds itself connected to 100-MIPS processors on many of its input lines. The bulk of intelligence has migrated to the periphery, and the balance of the intelligence has been seeping out of the network. I always think of Ross Perot's giant sucking noise or, as George Gilder wrote, the network as a centrifuge for intelligence. This has been a direct outgrowth of the personal computer (PC) revolution.

THE BOTTLENECK: LOCAL LOOP ACCESS

Let us turn now to loop access, where I have said that the bottleneck exists. Loop access is still analog, and it is expensive. The access network represents about 80 percent of the total investment in a network. That is where the crunch is, and it is also the hardest part to change. There is a huge economic flywheel to overcome in changing access, whereas the backbone, as we have seen, can be redone in a matter of a few years at moderate expense.

There are many things happening in the loop today, but I see no silver bullet here. People always say there ought to be some invention that is going to make it cheap to get from the home into the network, but the problem is that in the loop there is no sharing of cost among subscribers. In the end you are on your own, and there is no magic invention that makes this individual access cheap.

Today everybody is trying to bring broadband access into the home, and everybody is motivated to do this. The telephone companies want a new source of growth revenue, because their present business is not considered a good one for the future. The growth rate is very small and it is regulated, and so they see the opportunity in broadband services, in video services, and in information services, and they are naturally attracted to these potential businesses. On the other hand, the cable television companies are coming from a place where they want to get into information services and into telephony services. So from the perspective of the telephone companies, not only is the conventional telephone business seen as unattractive, but there is also competition coming into it, which makes it even less attractive.

There are many alternatives for putting broadband service into the home. There are many different architectures and a number of possible media. Broadband can be carried into the home by optical fiber, by coaxial cable, by wireless, and even by the copper wire pairs that are currently used for voice telephony.

The possibilities for putting fiber into homes are really a matter of economics. The different architectural configurations differ mainly in what parts of the distribution network are shared by how many people. There is fiber to the home, fiber to the curb, fiber to the pedestal, and fiber to the whatever! The fact is that when we do economic studies of all these, they don't seem to be all that different. It costs about $1,100 to put a plain old telephone service (POTS) line into a home and about $500 more to add broadband access to that POTS line. You can study the component costs of all these different architectures, but it just does not seem to make a lot of difference. It is going to be expensive on some scale to wire the country with optical fiber.
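Why the architectures come out so similar can be seen from a simple cost-sharing formula, offered here as an illustrative assumption rather than the method behind the studies mentioned: per-home cost is the shared plant divided among the homes it serves, plus the dedicated plant each home needs by itself. As a shared segment serves more homes, its share per home shrinks toward zero and the dedicated portion dominates, which is the point made earlier that in the loop there is little sharing of cost. The dollar figures in the sketch are hypothetical.

```python
def cost_per_home(shared_cost: float, homes_sharing: int, dedicated_cost: float) -> float:
    """Per-home cost: shared plant split evenly, plus the home's own dedicated plant."""
    if homes_sharing < 1:
        raise ValueError("homes_sharing must be at least 1")
    return shared_cost / homes_sharing + dedicated_cost

# Hypothetical dollar figures, chosen only to show the shape of the curve.
SHARED_SEGMENT = 20_000.0  # e.g., a fiber feeder and remote terminal
DEDICATED_DROP = 900.0     # wiring and electronics serving one home alone

for homes in (4, 16, 64, 256):
    print(homes, round(cost_per_home(SHARED_SEGMENT, homes, DEDICATED_DROP), 2))
```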

The current estimate is that it would be on the order of $25 billion to wire the United States. This is incremental spending over the next 15 years to add optical fiber broadband. Moreover, we are probably going to do that not only once, but twice, or maybe even three times. I picture standing on the roof of my house and watching them all come at me. Now we hear that even the electric power utilities are thinking of wiring the country with fiber. It is a curious thing that we are going to pay for this several times, but it is called competition, and the belief is that competition will serve the consumer better than would a single regulated utility.

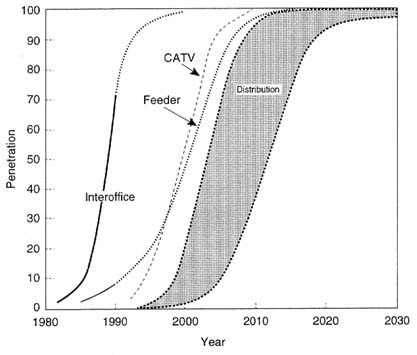

Because the investment in fiber is so large, it will take years to wire the country. Figure 1 shows the penetration of fiber in offices and the feeder and distribution plants by year, and the corresponding penetration of copper. You can see the kind of curves you get here and the range of possible times that people foresee for getting fiber out to the home. If you look at about a 50 percent penetration rate, it is somewhere between, say, 2001 and 2010. This longish interval seems inconsistent with what we would like to have for the information revolution, but that is what is happening right now with the economic cycle running its normal course.

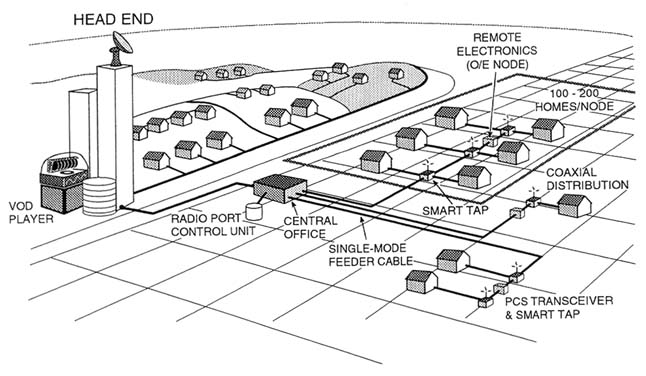

The telephone company is not the only one that wants to bring fiber to your home. The cable people have the same idea, and Figure 2 shows the kind of architecture they have. They have a head end with a satellite dish and a video-on-demand player, or more generally a multimedia server. They have fiber in the feeder portion of their plant, and they have plans for personal communication system (PCS) ports to collect wireless signals. They have their own broadband architecture that will be in place, and, of course, we see all the business alliances that are going on between these companies right now. We read about these events in the paper every morning.

Everybody thinks that the money will be in the provision of content and that the actual distribution will be a commodity that will be relatively uninteresting.

If you look at the cable companies versus the telephone companies, cable has the advantage of lower labor costs and simpler service and operations. Their plant is a great deal simpler than that of the telephone companies. They have wider bandwidth with their current system and lower upgrade costs. They are also used to throwing away their plant and renewing it periodically. Disadvantages of cable franchises have more to do with financial situations, spending, and capital availability.

THE PACKETIZING OF COMMUNICATIONS: ATM

Asynchronous transfer mode (ATM) is a standard for the packetizing of communications, in which all information is carried in fixed 53-byte cells, each consisting of a 48-byte payload and a 5-byte header. This fixed cell size is a compromise: it is too small for file transfers and too big for voice. It is almost miraculous that everyone has agreed to do it this way, and if everybody does, we can build an inexpensive broadband infrastructure from these little one-size-fits-all cells. The approach seems to be taking hold, and both the computer and communications industries are avidly designing and building the new packet infrastructure.
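The cell arithmetic can be checked directly. This sketch segments a byte stream into 53-byte cells and computes two numbers behind the compromise described above: the header overhead (5 of every 53 bytes, about 9.4 percent) and the time a 64-kilobit-per-second voice source needs to fill one 48-byte payload (6 milliseconds). The 5-byte header here is a placeholder of the correct length only; a real ATM header encodes VPI/VCI, payload type, cell-loss priority, and a header checksum, none of which is modeled.

```python
CELL_PAYLOAD = 48  # bytes of user data per ATM cell
CELL_HEADER = 5    # bytes of header per cell

def segment(data: bytes, header: bytes) -> list[bytes]:
    """Split data into 53-byte cells, zero-padding the last payload.

    The header is a placeholder of the right length (an assumption of this
    sketch); real header fields are not modeled.
    """
    if len(header) != CELL_HEADER:
        raise ValueError("header must be 5 bytes")
    cells = []
    for i in range(0, len(data), CELL_PAYLOAD):
        payload = data[i:i + CELL_PAYLOAD].ljust(CELL_PAYLOAD, b"\x00")
        cells.append(header + payload)
    return cells

def voice_fill_delay_ms(bit_rate_bps: int = 64_000) -> float:
    """Milliseconds needed to collect one cell payload from a constant-rate voice stream."""
    return CELL_PAYLOAD * 8 * 1000 / bit_rate_bps

cells = segment(b"x" * 100, header=bytes(5))
print(len(cells), len(cells[0]))  # 3 53
print(f"{CELL_HEADER / (CELL_HEADER + CELL_PAYLOAD):.1%} header overhead")
print(voice_fill_delay_ms(), "ms to fill one cell with 64 kb/s voice")
```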

ATM has an ingenious concept called the virtual channel that predesignates the flow for a particular logical stream of packets. You can make a connection and say that all the following cells on this channel have to take this particular path, so that you can send continuous signals like speech and video. ATM also integrates multimedia and is not dependent on medium and speed.
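The virtual channel idea amounts to a table lookup set up once per connection. In the toy switch below (hypothetical class names, ports, and channel numbers), the setup step records which output port and which outgoing channel number a given incoming channel maps to; after that, every cell carrying that channel number is forwarded the same way, with its label rewritten hop by hop. Real ATM headers carry both a virtual path identifier (VPI) and a virtual channel identifier (VCI); only the VCI is modeled here.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Cell:
    vci: int        # virtual channel identifier carried in the header
    payload: bytes

class AtmSwitch:
    """Toy switch: a connection table set up once, then per-cell lookups."""

    def __init__(self) -> None:
        self.table: dict[tuple[int, int], tuple[int, int]] = {}

    def set_up(self, in_port: int, in_vci: int, out_port: int, out_vci: int) -> None:
        # Done once, at connection setup; every later cell on this channel follows it.
        self.table[(in_port, in_vci)] = (out_port, out_vci)

    def forward(self, in_port: int, cell: Cell) -> tuple[int, Cell]:
        out_port, out_vci = self.table[(in_port, cell.vci)]  # KeyError if no such channel
        return out_port, Cell(out_vci, cell.payload)         # label rewritten for the next hop

sw = AtmSwitch()
sw.set_up(in_port=1, in_vci=42, out_port=3, out_vci=7)  # hypothetical values
for chunk in (b"voice-1", b"voice-2"):
    print(sw.forward(1, Cell(42, chunk)))
```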

Since ATM local area networks (LANs) are wired in tree-like "stars" with centralized switching, their capacity scales with bandwidth and user population, unlike the bus-structured LANs that we have today. Moreover, ATM integrates multimedia, is an international standard, and offers an open-ended growth path in the sense that you can upgrade the speed of the system while conforming to the standard. I think it is a beautiful idea, as an integrating influence and as a transforming influence in telecommunications.
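A back-of-the-envelope comparison shows what scaling with user population means. On a shared bus, every added station divides one medium; in a switched star, each station has its own link to the switch. The sketch assumes an idealized nonblocking switch and traffic spread over distinct destinations, and the rates (10 Mb/s for the bus, 155 Mb/s per ATM port) are illustrative choices rather than figures from the talk.

```python
def per_station_share(rate_mbps: float, stations: int, switched: bool) -> float:
    """Best-case throughput per station when every station is busy at once.

    Bus: one medium shared by all stations. Switched star: each station has a
    dedicated link into an (idealized) nonblocking switch, and traffic is
    assumed to go to distinct destinations.
    """
    if stations < 1:
        raise ValueError("stations must be at least 1")
    return rate_mbps if switched else rate_mbps / stations

for n in (10, 100):
    bus = per_station_share(10.0, n, switched=False)   # illustrative 10 Mb/s bus
    star = per_station_share(155.0, n, switched=True)  # illustrative 155 Mb/s ATM ports
    print(f"{n} stations: bus {bus:.2f} Mb/s each, ATM star {star:.0f} Mb/s each")
```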

THE NEW INFRASTRUCTURE

Now let us turn to the other force—Internet. The Internet will be the focus of a lot of our discussions, because when people talk about the NII, the consensus is growing that the Internet is a model of what that infrastructure might be. The Internet is doubling in size every year. But whether it can continue to grow, of course, is an issue that remains to be discussed, and there is much that can be debated about that.

To describe the Internet, let me personalize my own connection. I am on a Bellcore corporate LAN, and my Internet address is rlucky@bellcore.com. At Bellcore we have a T-1 connection, 1.5 megabits per second, into a midlevel company, JVNC-net at Princeton. Typically, these midlevels are small companies, making little profit. They might work out of a computer center at a university and then graduate to an independent location, but basically it is that kind of affair. They start with a group of graduate students, buy some routers, and connect to the national backbone that has been subsidized by the National Science Foundation.

THE USER VIEW OF INTERNET ECONOMICS

As for the user view of the Internet, here is what I see. I pay about $35,000 for routers and for the support of them in my computing environment at Bellcore. But people say that we have to have this computer environment anyway, so let's not count that against Internet. That is an interesting issue actually. I don't think we should get hung up on any government subsidies relating to Internet operations, because if we removed all the subsidies it really would not change my cost for the Internet all that much.

All we have to do is take the LANs we have right now, the LAN network that interconnects them locally, and add a router (actually a couple of routers) and then lease a T-1 line for $7,000 a year from New Jersey Bell. That puts us into JVNC-net. We pay $37,000 a year to JVNC-net for access to the national backbone network. For this amount of expenditure about 3,000 people have access to Internet at our company—all they can use, all they want.
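The figures quoted in this and the previous paragraph make it easy to check how cheap the service is per user. The sketch divides the annual costs by the roughly 3,000 users. One assumption is flagged in the code: the talk does not say whether the $35,000 for routers and support is an annual figure, so it is treated as annual here, and it is shown separately because the talk argues it should not be counted against the Internet.

```python
# Costs quoted in the talk, in dollars per year.
T1_LEASE = 7_000              # leased T-1 line to JVNC-net
BACKBONE_ACCESS = 37_000      # JVNC-net fee for access to the national backbone
ROUTERS_AND_SUPPORT = 35_000  # ASSUMPTION: treated as annual; the talk does not say
USERS = 3_000                 # approximate number of people with access

def cost_per_user(*annual_costs: float, users: int) -> float:
    """Total annual cost divided evenly among users."""
    if users < 1:
        raise ValueError("users must be at least 1")
    return sum(annual_costs) / users

print(f"line and access only: ${cost_per_user(T1_LEASE, BACKBONE_ACCESS, users=USERS):.2f} per user per year")
print(f"including routers:    ${cost_per_user(T1_LEASE, BACKBONE_ACCESS, ROUTERS_AND_SUPPORT, users=USERS):.2f} per user per year")
```

This works out to about $14.67 per user per year for the line and backbone access, or about $26.33 with routers and support included.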

A friend recently gave me a model for Internet pricing based on pricing at restaurants. One model is the a la carte model; that is, you pay for every dish you get. The second model is the all-you-can-eat model, where you pay a fixed price and eat all you want. But there is a third model that seems more applicable to Internet. If you ask your kids about restaurant prices, they will say that it does not matter—parents pay. Perhaps this is more like Internet! The pricing to the user of Internet presents a baffling dilemma that I cannot untangle. It looks like it is almost free.

Internet is a new kind of model for communications, new in the sense that while obviously it has been around for a while, it is very different from the telecommunications infrastructure. If I look at it, what I see inside Internet is basically nothing! All the complexity has been pushed to the outside of the network. The traditional telecommunications approach has big switches, lots of people, equipment, and software, taking care of the inside of the network. In the Internet model, you do not go through the big switches—it just has routers. Cisco, Wellfleet, and others have prospered with this new business. Typically, a router might cost about $50,000. This is very different from the cost of a large telecommunications switch, although they are not truly comparable in function.

With a central network chiefly provisioned by routers, all the complexities push to the outside. Support people in local areas provide such services as address resolution and directory maintenance at what we will consider to be the periphery of the network. The network is just a fast packet-routing fabric that switches you to whatever you need.
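What a router in such a fabric does for each packet can be sketched as a table lookup on the destination address alone, with no per-connection state. The sketch uses the longest-prefix-match rule of present-day IP forwarding (a simplification, not a description of what 1993 routers did), Python's standard `ipaddress` module, and a made-up forwarding table built from documentation address ranges.

```python
import ipaddress

# Hypothetical forwarding table: prefix -> next hop.
ROUTES = {
    ipaddress.ip_network("0.0.0.0/0"): "upstream",  # default route
    ipaddress.ip_network("192.0.2.0/24"): "lan-a",
    ipaddress.ip_network("192.0.2.128/25"): "lan-b",
    ipaddress.ip_network("198.51.100.0/24"): "regional",
}

def next_hop(destination: str) -> str:
    """Pick the most specific prefix that contains the destination address."""
    addr = ipaddress.ip_address(destination)
    matches = [net for net in ROUTES if addr in net]  # default route always matches
    best = max(matches, key=lambda net: net.prefixlen)
    return ROUTES[best]

for dst in ("192.0.2.10", "192.0.2.200", "198.51.100.7", "203.0.113.5"):
    print(dst, "->", next_hop(dst))
```

Each packet is looked up independently, which is the contrast with the ATM virtual channel: nothing in the router remembers that earlier packets belonged to the same conversation.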

THE CONTRAST IN PHILOSOPHY BETWEEN THE INTERNET AND TELECOMMUNICATIONS

The contrasting approaches and philosophies are as follows. The telecommunications network was designed to be interoperable for voice. The basic unit is a voice channel. It is interoperable anywhere in the world. You can connect any voice channel with any other voice channel anywhere. Intelligence is in the network, and the provisioning of the network is constrained by regulatory agencies and policies.

Internet, by contrast, is interoperable for data. The basic unit is the Internet protocol (IP) packet. That is in its own way analogous to the voice channel; it is the meeting place. If you want to exchange messages on Internet, you meet at the IP level. In Internet the intelligence is at the periphery, and there have not yet been any significant regulatory constraints that have governed the building and operation of the network.

Pricing is a very important issue right now in Internet. The telecommunications approach to pricing is usage based, with distance, time, and bandwidth parameters. Additionally, there are settlements between companies when a signal traverses several different domains. The argument that the telecommunications people use is that pricing regulates fairness of use. They feel that without some usage-based pricing, people will not use the network services fairly.

In Internet the policy has been to have a fixed connection charge, which depends on pipe size and almost nothing else. There are no settlements between companies, and there is no usage-based charge. The Internet fanatics would say that billing costs more than it is worth and that people use the network fairly anyway. That has worked so far, but it remains to be seen, as the Internet grows up, and especially as multimedia traffic is carried on it, whether that simple pricing philosophy will continue to function properly.
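The two pricing philosophies can be put side by side as two billing functions. The rate per minute and distance factor are hypothetical; the $37,000 flat annual fee is the JVNC-net access charge quoted earlier. The point of the sketch is only structural: the usage-based bill grows with use, and the flat bill does not. Settlements between carriers are not modeled.

```python
def usage_based_bill(minutes: float, rate_per_minute: float, distance_factor: float = 1.0) -> float:
    """Telephone-style tariff: the bill grows with holding time and distance."""
    return minutes * rate_per_minute * distance_factor

def flat_rate_bill(annual_fee_for_pipe: float, minutes: float) -> float:
    """Internet-style tariff: a fixed fee set by pipe size; minutes are deliberately ignored."""
    return annual_fee_for_pipe

# Hypothetical usage rate and distance factor; 37,000 is the annual access fee quoted in the talk.
for minutes in (10_000, 100_000, 1_000_000):
    print(minutes,
          usage_based_bill(minutes, rate_per_minute=0.10, distance_factor=1.5),
          flat_rate_bill(37_000, minutes))
```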

Before I conclude I would like to introduce an issue that bothers me as a member of the telecommunications community. There was an article in the Wall Street Journal that said people are now bypassing the telecommunications network by sending faxes over the Internet. Here is the situation: if you send a fax over the Internet, it really appears to be free. You go to the telephone on your desk, and if you send the same fax, it costs you a couple of dollars. Why is this? This paradox sums up the idea that Internet really is almost a free good. Is the difference in price for the fax because Internet has found a cheaper way to build a telecommunications network? Or is it because Internet is a glitch on the regulatory system, that it is cream skimming and that it evades all the regulation and all the shared costs of the network? I can tell you honestly that I don't understand this question. I have had economists and engineers digging down, and I keep trying to think there will be bedrock down there somewhere. I cannot find it. The problem is that any time an engineer like me tries to understand pricing, I have the idea that it ought to have something to do with cost.

Unfortunately, that is not really the case. The biggest single difference we were able to find between the cost of a fax on Internet and on the telephone network was that in the cost of the business telephone there is a large subsidy for residential service built into the tariff. In traditional telecommunications the relationship between the cost of providing a service and the pricing for that service is sometimes seemingly arbitrary. Furthermore, the actual cost of provision may involve a number of rather arbitrary assumptions and in any case is only weakly dependent on technology. At base, telecommunications is a service business.

The arresting fact about Internet is its growth rate of 100 percent a year. There are approximately 2 million host computers connected to it, and about 51 percent of its usage is commercial. Moreover, it violates the rules of life: someone must be in charge, someone must pay. Very troublesome. Yet it is a worldwide testbed for distributed information services, and it is forming the prototype information infrastructure of our time.
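A growth rate of 100 percent a year means the host count doubles annually. The sketch simply compounds the figure of about 2 million hosts forward from 1993; it is an extrapolation to show how fast doubling compounds, not a forecast, and the talk itself leaves open whether such growth can continue.

```python
def projected_hosts(initial: int, years: int, annual_growth: float = 1.0) -> int:
    """Compound growth: an annual_growth of 1.0 (100 percent) doubles the count each year."""
    return round(initial * (1 + annual_growth) ** years)

for years in range(6):
    print(1993 + years, projected_hosts(2_000_000, years))
```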

MULTIMEDIA AND THE FUTURE OF THE INTERNET

As we look to how the Internet might have to evolve in the near future, we see that the IP protocol that has grown up with Internet is going to have to change in some small but fundamental way. As it stands now, the basic unit is the connectionless data packet, and that does not really support video and voice on Internet, even though, as we all know, those services are being sent experimentally on Internet right now. However, they only work when there is very little traffic on the network. As traffic grows, voice and video cannot really be sent on Internet, because the network presently uses statistical multiplexing of packets, so the packets do not arrive regularly spaced at the edges of the network. So the future IP protocol has to have some kind of flow mechanism put into it to assure regularity of arrival of certain packets. Furthermore, the new protocol must support more addresses than are presently possible. But these problems are fixable. Security is also often cited as a problem, but that too can be fixed.
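The jitter problem can be reproduced in a few lines. The sketch models a single first-come, first-served link shared by periodic voice packets and hypothetical bursts of long data packets (times in milliseconds, all sizes made up). Voice packets that leave the sender every 20 ms come out of the link evenly spaced only when there is no competing traffic. It illustrates the problem only; it does not model the flow mechanism the talk calls for.

```python
def fifo_departures(packets: list[tuple[float, float, str]]) -> list[tuple[float, str]]:
    """Serve packets first come, first served on a single link.

    Each packet is (arrival_ms, transmit_ms, kind); returns (departure_ms, kind).
    """
    link_free_at = 0.0
    departures = []
    for arrival, transmit, kind in sorted(packets):
        start = max(arrival, link_free_at)  # queue behind whatever is on the link
        link_free_at = start + transmit
        departures.append((link_free_at, kind))
    return departures

def voice_gaps(packets: list[tuple[float, float, str]]) -> list[float]:
    """Spacing between successive voice packets as they leave the link."""
    times = [t for t, kind in fifo_departures(packets) if kind == "voice"]
    return [round(later - earlier, 3) for earlier, later in zip(times, times[1:])]

# Voice: one short packet every 20 ms. Data: hypothetical bursts of long packets.
voice = [(20.0 * i, 1.0, "voice") for i in range(5)]
data = [(19.5, 15.0, "data"), (20.0, 10.0, "data"), (61.0, 4.0, "data")]
print("voice alone:    ", voice_gaps(voice))
print("voice with data:", voice_gaps(voice + data))
```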

Communications people are betting that the future traffic will include a lot of multimedia. That means a great deal of additional capacity will be required, as well as control mechanisms appropriate to the new media. ATM is the answer being propagated by telecommunications companies, but Internet people say ATM does not really matter, because it is a protocol layer below TCP/IP.

Meanwhile, the computer industry is building new LANs that take ATM directly to the desktop. It now seems that we are headed to a situation where we will have ATM in the local LANs, but to be internetworked that ATM will be converted to TCP/IP to enter the routers that serve as the gateways to the network beyond. From there, however, it might again be converted to ATM in order to traverse the highways of the network. Similarly, on the other half of its voyage, it would again be converted back to IP and then to ATM to reach the distant desktop. That seems like a lot of conversions, but they will be easy in the immediate future and may well be the price of interoperability.

The prize in the future is control of multimedia—whether telecommunications controls multimedia through ATM or whether Internet is able to gear itself up, change its protocol, and capture this new kind of traffic. Will people use the Internet for voice five years from now, and if so, will that take any significant traffic away from the network? Those are interesting questions that I think we can debate.

The Search for the Holy Paradigm: Regulating the Information Infrastructure in the 21st Century

Charles M. Firestone

The thrust of this paper is that the electronic information infrastructure, which I define as the production, electronic distribution, and reception of information, has undergone two major regulatory paradigms and is ready for a third. The first is the rather legalistic reaction to monopoly and oligopoly and is manifest in the period of antitrust and regulatory enforcement under the Communications Act, culminating in the late 1960s to early 1970s. The second is the competitive period with its commensurate deregulatory paradigm, which has been present in communications regulation since the late 1970s. These overlapping stages find expression in the regulation of many different aspects of the information infrastructure.

My thesis is that these prior models are inadequate, by themselves, for the complexities of the 1990s and beyond. While I do not specify a definitive new paradigm, I suggest that we might look to scientific or ecological models in beginning to sort out ways for the government to interact, in a dynamic democratic process, with the coevolving technologies, applications, and regulatory environments of the communications and information world: the international information environment within which the national information infrastructure will wind. In that sense, I call for a vision of "sustainable democracy," wherein technological developments preserve and enhance present and future democratic institutions and values.

DEFINITION OF THE INFORMATION INFRASTRUCTURE

The information infrastructure can be broadly or narrowly defined. For the purposes of this paper, I take a broader outlook that corresponds to the nature of the communications process, namely, the production, distribution, and reception of information in electronic form. Thus, while I will allude to print and newspapers, the focus will be on the following elements of electronic communications:

- Production of information in film, video, audio, text, or digital formats;
- Distribution media, most notably telephony, broadcasting, cable, other uses of electronic media, and, for our purposes here, storage media such as computer disks, compact disks, and video and audio tape; and
- Reception processes and technologies such as customer premises equipment in telephony, videocassettes and television equipment, satellite dishes, cable television equipment, and computers.

Others might prefer to distinguish only between software and hardware, between content and conduit, or between information and communications.1 While such an organization is plausible, I favor the tripartite approach because it places attention on an increasingly important but often overlooked area of regulation, that of reception. As First Amendment cases move toward greater editorial autonomy for the creators, producers, and even the distributors of information, attention will have to be focused on reception to address filtering and literacy concerns (Aufderheide, 1993). I address this in greater detail below.

REGULATION OF THE INFRASTRUCTURE

Regulation of the information infrastructure can also be usefully analyzed from this three-pronged approach. The law of production is associated with information law, with intellectual property concerns, First Amendment cases, and issues of content. Governmental regulatory policy in this area has also been affected by the expenditure of billions of dollars in governmental and public investment in programming for public broadcasting, research and development grants, creation of libraries, and software generation by the government.

Regulation of telecommunications has centered, however, on the distribution media. For many years this took the form of categorizing each medium (telephony, broadcasting, or print) and placing it in the proper regulatory mold, namely, common carrier, trusteeship, or private. The main function of government was to make the electronic media usable, to allocate and assign uses and users to the particular media, and then to regulate the licensees in the public interest.2 The major intention of this paper is to describe the past, present, and future paradigms of such regulation. At the same time, I hope to point out other levers of governmental policy, including investment in technology and other nonregulatory influences.

Regulation of the receiver or reception process is relatively unusual, but important nevertheless. One familiar area was the early regulation of cable television, which was a technology intended to enhance reception of over-the-air television stations but was regulated to ensure that, at first, it did not undermine the economics of the broadcast television system.3 Most significant in recent forms of regulation of reception is the area of privacy, which, among other rights, protects the receiver's right to be free from intrusion at the reception end of a communication.4

Goals of Regulation

In each case the aim of regulation was to effectuate or bring about a set of goals for society. I find it useful to categorize these goals into five basic democratic values, as follows.5

Liberty

Foremost among the Bill of Rights is the First Amendment guarantee against abridgment of freedom of expression (speech, press, association, petition, and religion). Beyond this trump card of protection, certain regulations have sought to promote First Amendment values of editorial autonomy,6 diversity of information sources,7 and access to the channels of public communication.8 A long-standing debate centers on the proper legal attention that should be paid to the underlying values of the First Amendment, as opposed to the bare dictates of the amendment, that is, is it a sword as well as a shield?

A second liberty, implicit in the Bill of Rights but explicit in state constitutions and in legislation, is the right to privacy, individual dignity, and autonomy. At the federal level this has been expressed in the form of sector-by-sector legislative codes; at the state level privacy is protected in common law and statutes.9

A third liberty, which is not often expressed as such, is that of ownership of property. In the world of communications this usually takes the form of a bundle of intangible or intellectual property rights that attach to original works of authorship or invention under the Constitution's copyright and patent clause. Traditionally, individuals have been licensed as trustees but not owners of the electromagnetic spectrum. However, this approach could change with the auctioning of spectrum. Furthermore, the application of intellectual property concepts to new forms of information has raised many new and vexing problems for future regulators.

Equity

A countervailing value to liberty is that of equality. In the field of communications this takes the form, most prominently, of universal service. That is, the Communications Act states, as one of its seminal purposes, the goal of making available to "all the people" a worldwide communications system.10 Today, the issue of universal service is foremost in the minds of regulators and legislators, who see the need for an evolving definition of which communications and information services should be available to all. Meanwhile, traditional schemes for assuring universal service are dissipating.

Another issue that comes within the equity value is that of information equity: is the gap between rich and poor extending to the information haves and have-nots? Are the technologies that have such potential to bridge the gap between rich and poor instead going to widen it? Governments at all levels have established libraries to provide all citizens with access to a broad array of information resources. The obvious question for the future is the level and extent to which information resources will be made available in nonprint formats, and through electronic connections to the home, rather than by extensive collections at libraries.11

Community

The mass media, long attacked for monolithic mediocrity, are also seen as a key cohesive force in a community. The Federal Communications Commission (FCC) has included, as a basic tenet, the concept of localism as a touchstone for licensing and regulating broadcasting stations.12 This includes a preference for locals obtaining the licenses initially and for stations, once licensed, to serve the local needs and interests of the service area.13

Similarly, franchising authorities often grant cable television franchises to locals, who are thought to be more attuned to the needs of the local community. Whoever receives the franchises, furthermore, usually has requirements to include public, educational, and governmental access channels.14 At one time, the FCC required cable operators to include local origination cable-casting channels on their systems.15 The access channels, as well as some public broadcasting and other cable channels, might be viewed as electronic commons or public space for the local community.

At the same time, on a national scale, the broadcast media serve as one of the country's most significant and binding cultural forces. The broadcast media provide common experiences and a larger, more cohesive national community for a country that is increasingly multicultural.

A third manifestation of community values is the advent of public broadcasting: governmental and public financial support of noncommercial fare. Some of that programming is aimed at better serving local communities. This aspect of "community" raises a broader issue for the future: What is the role of government financing of programming, information resources, and distribution facilities?16

Finally, the whole concept of community is changing in the electronic world of networks and targeted communications. New electronic communities are being formed around the interests of individuals rather than geographic boundaries. This, again, raises profound questions about the impact of modern communications technologies on the concept of community.

Efficiency

The Communications Act specifically refers to the need for an "efficient" communications system by wire and radio.17 Generally, economic regulation values efficiency as its ultimate goal and fairness secondarily. Much of the communications regulatory scheme is premised on making the infrastructure and the pricing system efficient. Title II common carrier regulation seeks to assure that telecommunications facilities serve the entire country at rates that are fair, affordable, and comparable to those that would result from competition.18

In the wireless realm, governmental spectrum allocation is premised on the tenet that, if unregulated, spectrum users would injuriously and therefore inefficiently interfere with each other.19 More broadly, the government considers how communications and information sectors can maximize the citizenry's economic welfare, within the country and on a more global scale. Recently, the United States has been concerned with its competitive position in the world economy. The promotion of an efficient communications and information infrastructure supports that concern and interest.

Participatory Access

The communications system needs to be not only efficient but also workable and accessible to ordinary citizens. Accessibility is an undercurrent in many elements of communications regulation, from the creation of free over-the-air broadcasting stations, to access channels on cable, to common carrier regulation of telephony and its progeny. Indeed, access could be an organizing principle for regulation, in terms of facilities, costs, and, as will be explained later, literacy (Reuben-Cooke, 1993).

Finally, the regulatory scheme seeks to enable citizens to participate in the process on a fair and equal basis. The concepts of due process, sunshine for governmental agencies, and appellate review are all designed to facilitate citizens' fair access to, and participation in, the process. New communications technologies will likely advance these goals.

Whatever regulatory scheme is employed, it will have to consider and balance the above basic values and goals to fashion a system of greatest benefit to the public. The following sections will describe how these goals were addressed in the regulatory paradigms of yesterday and today, along with how they might be considered and treated in the future.

REGULATORY PARADIGMS

At each level of the communications process (production, distribution, and reception), there have been two paradigmatic stages of regulation, one of scarcity and one of apparent abundance and competition. At the scarcity stage, usually reached after an initial period of skirmishing among pioneers for position, the regulation has taken the general form of governmental intervention in order to promote the broader public interest.20 At the abundant or competitive stage, which overlaps the earlier stage, there has been a reversal, a deregulation to promote greater efficiency (Kellogg et al., 1992). In each case the paradigm is a regulatory religion, at times demanding faith on the part of the believers. My thesis is that neither of the past regulatory schemes is sufficient alone to address the evolving complexities of the new communications infrastructure and that a new regulatory paradigm, a new religion, will be needed for the global and digital convergence of the telecommunications future.

Stage 1: Scarcity

The overriding characteristic of the first stage, "scarcity," is that in the various levels and industries associated with the information infrastructure there were large centralized, monolithic, top-down, command-and-control-type enterprises, relatively passive receivers, the formation of monopolies or oligopolies, often of a vertical (end-to-end) nature, and a certain potential for anticompetitive practices. These could be symbolized by the early stages of the major movie studios, AT&T, the broadcast networks, and IBM.

In most if not all cases the tendency of the law was for the government to regulate the monopoly industry in the public interest, either through application of antitrust laws or the regulatory apparatus of the Communications Act. Usually, this resulted in the removal or regulation of bottlenecks, to try to approximate the efficiencies of competition where none existed. The Communications Act also sought to foster the values of equality (in the form of universal service) and community (in the form of localism) under the general regulatory standard of the "public interest, convenience, and necessity."

Production

The production of information has been the most widespread, abundant, and competitive of all of the elements of the infrastructure. In almost every case the production industries have not been subject to regulation, for First Amendment and other historical reasons. Rather, they are subject to normal commercial, antitrust, intellectual property, and First Amendment laws. Nevertheless, where economic oligopolies arose at earlier stages of the film, telephone technology, and computer equipment industries, the antitrust laws were used to address the alleged monopolization. Although the antitrust cases had only mixed success, that was the accepted method for governmental activity in this area. In the area of intellectual property, the law imposed a form of regulation in the imposition of fair use and compulsory licensing prescriptions. In the lone industry subject to federal agency regulation, broadcast programming, the FCC imposed both antitrust-like and content-related remedies.

Motion Pictures. In the 1930s and 1940s the major motion picture studios found that vertical integration could enhance their ability to control their products from inception to exhibition. (Some studios, such as Warner Brothers, were formed by theater chains.) The Justice Department, however, alleged that the studios were monopolizing the industry by both their structure and certain anticompetitive practices.

In the Paramount consent decree of 1948,21 the major, vertically integrated motion picture production studios and distribution organizations of the time agreed not to monopolize the production, distribution, and exhibition sectors of the motion picture industry and to divest their theater chains. Thus, a bottleneck at one level (e.g., major movies or control of theaters) could not be used to create scarcities and affect competition at another. This case is instructive to our inquiry, then, for its principle: to assure competition at each level of the communications process. Today, the analogy would require competition (or openness) at the production, distribution, and reception levels of all communications.

Telephone Equipment.22 Shortly after the Justice Department litigated the Paramount case, it brought an action against AT&T and its Western Electric subsidiary for using its patents to monopolize the telephone equipment business.23 In an antitrust consent decree (the original "Final Judgment"), AT&T agreed to confine itself to strictly regulated businesses, forsaking competitive ones.24 Western Electric's equipment would henceforth be sold only to Bell companies. Significantly, the decree also required that the patents be licensed to other manufacturers, a form of compulsory licensing.25

Broadcast Programming. The broadcasting networks were regulated by the FCC and thus were subject to a broader "public interest" standard. During the 1930s and into the 1940s, NBC controlled both a Red and a Blue network, with only modest competition from CBS. The parent network imposed a set of requirements on its affiliates to take what was offered. After a long inquiry, the FCC (1) ordered NBC to divest one network (thereby creating ABC), (2) restricted certain abusive network practices that took discretion away from local affiliates, and (3) prohibited local stations from affiliating with more than one network.26

Broadcast Content Controls. Content controls were imposed on broadcasters from the inception of the Radio Act of 1927 and the Communications Act of 1934. These included the requirement that a station afford equal opportunities to legally qualified candidates for public office to "use" the facilities27 and restrictions against certain illegal activities such as obscenity.28 After first prohibiting editorializing,29 the FCC imposed a Fairness Doctrine, which encouraged stations to air controversial issues of public importance and to present contrasting viewpoints from responsible spokespeople on such issues.30 Furthermore, at the height of content regulation, the late 1960s and early 1970s, the FCC's regulations extended to changes of formats,31 to programming designed to respond to the needs and interests of the local community,32 and programs on public affairs.33

Copyright. The most common form of "regulation" of the production of programming and other information is through governmentally protected ownership rights, in the form of intellectual property laws. In the field of communications the most prevalent legal issues are in the area of copyright, which relates to original works of authorship. Here, the law provides one with a monopoly over the use and distribution of the work for a limited term.34

But even in the area of intangible ownership, as in real property law, there are limits to the rights of the owner. Thus, the public may have limited rights to use or copy a work for public interest reasons,35 just as it has rights of access to certain properties (e.g., private beachfronts in California) for public purposes. This area of "fair use" is vague and complicated, but even in the pure ownership of intellectual property there is a certain amount of regulation of a monopoly for public benefit.

Similarly, there are certain situations where the copyright owner is entitled to compensation for use of the work but cannot restrict to whom and where the use is extended. Thus, "compulsory licensing" has been extended to cable operators and jukebox owners in order for them to access certain works that are otherwise too cumbersome to license on an ad hoc basis.36 (There are other instances of cumbersome licensing where compulsory licensing is not extended but, rather, private licensing agencies are employed. The compulsory licensing provisions of the Copyright Act are mainly the result of political compromises.)

Computer Manufacturing. In the second and third quarters of this century, IBM dominated the field of computer manufacturing. The IBM mainframe epitomizes the centralized, top-down, monolithic enterprise of the scarcity stage of regulation. The Justice Department, however, was unsuccessful in its antitrust suit against IBM and dropped the suit on the same day that AT&T agreed to the Modified Final Judgment.37 Nevertheless there is a history of private antitrust suits that did affect, and in a sense "regulate," the computer industry.38 More significant, however, was the research and development work in various government agencies, particularly the Defense Department, that led to many of the advances in computer hardware and software.

Distribution Media

In contrast to the production entities and businesses, the electronic distribution media during the 1920s through the 1970s were characterized by direct regulatory control of centralized gatekeepers under the public interest standard. Here the concepts of scarcity had two distinct meanings and regulatory approaches: common carriage, which was applied to telephony, and public trusteeship, which was applied to broadcasting. These concepts are explained below.

Telephony. In the field of telephony the building of facilities and the operation of a telephone network were thought to have natural monopoly characteristics—large barriers to entry due to high initial capital costs and increasing economies of scale. From the time of Theodore Vail's 1909 use of the words "universal service" to explain his plan for exchanging governmental protection and regulation for the extension of service to all at fair rates,39 the Bell system's end-to-end monopoly had social equity implications. Regulation of that monopoly as common carriers under a broad public interest standard would maintain an efficient, fair, reliable, accessible, and affordable telephone system for the country.

Under the Communications Act, common carriers have been required to apply for permission to enter or exit a market.40 They may not discriminate among their customers; they must provide access to all at reasonable rates, which are reviewed by the government; and they must file tariffs of their rates and practices.41 States generally paralleled this approach for intrastate communications.42 So long as there was a monopoly carrier, the rates could be averaged among all the customers of a given area so that high-cost areas could be subsidized within the rate system by ratepayers from high-volume areas. This regulatory deal, which promised stability, growth, universal service, and restraint against competitors, reached its zenith in the late 1960s and early 1970s.43
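The rate averaging described above can be illustrated with a small worked example. The sketch below is a deliberate simplification, assuming a single carrier that sets one uniform monthly rate to recover its total cost; the two service areas, their subscriber counts, and their per-subscriber costs are hypothetical figures chosen only for illustration, and the function names are likewise illustrative.

```python
def averaged_rate(areas):
    """Uniform monthly rate that recovers the total cost of all areas.

    areas: dict mapping area name -> (subscribers, monthly cost per subscriber).
    """
    total_cost = sum(subs * cost for subs, cost in areas.values())
    total_subs = sum(subs for subs, _ in areas.values())
    return total_cost / total_subs


def cross_subsidy(areas):
    """Net monthly amount each area pays above (+) or below (-) its own cost."""
    rate = averaged_rate(areas)
    return {name: subs * (rate - cost) for name, (subs, cost) in areas.items()}


# Hypothetical figures: a dense, high-volume area and a sparse, high-cost one.
areas = {"urban": (900, 10.0), "rural": (100, 40.0)}
print(averaged_rate(areas))  # 13.0
print(cross_subsidy(areas))  # {'urban': 2700.0, 'rural': -2700.0}
```

The transfers sum to zero: what the high-volume area pays above its own cost exactly covers the shortfall in the high-cost area. In this simplified model the arrangement holds only while a single carrier serves both areas, which is the condition the paragraph above attaches to it.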

Satellites. The use of satellites for communications common carriage derived from Arthur Clarke's model of covering the world by three geostationary orbiting satellites (Clarke, 1945).44 The United States instigated the founding of INTELSAT, an international consortium of countries to foster international communications. The INTELSAT agreement contemplates a monopoly carrier to handle the domestic traffic to and from the INTELSAT satellites.45 This vision of a regulated monopoly was perfectly consistent with the prevailing paradigm of the time. It followed, as well, the tremendous federal government investment in a space communications system. Thus, during the 1960s and early 1970s, COMSAT was the American monopoly satellite space segment provider.

Broadcasting. In the use of the electromagnetic spectrum it was long perceived as necessary to have the government allocate uses and users in order to avoid destructive interference on each frequency. For example, in the mid-1920s the broadcasters themselves called for federal regulation of the AM airwaves (Barnouw, 1975). Congress responded with the Radio Act of 1927 and the successor Communications Act of 1934, regulatory schemes whereby access to the airwaves was limited by law. Only those relative few who were licensed would be allowed to broadcast. These licensees of "scarce" frequencies, however, would be deemed as trustees for the public and therefore regulated according to the "public interest, convenience, and necessity."46

This regulatory scheme grew through the 1960s to incorporate various requirements on each broadcaster to serve its local community. It required stations to provide access to candidates for federal elective office;47 to provide equal opportunities to opposing candidates for the use of the station's facilities ("equal time");48 to provide its lowest unit charge for political advertising;49 to air contrasting viewpoints on controversial issues of public importance;50 to ascertain the local needs and interests and to design programming aimed at meeting those ascertained needs,51 including those of minority audiences;52 to air news and public affairs programs;53 to have some prime time available for nonnetwork programs;54 to refrain from airing obscenity,55 indecent language,56 lotteries,57 fraudulent programming,58 or too many commercials;59 and to concern themselves with many other regulations considered to be in the public interest.

These requirements were far more stringent than those applicable to newspapers, under the theory that as licensees of scarce radio frequencies, broadcasters must operate under a regime where the public's interest is paramount.60 In the newspaper business, on the other hand, the editorial discretion of the publisher is the primary legal consideration.61 While the scarcity theory has been seriously questioned by many, it is still the applicable law today.

Cable Television. As cable television grew from the hilltops in rural areas to the wiring of towns and cities, local jurisdictions were called upon to franchise cable operators' uses of the streets and rights of way. These franchises were often exclusive, whether de jure or de facto. Once an operator obtained a franchise, it was only rarely that a locality granted another franchise for the same area, even if competitors knocked on the door. Again, like the monopoly distribution media described above, franchising authorities exerted certain regulatory controls over the franchisees.62 At first these included rate regulation; later, and particularly after rate regulation was preempted at the federal level,63 franchising authorities imposed other public interest obligations on the cable operators, which at times took the form of payments to the city for noncommunications-related activities.

Private Carriage. A third type of media entity gained prominence during the 1970s—private users of the spectrum and, eventually, private wire-based facilities providers. While spectrum users had to obtain licenses and were still subject to public interest regulation, the burdens were minimal and technical in nature. Primarily, these entities were treated under the private model of regulation, left to use the medium for the purpose for which it was granted (i.e., a trucking company could obtain frequencies for two-way radiotelephone communications but could not use them for nonbusiness purposes and definitely could not "broadcast" on them).64

Storage Media. Records, tapes, and disks, like print media, have been subject to the "private" model of regulation—application of antitrust and other laws generally applicable to all businesses.65 While the government does not directly regulate, it can potentially impact the production and distribution of these media through large or strategic purchases of selected titles. Thus far, however, government purchases of these media are mainly through normal library procedures.66

Reception

At the scarcity stage, the law of reception was primarily concerned with integrity of the respective systems of communication. With respect to telephony, AT&T retained through its tariffs and its regulatory deal the ability to restrict any foreign attachment to its facilities. This went so far as excluding plastic book covers for the yellow pages directory and cups on handsets to prevent others from hearing a conversation. (For the latter, called a "hush-a-phone," it was possible to obtain appellate court reversal of the FCC's enforcement of AT&T's tariffs under the determination that if something is beneficial and not publicly detrimental, it could be added.)67

A second major battleground during the 1950s and 1960s was the use of community cable television (CATV) antennas to improve reception of nearby television stations. The FCC flip-flopped on its attitude toward CATV but eventually held that it needed to be regulated as ancillary to the commission's power over broadcasting.68 In 1968 the Supreme Court held that cable systems were enhancers of the reception mechanism for television, rather than rebroadcasters, for the purposes of the copyright laws.69 The commission then proceeded to regulate these reception enhancers so as to prevent the importation of certain distant television stations and the undue fragmentation of the audiences of local stations serving a particular area.70

Other regulatory decisions affected reception, such as the FCC's decisions selecting a particular system for color television71 and Congress's requirement that television sets include UHF channels.72 The color television episode was an example of the government setting a standard: some in the industry win, some lose, but the determination is made by the government.

Summary of Stage 1

In sum, the period of the 1920s to the 1970s was one of regulation in the telecommunications business according to the touchstone of the Communications Act—the "public interest, convenience, and necessity." The major question, in each case, was, What was the "public interest"? This standard, according to the Supreme Court, was a supple instrument for guiding the industries in periods of rapid technological change.73 Nevertheless, it was admittedly vague. Thus, the agenda was essentially set by the lawyers, who would argue as to how far this vague and lofty standard should or could extend before it exceeded the bounds of regulatory authority or the limits of the First Amendment.

In addition, the antitrust laws were used to restrict economic abuses and to promote access to bottlenecks. Through consent decrees, the Justice Department and courts served as a kind of regulator of the movie and telephone equipment industries. Analogous regulation also appeared in other contexts, such as the fair use doctrine in copyright.

Finally, state, local, and private parties enforced other applicable regulatory regimes. Governments had many levers to pull and buttons to push in shaping the form and substance of the communications infrastructure. These included direct regulation, governmental investments, research grants, low-interest loans, tax incentives, and even the bully pulpit.

Despite the logic and general agreement that the public interest was the paramount consideration, this general regulatory regime began to unravel in the 1970s (with certain prior indications of problems well before that). Scarcity was questioned both technologically and philosophically. A deregulatory mood swept the country, symbolized by the election of Jimmy Carter as President. Regulated industries such as trucking and airlines, as well as telecommunications, were seen as less efficient than they would be under competition. There was a sense that a new approach would resolve the country's regulatory interests better than a cumbersome, bureaucratic, and failing system. But most particularly, there were significant technological breakthroughs in microwave, broadband communications, and computerization that challenged the natural monopolies, oligopolies, and technological underpinnings of scarcity.

Stage 2: Abundance and Competition

In each of the affected industrial sectors under review, the key industrial elements became abundant, competitive, or both, creating the need for a new regulatory paradigm. This process first became manifest in the 1970s, grew strong in the 1980s, and had perhaps become excessive by the 1990s. I will look at each sector individually, pointing out, where appropriate, the parallels among sectors.

Production

Compared to the distribution media, the production elements of the communications process were essentially competitive even during the "scarcity" stage. But by the 1970s, developments in these industries created new levels of competition and commensurate regulatory responses.

Motion Pictures. We begin with the motion picture industry. The Paramount decree was adopted just as television became a consumer product. With the steady increase in television viewership, the film industry suffered box office declines for a while. But as the studios (1) sold movies to television as a new window for their products and (2) moved into the production of television programming, they saw a resurgence in their businesses (Owen and Wildman, 1992).74 With more outlets (theater and television), more production entities arose. Talent agencies, independent producers, and syndicators all added levels of production entities for both film and television. As new windows arose with pay television, pay cable, and videocassettes, still more entities entered these businesses, many of which also produced film, television, and tape. This growing competition in production, typified by the independent television and film producer, in turn led to reintegration at the edges of the new media. Paramount and MCA jointly owned the USA cable network. Other motion picture companies began to own other outlets, such as the Disney Channel. 20th Century Fox was heavily involved in film, television, and videotape production. CBS tried the film business for a while but was unsuccessful. Vestron, an early leader in the videotape business, also tried the film business but failed.

The Premiere case75 of the early 1980s was the final vestige of Stage 1 antitrust regulation. The major studios, buoyed by the trend toward reintegration and the government's relaxed enforcement of the antitrust laws, entered into an arrangement whereby they would provide their best movies to their jointly owned and operated Premiere pay cable network. The court determined that the studios' arrangement was prima facie violative of the antitrust laws. The fledgling network was disbanded, but the film and television production entities have since become more and more integrated. The Paramount decree has become all but a dead letter, and economic regulation has become anathema in the film industry.

For whatever reason, the expanded world of television appears to be producing a diet of more violent and sexual programming. Public reaction has taken the form of calls for content controls to minimize violent programming. But even here the calls for regulation have recognized a competitive paradigm in production rather than Stage 1-type content controls at the production level. For violent programming, the proposals have been to rate programs, a measure aimed at enhancing consumer information instead of restricting output. Another possibility is to require a chip in the receiving apparatus so that households can screen out objectionable programming. These measures, then, are aimed at enhancing competition and markets, consumer choice, and the freedom of individuals to access a diverse array of products.

Copyright. The production of information encouraged by the copyright laws has also seen greater competition and reduced regulation in recent times. Fair use applications of the copyright laws became less available after passage of the 1976 Copyright Act amendments, which set forth guidelines for the doctrine and expanded the bundle of rights contained in an author's claim to ownership of his or her original works of expression.76 This has resulted in greater use of contracts, a classic marketplace mechanism, for determining the rights and responsibilities of owners and users of copyrightable material. In the field of cable television, one of the mainstays of the compulsory license regime, cable's carriage of local television signals, was altered by new provisions requiring cable operators to obtain "retransmission consent" from the owners of those signals.77

Computers. The paradigm of competition also manifested itself in the unregulated computer industry. IBM faced competition not only from other manufacturers of mainframes and other large, centralized computers but also from clone manufacturers, Apple, and others. As computer technology became smaller and cheaper, moving from the minicomputer to the microcomputer, competition grew in all aspects and levels of the industry. Indeed, the industry is a metaphor for the whole era in telecommunications regulation: increasing competition, less regulation.

Distribution Media

The heavily regulated distribution media saw the most dramatic paradigm shift in economic structure and regulation.

Telephony. Two sets of decisions beginning in the late 1960s signaled the decomposition of AT&T's monopoly. The decisions freeing the terminal equipment market from regulation are described below in the "Reception" section. The other set of decisions allowed competition with AT&T in the intercity, long-distance markets.78 This outcome was essentially inevitable given the advent of microwave and satellite transmission technologies. Microwaves allowed signals to be transported cheaply by line-of-sight relays over large distances. The long lines of old became short waves. Costs declined rapidly, and innovative entrepreneurs such as William McGowan of MCI saw openings.

Long Distance. After the FCC allowed private entities to use microwave frequencies above 890 MHz to build their own private transmission facilities,79 AT&T filed tariffs setting discounts for large users. But these were inflexible tariffs, and MCI sought to customize transmission bandwidth for large customers over its own competitive facilities. In 1969 the FCC allowed MCI to build a line from Chicago to St. Louis.80 Later, it determined that competitors need not show the need for a new service in order to obtain a license as a Specialized Microwave Common Carrier to compete with AT&T for long-distance business.81 MCI eventually won the ability to offer switched long-distance service,82 and others, such as Southern Pacific Railroad, followed with national long-distance networks of their own (which became Sprint). The flood of competition meant not only an open, competitive market for large users, who could pick and choose telecommunications providers and the types of service offerings they might want, but also competition and lower rates for the average subscriber, a market that had been held sacrosanct because of the internal cross-subsidies built into the regulatory regime of the scarcity stage.

Satellites. In the same period, the early 1970s, the FCC issued an "Open Skies" decision for domestic satellites.83 As long as they were legally, technically, and financially qualified, private entities could own and operate a communications satellite. Here, the government again moved to the competitive paradigm. The domestic satellite business would be a business, competitive with wireline services but free of many of the regulatory hamstrings inherent in the common carriage of old. Because each signal had to travel more than 22,000 miles to and from the satellite, distances on Earth became insignificant. "Long distance" became a misnomer. The satellite carriers, who were already competitive in the sense of the Open Skies decision, also found increasing competition: first from resellers of satellite transponders, who were deregulated by the FCC,84 and then from decisions allowing private competition to INTELSAT on the international stage.85

Local Loop. As the interexchange and terminal equipment parts of the telecommunications network became competitive and deregulated, the last frontier for competition was the heavily regulated local loop. Even here, competition has emerged in recent years (Entman, 1992). Two cellular franchises were granted in each market, one to the local wireline carrier and one to a competitor.86 Now, after many years of building, selling, and trading, culminating in AT&T's purchase of the McCaw systems, the cellular business is not only competitive but also formidable. Newer competitors are on the horizon, whether they come in the form of a third cellular license in each market, specialized mobile services, new Personal Communications Services (PCSs),87 or other, even newer technologies.

Furthermore, the reduced costs of fiber optic transmission, and FCC orders requiring interconnection to local exchange facilities,88 have also made alternative carriers at the local level an economically viable and burgeoning business. As cable television systems enter the local telecommunications business, with fiber backbones and broadband connections to 65 percent of the country, and as PCSs become a reality, these trends will only continue. Thus, even the heavily regulated local loop is beginning to enter the next stage of rapid competition and deregulation.

Television, Cable Television, and Multichannel Distribution. The competition phenomenon hit the television delivery media equally hard. The number of local television stations has steadily increased over the last four decades. This, coupled with the advent of cable television as a common delivery mechanism, has resulted in half the country's viewers having at least 20 channels available to them and over 40 percent having at least 30 channels.89 With the increasing number of signals (over 80 national networks90), the FCC felt less obligated to regulate each one closely. Indeed, former FCC Chairman Mark Fowler called television a "toaster with pictures" and proceeded to "unregulate" as much as he could during the Reagan administration. This attitude received a healthy boost from the Cable Act of 1984, which deregulated cable to a great extent.

Cable television's exclusive franchises have also been subject to several new competitors and challenges. Wireless cable (a compilation of multipoint microwave distribution channels) has finally entered the fray, and direct broadcast satellites, long heralded but nonexistent until now, will at long last pose still another threat to the multichannel delivery monopoly held by cable operators. Meanwhile, significant legal challenges to the local franchising monopoly are being waged both by upstart cable operators91 and by telephone companies.92 Here the First Amendment, which was written as a prohibition against government infringements of freedom of communication, is being used by industrial companies to drive a wedge into the distribution business, with broad-ranging consequences.93

Reception

Reception devices and processes are becoming more important, for regulatory purposes, during the deregulatory era. The more responsibilities and choices that can be shifted to the consumer or reception end of the process, the less regulation is necessary or appropriate at the production, transmission, or distribution ends. Thus, the FCC and others are looking to create greater choice and control at the user's discretion. This thirst to shift the regulatory burden to the consumer is being aided by advances in technology that allow for greater user control and targeting.

Terminal Equipment. The FCC broke AT&T's monopolization of terminal equipment on the Bell System in the Carterfone case in 1968.94 There, relying on an obscure precedent from the 1950s, the commission allowed Carterfone to interconnect its device to the Bell network on the grounds that it would not be physically detrimental to the network and could enhance consumers' choices. So long as it was privately beneficial without being publicly detrimental, the foreign attachment could not be excluded.

This decision opened up the terminal equipment market and laid the groundwork for the commission's decision in Computer Inquiry II.95 There the agency declared that customer premises equipment was to be competitive and therefore completely deregulated. The sole area of regulation was the commission's equipment registration rules, aimed at preventing harmful interference from such devices.96 The ensuing terminal equipment business is now an open and competitive one, subject to only minimal regulations.

The AT&T divestiture proceeding in the mid-1980s prevented the divested Bell operating companies from entering into the equipment manufacturing business,97 but this preclusion is now actively under review by Congress and the courts.

Privacy. The law of privacy has grown from a law review article in 1890 (Brandeis and Warren, 1890), to private tort law in the states, to state constitutional and statutory provisions,98 and eventually, in the 1970s, to a federal approach.99 The federal laws, however, tend to follow industrial sectors, such as credit-reporting businesses,100 financial records,101 cable television,102 and others.103 The United States has thus far rejected the broad, across-the-board regulatory approach that certain European countries have adopted (Reidenberg, 1992a,b). Typically, these federal laws restrict the company from maintaining certain files beyond a useful time period and afford the subject individual the right to ascertain what information is collected and maintained, to correct errors, and to have the information used only for the purposes for which it was collected, unless permission is granted for other uses.

Thus, in addition to their place in the constellation of human and (implicit) constitutional rights, privacy protections are one means of assuring individuals that their use of the information infrastructure will be secure. They have always involved balancing against other rights and interests and therefore a kind of regulation of the use of information.104 Although the cycle of regulation and deregulation has come later for privacy than for the distribution media, there is discussion today of moving to a marketplace solution to some of the privacy issues in telecommunications.105