B

Virtual Engineering: Toward a Theory for Modeling and Simulation of Complex Systems

John Doyle, California Institute of Technology

INTRODUCTION

This paper is a primer surveying a wide range of issues tied together loosely in a problem domain tentatively referred to as “virtual engineering” (VE). This domain is concerned with modeling and simulation of uncertain, heterogeneous, complex, dynamical systems, the very kind of M&S on which much of the vision discussed in this study depends. Although the discussion is wide ranging and concerned primarily with topics distant from those usually discussed by the Department of the Navy and DOD modeling communities, understanding how those topics relate to one another is essential for appreciating both the potential and the enormous intellectual challenges associated with advanced modeling and simulation in the decades ahead.

Perhaps the most generic trend in technology is the creation of increasingly complex systems together with a greater reliance on simulation for their design and analysis. Large networks of computers with shared databases and high-speed communication are used in the design and manufacture of everything from microchips to vehicles such as the Boeing 777. Advances in technology have put us in the interesting position of being limited less by our inability to sense and actuate, to compute and communicate, and to fabricate and manufacture new materials, than by how well we understand, design, and control their interconnection and the resulting complexity. While component-level problems will continue to be important, systems-level problems will be even more so. Further, “components” (e.g., sensors) increasingly need to be viewed as complex systems in their own right. This “system of systems” view is coming to dominate technology at every level. It is, for example, a basic element of DOD's thinking in contexts involving the search for dominant battlefield awareness (DBA), dominant battlefield knowledge (DBK), and long-range precision strike.

NOTE: This appendix benefited from material obtained from many people and sources: Gabriel Robins on software, VLSI, and the philosophy of modeling, Will O'Neil on CFD, and many colleagues and students.

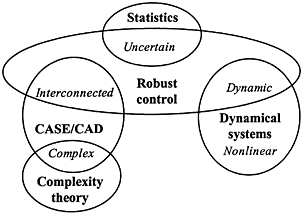

At the same time, virtual reality (VR) interfaces, integrated databases, paperless and simulation-based design, virtual prototyping, distributed interactive simulation, synthetic environments, and simultaneous process/product design promise to take complex systems from concept to design. The potential of this still-nascent approach is well appreciated in the engineering and science communities, but what “it” is is not. For want of a better phrase, we refer to the general approach here as “virtual engineering” (VE). VE focuses on the role of M&S in uncertain, heterogeneous, complex, dynamical systems—as distinct from the more conventional applications of M&S. But VE, like M&S, should be viewed as a problem domain, not a solution method.

In this paper, we argue that the enormous potential of the VE vision will not be achieved without a sound theoretical and scientific basis that does not now exist. In considering how to construct such a base, we observe a unifying theme in VE: Complexity is a by-product of designing for reliable predictability in the presence of uncertainty and subject to resource limitations.

A familiar example is smart weapons, where sensors, actuators, and computers are added to counter uncertainties in atmospheric conditions, release conditions, and target movement. Thus, we add complexity (more components, each with increasing sophistication) to reduce uncertainties. But because the components must be built, tested, and then connected, we are introducing not only the potential for great benefits, but also the potential for catastrophic failures in programs and systems. Evaluating these complexity versus controllability tradeoffs is therefore very important, but also can become conceptually and computationally overwhelming.

Because of the critical role VE will play, this technology should be robust, and its strengths and limitations must be clearly understood. The goal of this paper is to discuss the basic technical issues underlying VE in a way accessible to diverse communities—ranging from scientists to policy makers and military commanders. The challenges in doing so are intrinsically difficult issues, intensely mathematical concepts, an incoherent theoretical base, and misleading popular expositions about “complexity.”

In this primer on VE, we concentrate on “physics-based” complex systems, but most of the issues apply to other M&S areas as well, including those involving “intelligent agents.” Our focus keeps us on a firmer theoretical and empirical basis and makes it easier to distinguish the effects of complexity and uncertainty from those of simple lack of knowledge. Our discussion also departs from the common tendency to discuss VE as though it were a mere extension of software engineering. Indeed, we argue that uncertainty management in the presence of resource limitations is the dominant technical issue in VE, that conventional methods for M&S and analysis will be inadequate for large complex systems, and that VE requires new mathematical and computational methods (VE theory, or VET). We need a more integrated and coherent theory of modeling, analysis, simulation, testing, and model identification from data, and we must address nonlinear, interconnected, heterogeneous systems with hierarchical, multi-resolution, variable-granularity models—both theoretically and with suitable software architectures and engineering environments.

Although the foundations of any VE theory will be intensely mathematical, we rely here on concrete examples to convey key ideas. We start with simple physical experiments that can be done easily with coins and paper to illustrate dynamical systems concepts such as sensitivity to initial conditions, bifurcation, and chaos. We also use these examples to introduce uncertainty modeling and management.

Having introduced key ideas, we then review major success stories of what could be called “proto-VE” in the computer-aided design (CAD) of the Boeing 777, computational fluid dynamics (CFD), and very large scale integrated circuits (VLSI). While these success stories are certainly encouraging, great caution should be used in extrapolating to more general situations. Indeed, we should all be sobered by the number of major failures that have already occurred in complex engineering systems such as the Titanic, the Tacoma Narrows Bridge, the Denver baggage-handling system, and the Ariane booster. We argue that uncertainty management together with dynamics and interconnection is the key to understanding both these successes and failures and the future challenges.

We then discuss briefly significant lessons from software engineering and computational complexity theory. There are important generalizable lessons, but—as we point out repeatedly—software engineering is not a prototype for VE. Indeed, the emphasis on software engineering to the exclusion of other subjects has left us in a virtual “pre-Copernican” stage in important areas having more to do with the content of M&S for complex systems.

Against this background, we draw implications for VE. We go on to relate these implications to famous failures of complex engineering systems, thereby demonstrating that the issues we raise are not mere abstractions, and that achieving the potential of VE (and M&S) will be enormously challenging. We touch briefly on current examples of complex systems (smart weapons and airbags) to relate discussion to the present. We then discuss what can be learned from control theory and its evolution as we move toward a theory of VE. At that point, we return briefly to the case studies to view them from the perspective of that emerging theory. Finally, we include a section on what we call “soft computing,” a domain that includes “complex-adaptive-systems research,” fuzzy logic, and a number of other topics on which there has been considerable semi-popular exposition. Our purpose is to relate these topics to the broader subject of VE and to provide readers with some sense of what can be accomplished with “soft computing” and where other approaches will prove essential.

In summary, before getting into our primer, we note that several trends in M&S of complex systems are widely appreciated, if not well understood. There is an increasing emphasis on moving problems and models from linear to nonlinear; from static to dynamic; and from isolated and homogeneous to heterogeneous, interconnected, hierarchical, and multi-resolution (or variable granularity and fidelity). What is poorly understood is the role of uncertainty, which we claim is actually the origin of all the other trends. Model uncertainty arises from the differences between the idealized behavior of conventional models and the reality they are intended to represent. The need to produce models that give reliable predictability of complex phenomena, and thus have limited uncertainty, leads to the explicit introduction of dynamics, nonlinearity, and hierarchical interconnections of heterogeneous components. Thus the central thesis of this paper is that uncertainty is the key to understanding complex systems.

A few simple thought experiments can illustrate the issues of uncertainty and predictability—as well as of nonlinearity, dynamics, heterogeneity, and ultimately complexity. Most of the experiments we discuss here can also be done with ordinary items like coins and paper.

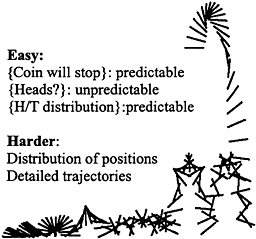

Consider a coin-tossing mechanism that imparts a certain linear and angular velocity to a coin, which is then allowed to bounce on a large flat floor, as depicted in Figure B.1. Without knowing much about the mechanism, we can reliably predict that the coin will come to rest on the floor. For most mechanisms, it will be impossible to predict whether it will be heads or tails. Indeed, heads or tails will be equally likely, and any sequence of heads or tails will be equally likely. Such specific predictions are as reliably unpredictable as the eventual stopping of the coin is predictable.

The reliable unpredictability of heads or tails is a simple consequence of the sensitivity to initial conditions that is almost inevitable in such a mechanism. The coin will bounce around on the floor in an apparently random and erratic manner before eventually coming to rest on the floor. The coin's trajectory will be different in detail for each different toss, in spite of efforts to make the experiment repeatable. Extraordinary measures would be needed to ensure predictability (e.g., dropping the coin heads up a short distance onto a soft and sticky surface, so as always to produce heads).

FIGURE B.1 Coin tossing experiment.

Sensitivity to initial conditions (STIC) can occur even in simple settings such as a rigid coin in a vacuum with no external forces, not even gravity. With zero initial velocity, the coin will remain stationary, but the smallest initial non-zero velocity will cause the coin to drift away with distance proportional to time. The dynamics are linear and trivial. This points out that—in contrast with what is often asserted—sensitivity to initial conditions is very much a linear phenomenon. Moreover, even in nonlinear systems, the standard definition of sensitivity involves examining infinitesimal variations about a given trajectory and examining the resulting linear system. Thus even in nonlinear systems, sensitivity to initial conditions boils down to the behavior of linear systems. What nonlinearity contributes is making it more difficult to completely characterize the consequences of sensitivity to initial conditions.

Sensitivity to initial conditions is also a matter of degree; the coin-in-free-space example is on the boundary of systems that are sensitive to initial conditions. Errors in initial conditions of the coin lead to a drifting of the trajectories that grows linearly with time. In general, the growth can be exponential, which is more dramatic. If we add atmosphere, but no other external force, the coin will eventually come to rest no matter what the initial velocities, so this is clearly less sensitive to initial conditions than the case with no atmosphere. A coin in a thick, sticky fluid like molasses is even less sensitive.
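The contrast between the vacuum and atmosphere cases can be made concrete with a short worked calculation. This is only a sketch: the symbols p (position), v (velocity), m (mass), and c (a linear drag coefficient) are assumptions introduced here, and linear drag is a deliberately naive stand-in for the real coin/air interaction.

```latex
\begin{align*}
% Free space, no forces: an initial velocity error produces linearly growing drift
p(t) &= p(0) + v(0)\,t
  &&\Longrightarrow&
  \delta p(t) &= \delta p(0) + \delta v(0)\,t \\[4pt]
% Assumed linear drag, m \dot{v} = -c v: the drift saturates
v(t) &= v(0)\,e^{-ct/m}, \quad
p(t) = p(0) + \frac{m}{c}\,v(0)\bigl(1 - e^{-ct/m}\bigr)
  &&\Longrightarrow&
  |\delta p(t)| &\le |\delta p(0)| + \frac{m}{c}\,|\delta v(0)|
\end{align*}
```

Under these assumptions the error in the free-space case grows without bound, in proportion to time, while with drag it stays bounded for all time, and the bound shrinks as the drag coefficient c grows (the molasses case).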

Not all features of our experiment are sensitive to initial conditions. The final vertical position is reliably predictable, the time at which the coin will come to rest is less so, the horizontal resting location even less so, and so on, with the heads or tails outcome perfectly unpredictable. It follows that complexity cannot be attributed to the system alone; any notion of it must also specify which property of the system is in question.

We can get a better understanding of sensitivity to initial conditions with some elementary mathematics. Suppose we have a model of the form x(t+1) = f(x(t)). This tells us what the state variable x is at time t+1 as a function of the state x at time t. This is called a difference equation, which is one way to describe a dynamical system—i.e., one that evolves with time. If we specify x(t) at some time, say t = 0, then the formula x(t+1) = f(x(t)) can be applied recursively to determine x(t) for all future times t = 1,2,3, . . . . This determines an orbit or trajectory of the dynamical system. This only gives x at discrete times, and x is undefined elsewhere. It is perhaps more natural to model the coin and other physical systems with differential equations that specify the state at all times, but difference equations are simpler to understand. For the coin, the state would include at least the positions and velocities of the coin, and possibly some variables to describe the time evolution of the air around the coin. If the coin were flexible, the state might include some description of the bending and its rate. And so on.
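The recursion described above can be sketched in a few lines. The function name `orbit` and the example map (halving the state at each step) are hypothetical choices made here for illustration; they do not come from the text.

```python
from typing import Callable, List


def orbit(f: Callable[[float], float], x0: float, steps: int) -> List[float]:
    """Return [x(0), x(1), ..., x(steps)] for the model x(t+1) = f(x(t))."""
    xs = [x0]
    for _ in range(steps):
        # Apply the difference equation once to advance one time step.
        xs.append(f(xs[-1]))
    return xs


# Hypothetical example map: the state is halved at each step.
halving_orbit = orbit(lambda x: 0.5 * x, 8.0, 3)
print(halving_orbit)  # [8.0, 4.0, 2.0, 1.0]
```

Specifying x(0) and the map f is all that is needed; everything else about the trajectory follows by repeated application.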

A scalar linear difference equation is of the form x(t+1) = ax(t), where a is a constant (the vector case is x(t+1) = Ax(t), where A is a matrix). If x(0) is given, the solution for all time is x(t) = a^t x(0). Thus, if a>1, nonzero solutions grow exponentially and the system is called unstable. Since the system is linear, any difference in initial conditions will also grow exponentially. (If a<1, then solutions decay exponentially to zero and the origin is a stable fixed point.)

Exponential growth appears in so many circumstances that it is worth dramatizing its consequences. If a = 10, then in each second x gets 10 times larger, and after 100 seconds it is 10^100 times larger. With this type of exponential growth, an error smaller than the nucleus of a hydrogen atom would be larger than the diameter of the known universe in less than 100 seconds. Of course, no physical system could have this as a reasonable model for long time periods. The point is that linear systems can exhibit very extreme sensitivity to initial conditions because of exponential growth. Of course, STIC is a matter of degree. The quantity ln(a) is one measure of the degree of STIC and is called the Lyapunov exponent.
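A rough numerical check of this claim is sketched below. The two length scales are assumptions supplied here, not figures from the text: about 1e-15 m for the size of a hydrogen nucleus and about 8.8e26 m for the diameter of the observable universe. The function names are likewise illustrative.

```python
import math


def lyapunov_exponent(a: float) -> float:
    """Lyapunov exponent of x(t+1) = a x(t); ln|a| reduces to ln(a) for a > 0."""
    return math.log(abs(a))


def steps_to_exceed(a: float, initial_error: float, threshold: float) -> int:
    """Smallest t such that a^t * initial_error >= threshold (requires a > 1)."""
    t = 0
    err = initial_error
    while err < threshold:
        err *= a  # one time step multiplies the error by a
        t += 1
    return t


# Assumed rough length scales in meters (not taken from the text).
NUCLEUS_SIZE_M = 1e-15
UNIVERSE_DIAMETER_M = 8.8e26

print(lyapunov_exponent(10.0))
print(steps_to_exceed(10.0, NUCLEUS_SIZE_M, UNIVERSE_DIAMETER_M))  # 42
```

With these assumed scales the error spans the gap in 42 steps, comfortably inside the "less than 100 seconds" quoted above.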

Suppose we modify our scalar linear system slightly to make it the nonlinear system x(t+1) = 10x(t) mod 10 and restrict the state to the interval [0,10). This system can be thought of as taking the decimal expansion of x(t), shifting the decimal point one place to the right, and then truncating the digit to the left of the units place. For example, if x(0) = π = 3.141592 ..., then x(1) = 1.41592 ... and x(2) = 4.1592 ... and so on. This still has, in the small, the same exponential growth as the linear system, but its orbits stay bounded. If x(0) is rational, then x(t) will be eventually periodic, and thus there are a countably infinite number of periodic orbits, with arbitrarily long periods. If x(0) is irrational, then the orbit will stay irrational and not be periodic, but it will appear exactly as random and irregular as the digits of the irrational initial condition. As is well-known, this system exhibits deterministic chaos. The Lyapunov exponent can also be generalized to nonlinear systems, and in this case would still be ln(a), here ln(10).
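The digit-shifting behavior is easy to reproduce exactly with rational arithmetic. The sketch below uses Python's `fractions.Fraction` so that no rounding enters; the helper names and the particular starting values (a six-decimal truncation of π, and 10/7) are choices made here for illustration.

```python
from fractions import Fraction
from typing import List, Optional


def shift_map(x: Fraction) -> Fraction:
    """One step of x(t+1) = 10 x(t) mod 10 on the interval [0, 10)."""
    return (10 * x) % 10


def shift_orbit(x0: Fraction, steps: int) -> List[Fraction]:
    """Return [x(0), ..., x(steps)] under the shift map."""
    xs = [x0]
    for _ in range(steps):
        xs.append(shift_map(xs[-1]))
    return xs


def period(x0: Fraction, max_steps: int = 1000) -> Optional[int]:
    """Smallest p > 0 with x(p) == x(0), or None if not found in max_steps."""
    x = shift_map(x0)
    for p in range(1, max_steps + 1):
        if x == x0:
            return p
        x = shift_map(x)
    return None


# Truncated decimal expansion of pi: each step shifts the digits left by one.
print([str(x) for x in shift_orbit(Fraction("3.141592"), 2)])

# A rational start on a periodic orbit: 10/7 = 1.428571428571...
print(period(Fraction(10, 7)))  # 6

# Two starts differing by 1e-6 are a full unit apart after six steps.
a = shift_orbit(Fraction("3.141592"), 6)[-1]
b = shift_orbit(Fraction("3.141593"), 6)[-1]
print(b - a)  # 1
```

The last lines show the local tenfold error growth directly: a difference in the sixth decimal place becomes a difference in the units digit after six steps, while both orbits remain in [0, 10).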

The several alternative mathematical definitions of chaos are all beyond the scope of this paper, but the essential features of chaotic systems are sensitivity to initial conditions (STIC), periodic orbits with arbitrarily long periods, and an uncountable set of bounded nonperiodic (and apparently random) orbits.

The STIC property and the large number of periodic orbits can occur in linear systems. But the “arbitrarily long periods” and “bounded, nonperiodic, apparently random” features require some nonlinearity. Chaos has received much attention in the popular press, which often confuses nonlinearity and sensitivity to initial conditions in suggesting that the former in some way causes the latter, when in fact the two are independent, and both are necessary but not sufficient to create chaos. The formal mathematical definitions of chaos involve infinite time horizon orbits, so none of our examples so far would be, strictly speaking, chaotic. A simple way to get a system that is closer in spirit to chaos would be to put our coin in a box and then shake the box with some periodic motion. Even though the box had regular motion, under many circumstances the coin's motion in bouncing around the box would appear random and irregular.

A simple model with linear dynamics between collisions and a linear model for the collisions with the box would almost certainly be chaotic, although even this simple system is too complicated to prove the existence of chaos rigorously and it must be suggested via simulation. Very few dynamical systems have been proved chaotic, and most models of physical systems that appear to exhibit chaos are only suggested to be so by simulation. One-degree-of-freedom models of a ball in a cylinder with a closed top and a periodically moving piston have been proved chaotic. The ball typically bounces between the piston and the other end wall of the cylinder with the impact times being random, even though the dynamics are purely deterministic, and even piecewise linear.

To get a sense of the notion of bifurcation in dynamical systems, consider the following experiment. Drop a quarter in as close to a horizontal position and with as little initial velocity as possible. It will drop nearly straight down, and the air will have little effect at the speeds the coin will attain while it bounces around the floor. Now take a quarter-size piece of paper and repeat the experiment. The paper will begin fluttering rapidly and fall toward the floor at a large angle, landing far away from where a real quarter would have first hit the floor. This is an example of a bifurcation, where a seemingly small change in properties creates a dramatic change in behavior. The heavy coin will reliably and predictably hit the floor beneath where it is dropped (at which point subsequent collisions may make what follows quite unpredictable), whereas the paper coin will spin off in any direction and land far away, but then quickly settle down without bouncing. Thus one exhibits STIC, while the other does not.

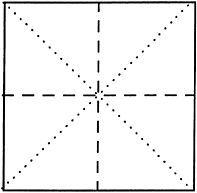

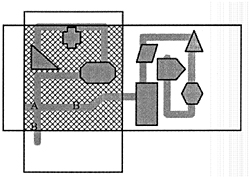

A simple variant on this experiment illustrates a bifurcation more directly. Make two photocopies of the diagram in Figure B.2 (or just fold pieces of paper as follows), and cut out the squares along the solid line. The unfolded paper will flutter erratically when dropped, exhibiting STIC. Next, take one of the papers and fold it along one of the dashed lines to create a rectangularly shaped object. Turn the object so that the long side is vertical. Then make two triangular folds from the top left and bottom left corners along the dotted lines to produce a small funnel-shaped object. If this is dropped it will quickly settle into a nice steady fall at a terminal velocity with the point down. This is known as a relative equilibrium in that all the state variables are constant, except the vertical position, which is decreasing linearly. It is locally stable since small perturbations keep the trajectories close, and is also globally attracting in the sense that all initial conditions eventually lead to this steady falling.

If the folds are then smoothed out by flattening the paper more back to its prefolded shape, then only when the paper is dropped very carefully will it fail to flutter. This nearly flat paper has a relative equilibrium consisting of flat steady falling, but the basin of attraction of this equilibrium is very small. That is, the more folded the paper is, the larger the set of initial conditions that will lead to steady falling. If the folds are sharp enough and the distance to the floor great enough, then no matter how the paper is dropped it will eventually orient itself so the point is down, and then fall steadily.

This large change in qualitative behavior as a parameter of the system is changed (in this case, the degree of folding) is the subject of bifurcation analysis within dynamical systems theory. In these examples, bifurcation analysis could be used to explore why a regular coin shows STIC only after the first collision, while the paper coin shows it only up to hitting the floor, as well as why the dynamics of the folded paper change with the degree of folding. Of course, bifurcation analysis applies to mathematical models, and developing such models for these examples is not trivial. To develop models that reproduce the qualitative behavior we see in these simple experiments requires advanced undergraduate level aerodynamics. These models will necessarily be nonlinear if they are to reproduce the fluttering motion, as this requires a nontrivial nonlinear model for the fluids.

FIGURE B.2 Proper folding diagram for bifurcation experiment.

Bifurcation is related to chaos in that bifurcation analysis has often been an effective tool to study how complex systems transition from regular behavior to chaotic behavior. While chaos per se may be overrated, the underlying concepts of sensitivity to initial conditions and bifurcation, and more generally the role of nonlinear phenomena, are critical to the understanding of complex systems. The bottom line is as follows:

- We can make models from components that are simple, predictable, deterministic, symmetric, and homogeneous, and yet produce behavior that is complex, unpredictable, chaotic, asymmetric, and heterogeneous.

- Of course, in engineering design we want to take components that may be complex, unpredictable, chaotic, asymmetric, and heterogeneous and interconnect them to produce simple, reliable, predictable behavior.

We believe that the deeper ideas of dynamical systems will be important ingredients in this effort.

It is tempting to view complexity in this context as something that arises in a mystical way between complete order (that the coin will come to rest) and complete randomness (heads or tails) and to settle on chaotic systems as prototypically complex. We prefer to view complexity in a different way. To make reliable predictions about, say, the final horizontal resting place, the distribution of horizontal resting positions, or the distribution of trajectories, we would need elaborate models about the experiment and measurements of properties of the mechanism, the coin, and the floor. We might also improve our prediction of, say, the horizontal resting location if we had a measurement of the positions and velocities of the coin at some instant after being tossed. This is because our suspicion would be that the greatest source of uncertainty is due to the tossing mechanism, and the uncertainty created by the air and the collisions with the floor will be less critical, but this would also have to be checked. The quality of the measurement would obviously greatly affect the quality of any resulting prediction.

To produce a model that reliably predicted, say, the distribution of the trajectories could be an enormous undertaking, even for such a simple experiment. We would need to figure out the distributions of initial conditions imparted to the coin by the tossing mechanism, the dynamics of the trajectories of the coin in flight, and the dynamics of the collisions. The dynamics of the coin in the air is linear if the fluid/coin interaction is ignored or if a naive model of the fluid is assumed. If the coin is light, and perhaps flexible, then such assumptions may allow for too much uncertainty, and a nonlinear model with dynamics of the coin/fluid interaction may be necessary (imagine a “coin” made from thin paper, or replace air by water as the fluid). If the coin flexibility interacts with the fluid sufficiently, we could quickly challenge the state of the art in computational fluid dynamics.

The collisions with the floor are also tricky, as they involve not only the elastic properties of the coin and floor, but the friction as well. This now takes us into the domain of friction modeling, and we could again soon be challenging the state of the art. Even for this simple experiment, if we want to describe detailed behavior we end up with nonlinear models with complex dynamics, and the physics of the underlying phenomena is studied in separate domains. It will be difficult to connect the models of the various phenomena, such as fluid/coin interaction, and the interaction of the elasticity of the floor and coin with frictional forces. It is the latter feature that we refer to as heterogeneity. Heterogeneity is mild in this example since the system is purely mechanical, and the collisions with the floor are relatively simple.

Our view of complexity, then, is that it arises as a direct consequence of the introduction of dynamics, nonlinearities, heterogeneity, and interconnectedness intended to reduce the uncertainty in our models so that reliable predictions can be made about some specific behavior of our system (or its unpredictability can be reliably confirmed in some specific sense, which amounts to the same thing). Complexity is not an intrinsic property of the system, or even of the question we are asking, but in addition is a function of the models we choose to use. We can see this in the coin tossing example, but a more thorough understanding of complexity will require the richer examples studied in the rest of this paper.

While this view of complexity has the seemingly unappealing feature of being entirely in the eye of the beholder, we believe this to be unavoidable and indeed desirable: Complexity cannot be separated from our viewpoint.

Up to this point, we have been rather vague about just what is meant by uncertainty, predictability, and complexity, but we can now give some more details. For our coin toss experiment, we would expect that repeated tosses would produce rather different trajectories, even when we set up the tossing mechanism identically each time to the extent we can measure. There would presumably be factors beyond our control and beyond our measurement capability. Thus any model of the system that used only the knowledge available to us from what we could measure would be intrinsically limited in its ability to predict the exact trajectory by the inherent nonrepeatability of the experiment. The best we could hope for in a model would be to reliably predict the possible trajectories in some way, either as a set of possible trajectories or in terms of some probability distribution. Thus we ideally would like to explicitly represent this uncertainty in our model. Note that the uncertainty is in our model (and the data that goes with it). It is we, not nature, who are uncertain about each trajectory. 1 We now describe informally the mechanisms by which we would introduce uncertainty into our models.

A special and important form of uncertainty is parametric uncertainty, which arises in even the simplest models such as attempting to predict the detailed trajectory of a coin. Here the “parameters” include the coin's initial conditions and moments of inertia, and the floor's elasticity and friction. Parameters are associated with mechanisms that are modeled in detail but have highly structured uncertainty. Roughly speaking, all of the “inputs” to a simulation model are parameters in the sense we use the term here. 2

How do we deal with parametric uncertainty (see also Appendix D)?

- Average case. If only average or typical behavior is of interest, this can be easily evaluated with a modest number of repeated Monte Carlo simulations with random initial conditions. In this case the presence of parametric uncertainty adds little difficulty beyond the cost of a single simulation. Also, in the average case the number of parameters does not make much difference, as estimates of probability distributions of outcomes do not depend on the number of parameters.

- Linear models. If the parameters enter linearly in the model, the resulting uncertainty is often easy to analyze. To be sure, we can have extreme sensitivity to initial conditions, but the consequences are easily understood. Consider the linear dependence of the velocity and position of the first floor collision as a function of the initial velocities and positions of the coin. A set in the initial condition space is easily mapped to a set of collision conditions. Both average and worst-case behavior can be evaluated analytically. For example, suppose we have some scalar function F(p), where p is some vector of n parameters. If F is linear, then evaluating its largest values over a convex set of parameters is straightforward, and in the case where each component of the parameters is in an interval, it is trivial.

- Nonlinear/worst-case/rare event. If the parameters enter nonlinearly, then the analysis becomes more difficult, particularly if we are interested in worst-case behavior or rare events. For example, if F(p) is nonlinear and has no exploitable convexity properties, we may have to do a global and exhaustive search of p to determine, say, the maximum value of F. Such a search will grow exponentially with the dimension n of p. While this is an entirely different role of exponential growth than in sensitivity to initial conditions, the consequences are no less dramatic. If we only choose to examine a gridding of the space of parameters where we take 10 values of each element (this could be too coarse to find the worst case), then the number of functional evaluations will be 10^n. No matter how quickly we do the functional evaluations, this exponential growth prohibits exploring more than a handful of parameters.

1 Except in our discussion of VLSI later in this appendix, we ignore quantum mechanics and the intrinsically probabilistic behaviors associated with it. Quantum effects are only very rarely significant for the systems of interest here.

2 Some workers distinguish between “parameters” that can be changed interactively at run time, or in the course of a run, and “fixed data,” that can be changed only by recompiling the database. Both are parameters for the purposes of this paper.
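The three cases in the list above can be compared side by side in a small sketch. Everything concrete here is an assumption chosen for illustration: the function names, the linear coefficients, the nonlinear test function F(p) = p0·p1 − p2², the box [−1, 1]^3, and the uniform sampling distribution.

```python
import itertools
import random
from typing import Callable, List, Sequence, Tuple


def monte_carlo_mean(F: Callable[[Sequence[float]], float],
                     lo: List[float], hi: List[float],
                     trials: int, seed: int = 0) -> float:
    """Average case: mean of F over parameters sampled uniformly from a box."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        total += F([rng.uniform(l, h) for l, h in zip(lo, hi)])
    return total / trials


def linear_worst_case(c: List[float], lo: List[float], hi: List[float]) -> float:
    """Linear case: max of sum(c_i * p_i) over a box is attained at a corner,
    taking the upper bound where c_i > 0 and the lower bound otherwise."""
    return sum(ci * (h if ci > 0 else l) for ci, l, h in zip(c, lo, hi))


def grid_worst_case(F: Callable[[Sequence[float]], float],
                    lo: List[float], hi: List[float],
                    points: int = 10) -> Tuple[float, int]:
    """Nonlinear case: exhaustive grid search, costing points**n evaluations."""
    axes = [[l + (h - l) * k / (points - 1) for k in range(points)]
            for l, h in zip(lo, hi)]
    best = max(F(p) for p in itertools.product(*axes))
    return best, points ** len(lo)


lo, hi = [-1.0] * 3, [1.0] * 3
coeffs = [2.0, -1.0, 0.5]                      # hypothetical linear model
linear_F = lambda p: sum(ci * pi for ci, pi in zip(coeffs, p))
nonlinear_F = lambda p: p[0] * p[1] - p[2] ** 2  # hypothetical nonlinear model

print(monte_carlo_mean(linear_F, lo, hi, trials=10_000))
print(linear_worst_case(coeffs, lo, hi))        # 3.5
print(grid_worst_case(nonlinear_F, lo, hi))     # 1000 evaluations for n = 3
```

For this test function the true maximum over the box is 1 (at p2 = 0), but a 10-point grid on [−1, 1] never samples p2 = 0, so the search returns a slightly smaller value. This is the "too coarse" caveat in miniature, and the same grid on 20 parameters would already need 10^20 evaluations.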

It does not take highly nonlinear dynamics to produce nonlinear dependence on parameters. A simplified model of coin flipping with a linear model for collisions and a linear model for flight between collisions is piecewise linear, but this is enough to produce complicated dependence on initial conditions. In a model with linear dynamics, the dependence of final conditions on initial conditions is linear, but parameters such as mass, moments of inertia, resistance, and so on, can enter the equations nonlinearly, thereby producing a nonlinear dependence of the trajectories on these parameters.
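The scalar linear system x(t+1) = ax(t) already shows this distinction: its solution a^t x(0) is linear in the initial condition x(0) but nonlinear in the parameter a. The sketch below checks superposition in each argument; the function name and the specific numbers are illustrative choices.

```python
def final_state(a: float, x0: float, t: int) -> float:
    """Solution of x(t+1) = a x(t) after t steps: x(t) = a^t x(0)."""
    return (a ** t) * x0


t = 3

# Superposition holds in the initial condition: f(x0 + y0) == f(x0) + f(y0).
combined_x0 = final_state(2.0, 1.0 + 3.0, t)                      # 32.0
summed_x0 = final_state(2.0, 1.0, t) + final_state(2.0, 3.0, t)   # 8.0 + 24.0

# Superposition fails in the parameter: f(a + b) != f(a) + f(b).
combined_a = final_state(1.0 + 2.0, 1.0, t)                       # 27.0
summed_a = final_state(1.0, 1.0, t) + final_state(2.0, 1.0, t)    # 1.0 + 8.0

print(combined_x0 == summed_x0)  # True
print(combined_a == summed_a)    # False
```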

Evaluating worst-case or rare events can also be more difficult than the average case, because Monte Carlo requires an excessive number of trials or we have to exhaustively search all parameters for the worst case. This too can be computationally intractable, depending on the number of the parameters and the functional dependence on them. In some cases, exact calculation of probability density functions can be easier, and numerical methods for evaluating probability density functions by advanced global optimization methods are a current research topic.
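To give a sense of what "an excessive number of trials" can mean, the sketch below computes how many independent Monte Carlo trials are needed to observe an event of probability p at least once with a given confidence, using the identity P(at least one occurrence in n trials) = 1 − (1 − p)^n. The function name, the independence assumption, and the example probabilities are choices made here for illustration.

```python
import math


def trials_needed(event_probability: float, confidence: float) -> int:
    """Smallest n with 1 - (1 - p)^n >= confidence, assuming independent trials."""
    if not (0.0 < event_probability < 1.0 and 0.0 < confidence < 1.0):
        raise ValueError("probability and confidence must lie strictly in (0, 1)")
    # Solve (1 - p)^n <= 1 - confidence for n; log1p keeps accuracy for tiny p.
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-event_probability))


print(trials_needed(0.5, 0.95))    # 5
print(trials_needed(1e-6, 0.95))   # roughly three million
```

Merely seeing a one-in-a-million event once, with 95 percent confidence, takes on the order of three million simulations under these assumptions, and estimating its probability with any accuracy takes many more. An average, by contrast, is typically usable after far fewer runs.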

Of course, we would always hope to discover some property of the parametric dependence that makes it possible to avoid this exponential growth in computation with the number of parameters. Unfortunately, it has been proved that evaluating, say, the stability of even the simplest possible nontrivial linear system depending on parameters is NP-hard, which implies that exponential growth is in a certain sense unavoidable. To explain the implications of this for evaluating parametric uncertainty will require several additional concepts, including obviously the meaning of NP-hard, and will be taken up in more detail later.

Not all uncertainty is naturally modeled as parametric. Suppose the fluid in which our coin is tossed cannot be neglected, as would be the case for a light paper coin in air, or a coin in water. The coin may exhibit erratic and apparently random motion as it falls. While we could in principle model the fluid dynamics of the atmosphere in detail, the error-complexity tradeoff is very unfavorable. Traditionally, noise is instead modeled as a stochastic process even when it is more appropriate to think of it as chaotic. That is easy to see in the case of wind gusts generated by fluid motions in the air. Just because the wind appears random and we do not want to model the fluid in detail does not mean that wind is naturally viewed as a random process. We model it as a stochastic process for convenience.

Other examples of phenomena that are often modeled as noise include arrival times for queues, thermal noise in circuits, and even the outcome of the process of coin flipping itself. In each case, the mechanism generating the noise is typically not modeled in detail, but some aggregate probabilistic or statistical model is used. Robust control has emphasized set descriptions for noise, in terms of statistics on the signals such as energy, autocorrelations, and sample spectra, without assuming an underlying probability distribution. Recently, the stochastic and robust viewpoints have been reconciled by the development of set descriptions that recover many of the characteristics and much of the convenience of stochastic models. Finally, Monte Carlo simulations adequately generate noise with pseudo-random number generators, which are neither truly chaotic nor random, but are periodic with very long periods. 3 Thus, it makes little sense to be dogmatic about insisting that models be stochastic, per se, but it is important that noise sources be explicitly modeled in some reasonable way. This may be accomplished in a number of ways:

-

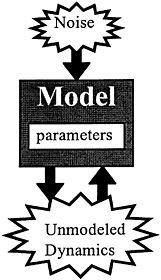

Unmodeled dynamics. The use of constant parameters or noise to model aerodynamic forces generated by the fluid around our coin means not treating explicitly the complex, unsteady fluid flows that more accurately describe the physics. Even if we attempt to model the fluids in some detail, the forces and moments that the resulting model predicts will be “felt” by the coin will be wrong, and the difference may depend on the coin's movements. It may be undesirable to then model these forces as noise, since that assumes that they do not depend on the coin's movement. We may choose to model this type of uncertainty with a mechanism similar to noise above, but involving relationships between variables, such as coin velocities and forces. As in noise modeling, we use bounds and constraints, but now on the signals describing both the coin and the fluid. Similarly, rigid body models assume that forces directly generate rigid body motion, while models that include flexible effects allow for bending as well. Rather than modeling the flexibility in detail, we may choose to bound the error between forces and rigid body motions as constraints on signals. 4

3 Some MC simulations have been done with genuinely random physical sources, such as decay of radioactive isotopes.

FIGURE B.3 Relationships of models and their parameters with noise and unmodeled dynamics. Noise excites model dynamics but not vice versa.

Noise, then, is a special case of unmodeled dynamics in which one assumes that the unmodeled dynamics excite the modeled dynamics but not vice versa (see Figure B.3). For example, modeling atmospheric gusts as noise assumes that the vehicle or coin motion has a negligible effect on the atmosphere compared to the fluid motions in the atmosphere generated by other sources. This is a reasonable assumption in many circumstances but might be unreasonable for, say, airplanes flying near large fixed objects such as the ground.

4 This issue of how to treat unmodeled dynamics comes up in quasi-steady aerodynamics of airplanes, which is a particular problem when considering the interactions of closely spaced vehicles whose flows affect each other, or when the vehicle is highly flexible. It is currently beyond the state of the art to do computational fluid dynamics (CFD) for a moving vehicle in a way that would allow the use of CFD codes in vehicle simulation. It is possible to put explicit uncertainty models into the coefficients and analyze their effects using methods from robust control. In fact, exactly this type of analysis for the Shuttle Orbiter during reentry and landing was among the first commercial successes of robust control, and the use of robustness analysis software is now standard in the Shuttle program. CFD, robust control, and the Shuttle reentry will be discussed in more detail later.

-

Sensitivity to uncertainty. While we have discussed STIC with simple examples, models can be sensitive to all forms of uncertainty, not just initial conditions. This is a much more subtle notion and requires, unfortunately, deeper mathematics to explain. Indeed, as we will see from the engineering example later, sensitivity to unmodeled dynamics is a much greater problem generally than sensitivity to initial conditions.

To get some sense of the issues here, suppose that we develop a model of the falling paper that assumes the paper is rigid and the flow is very simple, and that it seems to capture the qualitative dynamics of the experiment. Suppose we then try to use this model to predict, before doing any experiment, what would happen with tissue paper, where there is substantial flexibility. The behavior might be totally different, and no choices of parameters in our simpler model would capture the behavior of the falling tissue. One way to approach the tissue would be to try initially to bound the effects of flexibility in a rough way and check whether this makes any difference to the outcome (assume for the moment we have some tools to do this). Presumably, this would reveal that the small flexibility makes little difference for the bifurcation analysis with regular paper, but makes a large difference with the tissue. The uncertain model of the tissue would suggest that, without a more detailed model of the tissue flexibility, we would be unable to predict details of the trajectory as reliably as in the regular paper case. Thus, our initial model of the tissue would have been sensitive to unmodeled dynamics.

-

Games with hostile adversaries. This appendix deals largely with “hard” engineering examples, but before leaving the subject of uncertainty, it is worth noting a complication of particular importance in military applications—notably, the presence of a hostile adversary. It is a complication without direct parallel in normal science and engineering. Nature, while perhaps sometimes seeming to be capricious, does not consciously plan how to complicate our lives. In conflict, all the participants may have strategies that change in response to perceived actions of the other participants. These changes may seek to optimize some feature of events, or may merely be dictated by doctrine. They may be objectively “optimal” in some sense, or they may be idiosyncratic and risk-taking. Some adversaries may wish to optimize the likelihood of complete success, caring little about “expected value.” And all humans are subject to well-known cognitive biases that influence decisions.

Now, some of the methods discussed in this appendix might well be useful in modeling potential adversary behaviors. We could, for example, model all participants' options and reasoning, and then look at, say, minimax strategies. Indeed, robust control theory often models parameters, noise, and unmodeled dynamics in such a way that control design can be viewed as a differential game between the controller and “nature.” Such methods could be quite useful for designing robust strategies. We will not pursue this subject further here, except to notice parenthetically that it is much easier to produce a computer program that plays grandmaster-level chess (which has been done) than it is to model accurately the actual play of a grandmaster (which might not be done in the foreseeable future). 5

The motivation for introducing uncertainty mechanisms into our models is to predict the range and distribution of possible outcomes of our experiments. What is somewhat misleading about coin tossing with respect to the broader VE area is the small scale of the experiment and its relative homogeneity. In VE modeling we must expect huge numbers of components with extremely diverse origins. We have discussed the features of a model that included nonlinearity, dynamics, interconnection of heterogeneous components, complexity, and uncertainty. Of these, only uncertainty is an intrinsic property of the modeling process, with the others introduced—perhaps reluctantly—to reduce the uncertainty in our models. This uncertainty management is the driving force behind complexity in models.

Conventional modeling in science and engineering is basically reductionist. Experiments are designed to be controlled and repeatable, usually with certain phenomena isolated. It is widely thought that if we model a system with sufficient accuracy, then we can reliably predict the behavior of that system. This standard modeling paradigm is poorly suited to VE. The phenomenon of deterministic chaos has cast some doubt on the conventional view, and it is now widely appreciated, even among the lay public, that quite simple models can produce apparently complex and unpredictable behavior. What is less well appreciated is an entirely different issue: how uncertainty in components interacts with the interconnection of components to produce uncertainty at the system level. There is simply no way of telling how accurately a component must be modeled without knowing how it is to be connected. Thus both the component uncertainty and the interconnection determine the impact of that uncertainty.
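A toy calculation illustrates how the interconnection, and not the component alone, sets the required component accuracy. The example is an assumption introduced here for illustration: a static component with gain g = 0.9, known to within 1 percent, is used either in series or inside a positive feedback loop with closed-loop gain 1/(1 - g).

```python
def series_gain(g):
    """System gain when the component is simply placed in the signal path."""
    return g


def feedback_gain(g):
    """System gain when the same component closes a positive feedback loop."""
    return 1.0 / (1.0 - g)


def relative_change(system, g, component_error):
    """Relative change in system gain caused by a relative component error."""
    nominal = system(g)
    perturbed = system(g * (1.0 + component_error))
    return abs(perturbed - nominal) / abs(nominal)


g, err = 0.9, 0.01
print("series:  ", relative_change(series_gain, g, err))
print("feedback:", relative_change(feedback_gain, g, err))
```

The same 1 percent component error produces a 1 percent system error in series but roughly a 10 percent error in the feedback configuration, and the amplification grows without bound as g approaches 1.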

One reason that explicit uncertainty modeling is uncommon is that the typical “consumer” of a conventional model is another human sharing domain-specific expertise and an understanding of standard assumptions. Model uncertainty and the interpretation of assumptions are typically implicit and also part of the domain-specific expertise. Most scientific and engineering disciplines can almost be defined in terms of what they choose to both focus on and neglect about the world. Within domains, the dominant assumptions and viewpoints are taken for granted and never mentioned explicitly. In the coin flipping experiment, terms such as inviscid flow might be mentioned, but not something like the assumption of chemical equilibrium.

5 Within the military domain, there are examples to illustrate all of these points. For example, RAND's SAGE algorithm, developed by Richard Hillestad, is used in theater-level models to “optimally” allocate and apportion air forces across sectors and missions. This not only establishes bounds on performance, but also reduces the number of variables, which makes it much easier to focus on tradeoffs among weapon systems and forces. This said, it is important to run cases in which the sides follow doctrine, because that behavior may be quite different and more realistic.

Problems with conventional modeling arise in VE because the consumer of a model will be a larger model in a hierarchical, heterogeneous model, with possibly no intervention by human experts. We cannot rely on theory or software that is domain-specific, implicit, and requires human interpretation at every level. The more complex the system, the less critical individual components may become but the more critical the overall system design becomes. Furthermore, we are interested in modeling real-world behavior, not an idealized laboratory experiment.

The next question we address is, What constitutes a sensible notion of model error or fidelity? Again starting with a simple “naive” view, let us imagine that reality has some set of behaviors, that our model has some set of behaviors, and that we have some measure of the mismatch between these two sets. These may be heroic assumptions, but let us press forward for now.

Putting aside the cognitive preference for simplicity, we would perhaps obviously prefer high-fidelity models. The obstacles to model fidelity are the costs of modeling due to limited resources, mainly:

- Computation,
- Measurement, and
- Understanding.

These limitations are ultimately connected to time and money. This suggests tradeoffs.

For the time being, we can think of computation cost naively as simply the cost of the computer resources to simulate our model, and measurement cost as the cost to do experiments, take data, and process those data to determine parameters in our model. It is obviously not so easy to formalize what we mean by understanding, but it is clear that we can have vastly different levels of understanding about different physical processes and this can greatly affect our ability to effectively model them.

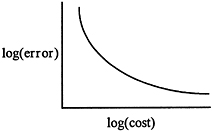

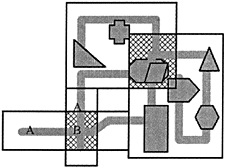

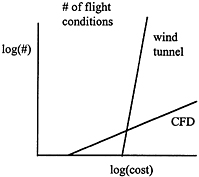

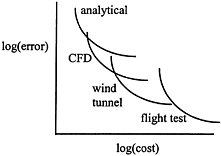

Figure B.4 illustrates the tradeoff between fidelity and complexity, or more precisely the tradeoff between model error and resources. As we use more resources, we can reduce our error, but there are strong effects of diminishing returns. 6

6 Actually, errors could increase with cost if resources are used to build extra complexity to the point that the model begins to collapse under its own weight, but here we assume optimal use of the resources.

FIGURE B.4 Error-cost tradeoff.

A well-known example occurs in weather prediction. For standard computer simulations used in weather prediction, the error between the prediction and the actual weather grows with time due to sensitivity to initial conditions. Long-term predictability is viewed by experts as being impossible, and even massive improvements in computation and measurement will yield diminishing returns.
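The diminishing returns can be sketched with a deliberately crude model. Assume, purely for illustration, that an initial measurement error grows exponentially at a fixed rate until it reaches a tolerance beyond which the forecast is useless; the growth rate and tolerance below are hypothetical numbers, not properties of any real weather model.

```python
import math


def predictability_horizon(initial_error, tolerance, growth_rate):
    """Time at which initial_error * exp(growth_rate * t) reaches tolerance."""
    return math.log(tolerance / initial_error) / growth_rate


GROWTH_RATE = 0.5  # hypothetical error growth rate, per day
TOLERANCE = 1.0    # hypothetical error level at which the forecast is useless

for initial_error in (1e-2, 1e-3, 1e-4, 1e-5):
    days = predictability_horizon(initial_error, TOLERANCE, GROWTH_RATE)
    print(f"initial error {initial_error:g}: horizon {days:.1f} days")
```

Under this assumption each tenfold improvement in measurement accuracy buys the same fixed increment of forecast time, ln(10) divided by the growth rate, no matter how much accuracy has already been achieved.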

We can summarize the previous discussion as follows. No matter how sophisticated our models, there is always some difference between the model and the real world. Model uncertainty leads to unpredictability, which mirrors the unpredictability of real systems. This has two important aspects. One is that models can exhibit extreme sensitivity to variations in model assumptions, parameters, and initial conditions. The second, discussed below, is the combinatorial complexity of evaluating all the model combinations that arise from possible variations in assumptions, parameters, and initial conditions in all the subsystems, which makes a brute force enumeration prohibitively expensive. These are some of the fundamental limitations on the predictability of models, which will not be eliminated by advances in computational power, measurement technology, or scientific understanding. Thus in developing robust VE, there are certain “hard” limits on predictability, and it is important to understand and quantify the limits on the predictability of full system models in terms of the uncertainties in component models. Current VE enterprises generally do not have good strategies for dealing with these uncertainties, or for understanding how they propagate through the system model and ultimately affect the decision-making process they were intended to serve.

To make reliable predictions about the systems being modeled under uncertainty, we are often forced to add complexity in the form of nonlinearities, dynamics, and interconnections of heterogeneous components. Unlike uncertainty, none of these are intrinsic properties of our systems and models, but are added, perhaps reluctantly, to reduce uncertainty. It is tempting to say that all real systems are nonlinear, dynamic, and heterogeneous, but this is meaningless since these are properties of models, not reality (although we will use the convenient shorthand of referring to some phenomena as, say, nonlinear when it might be more precise to say that high-fidelity models of the phenomena must be nonlinear). It would be more appropriate to say that modeling of physical systems leads naturally and inevitably to the introduction of such mathematical features. Complexity is due, in this view, simply to the presence of these features, and to the degree of their presence. This view of complexity will be a theme throughout, so let us consider a bit more detail on the meaning of dynamics, nonlinearity, and heterogeneity.

A dynamic model is simply one whose variables evolve with time, perhaps described by differential or difference equations, or perhaps more abstractly. For partial differential equations, the situation is even more complicated, since solutions can vary continuously with space as well as time. Thus, dynamical systems tend to be much more difficult to work with than static systems.

The importance of linear models is that they satisfy superposition, so that a linear function of a list of variables can be completely characterized by evaluating the function on one value of each variable taken one at a time. In other words, the local behavior of a linear function completely determines its global behavior. Nonlinearity is the absence of this property, so that local information may say nothing about global behavior. What is critical in modeling uncertain systems is how the quantity that we want to predict depends on the uncertainty we are modeling. A linear dynamical system can depend nonlinearly on parameters in such a way as to make evaluation of the possible responses of the system very difficult. In turn, a nonlinear dynamical system may have outputs that depend only linearly on some parameters, and this may be easily evaluated. What is critical is the dependence on the uncertainty.

Again, nonlinearities are not responsible for sensitivity to initial conditions. It is when nonlinearities are combined with sensitivity to initial conditions that behavior can be a complex and unpredictable function of the initial conditions.

Heterogeneity in modeling arises from the presence in complex systems of component models dealing with material properties, structural dynamics, fluids, chemical reactions, electromagnetics, electronics, embedded computer systems, and so on, each represented by a separate and distinct engineering discipline. Often, modeling methods in one domain are incompatible with those in another.

Even when we can break the system into multiple levels with the lowest level containing only one modeling domain, the component models must be combined. Thus, a critical issue that must be dealt with in every aspect of modeling is how to combine heterogeneous component models.

A classical example of a mildly heterogeneous system occurs in the phenomenon of flutter, an instability created by the interaction of a fluid and an elastic solid, or more generally a solid that has nontrivial dynamics. It is a critical limiting factor in the performance of many aircraft wings and jet engines, and a simple version of it could be seen in the “flutter” of the paper in the experiment above. There are two approaches to treating such heterogeneous systems. One is to do a fully integrated model of the system, which in this case is the domain of the field of aeroelasticity. The other is to make simplifying approximations about the boundary conditions between the heterogeneous components and then interconnect them. For flutter, this is typically done by assuming that the fluid can be treated quasi-statically, so that forces and moments generated by the fluid do not depend on the dynamics of the solid material. This way, the dynamics of the fluid can be treated separately, approximated as a static map, and then simply connected with the dynamics of the solid. If this approximation is reasonable, as it is in our paper experiment, then simple models can predict the presence or absence of flutter.

Flutter is only a mildly heterogeneous system, because it involves continuum mechanical phenomena, but, for example, no chemistry, electromagnetics, or thermodynamics. In more profoundly heterogeneous systems, it is not possible to create huge new engineering domains to address the unique modeling problems associated with each possible combination of modeling problems. We must make suitable approximations of the boundary conditions between domains/components.

In simple situations it is often possible to use aggregated models that capture the system behavior without detailed treatment of the system's internal mechanisms. However, in developing detailed models of complex systems, it is common practice to break the system up into components, which are then modeled in detail. This can continue recursively at several levels to produce a hierarchical model, and the full system must then be built up from the component models.

This disaggregation approach is essentially the only way that complex system models can be developed, but it leads to a number of difficult problems, including the need to connect heterogeneous component models, and the need for multi-resolution or variable granularity models. Perhaps more importantly, this neo-reductionist approach to modeling may represent a reasonable scientific program for discovering fundamental mechanisms, provided one never wants to reconstruct a model of the whole system. Applied naively, it simply does not work very well as a strategy for modeling complex systems.

There is need for multi-resolution or variable granularity models, because the process of component disaggregation can continue indefinitely to create an infinitely complex model (see also Appendix E ). It is possible, and even likely, that this process will not converge, as there can easily be extreme sensitivity to small component errors that are the consequence of interconnection and that can be evaluated only at the system level. For example, there might exist quite simple models for components and their uncertainty that interconnect to reliably predict system behavior, but it can be essentially impossible to discover these models by viewing the components in isolation. Thus one is in the paradoxical position of needing the full system to know what the component models should be, but having only the component models themselves as the route to creating a system model. Neither a purely top-down nor a bottom-up approach will suffice, and more subtle and iterative approaches are needed. Even the notion of what is a “component” is not necessarily clear a priori, as the decomposition is never unique and often the obvious thing to do is severely suboptimal.

The standard approach to developing variable error-complexity component models is to allow multi-resolution or variable granularity models. Simple examples of this include using adaptive meshes in finite element approximations of continuum phenomena, or multi-resolution wavelet representations for geometric objects in computer graphics. In hierarchical models, the problem of developing analogous variable resolution component models is quite subtle and will surely be an important research area for some time. Using these same examples, there are difficult problems associated with modeling continuum phenomena involving fluid and flexible structure interaction, as well as phase changes. Similarly, building aggregate multi-resolution models of interconnected geometric objects from multi-resolution component models is a current topic of research in computer graphics.

With this background of basic concepts, let us now consider some case studies to illustrate successful VE. Advocates of the power of modeling and simulation typically extrapolate from three shining examples, which could be thought of as “proto-VE” case studies: the computer-aided design (CAD) of the Boeing 777, computational fluid dynamics (CFD), and very large scale integrated circuits (VLSI). Each has made great use of computation and has challenged in various ways available hardware and software infrastructures. While these success stories are certainly encouraging, great caution should be used in extrapolating to more general situations, as a review of the state of the art will reveal. Indeed, each of these domains has serious limitations and faces major challenges. Examples of major failures of complex engineering systems (e.g., the Titanic, the Tacoma Narrows bridge, the Denver baggage handling system, and the Ariane booster) should cause us all to be sobered. We will argue that uncertainty management together with dynamics and interconnection are the key to understanding both these successes and failures and the future challenges.

FIGURE B.5 The Boeing 777. SOURCE: Boeing Web site (www.boeing.com/companyoffices/gallery/images/commercial/).

Boeing invested more than $1 billion (and insiders say much more) in CAD infrastructure for the design of the Boeing 777 (see Figure B.5), which is said to have been “100 percent digitally designed using three-dimensional solids technology.” Boeing based its CAD system on CATIA (short for Computer-aided Three-dimensional Interactive Application) and ELFINI (Finite Element Analysis System), both developed by Dassault Systemes of France and licensed in the United States through IBM. Designers also used EPIC (Electronic Preassembly Integration on CATIA) and other digital preassembly applications developed by Boeing. Much of the same technology was used on the B-2 program.

While marketing hype has exaggerated aspects of the story, the reality nonetheless is that Boeing reaped huge benefits from design automation. The more than 3 million parts were represented in an integrated database that allowed designers to do a complete 3D virtual mock-up of the vehicle. They could investigate assembly interfaces and maintainability using spatial visualizations of the aircraft components to develop integrated parts lists and detailed manufacturing process and layouts to support final assembly. The consequences were dramatic. In comparing with extrapolations from earlier aircraft designs such as those for the 757 and 767, Boeing achieved the following:

- Elimination of >3,000 assembly interfaces, without any physical prototyping,
- 90 percent reduction in engineering change requests (6,000 to 600),
- 50 percent reduction in cycle time for engineering change requests,
- 90 percent reduction in material rework, and
- 50-fold improvement in assembly tolerances for the fuselage.

While the Boeing 777 experience is exciting for the VE enterprise, we should recognize just how limited the existing CAD tools are. They deal only with static solid modeling and static interconnection, and not—or at least not systematically—with dynamics, nonlinearities, or heterogeneity. The virtual parts in the CATIA system are simply three-dimensional solids with no dynamics and none of the dynamic attributes of the physical parts. For example, all the electronics and hydraulics had to be separately simulated, and while these too benefited from CAD tools, they were not integrated with the three-dimensional solid modeling tools. A complete working physical prototype of the internal dynamics of the vehicle was still constructed, a so-called “iron-bird” including essentially everything in the full 777.

While there was finite element modeling of static stresses and loads, all dynamical modeling of actual flight, including aerodynamics and structures, was done with “conventional” CFD and flight simulation, again with essentially no connection to the three-dimensional solid modeling. Thus while each of these separate modeling efforts benefited from the separate CAD tools available in their specialized domains, this is far from the highly integrated VE environment that is envisioned for the future, and is indeed far from even some of the popular images of the current practice. Thus while a deeper understanding of the 777 does nothing to reduce our respect for the enormous achievements in advancing VE technology or dampen enthusiasm for the trends the 777 represents, it does make clear the even greater challenges that lie ahead.

What are the next steps in CAD for projects like the 777? Broadly speaking, they involve much higher levels of integration of the design process, both laterally across the various subsystems, and longitudinally from preliminary design, through testing, manufacturing, and maintenance. They will require more systematic and sophisticated treatment of uncertainties, especially when dynamics are considered in a unified way. This will require introducing nonlinearities, heterogeneity, and variable resolution models.

FIGURE B.6 Two multicomponent subassemblies in the 777 connecting only at point A.

Boeing engineers view these steps as enormous challenges that must be faced. Even something as simple-sounding as using the CATIA database describing the three-dimensional layout of the hydraulics and their interconnections as a basis for a dynamic simulation of the hydraulics remains an open research problem, let alone using CATIA as a basis for dynamic modeling and simulation of aerodynamics and structures. What is difficult to appreciate is how the sheer scale of keeping track of millions of components can be computationally and conceptually overwhelming.

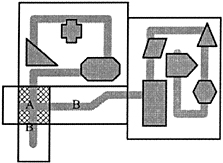

To illustrate some of the issues in the three-dimensional solid modeling for the 777, consider yet another simple experiment in two dimensions. Suppose that we have two two-dimensional subassemblies, each consisting of several components, that we wish to interconnect at point A as shown in Figure B.6 (the components shown have no meaning and are simply for illustration). We want to be sure there are no unwanted intersections in the design, and it is clear from Figure B.6 that this assembly has no connections except at point A.

The 777 has millions of such parts. A virtual mock-up can be made from a parts and interconnection list so that designers can “fly through” the design to check for unwanted interconnections. The computer can also automatically check for such interferences so that these can be identified and redesigned before they are discovered (more dramatically and at much greater expense) during physical assembly. If there are n components, we can think of an n × n matrix of pairs of potential collisions, so n = 3,000,000 parts would have $n(n-1)/2 \approx 4.5 \times 10^{12}$ possible intersections to be checked. Although this grows only quadratically with the number of parts (not the exponential growth we are so concerned with elsewhere), the sheer number of parts makes brute force enumeration unattractive. Fortunately, there are standard ways to reduce the search.

FIGURE B.7 Simple bounding boxes with large intersection and not conclusively eliminating unwanted subassembly interconnection.

We could begin by putting large bounding boxes around the subassemblies, as shown in Figure B.7. This could be used to eliminate potential intersections far away from these subassemblies (resulting in large sections of our interconnection matrix that would not need to be checked), but would not conclusively eliminate unwanted connections between the subassemblies. At this point, all the pairs of components of the subassemblies could be checked, or we could refine the bounding boxes, as shown in Figure B.8. After refinement, we would have eliminated all but 2 components, and they could be checked to see that the only intersection was at A. The bounding boxes in this case reduced the cost from computing 24 pairwise (4 × 6) intersections to computing 1 pairwise intersection and a few bounding boxes. The bounding boxes have simple geometries, so are more easily checked than the components, but need to be constructed. Clearly, there is a tradeoff, and one does not want to use too many or too few bounding boxes.
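The pruning idea can be sketched in a few lines. The representation of a component as a list of two-dimensional vertices, the function names, and the toy layout of 4 and 6 square components are all assumptions made for illustration; the sketch uses a single level of per-component boxes and omits the coarser subassembly-level boxes described above.

```python
def bounding_box(vertices):
    """Axis-aligned box (xmin, ymin, xmax, ymax) around a component."""
    xs = [x for x, _ in vertices]
    ys = [y for _, y in vertices]
    return min(xs), min(ys), max(xs), max(ys)


def boxes_overlap(a, b):
    """True if two axis-aligned boxes share at least one point."""
    return a[0] <= b[2] and b[0] <= a[2] and a[1] <= b[3] and b[1] <= a[3]


def candidate_pairs(assembly_a, assembly_b):
    """Index pairs whose boxes overlap; only these need an exact check."""
    boxes_a = [bounding_box(c) for c in assembly_a]
    boxes_b = [bounding_box(c) for c in assembly_b]
    return [(i, j)
            for i, box_a in enumerate(boxes_a)
            for j, box_b in enumerate(boxes_b)
            if boxes_overlap(box_a, box_b)]


def square(x, y):
    """Unit square with lower-left corner (x, y); a hypothetical component."""
    return [(x, y), (x + 1, y), (x + 1, y + 1), (x, y + 1)]


# Toy subassemblies that touch only at the point (1, 1).
assembly_a = [square(0, 0), square(0, 3), square(0, 6), square(0, 9)]
assembly_b = [square(1, 1), square(3, 0), square(3, 3),
              square(3, 6), square(3, 9), square(5, 0)]

pairs = candidate_pairs(assembly_a, assembly_b)
print(len(assembly_a) * len(assembly_b), "pairs in total;", len(pairs), "left to check:", pairs)

# Number of distinct part pairs for a 3,000,000-part design.
n = 3_000_000
print(n * (n - 1) // 2)
```

The box tests here are still quadratic in the number of components; in a hierarchy of boxes, a single failed overlap test at a coarse level discards a whole block of the pair matrix at once.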

FIGURE B.8 Refined boxes show only intersection is at A.

FIGURE B.9 Refined boxes help find interferences.

This is an example of a general technique for searching called divide and conquer, where the problem is broken up into smaller pieces using some heuristic. It is related to branch and bound, where, say, a function to be minimized is searched by successively breaking its domain into smaller pieces on which bounds of the function are computed. Suppose we want to compute the minimum distance between components that are not supposed to be connected (we want to make sure this function is bounded away from zero). A bounding box gives us upper and lower bounds on this function for the component combinations that are included in the bounding boxes. We can ignore pairs of boxes that are separated by more than the smallest upper bound we have, thus pruning the resulting tree of refined bounding boxes. This is illustrated in Figure B.9, where the subassemblies are connected at point B, instead of A. The refined bounding boxes show how they can be used to focus the search for unwanted interconnections.
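The pruning rule just described can be made concrete with a small branch-and-bound sketch in Python. Several simplifications are assumed here and are not part of the original discussion: components are represented by sampled points, boxes are axis-aligned, and the names `Node`, `leaf`, `group`, `box_gap`, and `min_distance` are hypothetical. The gap between two boxes serves as the lower bound, and the smallest exact distance found so far serves as the upper bound.

```python
import math
from dataclasses import dataclass, field

Point = tuple[float, float, float]


@dataclass
class Node:
    """A bounding box that holds either child boxes or leaf geometry (points)."""
    lo: Point
    hi: Point
    children: list["Node"] = field(default_factory=list)
    points: list[Point] = field(default_factory=list)


def leaf(points: list[Point]) -> Node:
    """Wrap one component (here a list of sample points) in its bounding box."""
    lo = tuple(min(p[k] for p in points) for k in range(3))
    hi = tuple(max(p[k] for p in points) for k in range(3))
    return Node(lo, hi, points=points)


def group(children: list[Node]) -> Node:
    """Bounding box around several child boxes."""
    lo = tuple(min(c.lo[k] for c in children) for k in range(3))
    hi = tuple(max(c.hi[k] for c in children) for k in range(3))
    return Node(lo, hi, children=children)


def box_gap(a: Node, b: Node) -> float:
    """Lower bound on the distance between anything inside a and inside b."""
    gaps = (max(a.lo[k] - b.hi[k], b.lo[k] - a.hi[k], 0.0) for k in range(3))
    return math.sqrt(sum(g * g for g in gaps))


def min_distance(a: Node, b: Node, best: float = math.inf) -> float:
    """Smallest distance between leaf geometry in a and in b, with pruning."""
    if box_gap(a, b) >= best:
        return best  # boxes are farther apart than the best upper bound: prune
    if not a.children and not b.children:
        exact = min(math.dist(p, q) for p in a.points for q in b.points)
        return min(best, exact)
    if a.children:  # refine a first, then b
        for child in a.children:
            best = min_distance(child, b, best)
    else:
        for child in b.children:
            best = min_distance(a, child, best)
    return best


# Hypothetical subassemblies laid out along the x axis.
sub_a = group([leaf([(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]),
               leaf([(2.0, 0.0, 0.0), (3.0, 0.0, 0.0)])])
sub_b = group([leaf([(3.5, 0.0, 0.0), (4.0, 0.0, 0.0)]),
               leaf([(10.0, 0.0, 0.0), (11.0, 0.0, 0.0)])])

result = min_distance(sub_a, sub_b)
print(f"minimum clearance: {result}")
```

In this toy layout the distant component of the second subassembly is never compared point by point, because its box is already farther away than the best clearance found.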

Note that if we introduce uncertainty in our description of the components, it can drastically increase the computation required to do pairwise checking and make the bounding box approach even more attractive. Indeed, while the basics of solid modeling are well developed, even here there is no standard approach to uncertainty modeling, and many questions remain open. Once we introduce uncertainty in a general way, exponential growth in evaluating all the possibilities becomes a worry. Because three-dimensional solids inherently have limited dimensionality of contacts, it should be possible to avoid this. As we will see later, uncertainty in dynamical systems is even more challenging, and a version of the bounding box idea is quite useful in doing robustness analysis of uncertain dynamical systems as well.

It is interesting to note that the Boeing 777 used an advanced, though conventional, approach to modeling and simulation of the aerodynamics and flight. Aeromodeling is a convenient example because there is a long history of successful systematic modeling, yet substantial challenges remain. It also offers us a chance to discuss computational fluid dynamics (CFD) in a broader context that includes analytic tools, wind tunnels, and flight test. This is simply for illustration, but it will touch on broader issues in VE.

FIGURE B.10a Schematic of error-cost relationship of different approaches to obtaining aerocoefficients for flight modeling.

FIGURE B.10b Cost versus number of points at which aerocoefficients are obtained.

Standard rigid body airplane models typically consist of a generic form of the model as an ordinary differential equation (ODE) with vehicle-specific parameters for mass distributions, atmospheric conditions (dynamic pressure), and aerodynamic coefficients. This rigid body model determines what motion of the vehicle will result from applied forces due to propulsion and aerodynamics. Aerocoefficients are parameters that give the ratio of forces and moments generated on the vehicle to surface deflections and angular changes of the vehicle with respect to the ambient air flow. The standard quasi-steady assumption is that these aerocoefficients depend only on sideslip angle and angle of attack, and not on the history of the motion of the vehicle or the complex flow around it. Therefore the aerocoefficients are most compactly represented as a set of six functions, three forces and three moments, on the unit sphere (the two angles), plus additional functions for surface deflections. Obtaining these functions dominates standard aeromodeling and the use of CFD.
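Under the quasi-steady assumption, each aerocoefficient is simply a function of the two flow angles, so in practice it can be stored as a table and interpolated. The Python sketch below illustrates this data structure only. The grid values, the placeholder lift-coefficient formula, and the function name `bilinear` are all invented for the example and do not describe any real aircraft.

```python
from bisect import bisect_right


def bilinear(xs, ys, table, x, y):
    """Interpolate table[i][j], tabulated at (xs[i], ys[j]), at the point (x, y).

    Queries outside the grid are extrapolated from the nearest cell.
    """
    i = min(max(bisect_right(xs, x) - 1, 0), len(xs) - 2)
    j = min(max(bisect_right(ys, y) - 1, 0), len(ys) - 2)
    tx = (x - xs[i]) / (xs[i + 1] - xs[i])
    ty = (y - ys[j]) / (ys[j + 1] - ys[j])
    low = table[i][j] * (1 - tx) + table[i + 1][j] * tx
    high = table[i][j + 1] * (1 - tx) + table[i + 1][j + 1] * tx
    return low * (1 - ty) + high * ty


# Hypothetical grid in degrees: angle of attack and sideslip angle.
alphas = [-5.0, 0.0, 5.0, 10.0]
betas = [-10.0, 0.0, 10.0]

# Placeholder lift coefficient, linear in angle of attack (made-up numbers).
lift_table = [[0.2 + 0.1 * a for _ in betas] for a in alphas]

cl = bilinear(alphas, betas, lift_table, 2.5, 3.0)
print(f"interpolated lift coefficient: {cl:.3f}")
```

A full model would hold six such tables (three forces and three moments) plus the additional functions for surface deflections, and the cost of filling in the table entries is what the following discussion is about.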

Aerocoefficients can be estimated analytically, computed using CFD, or measured in wind tunnels or flight tests. Figure B.10a shows schematically the error-cost tradeoffs associated with the various methods. Once the coefficients are obtained, they can be plugged into a dynamic model of the airplane, and the resulting nonlinear differential equations can be simulated. Figure B.10a is misleading in many ways, one of which is that the error-cost tradeoff between CFD and wind tunnel is oversimplified. Figure B.10b shows the cost to find the aerocoefficients at a certain number of points. Building a wind tunnel model is relatively expensive, but once it is available, the incremental time and cost to do an additional experiment are small. Research is being done to speed up both CFD and the building of wind tunnel models, thus shifting both curves to the left. One of the most revolutionary developments currently going on in this area is the improvement in rapidly generating wind tunnel models from computer models. Researchers are currently working to change the traditional turnaround time from months to hours.
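The qualitative point about fixed versus incremental cost can be illustrated with a toy calculation. The sketch below assumes that each method's cost is a fixed setup cost plus a constant cost per point, which is a simplification introduced here and not a claim about the actual curves. All numbers are in arbitrary units and are purely hypothetical.

```python
def total_cost(fixed: float, per_point: float, n_points: int) -> float:
    """Assumed linear cost model: setup cost plus a constant cost per point."""
    return fixed + per_point * n_points


def break_even_points(fixed_a: float, per_point_a: float,
                      fixed_b: float, per_point_b: float) -> float:
    """Number of points at which the two linear cost curves cross."""
    return (fixed_a - fixed_b) / (per_point_b - per_point_a)


# Hypothetical values: high setup, cheap increments versus no setup, costly points.
TUNNEL_FIXED, TUNNEL_PER_POINT = 1000.0, 1.0
CFD_FIXED, CFD_PER_POINT = 0.0, 11.0

n_star = break_even_points(TUNNEL_FIXED, TUNNEL_PER_POINT, CFD_FIXED, CFD_PER_POINT)
print(f"curves cross at about {n_star:.0f} points")

for n in (50, 200):
    tunnel = total_cost(TUNNEL_FIXED, TUNNEL_PER_POINT, n)
    cfd = total_cost(CFD_FIXED, CFD_PER_POINT, n)
    print(f"n = {n}: wind tunnel {tunnel:.0f}, CFD {cfd:.0f}")
```

With these made-up values the simulation-based method is cheaper when only a few points are needed, and the physical model wins once the number of points is large, which is consistent with the observation that most points in a modern design still come from wind tunnels.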

These figures do not address the fact that CFD and wind tunnels do not give exactly equivalent results. To a first approximation, wind tunnel tests can give lower overall modeling error by allowing the modeler to include additional factors, such as unsteady effects. On the other hand, CFD can provide detailed flow field information that is difficult to obtain experimentally and avoids experimental artifacts like wind-tunnel wall effects. Finally, flight tests give the most reliable predictions of aircraft behavior, although even here there are errors, since not all possible operational conditions can be tested or even necessarily anticipated. This is summarized in Figure B.10a, which shows the error versus cost for various modeling methods, where, vaguely speaking, error is the difference between predicted operational behavior and actual operational behavior, and cost could be taken as the total dollar cost to achieve a given error with a specific method.

Note that the methods are complementary, each best for some particular error-cost level. They are also complementary in other ways, as the deeper nature of the errors is different as well. While the details may change with technology, the overall shape of this figure will not. One goal of VE is to reduce the error associated with simulation-based methods and thus reduce the need for wind tunnel and flight testing. If this is not done carefully, it is quite easy to simultaneously increase error and cost.

This discussion has taken a very superficial view of modeling and particularly of uncertainty, but has hopefully illustrated the tradeoff between error and cost that holds across both virtual and physical prototyping. One important point to note is that despite earlier euphoric visions of the role of CFD in aircraft design that suggested it would almost entirely replace wind tunnels, only a tiny fraction of the millions of aerodynamic simulations generated for a modern aircraft design are done using CFD. The remainder continue to be done with physical models in wind tunnels, and this is not expected to change in the foreseeable future. To get a slightly deeper picture of these issues, we need to examine CFD more closely.

Our second case history involves computational fluid dynamics (CFD). Fluid dynamics is a large and sophisticated technical discipline with both a long history of deep theoretical contributions and a more recent history of major technological impact, so it is not surprising that it is poorly understood by outsiders. We focus on the aspects relevant to the broader VE enterprise.

Rather than following a particular group of molecules, fluid dynamical models adopt a continuum view of the fluid in terms of material elements or volume elements through which the material moves. Because of the simplicity of Newtonian fluids (those for which viscosity is constant, such as air flowing about an airplane), a fairly straightforward system of partial differential equations relates the dynamics of a fluid flow element to its local velocity, density, viscosity, and externally acting forces. These are the Navier-Stokes (N-S) equations, which have been known for more than a century and a half. They merely express conservation of mass and of momentum, but the three-dimensional case has 60 partial-derivative terms.

The N-S equations are thought to capture fluid phenomena well beyond the resolution of our measurement technology. Thus fluid dynamics holds a very important and extreme position in VE as an example of a domain where the resource limitation is due primarily to computation and measurement, rather than to ignorance about the phenomena (although this is not true for granular or chemically reacting flow). As one might expect, then, a major effort in fluid dynamics involves various numerical approximations to solving the N-S or related equations. Such numerical airflow simulations are the subject of computational fluid dynamics (CFD). The obvious approach is direct numerical solution (DNS) of a discrete approximation to the N-S equations. Unfortunately, turbulence makes this extremely difficult.

In effect, turbulence is a catch-all term for everything complicated and poorly understood in fluid flow. While there is no precise definition of turbulence, its general characteristics include unsteady and irregular flows that give something of the appearance of randomness; strong vorticity; stirring and diffusion of passive conserved quantities such as heat and solutes; and dissipation of energy by momentum exchange. Under typical aircraft flight conditions at high subsonic speeds, turbulence takes the form of a nested cascade of eddies of varying scale, ranging from on the order of meters to on the order of tens of micrometers, a span of 4 or more orders of magnitude. On average, the largest eddies take energy from the free flow and, through momentum exchange, feed it down, step by step in the cascade of eddies, to the smallest eddies, where it is dissipated as heat. However, it is also possible for energy to feed from smaller eddies to larger over limited times or regions, and these reverse energy flows can play a significant role. Turbulence is no more random than the trajectories of our coins, but its sensitivity to initial conditions is even more dramatic, since there are so many more degrees of freedom. Turbulence is considered one of the classic examples of chaotic dynamics, although this has not been proved.

To fully capture the dynamics of the airflow in DNS, it is necessary to integrate numerically over a mesh fine enough to capture the smallest turbulent eddies but extensive enough to include the aircraft and a reasonable volume of air about it. This is beyond current computational facilities. To overcome this, various approximations must be made, and these are at the heart of CFD. Here we merely make a few observations. First, as was noted earlier, only a tiny fraction of the millions of aerodynamic simulations generated for a modern aircraft design are done using CFD. The remainder are still done with physical models in wind tunnels, and this is not expected to change soon. Second, CFD is used primarily to compute the static forces on objects that are fixed relative to the flow, and any dynamical vehicle motion combines these static forces with vehicle kinematics. Using CFD for computing dynamically the forces on objects that are themselves moving dynamically adds substantially to the computational complexity.
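A rough order-of-magnitude estimate shows why such a mesh is so demanding. The sketch below assumes a uniform three-dimensional mesh with one point per smallest eddy and a domain no larger than the largest eddy, and it assumes four double-precision variables per point (three velocity components and pressure). These are simplifications introduced here; a real aircraft calculation would need a larger domain, and time stepping adds further cost.

```python
def dns_grid_points(scale_ratio: float) -> float:
    """Mesh points for a uniform 3-D grid resolving the given ratio of scales."""
    return scale_ratio ** 3


# Four orders of magnitude between largest and smallest eddies, as in the text.
scale_ratio = 1e4
points = dns_grid_points(scale_ratio)

# Assumed storage: 4 variables per point, 8 bytes each.
bytes_needed = points * 4 * 8

print(f"mesh points: {points:.0e}")
print(f"storage for one snapshot: {bytes_needed / 1e12:.0f} TB")
```

Even under these optimistic assumptions the mesh has about 10^12 points, and every additional order of magnitude in the range of scales multiplies that count by a thousand.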

Finally, the various approaches to CFD result in widely varying computational requirements, yet there is no integrated “master” model other than the N-S equations themselves, and substantial domain-specific expertise is needed to create specific simulation models and interpret the results of simulations. Some approximations assume no viscosity and others large viscosity; some focus on material elements and their motion (called Lagrangian formulations); others focus on volume elements through which material passes, so that fluid velocities are the focus (called Eulerian formulations); and still others try to track the movement of the larger-scale vortical structures in the fluid. The choices are dominated by the boundary conditions, as the fluid (air) being modeled in each case is identical, and different approximations may even be made in different parts of the same flow. For example, viscosity might be modeled only near a solid boundary, while the flow far from the boundary would be assumed to be inviscid. Thus, while the material itself is perfectly homogeneous, inhomogeneities arise in our necessary attempts to approximate the fluids.

What is particularly interesting for this appendix is the fact that, according to Paul Rubbert,⁷ the chief aerodynamicist for Boeing, uncertainty management has become the dominant theme in practical applications like aircraft design. He claims that uncertainty management is replacing the old concept of “CFD validation.” He argues that both CFD and wind tunnels are “notorious liars,” yet modern aerodynamic designs are increasingly sensitive to small changes and error sources. Thus, better attention needs to be paid to modeling uncertainty and its consequences. CFD and wind tunnels are complementary, and the goal is to be able to reconcile the differences between their results and not to expect that they should give the same results. In this context, CFD users must be provided with the insight and understanding to allow them to manage the various sources of uncertainty that are present in their codes, and to understand how those uncertainties affect the specific aircraft behavior they are trying to predict.

⁷ Private communication with John Doyle, California Institute of Technology, December 1996.

In many respects, commercial aviation already is a remarkable feat in uncertainty management. We routinely get on airplanes and reliably arrive at our destination, and fortunately our airplanes crash much less frequently than our computers. Airplanes move in the very complex system of the earth's atmosphere and together with air traffic control constitute one enormously complex system delivering remarkably reliable transportation. At small time and large scales the atmosphere is also turbulent and chaotic, and occasional crashes due to atmospheric disturbances remind us that this is not a triviality.