APPENDIX D

Elements of ISO 9000

The International Organization for Standardization (ISO) is a federation of organizations representing 92 member countries. It was established in 1946 to "promote the development of international standards and related activities to facilitate the exchange of goods and services" (Breitenberg, 1993). The United States representative to the ISO is the American National Standards Institute (ANSI).

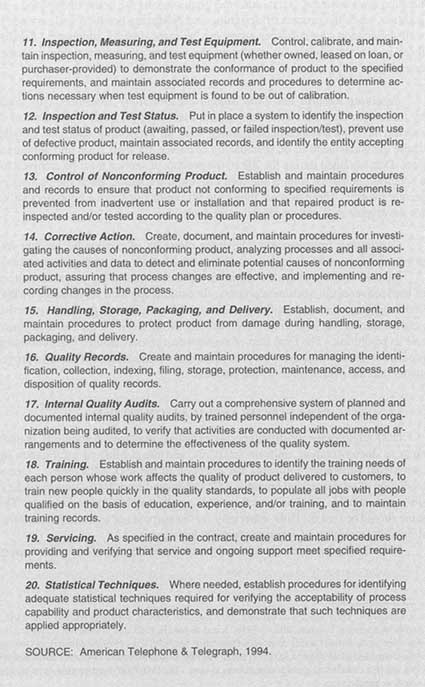

In 1987 ISO issued the ISO 9000 Standard Series, a set of five individual but related standards (9000 through 9004) that provide guidance on requirements for quality systems; see Box D-1. The most comprehensive of these standards is ISO 9001; its elements, or specific requirements, are listed in Box D-2. Significant changes were made to the ISO 9000 standards in 1994. ISO 9004-1 is now the overall guidance standard in the basic set of quality management standards; however, it also retains its original role as a separate standard for organizations to use internally to improve their quality management practices. Further revisions are anticipated in 1998 to move the ISO 9000 family toward "total quality management." The ISO's Technical Committee 176 has already started developing the revisions, which are based on quality management principles and extend beyond the product realization process.

Although the ISO 9000 standards were initially designed for use in contractual arrangements between two parties or for internal auditing, their use has greatly expanded over the last 10 years. More than 96 countries have adopted the ISO quality standards, and most industries throughout the world, including many in the United States, recognize them. Today, many government agencies and private industries worldwide require the organizations with which they do business to comply with one of the standards in the series. The standards have been applied to manufacturing, service industries, software development organizations, and the process industries. Currently, the standards are most often applied to business processes that directly affect the products and services provided by an organization. In the future, the scope of application will be expanded to all areas of enterprises, including finance, accounting, human resources, market research, and marketing.

BASIC PRINCIPLES

The ISO 9000 standards are based on three basic principles: processes affecting the quality of products and services must be documented; records of important decisions and related data must be archived; and when these first two steps are complied with, the product or service will enjoy continuing improvement. We now discuss each of these principles and how adherence to them results in product and service improvement.

Documenting Processes. Documentation often helps to discover important ad hoc processes that are inconsistently applied. This discovery then leads to changes in the processes that result in their more consistent application, which, in turn, reduces the variability in the output of these processes.

Documenting processes also provides a better understanding of how an organization does business. First, customer requirements are better understood. In addition, since the process of designing and producing products and services is well understood, the customer needs can be more directly addressed. Finally, there is a clear process for exploring the sources of and resolving customer complaints.

Retaining Records and Data Supporting Decisions. Decisions that affect the quality of a product or service should be made only by those with the authority to make them, and they need to be documented along with all supporting data and records. These decisions need to be periodically reviewed for their basis and authorization. Data obtained during the life of the product or service must be archived for a specified period of time if the data provide information about the product or service quality. These data are then used for product or service improvement, or for development of new products or services.

Continuing Improvement. The three elements of continuing product improvement are audit, corrective and preventive action, and management review.

Internal auditing provides important information as to the potential sources of ineffectiveness in a business process. Together with external surveillance by ISO 9000 registrars, internal auditing produces corrective action requests that lead to an understanding of the cause of the ineffectiveness and to its resolution. The final step, management review, ensures that continuing product or service improvement is in place and that these improvements are integrated into the overall goals of the business.

BENEFITS OF APPLICATION TO DoD

DoD might draw constructively on industrial practices, particularly in such areas as documentation, uniform standards, and the pooling of information on operational suitability, following the direction of the ISO 9000 series.1 Documentation of processes and retention of RAM-related records (for important decisions and valuable data) are practices now greatly emphasized in industry. The same should be true for DoD, especially for the purposes of assessing operational suitability in support of major production decisions.

DoD should document, consistently across the services, all relevant sources of test data and information. Development teams would not have to "reinvent the wheel" when beginning a project. Instead, they would identify and learn from previous studies and findings. Setting multiservice operational test and evaluation standards similar to those in ISO 9000 is critical to the creation of an environment of more systematic data collection, analysis, and documentation.

The panel recommends (see Chapter 3) that DoD and the services develop a centralized test and evaluation data archive and standardize test data archival practices based on ISO 9000. This archive should include both developmental and operational test data; use of uniform terminology in data collection across services; and careful documentation of development and test plans, development and test budgets, test evaluation processes, and the justification for all test-related decisions, including decisions concerning resource allocation.
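As an illustration only, the kinds of information listed in this recommendation could be captured in a uniform record structure shared by all services. The following Python sketch is a hypothetical layout, not a schema proposed by the panel or defined by ISO 9000; every class name, field name, and the `missing_documentation` helper is an assumption made for the example.

```python
from dataclasses import dataclass, field
from enum import Enum


class TestPhase(Enum):
    """Uniform terminology for the test phase (hypothetical vocabulary)."""
    DEVELOPMENTAL = "developmental"
    OPERATIONAL = "operational"


@dataclass
class TestDecision:
    """A test-related decision together with its recorded justification."""
    description: str
    justification: str
    authorized_by: str


@dataclass
class ArchiveRecord:
    """One archived test event; all field names are illustrative assumptions."""
    system_name: str
    service: str
    phase: TestPhase
    test_plan: str
    budget_usd: float
    evaluation_process: str
    decisions: list[TestDecision] = field(default_factory=list)
    measurements: dict[str, float] = field(default_factory=dict)


def missing_documentation(record: ArchiveRecord) -> list[str]:
    """Return the names of required documentation items that are empty."""
    required = {
        "test_plan": record.test_plan,
        "evaluation_process": record.evaluation_process,
    }
    missing = [name for name, text in required.items() if not text.strip()]
    missing += [
        f"decision {i} justification"
        for i, decision in enumerate(record.decisions)
        if not decision.justification.strip()
    ]
    return missing
```

A check such as `missing_documentation` suggests how an archive could refuse or flag records whose plans, evaluation processes, or decision justifications were never written down, which is the documentation discipline the recommendation calls for.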

Standardization, documentation, and data archiving facilitate use of all available information for efficient decision making. Routinely taking these steps will:

- provide the information needed to validate models and simulations, which in turn can be used to plan for (or reduce the amount of) experimentation needed to reach specified operational test and evaluation goals;

- allow the "borrowing" of information from past studies (if they are clearly documented and there is consistent usage of terminology and data) to inform the assessment of a system's performance based upon limited testing, by means of formal and informal statistical methods and other approaches;

- make possible the use of data from developmental testing for efficient operational test design;

- allow learning from best current practices across the services; and

- lead to an organized accumulation of knowledge within the Department of Defense.
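To make the idea of "borrowing" information from past studies concrete, the sketch below shows one simple formal method: a beta-binomial update in which archived developmental test results are down-weighted and combined with a small operational test. This is an illustrative choice, not a method named in this appendix; the function names, the `discount` weight, the uniform starting prior, and all counts are hypothetical.

```python
def beta_posterior(past_successes, past_failures,
                   new_successes, new_failures, discount=1.0):
    """Return Beta(a, b) parameters for a success probability.

    Starts from a uniform Beta(1, 1) prior, adds archived results
    weighted by `discount` (0 ignores them, 1 counts them fully),
    then adds the new test results at full weight.
    """
    if not 0.0 <= discount <= 1.0:
        raise ValueError("discount must lie between 0 and 1")
    a = 1.0 + discount * past_successes + new_successes
    b = 1.0 + discount * past_failures + new_failures
    return a, b


def posterior_mean(a, b):
    """Mean of a Beta(a, b) distribution."""
    return a / (a + b)


# Hypothetical counts: 45 successes and 5 failures in archived developmental
# testing, 8 successes and 2 failures in a limited operational test.
a, b = beta_posterior(45, 5, 8, 2, discount=0.5)
borrowed = posterior_mean(a, b)

# The same operational data with the archived results ignored.
a0, b0 = beta_posterior(45, 5, 8, 2, discount=0.0)
operational_only = posterior_mean(a0, b0)
```

The discount reflects a judgment about how comparable the past tests are to the current one. Making that judgment defensibly depends on the clear documentation and consistent terminology described in the list above.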

A key benefit of documentation and archival of test planning, test evaluation, and in-use system performance is the creation of feedback loops to identify system flaws for system redesign, and to identify where tests or models have missed important deficiencies in system performance.

Retention of records may involve additional costs, but it is clearly necessary for accountability in the decision-making process. The trend in industry is to empower employees by giving them more responsibility in the decision-making process; along with this responsibility comes the need to make people accountable for their decisions. This consideration is likely to be an important organizational aspect of the operational testing of defense systems.