6

Analyzing and Reporting Test Results

Chapter 5 argued that substantial improvements in the cost-effectiveness of operational testing can be achieved by test planning and state-of-the-art statistical methods for test design. It was also noted that achieving the full benefit of improved test design requires a design that takes account of how test data are to be analyzed. This chapter focuses on how data from operational tests are analyzed and how results are reported to decision makers. The panel found potential for substantially improving the quality of information that can be made available to decision makers by applying improved statistical methods for analysis and reporting of results.

In its review of reports documenting the analysis of operational test data, the panel found individual examples of sophisticated analyses. However, the vast majority focused on calculating means and percentages of measures of effectiveness and performance, and developing significance tests to compare aggregate means and percentages with target values derived from operational requirements or the performance of baseline systems.

The panel found the following problems with the current approach to analysis and reporting of test results:

- While significance tests can be useful as part of a comprehensive analysis, exclusive focus on these tests ignores information of value to the decision process. There is often no explicit estimate of the variability of estimates of system performance. This makes it difficult to assess whether additional testing to narrow the uncertainty ranges is cost-effective. It also makes it difficult to evaluate the relative risk associated with moving ahead to full-rate production on a system that may still have problems, versus holding back a system that is performing acceptably.

- Decision makers typically are concerned not just with aggregate measures, but also with the performance of systems in particular environments. While the panel found this concern reflected in the analyses we examined, the reporting of results for individual scenarios and prototypes was almost always informal. The panel was often told that statistics could not be applied to the problem of performance of individual scenarios because sample sizes were too small. Analysts were frequently unaware of formal statistical methods and modeling approaches for making effective use of limited sample sizes.

- Information from operational tests is infrequently combined with information from developmental tests and test and field performance of related systems. The ability to combine information is hampered by institutional problems, by the lack of a process for archiving test data, and by the lack of standardized reporting procedures.

This chapter discusses problems with current procedures for analyzing and reporting of operational test results, and recommends alternative approaches that can improve the efficiency with which decision relevant information can be extracted from operational tests. The evaluation of the operational readiness of systems can be substantially improved by implementing these changes. In addition, money can be saved through more efficient use of limited test funds.

LIMITATIONS OF SIGNIFICANCE TESTING

Significance testing has a number of advantages for presenting the results of operational tests and for deciding whether to pass (defense) systems to full-rate production.1 Significance testing is a long-standing method for assessing whether an estimated quantity is significantly different from an assumed quantity. Therefore, it has utility in evaluating whether the results of an operational test demonstrate the satisfaction of a system requirement. The objectivity of this approach is useful, given the various incentives of participants in the acquisition process. Certainly, if a system performs significantly below its required level, it is a major concern.

However, significance testing has several real disadvantages as the primary quantitative assessment of an operational test: knowing only the acceptance or rejection of a test's outcome simply does not provide enough information to decision makers. The panel has found several problems with the reliance on significance testing, which are detailed in the remainder of this section.

Significance Tests Focus Inappropriately on Pass/Fail

The decision maker is typically interested not just in whether a system has passed or failed, but in what is actually known about how well the system performed. Did it almost pass? What range of performance levels is consistent with the test results? How much uncertainty still remains about its performance? What are the relationships between characteristics of the environment and test results? If there is doubt about whether the system meets a requirement, what is the operational significance of that doubt? What is the impact on the system's mission from any doubt the test casts on whether it meets the stated requirement?

Significance Tests Answer the Wrong Question

A significance test answers the question, "How unlikely is it that results as extreme as, or more extreme than, those I have observed would occur if the given hypothesis is true?" Consider the problem of testing whether a missile system meets a specified requirement for accuracy. The hypothesis selected as the "null hypothesis" might be that the system fails to meet a minimum acceptable level of performance that would justify acquisition.2 What is reported to the decision maker is whether the system passed or failed the significance test (passing the test would mean the null hypothesis was rejected).
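To make the question concrete, the following minimal sketch computes the quantity that a one-sided significance test reports for a hit-or-miss accuracy measure. The shot count, hit count, and null hit probability are hypothetical values chosen for illustration, not figures from any test the panel reviewed, and the binomial model assumes independent shots with a common hit probability.

```python
from math import comb


def binomial_upper_tail(n, k, p):
    """Return P(X >= k) for X ~ Binomial(n, p)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Hypothetical test: 20 shots, 18 hits.
# Assumed null hypothesis: the true hit probability is only 0.70,
# taken here as the minimum acceptable level.
shots, hits, p_null = 20, 18, 0.70

# Probability of a result at least this good if the null hypothesis were true.
p_value = binomial_upper_tail(shots, hits, p_null)
print(f"p-value = {p_value:.4f}")
```

A small value says only that the observed result would be surprising if the system performed at the null level. It is not the probability that the system meets its requirement.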

In the defense testing context, the term "null hypothesis" has sometimes been used to indicate the compound hypothesis of performance at or above the required level (which already represents an improvement from the baseline or control system's performance), with rejection indicating substandard performance. This is a non-standard use of the term. A preferable way of considering the decision structure of the testing problem is to consider the various hypotheses in a symmetric way by setting values a1 and a2 such that if the system's performance is less than a1, the system should be considered deficient; if the system's performance is greater than a2, it should be considered satisfactory; and if the system's performance is between a1 and a2, one should be indifferent to the assessment. This is a more natural framework for this decision problem. Related to this approach, one could set the null hypothesis to be that the system's performance is less than a minimum acceptable level that is below the required level but above the level of the baseline. Then, the power of the test at the required level of performance should be relatively close to one for an effective test. The null hypothesis should not generally be set equal to the performance of the baseline system, since a system offering only a modest improvement over the baseline might not be worth acquiring. Also, the null hypothesis should not be that the performance is less than the requirement, since there is then a substantial probability of rejecting systems worth acquiring.

We believe that decision makers often interpret a passing result as confirming that the system probably meets its requirement and a failing result as an indication that the system probably does not meet its requirement. This interpretation is sometimes valid, but there are times when it is not. The correct interpretation of a passing result, when the null hypothesis is that the performance is less than minimally acceptable, is that the results that were observed are inconsistent with the assumption that the system does not meet the minimal acceptable level. While it is clear that the question answered by a significance test is related to a problem decision makers care about (whether the system meets its requirement), the significance test does not directly address this question. The panel found that test analysts and decision makers alike often failed to understand this point.

Significance Tests as Applied Often Fail to Balance the Risk of Rejecting a "Good" System Against the Risk of Accepting a "Bad" System

This issue is not a criticism of significance testing per se, but of how it is often applied. In deciding whether to pass a system, one can make two different types of error: one can "fail a good system" or "pass a bad system."3 The two types of error need to be compared with each other and both related to the cost of testing. Assuming that one hypothesis is that the system meets a minimum acceptable level of performance, making the test criteria more stringent increases the first kind of error and reduces the second; making the criteria more lenient does the reverse. By setting the cutoff between passing and failing, the tester trades off one type of error against the other. There are certainly situations where one error is more problematic than the other, and so its probability should be set lower. For example, with a missile system, requiring more hits on test shots to pass increases the probability of rejecting a good system, but reduces the probability of accepting a bad system, and vice versa. To reduce both types of error simultaneously requires more test shots and more test funds.
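The trade-off described in the missile example can be tabulated directly. The sketch below is illustrative only: it assumes a binomial model with independent shots, and the "good" (0.90) and "bad" (0.70) hit probabilities, the test sizes, and the candidate thresholds are hypothetical.

```python
from math import comb


def pass_probability(n, c, p):
    """Probability of at least c hits in n shots when the hit probability is p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(c, n + 1))


def error_rates(n, c, p_good, p_bad):
    """Return (P(fail a good system), P(pass a bad system)) for 'pass if hits >= c'."""
    fail_good = 1 - pass_probability(n, c, p_good)
    pass_bad = pass_probability(n, c, p_bad)
    return fail_good, pass_bad


# Hypothetical performance levels for a "good" and a "bad" system.
P_GOOD, P_BAD = 0.90, 0.70

for n, thresholds in ((20, range(15, 19)), (40, range(30, 37))):
    for c in thresholds:
        fail_good, pass_bad = error_rates(n, c, P_GOOD, P_BAD)
        print(f"n={n:2d} c={c:2d} fail-good={fail_good:.3f} pass-bad={pass_bad:.3f}")
```

Within a fixed test size, raising the threshold moves error from one column to the other. Only the larger test size offers thresholds at which both columns are small.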

The panel stresses the importance of considering both types of error when determining pass-fail thresholds and of balancing cost against the ability to discriminate between levels of performance when selecting test sample sizes. What we found, however, is that test thresholds are usually set to achieve error levels set by arbitrary convention. We often heard comments to the effect that although everyone knows that statistical tests should have significance levels of .05 or .10, operational testers were forced by resource limitations to use significance levels of .20. Although viewed as regrettably high, a significance level of .20 is commonly used. Similarly, tests with power .80 are also commonly used. We found it rare that test designers made explicit trade-offs between the two types of error.

Sufficiently Powerful Significance Tests May Require Unattainable Sample Sizes

The most frequent criticism of the use of statistics in operational test and evaluation was that tests with a high ability to statistically discriminate good from bad systems cost too much. We often heard comments such as, "We can't afford the 'statistical answer,'" or "We can't use statistics because we can't afford large samples." There was a concern that test budgets do not allow the tests to make use of more standard error levels for many situations. Yet, the use of tests with significance levels of 20 percent and power of only 80 percent for important alternatives, which seems typical, is troubling to system developers and the general public: using a test that is designed to incorrectly identify as deficient one-fifth of a group of systems that actually deserve to pass will result in unnecessary, costly retesting or further development; using a test that is designed to incorrectly identify as satisfactory one-fifth of a group of systems that actually deserve to fail will result in the acquisition of deficient systems and potentially expensive retrofitting. Unfortunately, when faced with the complex task of assessing the trade-offs, the acquisition community has reduced the emphasis on the use of statistics for these problems, precisely for those situations where statistical thinking is most critical to making efficient use of the limited information that is available on system performance, system variability, and how performance depends on test conditions. It is extremely important to understand the trade-offs between decreases in both error probabilities and increases in test costs, and to understand when these trade-offs support further testing and when they do not.

INSUFFICIENT FORMAL ANALYSIS OF INDIVIDUAL SCENARIOS AND VARIABILITY OF ESTIMATES

The focus on the reporting of significance tests as summary statistics for operational test evaluation and their prominence in the decision process de-emphasizes important information about the variability of system performance across scenarios and prototypes. For example, understanding which scenarios are the most challenging helps indicate how system performance depends on characteristics of the operating environment and which types of stresses are the most problematic for the system. Because of smaller sample sizes for individual scenarios than for the overall test, there will be more uncertainty about performance estimates in particular conditions than for an overall aggregate estimate of performance. Understanding this variability is important to the question of whether a system's poor performance in a given environment should be attributed to a serious problem that must be addressed or simply to the expected variability in test outcomes. Unfortunately, given the resources that can realistically be devoted to testing, answering this question conclusively may be difficult for many systems.

Information on which prototypes performed worst may indicate a faulty manufacturing process. To address this, one may want to analyze a reduced test data set that excludes data for some prototypes, which would demonstrate the performance that one might expect if the manufacturing process were improved. It is our impression that scenario-to-scenario variability will dominate prototype-to-prototype variability for the vast majority of systems, but it would be useful to investigate this impression and to identify the kinds of systems for which it does not hold.4

FAILURE TO USE ALL RELEVANT INFORMATION

Chapter 4 discussed the need, especially given the cost and therefore limited size of much operational testing, for making use of data from alternative sources (tests and field performance of related systems and developmental tests of the given system). Except for the pooling of hours under operational testing with those from developmental testing for reliability assessment (see Chapter 7), the panel has seen little evidence, in the evaluation reports it has examined, of a consistent strategy of examining the utility of these other data sources, an analysis of how they might best be combined with information from operational test, or the use of these alternate sources of information.

This failure has at least four explanations: (1) there is a justifiable concern as to the validity of this combination of information from different experiments; (2) there are perceived legal restrictions, stemming from this concern, to the use of developmental test and other information in evaluation of a system's operational performance; (3) there is no readily accessible test and field use data archive; and (4) the testing community lacks the expertise required to carry out more sophisticated statistical analyses. Although each of these arguments involves real hurdles, each can be overcome.

As we note in other chapters, there is no archive for test data and, therefore, it is often very hard to combine information from operational testing with that from developmental testing or to combine developmental and operational testing with that from test and field experience of related systems (possibly systems with identical components). The lack of use of such information is often inefficient since, when the test circumstances are known, each of those sources can provide relevant information about operational performance. An archive would also make it possible to share information about problems in measurement techniques and threat simulation: Are there good ways to present data or to analyze missile hit distances? Are there useful things to know about design effects (e.g., learning) in trials run over multiple days? We note that there are (at least perceived) legal obstacles to using some of these sources of information for operational evaluation.5 Finally, even if these other data sources were available and accessible, there is a scarcity of statistical modeling skills available in the test community that would be needed to make full use of this data; this issue is discussed in more detail in Chapter 10.

LIMITED ANALYSES AND STATISTICAL SKILLS

There are also some limitations concerning the use of sophisticated statistical techniques to fully analyze the information provided by operational tests and alternative data sources. These limitations stem from the acquisition process itself and the lack of requisite skills in those charged with the evaluation of operational tests. The analysis of operational testing data is typically accomplished under strong time pressure. Systems have often been in development for as much as a decade or more and when operational testing is concluded, especially if it is generally believed that the system performed adequately, there is understandable interest in having the new system produced and available. There is therefore pressure to carry out the evaluation quickly. Given current schedules, large amounts of data need to be organized, summarized, and analyzed in as little as two months. This schedule greatly reduces the time to investigate and understand anomalous results, to try out more sophisticated statistical models, to validate any assumptions used in models, to explore the data set graphically, and to generally understand what information is present in the operational test data set. This work goes well beyond the computation of summary statistics and the associated significance tests which are relatively straightforward in their application. The section above on significance testing describes a number of analyses that would be worth undertaking. Failure to carry out these analyses due to time pressures may result in a failure to completely represent the information provided by operational testing and therefore lead to an incorrect decision.

The individuals charged with operational test evaluation often have limited expertise with the analysis of large, complicated data sets. The ability to examine a large data set, understand what is in it, identify its major features and more subtle details, and represent what has been discovered in an accessible form to decision makers, requires considerable training and guided experience. Some of the more sophisticated modeling tools that are likely relevant to the analysis of operational test data (for instance, non-homogeneous Poisson processes, classification and regression trees, and robust analysis of variance) are unlikely to be familiar to those currently involved with operational test evaluation. This limited statistical expertise is very understandable, given what test managers need to know about the acquisition process and the system under test. In addition, given the typical career path, that is, how long test managers are likely to spend with their operational test agency, the test manager's lack of statistical expertise is probably impossible to overcome.

RECOMMENDATIONS FOR IMPROVING ANALYSIS AND REPORTING

Perform More Comprehensive Analysis

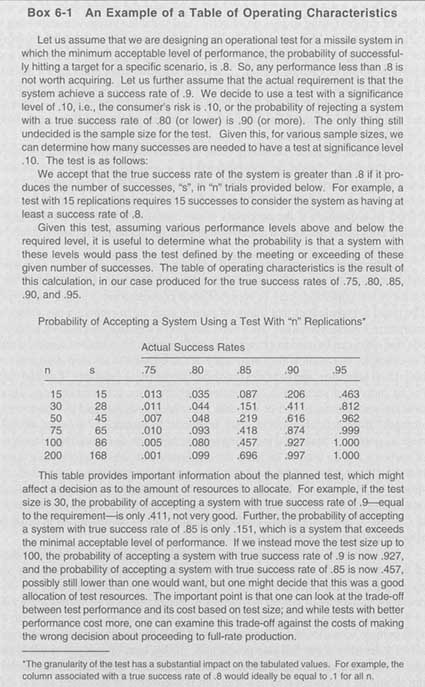

Test evaluation should provide several types of decision relevant information in addition to point estimates for major measures of performance and effectiveness and their associated significance tests. First, a table of potential test sizes and the associated error levels for various hypothesized levels of performance for major measures should be provided to help guide decisions about the advantages of various test sizes. Since this could be constructed before testing has begun, this should be a required element of more detailed versions of the Test and Evaluation Master Plan, with additional knowledge acquired during the test about distributions and other characteristics used to update the table in the evaluation report. This tabulation of the impact of test size on error levels of a significance test is referred to as the test's operating characteristics. We advocate its calculation in Recommendation 4.2 (in Chapter 4); for an example of a table of operating characteristics, see Box 6-1.
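A table of operating characteristics of the kind described here can be generated before any testing takes place. The sketch below is not a reproduction of Box 6-1; it is a minimal illustration under assumed conditions: a hit-or-miss measure modeled as binomial, a hypothetical minimum acceptable hit probability of 0.70 as the null level, a significance level of 0.20, and three hypothesized true performance levels.

```python
from math import comb


def pass_probability(n, c, p):
    """Probability of at least c successes in n trials when the success probability is p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(c, n + 1))


def threshold_for_alpha(n, p_null, alpha):
    """Smallest c such that a system performing at p_null passes with probability <= alpha."""
    for c in range(n + 2):  # c = n + 1 can never be met, so the loop always returns
        if pass_probability(n, c, p_null) <= alpha:
            return c


def operating_characteristics(sizes, p_null, alpha, true_levels):
    """Map each test size to (threshold, [P(pass) at each hypothesized true level])."""
    table = {}
    for n in sizes:
        c = threshold_for_alpha(n, p_null, alpha)
        table[n] = (c, [pass_probability(n, c, p) for p in true_levels])
    return table


# Hypothetical inputs chosen for illustration.
LEVELS = (0.70, 0.80, 0.90)
oc_table = operating_characteristics((10, 20, 40), p_null=0.70, alpha=0.20, true_levels=LEVELS)

print("n   c   " + "  ".join(f"P(pass|p={p:.2f})" for p in LEVELS))
for n, (c, probs) in oc_table.items():
    print(f"{n:<3d} {c:<3d} " + "  ".join(f"{q:14.3f}" for q in probs))
```

Each row shows what a given test size buys: the first probability column is the chance of passing a system at the null level, and the last is the chance of passing a system at the highest hypothesized level.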

In addition, there should be an understanding of the costs, as a function of system performance, one faces by making the decision on whether to proceed to full-rate production. That is, the benefits and costs of passing systems with different levels of performance should be compared with the benefits and costs of failing to pass such systems. Explicit treatment of benefits and costs is far better than applying standard significance levels to balance the probabilities of the two types of errors (failing to pass an effective and suitable system versus passing a deficient system). Methods from decision analysis provide a natural framework for addressing such benefit-cost comparisons (see, e.g., von Winterfeldt and Edwards, 1986; or Clemen, 1991). The panel acknowledges that explicit quantitative assessment of the benefits and costs of decision alternatives can be controversial in a situation in which different parties have differing objectives, though this framework is natural for identifying the source of these differences which can lead to their resolution.
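As a rough illustration of how explicit costs can replace a conventional significance level, the sketch below picks the pass threshold that minimizes expected loss. Everything numerical here is an assumption made for the example: the two-point description of system quality ("good" at 0.90, "bad" at 0.70), the prior probability that the system is good, and the relative costs of the two errors. A real decision analysis would elicit these quantities from the parties involved.

```python
from math import comb


def pass_probability(n, c, p):
    """Probability of at least c hits in n shots when the hit probability is p."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(c, n + 1))


def expected_loss(n, c, p_good, p_bad, prior_good, cost_fail_good, cost_pass_bad):
    """Expected loss of the rule 'pass if hits >= c' under a two-point prior."""
    fail_good = 1 - pass_probability(n, c, p_good)
    pass_bad = pass_probability(n, c, p_bad)
    return (prior_good * fail_good * cost_fail_good
            + (1 - prior_good) * pass_bad * cost_pass_bad)


def best_threshold(n, **assumptions):
    """Threshold in 0..n+1 with the smallest expected loss."""
    return min(range(n + 2), key=lambda c: expected_loss(n, c, **assumptions))


# Hypothetical inputs shared by both cases.
common = dict(p_good=0.90, p_bad=0.70, prior_good=0.5)

# Case 1: fielding a deficient system is assumed ten times as costly as a delay.
strict_c = best_threshold(20, cost_fail_good=1.0, cost_pass_bad=10.0, **common)
# Case 2: delaying a good system is assumed ten times as costly.
lenient_c = best_threshold(20, cost_fail_good=10.0, cost_pass_bad=1.0, **common)

print(f"threshold when passing a bad system is costly: {strict_c} of 20")
print(f"threshold when failing a good system is costly: {lenient_c} of 20")
```

The two thresholds differ because the costs differ, which is the point of the comparison: the cutoff follows from stated consequences rather than from a customary error level.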

Each point estimate, including those for individual scenarios and for more targeted analysis, should be accompanied by a confidence interval (or related construct) that provides the values that are consistent with the test results. There should be an accompanying discussion of what the implications would be with respect to acquisition if the endpoints of the confidence intervals for the major measures of performance and effectiveness were the actual performance levels of the system.
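One standard way to attach an interval to an estimated proportion is the Wilson score interval, sketched below. It is offered as one reasonable choice of "confidence interval (or related construct)," not as the method the panel prescribes, and the hit counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def wilson_interval(successes, trials, confidence=0.95):
    """Wilson score confidence interval for a binomial proportion."""
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    p_hat = successes / trials
    denom = 1 + z**2 / trials
    center = (p_hat + z**2 / (2 * trials)) / denom
    half_width = z * sqrt(p_hat * (1 - p_hat) / trials + z**2 / (4 * trials**2)) / denom
    # Clamp to [0, 1] to guard against floating-point overshoot at the extremes.
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical aggregate result: 16 hits in 20 shots.
overall_low, overall_high = wilson_interval(16, 20)
print(f"overall: 0.80, 95% interval ({overall_low:.2f}, {overall_high:.2f})")

# Hypothetical single scenario: 1 hit in 5 shots.
scenario_low, scenario_high = wilson_interval(1, 5)
print(f"scenario: 0.20, 95% interval ({scenario_low:.2f}, {scenario_high:.2f})")
```

The scenario-level interval is much wider than the aggregate one, which is exactly the information a decision maker needs when weighing a poor result from a handful of trials.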

Furthermore, test analyses should consider variability across test scenarios and system prototypes. The panel found that while results for individual scenarios or test conditions may be reported, their consideration is usually informal. The panel acknowledges that limits on sample sizes often constrain the ability to analyze individual scenarios. However, there exist sophisticated statistical methods that often can be used to extract useful information from limited sample sizes. For example, hierarchical Bayesian analysis can be used to develop estimates for individual scenarios, and to assess the variability of these estimates. Limited data for an individual scenario is augmented both by prior information (e.g., from developmental tests, or test or use data for related systems) and by "borrowing strength" from data obtained in other scenarios, to produce as accurate an estimate as possible. Statistical modeling approaches such as regression or analysis of variance can be used to increase the efficiency with which information about individual scenarios can be extracted from small sample sizes. Such analyses should be accompanied by an evaluation of the adequacy of the assumptions underlying the models and the impact of violations of the assumptions. As noted in Chapter 5, test design must consider the analyses to be performed in order to allocate resources as effectively as possible.
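The idea of "borrowing strength" can be seen in a deliberately simplified form. The sketch below is not a full hierarchical Bayesian analysis: it uses a beta-binomial model in which every scenario shares a Beta prior centered on the pooled hit rate, and the prior's weight (expressed as a number of pseudo-trials) is fixed by assumption rather than estimated from the data or from developmental tests. The scenario data are hypothetical.

```python
def shrunken_estimates(results, prior_strength):
    """Posterior mean hit rate per scenario under a shared Beta prior.

    results: list of (hits, trials) pairs, one per scenario.
    prior_strength: assumed weight of the prior, in pseudo-trials.
    Returns (pooled_rate, list_of_posterior_means).
    """
    total_hits = sum(hits for hits, _trials in results)
    total_trials = sum(trials for _hits, trials in results)
    pooled = total_hits / total_trials
    estimates = [
        (hits + prior_strength * pooled) / (trials + prior_strength)
        for hits, trials in results
    ]
    return pooled, estimates


# Hypothetical data: four scenarios with five shots each.
scenario_results = [(5, 5), (5, 5), (5, 5), (1, 5)]
pooled_rate, estimates = shrunken_estimates(scenario_results, prior_strength=4)

for (hits, trials), estimate in zip(scenario_results, estimates):
    print(f"raw {hits / trials:.2f} -> shrunken {estimate:.2f} (pooled {pooled_rate:.2f})")
```

Each scenario estimate is pulled toward the pooled rate, and more strongly when the scenario has few trials relative to the prior weight. A full hierarchical analysis would also estimate how much pulling the data support and would report the uncertainty of each estimate.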

As mentioned above, decision makers need to be informed as to what range of between-scenario, between-prototype, and other types of heterogeneities are consistent with the operational test results so that they can understand the evidence for variability in system performance. For example, if an estimated hit rate of 0.80 that met the requirement was achieved by having an observed hit rate of 1.00 in three scenarios but a hit rate of only 0.20 in the fourth scenario, that might affect a decision about full-rate production. If this kind of analysis requires the use of statistical modeling, it should be performed, and the results of the modeling should be communicated in a way that is accessible to decision makers. All important assumptions underlying such modeling should be described, along with the support for those assumptions and the robustness of the methods if the assumptions do not hold.
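The hit-rate example (1.00 in three scenarios, 0.20 in a fourth, 0.80 overall) can be checked against what chance alone would produce. The sketch below assumes five shots per scenario, which the text does not specify, and uses a Pearson-type heterogeneity statistic with a simulated reference distribution because the samples are too small for the usual chi-square approximation. The simulation count and seed are arbitrary.

```python
import random


def heterogeneity_statistic(results):
    """Pearson-type statistic measuring spread of scenario hit rates around the pooled rate."""
    total_hits = sum(hits for hits, _trials in results)
    total_trials = sum(trials for _hits, trials in results)
    pooled = total_hits / total_trials
    if pooled in (0.0, 1.0):
        return 0.0  # every shot had the same outcome, so there is no spread
    return sum(
        (hits - trials * pooled) ** 2 / (trials * pooled * (1 - pooled))
        for hits, trials in results
    )


def monte_carlo_p_value(results, simulations=5000, seed=0):
    """Estimate how often equal-performance scenarios would look at least this uneven."""
    rng = random.Random(seed)
    total_hits = sum(hits for hits, _trials in results)
    total_trials = sum(trials for _hits, trials in results)
    pooled = total_hits / total_trials
    observed = heterogeneity_statistic(results)
    exceed = 0
    for _ in range(simulations):
        simulated = [
            (sum(rng.random() < pooled for _shot in range(trials)), trials)
            for _hits, trials in results
        ]
        if heterogeneity_statistic(simulated) >= observed - 1e-9:
            exceed += 1
    return observed, exceed / simulations


# Assumed data behind the example: five shots in each of four scenarios.
observed_stat, p_value = monte_carlo_p_value([(5, 5), (5, 5), (5, 5), (1, 5)])
print(f"heterogeneity statistic = {observed_stat:.2f}, simulated p-value = {p_value:.4f}")
```

A small simulated p-value indicates that the fourth scenario's result is hard to attribute to ordinary trial-to-trial variability, which supports reporting it separately rather than folding it into the aggregate.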

In addition, there should be investigation of the existence and analysis of any discovered, anomalous results: features of the test data that show patterns that are unexpected. Such results may also suggest further testing to help understand their cause. Anomalous patterns could include time or order effects of unknown source, time of day effects, lack of consistency of results across user groups, and other counterintuitive results.

Finally, related to the need for use of confidence intervals, if modeling and simulation is used, there should be a full discussion of the extent to which the simulation model has been validated for the current purpose and an assessment of uncertainty due to the use of the simulation model to assist in evaluation, especially uncertainty due to model misspecification. One common technique for this purpose is sensitivity analyses to determine how changes in assumptions would affect the resulting estimates. Presentation of additional uncertainty due to the use of simulation models can be done using uncertainty intervals (discussed in Chapter 9).

The test community should adopt the view that the purpose of statistics in this setting is to provide tools for helping to extract as much information as possible from a test of limited size (and from related information sources). Statistics is useful for planning and conducting tests and interpreting test results to provide the best information to support decision making. Adopting this view means moving away from rote application of standard significance tests and toward the use of statistics to estimate and report both what is known about a system's performance and the amount of variability or uncertainty that remains. Rather than thinking of a significance test as a comprehensive evaluation of a system's performance with respect to a measure of interest, significance testing instead should be thought of as a method for test design that is very effective in producing operational tests that provide a great deal of relevant information and for which the costs and benefits of decision making can be compared. Significance testing is one of several methods of test evaluation that need to be used together to provide a comprehensive picture of the test results to inform the decision about passing to production.

Combine Information from Multiple Sources

Given the resource constraints inherent in operational testing of complex systems, it is important to make effective use of all relevant information. As noted above, the panel found that information from developmental tests, as well as operational and field performance of related systems, is rarely used in any formal way to augment data from operational tests, other than pooling of developmental and operational test data for reliability assessment.

Certainly, use of data from alternative sources can entail considerable risk, given the substantive differences in test conditions and sometimes in the systems (say, with a shared component) whose information is being combined. If the argument could be supported that data from alternative sources (for example, developmental test) was directly relevant in the sense that the data could be pooled with the operational test data, that would be a reasonable method for combining information. However, this naive sort of combination (for example, reliability estimation) will often result in strongly biased estimates of operational performance because of the unique properties of each system and the different failure modes that occur in developmental, in contrast to operational, test. As a result, appropriate combination of this information will usually require sophisticated statistical methods, in addition to a substantial understanding of the failure modes for the system. For example, knowledge of the reliability of a component from a related system can be used to help understand the reliability of the same component in a different system, but aspects of how it is used—such as the impact on the weight of the overall system through addition of the component—may cause different problems in the new system than were experienced in the previous application.
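The bias from naive pooling, and one simple way to temper it, can be shown with a small calculation. The sketch below assumes a constant failure rate, so that mean time between failures is hours divided by failures, and it down-weights developmental data by a factor between 0 and 1 in the spirit of a discounting (power-prior style) approach. The hours, failure counts, and weight are hypothetical, and choosing the weight would itself require the statistical and subject-matter judgment discussed in the text; the sketch does not model differences in failure modes.

```python
def mtbf_estimate(op_hours, op_failures, dev_hours, dev_failures, dev_weight):
    """Mean time between failures, counting developmental data at a reduced weight.

    dev_weight = 0 uses operational data only; dev_weight = 1 is naive pooling.
    """
    hours = op_hours + dev_weight * dev_hours
    failures = op_failures + dev_weight * dev_failures
    return hours / failures


# Hypothetical data: the system fails far more often under operational conditions.
OP_HOURS, OP_FAILURES = 300, 6
DEV_HOURS, DEV_FAILURES = 1000, 2

operational_only = mtbf_estimate(OP_HOURS, OP_FAILURES, DEV_HOURS, DEV_FAILURES, 0.0)
naive_pooled = mtbf_estimate(OP_HOURS, OP_FAILURES, DEV_HOURS, DEV_FAILURES, 1.0)
discounted = mtbf_estimate(OP_HOURS, OP_FAILURES, DEV_HOURS, DEV_FAILURES, 0.2)

print(f"operational only: {operational_only:.1f} h")
print(f"naive pooling:    {naive_pooled:.1f} h")
print(f"discounted (0.2): {discounted:.1f} h")
```

With these numbers, naive pooling more than triples the estimated time between failures relative to the operational data alone, because the larger developmental sample dominates.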

Especially with respect to suitability issues, but also with respect to effectiveness, knowledge of how a system performed in developmental testing could provide some qualitative information, such as which components were most error prone and which scenarios were most difficult for the system. More quantitatively, the measurement of the reliability or effectiveness of the system could possibly be broken into stages, with operational and developmental testing used to estimate the probability of success for each stage. This approach was taken in the assessment of the reliability of the O-rings in the space shuttle (Dalal et al., 1989).

While we are sympathetic with the need to be extremely careful in combining data from different types of tests, methods now exist for combination of information that reduces the risk of inappropriate combination, and these methods could be successfully applied in a variety of situations. (See National Research Council, 1996, for a description of many of these techniques.) When properly supported by statistical and subject-matter expertise, application of these techniques could both improve the decision process concerning passing to full-rate production and also help to save test funds.

To pursue this greater use of relevant information, expert assessments of the utility of the system's developmental tests and information from related systems should be included in the operational evaluation report to justify a decision either to use or not use such information to augment operational evaluation. If the decision is made to use the additional information, the validation of assumptions of any statistical models used to combine information from operational tests with that from alternative sources should be carried out and communicated. In addition, examination of the validity of any system-based assumptions that are used, such as linking system reliability to component reliability, should also be carried out and reported.

State-of-the-art methods currently exist and are being constantly refined and expanded to permit combination of information from disparate sources; see, for example, Carlin and Louis (1996) and Gelman et al. (1995). The applicability of these methods to the evaluation of defense systems under development remains unexplored, with substantial potential benefits for improving the decision process by making it more efficient and effective. Such methods are beyond the statistical expertise of the typical test manager, and in fact carrying out these methods in new applications often poses difficult research problems. (Development of increased access to statistical expertise is discussed in Chapter 10.)

The panel believes that the combination of information from developmental tests and from tests of other systems is particularly promising for estimating system reliability (suitability), since our experience suggests that system effectiveness is more specific to an individual system than suitability. However, the use of these methods should be examined for measuring both suitability and effectiveness.

An important final point is that if the new paradigm outlined in Chapter 3 is adopted, the use of screening or guiding tests will facilitate combination of information, since these tests will then have operational aspects that will reduce the risk from combination.

Recommendation 6.1: The defense testing community should take full advantage of statistical methods, which would account for the differences in test circumstances and the objects under test, for using information from multiple sources in assessing system suitability and effectiveness. One activity with potentially substantial gains in efficiency and effectiveness is the effort to combine reliability data from different stages of system development or from similar systems.

Archive Data from Developmental and Operational Tests

In order to facilitate combination of information, the results of developmental and operational test and evaluation need to be archived in a useful and accessible form. This archive, described in Chapter 3, should contain a complete description of the test scenarios and methods used, which prototypes were used in each scenario, the training of the users, etc. To do this in a form that will be useful across services, standardization of the reporting will be needed. In addition, feedback from the performance of a system in the field can be used to assess whether the combination of information produced improved estimates of operational performance; see Recommendation 3.3 (in Chapter 3).

SUMMARY

The objectives of an expedited but thorough analysis are not necessarily in conflict. Analysis can begin much earlier using preliminary test data, or, better yet, data from the screening or guiding tests recommended in Chapter 3. As a result, data base structures can be set up, analysis programs can be debugged, statistical models can be (at least partly) tested and assumptions validated, and useful graphical tools and other methods for presentation of results identified. This would greatly expedite the evaluation of the final operational test data. However, when time is not sufficient to permit a thorough analysis, ways should be found to extend an evaluation if it can be justified that there is non-standard analysis that is likely to be relevant to the decision on the system.

The members of the testing community are committed to do the best job they can with the resources available. However, they are handicapped by a culture that identifies statistics with significance testing, by lack of training in statistical methods beyond the basics and inadequate access to statistical expertise, and by lack of access to relevant information. By addressing each of these deficiencies, the test evaluation reports will be much more useful to acquisition decision makers.

The panel advocates that the analysis of operational test data move beyond simple summary statistics and significance tests, to provide decision makers with estimates of variability, formal analyses of results by individual scenarios, and explicit consideration of the costs, benefits, and risks of various decision alternatives.